Game developer Naughty Dog celebrated the release of The Last of Us Part I on PC less than a week ago, but based on early feedback, perhaps they should have spent more time fine-tuning the port than planning their victory laps.

Today we're taking a look at the game's GPU performance, as the controversy centers around poor performance, with many gamers taking the time to express their disappointment in the form of a negative Steam review (over 10k negative reviews as of writing). This is unusual because we gamers are normally a really positive bunch.

The primary complaints from gamers have been horrible stuttering and constant crashing, and having now run 26 different graphics cards through the game, we think the reason for this is quite obvious: it appears to be a VRAM issue.

Most gamers like to dial up the quality settings and when doing so in The Last of Us Part I, you'll quickly run out of VRAM on graphics cards with just 8GB, even at 1080p. Those running a GeForce RTX 3070, for example, are probably accustomed to maxing out games at 1080p and still receiving a smooth high refresh experience, but that won't be the case here.

The Last of Us Part I will happily consume over 12 GB of VRAM at 1080p using the ultra quality preset. This isn't just allocation; the game appears to actually use that much memory, which means trouble for those of you with 8GB graphics cards trying to play using the ultra quality settings.

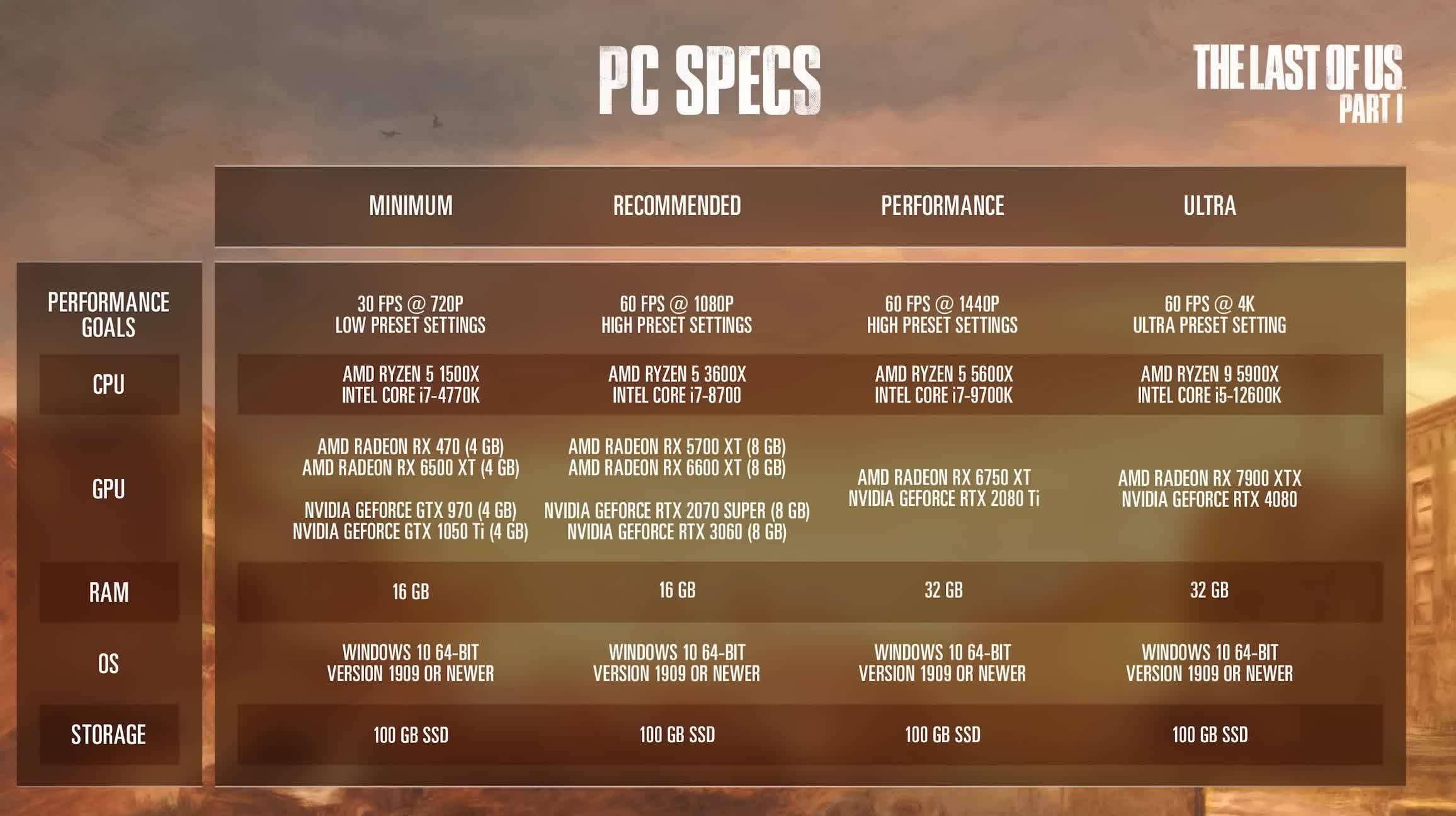

We can understand the confusion around this issue though as the developers' own recommended PC specs don't say you can use an 8GB graphics card at 1080p using the ultra preset. Rather they provide ultra preset recommendations at 4K, though they do recommend 8GB graphics cards for 1080p high.

Anyway, it's not super clear, so that's why we're here to provide you with a detailed GPU benchmark. Granted, it's only about half as detailed as we'd like with 26 graphics cards rather than 50+. That's because we've wasted around 10 hours watching the game build shaders, and that ate quite heavily into our benchmark time.

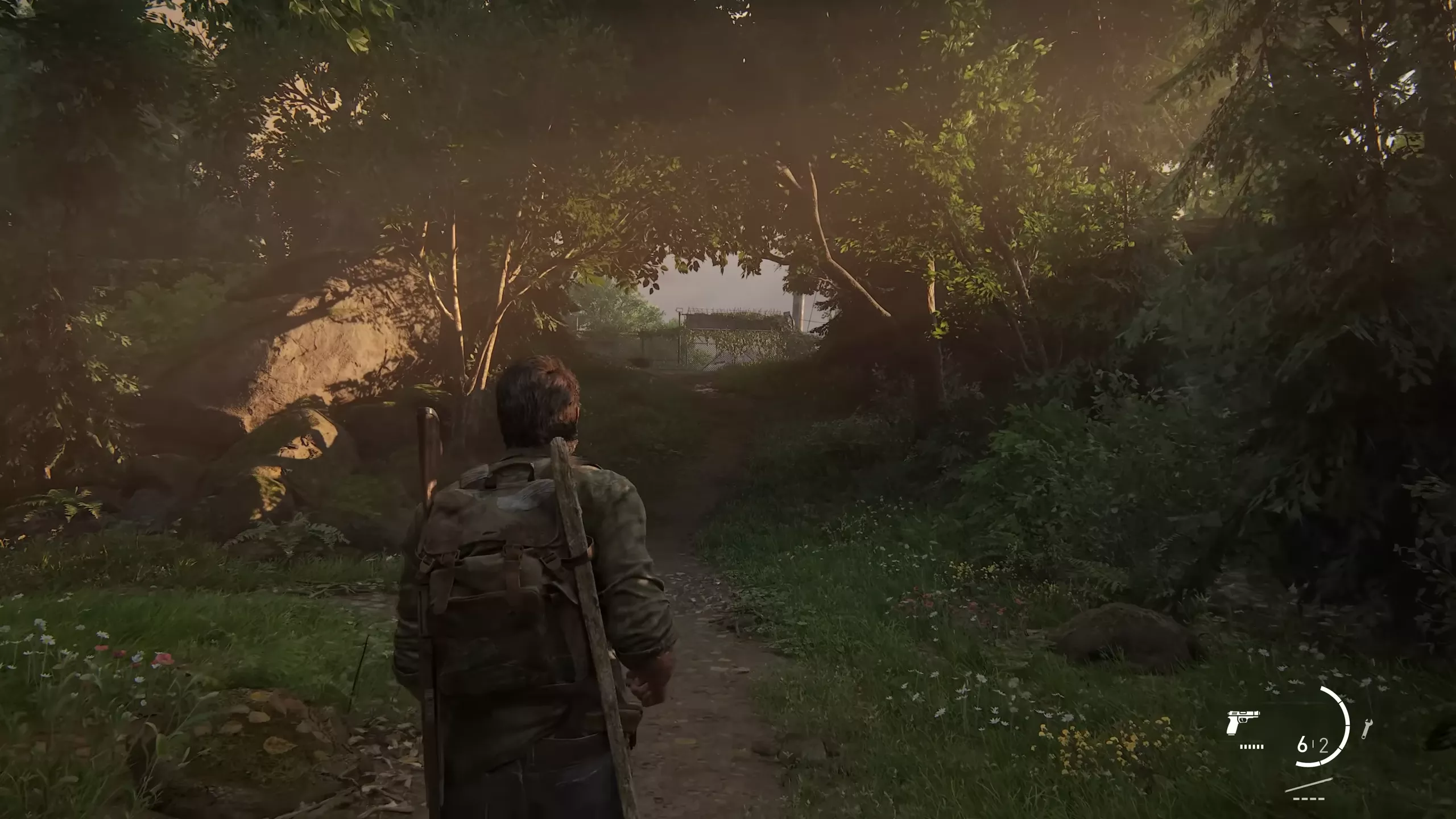

For testing we're using The Woods section of the game, as this appeared to be one of the more graphically demanding areas with a lot of foliage. VRAM usage is high here, but we wouldn't say it was notably higher than in other sections of the game, which makes our results a little strange when compared to other reviews.

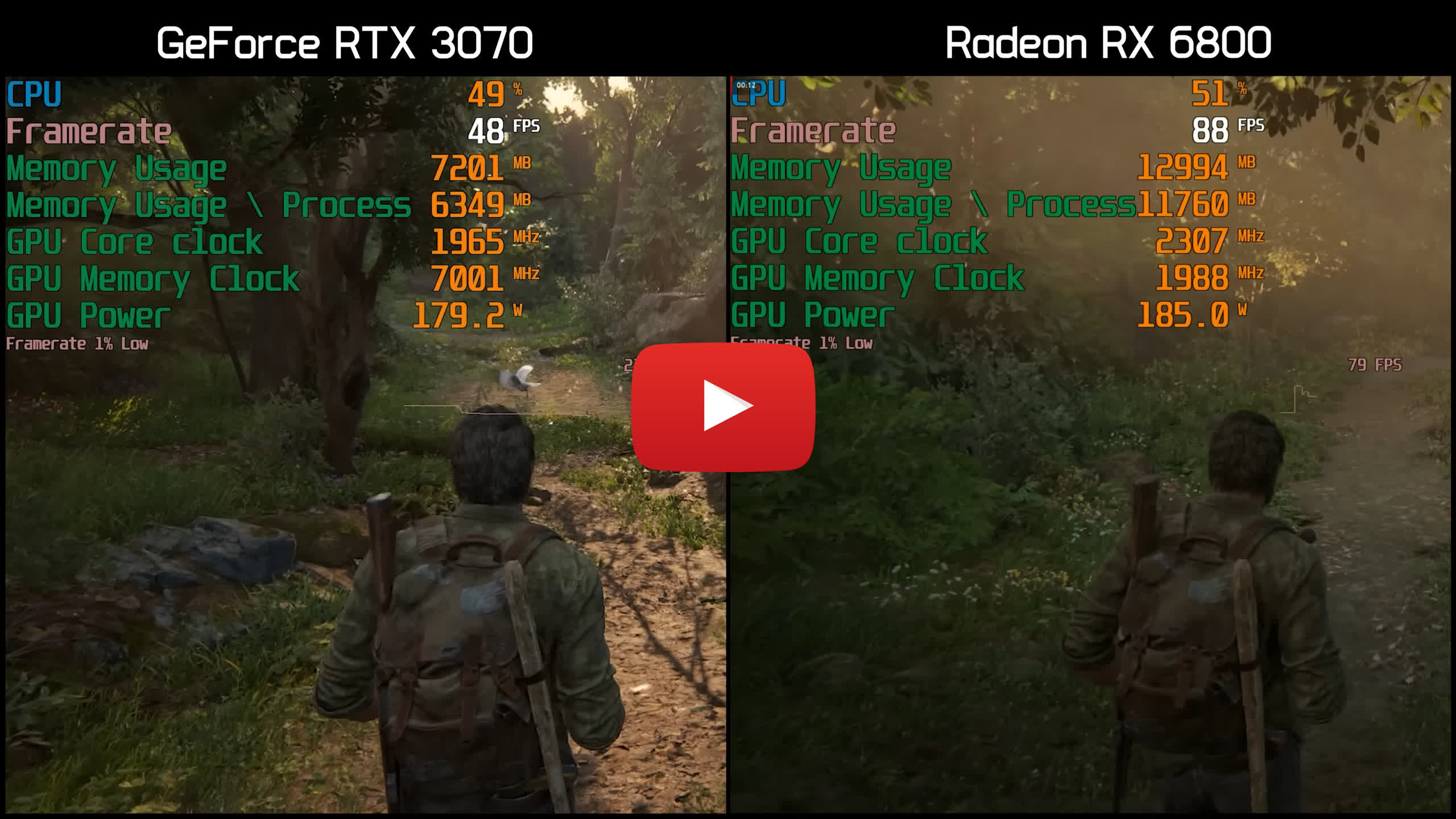

To get that out of the way, we've seen a few other reviewers benchmark this game showing reasonable minimum or 1% low performance for 8GB graphics cards when using the ultra preset at 1080p, and even 1440p. Yet, our 1% lows are a blowout on 8GB cards, and this appears to be in line with user reports and footage from gamers on YouTube, showing horrible 1% lows with otherwise capable GPUs such as the RTX 3070, so let's take a look at that.

Here's a side by side comparison of the RTX 3070 (8GB) and the Radeon RX 6800 (16GB). The footage doesn't line up exactly, it's just the same section of the game, but we can clearly show you how bad the 1% lows are on the GeForce with less VRAM. In this single example, we're looking at 1% lows of around 20 fps on the RTX 3070, while the RX 6800 is up around 80 fps. You can also see stuttering on the GeForce side, and here's a quick look at the frame time graph where you can see the 6800 is smooth while the RTX 3070 is a mess.
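For readers less familiar with the metric, here's a minimal sketch of how a 1% low figure can be derived from a frame time capture. Capture tools differ in their exact definition, so treat this as an illustration under one common assumption (the frame rate implied by the mean of the slowest 1% of frames) rather than the precise method behind the charts in this article. The function names and the sample capture are hypothetical.

```python
def average_fps(frame_times_ms):
    """Average frame rate over a capture, from per-frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """Frame rate implied by the mean of the slowest 1% of frames.

    Assumption: this is one common definition of "1% low"; some tools
    report the 99th-percentile frame time instead.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)  # always keep at least one frame
    worst = slowest_first[:count]
    return 1000.0 / (sum(worst) / len(worst))


# Hypothetical capture: mostly 10 ms frames with an occasional 50 ms hitch.
capture = [10.0] * 198 + [50.0] * 2
print(f"average: {average_fps(capture):.1f} fps")
print(f"1% low:  {one_percent_low_fps(capture):.1f} fps")
```

In this made-up capture, two long frames out of 200 leave the average at roughly 96 fps but pull the 1% low down to 20 fps, which is why a card can post a respectable average and still feel like a stuttery mess.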

For a better representation of image quality comparisons, check out the HUB video below:

If you come across a different benchmark showing much better 1% lows than we are going to show for 8GB graphics cards, it's likely because the scene they used was less demanding (or some other change in hardware we're not accounting for).

For our testing we're using the Ryzen 9 7950X3D with the second CCD disabled - this is to ensure maximum gaming performance, essentially testing with the yet to be released 7800X3D. This CPU has been tested on the Gigabyte X670E Aorus Master using 32GB of DDR5-6000 CL30 memory. On the drivers front, we used AMD Adrenalin 23.3.2 and Nvidia Game Ready 531.41 drivers.

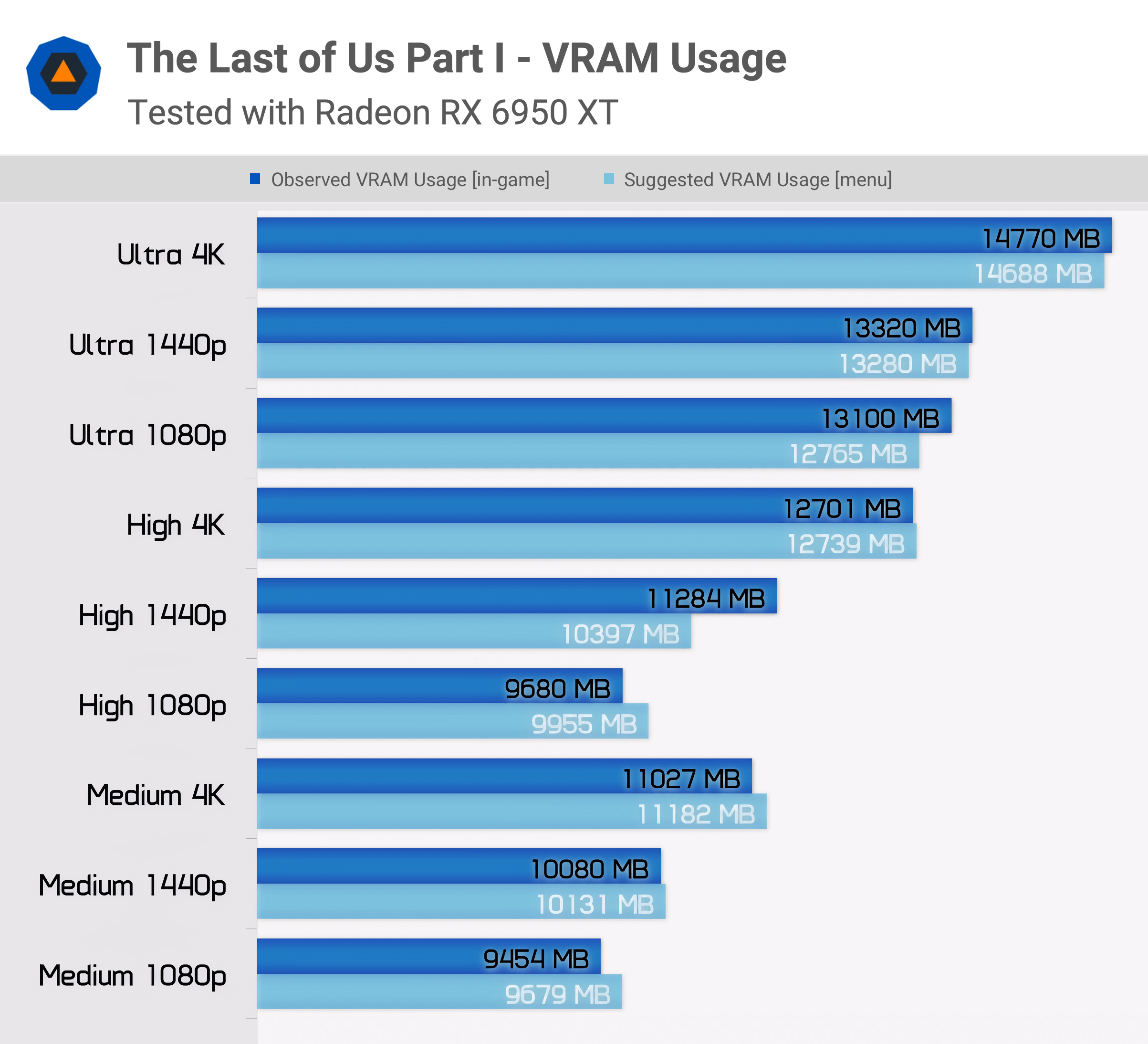

VRAM Usage

First, here's a quick look at VRAM usage at various resolutions and presets. From 4K high to any resolution using the ultra quality preset, we're running well over 12 GB of VRAM and the suggested VRAM requirements in the game menu were bang on with what we saw in our benchmark pass.

At 1440p using high settings we dropped down to around 10 GB of VRAM usage in our benchmark pass, while the game suggested it could use up to 11.3 GB. Once we dropped to 1080p with the high quality preset, the VRAM usage dipped just below 10 GB.

With medium quality settings, we're looking at VRAM usage between 9.5 and 11 GB depending on the resolution. Based on that, it would seem as though up to around 11 GB of VRAM usage is generally okay on an 8GB graphics card, but going beyond 12 GB kills performance.
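As a rough illustration, that rule of thumb can be written down as a small check. This is only a sketch of the observation above for 8GB cards in this one game; the function name, the labels, and the example usage values are hypothetical, and the band between 11 and 12 GB is left as unclear because our testing doesn't pin it down.

```python
# Thresholds taken from the observation above; they apply to 8GB cards
# in this game only and are approximate.
GENERALLY_OKAY_UP_TO_GB = 11.0
KILLS_PERFORMANCE_ABOVE_GB = 12.0


def outlook_for_8gb_card(vram_usage_gb):
    """Classify a VRAM usage figure for an 8GB card (hypothetical helper)."""
    if vram_usage_gb > KILLS_PERFORMANCE_ABOVE_GB:
        return "expect stutter"
    if vram_usage_gb <= GENERALLY_OKAY_UP_TO_GB:
        return "generally okay"
    return "unclear"  # between the two thresholds, not covered by the observation


# Illustrative usage figures, not measured values.
for usage_gb in (10.0, 11.3, 12.5):
    print(f"{usage_gb:.1f} GB -> {outlook_for_8gb_card(usage_gb)}")
```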

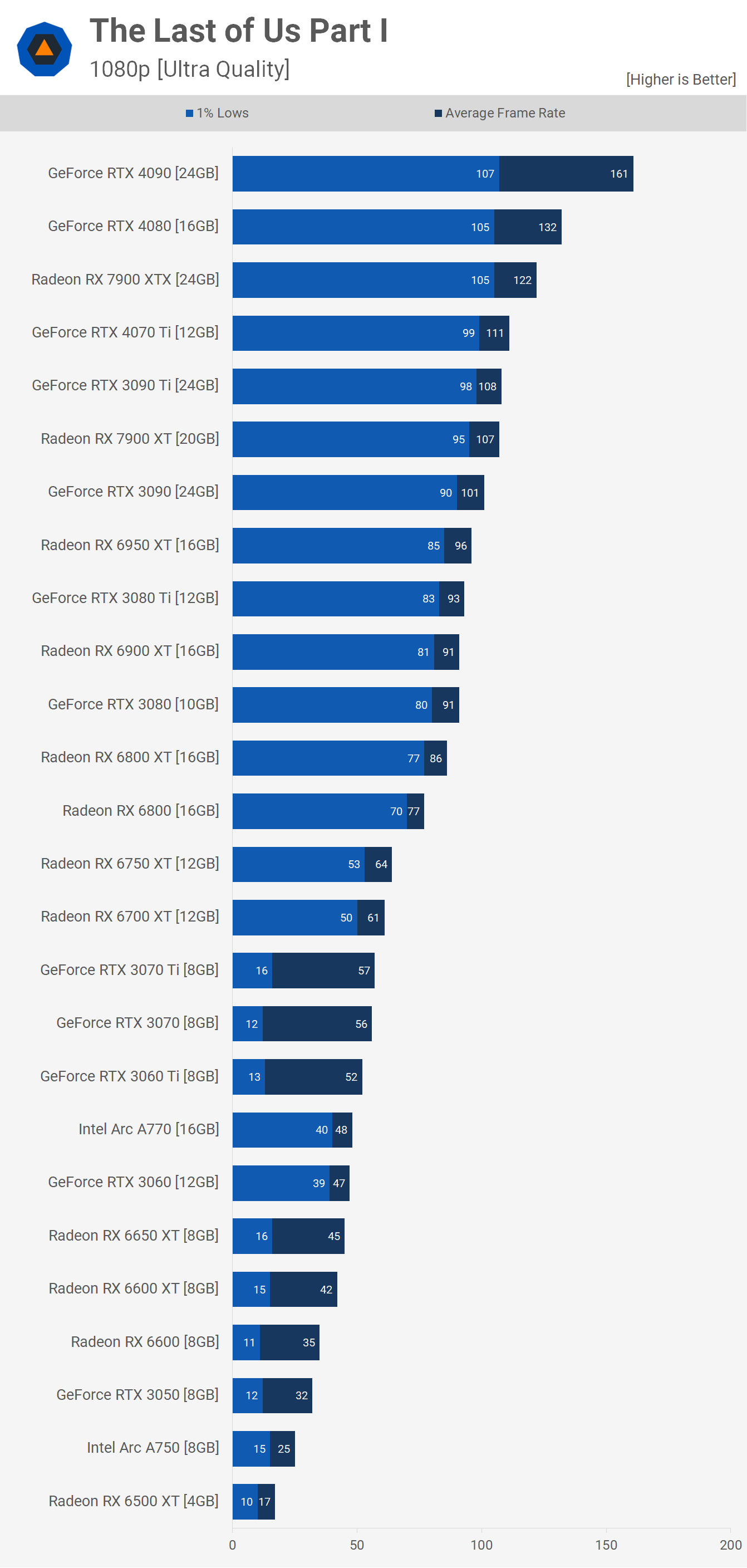

Ultra Quality Benchmarks

Here's the 1080p ultra data and we have a few interesting things to note. First, there appears to be some kind of system bottleneck, limiting 1% low performance on the RTX 4090, probably a CPU limitation, or it could just be an issue with the game that needs to be addressed. In any case, the RTX 4090 pumped out 161 fps at 1080p, though with 107 fps for the 1% lows it was comparable to the RTX 4080 and Radeon RX 7900 XTX in that regard.

The RTX 4070 Ti and 7900 XT delivered comparable results as the GeForce GPU was just 4% faster, and they were split by the RTX 3090 Ti. The RTX 3090 and 6950 XT were neck and neck, as were the 6800 XT and RTX 3080.

Where things get messy for Nvidia is in the previous generation mid-range comparisons. The Radeon RX 6800, for example, averaged 77 fps whereas the RTX 3070 managed just 56 fps, making the Radeon GPU 38% faster. But worse than that were the horrible and frankly unplayable 1% lows of the 8 GB RTX 3070.
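For anyone wanting to check the percentage margins quoted throughout, the arithmetic is simply the ratio of the two averages. Here's a minimal sketch using the RX 6800 and RTX 3070 averages above; the helper name is our own for illustration.

```python
def percent_faster(fps_a, fps_b):
    """How much faster A is than B, expressed as a percentage of B."""
    if fps_b <= 0:
        raise ValueError("fps_b must be positive")
    return (fps_a / fps_b - 1.0) * 100.0


rx_6800_avg = 77   # fps, 1080p ultra
rtx_3070_avg = 56  # fps, 1080p ultra
print(f"RX 6800 is {percent_faster(rx_6800_avg, rtx_3070_avg):.1f}% faster")
```

That works out to 37.5%, which rounds to the 38% margin quoted above.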

This means gamers will receive a better gaming experience with the Intel Arc A770, RTX 3060, or in particular the Radeon 6700 XT and 6750 XT, as all these models feature 12 GB or more VRAM.

Meanwhile, any Radeon or Arc GPU with 8 GB of VRAM or less struggled, resulting in constant stutters. This graph clearly demonstrates why so many users are complaining about stuttering issues in The Last of Us Part I: 28% of all Steam users run a graphics card with 8 GB of VRAM, with the second largest bracket being 6 GB. Just 10% of all Steam users have a 12 GB graphics card, 3% have 10GB, less than a percent have 16 GB and 1.5% have 24 GB. This is quite clearly the issue for those trying to play the game using ultra quality settings.

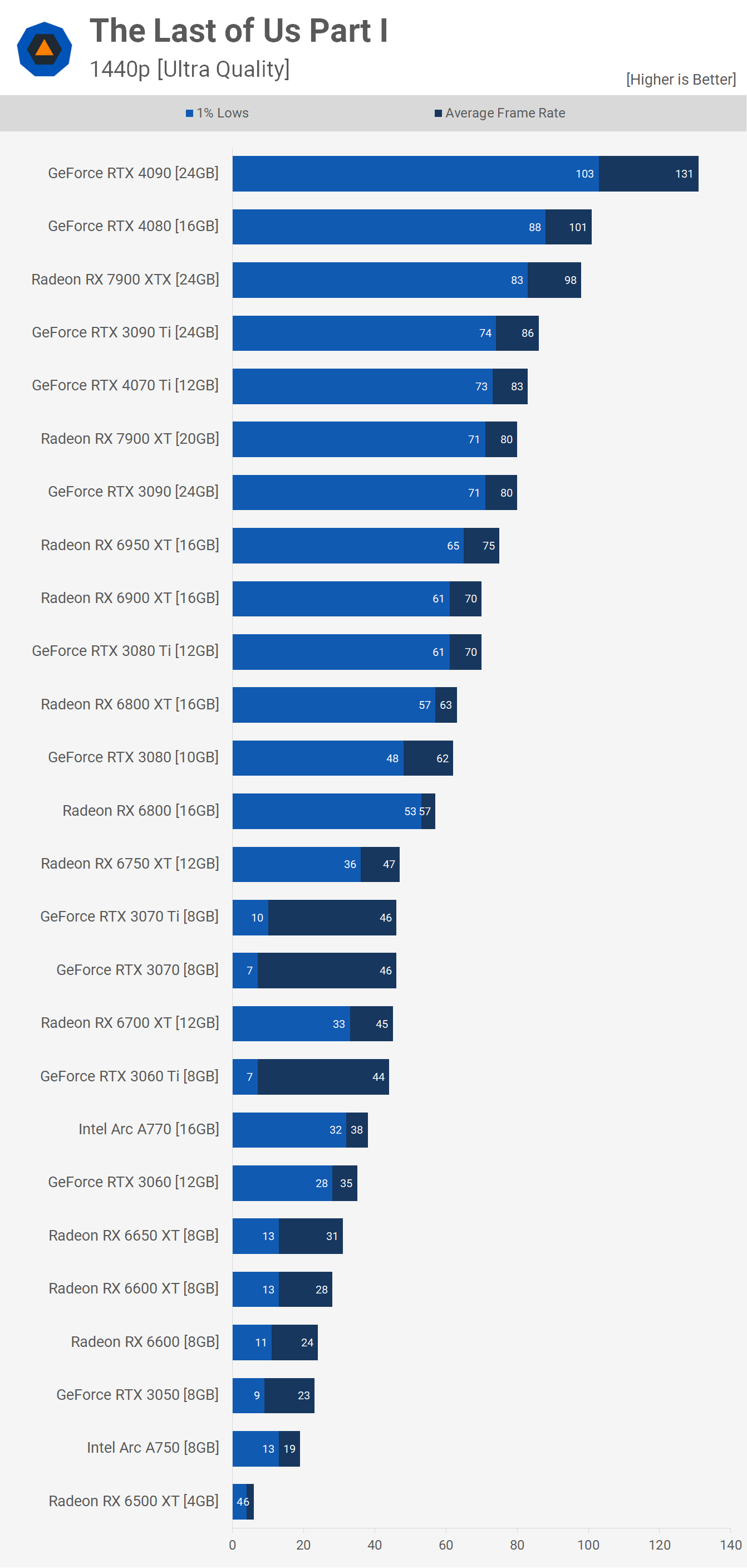

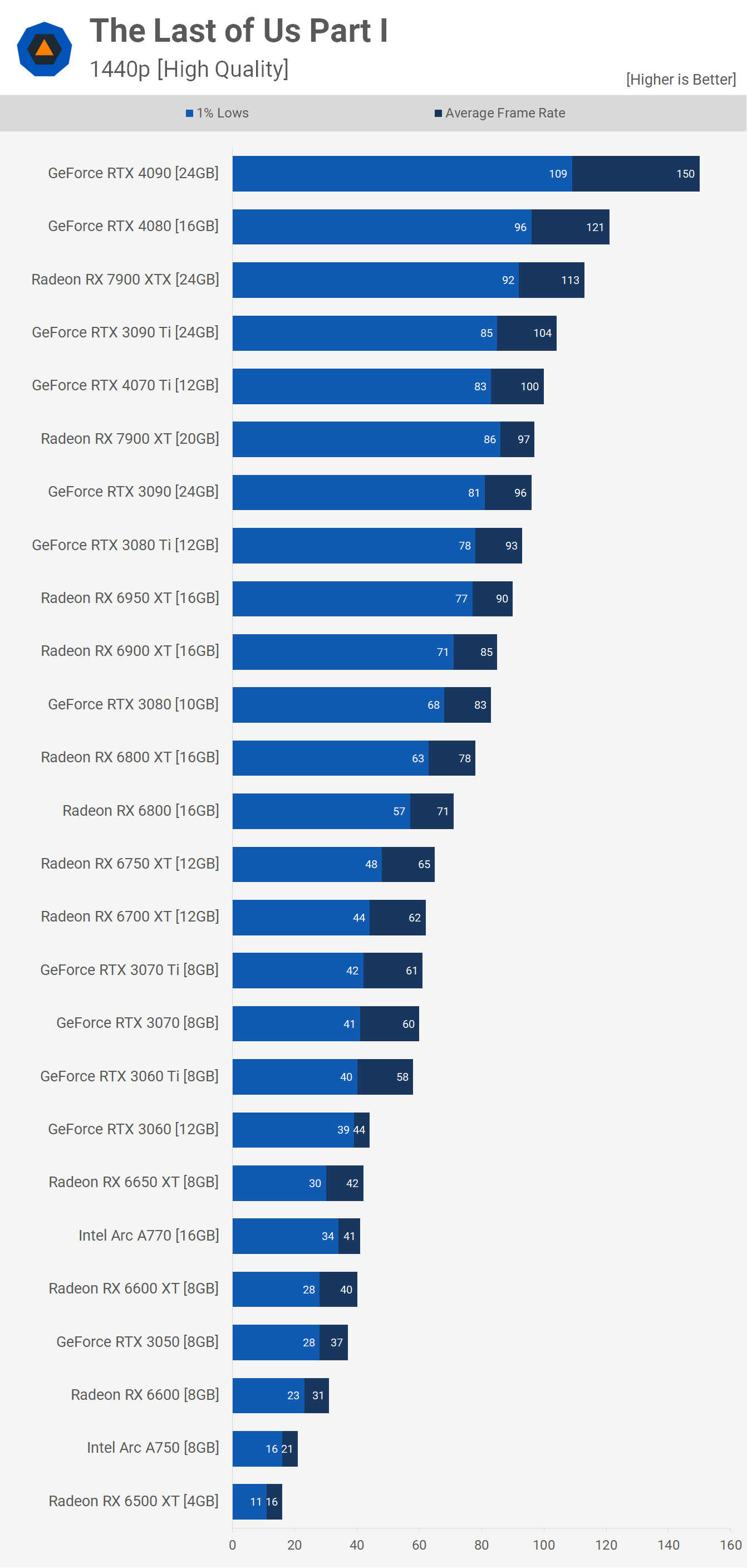

Moving to 1440p allows the RTX 4090 to pull away from the RTX 4080, even when looking at the 1% lows. The RTX 4080 was slightly faster than the 7900 XTX again, though they are close, as are the 4070 Ti and 7900 XT.

The GeForce RTX 3080 10 GB still performed quite well though 1% lows did slip when compared to the Radeon 6800 XT, and this is without question a VRAM issue.

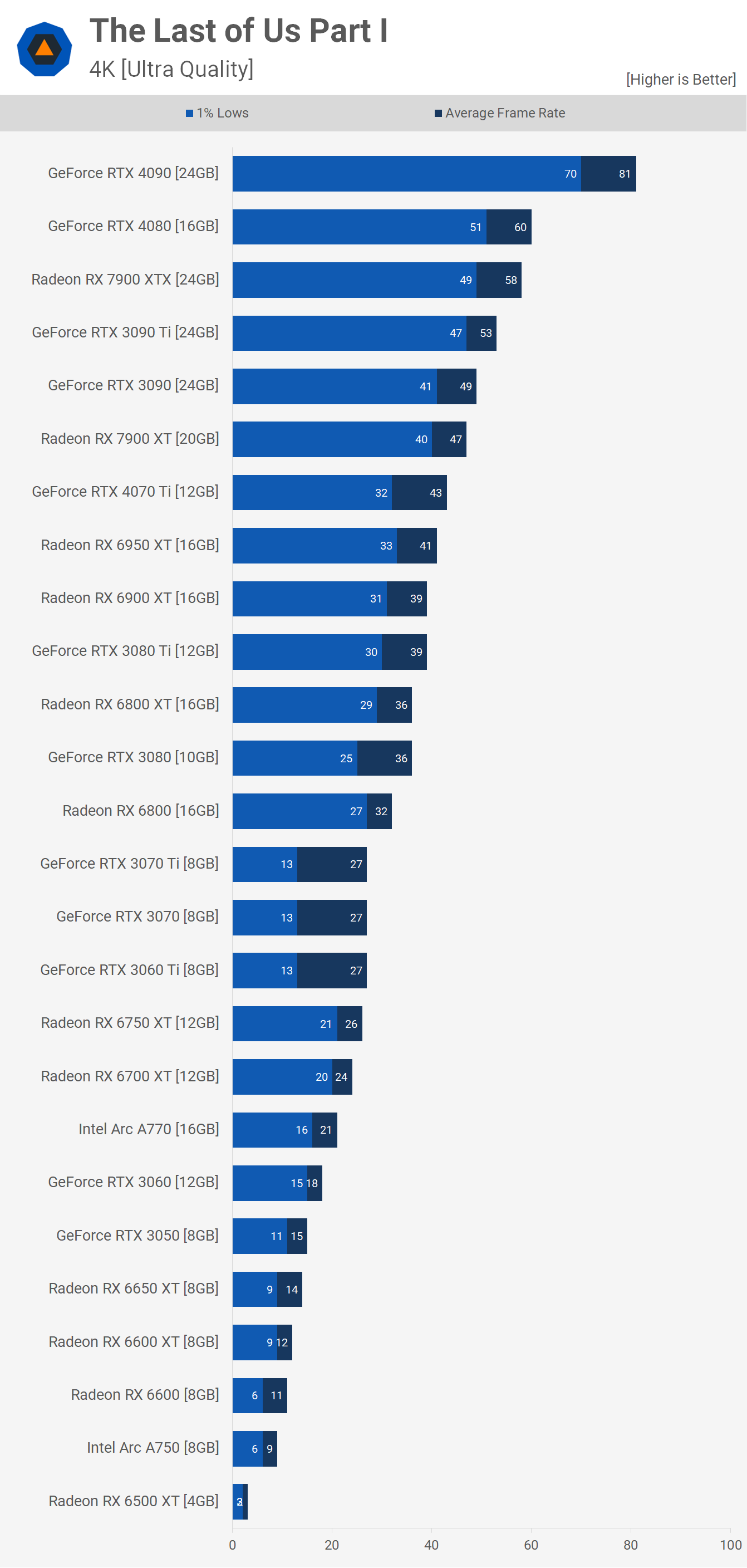

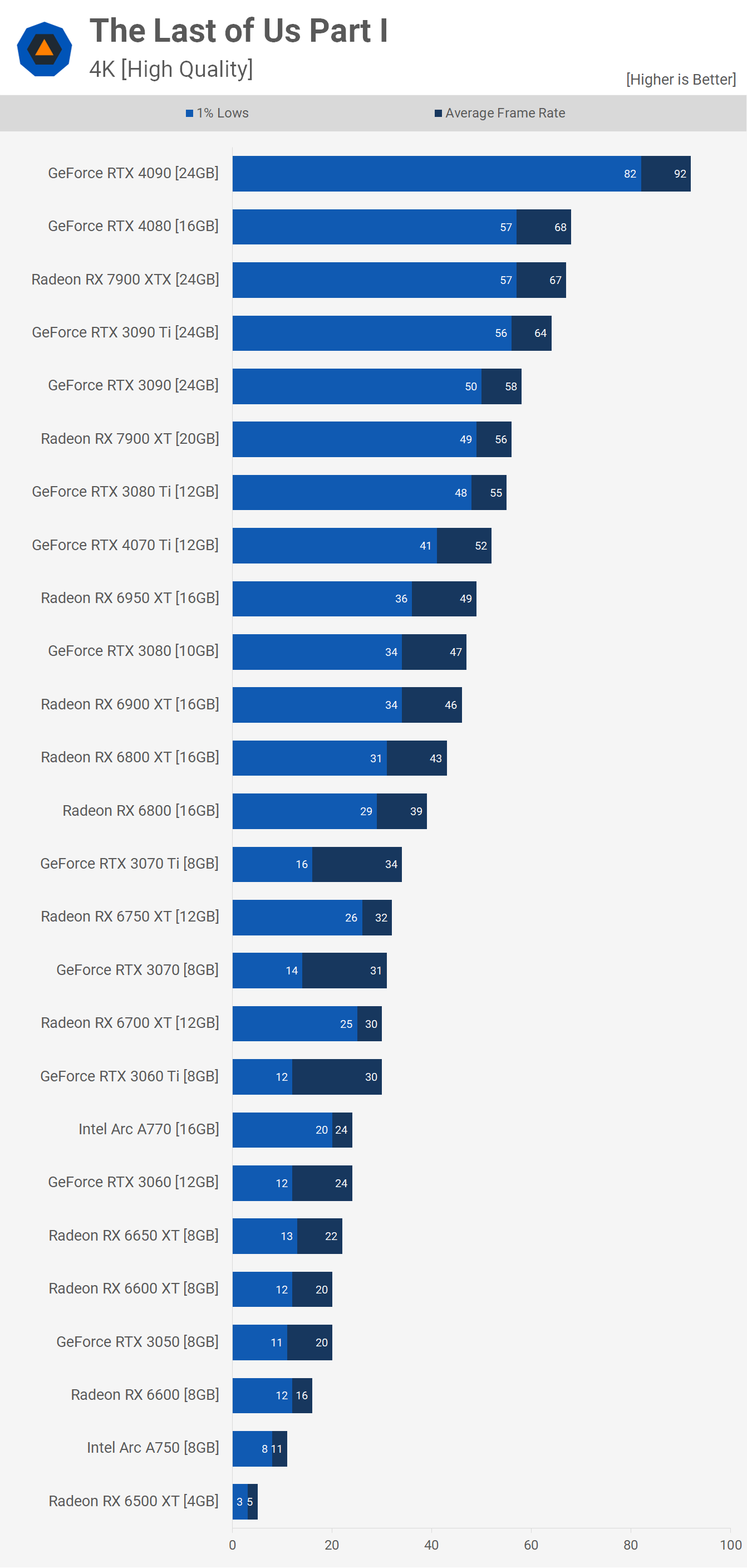

Then at 4K you'll ideally want a GeForce RTX 4090 - the flagship GeForce GPU really shone here with an impressive 81 fps. The RTX 4080 was the only other GPU to reach 60 fps as the Radeon 7900 XTX fell short with 58 fps. The RTX 3090 Ti managed 53 fps and then we drop down to 47 fps with the 7900 XT, which is certainly playable but not a desirable frame rate for an $800 GPU.

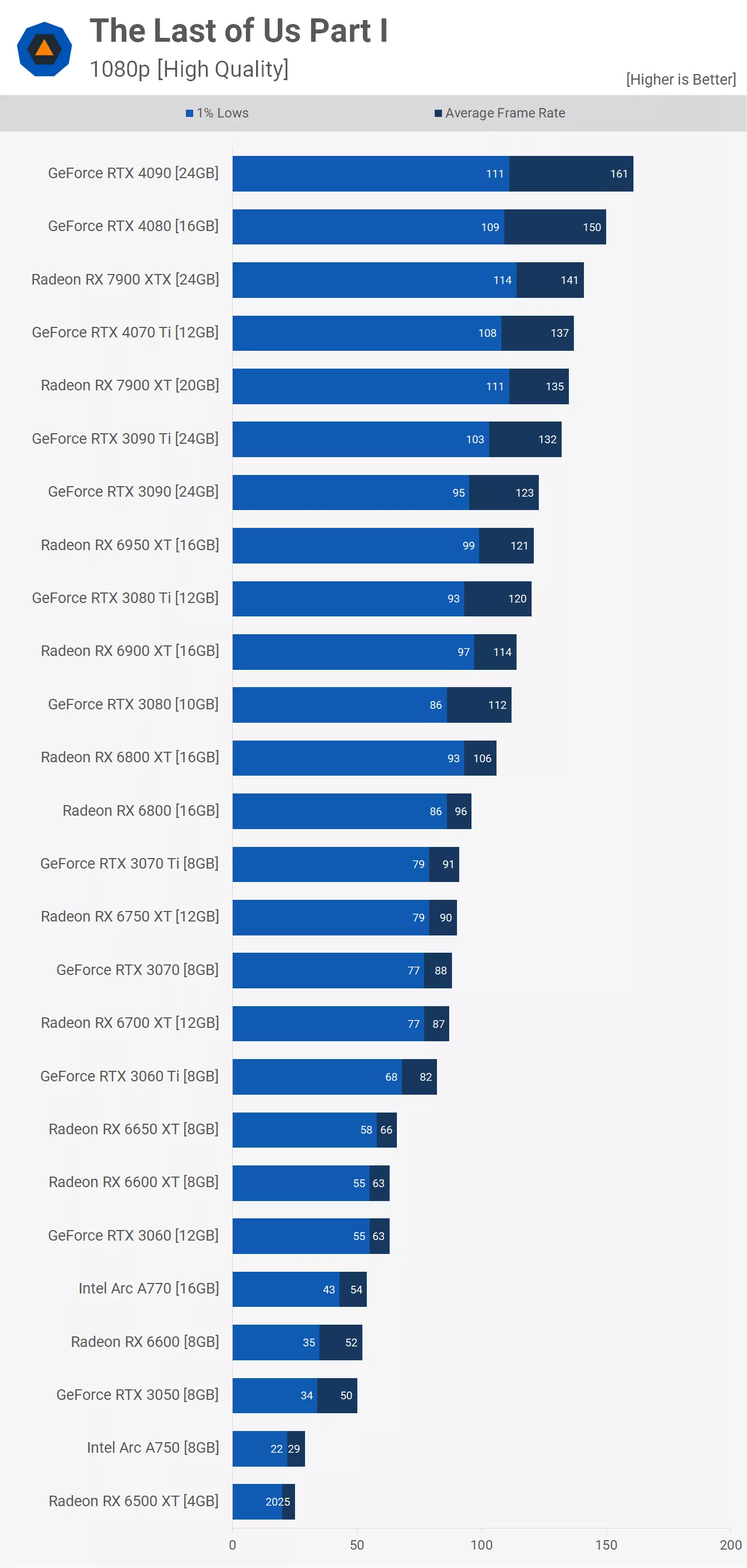

High Quality Benchmarks

Now reducing the graphics quality preset to high, we find all tested graphics cards worked quite well at 1080p, at least from the RTX 3050 and up. For some reason, the Intel Arc A750 is pretty broken here. It was tested with the same driver as the A770, so maybe this is a VRAM issue. Perhaps memory management isn't as well optimised on Arc as it is on GeForce and Radeon, or it could just be a bug...

The good news is you can play the game using either a GeForce RTX 3050 or Radeon RX 6600, and for more consistent performance the RX 6600 XT/6650 XT or RTX 3060 will work well. Then if you want to keep 1% lows above 60 fps, the RTX 3060 Ti or 6700 XT will do the trick.

Moving to 1440p will require quite a bit more GPU power. Realistically you'll want an RTX 3060 Ti or 6700 XT for around 60 fps on average. Then if you want to keep 1% lows above 60 fps, you'll need either a Radeon 6800 XT or GeForce RTX 3080.

For playing at 4K with high settings we're once again running into VRAM issues with 8 GB graphics cards, but the 10GB RTX 3080 seems fine with 1% lows of 34 fps and an average frame rate of 47 fps. Not exactly mind-blowing performance, but it's certainly playable.

Ideally you will want an RTX 3090 Ti, 4080, 4090 or 7900 XT. We found it surprising how poorly the RTX 4070 Ti performed, only just edging out the 6950 XT, though this only meant the 7900 XT was 8% faster.

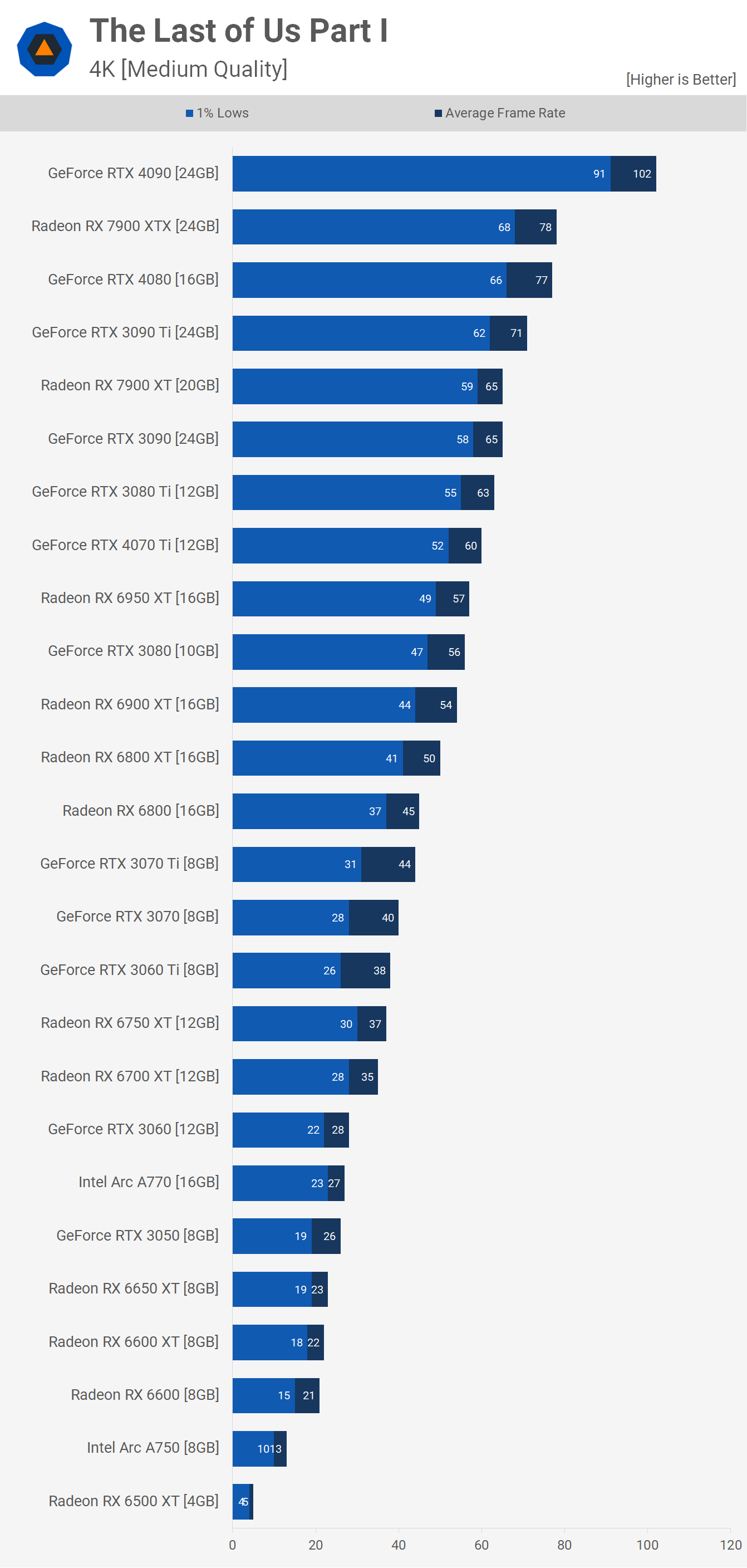

Medium Quality Benchmarks

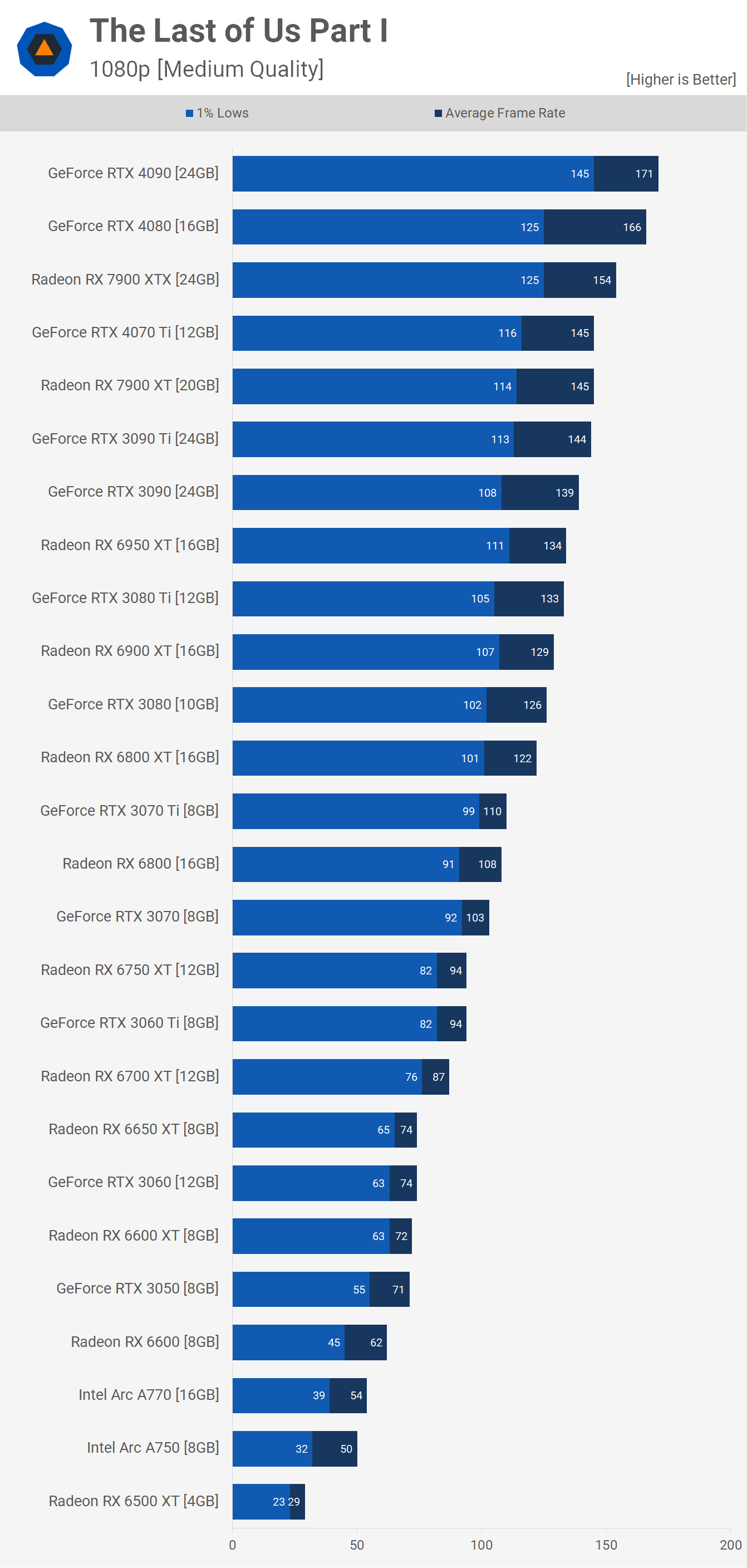

Let's now take a look at performance using the medium quality preset. Basically everything is playable here, except for the Radeon RX 6500 XT, which could only manage 29 fps at 1080p. By the way, using the lowest possible quality settings at 1080p saw the 6500 XT render just over 40 fps. What a garbage product.

For an average of 60 fps you'll only require a Radeon RX 6600 at 1080p, and to keep 1% lows over 60 fps the 6600 XT or RTX 3060 will suffice. The game still looks very impressive using the medium quality preset, so if you are suffering from stuttering issues, we'd certainly recommend trying the medium preset.

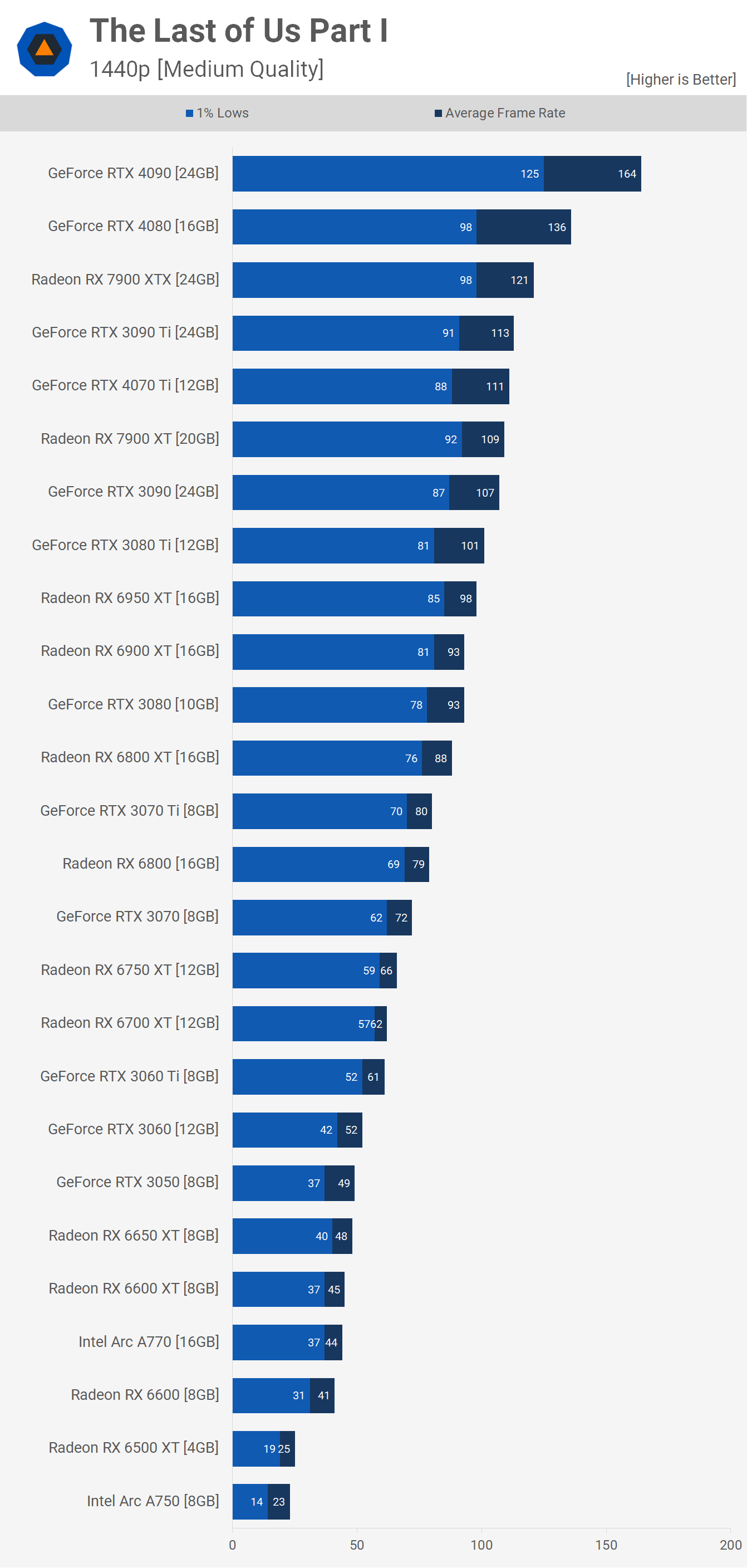

Even at 1440p, it's easy to achieve 60 fps performance: a GeForce RTX 3060 Ti or Radeon 6700 XT will work, and frame time performance was excellent, even with 8GB of VRAM. Oddly though, the Arc A750 tanked in performance, and we're not sure what the issue is there.

And of course, here's a look at 4K. The GeForce RTX 4090 pumped out over 100 fps on average, making it 31% faster than the 7900 XTX. Meanwhile, the flagship Radeon GPU was a few frames faster than the RTX 4080, which was just 8% faster than the RTX 3090 Ti.

The Radeon 7900 XT and GeForce RTX 3090 were good for 65 fps, while the RTX 3080 Ti also snuck past 60 fps.

What We Learned

Using slightly dialed-down quality settings, The Last of Us Part I appears quite easy to run, and provided you can manage the game's VRAM requirements, you shouldn't have any issues. It's remarkable how well a previous generation GPU such as the Radeon RX 6800 plays this game. It's buttery smooth, and that's probably not something you'd expect to find after all the online controversy.

Of course, you could certainly argue that the game shouldn't require more than 8 GB of VRAM and point to other games that look as good or better with less extreme requirements, and you're certainly welcome to that opinion. That said, we don't agree. The game looks stunning, the texture quality is excellent, the geometry is impressive, and we're talking about VRAM requirements for the highest quality preset.

The game does scale well using lower quality settings and we'd say it's silly to limit VRAM usage for high-end GPUs. If you can improve visuals through higher quality textures, and high levels of detail, then by all means do so.

It's not like the game doesn't work on 8 GB graphics cards. It does, and very well, but not with the ultra quality preset. This isn't the first time we've seen this happen and it's going to become commonplace - 8 GB of VRAM is entry-level now, and while we feel for those who spent big on an RTX 3070 Ti, for example, the writing was on the wall and we've been warning of this for a while now. Then again, sacrifice a little texture quality and the game will run well on this kind of GPU.

If you are experiencing stuttering issues, check the suggested VRAM requirements in the menu and make sure you're not exceeding them, as doing so can also lead to crashing. If you stay within your VRAM budget, have a half-decent CPU and at least 16 GB of RAM, the game should play very well.