The most popular desktop CPU series of the past five years has been AMD's Ryzen 5 range, with parts like the Ryzen 5 3600 selling in incredible volumes. Following that, the 5600X was also very popular, and despite a delayed release, the non-X 5600 proved to be another highly popular part.

The Ryzen 5600X didn't exactly thrill us with its $300 MSRP, but compared to competing parts, it still represented great value. As is often the case, AMD was quick to apply discounts, and eventually, almost a year and a half later, they released the standard 5600 chip (non-X) at $200. It was quickly discounted, dropping as low as $120-130.

Needless to say, the Ryzen 5 5600 and 5600X were always competitively priced and presented excellent value for gamers on a tight budget. However, how well have they aged, and should you have bought an 8 or even 16-core model instead?

AMD Zen 3 in Context

Despite the 5600 series offering gamers excellent value, not everyone was convinced. Some claimed the 5600 series was a poor investment, recommending the Ryzen 7 5800X instead, or the 5700X, which was released alongside the 5600. Others went as far as saying it was the Ryzen 9 5950X or bust, which seems borderline crazy, but maybe they were right. It's been about three and a half years since Zen 3 launched, so today we want to see how these CPUs compare in modern games.

Obviously, the Ryzen 5 5600X is going to be slower than the R7 5800X, and therefore the 5950X, but the question is, how much slower, and how usable is it? It's also worth looking back at pricing because the 5950X was never affordable. A strong case could be made for the 5700X as a gaming CPU, and we certainly made that case back in the day.

As we saw it, the smart options for gamers were the Ryzen 5 5600 and Ryzen 7 5700X, both offering exceptional value at their release prices, which later dropped further, as mentioned. By early 2023, the 5600 was much better value than the 5600X, and the 5700X was much better value than the 5800X, both offering a 24% discount for essentially the same level of performance.

Meanwhile, at $500, the Ryzen 9 5950X cost 160% more than the 5700X and a bit over 280% more than the 5600.
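Only the 5950X's $500 price is quoted here, so the prices it is compared against are implicit. As a rough sketch, the arithmetic below backs those reference prices out of the "160% more" and "280% more" figures. The function names are illustrative, and the results are approximations, since the quoted percentages are rounded.

```python
def percent_more(price: float, reference: float) -> float:
    """How much more `price` costs than `reference`, as a percentage."""
    return (price / reference - 1.0) * 100.0


def implied_price(price: float, pct_more: float) -> float:
    """Back out the reference price from a 'costs X% more' claim."""
    return price / (1.0 + pct_more / 100.0)


R9_5950X = 500.0  # early-2023 price quoted in the text

# Reference prices implied by "160% more than the 5700X" and
# "a bit over 280% more than the 5600" (rounded percentages, so approximate).
print(f"Implied 5700X price: ${implied_price(R9_5950X, 160):.0f}")  # prints $192
print(f"Implied 5600 price:  ${implied_price(R9_5950X, 280):.0f}")  # prints $132
```

Because the 5950X was "a bit over" 280% more, the implied 5600 price is slightly under $132, which lines up with the $120-130 street pricing mentioned earlier.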

With the 5600 and 5600X being virtually identical in terms of performance, and both being unlocked CPUs (and the same applies to the 5700X and 5800X), we won't test all of these CPUs and clutter the data. Instead, we are comparing the 5600X, 5800X, 5950X, and for all those 3D V-Cache lovers, the 5800X3D will also be included. All CPUs were tested using 32GB of DDR4-3600 CL14 memory and the RTX 4090. So let's get into it…

Benchmarks

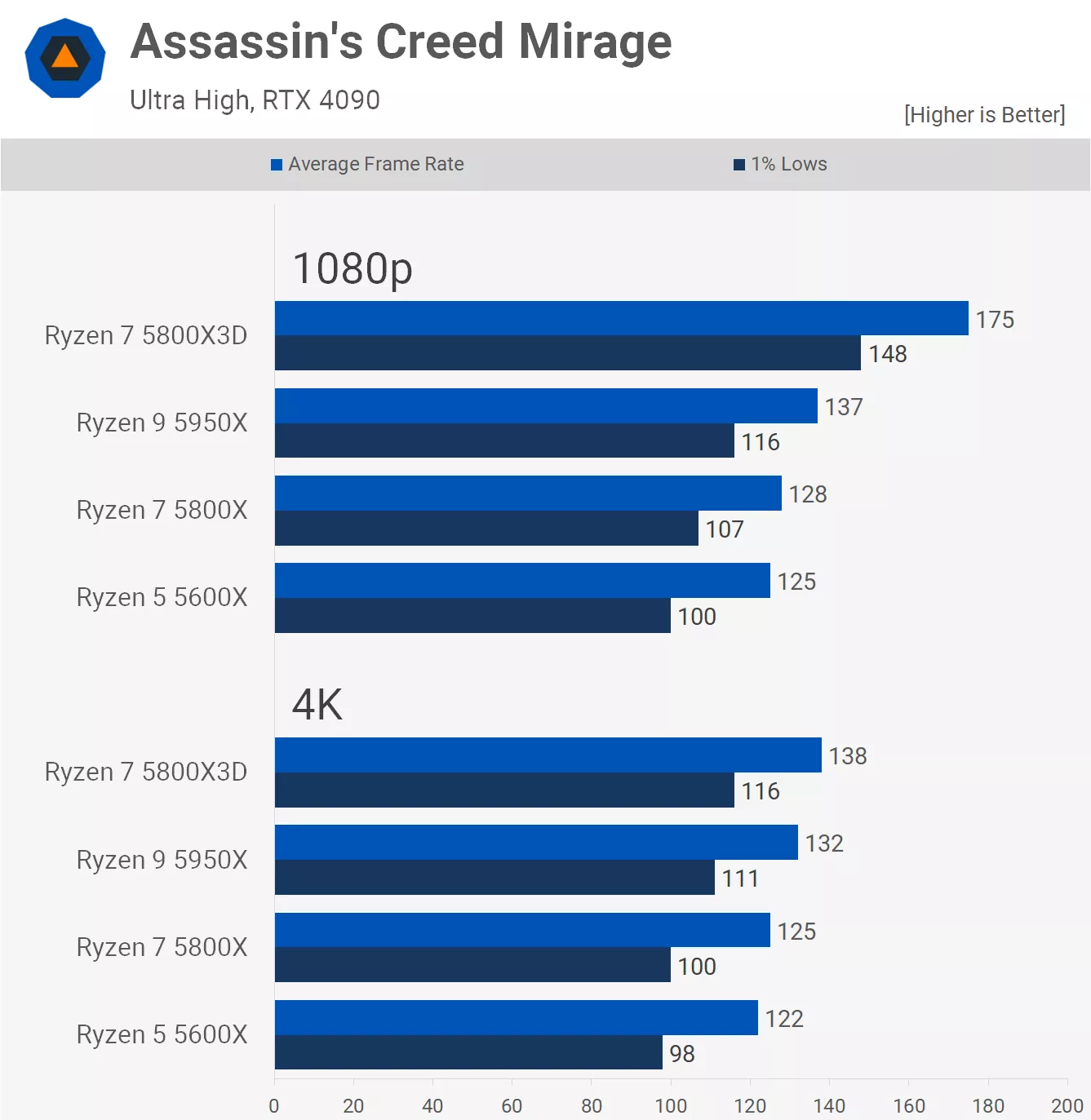

First up, we have Assassin's Creed Mirage. At 1080p, the 5600X is very close to the 5800X, which is what we typically found three years ago: the 5800X was just 2.5% faster for the average frame rate and 7% faster for the 1% lows. The 5950X was faster again, boosting the average frame rate over the 5600X by 10% with a 16% uplift for the 1% lows. That's a nice gain, but not exactly worth paying well over three times more for. The hero here is, of course, the 5800X3D, which was 40% faster than the 5600X.

We included 4K data as well, as this is always heavily requested. Keep in mind that what we're looking at here is mostly the limits of the RTX 4090 using the 'Ultra High' preset at 4K. If you enable upscaling or lower the quality settings, the margins will start to resemble what we see at 1080p.

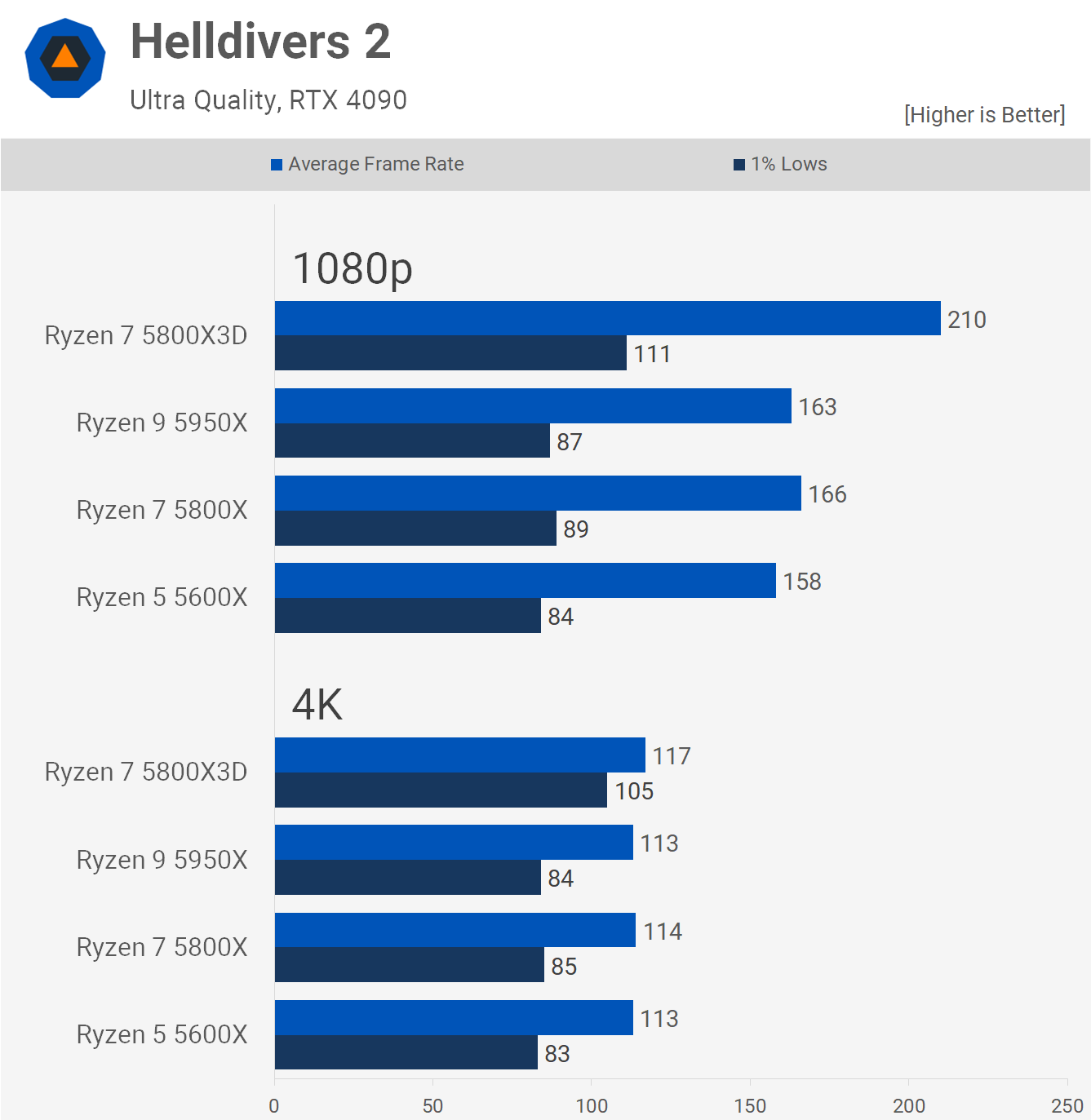

Moving on to Helldivers 2, we see even less of a difference between the 5600X, 5800X, and 5950X. All three parts delivered comparable performance at 1080p. The 5800X3D was much faster, boosting the average frame rate by at least 27%, highlighting the importance of L3 cache over core count in gaming performance.

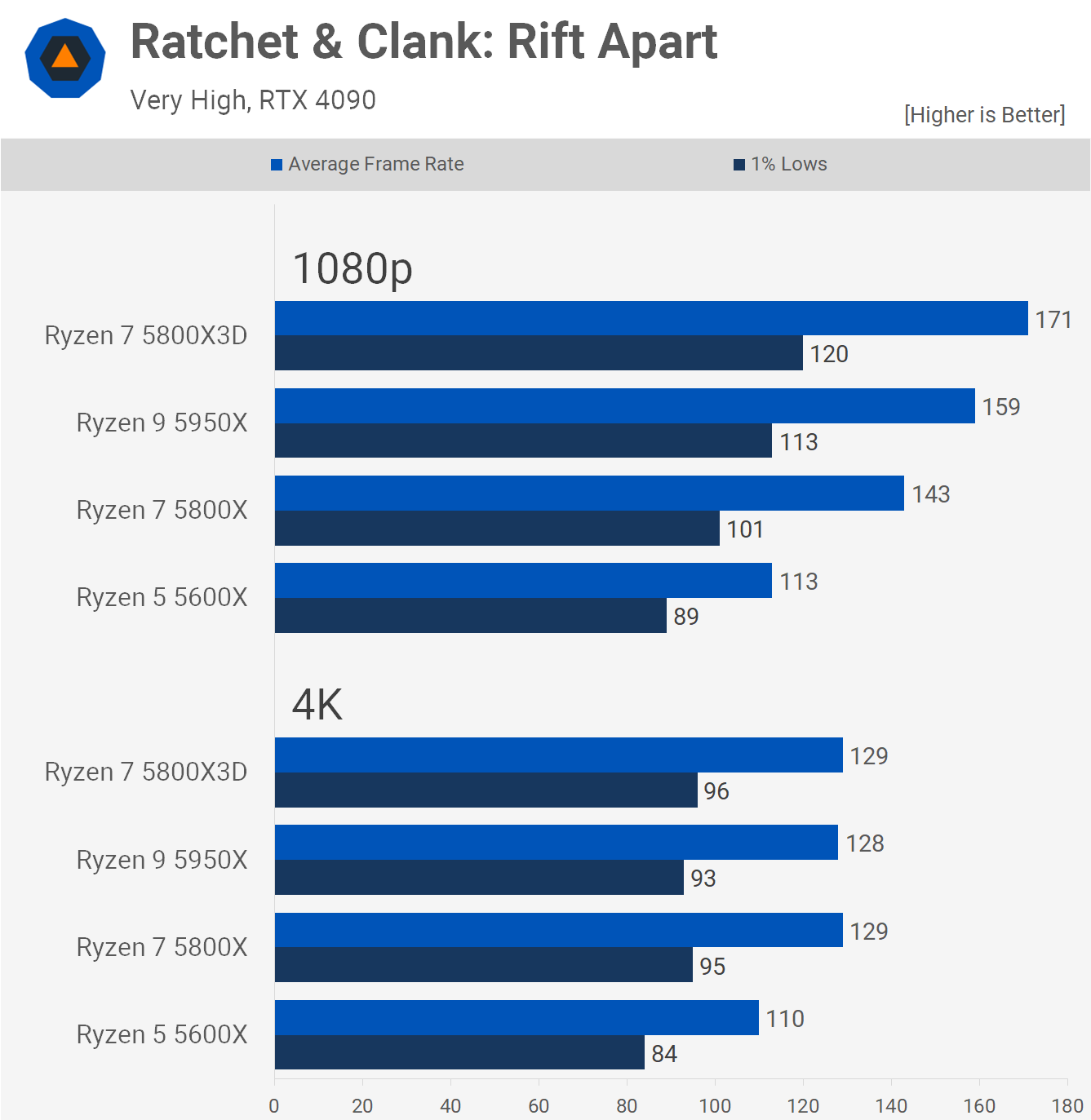

Ratchet & Clank: Rift Apart shows a dramatic performance uplift from the 5600X to the 5800X. Here, the 8-core processor was a massive 27% faster at 1080p, with 13% higher 1% lows, though the 5600X still delivered a solid experience overall.

At 4K, the 5600X was the only CPU that couldn't max out the RTX 4090. However, for a 6-core part that, in its non-X form, cost at most $200, averaging 110 fps is acceptable, and frame time performance was excellent during a 20-minute playthrough with the 5600X.

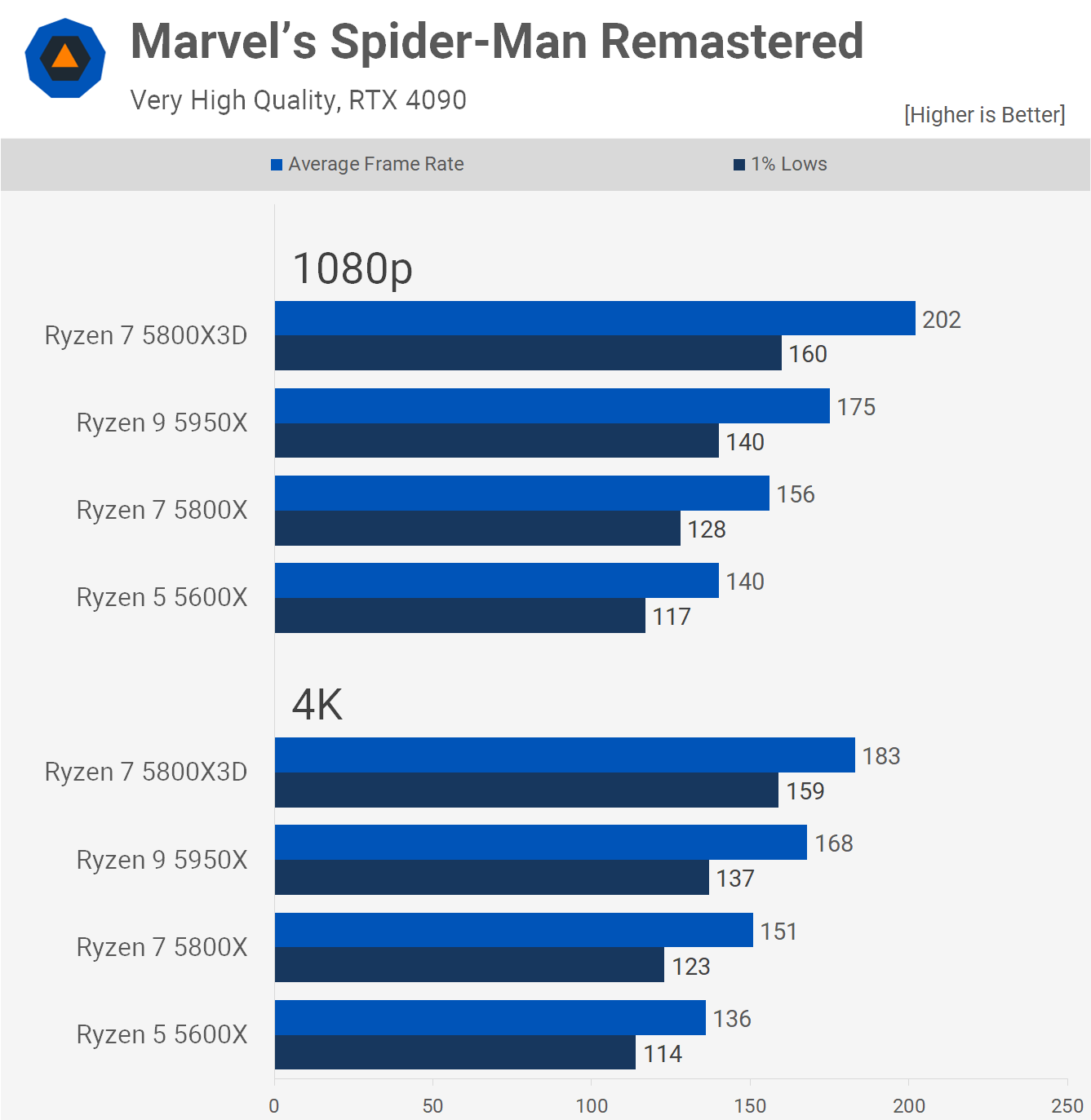

Spider-Man Remastered also sees performance scale up with core count, although L3 cache is far more important for boosting frame rates. In this example, the 5800X was 11% faster than the 5600X, while the 5950X was 25% faster. The 5800X3D was 15% faster than the 5950X and 44% faster than the 5600X.

Although the 5950X was significantly faster, the 6-core 5600X was hardly slow, averaging 140 fps with 117 fps for the 1% lows.

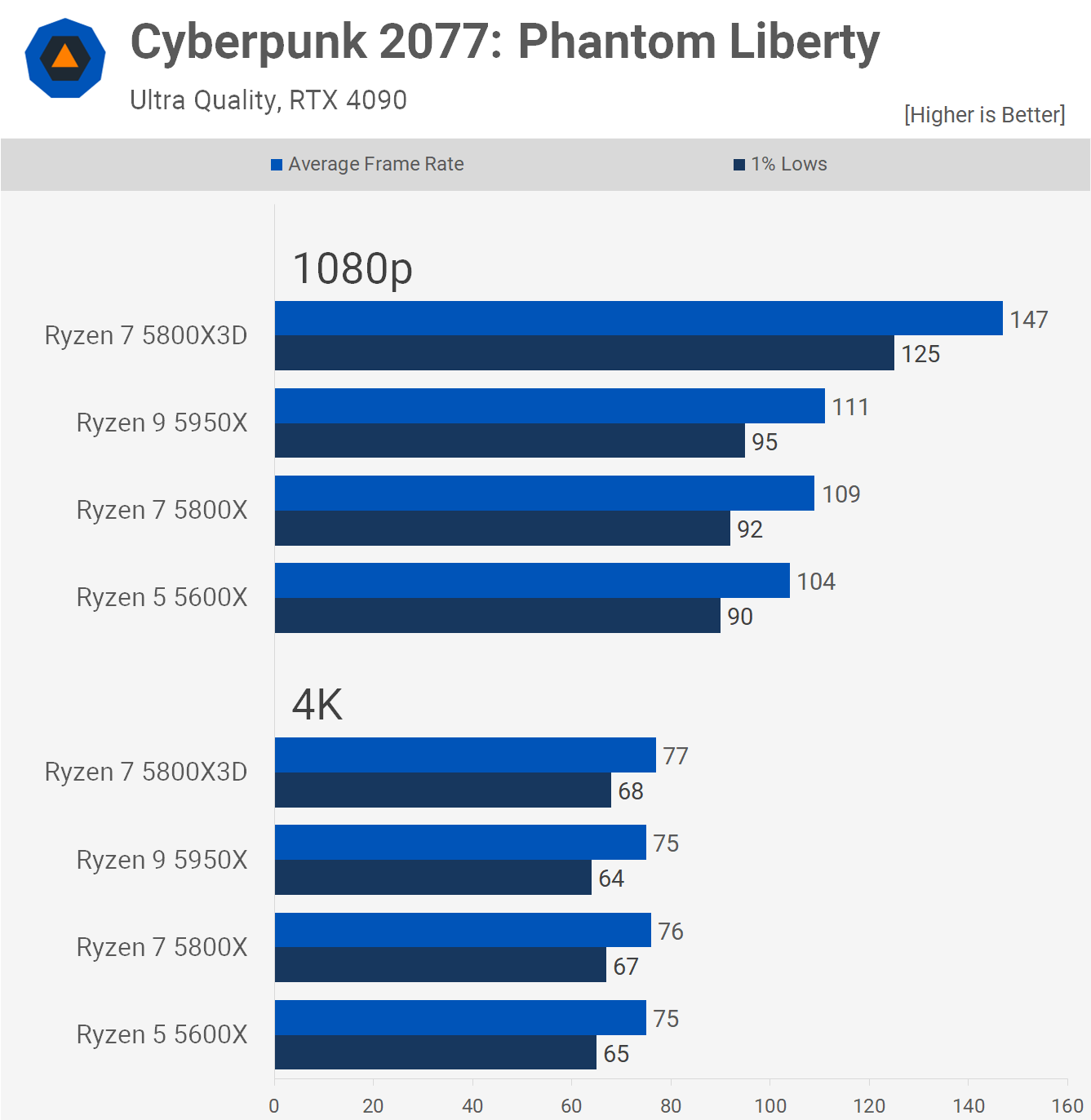

Cyberpunk 2077: Phantom Liberty is a very CPU-intensive title. Despite this, the 5600X, 5800X, and 5950X performed comparably, unlike in Ratchet & Clank. Again, AMD's 3D V-Cache made a significant difference, making the 5800X3D 32% faster than the 5950X. At 4K, we were heavily GPU-limited to just shy of 80 fps, so if you want to reach around 100 fps, enabling upscaling is likely necessary.

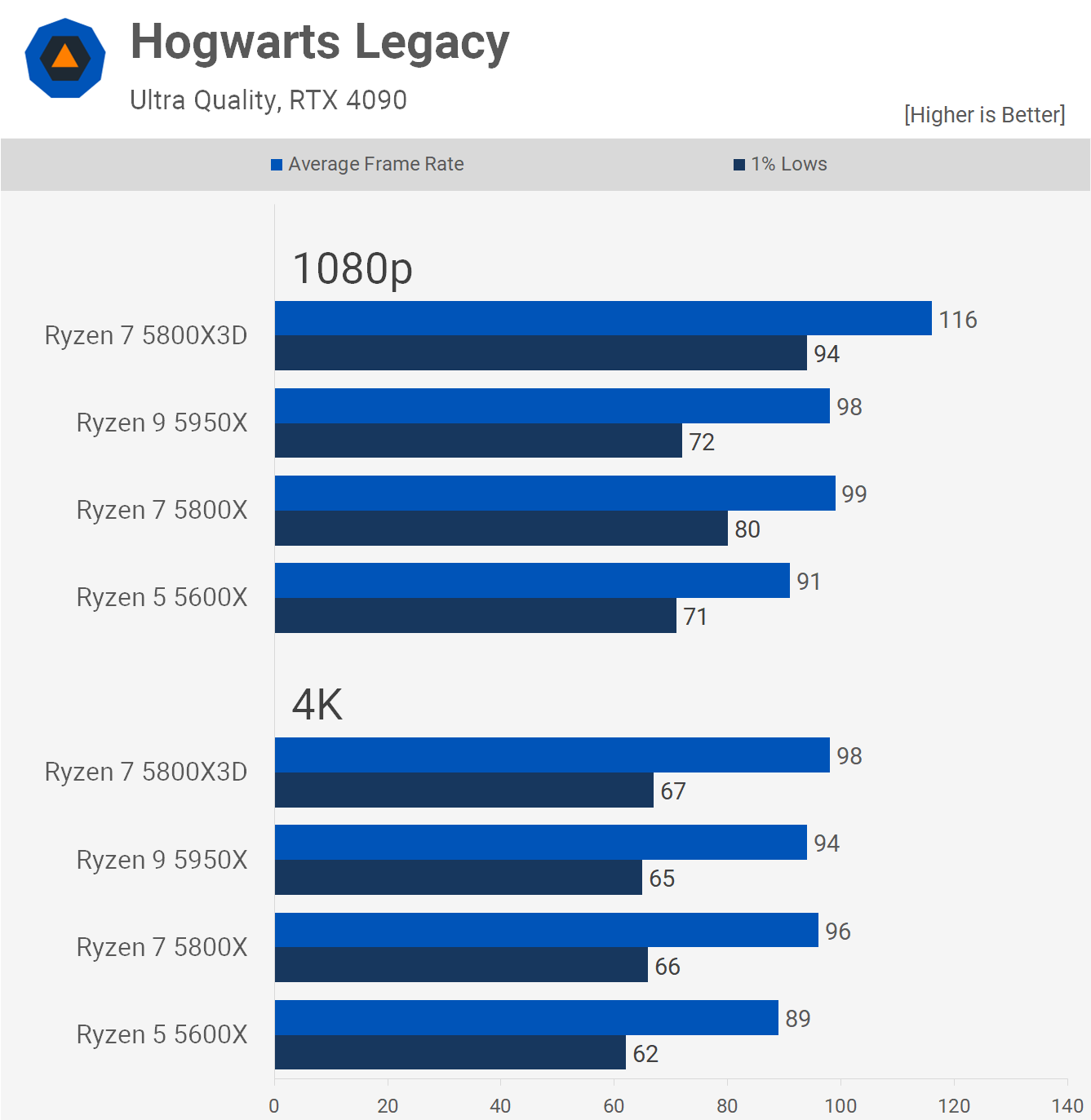

Hogwarts Legacy is another CPU-demanding game. While the 8 and 16-core models are faster, the difference isn't substantial. For example, the 5800X was just 9% faster than the 5600X, while the 5950X offered mixed performance, possibly due to a scheduling issue. While 8-core parts offer better performance, it's hard to say if they provide better value than the 6-core models.

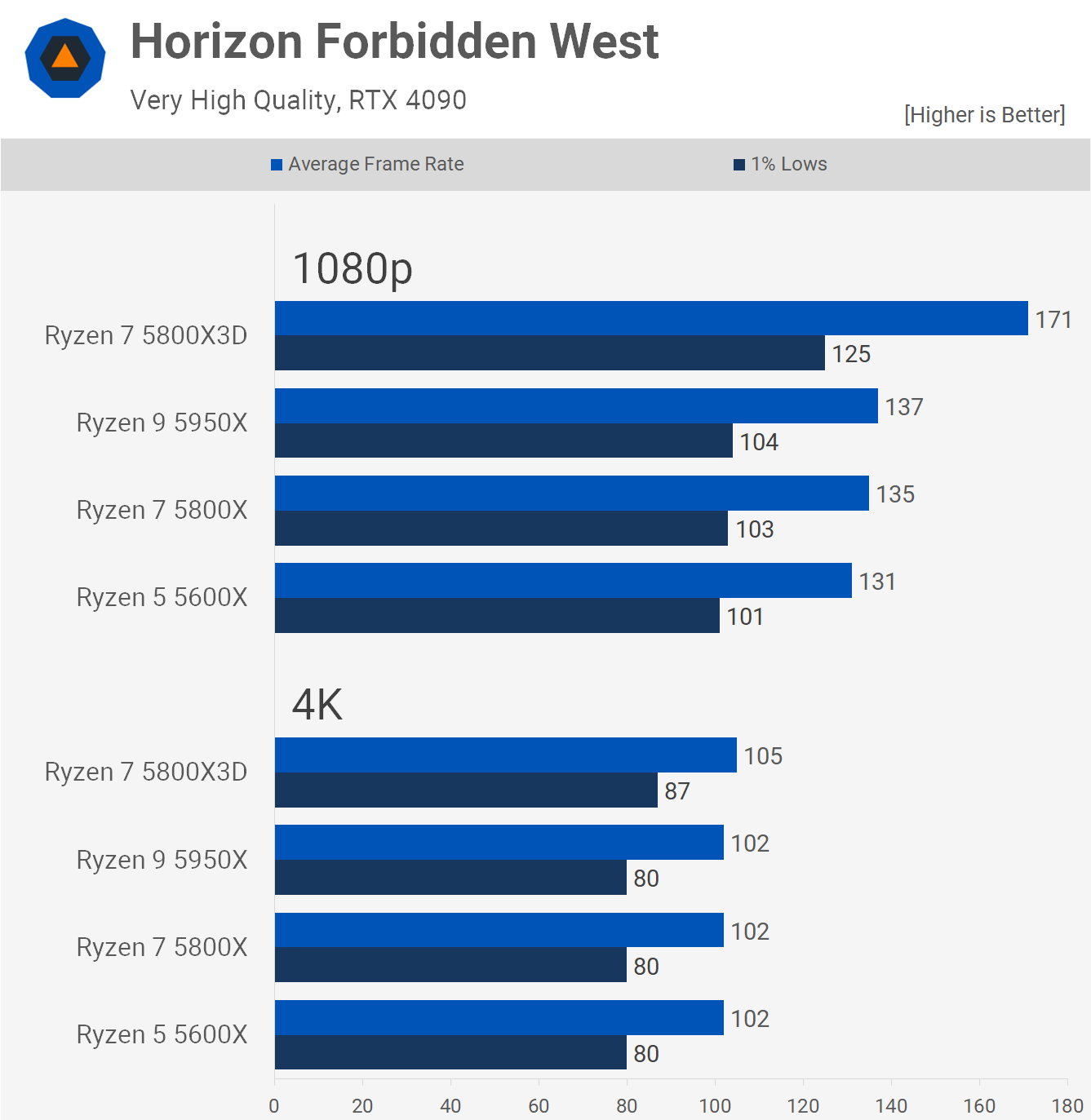

Horizon Forbidden West appears to play as well on the 5600X as it does on the 5800X and 5950X, with a mere 3% increase from the 5600X to the 5800X. Meanwhile, the 5800X3D was up to 25% faster than the 5950X.

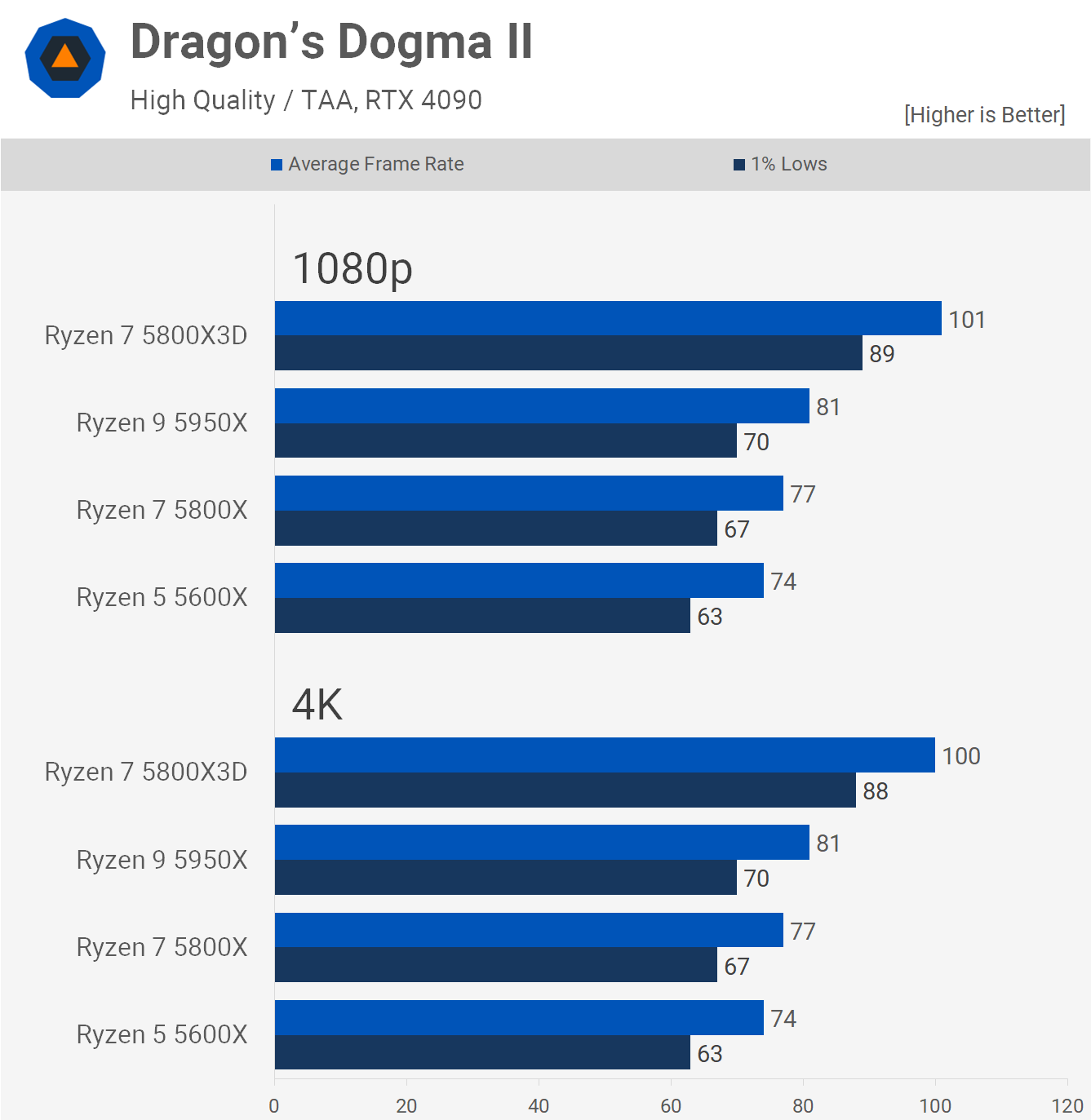

Dragon's Dogma II is known to be a very CPU-bound game, yet the 5800X is just 4% faster than the 5600X, while the 5950X is 9% faster. The 5800X3D, on the other hand, was 36% faster, highlighting the importance of L3 cache. Adding cores helped, but the improvement wasn't significant, and the 5600X was still very usable with just six cores.

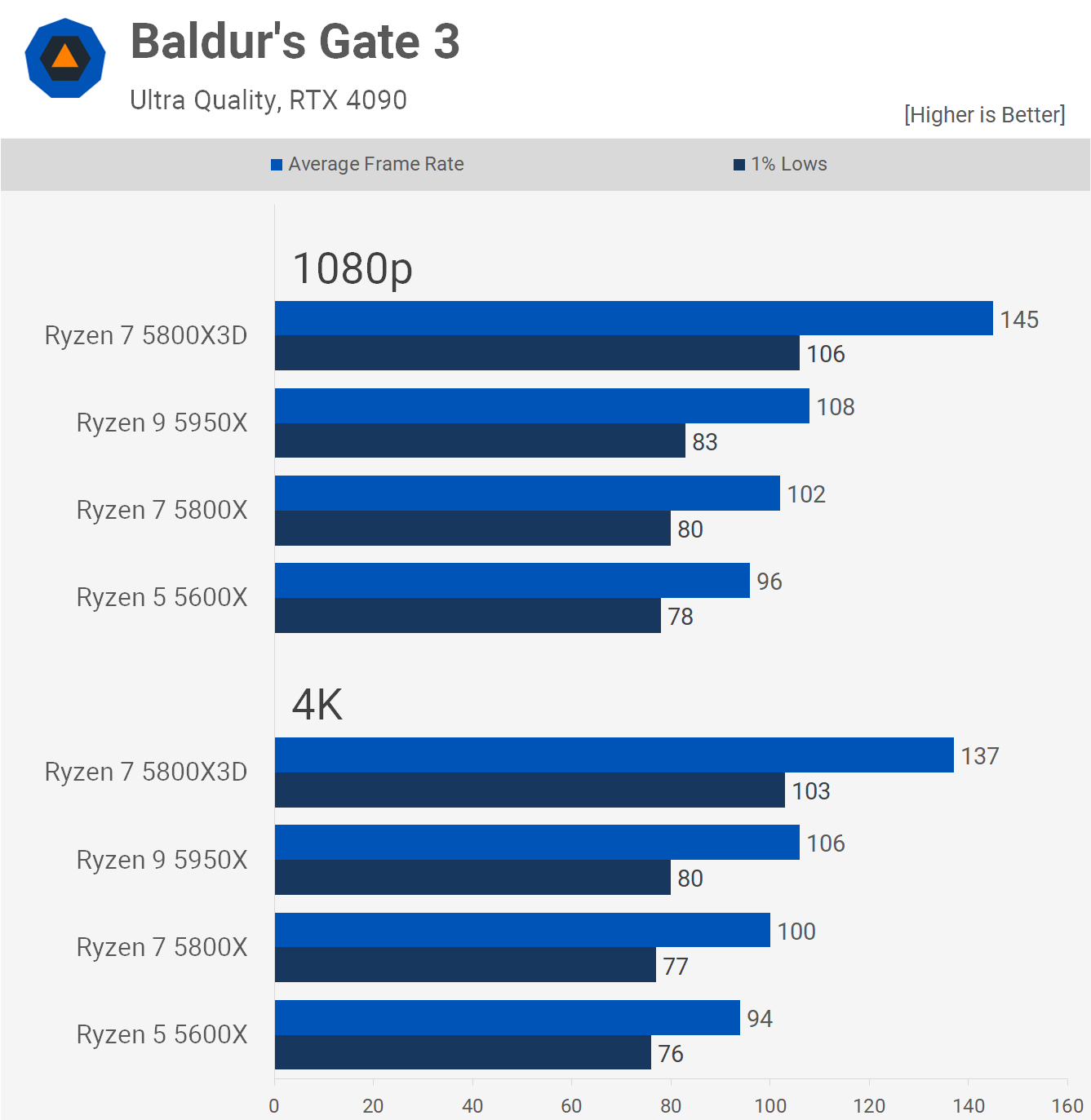

Another game where the 5600X performs very well is Baldur's Gate 3. Here, the 5800X offers just a 6% performance improvement, while the 5950X is 13% faster. While extra cores do lead to a performance improvement, it's not significant. The 3D V-Cache of the 5800X3D boosts performance significantly, making it 51% faster than the 5600X and 42% faster than the 5800X.

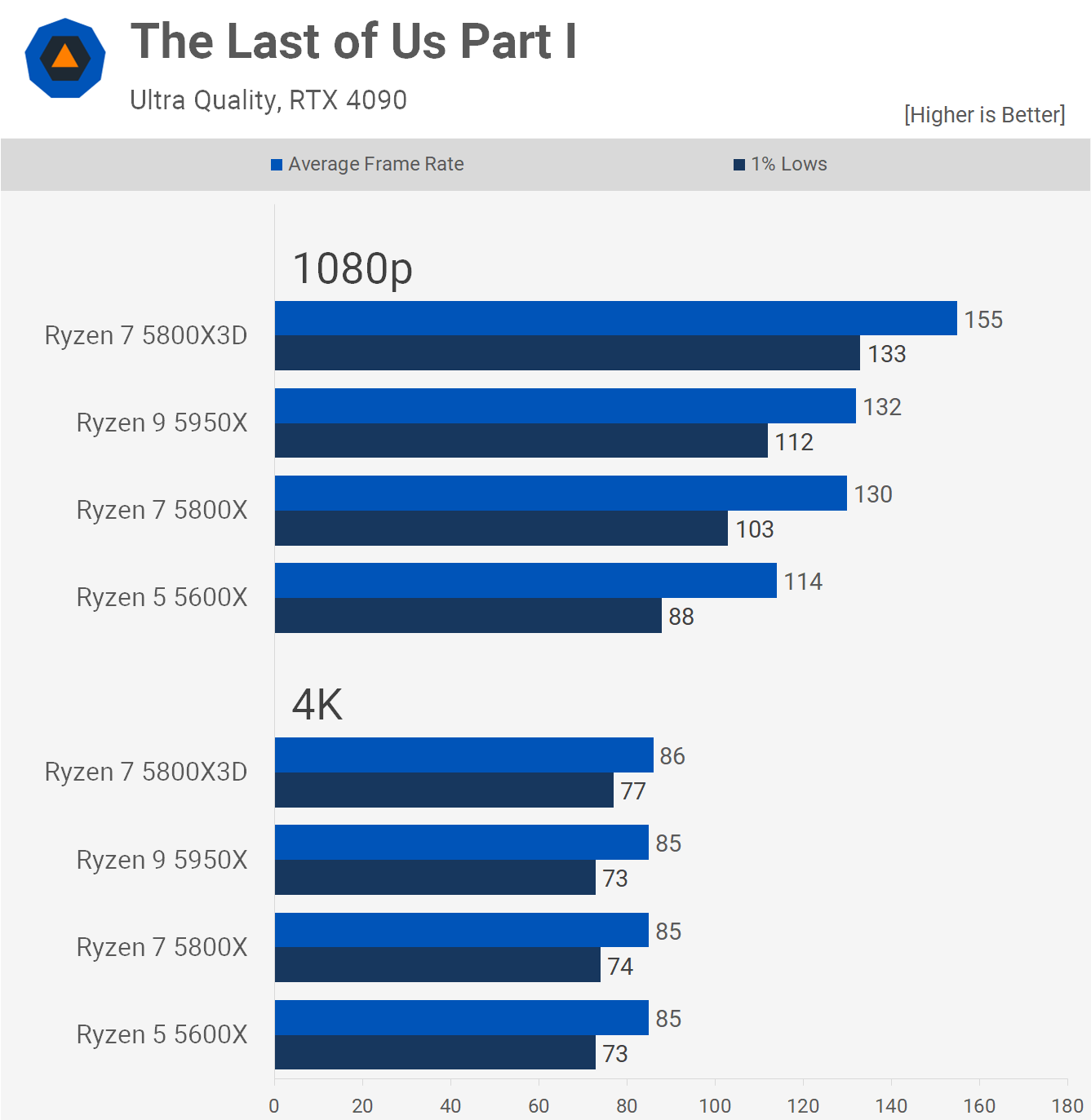

The Last of Us Part I is a CPU-demanding title that leans heavily on the 5600X, resulting in the 5800X being 14% faster. Meanwhile, the 5800X and 5950X were comparable, with the 5800X3D delivering the best performance.

Finally, we have Starfield, another example where the 6-core processor struggles to keep pace with the 5800X. However, performance is still quite good, with 1% lows exceeding 60 fps. The 5800X was 15% faster than the 5600X, while the 5950X was 23% faster.

Taking the Average

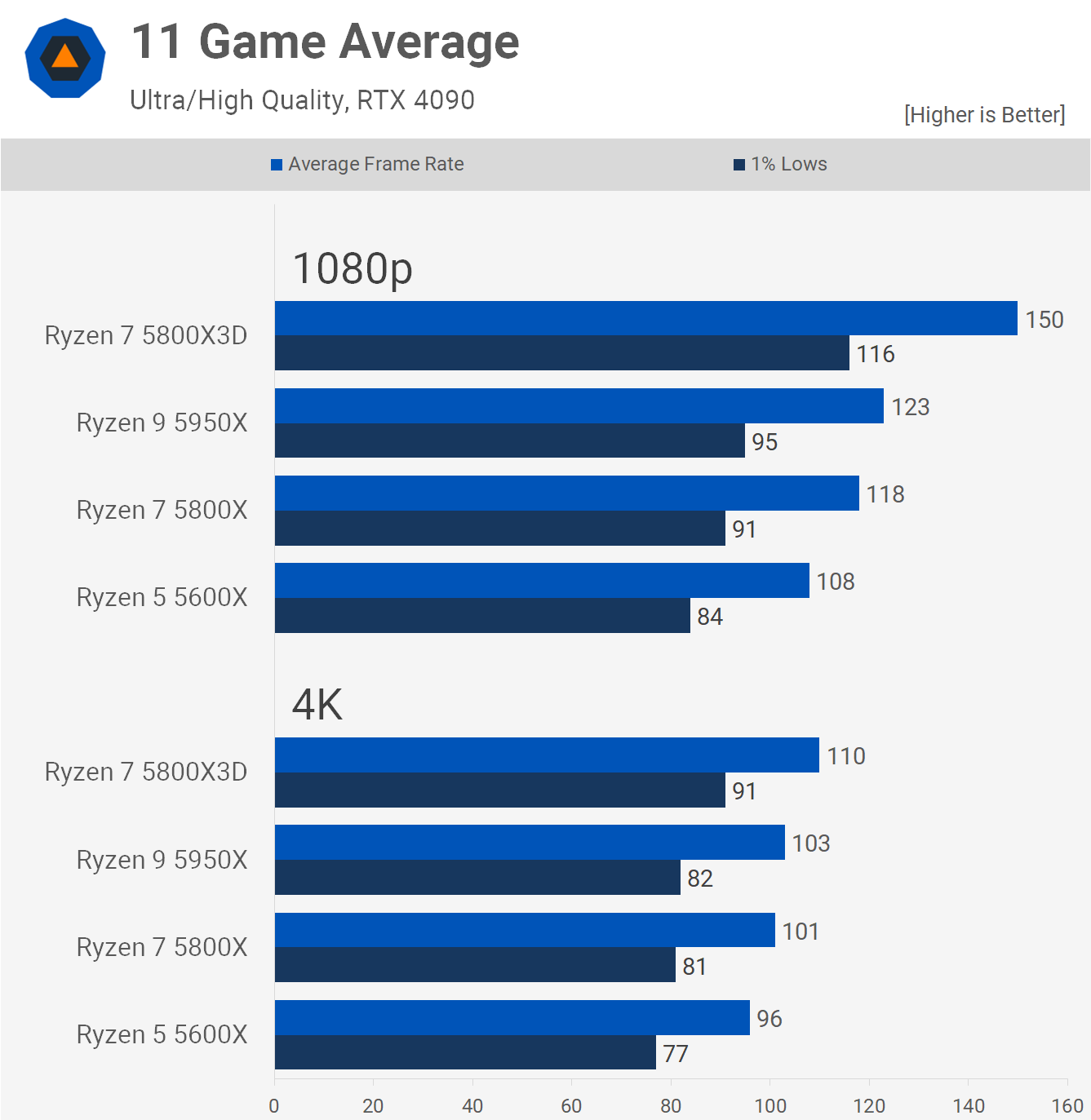

Here's a look at the average performance across the 11 games tested, calculated using the geometric mean. On average, the 5800X was 9% faster than the 5600X, while the 5950X was 14% faster. For a serious performance bump, the 5800X3D was 39% faster than the 5600X and 27% faster than the 5800X.
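For readers unfamiliar with the geometric mean, here is a minimal Python sketch of how such an average can be computed. The fps values are hypothetical placeholders, not data from this review, and whether the charts average per-game fps or per-game ratios is an assumption here; conveniently, the two approaches give the same answer, which the sketch demonstrates.

```python
import math


def geomean(values: list[float]) -> float:
    """Geometric mean: the n-th root of the product, computed via logs."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical per-game average fps for two CPUs (illustrative only,
# NOT the review's data).
cpu_a = [120.0, 95.0, 210.0, 80.0]
cpu_b = [130.0, 100.0, 215.0, 92.0]

# Per-game speedup of CPU B over CPU A, then the geometric mean of the ratios.
ratios = [b / a for a, b in zip(cpu_a, cpu_b)]
print(f"CPU B is {(geomean(ratios) - 1) * 100:.1f}% faster on average")

# Same result as dividing the geometric means of the raw frame rates.
print(f"Check: {(geomean(cpu_b) / geomean(cpu_a) - 1) * 100:.1f}%")
```

Unlike an arithmetic mean of raw frame rates, this keeps a single high-fps title from dominating the summary. Python's standard library also offers `statistics.geometric_mean` (3.8+) for the same calculation.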

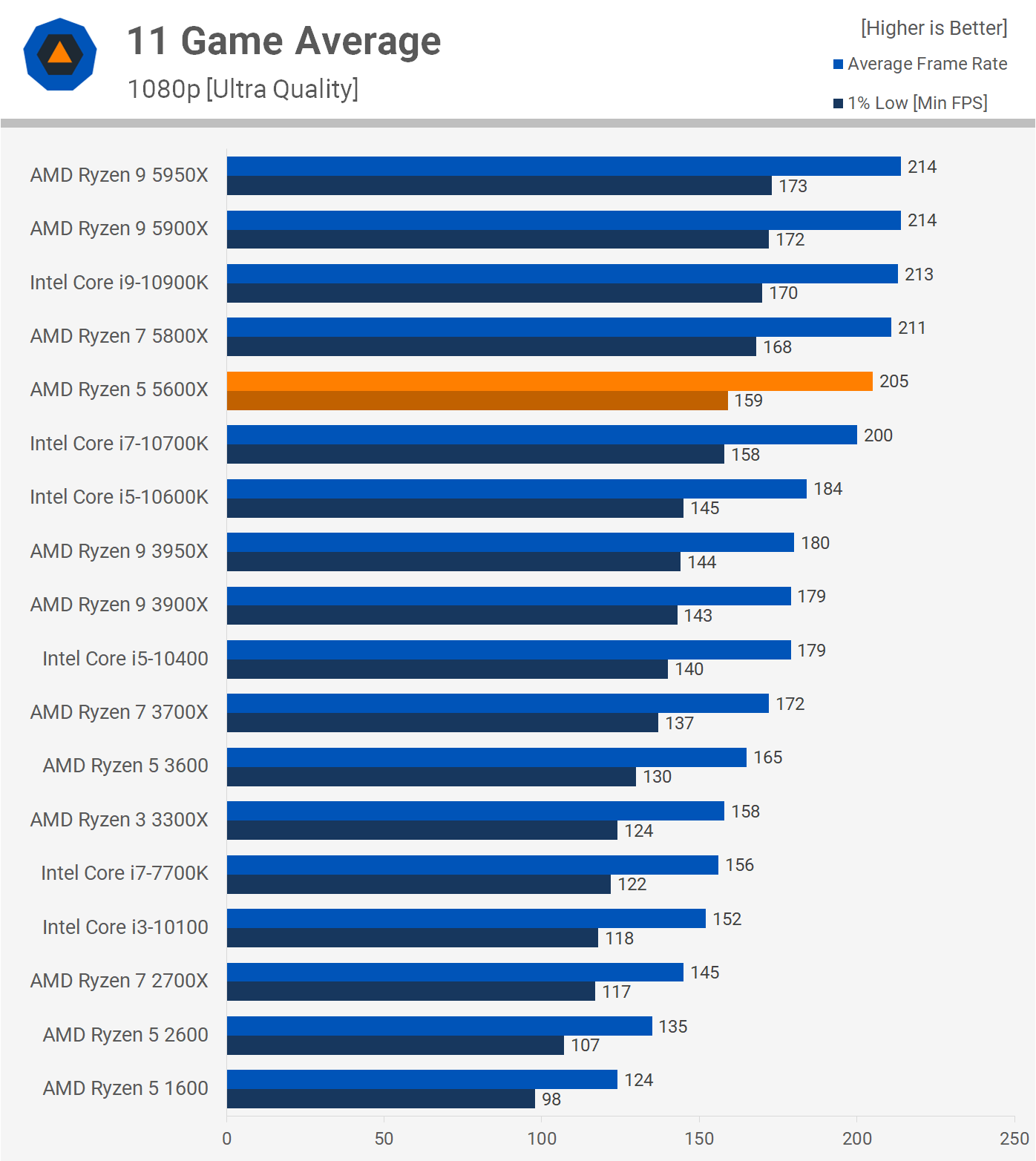

For comparison, here's the 11-game average data from our 5600X day-one review published back in November 2020. At that time, the 5950X was just 4% faster than the 5600X on average, with only two examples where it was 12% faster. Games have certainly become more CPU-demanding over the past 3.5 years, which was to be expected.

What We Learned

So there you have it. Across these 11 demanding AAA games, the Ryzen 5 5600X is on average 8% slower than the 5800X and 12% slower than the 5950X. Not bad given how much cheaper it was. Not only that, but overall performance was excellent: in every game tested, frame rates stayed comfortably above 60 fps when CPU-bound.
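The 8% and 12% figures are the same gaps as the 5800X being 9% faster and the 5950X being 14% faster, viewed from the other direction. A quick sketch of the conversion, assuming the rounded 11-game averages (the function name is illustrative):

```python
def faster_to_slower(pct_faster: float) -> float:
    """If B is pct_faster% faster than A, return how much slower A is than B."""
    return (1.0 - 1.0 / (1.0 + pct_faster / 100.0)) * 100.0


# 11-game averages: 5800X +9% and 5950X +14% over the 5600X.
for name, pct in [("5800X", 9.0), ("5950X", 14.0)]:
    print(f"5600X vs {name}: {faster_to_slower(pct):.1f}% slower")
# prints:
# 5600X vs 5800X: 8.3% slower
# 5600X vs 5950X: 12.3% slower
```

The asymmetry comes from the baseline changing: "faster" is measured against the slower chip, "slower" against the faster one.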

If we go back to when the 5600X and 5800X were first released, along with the 5950X, it's hard to make a case for the higher core count parts for gamers on a tight budget. Simply saying, "spend more because more cores are better and more future-proof," just doesn't cut it.

Upon release, the 5800X cost 50% more than the 5600X, or $150, which is far from nothing. Although we acknowledged back then that the extra cores were nice to have if you could afford them, they were hardly a requirement for gaming, and 3.5 years later, that's as true as ever. That said, it's now much easier to make a case for the upgrade, as there are multiple examples where the 5800X delivers more. The gain might not completely offset the cost, but it's a real performance upgrade.

The Ryzen 9 5950X was absurd for gaming and still is to this day, with the 5800X3D completely annihilating it, offering 22% better performance on average – a much bigger margin than we see between the 5600X and 5950X, despite the 167% increase in cores.
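Both figures in this paragraph follow from the 11-game averages by taking ratios rather than subtracting percentages. A minimal sketch, using the rounded averages normalized to the 5600X (so chained results can be off by a point); the function name is illustrative:

```python
def relative_gain(a: float, b: float) -> float:
    """Percent by which `a` exceeds `b` (both measured against a common baseline)."""
    return (a / b - 1.0) * 100.0


# Average performance relative to the 5600X (= 1.00), from the 11-game summary.
r5_5600x, r9_5950x, r7_5800x3d = 1.00, 1.14, 1.39

print(f"5800X3D over 5950X: {relative_gain(r7_5800x3d, r9_5950x):.0f}%")  # prints 22%
print(f"5950X over 5600X: {relative_gain(r9_5950x, r5_5600x):.0f}%")      # prints 14%
print(f"Extra cores, 16 vs 6: {relative_gain(16, 6):.0f}%")               # prints 167%
```

Note that 39% minus 14% would suggest a 25% gap; dividing the indices gives the correct 22%.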

Of course, if you were also tackling core-heavy productivity workloads, then the 5950X made perfect sense, but strictly for gaming, it was never a good choice. So it's fair to say that anyone who upsold gamers on the 5950X purely for gaming cost them a lot of money for little in return.

To be clear though, we don't think all gamers should have bought into the Ryzen 5 5600 series, just as we don't think all gamers should buy a Ryzen 5 or Core i5 today. What we thought 3.5 years ago is what we still think today: these products are viable options, they're priced appropriately, and they're excellent solutions for gamers on a more limited budget.

Sadly, Intel now generally offers much better value than AMD at the entry-level end of the market, and this is something AMD will need to address with AM5 if they wish to capture the retail market end-to-end.

All said and done, it was interesting to find a few examples where the 5600X was starting to fall behind the higher core count models by a meaningful margin, such as in Ratchet & Clank, Spider-Man, Starfield, and The Last of Us Part I.

Had we included 50 or more games, covering both modern titles and popular games released over the past few years, the overall margins would be reduced as most games aren't demanding enough to put a lot of stress on the 5600X.

Circling back to some recently published content, it's important to note that you shouldn't buy a CPU, for gaming or anything else, based on core count alone. What matters is overall CPU performance. For example, the 5800X3D was much faster than the 5950X in this testing, despite having half as many cores, and the new Ryzen 5 7600 is comparable to the 5800X3D, despite offering two fewer cores and much less L3 cache.

Core count is somewhat relevant when comparing CPUs of the same architecture, but it becomes almost useless when comparing CPUs of different architectures, like when comparing Zen 3 and Zen 4 parts. It becomes even more complicated with Intel's P-core and E-core enabled parts.

Then there's the resolution angle. While 4K CPU benchmarking may interest some, remember it's not about the resolution, but rather your target frame rate. For example, if you want 144 fps in Cyberpunk 2077, then discovering that the RTX 4090 will only deliver around 75 fps at 4K using the "ultra preset" isn't super useful. In that specific example, all we're doing is testing the RTX 4090, as all CPUs were capable of driving 70-80 fps in our test.

What you need to know is whether the CPU can achieve your desired frame rate and what settings you need to use for your GPU to render those frames. In short, GPU-limited 4K testing using ultra or high-quality settings with an RTX 4090 tells you almost nothing useful about CPU performance, and certainly nothing you can't learn from the 1080p data.
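The target-frame-rate argument can be sketched with a deliberately simplified bottleneck model, in which the delivered frame rate is the lower of what the CPU can prepare and what the GPU can render at a given setting. This is an illustrative assumption, not how this testing was performed: only the roughly 75 fps 4K ultra figure comes from the Cyberpunk 2077 example, while the CPU limit and the upscaled GPU figure are hypothetical.

```python
def delivered_fps(cpu_fps: float, gpu_fps: float) -> float:
    """Simplified bottleneck model: the slower component sets the frame rate."""
    return min(cpu_fps, gpu_fps)


def frame_time_ms(fps: float) -> float:
    """Per-frame time budget in milliseconds."""
    return 1000.0 / fps


target = 144.0
# Hypothetical CPU-bound limit (what low-resolution testing reveals).
cpu_limit = 120.0
# GPU-bound limits: ~75 fps at 4K ultra is from the text; 160 fps is hypothetical.
gpu_limits = {"4K ultra": 75.0, "4K + upscaling": 160.0}

print(f"Budget for {target:.0f} fps: {frame_time_ms(target):.2f} ms per frame")
for setting, gpu_fps in gpu_limits.items():
    fps = delivered_fps(cpu_limit, gpu_fps)
    bound = "GPU" if gpu_fps < cpu_limit else "CPU"  # ties counted as CPU-bound
    verdict = "meets" if fps >= target else "misses"
    print(f"{setting}: {fps:.0f} fps ({bound}-bound), {verdict} the {target:.0f} fps target")
```

Once upscaling lifts the GPU limit, the CPU becomes the ceiling, and that ceiling is what the 1080p data measures.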