It's finally time to show you what AMD's new flagship RDNA 3 GPU has to offer.

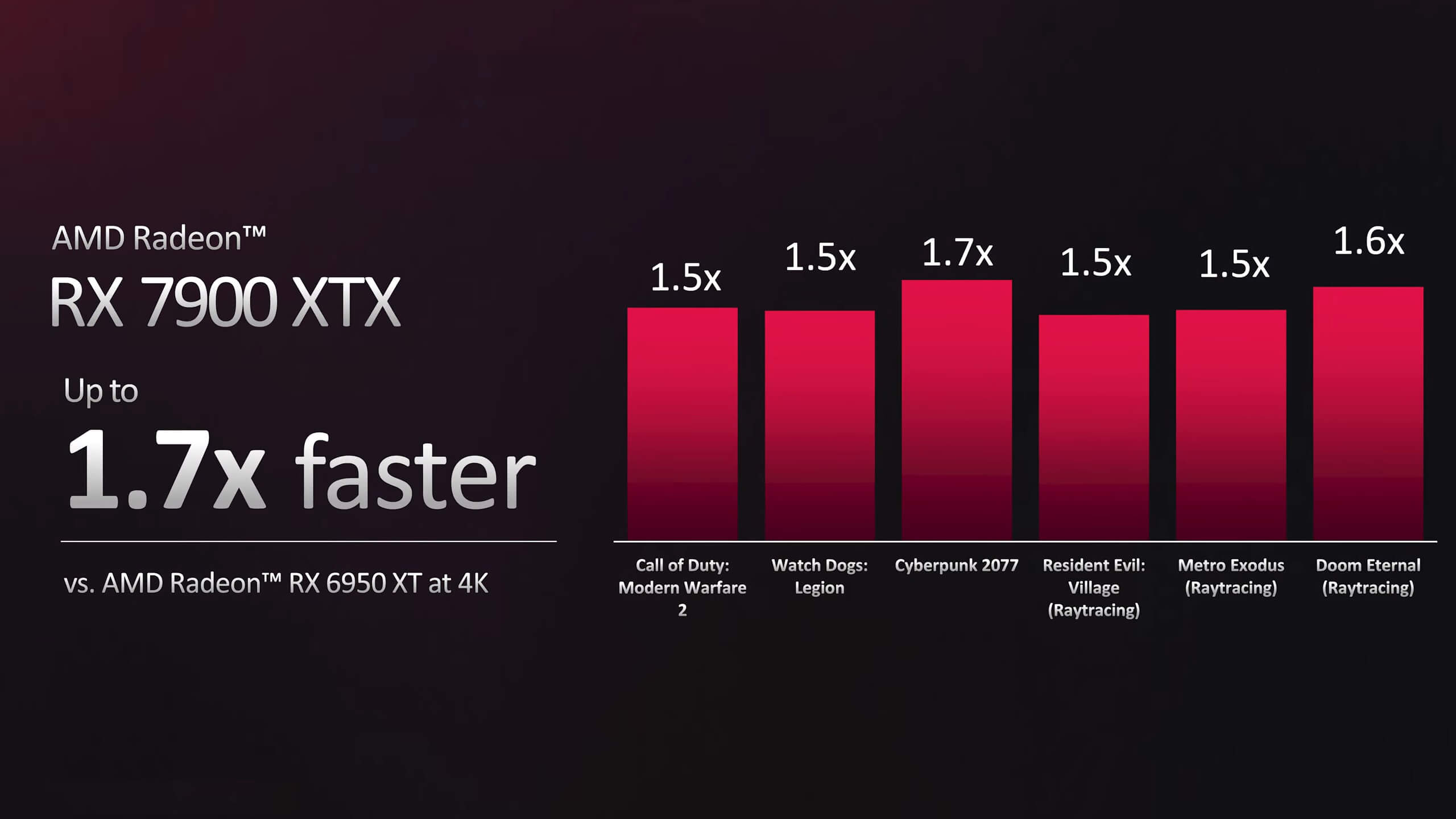

The Radeon RX 7900 XTX has been widely anticipated as gamers have been readying for a new GPU generation, but Nvidia's new products, while fast, are too expensive for most to even consider. Meanwhile, when AMD unveiled the new card it touted 50-70% performance gains for the Radeon 7900 XTX over the previous 6950 XT flagship. That would place it within striking distance of the much more expensive RTX 4090, at least for rasterization performance, which only added to the hype and anticipation surrounding the release.

With gamers salivating at the promise of RTX 4090-like performance for $1,000, a lot has been said during the wait. However, after AMD's RDNA 3 announcement we were quick to note that if AMD was really offering performance close to the RTX 4090, the price would certainly be higher (there's no reason for AMD to leave money on the table), or ray tracing performance must be really weak, or AMD was overselling the 7900 XTX's capabilities for some reason. Today we'll find out which it was.

We have covered the RX 7900 XTX's specs before, but here's a quick rundown.

The new Radeon is the first high-performance consumer GPU to use chiplet technology. Also, AMD has moved to a dual-shader design within each compute unit, effectively doubling the shader unit count inside each CU. The top-of-the-line Radeon RX 7900 XTX is coming in at an MSRP of $1,000, while the similarly named RX 7900 XT will cost $900.

| | Radeon RX 7900 XTX | Radeon RX 7900 XT | Radeon RX 6950 XT | Radeon RX 6900 XT |
|---|---|---|---|---|
| Price (MSRP) | $1,000 | $900 | $1,100 | $1,000 |
| Release Date | Dec 13, 2022 | Dec 13, 2022 | May 10, 2022 | Dec 8, 2020 |
| Process | N5 (GCD) / N6 (MCD) | N5 (GCD) / N6 (MCD) | TSMC N7 | TSMC N7 |
| Transistors (billion) | 57.7 | 57.7 | 26.8 | 26.8 |
| Chiplets | 1 × GCD / 6 × MCD | 1 × GCD / 5 × MCD | N/A | N/A |
| Die Size | 300 mm² GCD / 37 mm² MCDs | 300 mm² GCD / 37 mm² MCDs | 520 mm² | 520 mm² |
| Core Config (Shaders / TMUs / ROPs) | 6144 / 384 / 192 | 5376 / 336 / 192 | 5120 / 320 / 128 | 5120 / 320 / 128 |
| GPU Boost Clock | 2500 MHz | 2400 MHz | 2310 MHz | 2250 MHz |
| Memory Capacity | 24 GB | 20 GB | 16 GB | 16 GB |
| Memory Speed | 20 Gbps | 20 Gbps | 18 Gbps | 16 Gbps |
| Memory Type | GDDR6 | GDDR6 | GDDR6 | GDDR6 |
| Bus Width / Bandwidth | 384-bit / 960 GB/s | 320-bit / 800 GB/s | 256-bit / 576 GB/s | 256-bit / 512 GB/s |
| Total Board Power | 355W | 300W | 335W | 300W |

The 7900 XTX arrives packing 6144 cores, while the XT has been cut down by 13% to 5376 cores. Boost clocks for the XTX are rated at 2.5 GHz versus 2.4 GHz for the XT, while Infinity Cache capacity has been set at 96 MB and 80 MB respectively (a 17% reduction), for effective bandwidth of 3.5 TB/s and 2.9 TB/s. Both new Radeon graphics cards use 20 Gbps GDDR6 memory, but only the XTX gets a 384-bit memory bus for 960 GB/s of bandwidth; the XT has been cut down to a 320-bit bus, resulting in 800 GB/s, another 17% reduction.
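The memory bandwidth figures follow directly from bus width and per-pin data rate: peak bandwidth in GB/s is the bus width in bits times the data rate in Gbps, divided by 8. Here is a minimal sketch that re-derives the table's numbers and the XT's cut-down percentages; the function names are just illustrative.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak GDDR bandwidth in GB/s: every pin moves data_rate_gbps gigabits per second."""
    return bus_width_bits * data_rate_gbps / 8  # 8 bits per byte


def reduction_pct(full: float, cut: float) -> float:
    """How much smaller `cut` is than `full`, as a percentage."""
    return (1 - cut / full) * 100


# Bus width (bits) and memory speed (Gbps) taken from the spec table
CARDS = {
    "RX 7900 XTX": (384, 20),
    "RX 7900 XT": (320, 20),
    "RX 6950 XT": (256, 18),
    "RX 6900 XT": (256, 16),
}

if __name__ == "__main__":
    for name, (bus, rate) in CARDS.items():
        print(f"{name}: {memory_bandwidth_gbs(bus, rate):.0f} GB/s")
    # 7900 XT relative to the 7900 XTX
    print(f"Shaders:        {reduction_pct(6144, 5376):.1f}% fewer")
    print(f"Infinity Cache: {reduction_pct(96, 80):.1f}% less")
    print(f"Bandwidth:      {reduction_pct(960, 800):.1f}% less")
```

The XT gives up roughly 13-17% of the XTX's resources for a 10% lower price.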

For a marginal 10% discount, the Radeon 7900 XT doesn't look like particularly great value on paper, but we'll look at that GPU in a separate review; for now we want to focus on the XTX. Another noteworthy feature of the 7900 XTX is its 355 watt total board power (TBP) rating, which is higher than that of the GeForce RTX 4080, and like the 4080 it uses the PCIe 4.0 x16 interface.

Of course, the most interesting aspect of the new RDNA 3 GPU is its use of chiplet technology. Rather than going all out with a massive monolithic die, RDNA 3 uses a combination of smaller dies in a similar strategy to that of AMD's latest Ryzen CPUs. This means that to get top-end GPU configurations, AMD no longer needs to make a single 520 mm² die like RDNA 2, or approach the behemoth that is the AD102 600 mm² die used in the RTX 4090.

AMD's RDNA 3 chiplet approach includes two components: the Graphics Compute Die (GCD), which houses the main WGPs and processing hardware, plus multiple Memory Cache Dies (MCDs), which include the Infinity Cache and memory controllers. The flagship 7900 XTX gets one GCD plus six MCDs, while the 7900 XT features a cut-down GCD plus five MCDs.

AMD's architecture benefits in multiple ways from the move to chiplets. One is that AMD can now split its GPU design over multiple nodes, reducing the amount of expensive leading-edge silicon needed in every GPU. The GCD is built on TSMC's latest N5 node, but the MCDs are built on TSMC N6, a derivative of its older and less costly 7nm process. This ends AMD's need for huge chunks of the latest silicon in every high-end GPU.

The other main advantage is yields. Large monolithic dies have lower yields than smaller dies, so whenever a design can be split into multiple smaller dies, there's a good chance yields will increase substantially. AMD has found great success with this approach on Ryzen, where modern Zen 4 chiplets are about 70 mm², tiny enough that yields should be well over 90%. RDNA 3 won't have as much of an advantage as Zen 4, as the GCD is still relatively large compared to a CPU chiplet, but at 300 mm² it's half the size of AD102 and just 60% as large as Navi 21. That sort of size saving will have big implications for yields.
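To see why die size matters so much, here is a sketch using the classic Poisson yield approximation, Y = exp(-A × D0), where A is die area and D0 is defect density. The article doesn't give a yield model, and foundries don't publish exact defect densities, so the D0 of 0.1 defects/cm² below is an assumed, purely illustrative value.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under the simple Poisson model Y = exp(-A * D0)."""
    area_cm2 = die_area_mm2 / 100.0  # 1 cm² = 100 mm²
    return math.exp(-area_cm2 * defects_per_cm2)


# ASSUMPTION: illustrative defect density, not a published TSMC figure
D0 = 0.1

# Die areas as quoted in the article
DIES = {
    "Zen 4 CCD (~70 mm²)": 70,
    "Navi 31 GCD (300 mm²)": 300,
    "Navi 21 (520 mm²)": 520,
    "AD102 (~600 mm²)": 600,
}

if __name__ == "__main__":
    for name, area in DIES.items():
        print(f"{name}: {poisson_yield(area, D0):.0%} defect-free")
```

With this assumed D0, the model gives roughly 93% for a Zen 4-sized chiplet, 74% for the 300 mm² GCD and 55% for a 600 mm² die. Real-world figures for large dies are better than this suggests, because partially defective dies can often be salvaged as cut-down SKUs. The takeaway is only the trend: the same defect density penalizes a 600 mm² die far more than a 300 mm² one.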

Flanking the GCD are six MCDs about 37 mm² in size, so these are tiny chiplets just half the size of a Zen 3 CPU core chiplet. Each has a 64-bit GDDR6 controller and 16 MB of cache. On a mature node like N6 these should have extremely high yields, so AMD will benefit here a lot even though they need six of them for the 7900 XTX. All up, while flagship Navi 31 RDNA 3 GPUs still need over 500 mm² of total silicon, this chiplet approach split across two nodes should reduce manufacturing costs and increase yields.
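A quick tally of the figures above shows how Navi 31's "over 500 mm²" splits across the two nodes. This is a sketch that uses the article's approximate die sizes; it ignores packaging and any non-functional filler silicon.

```python
GCD_MM2 = 300  # graphics die, TSMC N5
MCD_MM2 = 37   # each memory/cache die, TSMC N6 (approximate size)


def silicon_by_node(num_mcds: int = 6):
    """Return (N5 area, N6 area, total area) in mm² for one GCD plus num_mcds MCDs."""
    n5_area = GCD_MM2
    n6_area = num_mcds * MCD_MM2
    return n5_area, n6_area, n5_area + n6_area


if __name__ == "__main__":
    n5, n6, total = silicon_by_node(6)  # 7900 XTX configuration
    print(f"N5: {n5} mm², N6: {n6} mm², total: {total} mm²")
    print(f"Share on leading-edge N5: {n5 / total:.0%}")
```

The total of about 522 mm² is similar to Navi 21's 520 mm². The difference is that only around 57% of it sits on the expensive leading-edge node, split across seven small dies instead of one large one.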

Now, it will be interesting to see how this approach plays out in an actual product. For testing today all GPUs have been benchmarked at the official clock specifications, with no factory overclocking. The CPU used is the Ryzen 7 5800X3D with 32GB of dual-rank, dual-channel DDR4-3200 CL14 memory on the MSI MPG X570S Carbon Max Wi-Fi motherboard. In total we've tested 16 games at 1440p and 4K, so let's get into the data…

Benchmarks

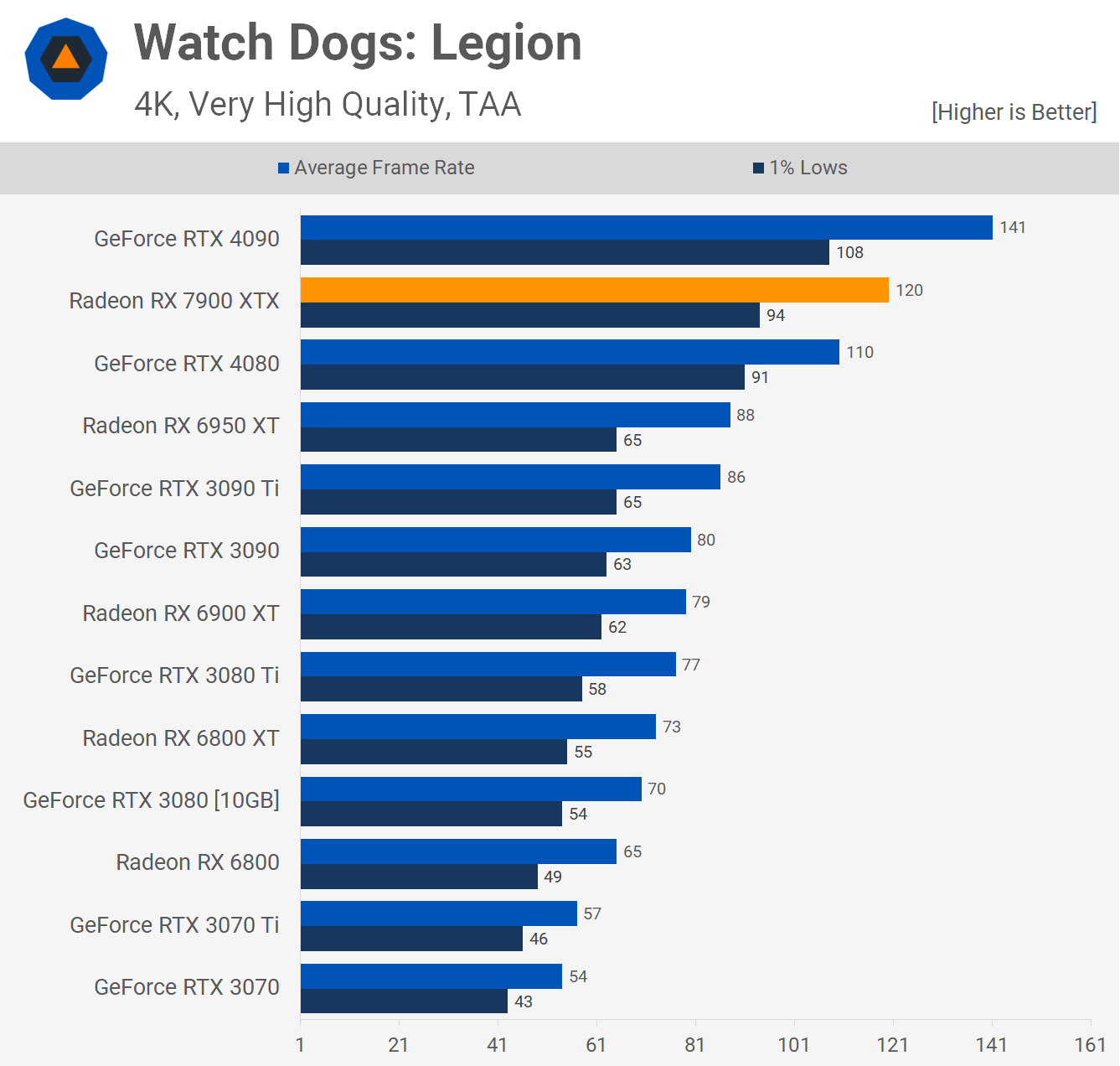

First up we have Watch Dogs: Legion, and here the 7900 XTX looks mighty impressive, cranking out 172 fps at 1440p to make it faster than not just the RTX 4080, but also the RTX 4090, beating the former by a 12% margin. Of course, the RTX 4090 is CPU bound here, but it's interesting to see that under CPU bound conditions the Radeon GPU does have a bit more headroom.

The CPU bottleneck is removed at 4K, but even so the 7900 XTX was still 9% faster than the RTX 4080. Then when compared to AMD's previous generation flagship part, the 6950 XT, we see a 36% performance uplift for the new 7900 XTX.
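Nearly every result in this review is quoted as "X% faster" or "X% slower". The review doesn't spell out its formula, but the conventional one is a simple ratio of frame rates. One consequence is that the two directions aren't symmetric. Here is a small sketch using hypothetical frame rates:

```python
def pct_difference(fps_a: float, fps_b: float) -> float:
    """Percent by which card A is faster (positive) or slower (negative) than card B."""
    return (fps_a / fps_b - 1) * 100


def describe(name_a: str, fps_a: float, name_b: str, fps_b: float) -> str:
    """Phrase the comparison in review style, rounded to a whole percent."""
    diff = pct_difference(fps_a, fps_b)
    word = "faster" if diff >= 0 else "slower"
    return f"{name_a} is {abs(diff):.0f}% {word} than {name_b}"


if __name__ == "__main__":
    # HYPOTHETICAL frame rates, not results from this review
    print(describe("Card A", 120, "Card B", 100))
    print(describe("Card B", 100, "Card A", 120))
```

A 120 fps card is 20% faster than a 100 fps card, yet the 100 fps card is only 17% slower. Keep that asymmetry in mind when reading a "17% slower" figure against the RTX 4090 alongside "X% faster" figures elsewhere.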

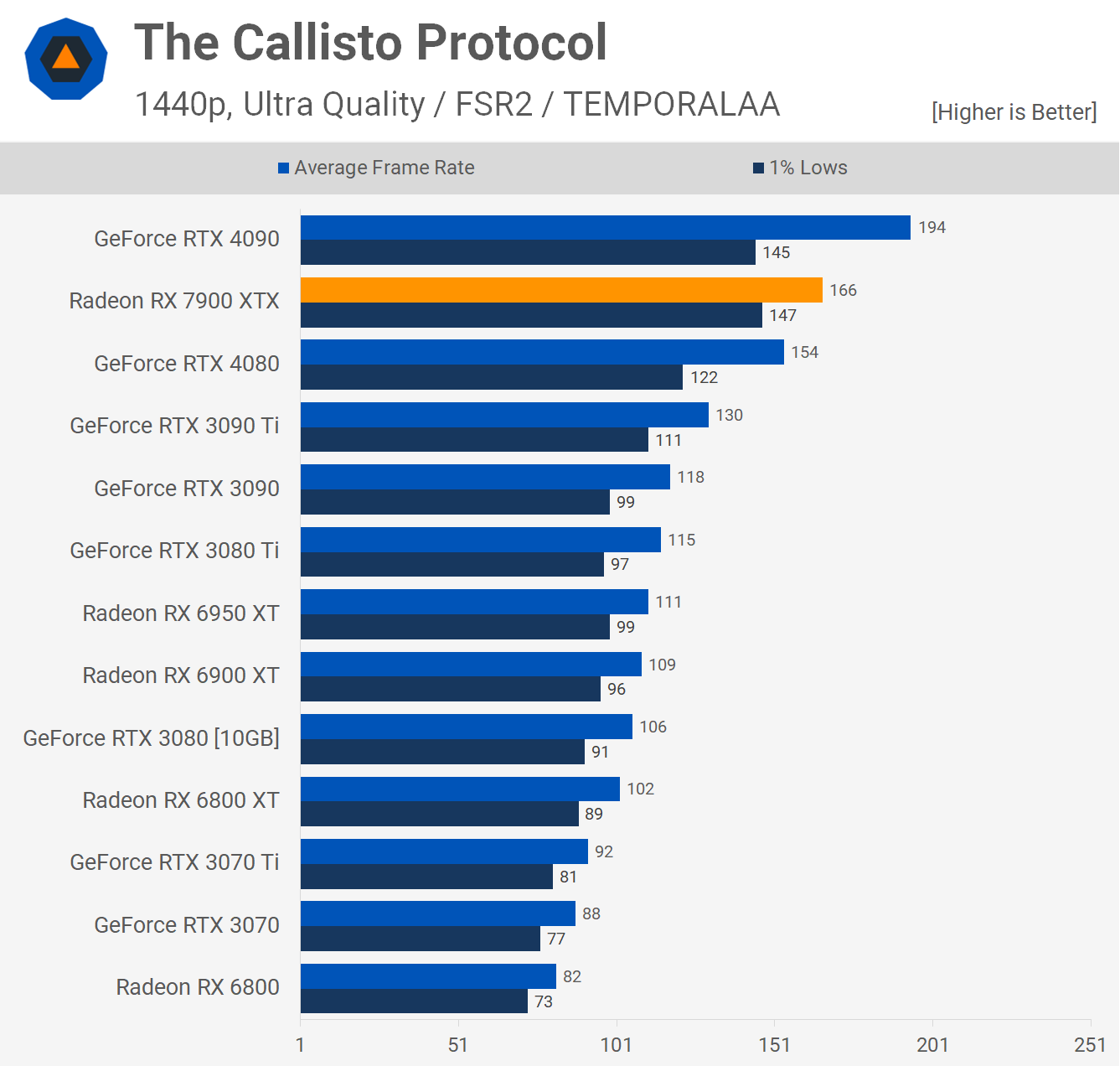

The Callisto Protocol is a new game that we've added to our benchmarks and it's one where the 7900 XTX appears to work quite well, and please note we're not using the built-in benchmark for this testing. With 166 fps on average the new Radeon GPU was 8% faster than the RTX 4080 and 50% faster than the 6950 XT, so that's a solid gain at 1440p.

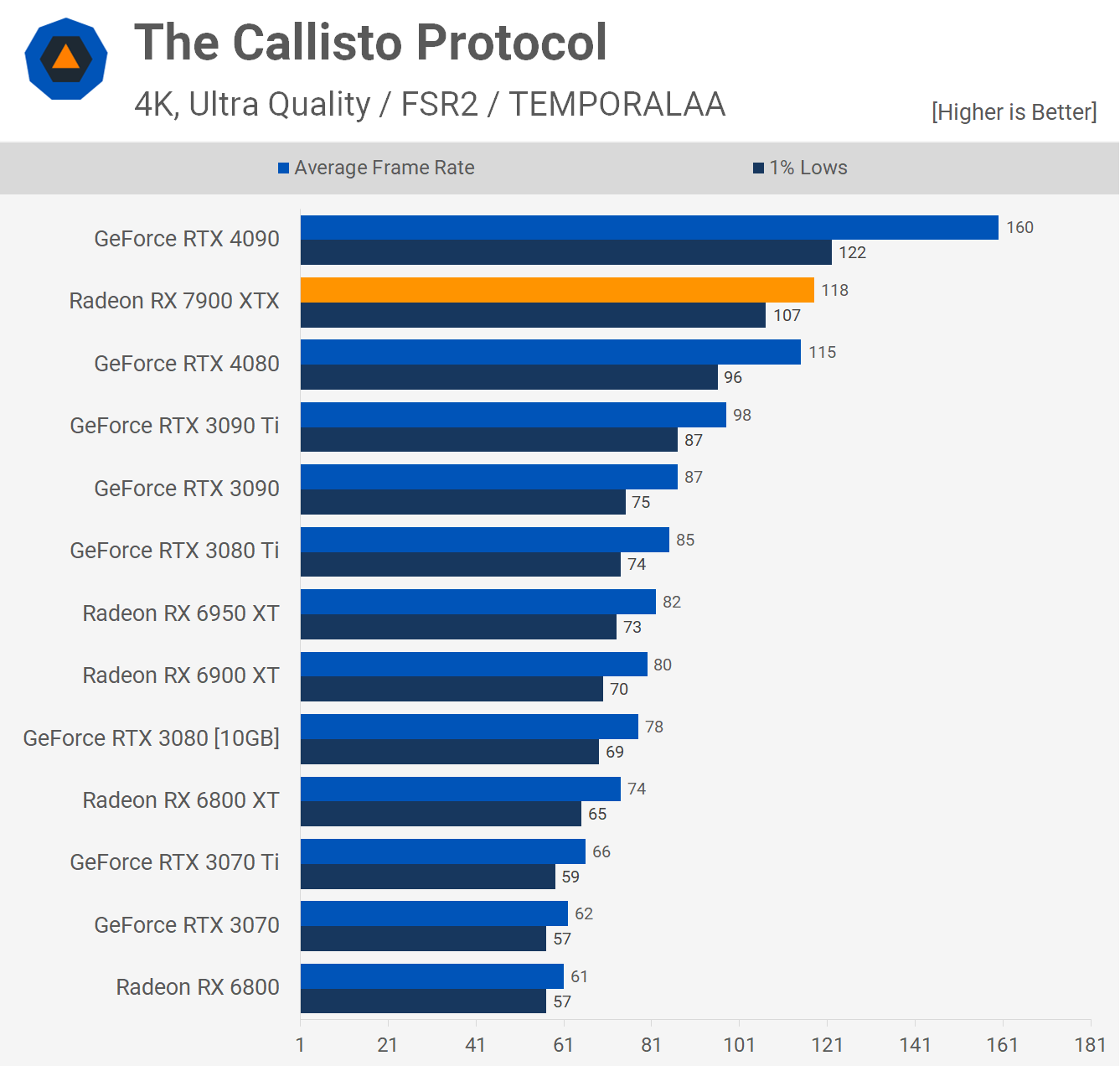

Then at 4K with the ultra quality preset which uses FSR2, the 7900 XTX and RTX 4080 were neck and neck, making the RDNA 3 GPU 44% faster than the 6950 XT.

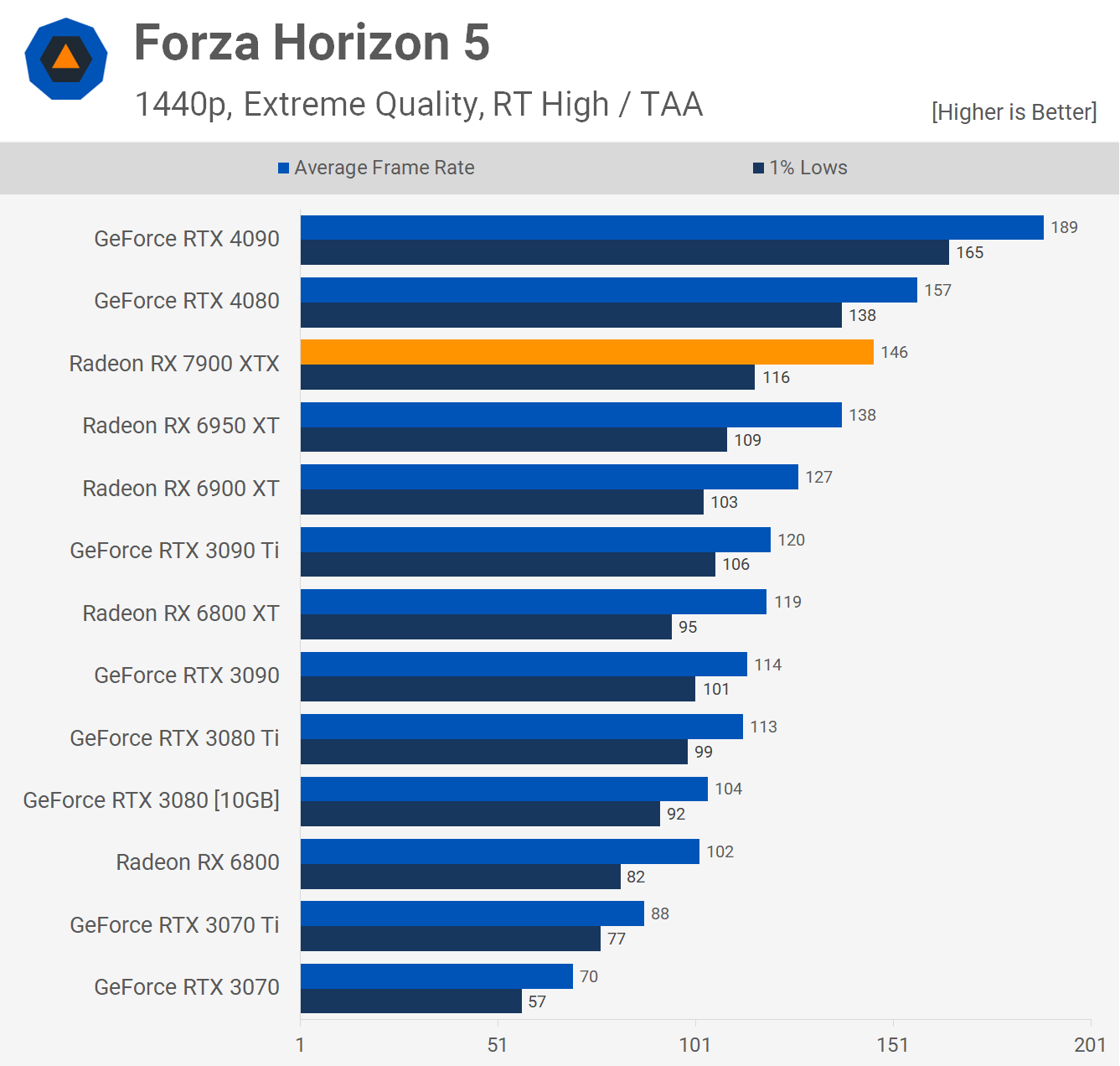

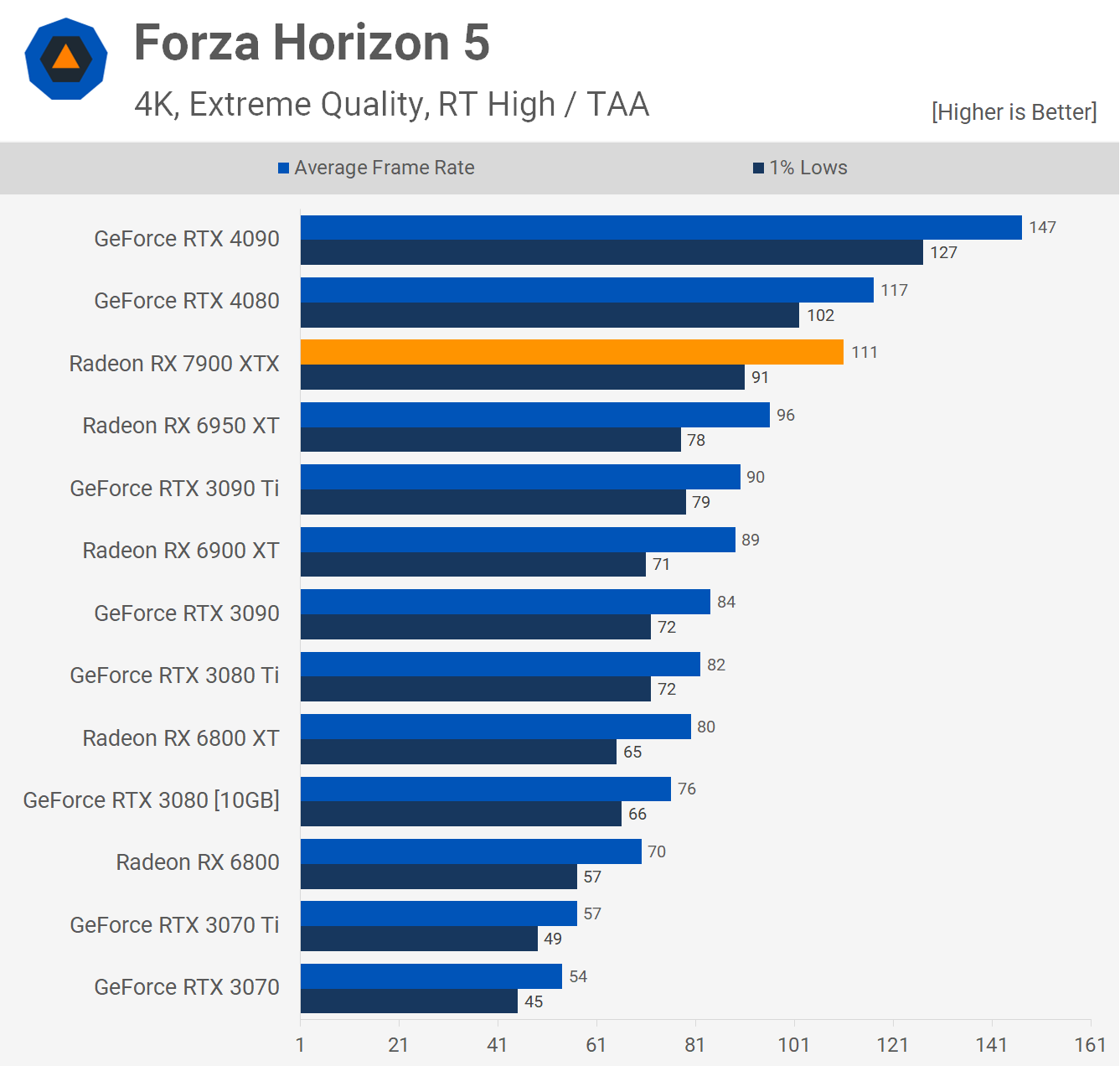

We think AMD is dealing with a driver-related issue for RDNA 3 in Forza Horizon 5 because performance here wasn't good. There were no stability issues or bugs seen when testing; performance was just much lower than you'd expect. At 1440p, for example, the 7900 XTX was a mere 6% faster than the 6950 XT. And that sucks.

This margin improved a lot at 4K, but even so the 7900 XTX was just 16% faster than the 6950 XT, a far cry from the 50% minimum AMD suggested. This meant the new Radeon GPU was 5% slower than the RTX 4080, so a disappointing result all round.

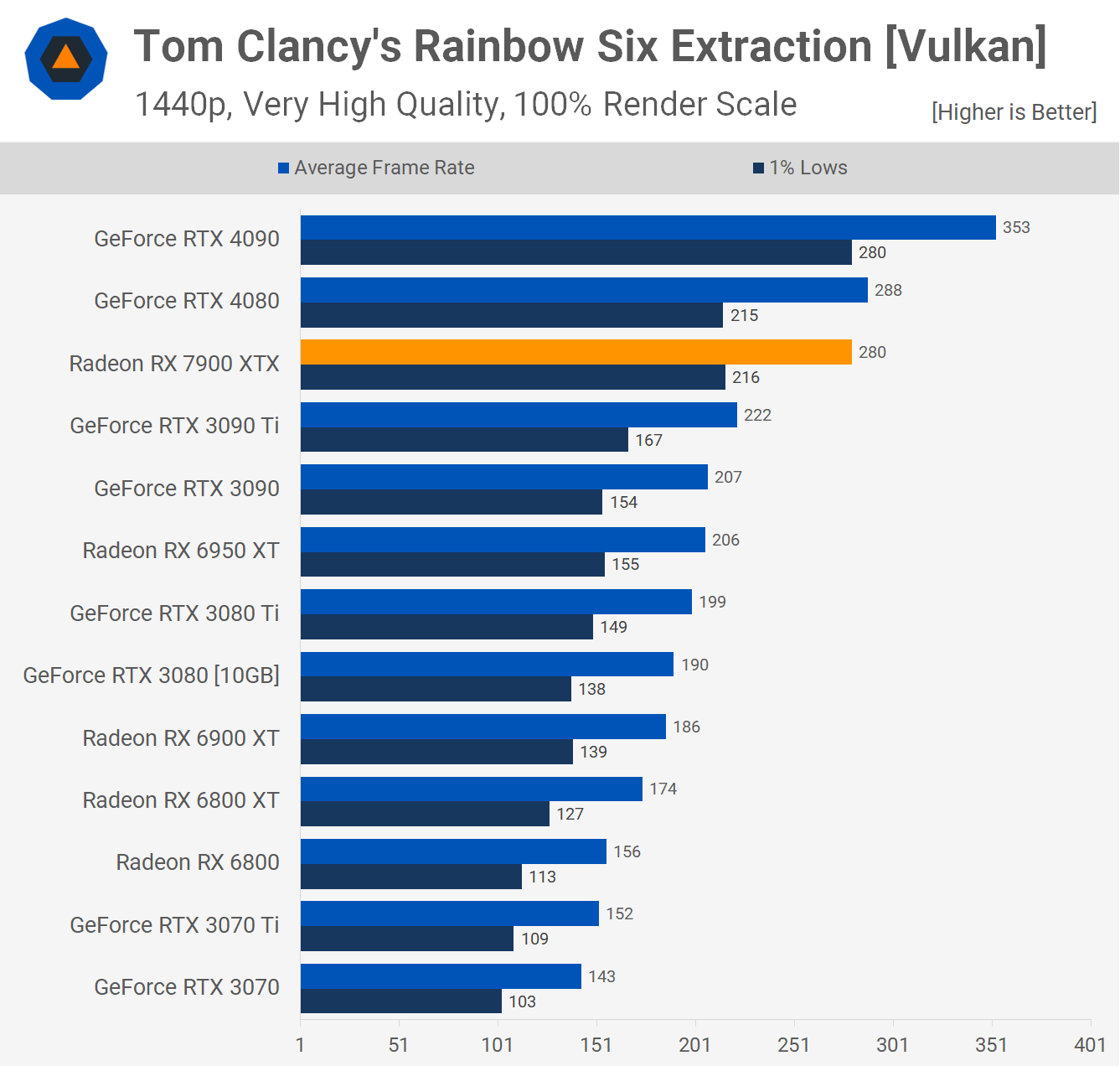

The 7900 XTX trailed the RTX 4080 at 1440p in Rainbow Six Extraction by a slim margin, making it just 26% faster than the 3090 Ti and 36% faster than the 6950 XT. Although those are some decent margins, it's a lot less than AMD initially indicated.

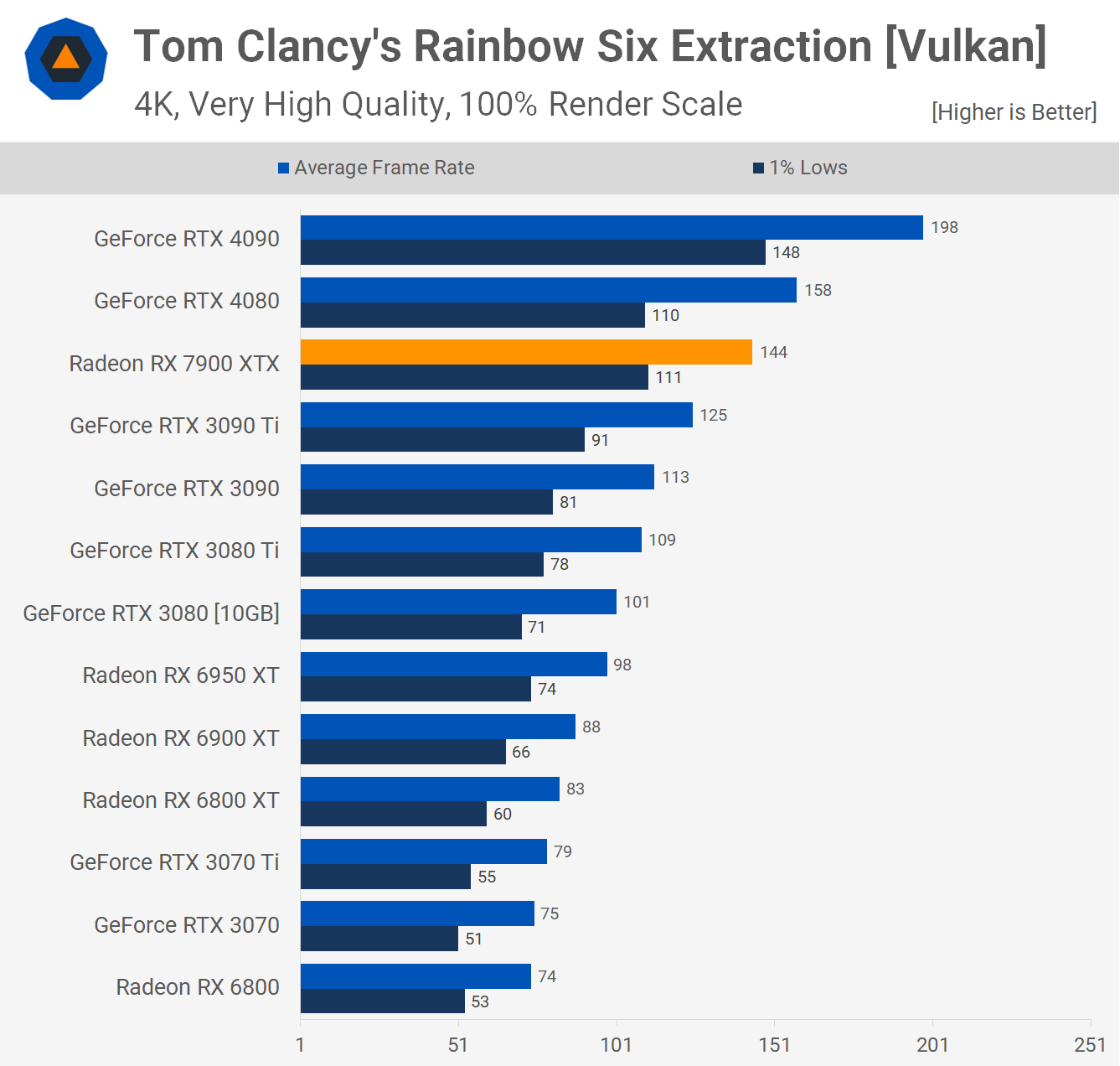

Then at 4K, the 7900 XTX falls behind the RTX 4080 by a 9% margin and is now just 15% faster than the 3090 Ti, though it was at least 47% faster than the 6950 XT.

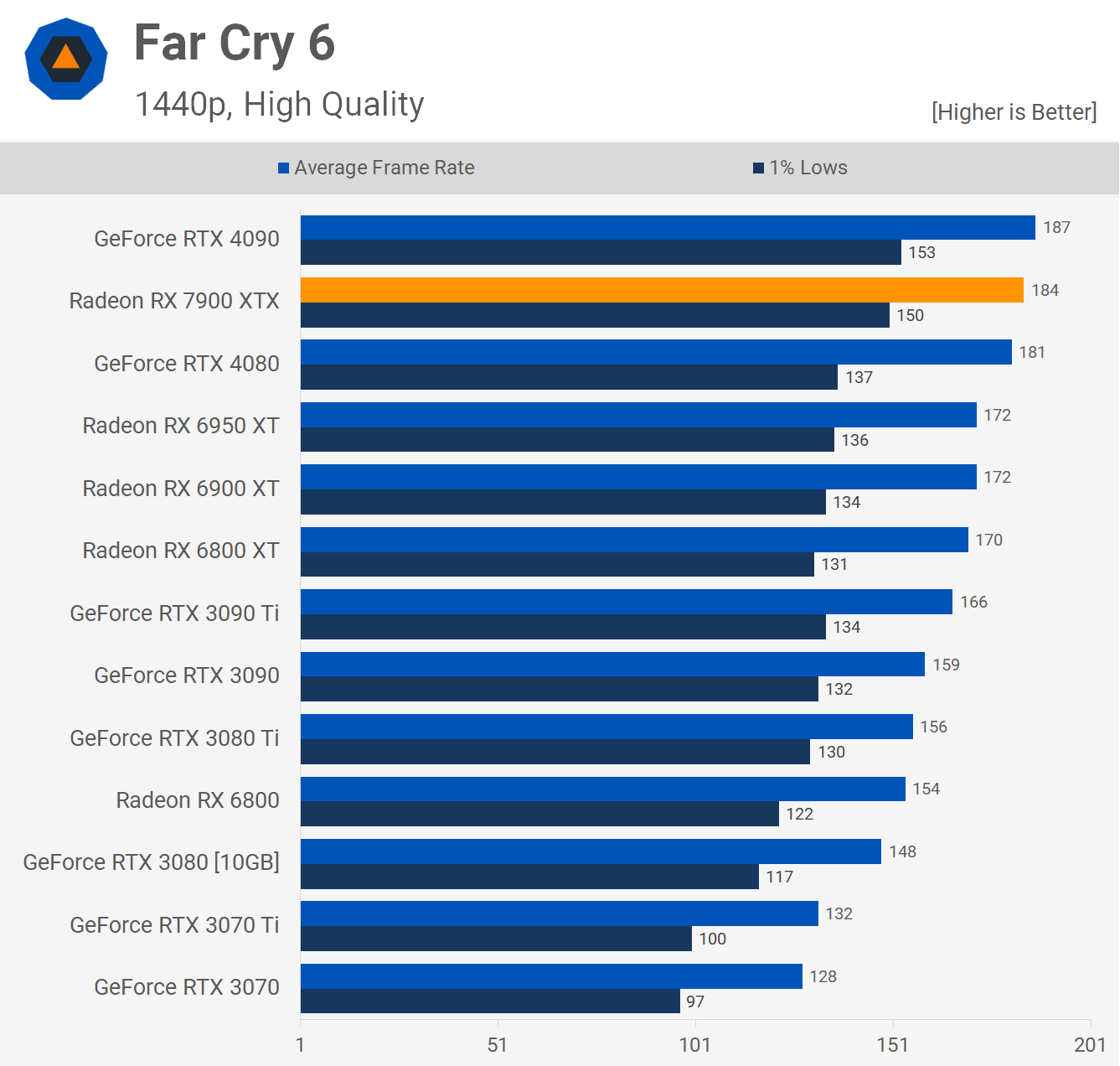

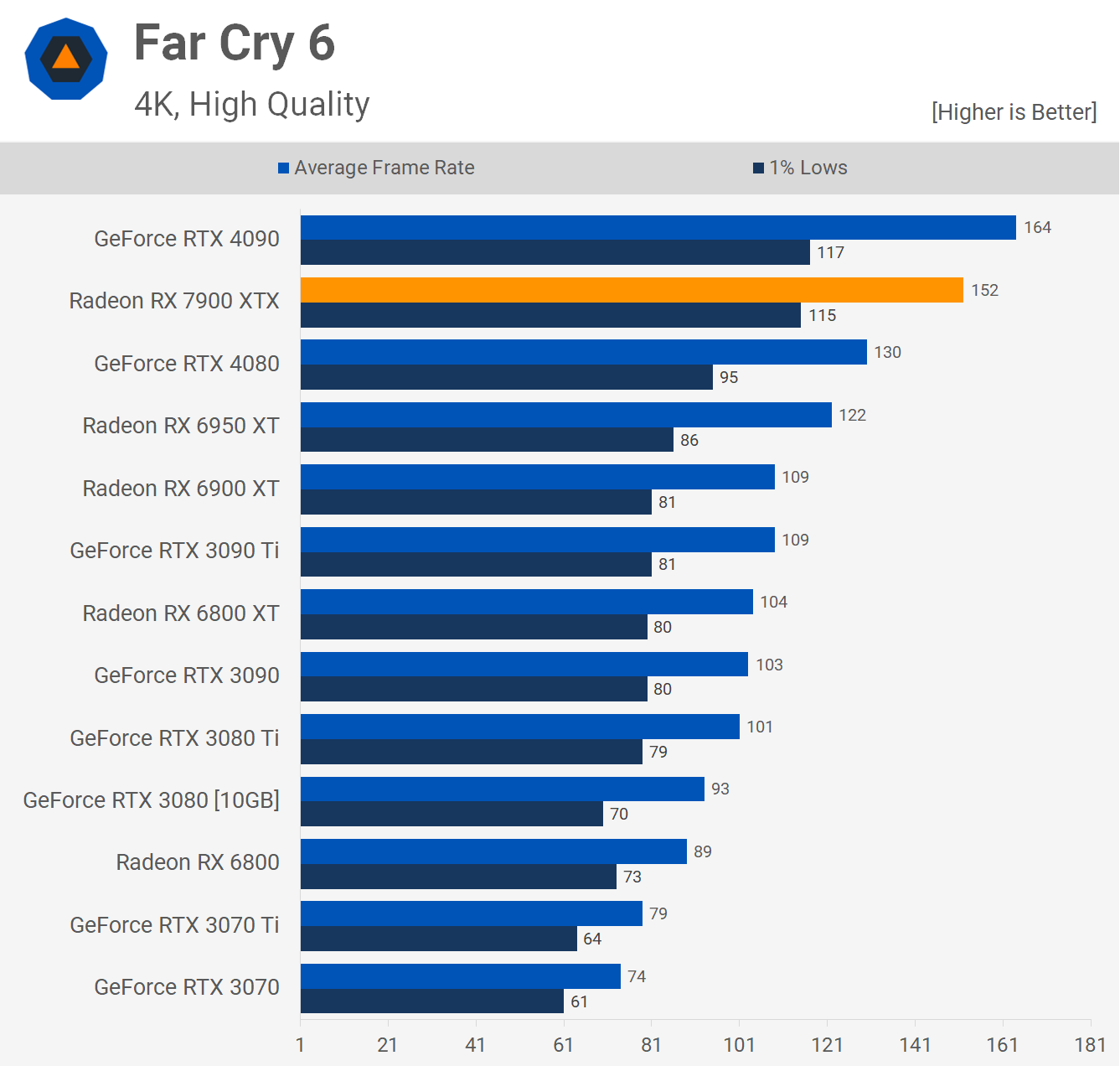

Far Cry 6 is largely CPU bound at 1440p, so the 7900 XTX is able to match the RTX 4090, despite being just 7% faster than the 6950 XT. Moving to 4K alleviates the CPU bottleneck, and now the 7900 XTX is 17% faster than the RTX 4080 and 25% faster than the 6950 XT.

Although that's a reasonable margin we expect most will be disappointed with what we're seeing here relative to the 6950 XT.

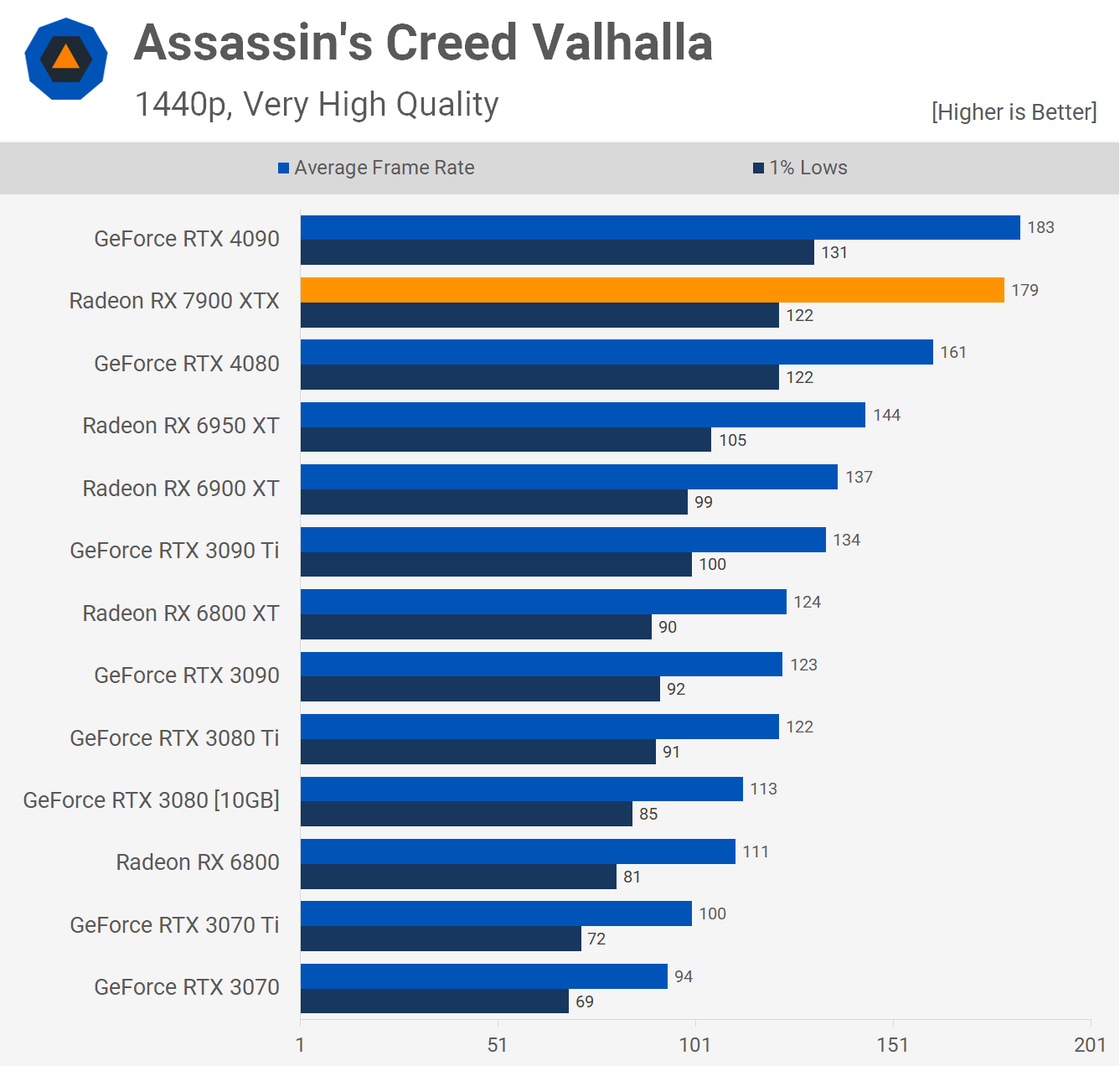

The 7900 XTX looks good in Assassin's Creed Valhalla, outpacing the RTX 4080 by an 11% margin at 1440p. However, this is a title where Radeon GPUs traditionally do very well, and if we turn our attention to the 6950 XT comparison, the result is a lot less exciting, as the new RDNA 3 flagship is just 24% faster.

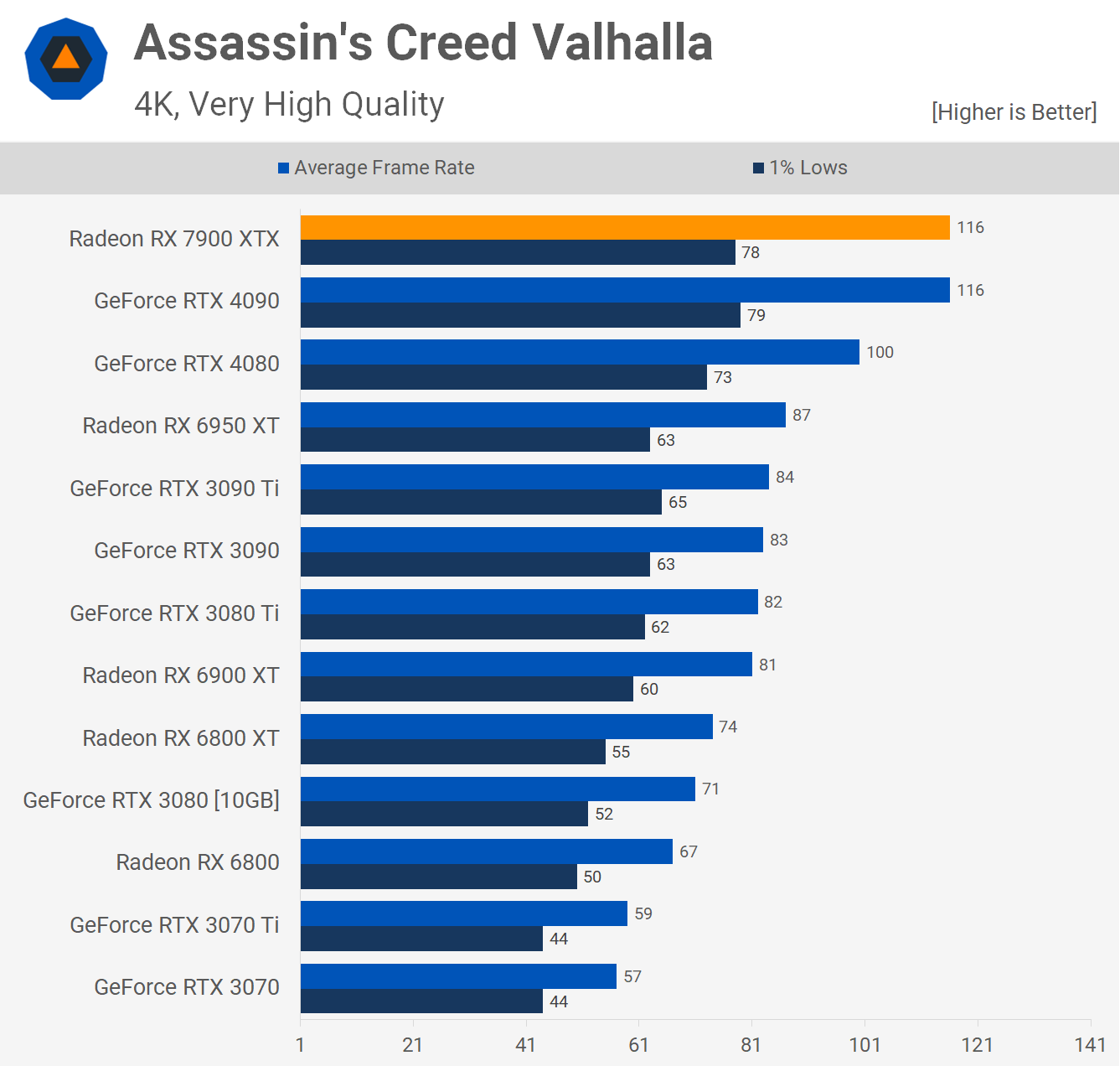

Interestingly, at 4K the 7900 XTX performs better relative to the GeForce GPUs than what we saw at 1440p as here it matched the RTX 4090, making it 16% faster than the 4080 and this time 33% faster than the 6950 XT, which is a decent margin, but also a lot less than the 50-70% margin AMD suggested during the RDNA 3 announcement.

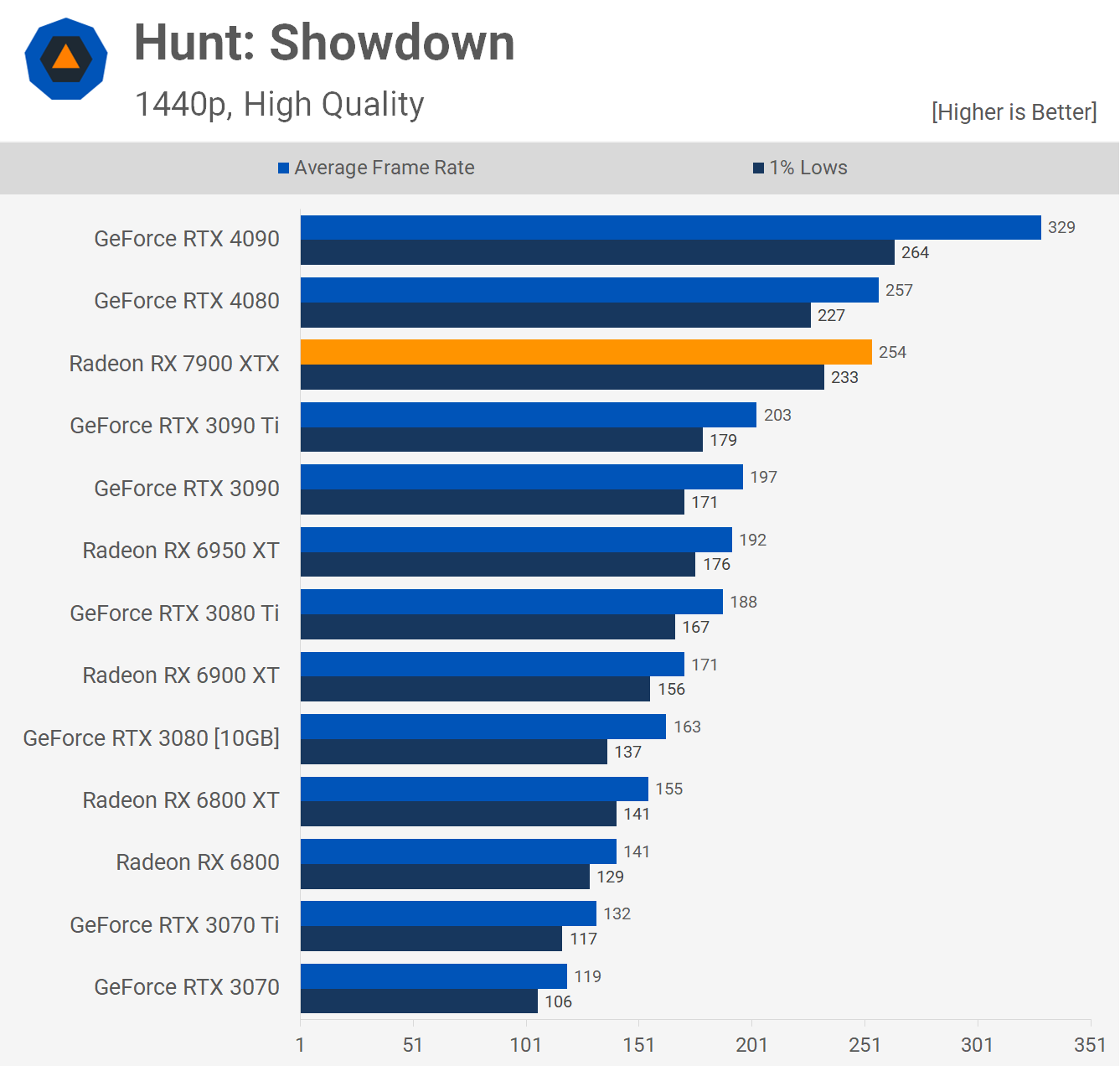

Moving on to Hunt: Showdown, the 7900 XTX and RTX 4080 are neck and neck, delivering just over 250 fps. That made AMD's flagship just 25% faster than the RTX 3090 Ti and 32% faster than the 6950 XT.

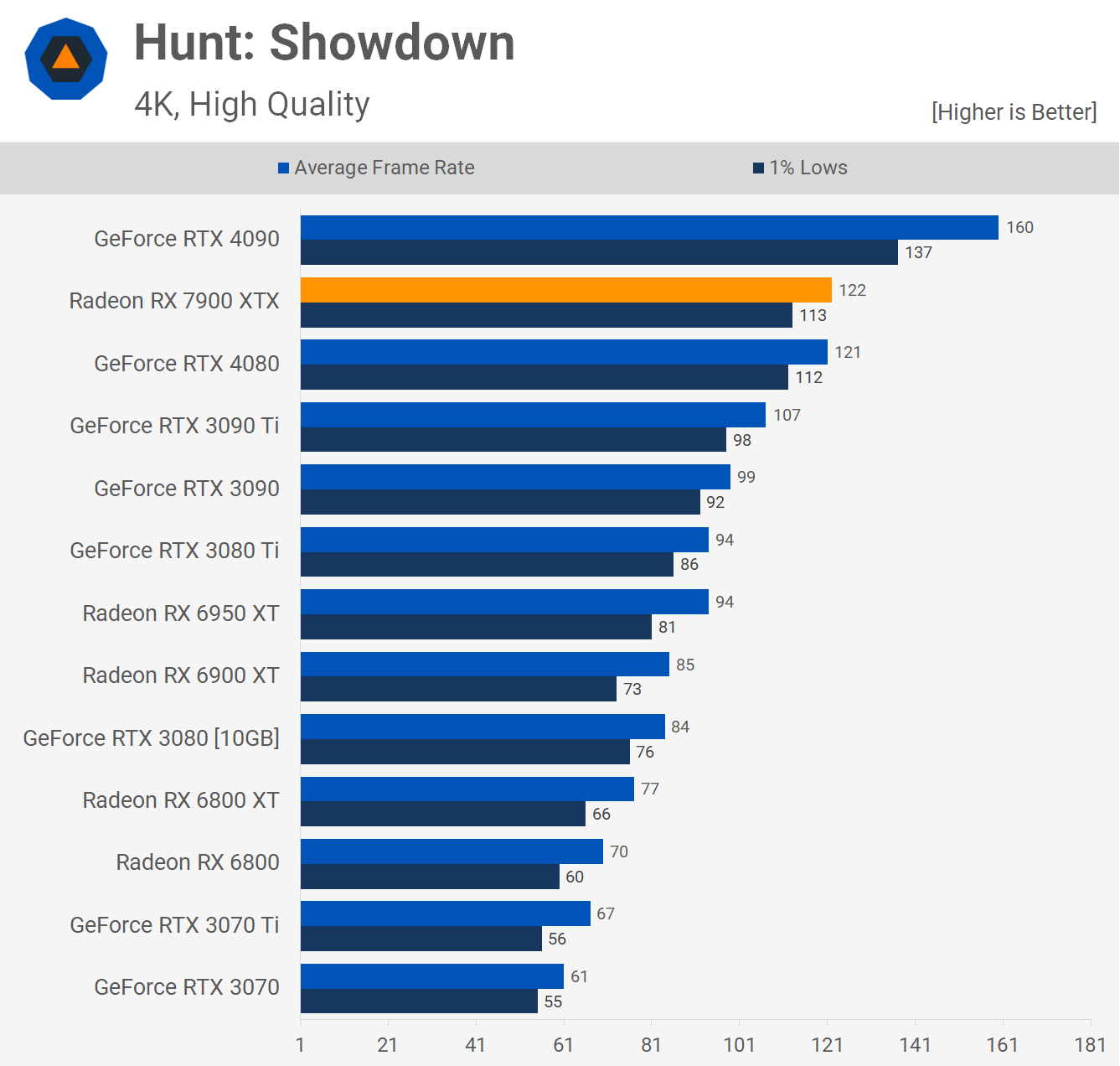

The 4K data is much the same, with the 7900 XTX and RTX 4080 on par, though this time that meant the 7900 XTX was just 14% faster than the 3090 Ti and 30% faster than the 6950 XT.

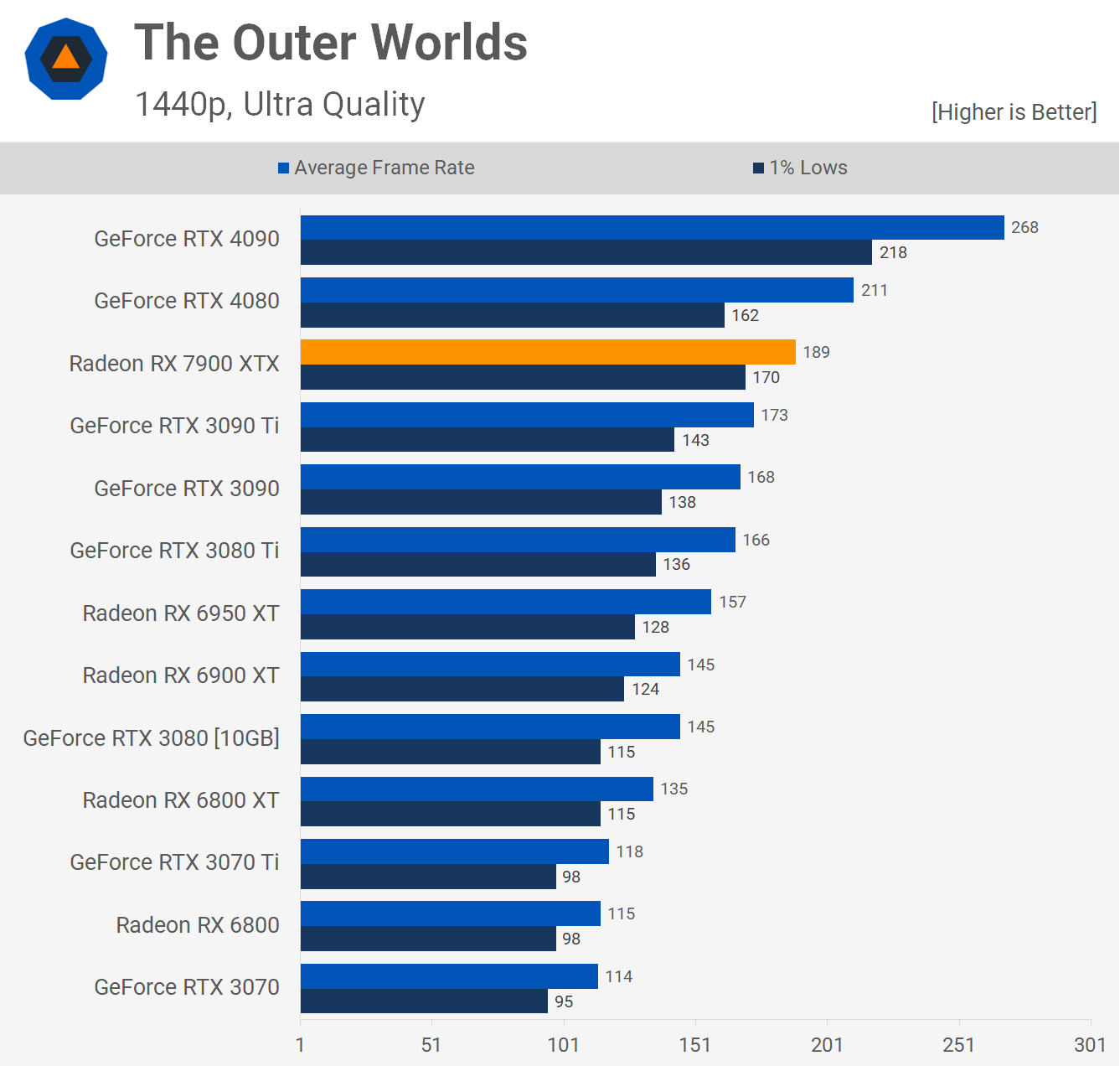

The Outer Worlds uses Unreal Engine 4, which typically favors GeForce GPUs, and that is indeed the case with this title. Although the 7900 XTX was 10% slower than the RTX 4080 when comparing average frame rates, its 1% lows were higher, something we saw a bit of elsewhere in our testing.
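This is a case where the average frame rate and the 1% lows tell different stories. Definitions of "1% low" vary between outlets. The sketch below assumes one common definition, the frame rate implied by the slowest 1% of frames, and uses a synthetic frame-time trace rather than data from this review.

```python
def average_fps(frame_times_ms):
    """Average fps over the run: total frames divided by total time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """Fps implied by the slowest 1% of frames (at least one frame).

    ASSUMPTION: this is one common definition; other tools use the
    99th-percentile frame time instead.
    """
    slowest_first = sorted(frame_times_ms, reverse=True)
    n = max(1, len(slowest_first) // 100)
    worst = slowest_first[:n]
    return 1000.0 * n / sum(worst)


if __name__ == "__main__":
    # SYNTHETIC trace: 990 smooth 10 ms frames plus 10 stutters of 25 ms
    trace = [10.0] * 990 + [25.0] * 10
    print(f"Average: {average_fps(trace):.1f} fps")
    print(f"1% low:  {one_percent_low_fps(trace):.1f} fps")
```

A handful of stutters barely moves the average (about 98.5 fps here) but drags the 1% low down to 40 fps. That is why a card can trail on average frame rate while still feeling smoother.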

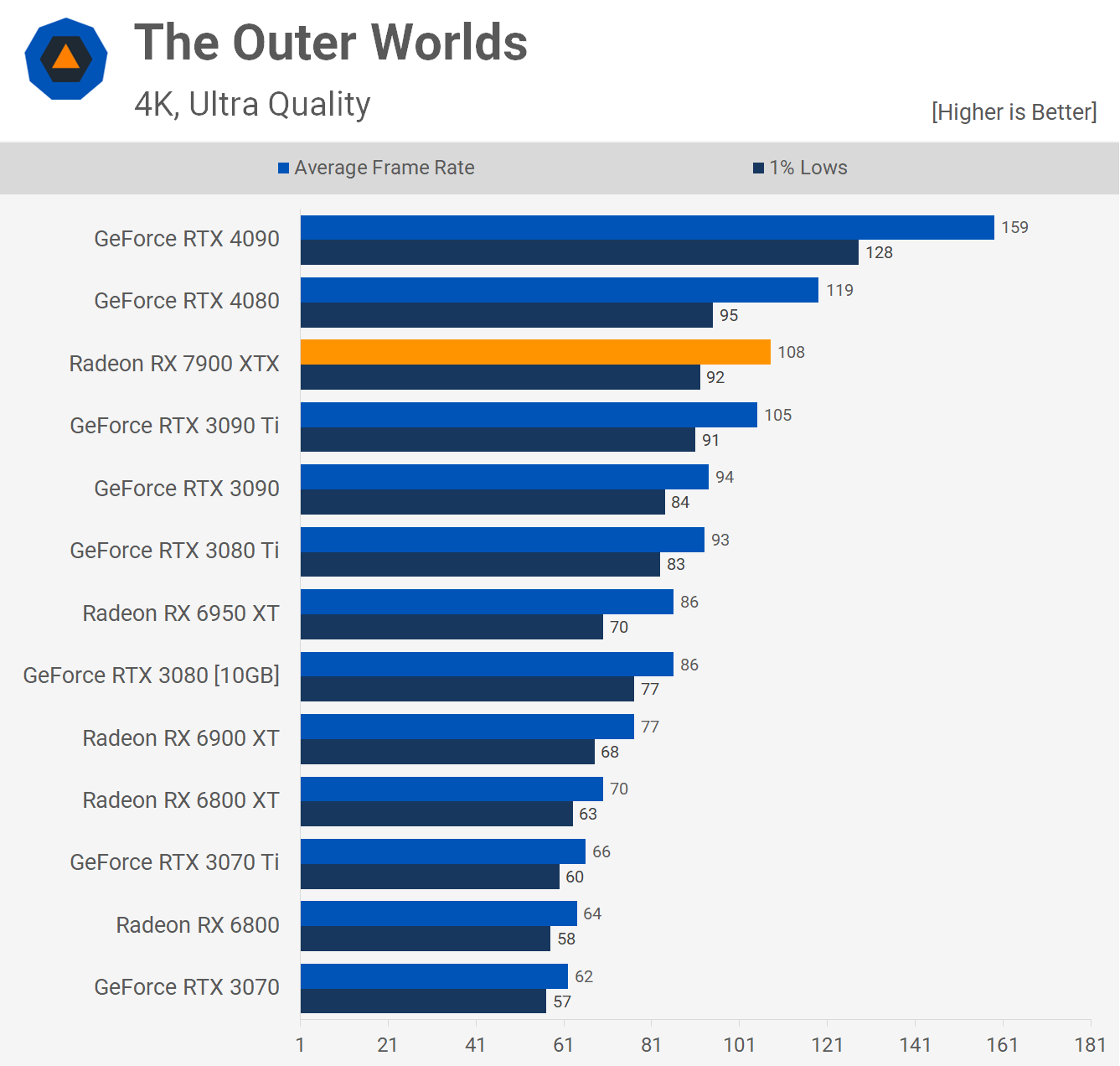

At 4K the 1% lows are more in line with the average frame rates, and here the 7900 XTX was 9% slower than the RTX 4080 and just 26% faster than the 6950 XT.

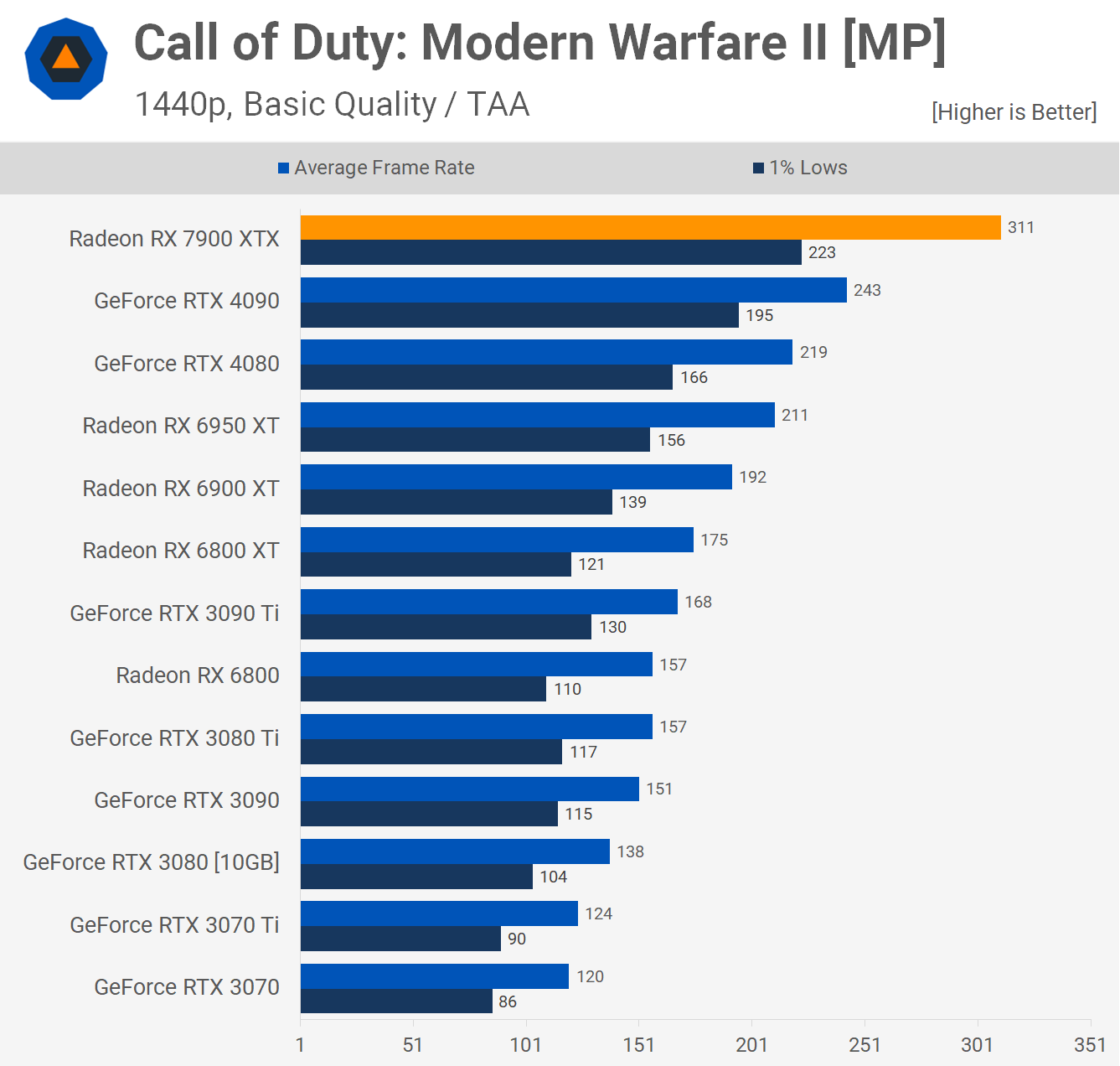

As we've seen in our recent Modern Warfare II benchmarks, this game loves Radeon GPUs, and this is certainly true when looking at the 7900 XTX, pumping out an incredible 311 fps on average at 1440p. That made it a massive 42% faster than the RTX 4080 and 28% faster than the 4090.

These results are certainly outliers in our testing, but Modern Warfare II and Warzone 2 are very popular games, so this is great news for AMD.

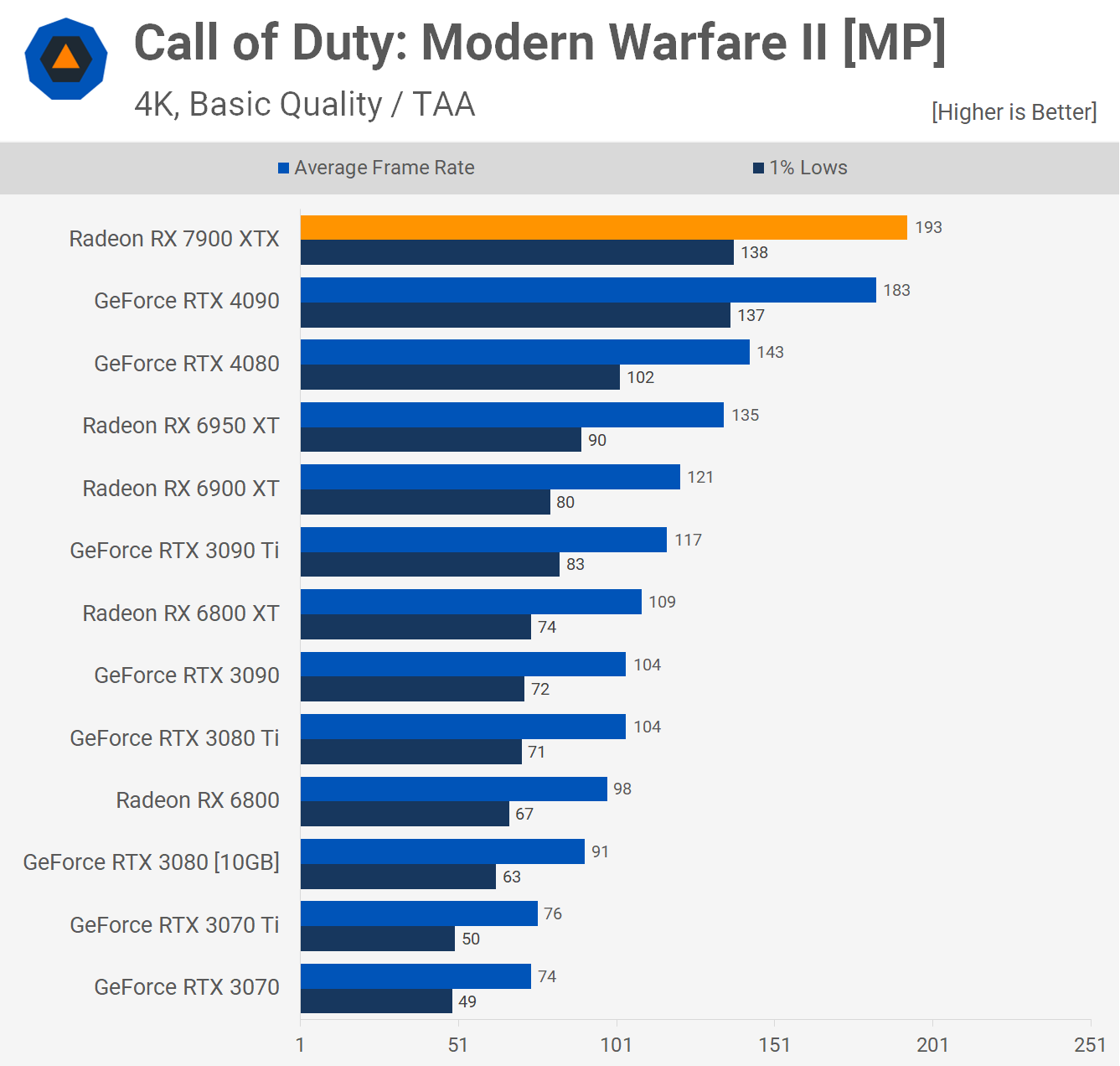

The 7900 XTX even managed to edge out the RTX 4090 at 4K with 193 fps, making it 5% faster than the 4090 and 35% faster than the 4080. Moreover, a 43% improvement over the 6950 XT is impressive, though given AMD's announcement you were probably expecting this sort of margin to be the norm, if not the worst-case scenario, for the new RDNA 3 flagship.

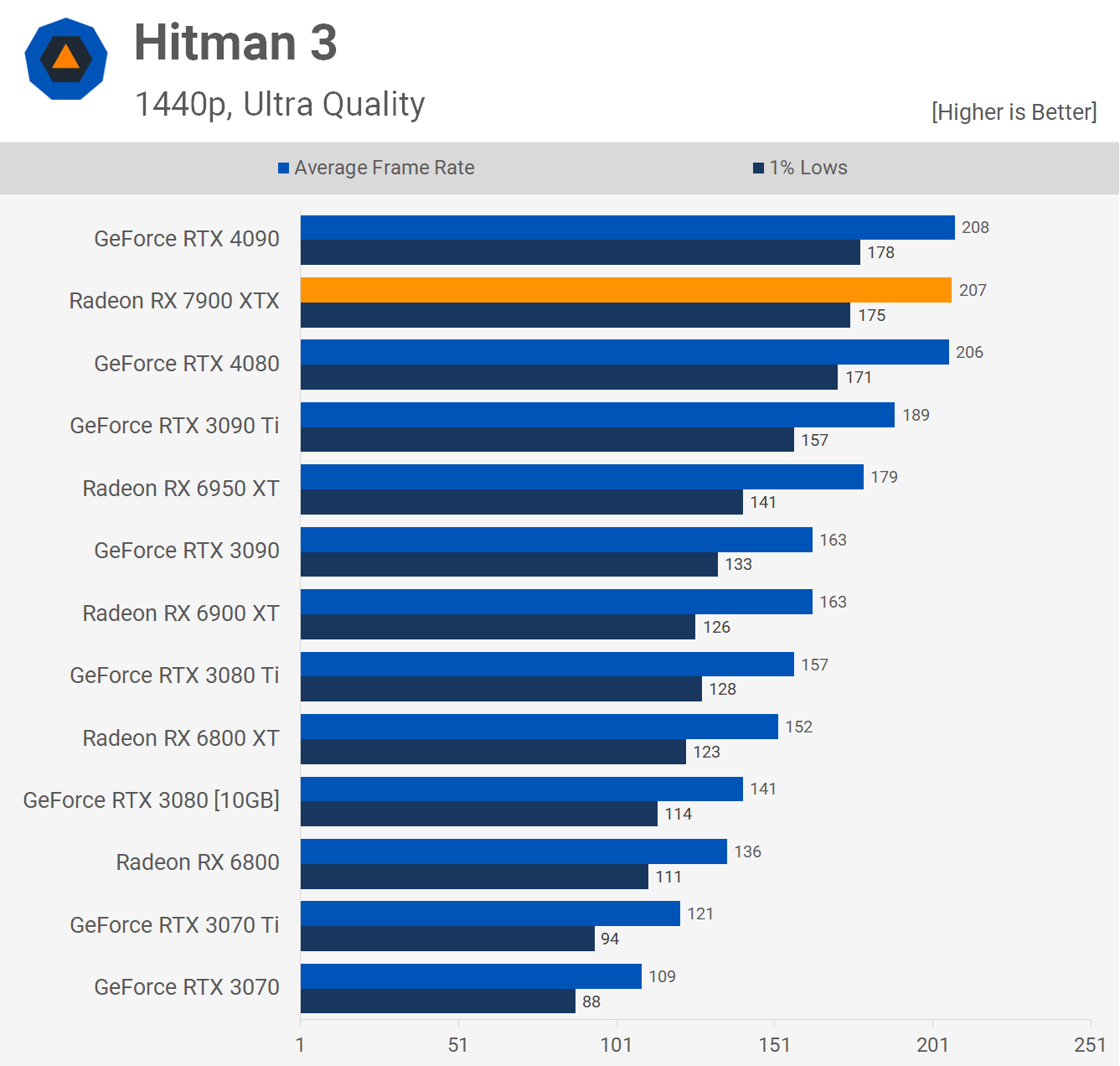

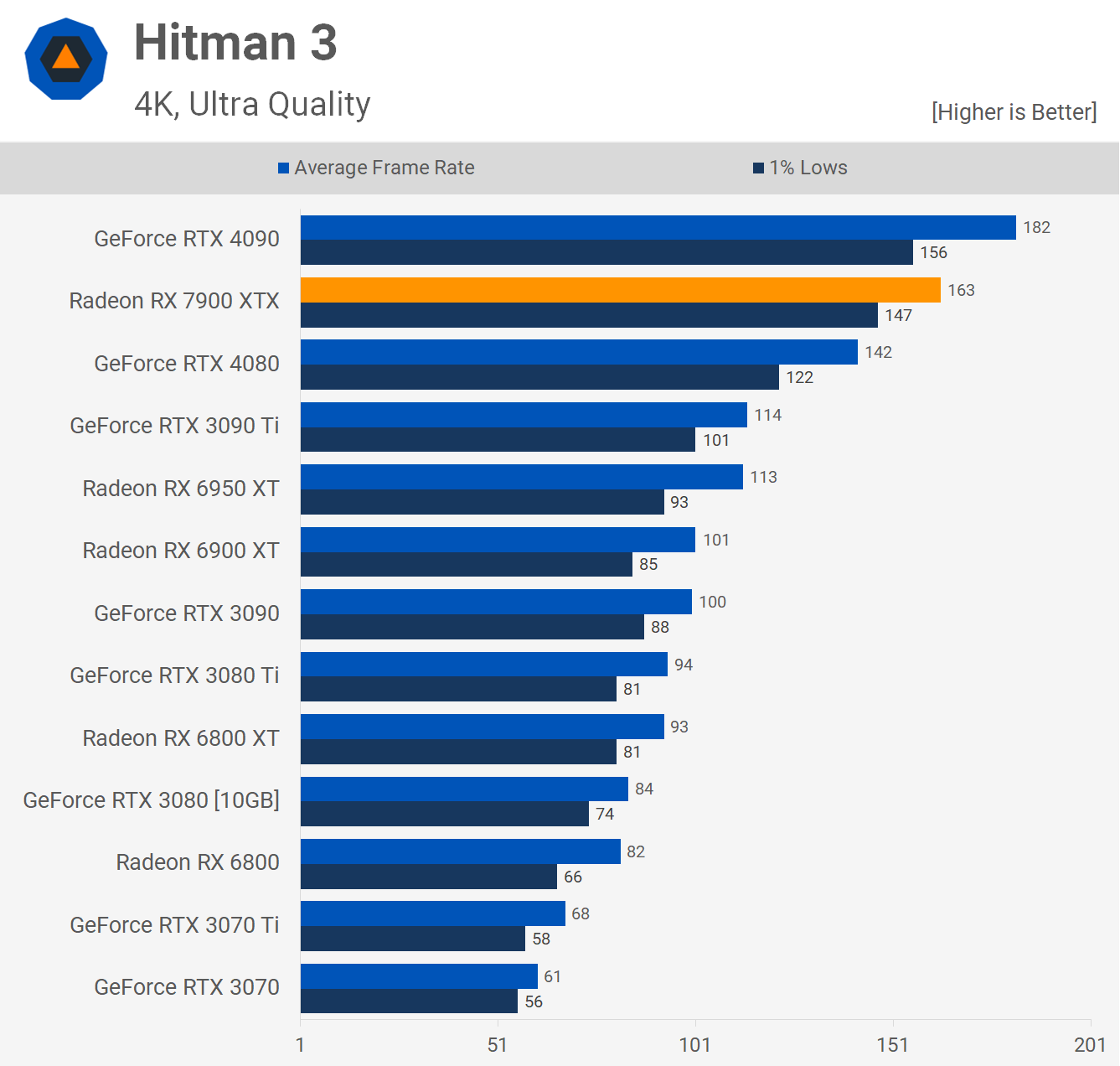

The Hitman 3 results are heavily CPU limited at 1440p with these new next-gen high-end GPUs, so the 7900 XTX was able to match the 4080 and 4090. Bumping the resolution up to 4K showed some great results for the new RDNA 3 GPU, as it was 15% faster than the RTX 4080 and just 10% slower than the RTX 4090. I suspect these are the sort of margins that gamers anticipating the arrival of the 7900 XTX were expecting to be the norm.

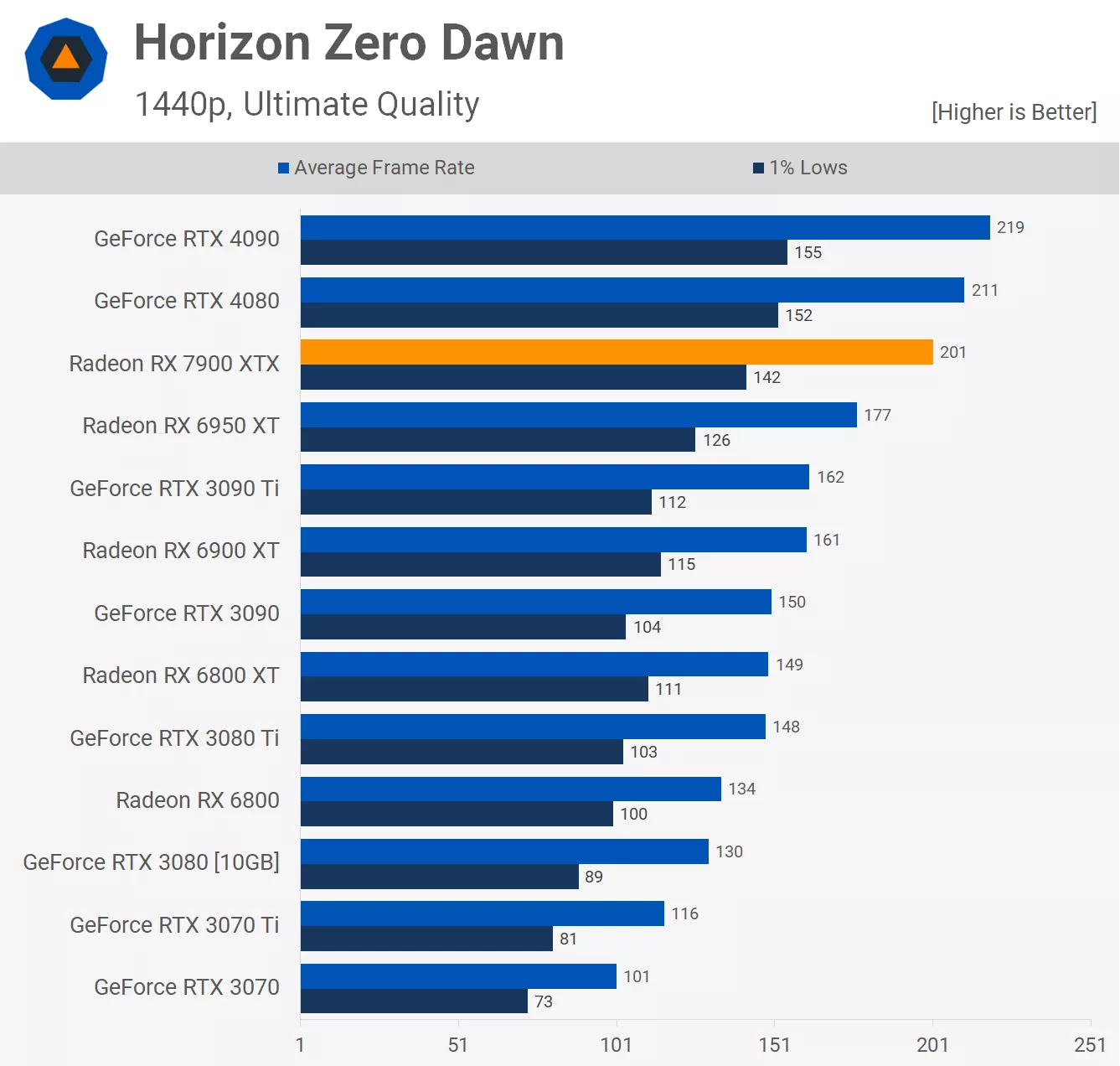

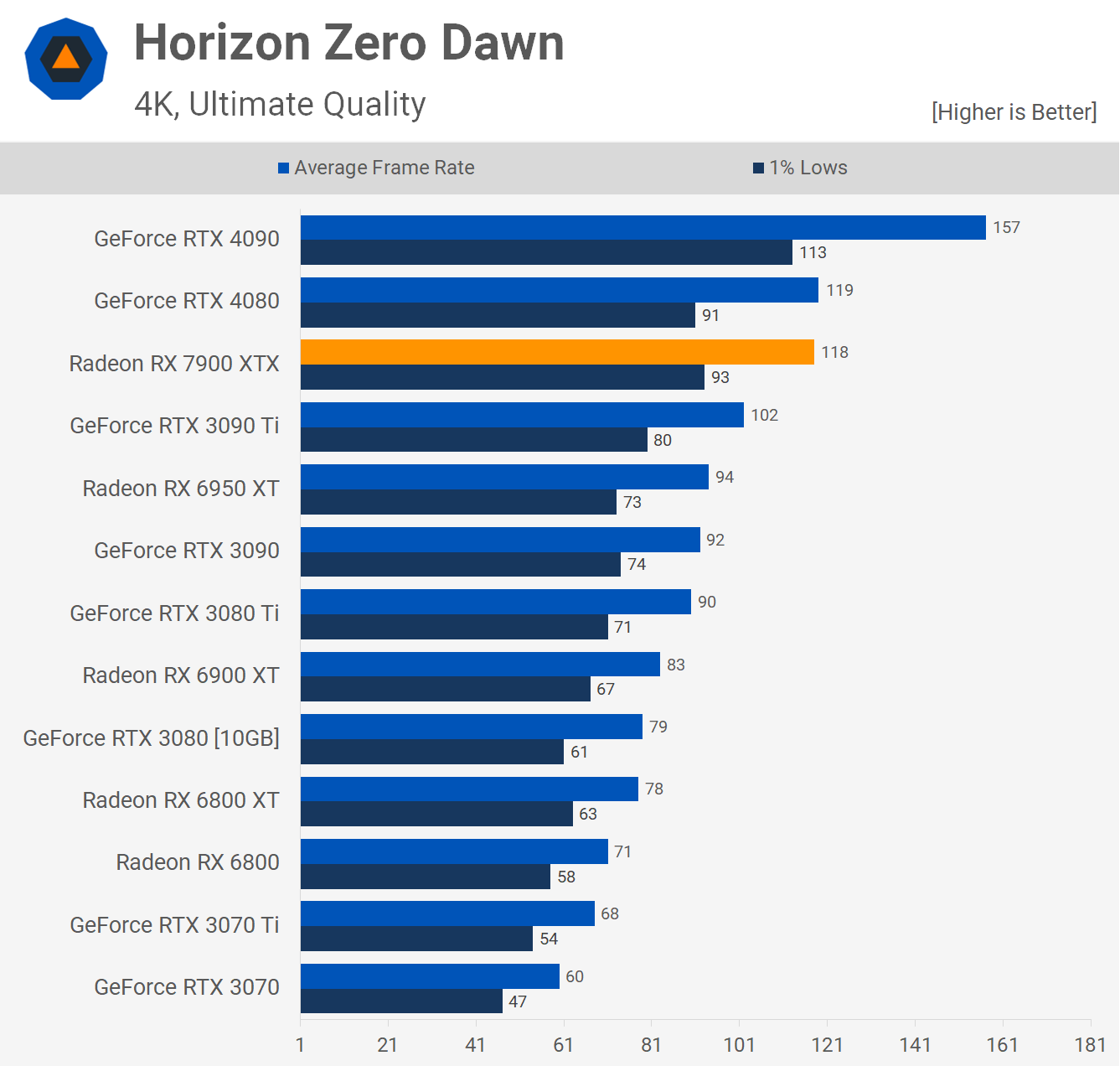

Generally Radeon GPUs perform really well in Horizon Zero Dawn, but I've got to say the 7900 XTX was quite underwhelming here, trailing the RTX 4080 by a 5% margin at 1440p to come in just 14% faster than the 6950 XT, yikes.

The 4K results were a lot better but even so we're looking at RTX 4080-like performance, meaning the 7900 XTX was just 26% faster than the 6950 XT and 16% faster than the RTX 3090 Ti.

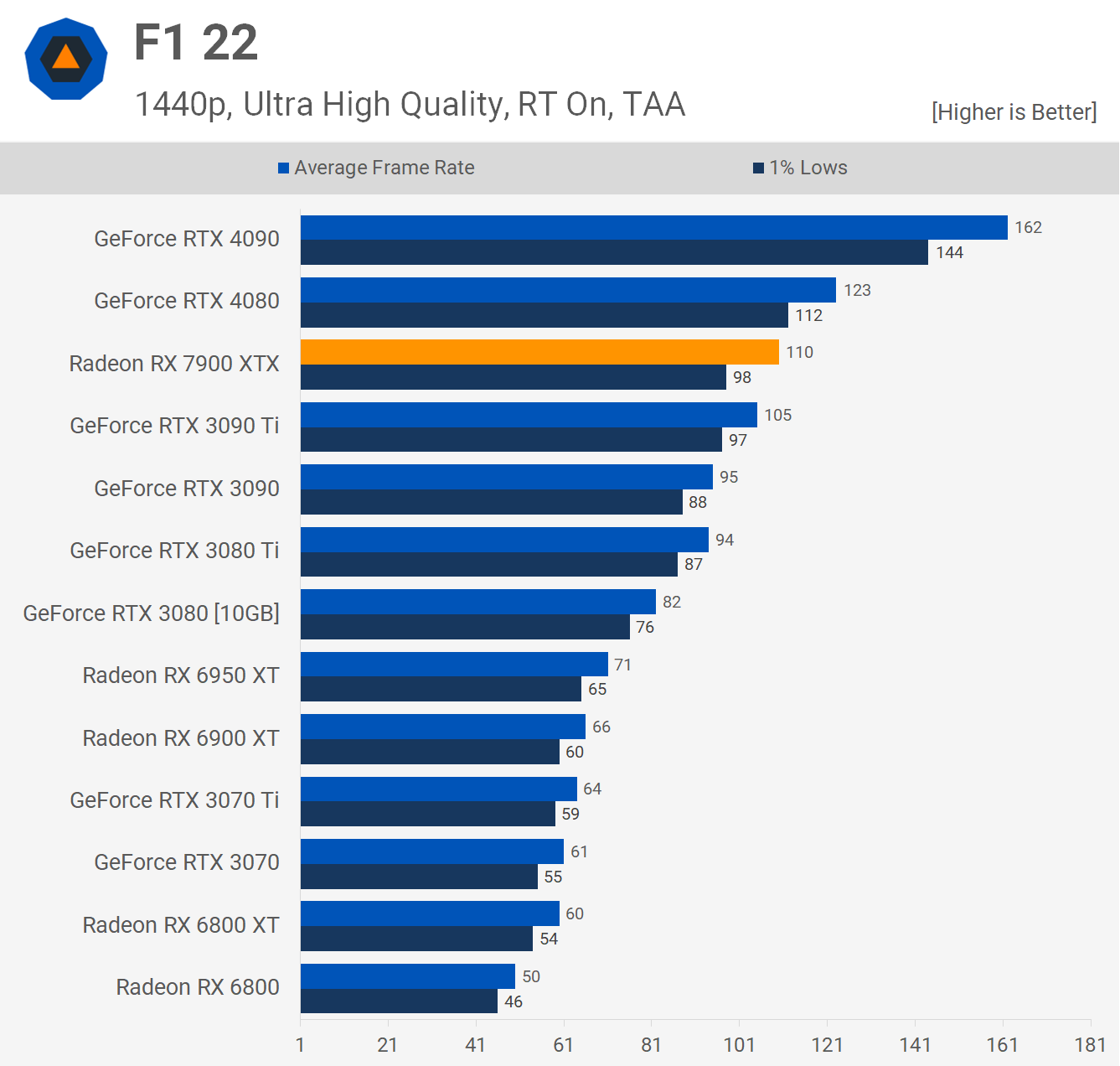

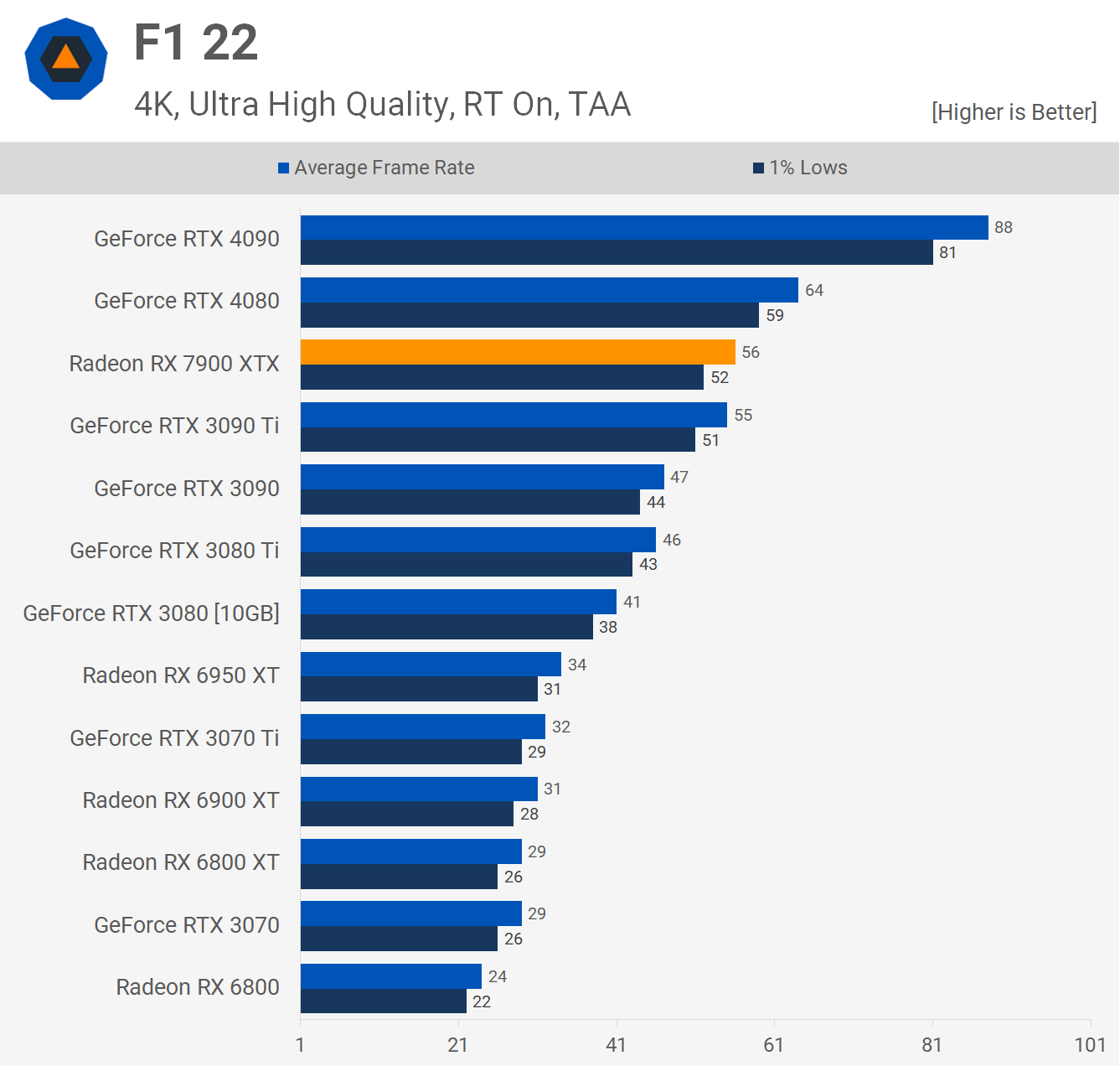

F1 22 enables ray tracing by default with the Ultra High preset, and this is why the 7900 XTX is slower than expected here: AMD is still well behind when it comes to RT performance, and we'll look more at this shortly.

For now, we can see that when playing F1 22 using the highest quality preset, the 7900 XTX is 11% slower than the RTX 4080 and a mere 5% faster than the 3090 Ti. In this example it is 55% faster than the 6950 XT, suggesting that RDNA 3's ray tracing is improved over RDNA 2.

Then at 4K, the 7900 XTX drops to RTX 3090 Ti levels with 56 fps on average, making it 13% slower than the RTX 4080 and 36% slower than the 4090. A bit of a wipeout there for AMD. That said, thanks to the use of ray tracing it was a massive 65% faster than the 6950 XT, so there's that.

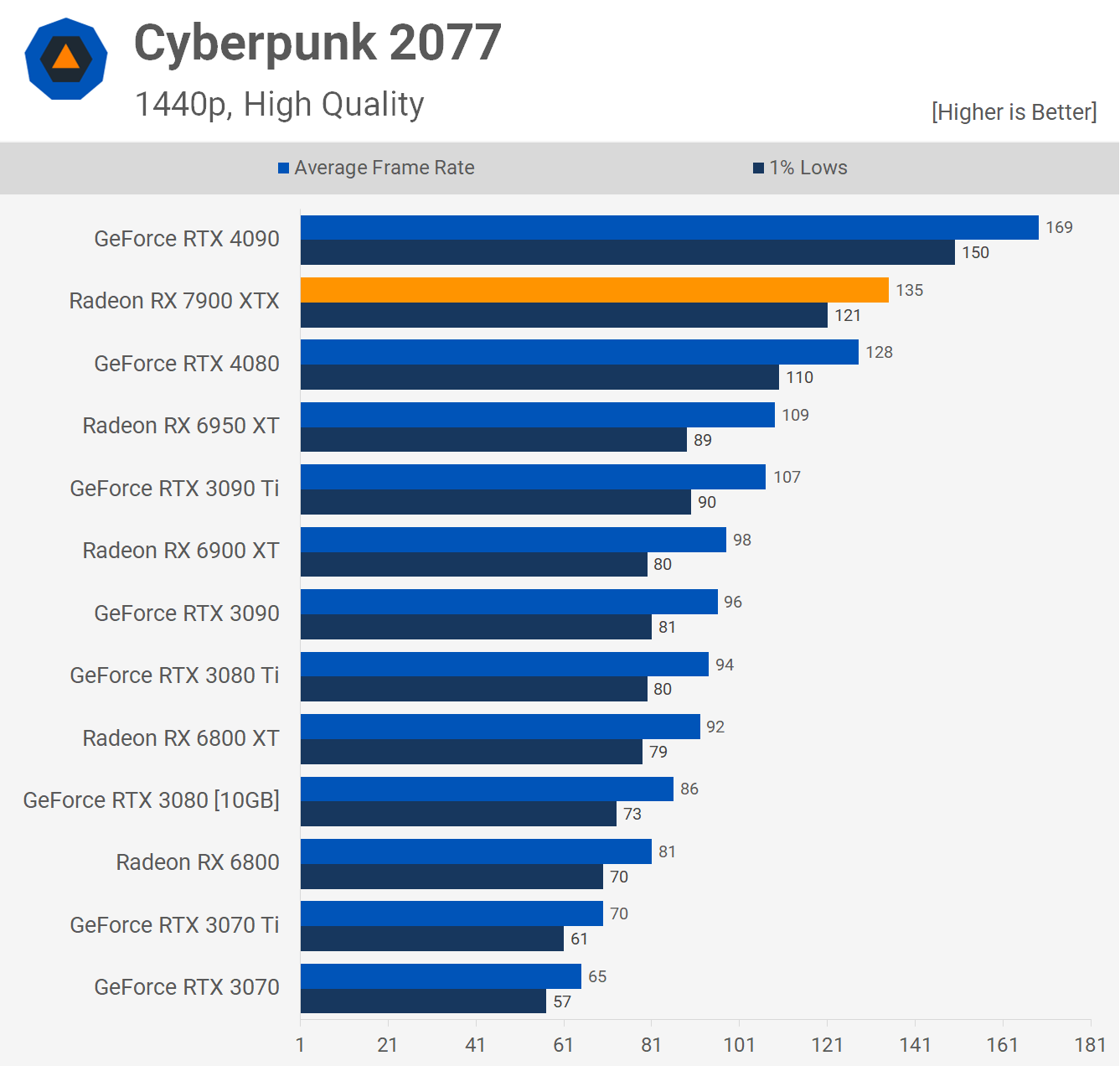

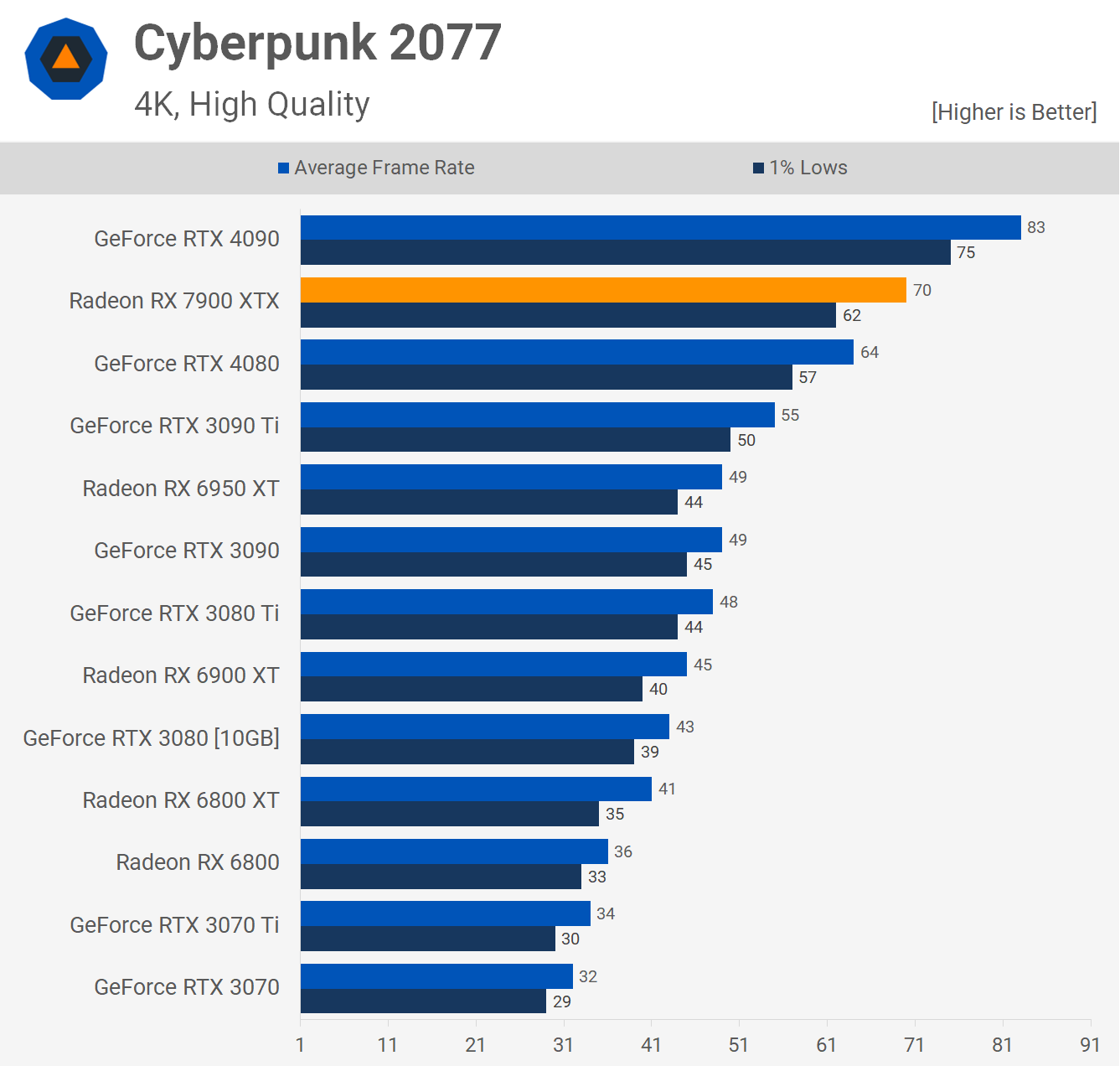

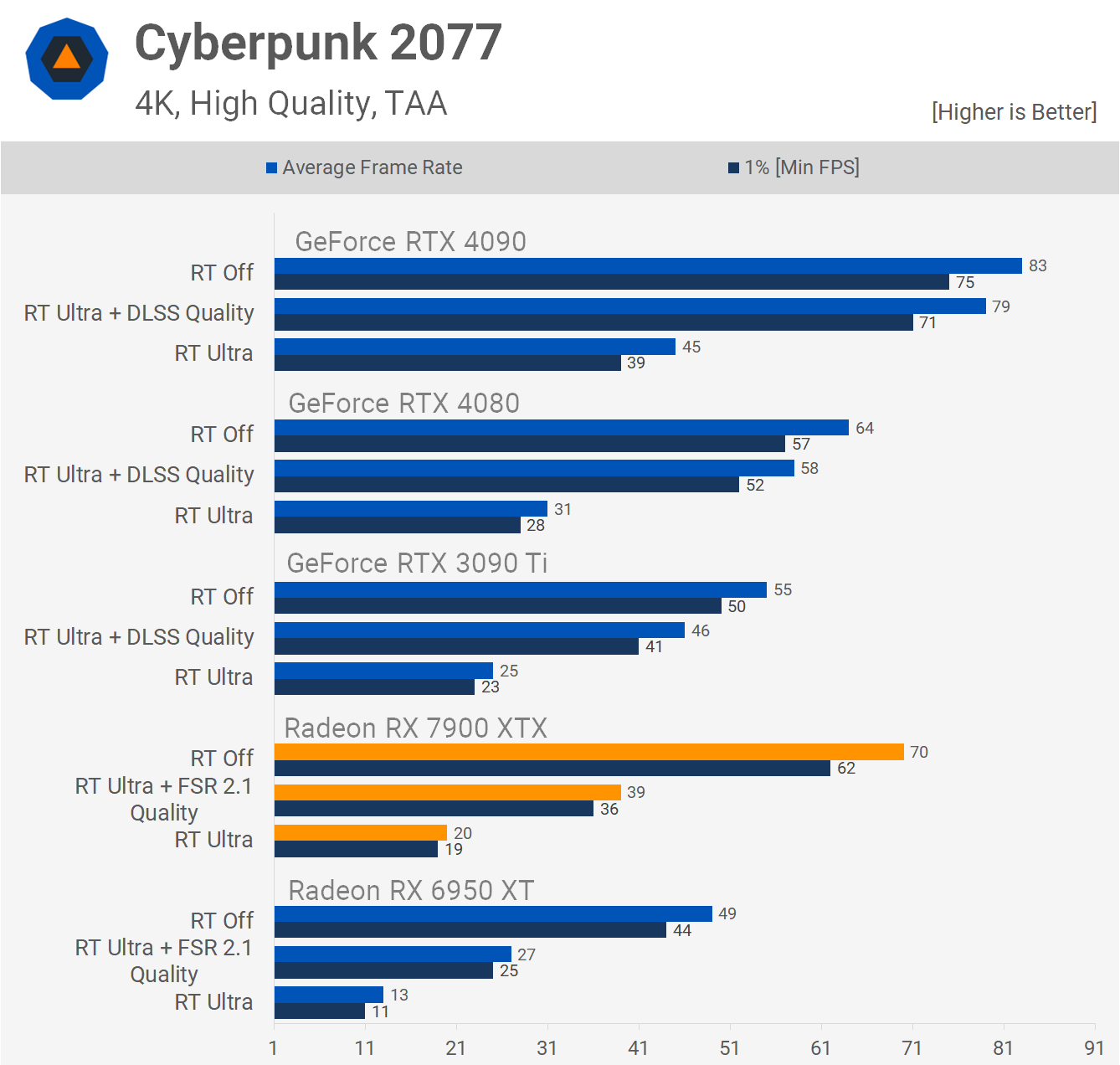

The Cyberpunk 2077 performance at 1440p looks quite good for the 7900 XTX as it was 5% faster than the RTX 4080, delivering 135 fps on average. That said, when compared to previous generation products it was just 24% faster than the 6950 XT and 26% faster than the 3090 Ti.

The 7900 XTX does remain strong at the 4K resolution and was able to extend its margin over the RTX 4080 out to 9%, with 70 fps on average. That made it 27% faster than the 3090 Ti and 43% faster than the 6950 XT which is a decent margin.

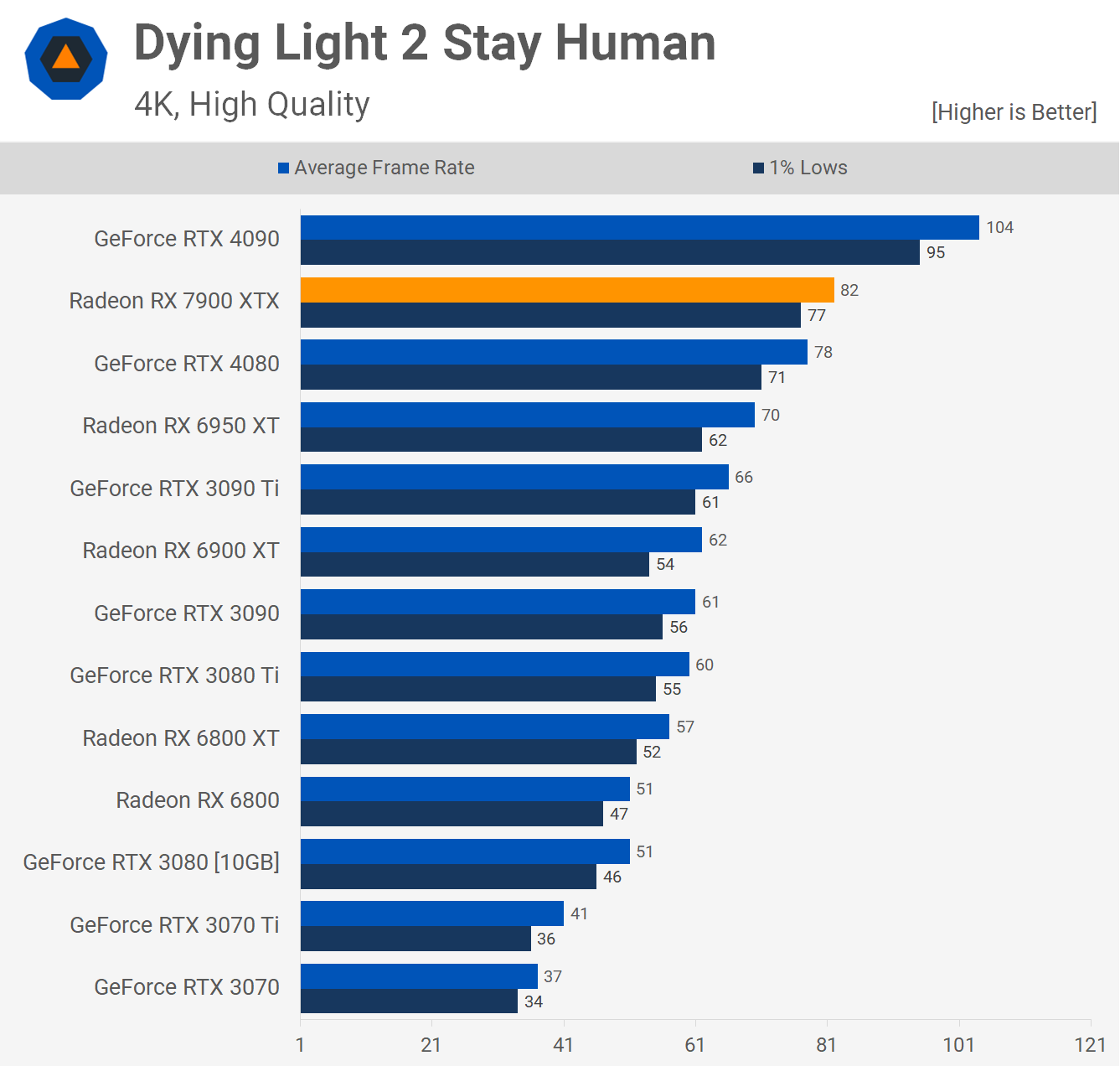

The Dying Light 2 results are a bit underwhelming at 1440p as the 7900 XTX was only able to match the average frame rate of the RTX 4080 with much lower 1% lows. It was also just 17% faster than the 6950 XT which is no doubt a very disappointing result for those of you with a previous generation flagship hoping to upgrade.

The 4K data is more favorable as the 7900 XTX was 5% faster than the RTX 4080 and 1% lows look a lot better. That said, the result relative to AMD's own 6950 XT was very disappointing as this new GPU was just 17% faster.

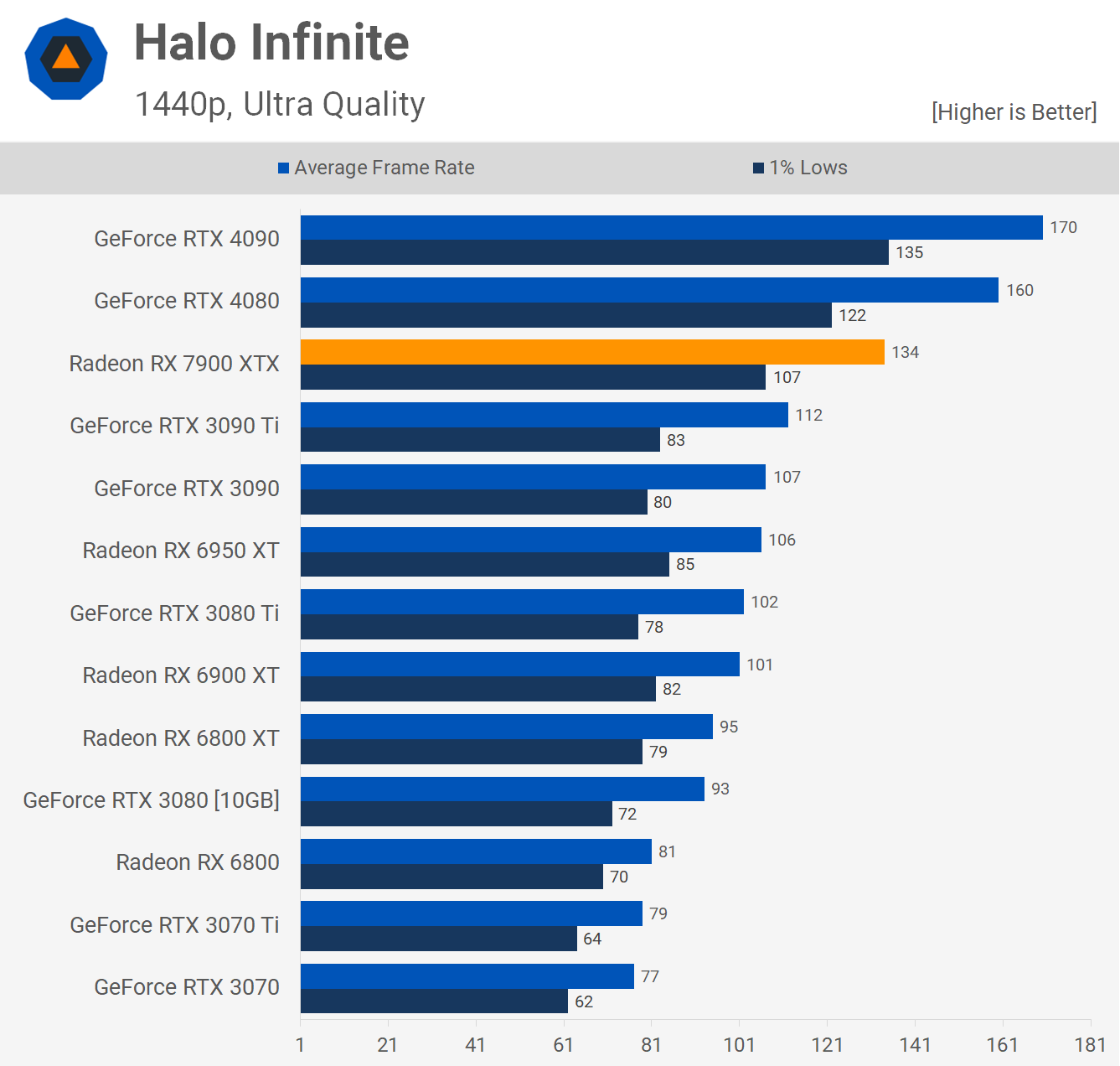

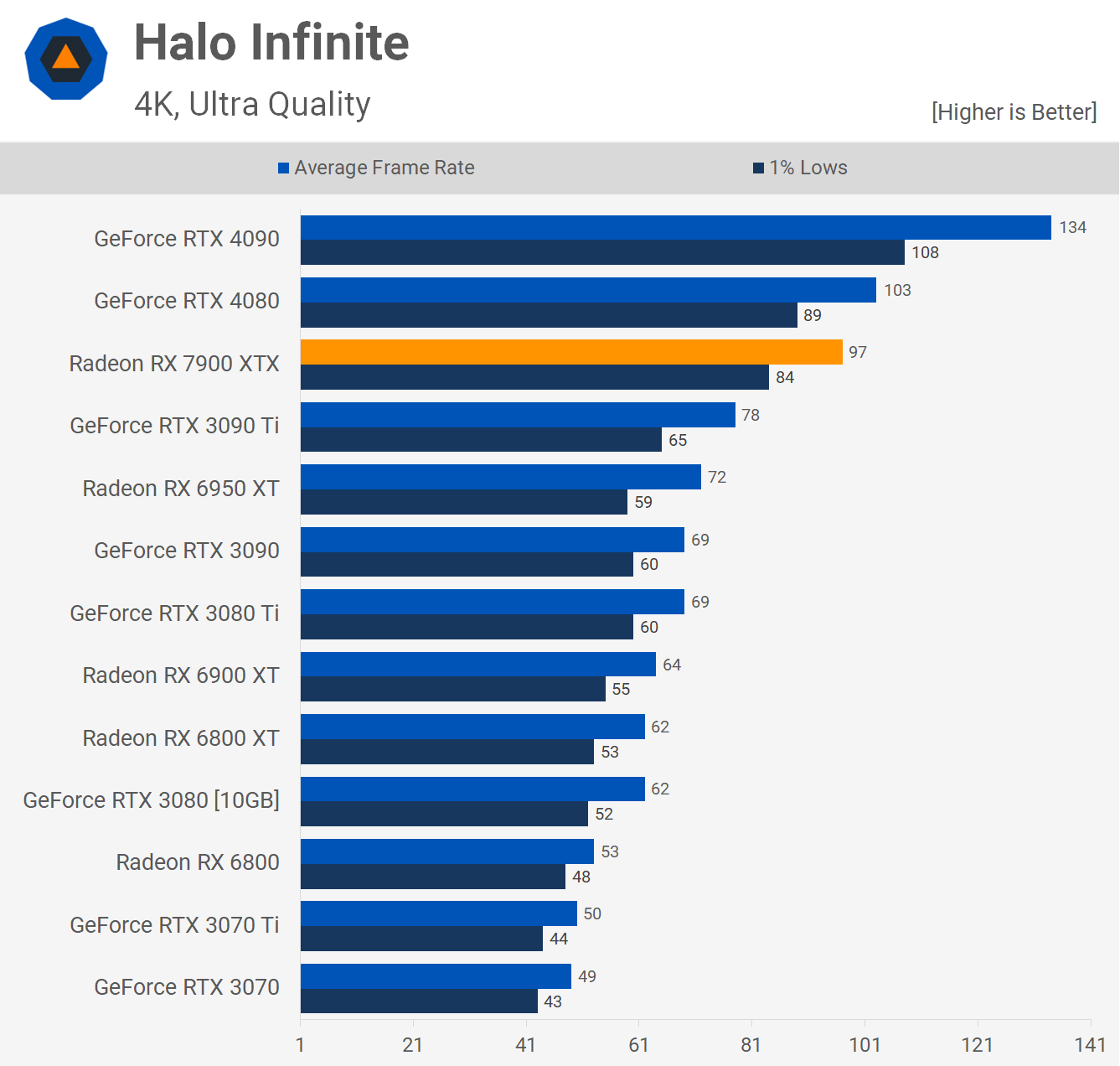

Halo Infinite is a game AMD skipped over for their review guide, opting not to show performance, and that's likely because the 7900 XTX is very weak here. AMD says they're looking into it, so it's possible a future driver corrects performance, but for now this is what it looks like.

This once pro-AMD title sees the 7900 XTX trailing the RTX 4080 by a rather large 16% margin, making it just 21% faster than the RTX 3090 Ti and 26% faster than the 6950 XT – disappointing compared to previous generation flagship parts.

The 7900 XTX shapes up a bit better at 4K as it was 6% slower than the RTX 4080 and a more impressive 24% faster than the RTX 3090 Ti and 35% faster than the 6950 XT.

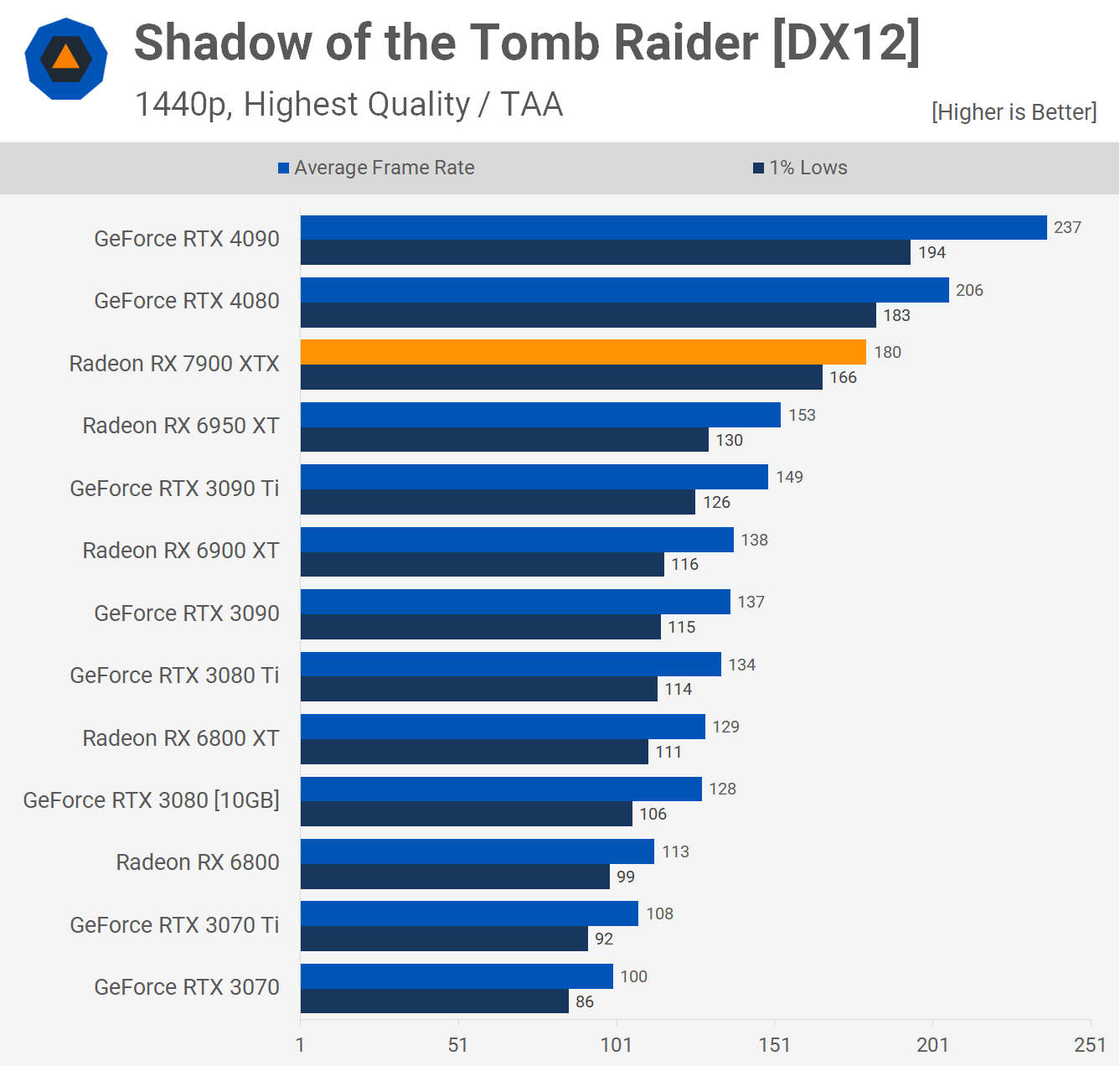

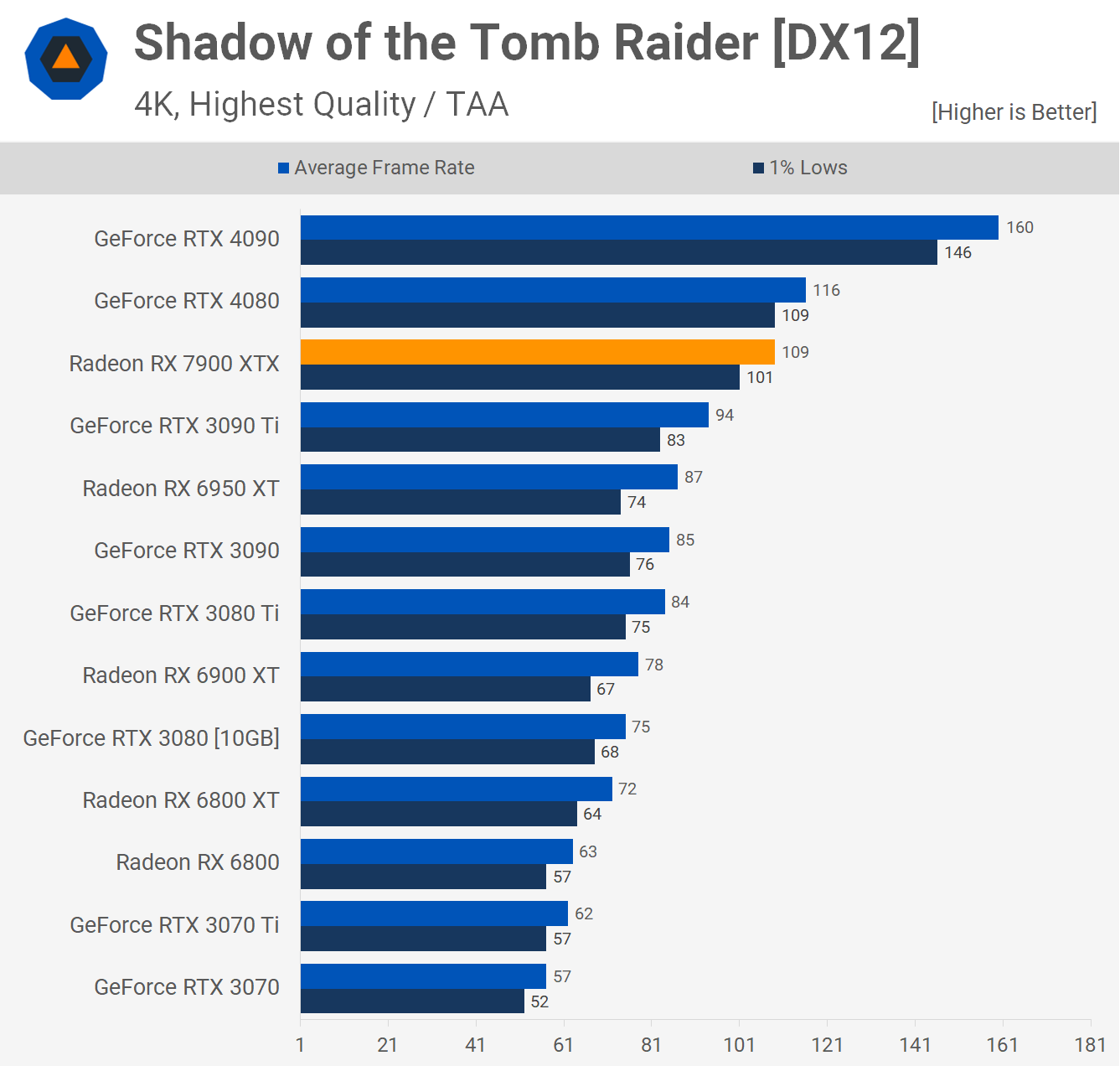

Last up we have Shadow of the Tomb Raider, and we were quite surprised to find the 7900 XTX trailing the 4080 by a 13% margin; worse still, this meant it was just 18% faster than the 6950 XT.

The margins did improve a little at 4K, where it was 6% slower than the RTX 4080 and 25% faster than the Radeon 6950 XT. That's better, but still quite poor overall.

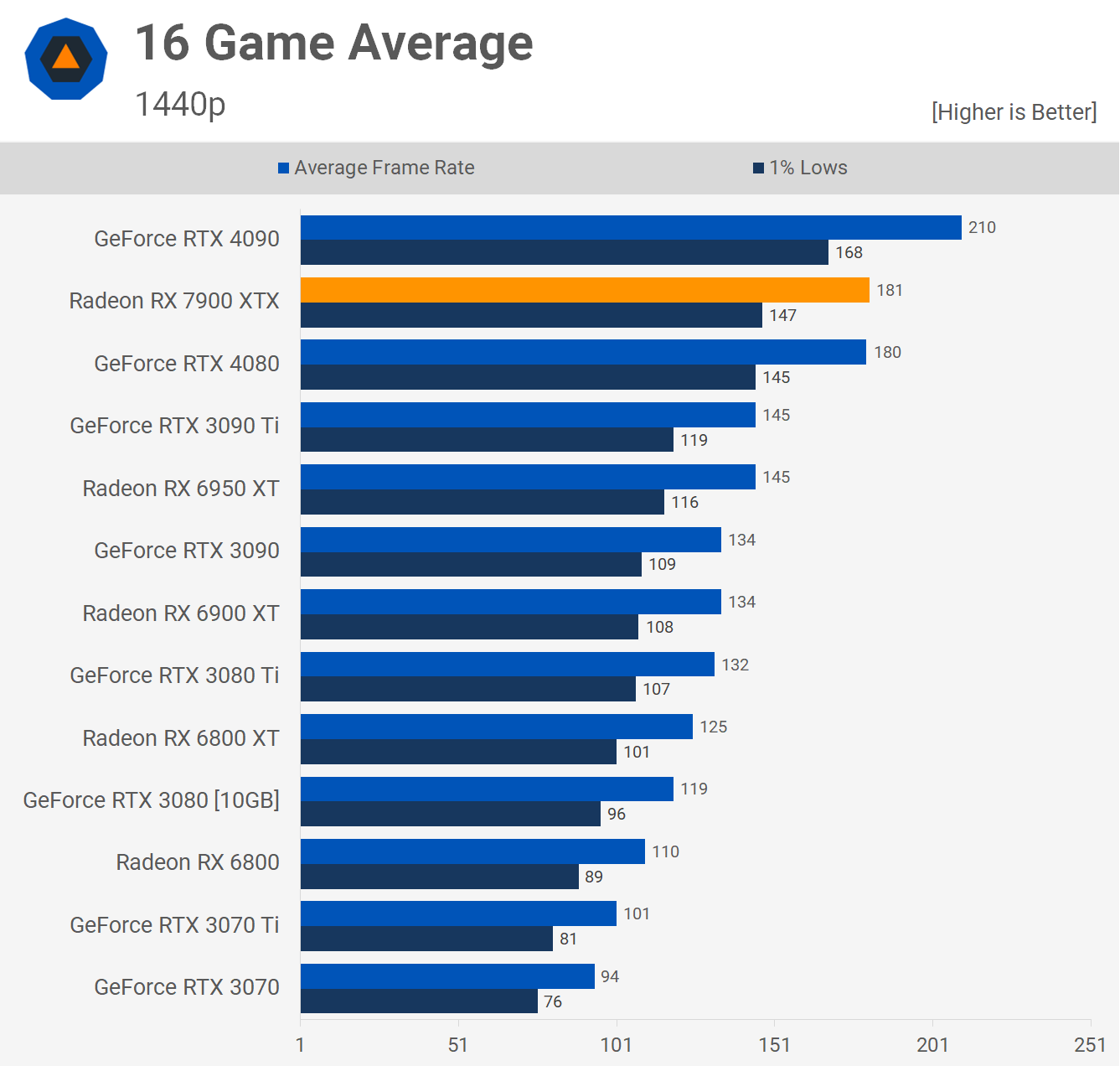

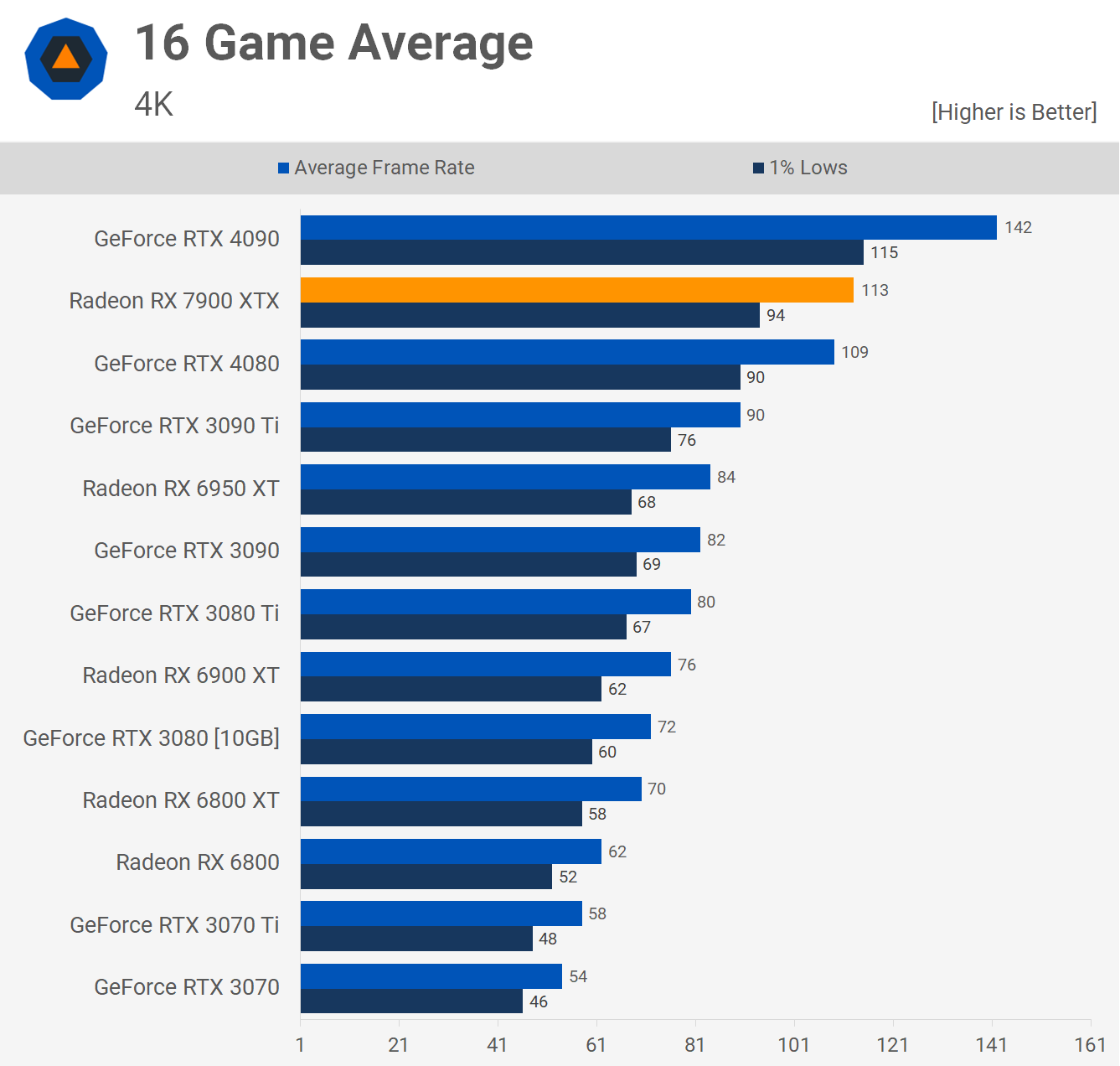

16 Game Average

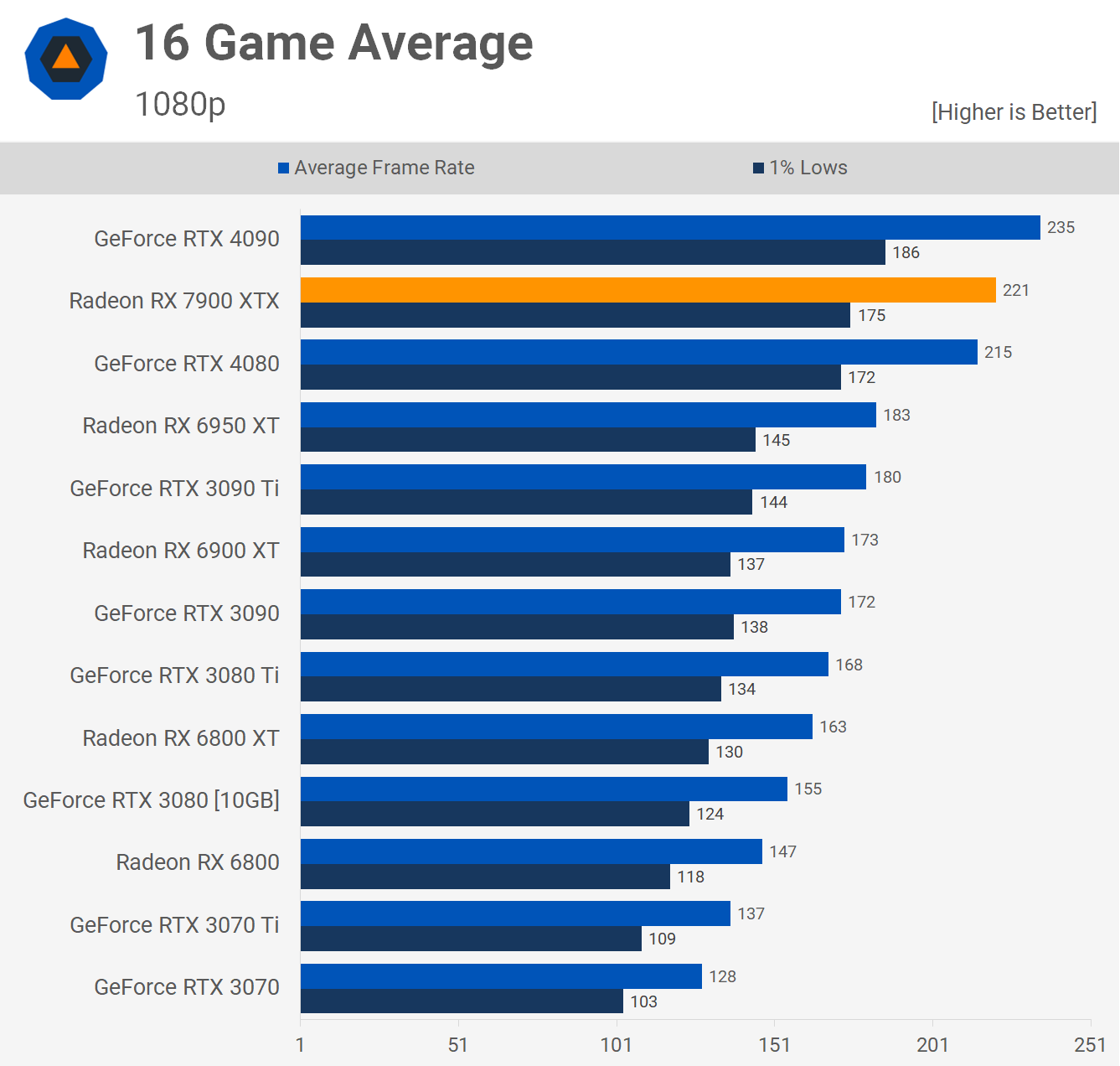

Looking at the 16-game average, we'll start with the 1440p data. Here the Radeon 7900 XTX and GeForce RTX 4080 were neck and neck at around 180 fps.

That means the 7900 XTX is 17% slower than the RTX 4090, which for the price is obviously a great result, though it's also only 25% faster than the RTX 3090 Ti and Radeon 6950 XT. It was also ~35% faster than the RTX 3090 and 6900 XT, so for those with previous generation parts it might not be worth the upgrade.

Now, at 4K the Radeon 7900 XTX was 20% slower than the RTX 4090, but 4% faster than the GeForce RTX 4080, so we're looking at 4080-like performance again. That made the Radeon 7900 XTX 26% faster than the 3090 Ti and 35% faster than the 6950 XT.

That's not nearly as good as the 50% or greater many of you were hoping for. The new flagship was 49% faster than AMD's 6900 XT though.

Although we didn't go over the 1080p data as it's largely CPU limited, we've got the averages for you, so let's take a quick look.

The Radeon 7900 XTX and GeForce RTX 4080 are basically on par, but this time the Radeon GPU is just 6% slower than the 4090, but only because of the CPU bottleneck. The 7900 XTX was also just 21% faster than the 6950 XT.
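How a multi-game average is computed can shift the headline margin, especially with outliers like Modern Warfare II in the mix. The review doesn't state its method. The sketch below contrasts two common choices, a plain average of frame rates and the geometric mean of per-game ratios, using hypothetical numbers rather than data from this review.

```python
from statistics import fmean, geometric_mean
from typing import Sequence


def fps_average_ratio(fps_a: Sequence[float], fps_b: Sequence[float]) -> float:
    """Ratio of plain average frame rates; high-fps games carry more weight."""
    return fmean(fps_a) / fmean(fps_b)


def geomean_ratio(fps_a: Sequence[float], fps_b: Sequence[float]) -> float:
    """Geometric mean of per-game ratios; every game carries equal weight."""
    return geometric_mean([a / b for a, b in zip(fps_a, fps_b)])


# HYPOTHETICAL two-game example: card A wins a high-fps title
# by 20% and loses a demanding one by the same ratio.
card_a = [300.0, 50.0]
card_b = [250.0, 60.0]

if __name__ == "__main__":
    print(f"Average-fps ratio: {fps_average_ratio(card_a, card_b):.3f}")
    print(f"Geomean of ratios: {geomean_ratio(card_a, card_b):.3f}")
```

Here the plain fps average puts card A about 13% ahead, while the geometric mean of ratios calls the two cards even. The high-frame-rate game dominates the first method but not the second.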

Ray Tracing + DLSS Performance

Now let's take a look at ray tracing performance, with and without upscaling. If you recall, the Radeon 7900 XTX was 13% slower than the RTX 4080 in our F1 22 test above, as the game enables RT effects by default when using the Ultra High preset.

Here with the Ultra High preset but with RT effects disabled, the 7900 XTX pumped out 216 fps, making it 13% faster than the RTX 4080 and 51% faster than the 6950 XT.

Now with RT + upscaling enabled – FSR 1.0 for Radeon GPUs – the Radeon 7900 XTX was still 10% faster than the RTX 4080 using DLSS, but this is hardly an apples to apples comparison. At native 4K, the 7900 XTX was 13% slower than the 4080, but a massive 65% faster than the 6950 XT, so there are good gains to be had over previous generation RDNA 2 GPUs when using ray tracing.

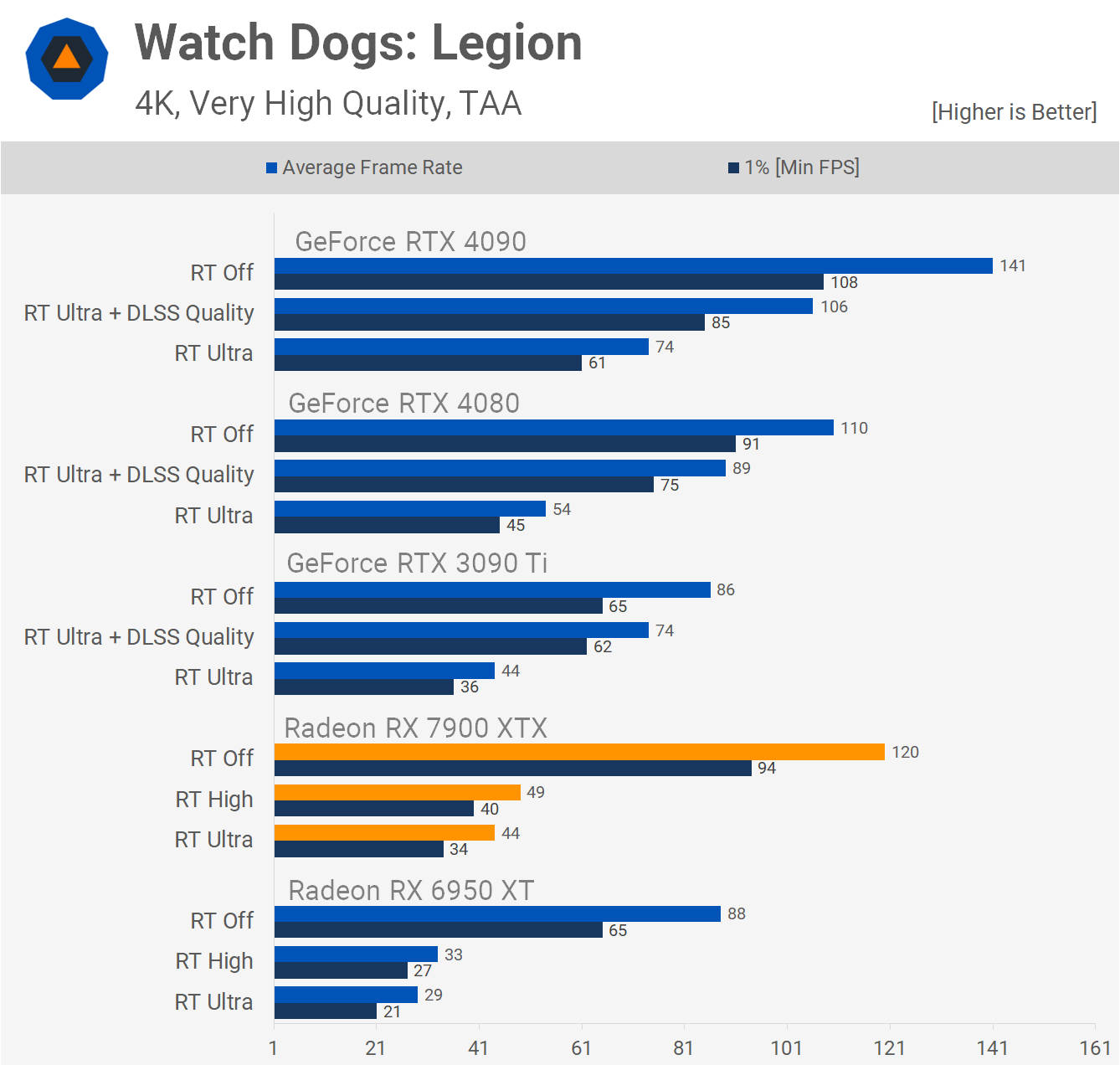

Next we have Watch Dogs: Legion which doesn't support FSR, so upscaling is only available for GeForce GPUs. In short, using the Very High preset with ray tracing disabled the 7900 XTX was 9% faster than the RTX 4080 and 36% faster than the 6950 XT.

With ray tracing set to ultra but no upscaling the 7900 XTX was 19% slower than the RTX 4080 with just 44 fps on average, but was 52% faster than the 6950 XT. So some decent gains there for RDNA 3 over RDNA 2, but it also meant the 7900 XTX was only up to speed with last generation's 3090 Ti when it came to RT performance.

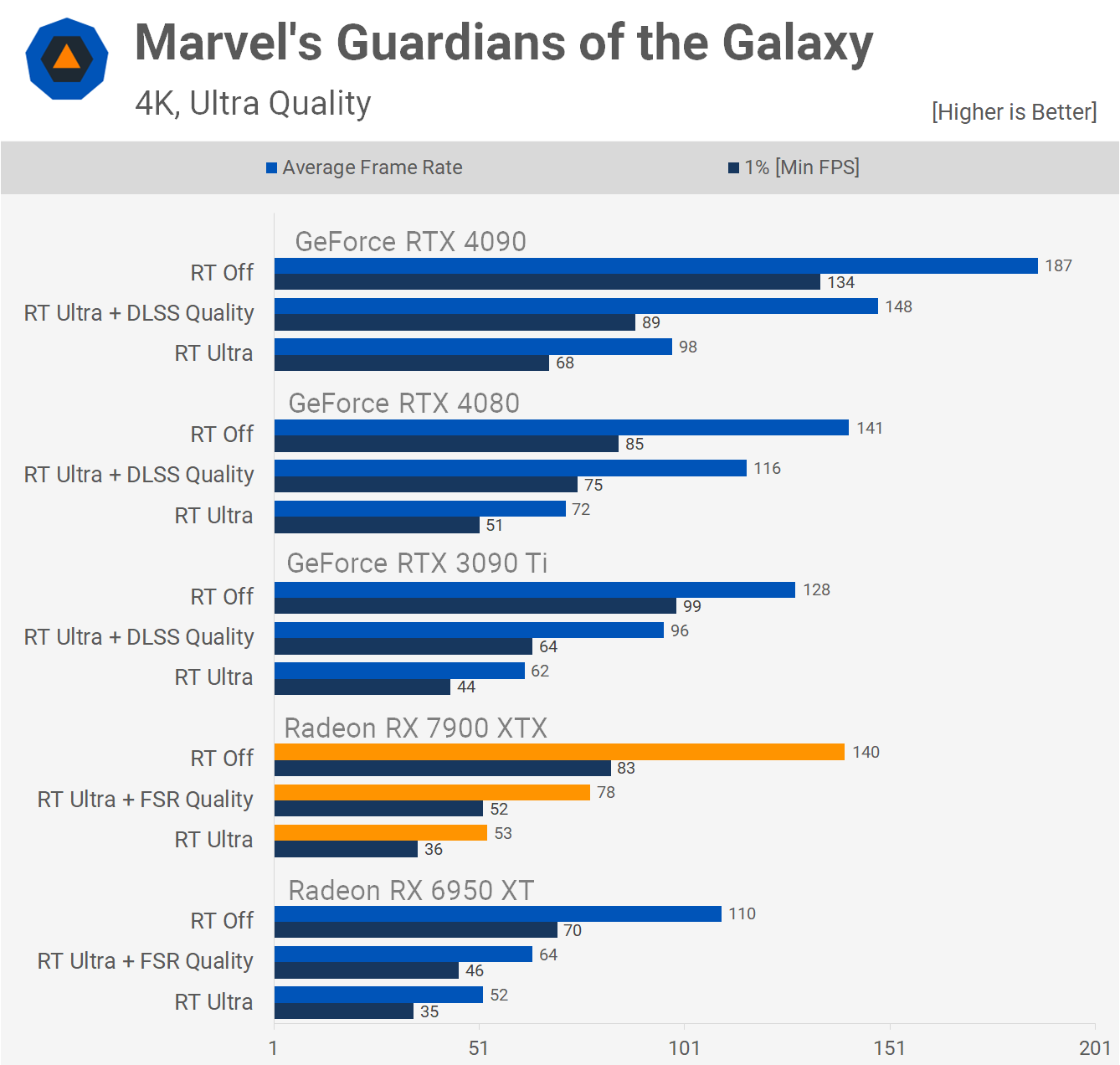

Moving on to Marvel's Guardians of the Galaxy, we see that the 7900 XTX and RTX 4080 are neck and neck with 140 fps, making them 27% faster than the 6950 XT. With ray tracing enabled but no upscaling, the 7900 XTX rendered 53 fps on average, basically the same performance delivered by the 6950 XT and 27% slower than the RTX 4080, so we have to assume this is some kind of driver issue.

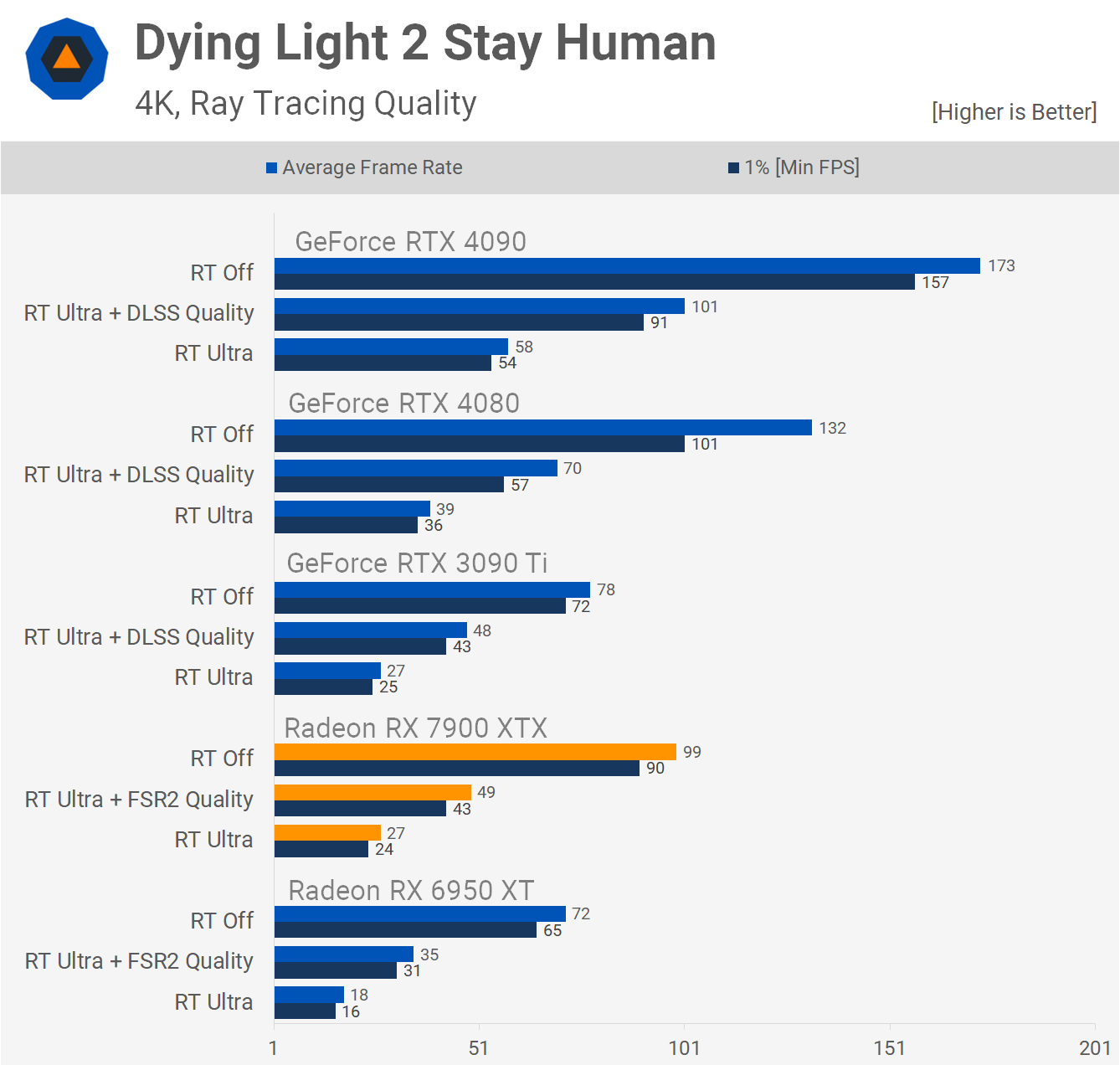

Next we have Dying Light 2. Unlike the results seen earlier, which used the 'High' preset in DX11 mode, the 'Ray Tracing' preset forces DX12, and this seems to break the 7900 XTX.

Using the High preset, the 7900 XTX was 5% faster than the RTX 4080, but here, using the Ray Tracing preset with its RT effects disabled, it was 25% slower than the RTX 4080.

With those RT effects enabled (the standard, untouched 'Ray Tracing' quality preset), the 7900 XTX managed just 27 fps on average. That matches the RTX 3090 Ti and is 50% faster than the Radeon 6950 XT, but it's also 31% slower than the RTX 4080. So there are good gains relative to previous-gen AMD hardware, but the picture isn't good when compared to Ada Lovelace.

The last game where we're showcasing ray tracing performance is Cyberpunk 2077 and here the Radeon 7900 XTX couldn't even match the RTX 3090 Ti, with just 20 fps on average making it 20% slower than the 3090 Ti and 35% slower than the RTX 4080. But it was 54% faster than the 6950 XT.

With upscaling enabled, or FSR 2.1 quality for the Radeon GPU, the 7900 XTX was able to deliver 39 fps making it 44% faster than the 6950 XT, but 15% slower than the 3090 Ti using DLSS and 33% slower than the RTX 4080.
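With upscaling enabled, "4K" output is rendered internally at a lower resolution and then upscaled, which is why the frame rate nearly doubles (20 to 39 fps here). A minimal sketch of the internal render resolution, assuming AMD's published FSR 2 per-axis scale factors for each quality mode. Results are rounded, so the SDK's own figures may differ by a pixel in some modes.

```python
# FSR 2 per-axis scale factors for each quality mode (as published by AMD)
FSR2_SCALE = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}


def render_resolution(out_w: int, out_h: int, mode: str):
    """Internal resolution rendered before upscaling to out_w x out_h."""
    scale = FSR2_SCALE[mode]
    return round(out_w / scale), round(out_h / scale)


def pixel_fraction(mode: str) -> float:
    """Share of output pixels actually rendered (scale applies to both axes)."""
    return 1 / FSR2_SCALE[mode] ** 2


if __name__ == "__main__":
    for mode in FSR2_SCALE:
        w, h = render_resolution(3840, 2160, mode)
        print(f"{mode}: {w}x{h} ({pixel_fraction(mode):.0%} of 4K pixels)")
```

In Quality mode a 4K frame is rendered at 2560x1440, only about 44% of the native pixel count. That's a large reduction in per-frame work, though not the only cost in a ray-traced frame.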

Power Consumption

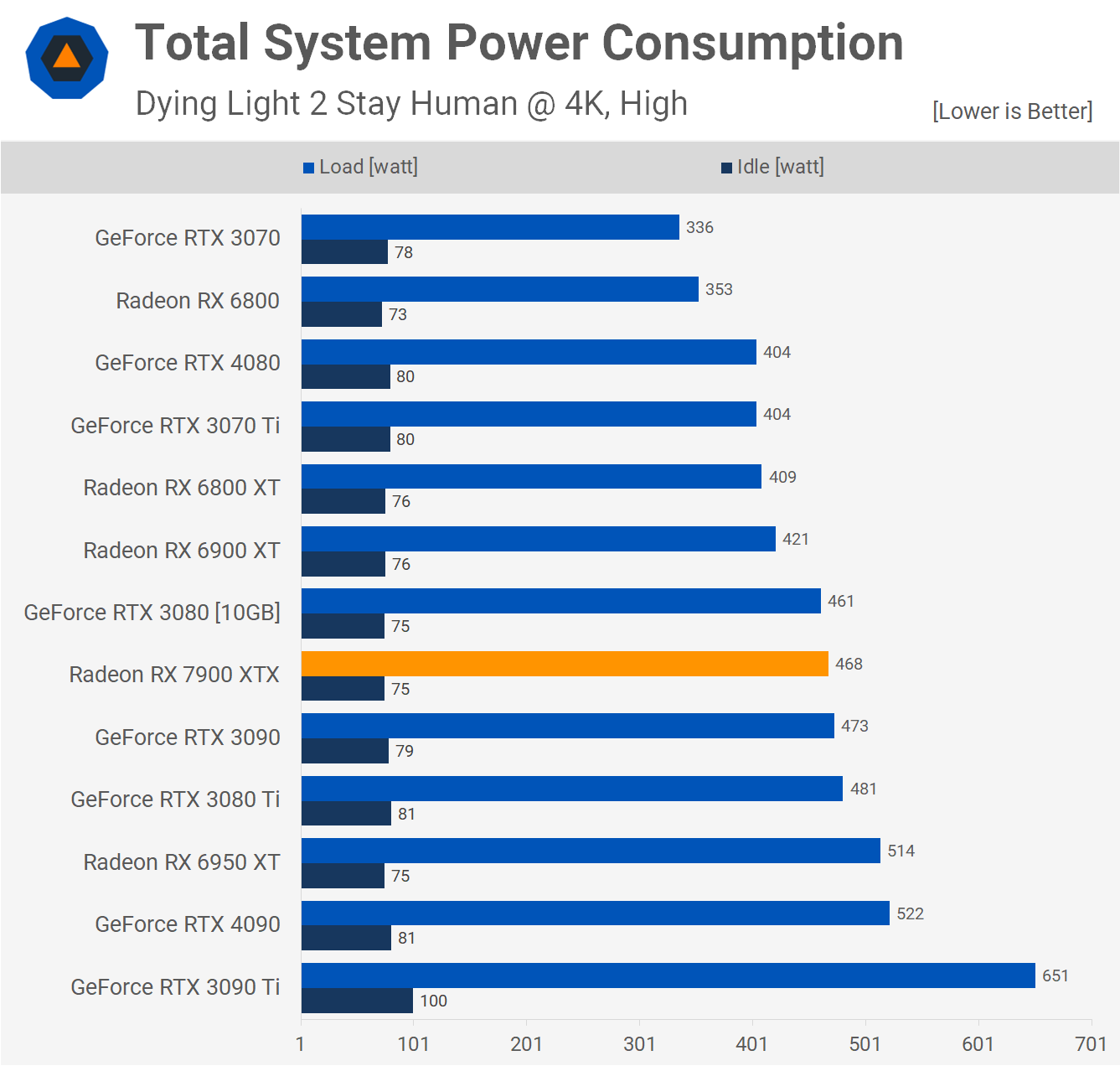

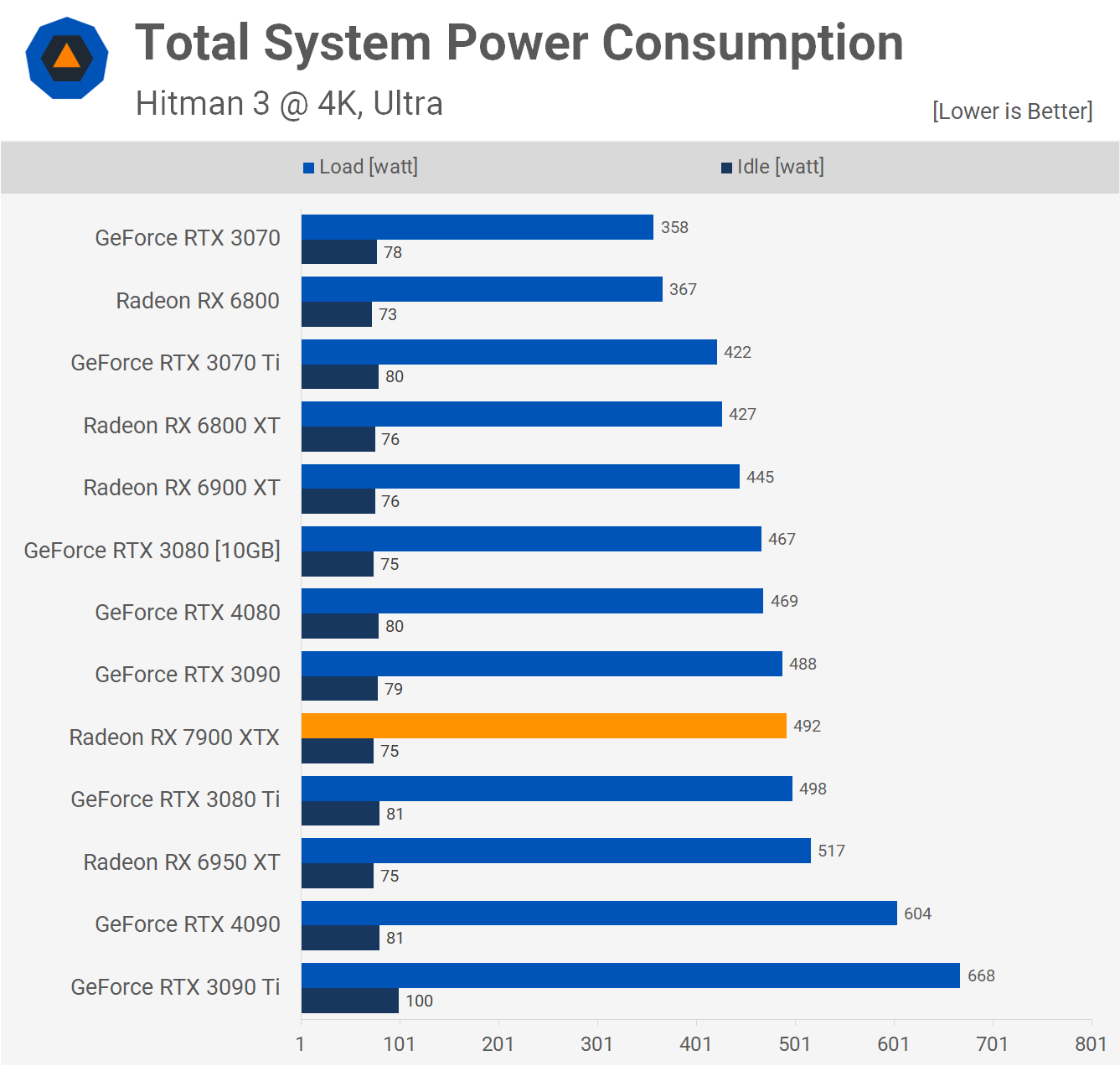

Looking at total system power consumption the 7900 XTX pushed total usage to 468 watts in Dying Light 2, so in terms of power usage it is comparable to the RTX 3080 and RTX 3090. System usage was 9% lower than that of the Radeon 6950 XT, but 16% greater than the RTX 4080, making the GeForce the more efficient GPU.

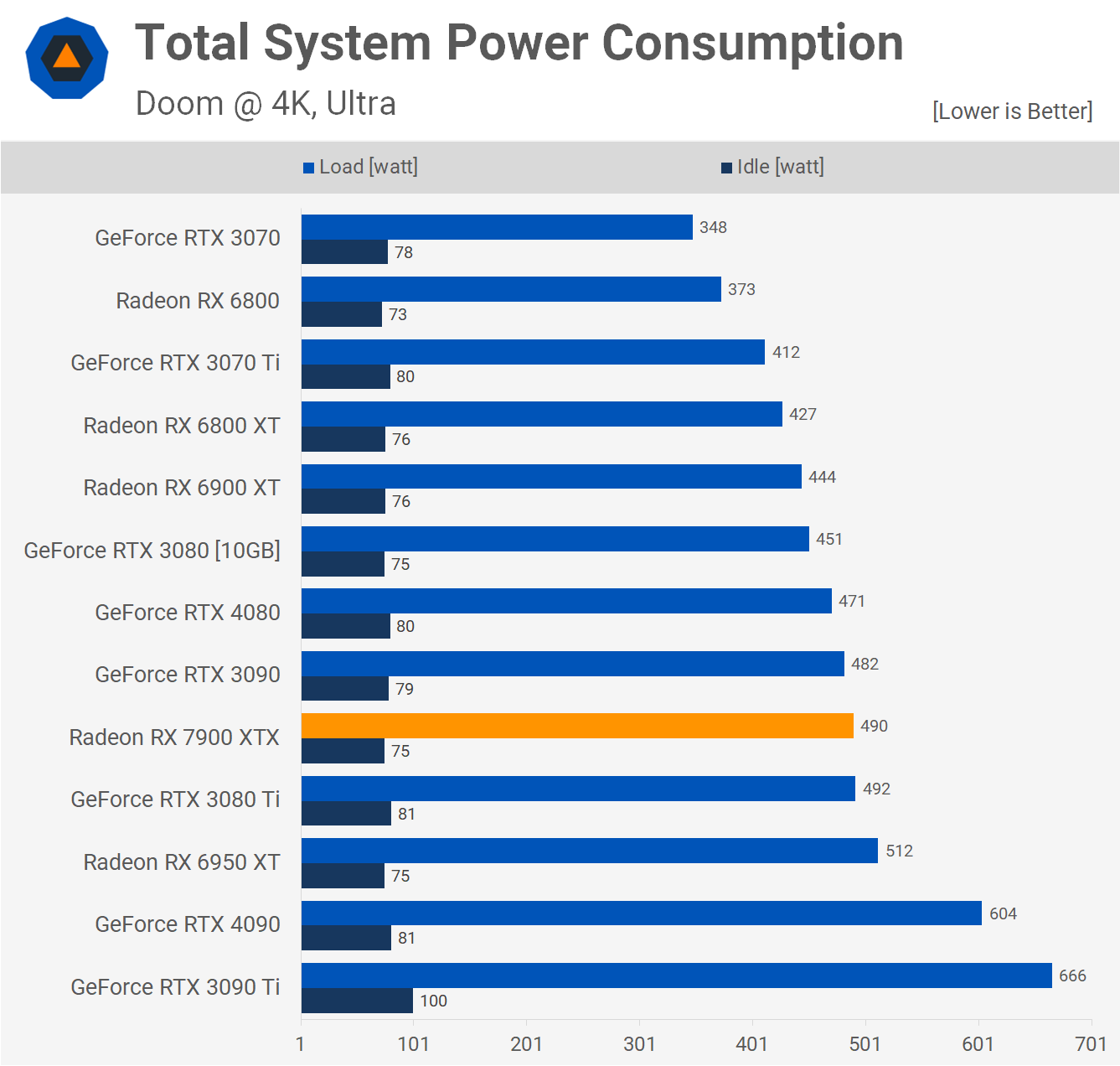

Power consumption between the 4080 and 7900 XTX was more comparable in Doom Eternal, here the Radeon GPU pushed system usage just 4% higher, though it was also just 4% lower than the 6950 XT.

Then we have Hitman 3 and again the 4080 and 7900 XTX were very similar, basically comparable with other previous generation high-end GPUs, excluding the 3090 Ti which was a power pig.

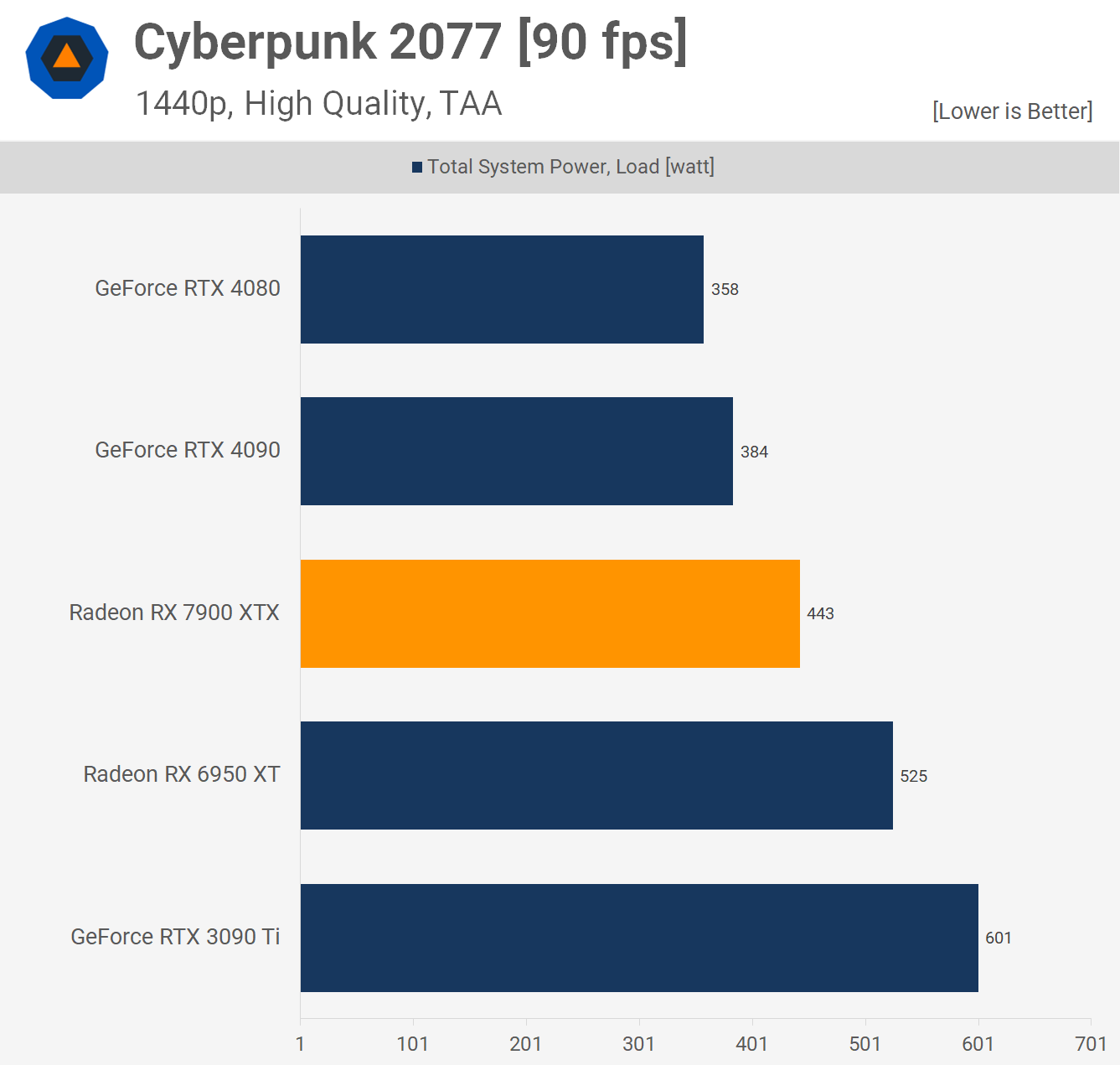

When locking the frame rate to 90 fps in Cyberpunk 2077 the 7900 XTX pushes total system power usage 24% higher than that of the RTX 4080, again confirming that RDNA 3 isn't nearly as power efficient as Nvidia's Ada Lovelace architecture.
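Because the frame rate is locked, both systems do the same rendering work each second, so the gap in wall power translates directly into energy per frame. The review doesn't list the absolute wattages for this test, so the sketch below uses hypothetical values chosen only to mirror a 24% gap.

```python
def joules_per_frame(system_watts: float, fps: float) -> float:
    """Energy drawn per rendered frame: a watt is one joule per second."""
    return system_watts / fps


def extra_energy_pct(watts_a: float, watts_b: float, fps: float) -> float:
    """At the same locked fps, how much more energy per frame system A uses than B."""
    return (joules_per_frame(watts_a, fps) / joules_per_frame(watts_b, fps) - 1) * 100


# HYPOTHETICAL system power figures, not measurements from this review
LOCKED_FPS = 90
radeon_system_w = 372.0
geforce_system_w = 300.0

if __name__ == "__main__":
    print(f"Radeon system:  {joules_per_frame(radeon_system_w, LOCKED_FPS):.2f} J/frame")
    print(f"GeForce system: {joules_per_frame(geforce_system_w, LOCKED_FPS):.2f} J/frame")
    print(f"Extra energy per frame: {extra_energy_pct(radeon_system_w, geforce_system_w, LOCKED_FPS):.0f}%")
```

Note that these are whole-system numbers, so the gap between the graphics cards alone is larger in absolute terms than the percentage suggests.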

Cost per Frame

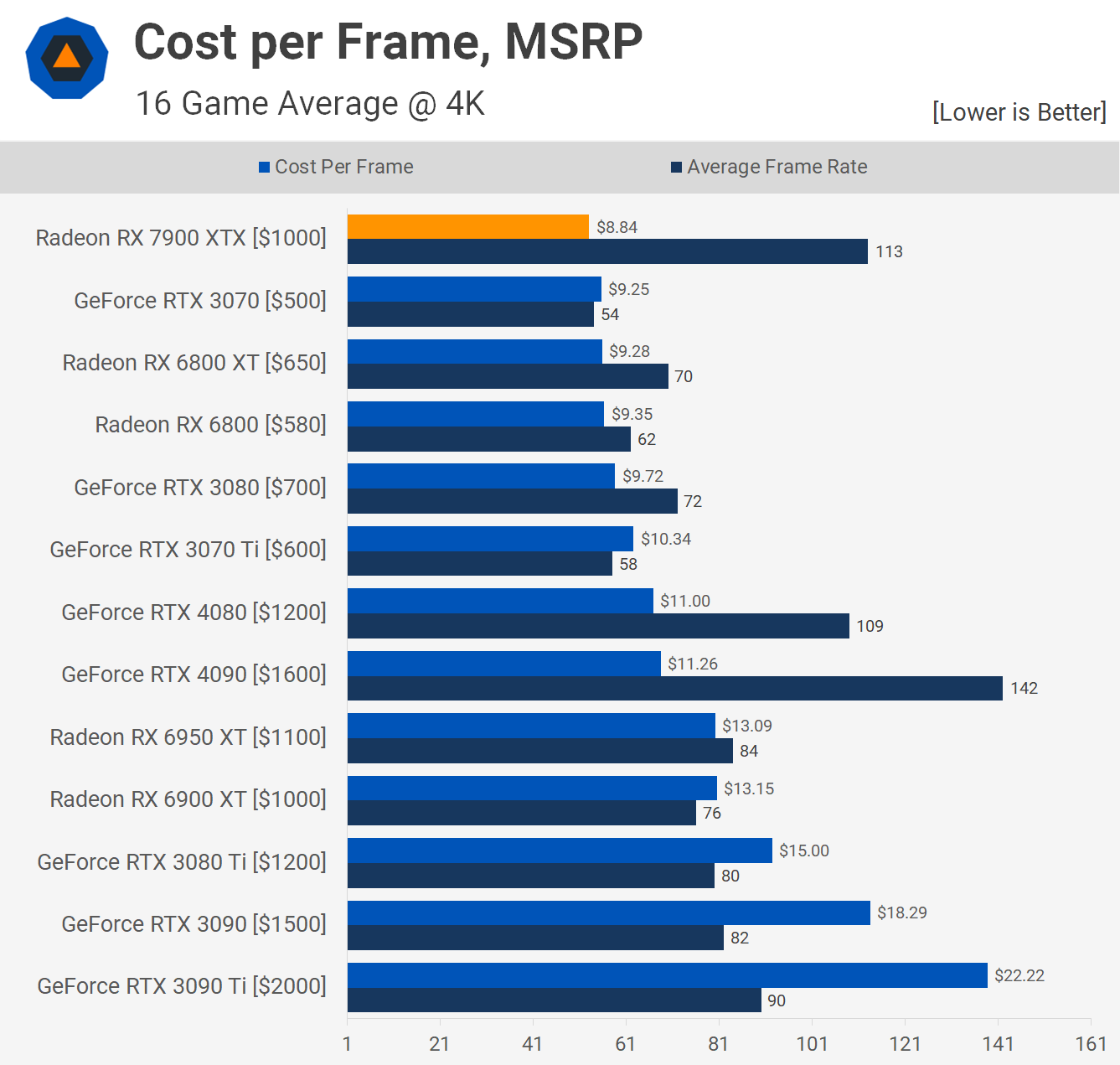

Now for the all-important cost per frame analysis. We'll start with MSRPs, using the 4K data. If all current and previous generation GPUs were to be sold at MSRP, the 7900 XTX would actually be the best value deal on the market, undercutting the 6800 XT by a 5% margin and the 6950 XT by a massive 32%.

More importantly, it's also a 20% improvement in terms of cost per frame when compared to the RTX 4080.

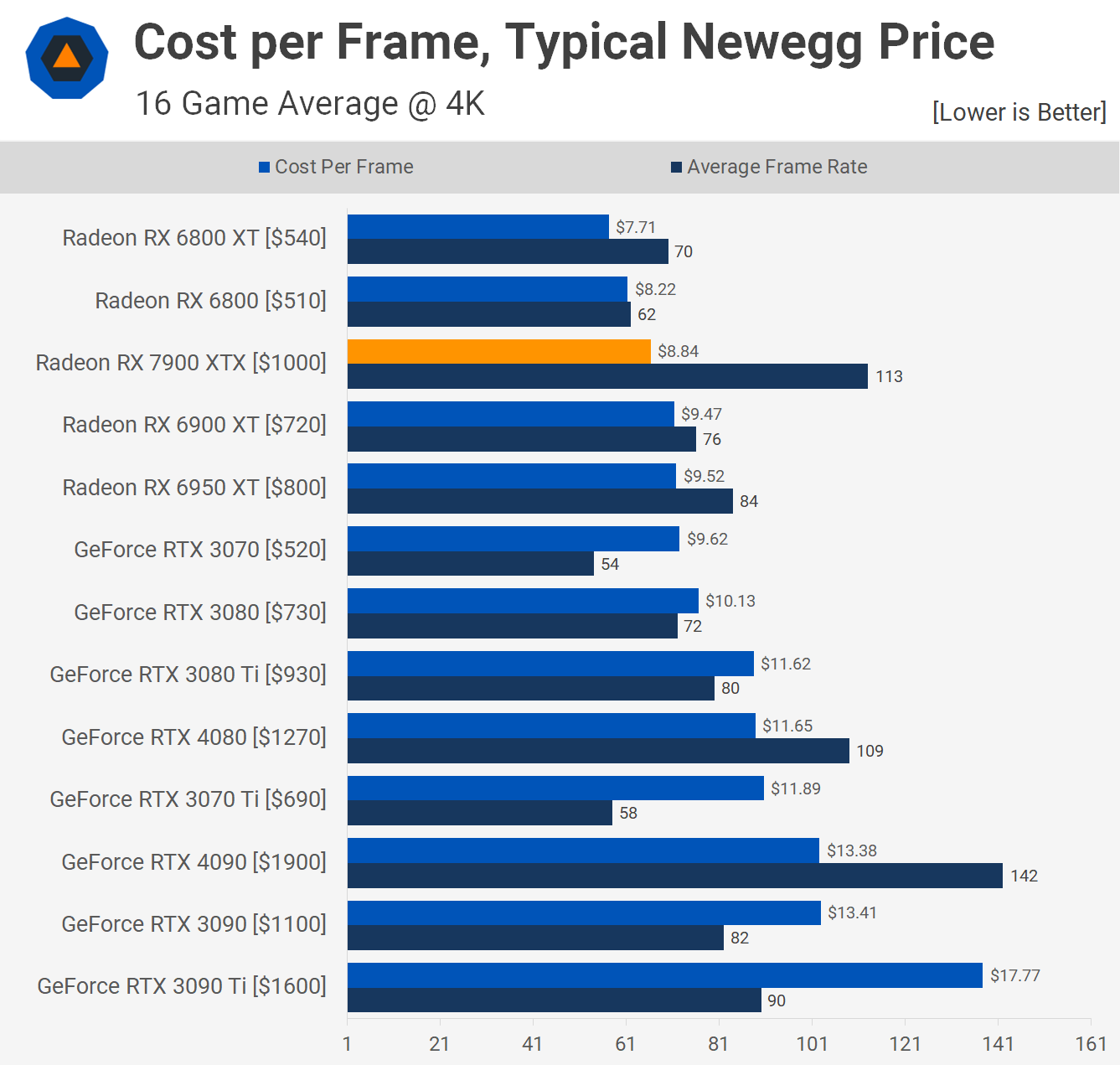

Now here's a look at GPU pricing, using current retail data gathered from Newegg, which will be about a week old by the time you read this review. The RTX 4080 is currently selling for at least $1,270, or $70 above MSRP, and for this comparison we have to assume the 7900 XTX will be available for the advertised $1,000, though that's by no means guaranteed.

But assuming it is, that will be a 24% discount in terms of cost per frame when compared to the RTX 4080, and as we just saw with both at the MSRP the Radeon GPU will be at least 20% better value.

In today's market, it's also slightly better value than the 6900 XT and 6950 XT, so there's that, while the only products offering a better price-to-performance ratio are the Radeon RX 6800 and 6800 XT, both of which are significantly slower. So when it comes to rasterization performance (meaning no ray tracing), the Radeon RX 7900 XTX is quite good value, at least relative to the terrible-value RTX 4080, hmm…
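Cost per frame is simply price divided by performance. The sketch below normalizes performance to the RTX 4080 (1.00) and gives the 7900 XTX 1.04, based on its 4% lead in the 4K 16-game average. It assumes a $1,200 RTX 4080 MSRP, consistent with $1,270 being $70 above MSRP. Using a rounded relative-performance figure instead of raw frame rates is a simplification.

```python
# Relative 4K performance from this review's 16-game average
XTX_PERF = 1.04      # 7900 XTX, ~4% faster than the RTX 4080
RTX4080_PERF = 1.00  # baseline


def cost_per_frame(price_usd: float, relative_perf: float) -> float:
    """Dollars per unit of (normalized) performance; lower is better."""
    return price_usd / relative_perf


def savings_pct(price_xtx: float, price_4080: float) -> float:
    """How much cheaper each frame is on the 7900 XTX than on the RTX 4080."""
    xtx = cost_per_frame(price_xtx, XTX_PERF)
    nv = cost_per_frame(price_4080, RTX4080_PERF)
    return (1 - xtx / nv) * 100


if __name__ == "__main__":
    print(f"At MSRP ($1,000 vs $1,200):   {savings_pct(1000, 1200):.0f}% cheaper per frame")
    print(f"At retail ($1,000 vs $1,270): {savings_pct(1000, 1270):.0f}% cheaper per frame")
```

This simplified version lands on the same 20% and 24% figures quoted above.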

Cooling

Before wrapping up testing, here's a quick look at the thermal and clock behavior of AMD's reference graphics card. Installed inside an ATX case in a 21°C room, the Radeon RX 7900 XTX peaked at a hot spot temperature of 80°C after an hour of gameplay, with a peak average die temperature of 67°C. This was achieved with a fan speed of 1900 RPM.

The typical operating clock frequency was 2275 MHz and the memory operated at 19.9 Gbps, just shy of the advertised 20 Gbps. Overall, the reference card ran cool and relatively quiet, so we'd expect all partner models to work very well, and that's something we'll look at in the near future.

All That Hype – What We Learned

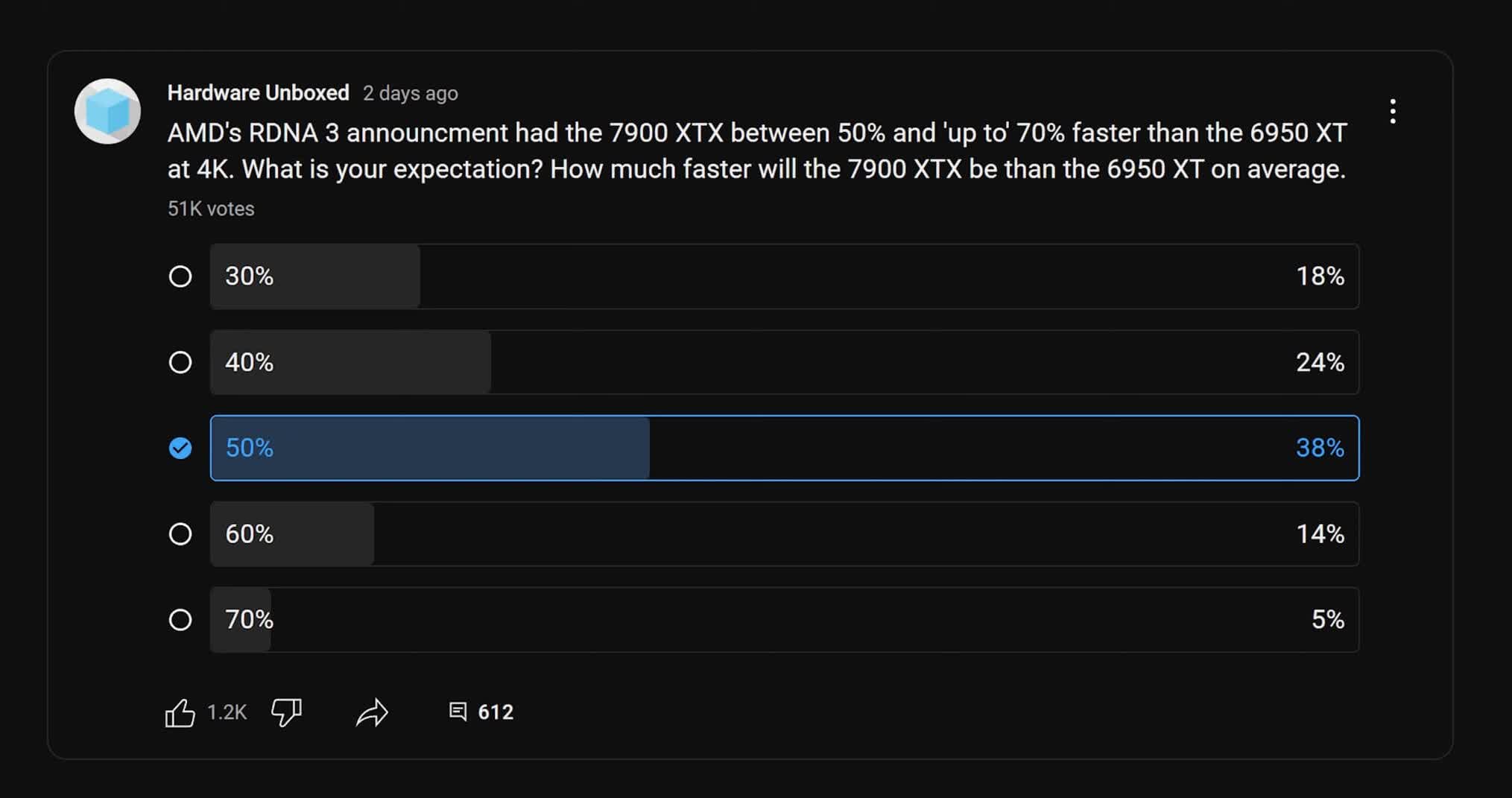

As for how hyped the Radeon RX 7900 XTX has been, AMD themselves are largely to blame. But just to make sure we weren't too far off base, we polled HUB's audience, asking what their expectations were based on AMD's RDNA 3 announcement, where AMD compared the 7900 XTX to the 6950 XT and showed gains of between 50 and 70%.

The majority of you expected the 7900 XTX to be at least 50% faster than the 6950 XT on average, based on what AMD had said. With 60% of people voting for 50% or more, it's clear AMD oversold their new flagship and set expectations too high. At 50% faster than the 6950 XT, the Radeon 7900 XTX would be at least 10-15% faster than the RTX 4080, and not a great deal slower than the RTX 4090.

In reality, the Radeon RX 7900 XTX is on average 35% faster than the 6950 XT, placing it on par with the RTX 4080. AMD's own review guide showed the 7900 XTX to be on average 43% faster than the 6950 XT, slightly more favorable than our results, though the games tested were different. Point is, we think people were expecting more, and that might lead to unnecessary negative feedback from gamers. If that's the case, AMD has no one to blame but themselves.
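The projection behind the poll can be checked by rescaling the measured 4K averages. If the XTX's lead over the 6950 XT grew from the measured 35% to 50% while every rival stayed put, its standing against each rival would scale by 1.50 / 1.35. This sketch assumes the extra uplift would apply uniformly across all games, which is a simplification.

```python
# Measured 4K averages from this review
MEASURED_UPLIFT = 1.35  # 7900 XTX vs 6950 XT
XTX_VS_4080 = 1.04      # ~4% faster than the RTX 4080
XTX_VS_4090 = 0.80      # ~20% slower than the RTX 4090


def projected_ratio(current_ratio: float, hypothetical_uplift: float) -> float:
    """Rescale the XTX's ratio to a rival as if its uplift over the 6950 XT changed."""
    return current_ratio * hypothetical_uplift / MEASURED_UPLIFT


if __name__ == "__main__":
    for rival, ratio in (("RTX 4080", XTX_VS_4080), ("RTX 4090", XTX_VS_4090)):
        projected = projected_ratio(ratio, 1.50)
        print(f"vs {rival}: {(projected - 1) * 100:+.1f}%")
```

That works out to roughly 16% ahead of the RTX 4080 and about 11% behind the RTX 4090, in line with the "at least 10-15%" estimate.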

Putting hype aside then, is the 7900 XTX any good? Minus a few teething issues, which we'll discuss in a moment, we think this is a decent product, not bad compared to the competition, but not great either.

Although the Radeon RX 7900 XTX looks quite good in terms of value for those interested primarily in rasterization performance, at $1,000 it's not an affordable GPU. Sure, it looks pretty good next to the RTX 4080, delivering the same level of performance for $200 less, but the GeForce RTX 4080 sucks at its current asking price. That's not just us saying it: gamers at large agree, as sales of the RTX 4080 have been extremely weak across the globe.

That's a problem because in almost every measurable metric the RTX 4080 is a superior product to the 7900 XTX. The RTX 4080 offers comparable rasterization performance, significantly better ray tracing performance, better upscaling as DLSS is superior to FSR, it also uses less power, and the media engine is better supported. The only thing going for the Radeon 7900 XTX is the fact that it's 17% cheaper, but when spending $1,000 on a graphics card, do you really care about $200? Wouldn't you just spend the extra money to get the superior product? If we were talking about lower price points, say $600 to $700, the percentage difference is much the same, but we feel someone looking to spend $600 might value $100 a lot more than someone spending $1,000 values $200.

We feel AMD needs to be coming in 20-25% cheaper, so $900 would be a more appropriate price given what we've seen. That's the kind of discount where we can say for most of you the benefits of the GeForce GPU simply aren't worth the price premium.

After all, if you mostly play multiplayer games, then ray tracing isn't too appealing and you're after raw FPS performance, and the 7900 XTX does deliver. But at 17% less than the RTX 4080, it's still not the obvious choice.

Our time with the Radeon 7900 XTX wasn't flawless either. We ran into a few game crashes, and we spoke with other reviewers who suffered from the same kind of issues. This could simply be an issue with prerelease drivers that AMD will sort out in time for public release, or it could be a taste of something gamers will experience for weeks or months to come. We also ran into a frustrating black screen issue that required us to disconnect and reconnect the display. The game didn't crash, but the display would flicker and go blank. This was rare and only happened twice in our testing, but it's worth mentioning given the other stability issues with the review driver.

Overall, the Radeon RX 7900 XTX is a pretty good GPU, at least relative to its GeForce competitor, but whether or not it's worth $1,000 will depend on how much stock you place in ray tracing performance. For those with previous generation flagships, the 7900 XTX simply won't be worth the upgrade and while it's a big leap forward from something like the Radeon 6800, it's also roughly twice the price, so it's not moving the needle forward in terms of value. The Radeon RX 6800 and 7900 XTX really could be members of the same product family.

Frankly, neither the 7900 XTX nor the RTX 4080 would have us racing out to buy one, so we'll wait to see what the lower-tier models have to offer, though we're certainly not holding our breath for anything amazing.


Further Testing

Since we published this day-one review of the Radeon RX 7900 XTX, we have run additional benchmarks and comparisons you may be interested in: