It feels like we've been talking about these upcoming Intel GPUs for years now, and maybe we have, but today we're finally able to show you Intel's long-awaited Arc graphics cards.

About a year ago, we covered Intel's Architecture Day 2021 where they detailed Arc Alchemist GPUs along with XeSS for AI-based upscaling. This was exciting stuff at the time due to an industry-wide shortage of graphics cards, so a new player in the GPU market, let alone from Intel, would have been very welcome. Sadly though, Intel missed that incredible window of opportunity by about a year.

Today marks the introduction of the Arc A750 and A770 graphics cards.

There are two versions of the Intel A770: the standard 8GB model and a more premium 16GB model. The 16GB A770 Limited Edition that we have for testing comes in at $350, while the standard 8GB model costs $330. Then there's the slightly cut-down A750, which will cost $290.

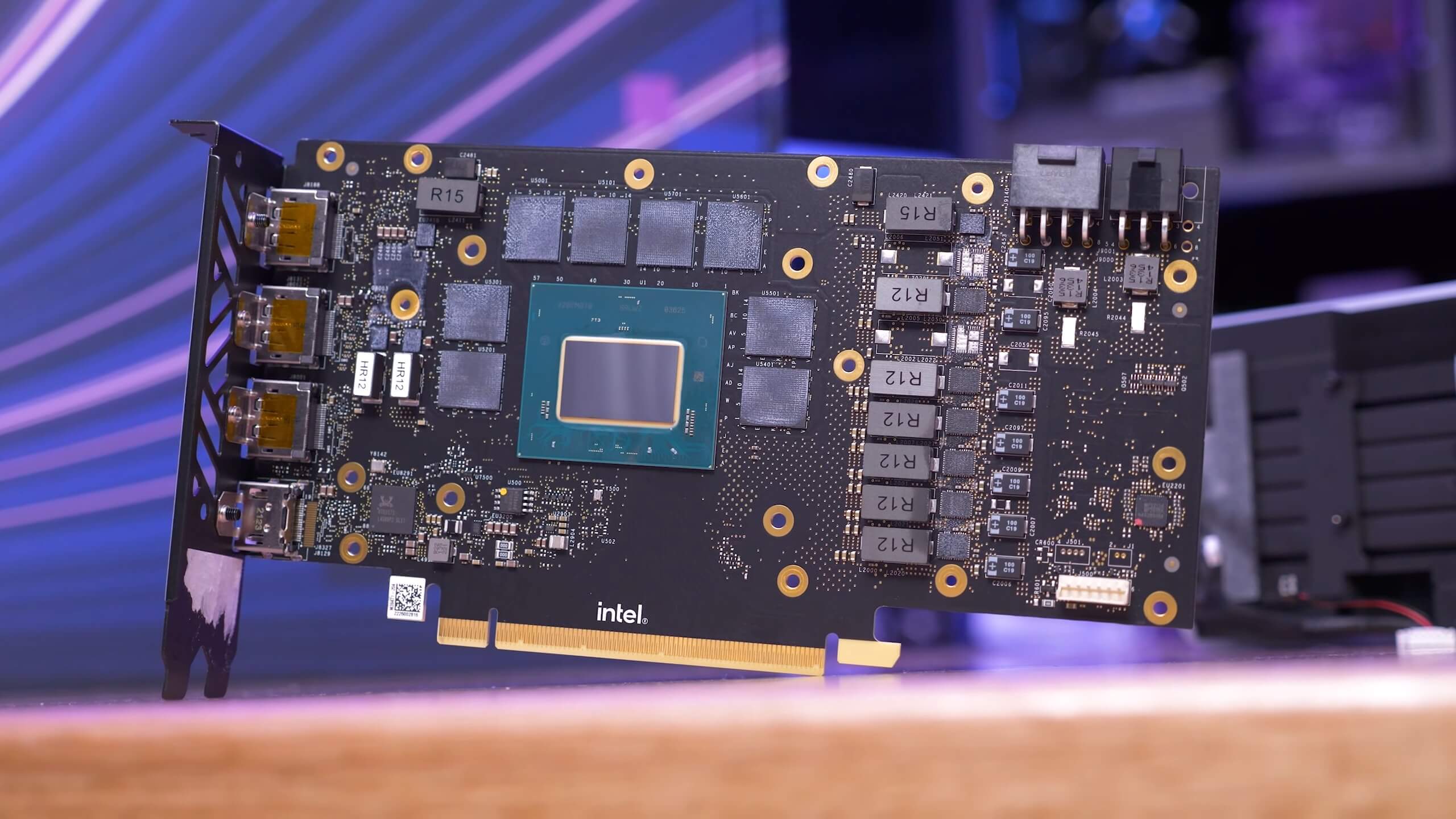

The A770 features 32 Xe cores, 32 ray tracing units, an operating frequency of 2100 MHz and a 225W power rating. The 16GB version enjoys a memory bandwidth of up to 560 GB/s, while the 8GB version not only has half as much VRAM, but its bandwidth has also been cut by 9% to 512 GB/s, as it uses 16 Gbps GDDR6 memory rather than 17.5 Gbps GDDR6.

Then we have the A750, which has 13% fewer cores and ray tracing units at 28, 50 MHz shaved off the operating frequency, and the same 8GB of VRAM as the 8GB A770 with the same 512 GB/s memory bandwidth and the same 225W total board power rating. We should also note that all models feature a 256-bit wide memory bus, PCIe 4.0 x16 support, and require an 8-pin plus a 6-pin power input.
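For anyone who wants to sanity-check the bandwidth figures, here's a minimal sketch of the usual peak-bandwidth arithmetic: bus width multiplied by the per-pin data rate, divided by 8 bits per byte. The function and variable names are our own for illustration and don't come from any Intel tool.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


BUS_WIDTH_BITS = 256  # all Arc models covered here use a 256-bit bus

bw_a770_16gb = memory_bandwidth_gbs(BUS_WIDTH_BITS, 17.5)  # 17.5 Gbps GDDR6
bw_a770_8gb = memory_bandwidth_gbs(BUS_WIDTH_BITS, 16.0)   # 16 Gbps GDDR6 (also the A750)

# Relative bandwidth reduction of the 8GB configuration versus the 16GB one
reduction_pct = (1 - bw_a770_8gb / bw_a770_16gb) * 100

print(f"A770 16GB: {bw_a770_16gb:.0f} GB/s")
print(f"A770 8GB / A750: {bw_a770_8gb:.0f} GB/s")
print(f"Reduction: {reduction_pct:.1f}%")
```

Plugging in the quoted specs gives 560 GB/s and 512 GB/s, a reduction of just under 9%.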

Those are the key specs and for this review we'll be using Intel reference cards for both the A770 and A750.

Arc Alchemist GPUs also offer a number of hardware accelerated media features covering media transcode, hyper encode, HW-accelerated AV1 encode and AI acceleration delivered by Intel XMX. However, we don't normally cover productivity performance or encoding/decoding benchmarks in our gaming-focused reviews and this review is no exception. Besides the fact that we don't normally cover this stuff, we're more pressed for time than usual, coming off the back of Zen 4 testing, and of course, next week we have the GeForce RTX 4090 launch.

Another key aspect of Intel GPUs is XeSS (the company's version of DLSS / FSR). Just as was the case with DLSS, upscaling technologies like this require a great deal of scrutiny, so we plan to cover XeSS in detail in a dedicated feature soon.

Now, for testing all GPUs we've used the official clock specifications, so no factory overclocking. Our test system was powered by the Ryzen 7 5800X3D with 32GB of dual-rank, dual-channel DDR4-3200 CL14 memory on the Asus ROG Crosshair VIII Extreme motherboard using the latest BIOS supporting the AGESA 1207 microcode. In total, we've tested 12 games at 1080p and 1440p, so let's get into the data...

Benchmarks

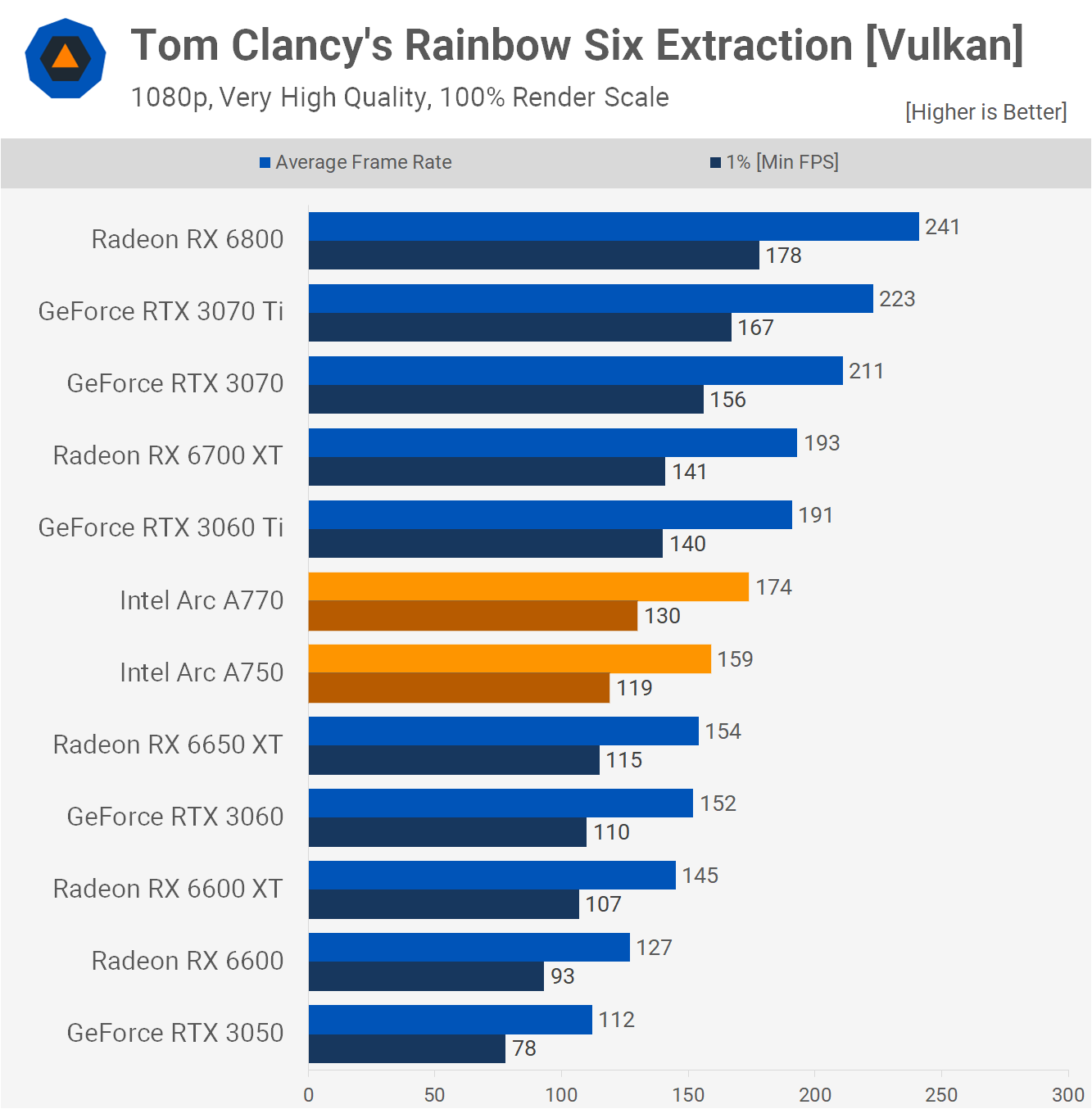

First up we have Rainbow Six Extraction, and this was the only game to have an issue in our testing. The game didn't crash, but the menus had a strange bug that Intel says it's aware of and has fixed in the next driver update, so it should be a non-issue moving forward. The game played well and performance was excellent.

At 1080p, the A750 was faster than both the Radeon 6650 XT and GeForce RTX 3060, while the A770 was 14% faster than the 6650 XT. That's an impressive result and a good win for Intel.

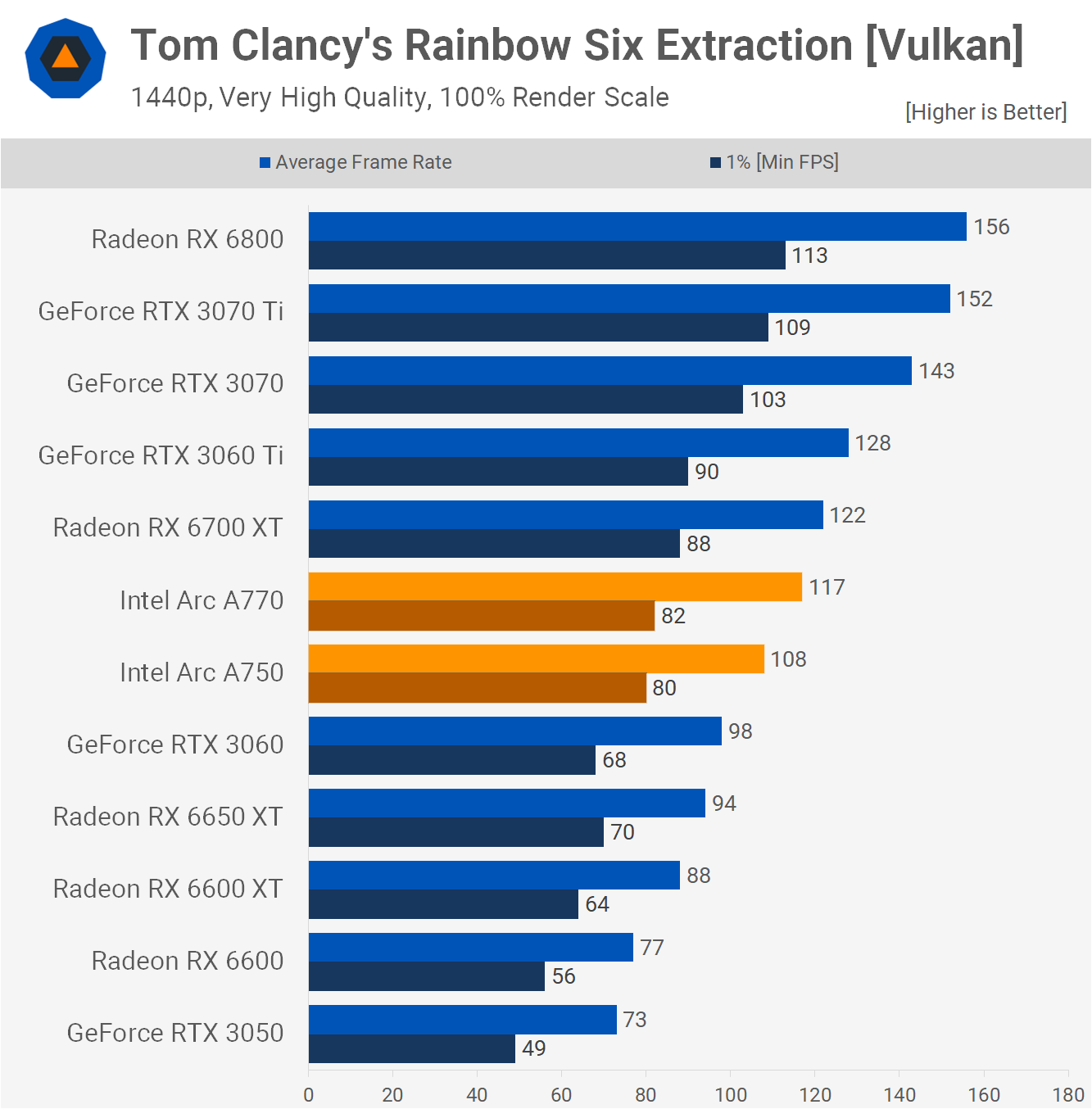

Quite incredibly, that margin blows out to 24% at 1440p as the A770 managed 117 fps and the A750 108 fps. In fact, the A770 wasn't that much slower than the Radeon 6700 XT, very impressive.

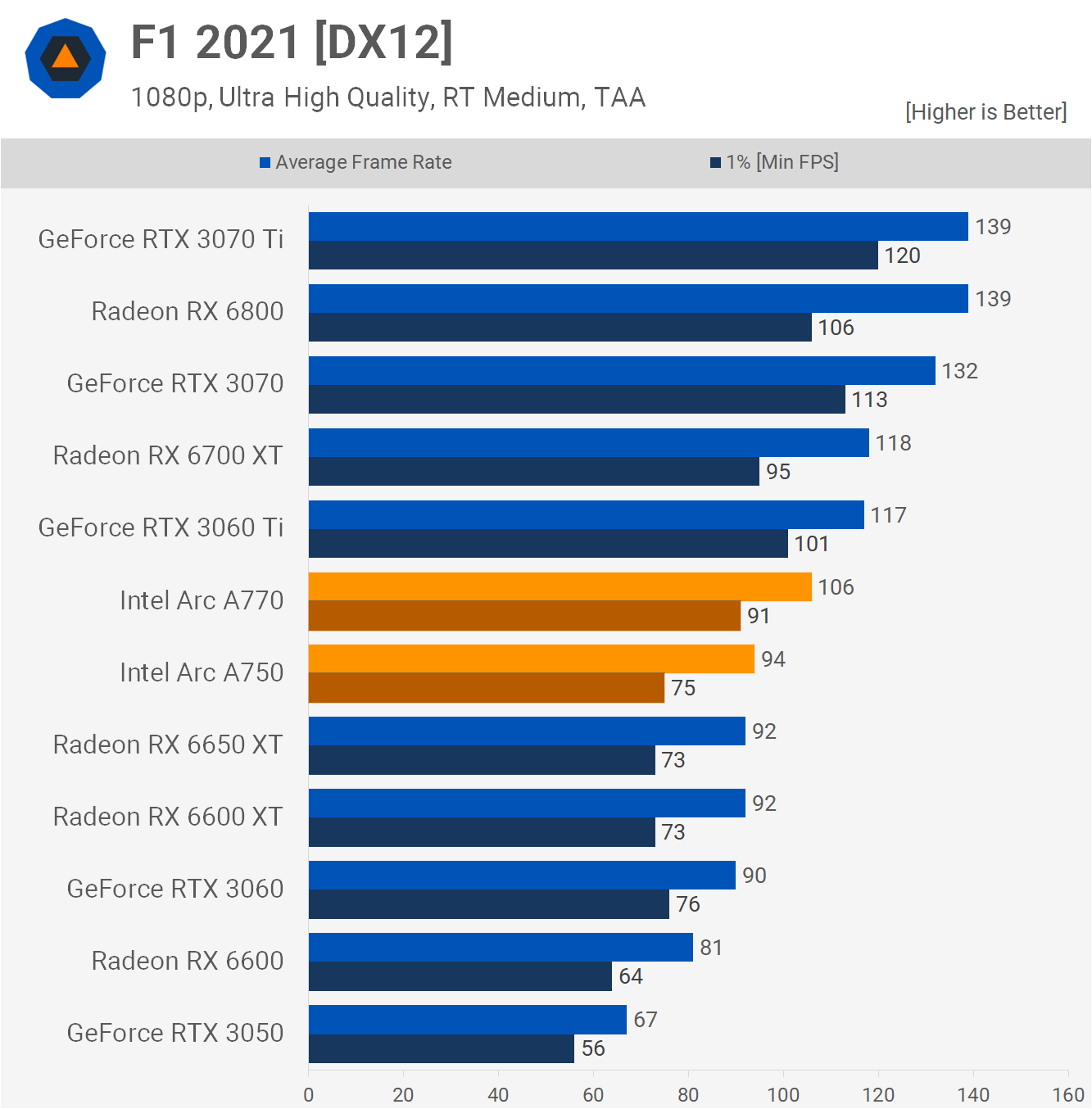

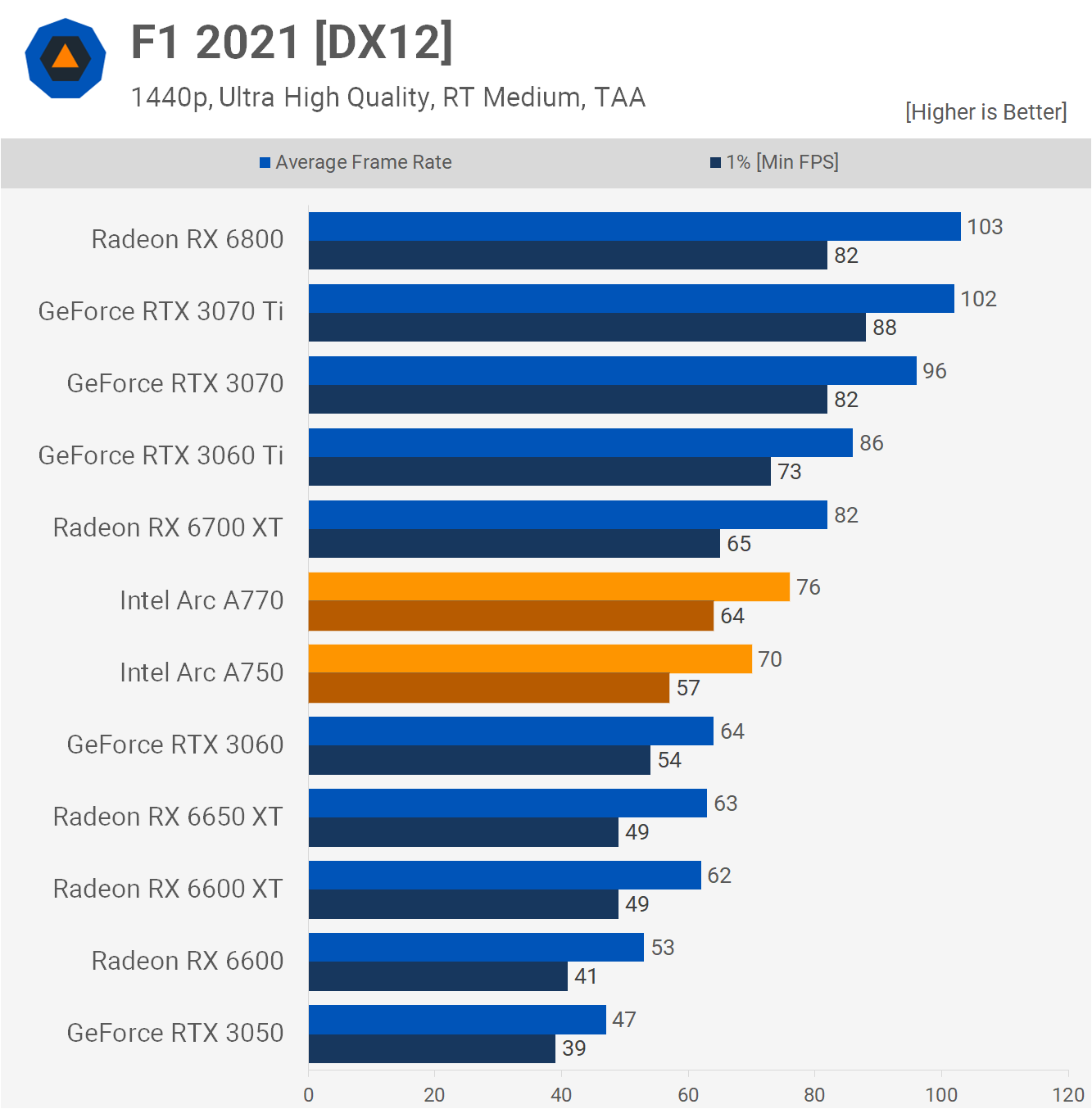

The F1 2021 results were also good. The Arc A770 was 15% faster than the 6650 XT at 1080p and just 10% slower than the 6700 XT. It also blew the RTX 3060 out of the water to come in just 9% slower than the 3060 Ti. The Arc A750 also did well, just edging out the 6650 XT and RTX 3060.

Jumping up to 1440p saw the A770 lead the 6650 XT by a massive 21% margin, pumping out 76 fps to just 63 fps for the Radeon GPU, and that's with ray tracing enabled. The Arc A750 was also strong here, beating the RTX 3060 by a 9% margin. Another very solid result here for Intel.
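As a quick aside on how margins like these are worked out, here's a minimal sketch using the two 1440p frame rates quoted above (76 fps and 63 fps). The helper names are hypothetical. It also shows why "X% faster" and "Y% slower" aren't interchangeable: the percentage depends on which card is used as the baseline.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster card A is than card B, as a percentage of B's frame rate."""
    return (fps_a / fps_b - 1) * 100


def percent_slower(fps_a: float, fps_b: float) -> float:
    """How much slower card A is than card B, as a percentage of B's frame rate."""
    return (1 - fps_a / fps_b) * 100


A770_FPS = 76       # F1 2021, 1440p
RX_6650_XT_FPS = 63

lead = percent_faster(A770_FPS, RX_6650_XT_FPS)
deficit = percent_slower(RX_6650_XT_FPS, A770_FPS)

print(f"A770 is {lead:.0f}% faster than the 6650 XT")
print(f"6650 XT is {deficit:.0f}% slower than the A770")
```

The same pair of results reads as a 21% lead for the A770 or a 17% deficit for the 6650 XT, so it's worth checking the baseline whenever two reviews quote different margins.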

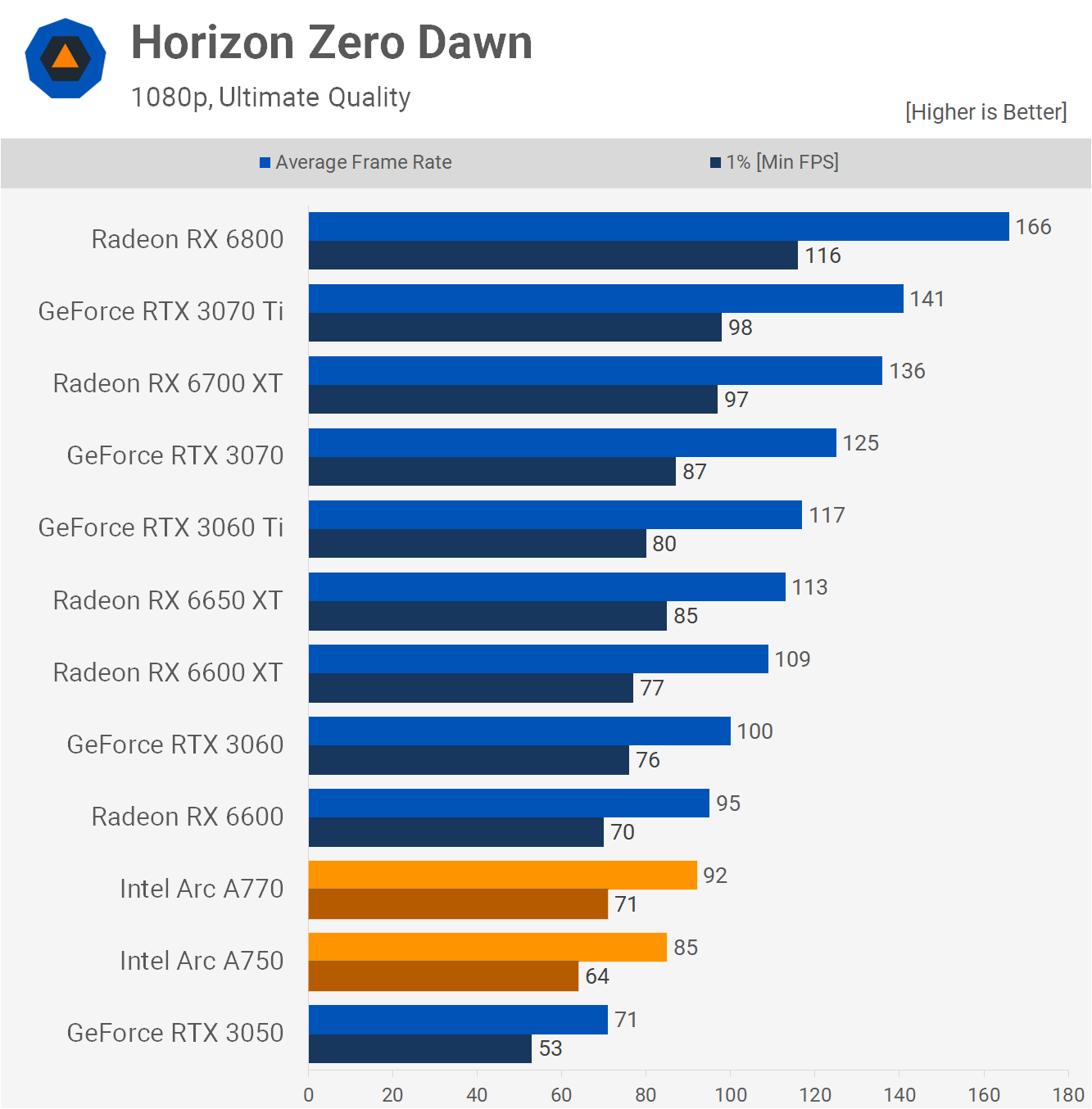

Unfortunately, things go a bit skew-whiff for the Arc GPUs in Horizon Zero Dawn. At 1080p, the A770 came in behind even the Radeon RX 6600, making it 19% slower than the 6650 XT and 8% slower than the RTX 3060. Given what we'd seen so far, that's a disappointing result.

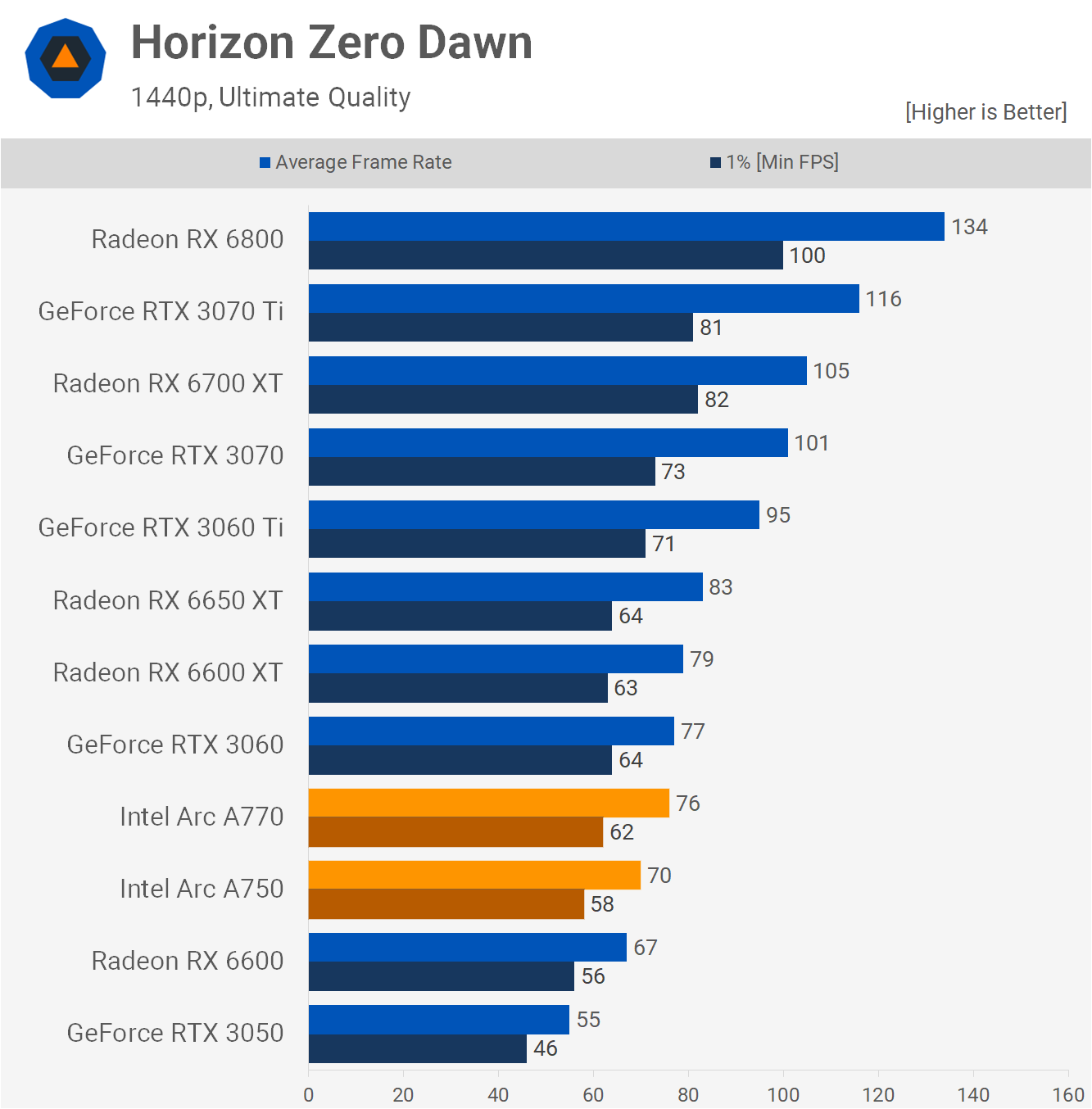

Increasing the resolution to 1440p does help and now the A770 can be seen matching the GeForce RTX 3060, but it's still 8% slower than the 6650 XT. Meanwhile, the Arc A750 is only delivering 6600-like performance. This is not a good title for Arc.

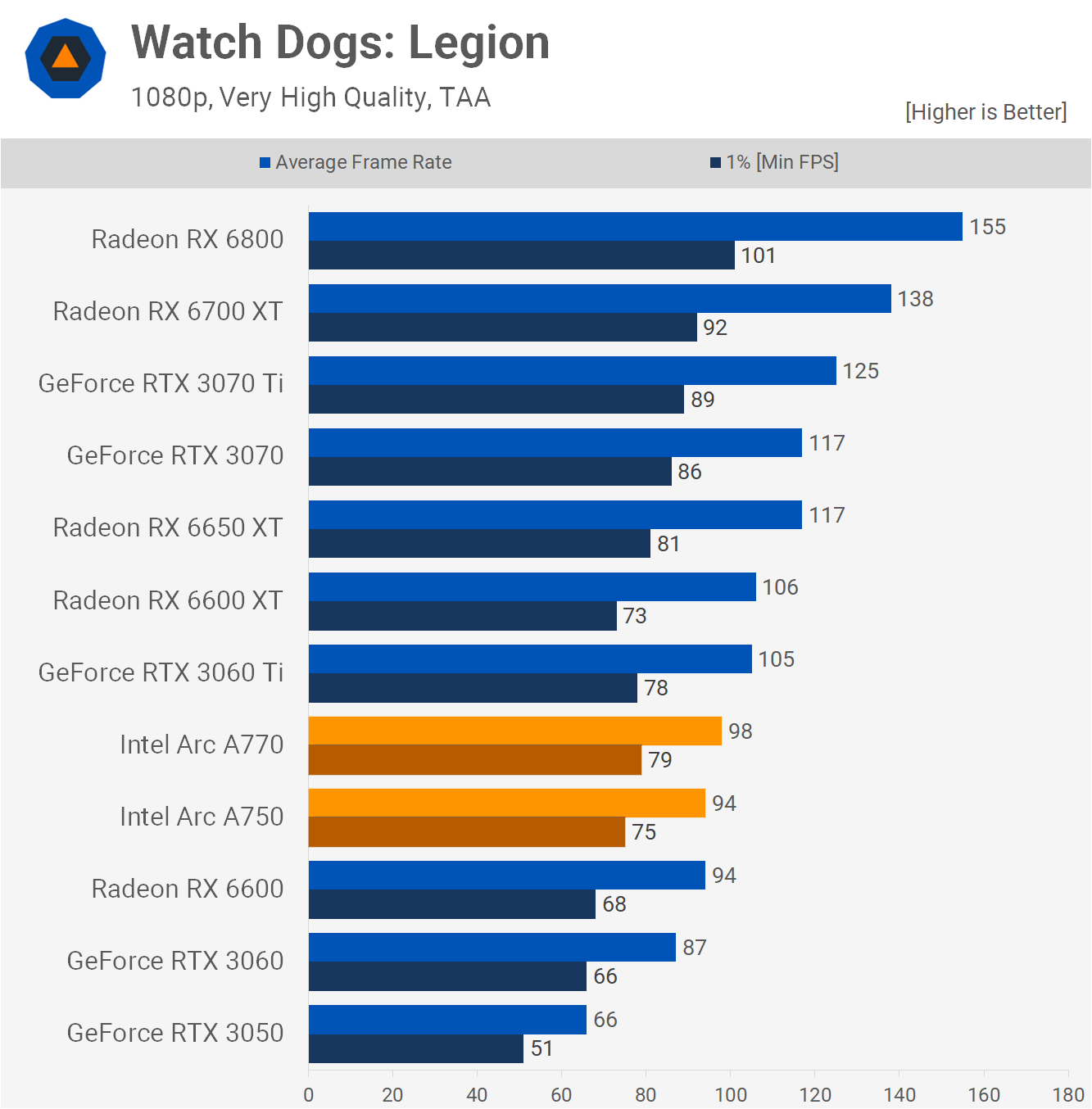

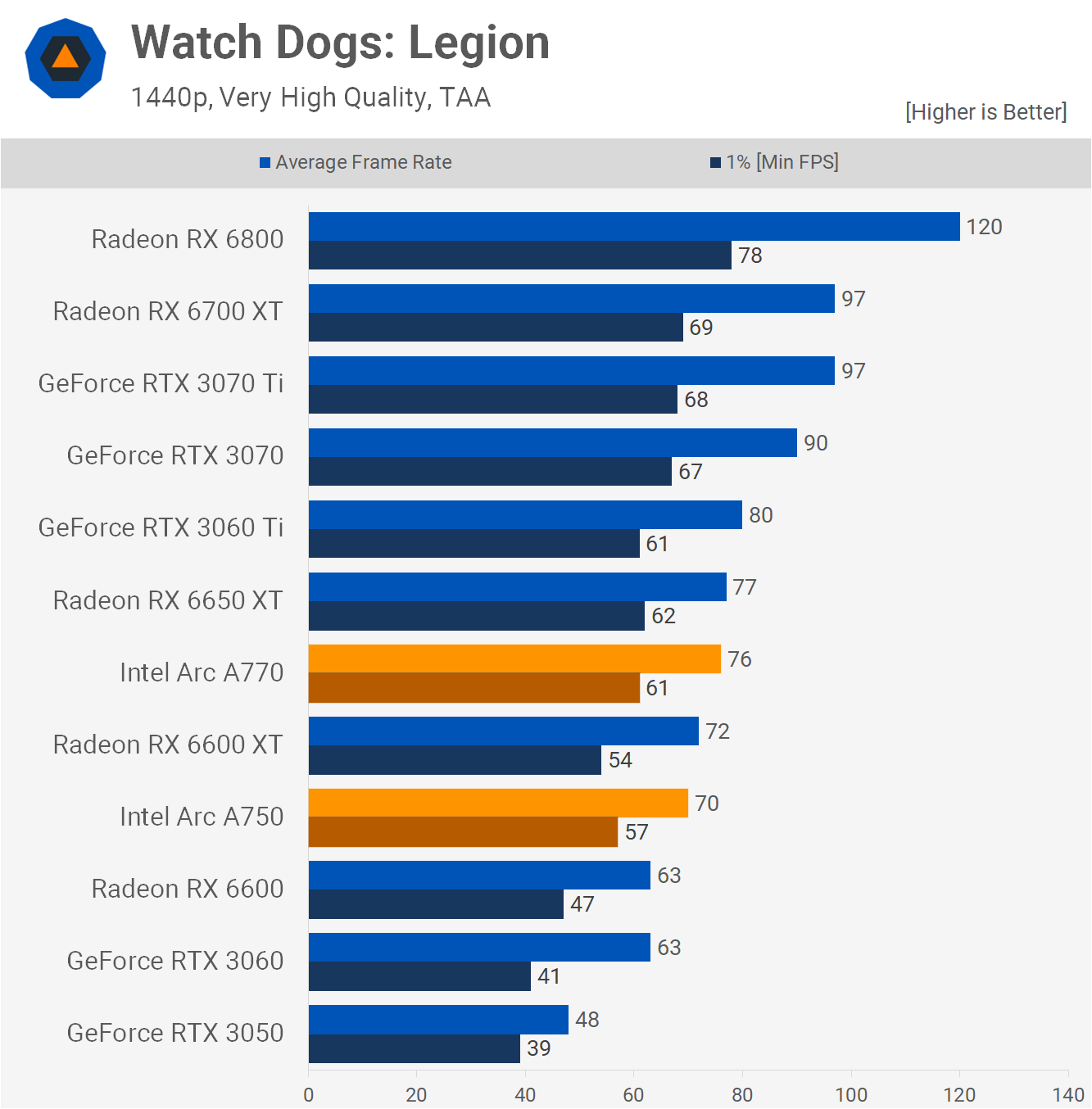

It's a similar story in Watch Dogs: Legion, though this time performance is very good compared to Nvidia and less impressive compared to AMD. The Arc A770, for example, was 13% faster than the RTX 3060 but 16% slower than the Radeon 6650 XT.

Basically, the A750 was only able to match the cheaper RX 6600, so that's a brutal outcome for Intel.

However, increasing the resolution to 1440p drastically changes the picture and now the A770 is able to match the 6650 XT with 76 fps on average. The A750 also jumped up to match the 6600 XT with 70 fps and both GPUs were much faster than the RTX 3060, so it would seem these Arc GPUs much prefer 1440p to 1080p, at least from a competitive standpoint.

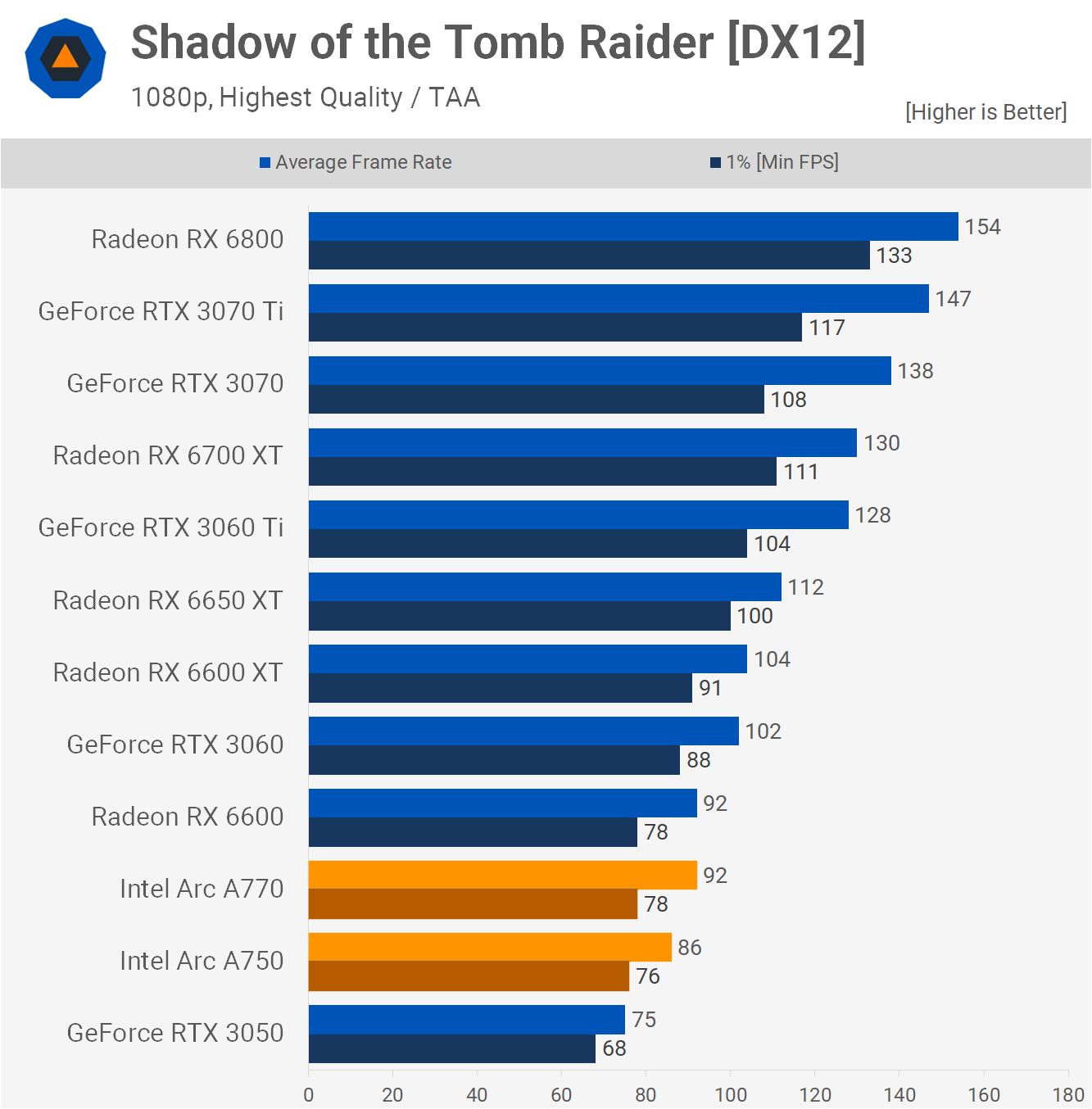

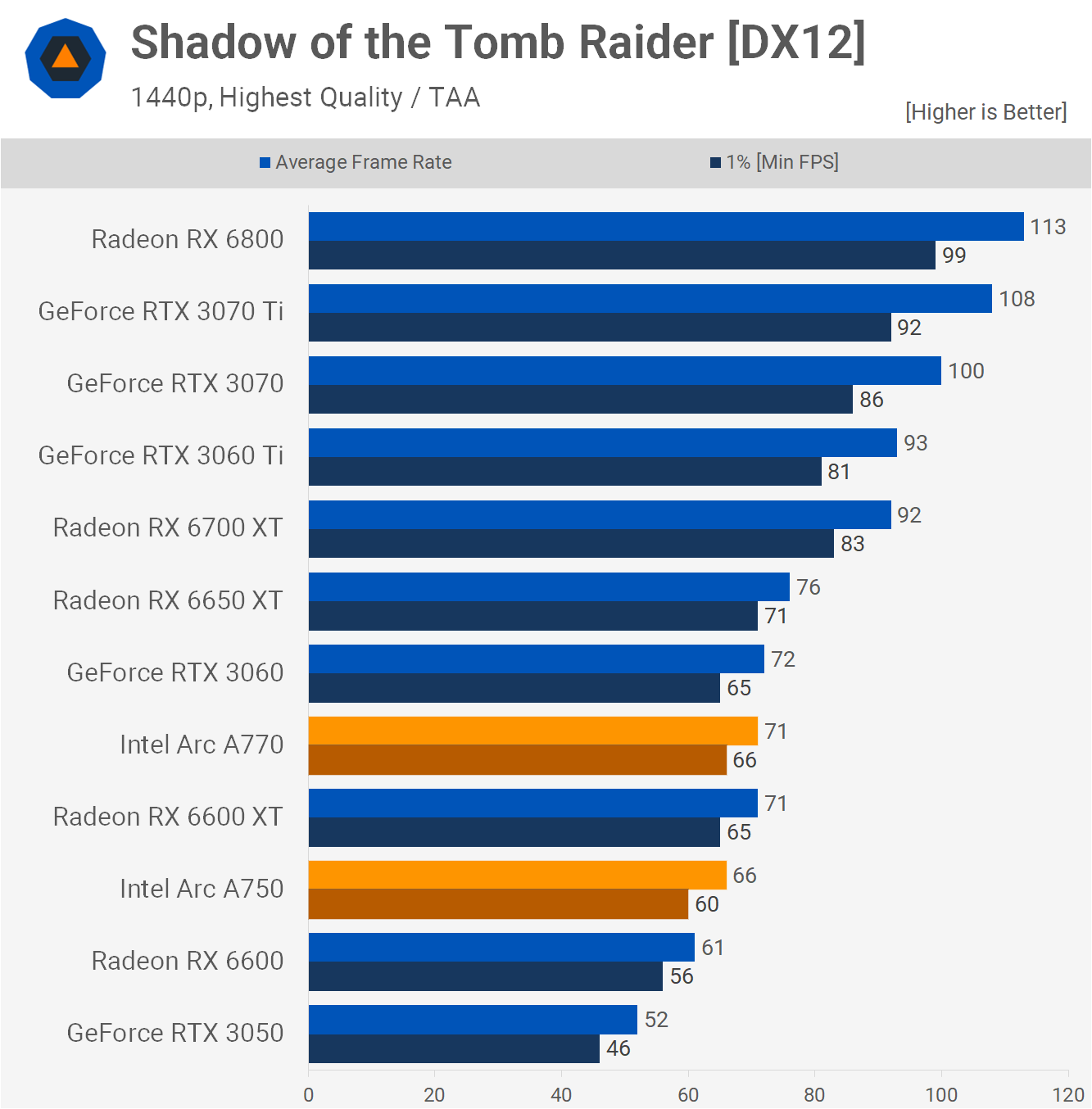

Shadow of the Tomb Raider is an older DirectX 12 title and it's not one that Arc is well optimized for, falling short of 100 fps on average at 1080p.

With just 92 fps, the A770 only matched the RX 6600, making it 18% slower than the 6650 XT and 10% slower than the RTX 3060.

Once again though those margins do close up at 1440p and now the A770 was just 7% slower than the 6650 XT while the A750 was 8% faster than the RX 6600.

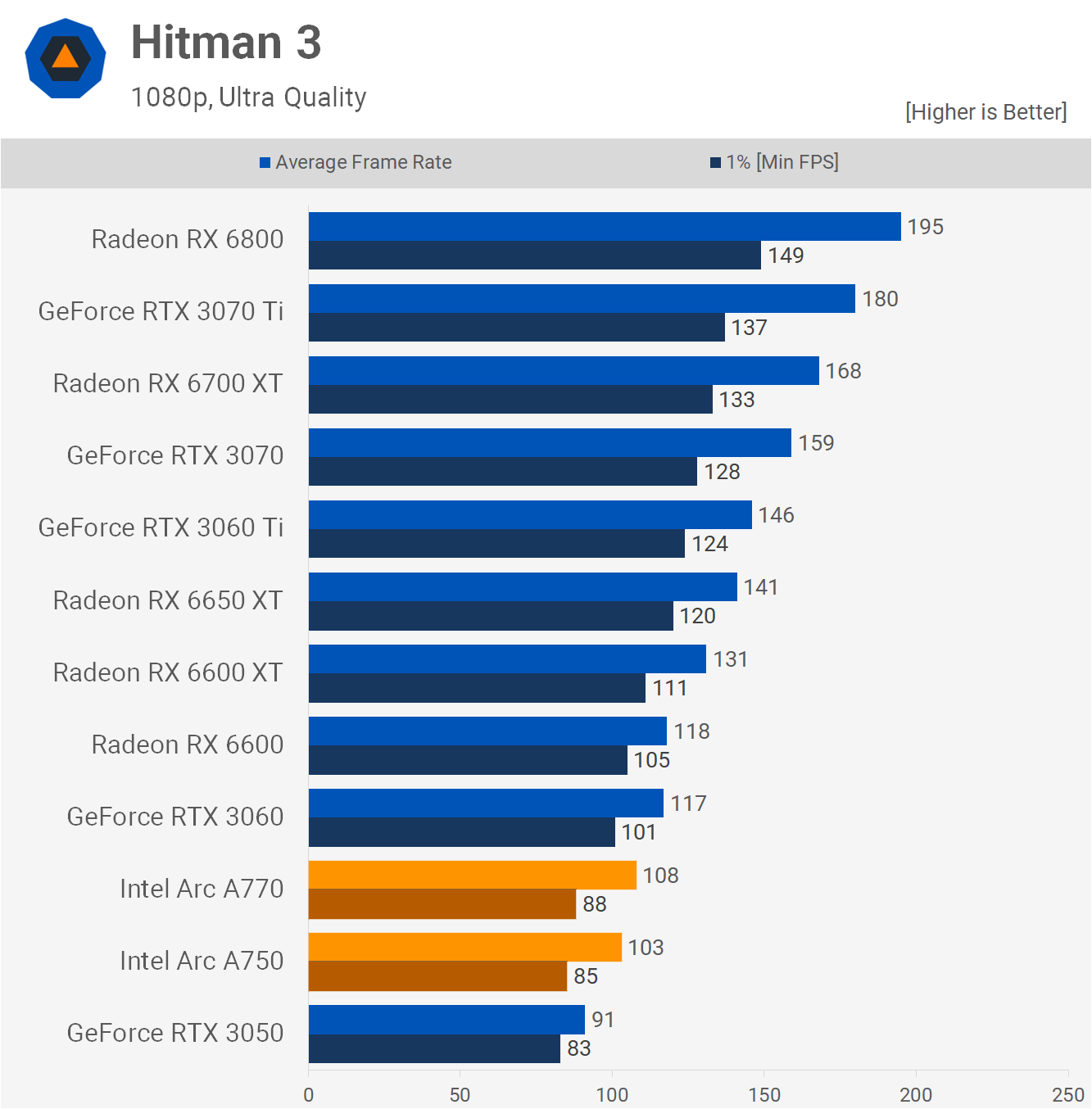

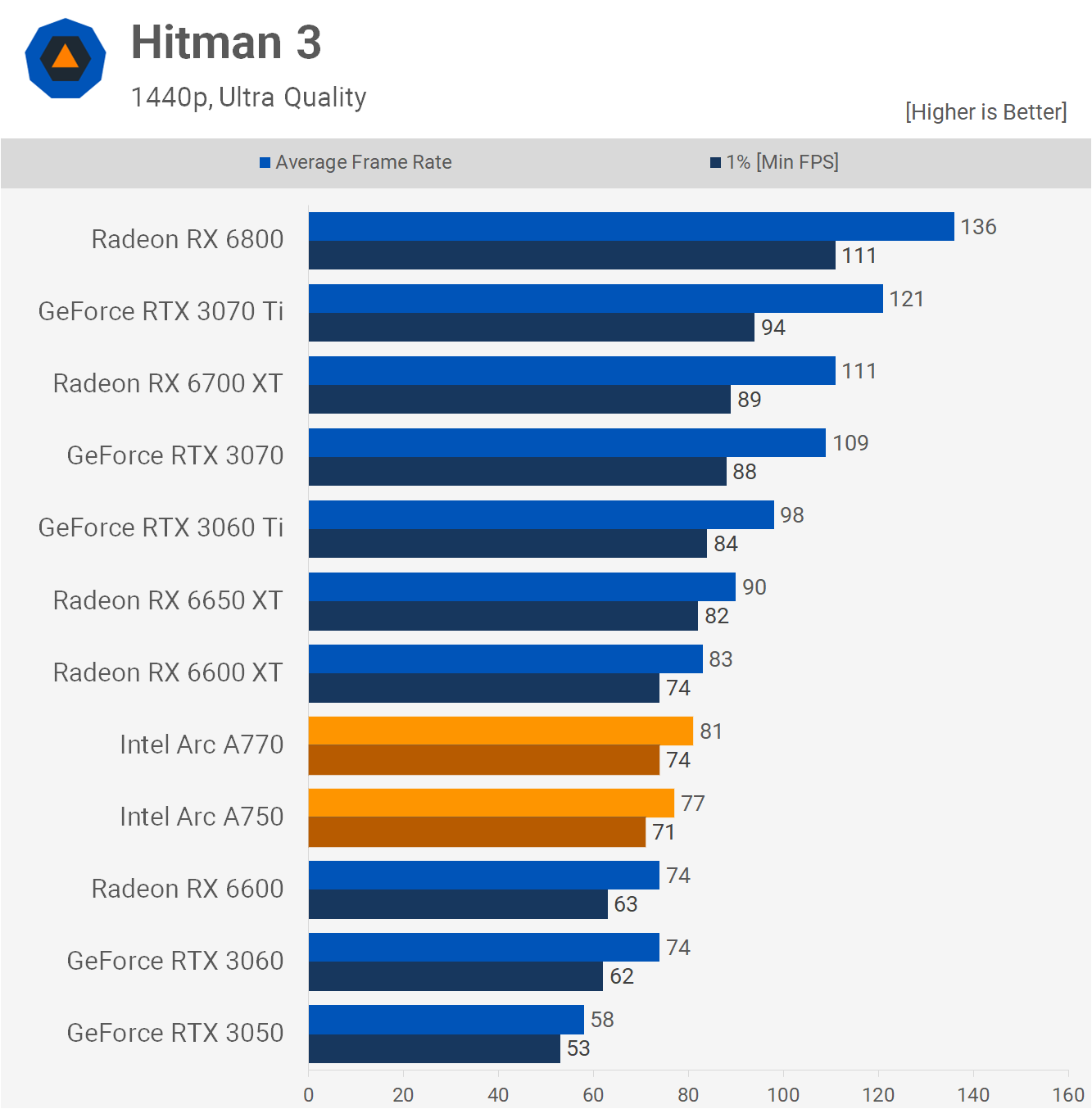

Hitman 3 is a brutal title for Arc GPUs at 1080p, as both were quite a bit slower than the RTX 3060 and miles slower than the 6650 XT. Again though, 1440p does improve the A770 and A750's standings, pushing both ahead of the Radeon RX 6600. Now the A770 was 10% slower than the 6650 XT, still a bad outcome given the price, but much better than the situation faced at 1080p.

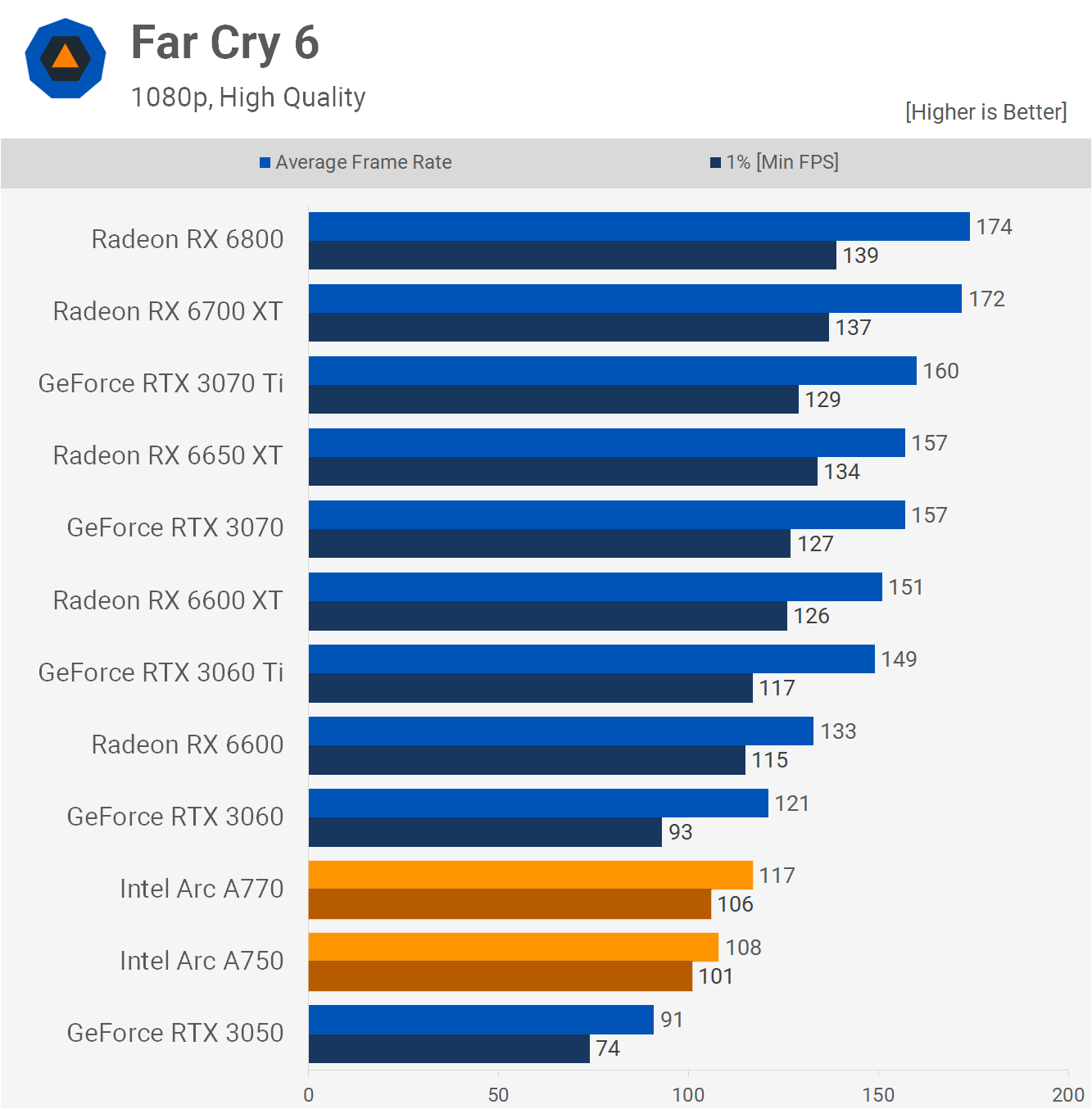

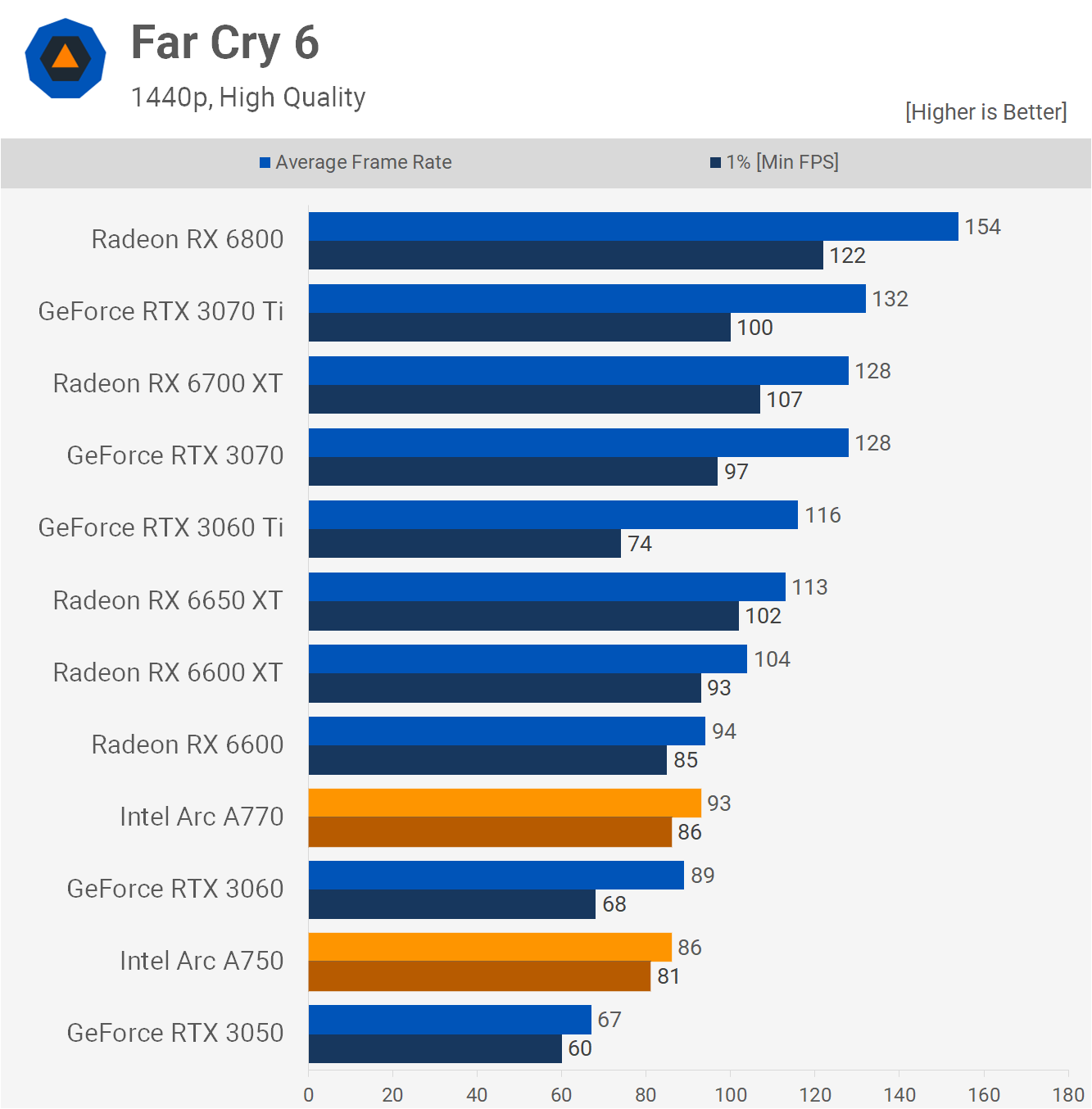

Far Cry 6 is another poor title for Intel Arc, depending on what you compare them to.

You can see why Intel chose the RTX 3060 as its point of comparison, because here the A770 was just 3% slower. But when compared to the Radeon 6650 XT it was 25% slower, and it was 12% slower than the much cheaper Radeon 6600.

Upping the resolution to 1440p gets the A770 up alongside the RX 6600, but it was still 18% slower than the 6650 XT, so not a good result relative to mainstream Radeon GPUs.

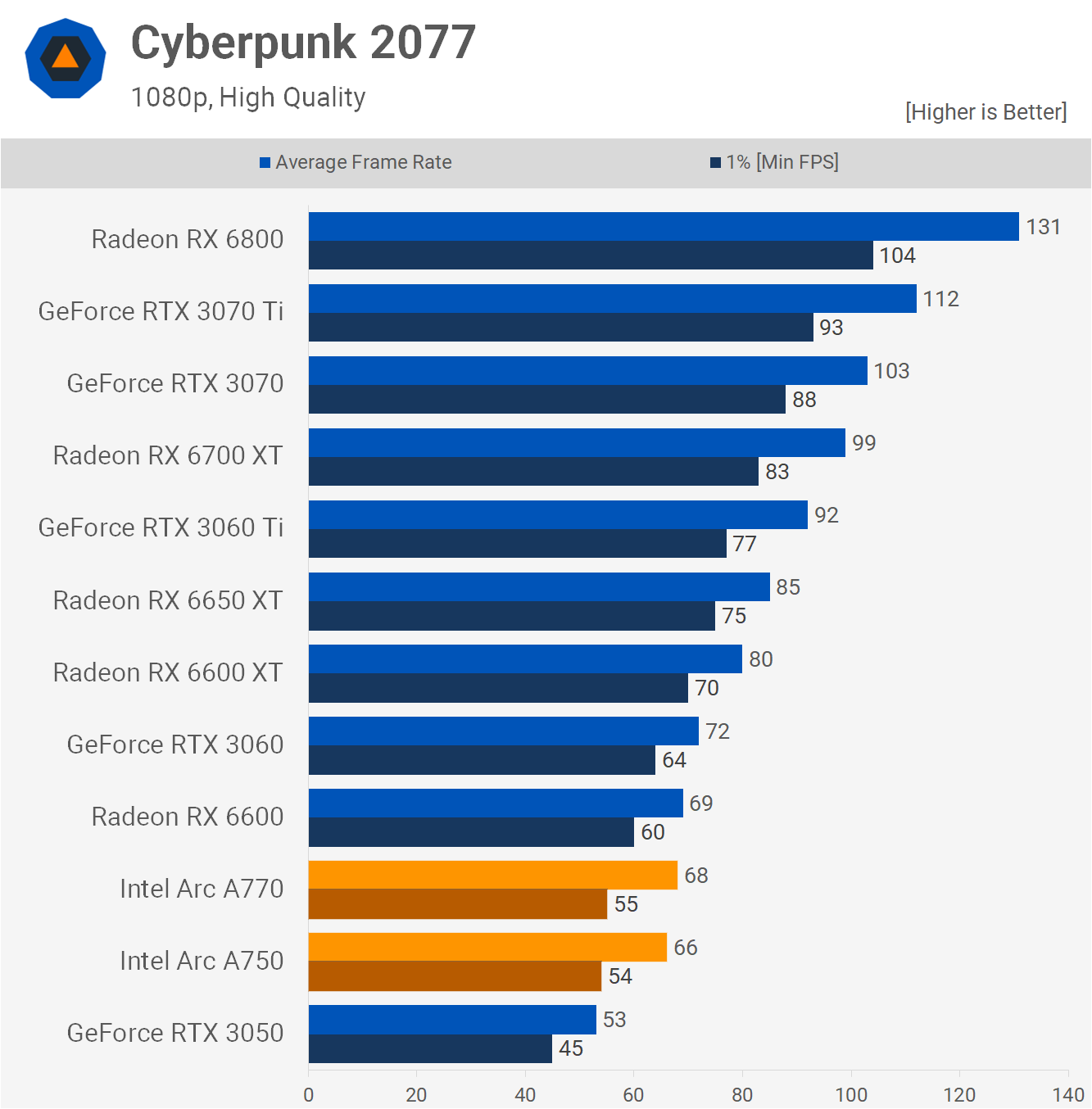

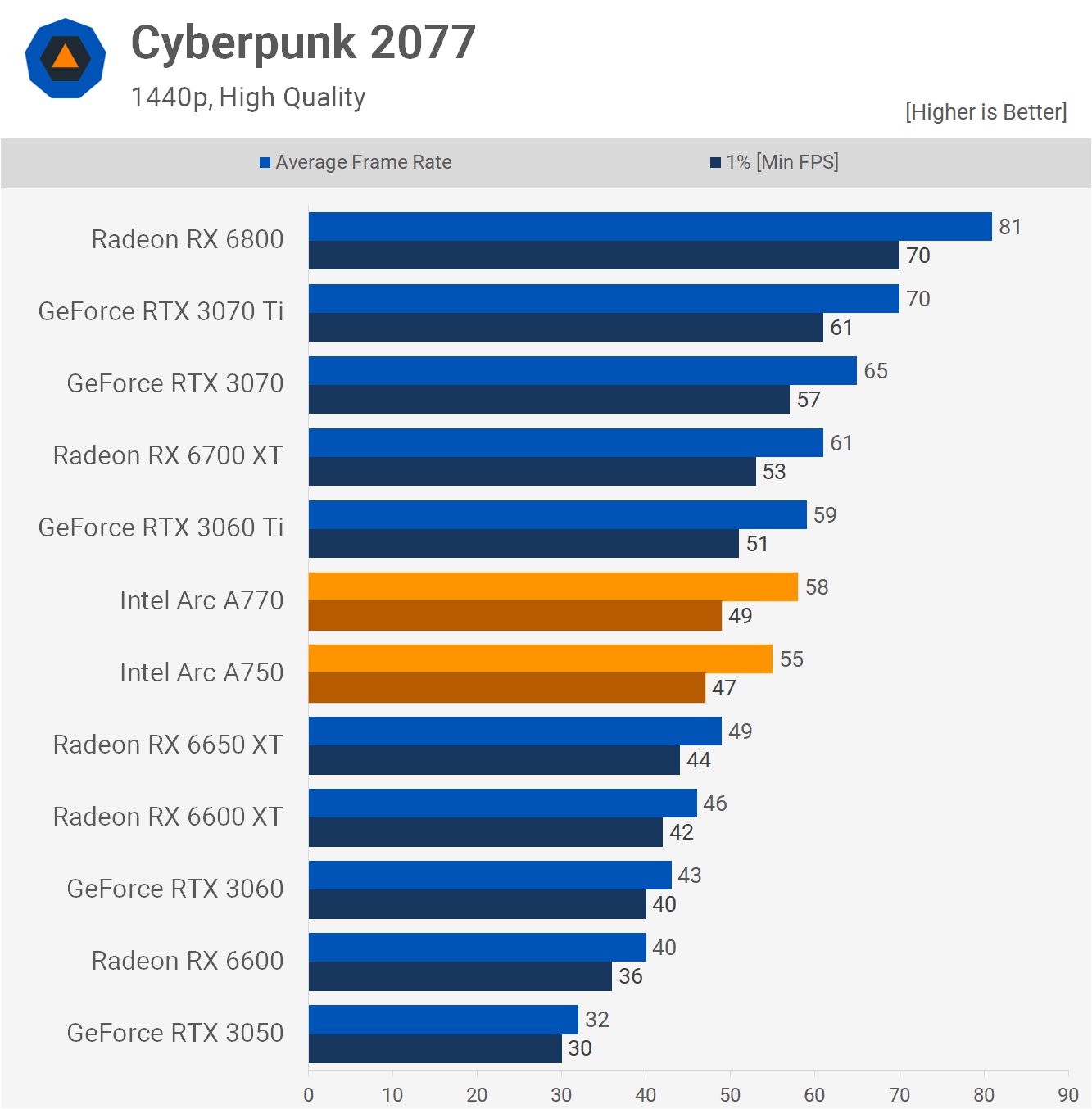

Next we have Cyberpunk 2077 and the 1080p results are not pretty with the A770 coming in behind the RX 6600 and 20% slower than the 6650 XT. Oddly, both the A770 and A750 delivered virtually the same performance, so it appears to be some kind of bottleneck, either caused by the Alchemist architecture or perhaps a software issue.

It's certainly an odd one because increasing the resolution to 1440p reduced the performance of the A770 by only 15%. Meanwhile, the 6650 XT saw a more typical 42% drop and the RTX 3060 a 40% drop.

The good news is that both the A770 and A750 were faster than the Radeon 6650 XT at 1440p and only slightly behind the 3060 Ti, so that's a tremendous result and very surprising given what was seen at 1080p.

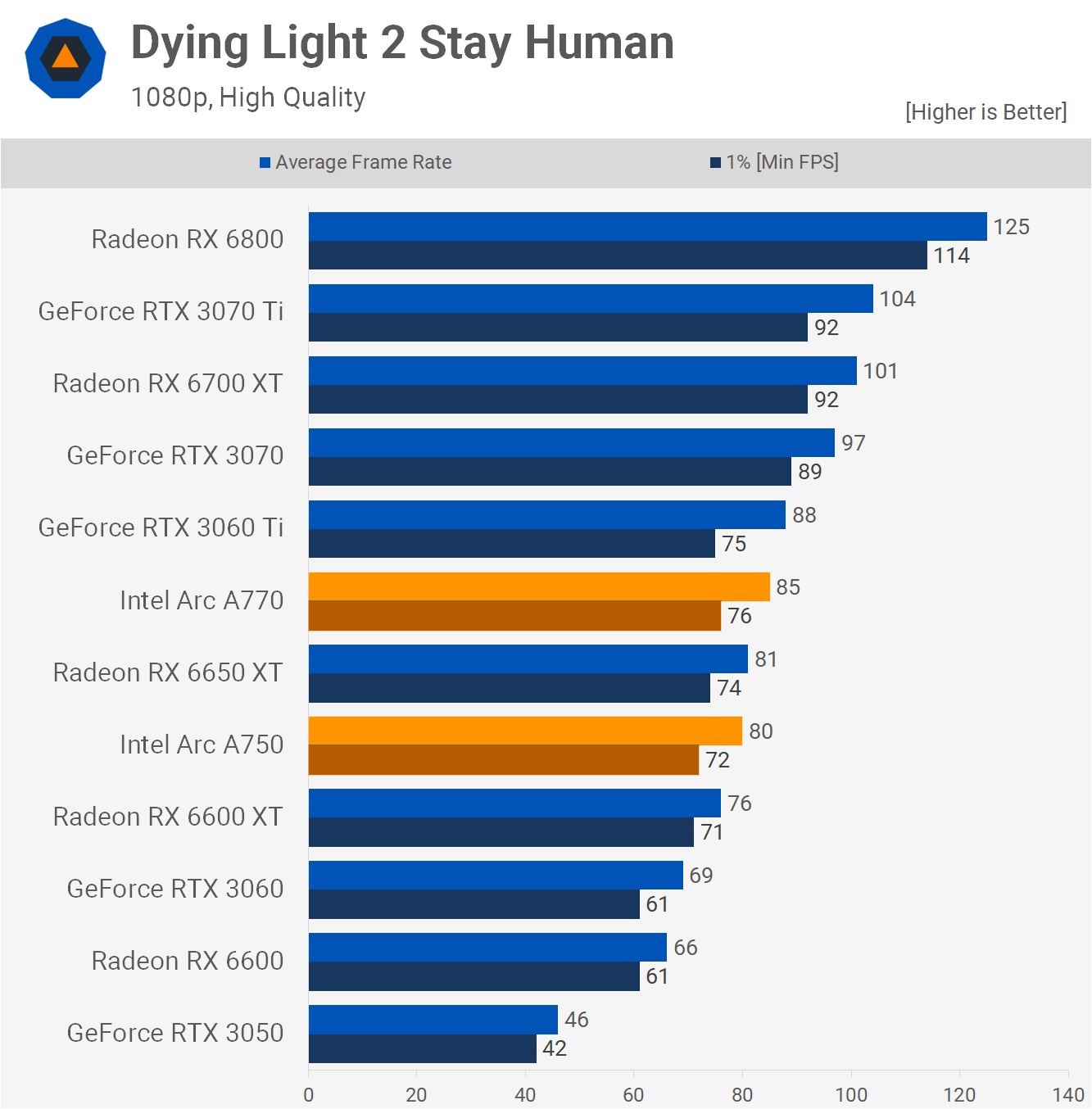

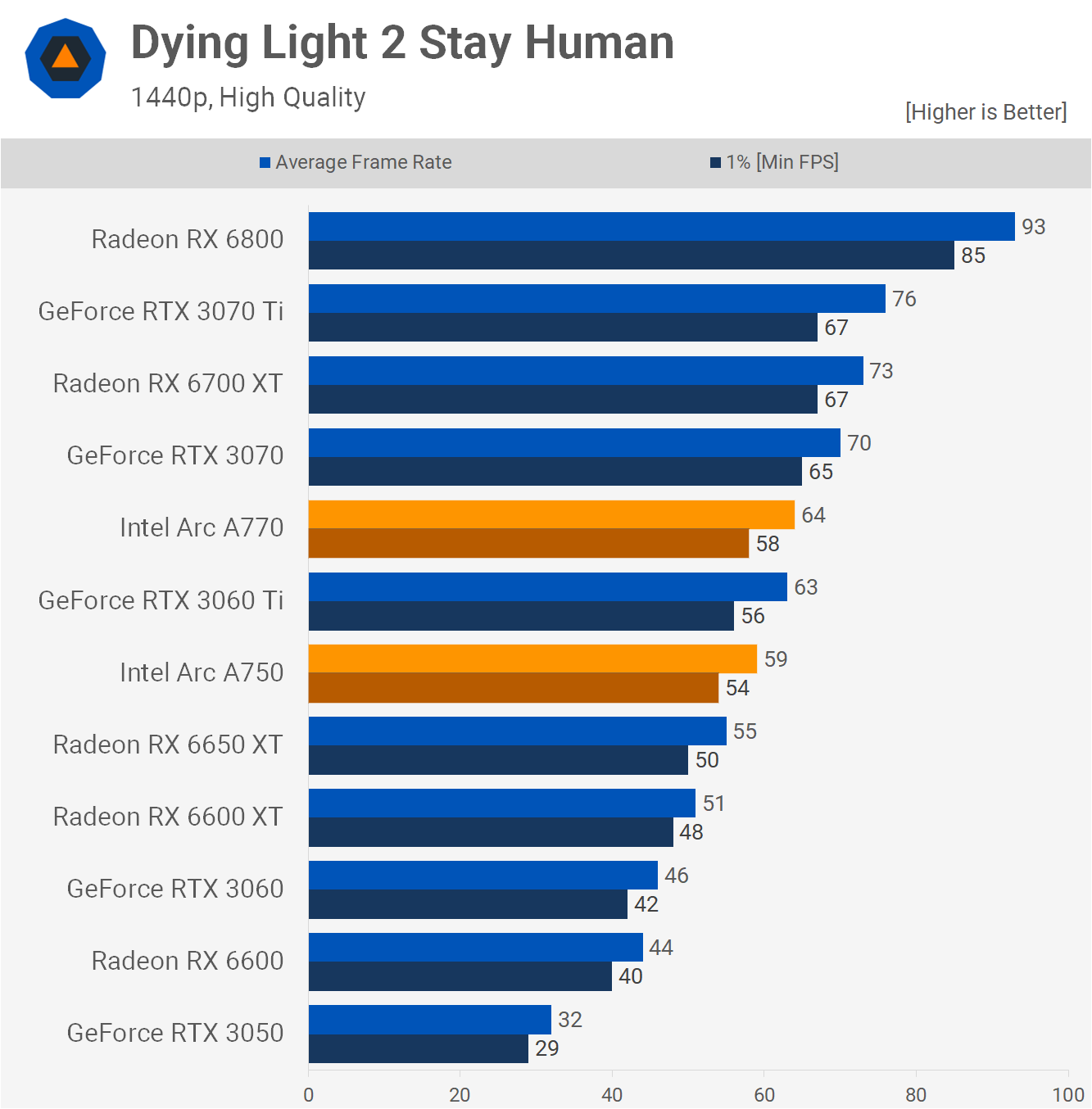

The Dying Light 2 results are much more competitive at 1080p, where the A770 edged out the 6650 XT to deliver RTX 3060 Ti-like performance. The A750 also managed to match the Radeon 6650 XT and crushed the GeForce RTX 3060.

Then at 1440p the Arc GPUs look great and the A770 isn't that far off the RTX 3070. All said and done, it was 16% faster than the 6650 XT, while the A750 was 7% faster.

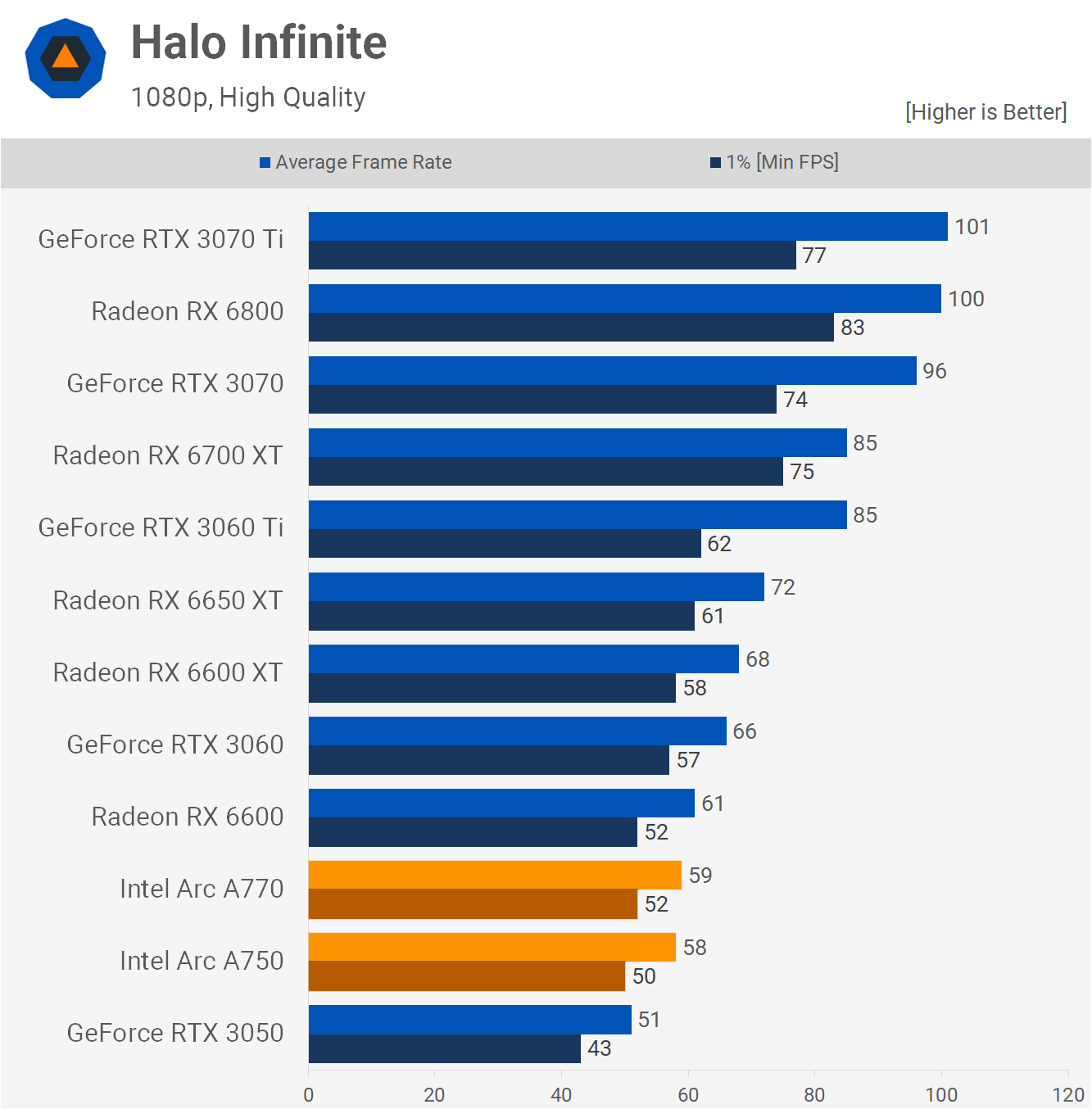

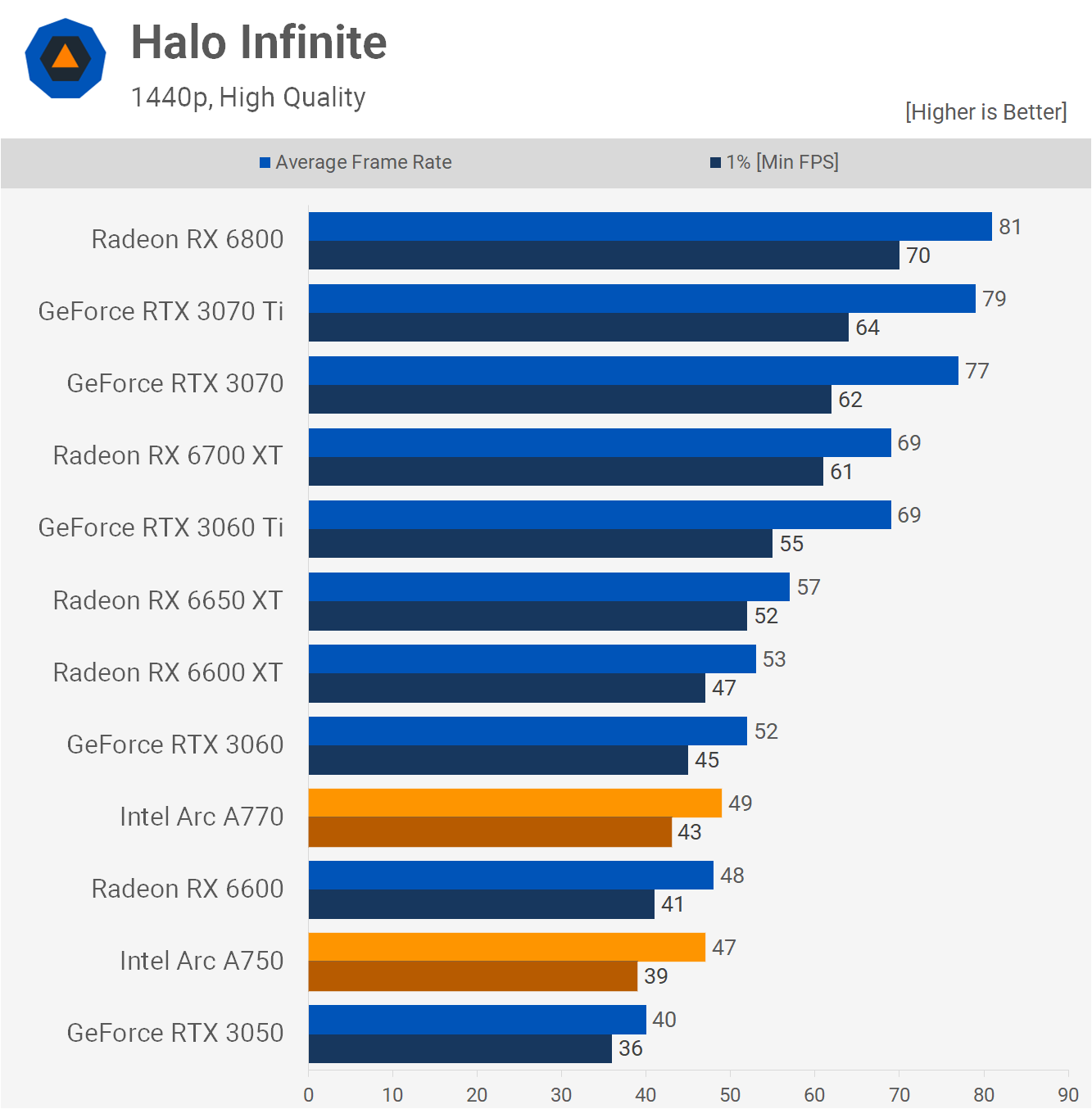

In Halo Infinite, the Arc GPUs can be found delivering sub-optimal performance at 1080p, as both were slower than the Radeon RX 6600 and just 16% faster than the RTX 3050, and while we know 16% is a sizable margin, when comparing a GPU with the RTX 3050, 16% is not enough.

Sadly, even at 1440p the Arc GPUs are underwhelming in Halo Infinite, trailing not just the RTX 3060 but also the 6650 XT by quite some margin.

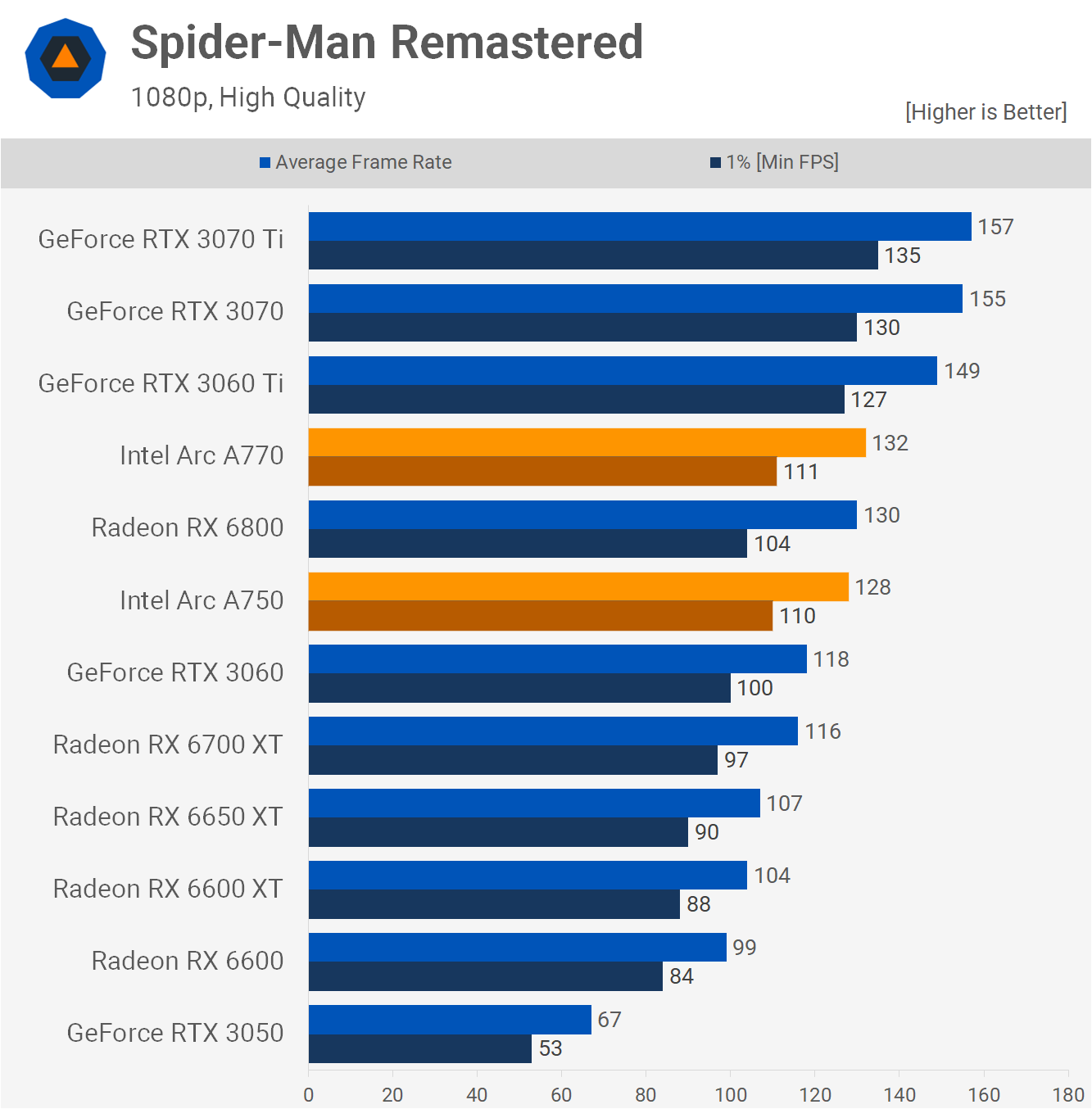

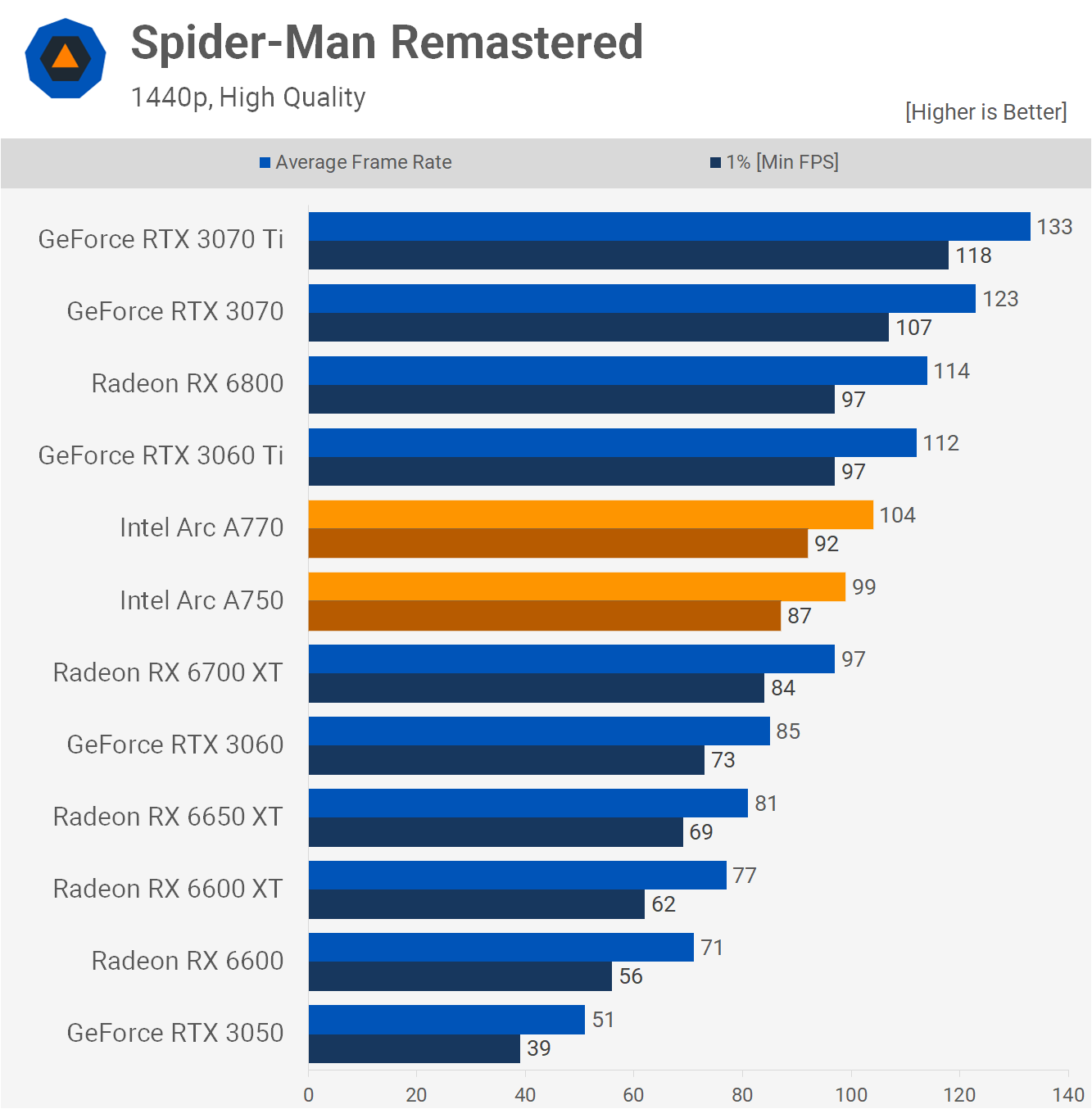

If you recall, when we first benchmarked Spider-Man Remastered with a boat load of GPUs, we included Intel's A380 and the results were horrible.

Shortly after that benchmark session, Intel addressed the performance issues with a new driver. Performance now is mighty good as we see here with the Intel Arc A770 and A750, both of which crushed the RTX 3060 and 6650 XT at 1080p.

The 1440p results are pretty strong, too. The A770 was just 7% slower than the RTX 3060 Ti and almost 30% faster than the Radeon 6650 XT. Amazing performance here, we just wish we saw more of the same.

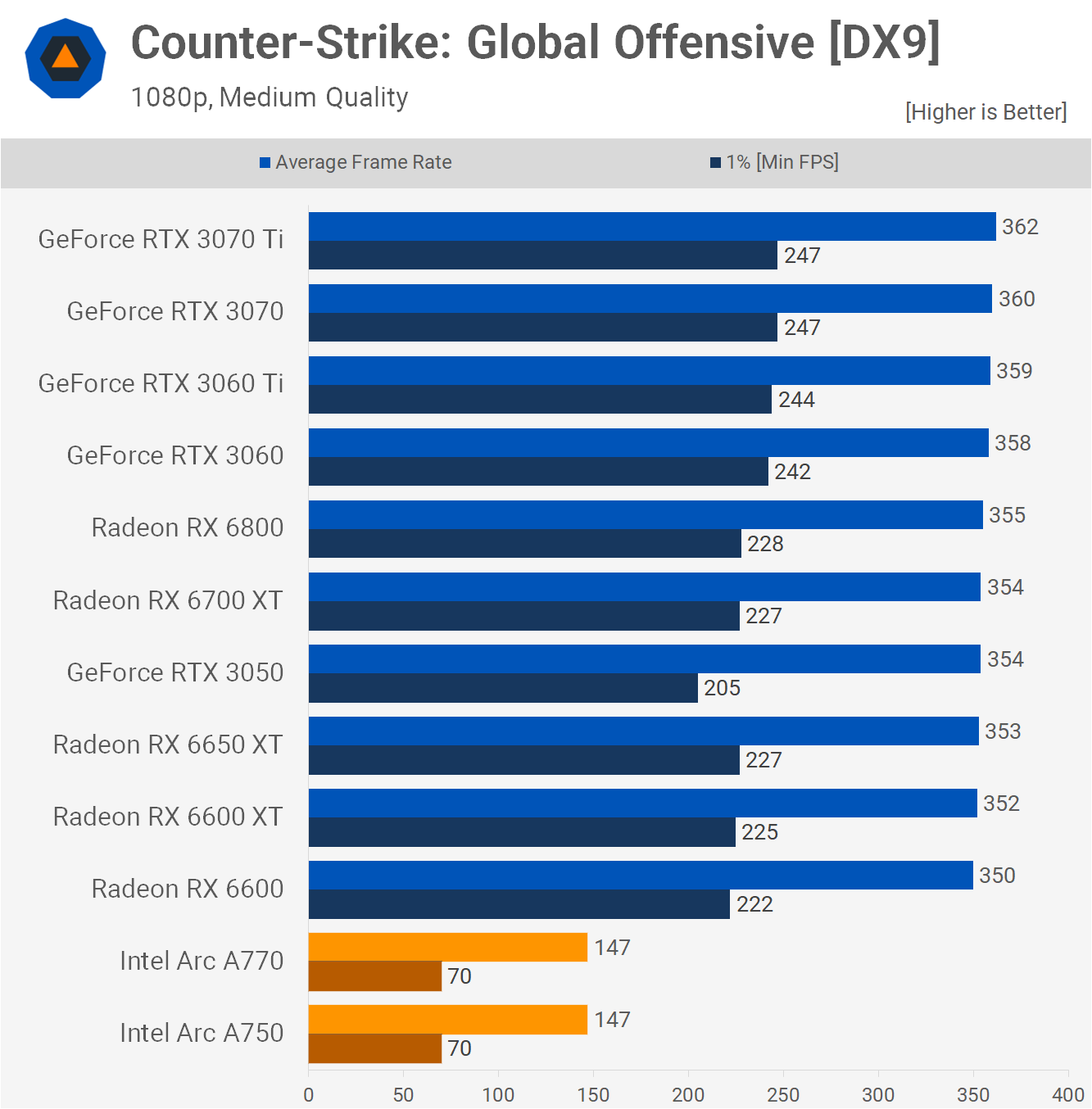

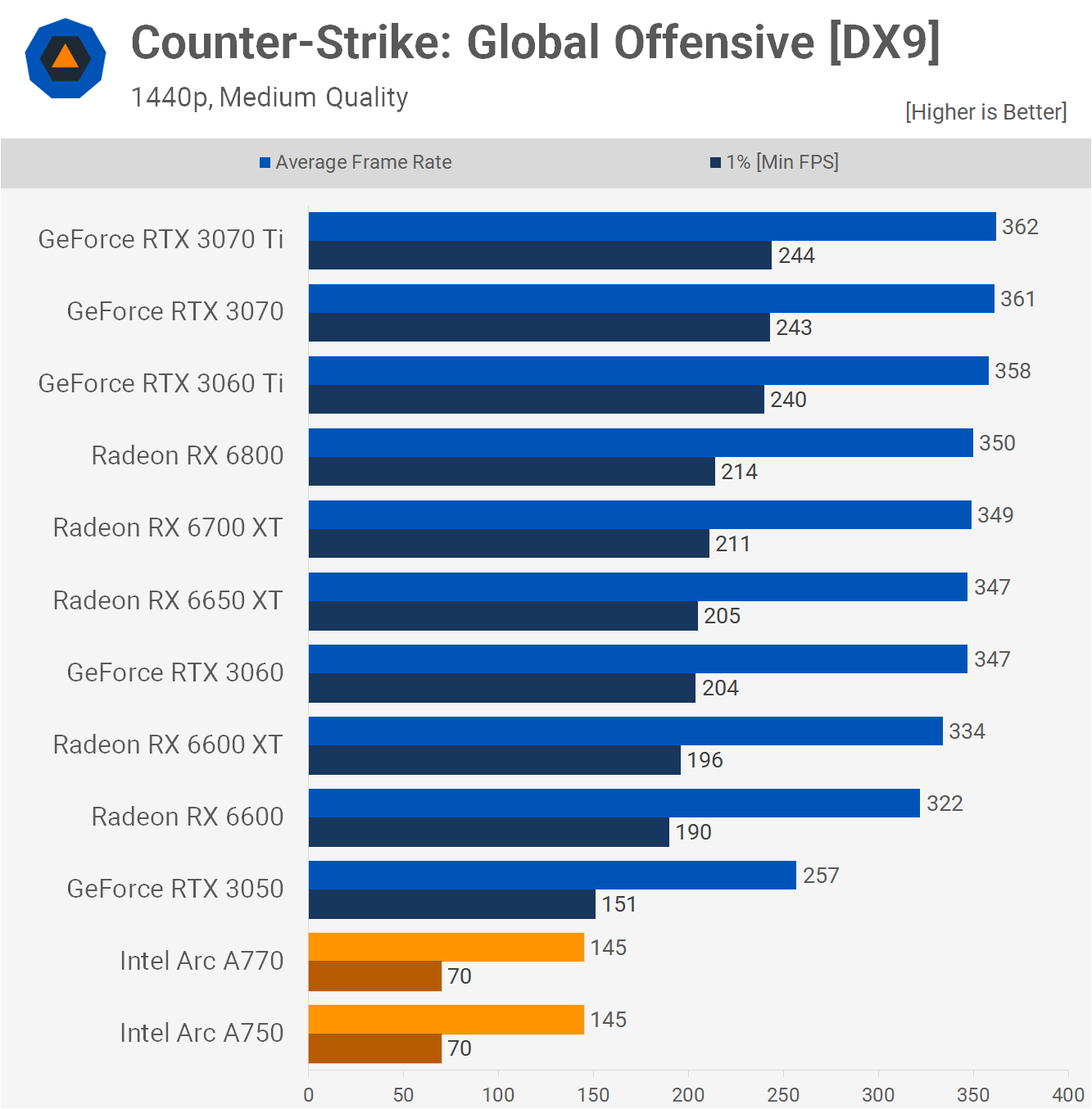

Here we have the most disappointing result in today's review, and it highlights one of Arc's key lingering issues: proper support and optimization for older games. Counter-Strike: Global Offensive might be super old, running on top of DirectX 9, but it's by far and away the most played game on Steam with peaks of a million players daily, more than twice that of Apex Legends, for example.

That's a problem for Intel because performance is nowhere with the A770 and A750. Yes, these results are accurate and even at 1440p it's a mess.

The game is technically very playable, but certainly not in a competitive setting and you'd obviously just buy a GeForce or Radeon GPU if you played CSGO or other old games.

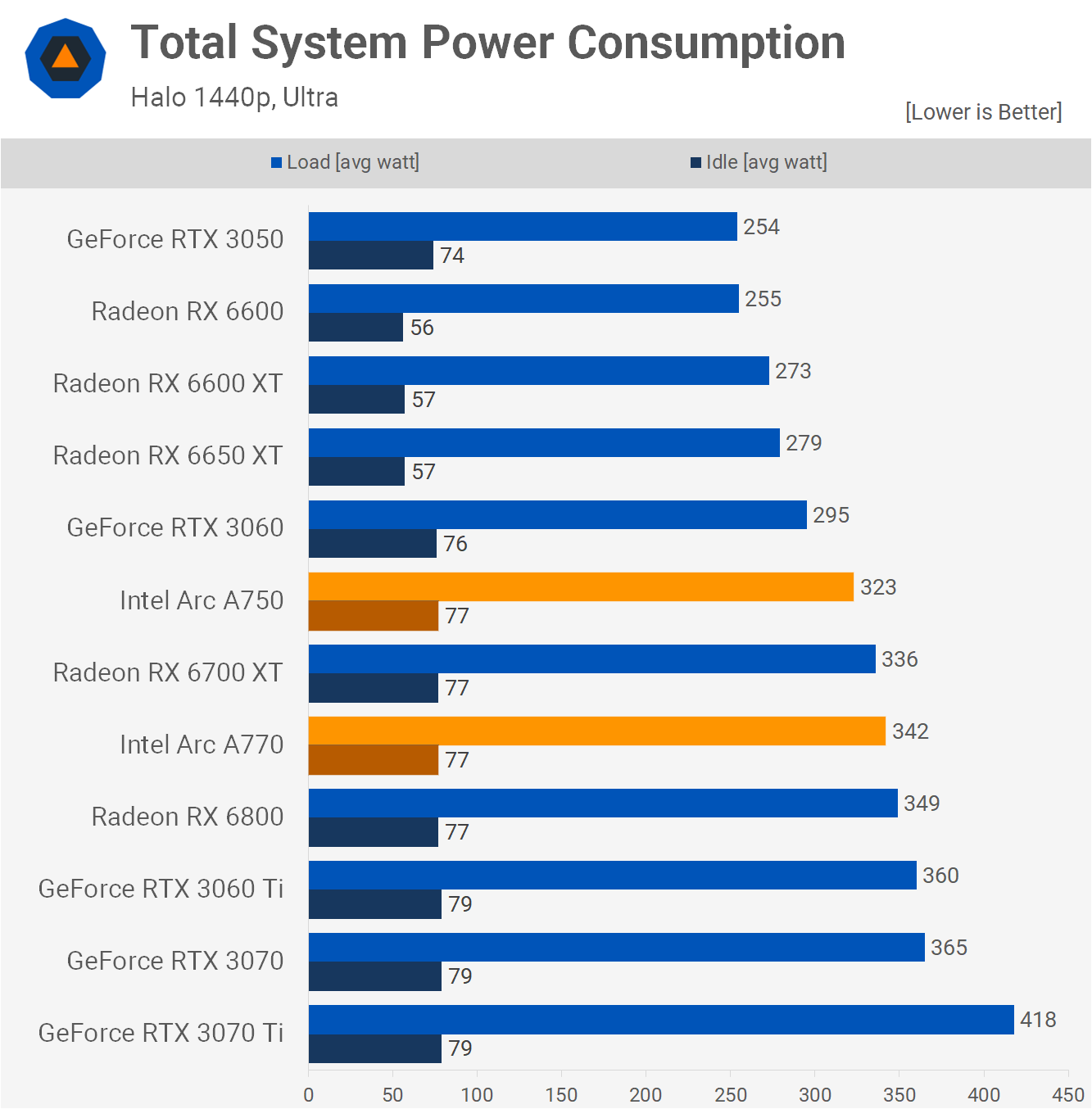

Power Consumption

The power consumption isn't anything to get excited about either, but it's far from a disaster.

For the A770, you're looking at similar power use to that of the much faster Radeon RX 6800, a 23% increase in consumption over the Radeon 6650 XT. The A750 was no better, so in terms of power efficiency and performance per watt Arc is bad, but it's certainly no deal breaker.

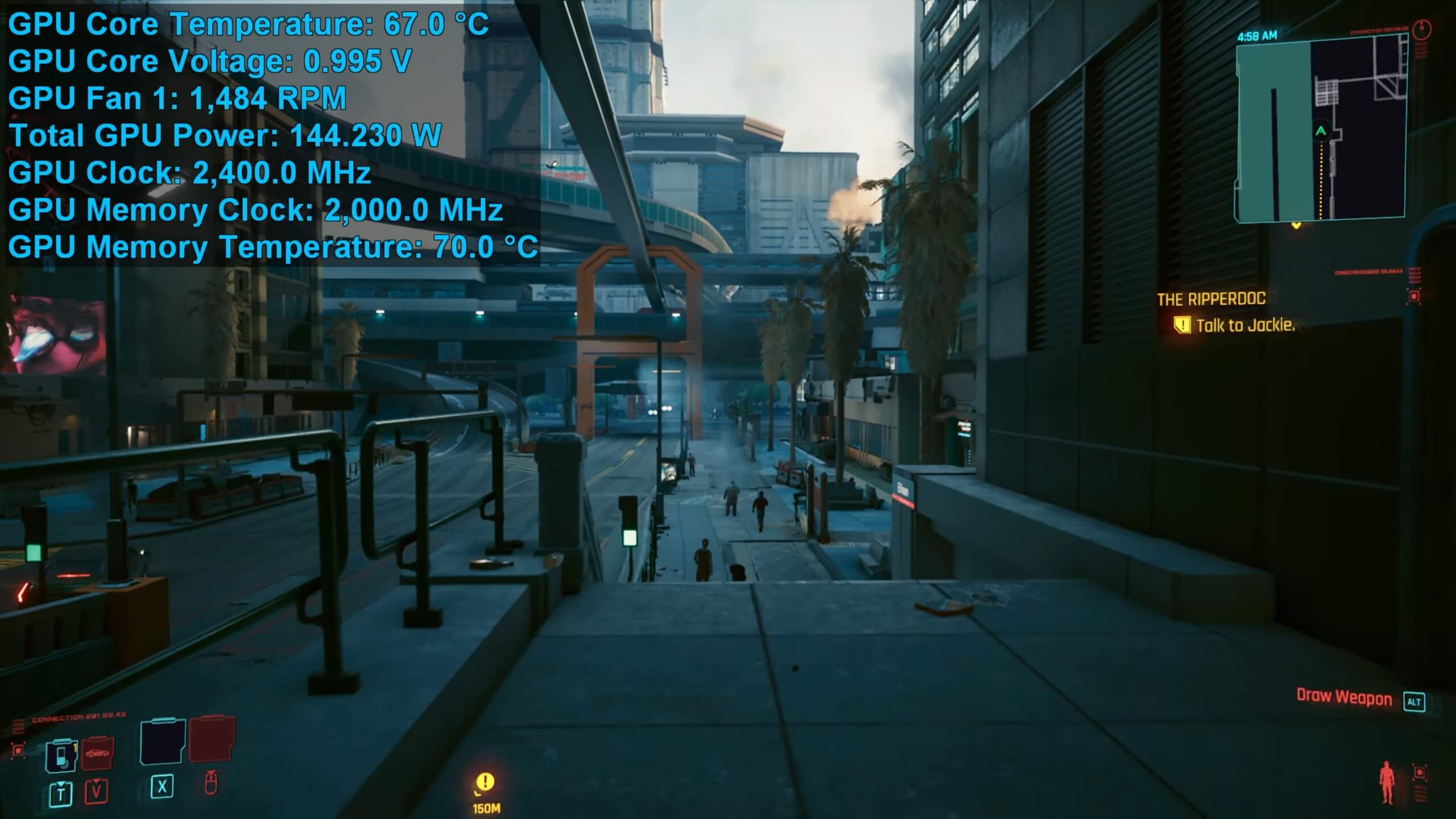

Cooling Performance

The Intel reference cards worked well in terms of cooling and temperatures. They look simple but nice enough and build quality is good, though they're a bit of a pain to take apart.

The Arc A750 ran at a core temperature of 67C with the memory at 70C. Both are very acceptable, especially given the 1500 RPM fan speed, which resulted in near-silent operation. The GPU clocked at 2.4 GHz and the memory ran at the advertised 16 Gbps.

In the case of the Arc A770, it ran the cores and memory at 70C with a fan speed of 1600 RPM, so it was very quiet. Given the GPU is sucking down just 155 watts on average, it's not that hard to cool, especially by today's standards.

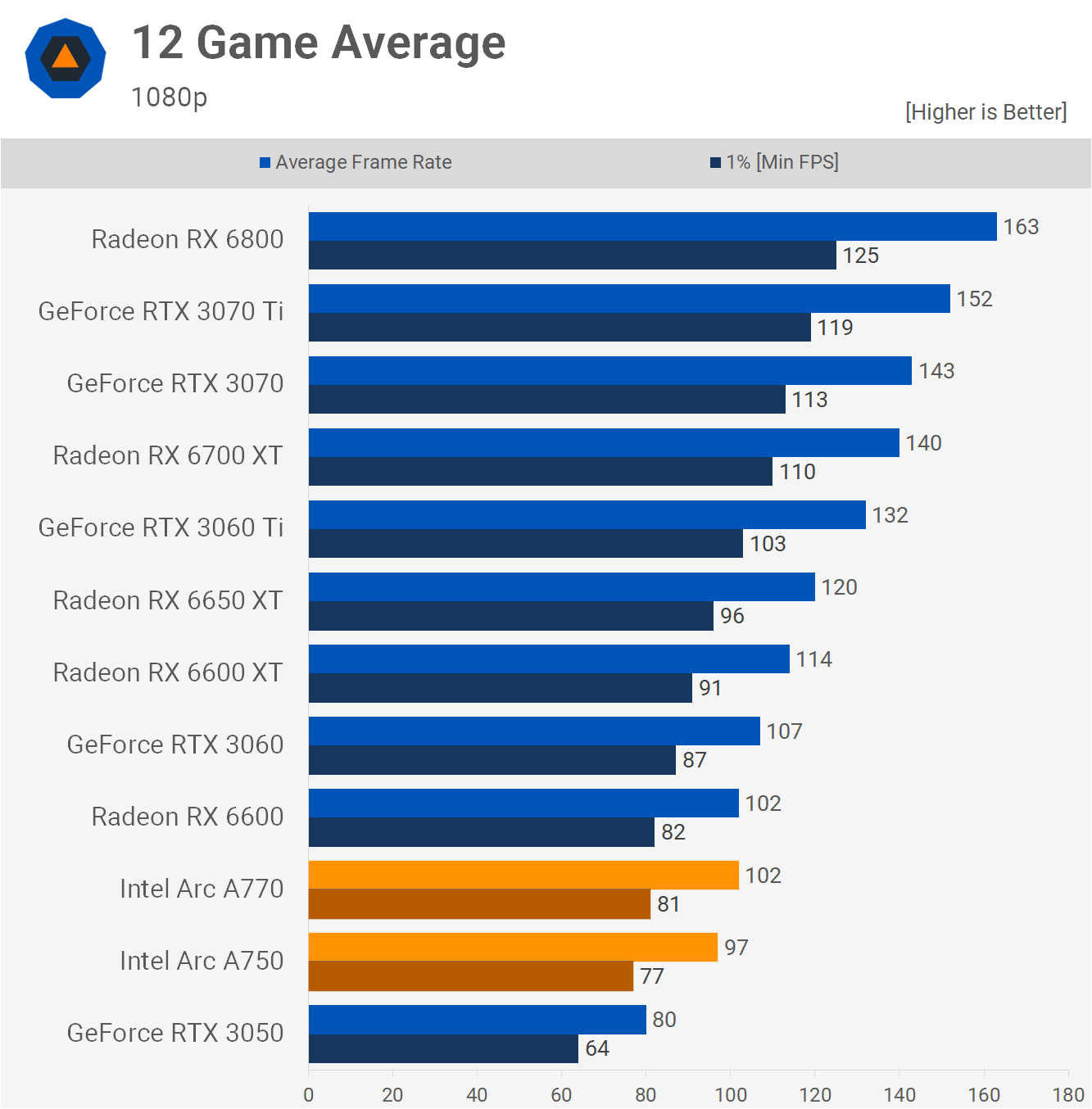

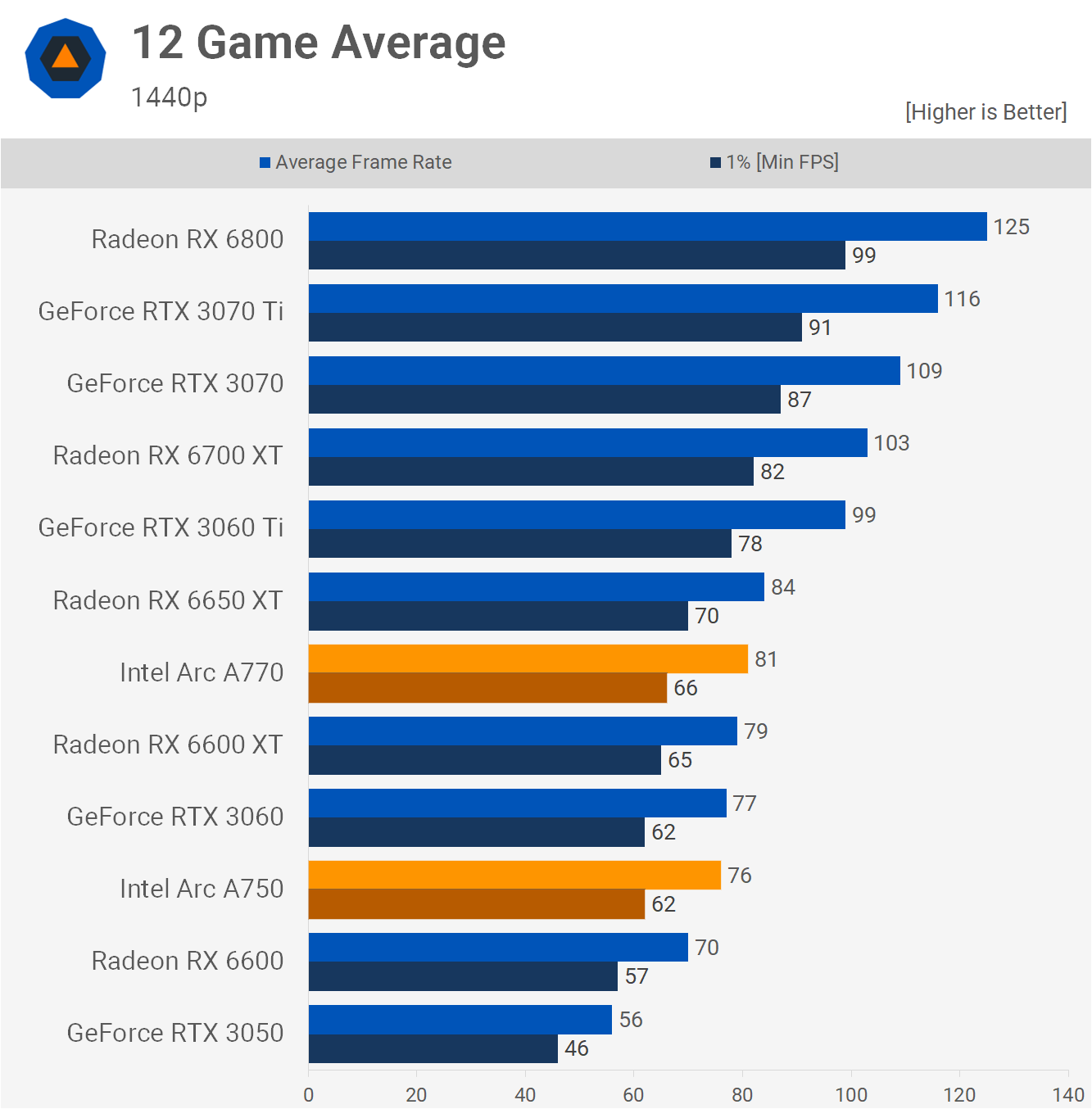

12 Game Average

Looking at the 12 game average at 1080p, it's hard to get excited about these new Intel Arc graphics cards. The Arc A770 only managed to match the Radeon RX 6600 with 102 fps, while the A750 was a whisker slower with 97 fps.

The 1440p results are more compelling but even so, the A770 falling behind the 6650 XT is a rough deal from Intel and although the A750 looks good sitting next to the RTX 3060, the GeForce GPU was already a terrible deal next to the 6650 XT. So AMD has made life difficult here for Intel.

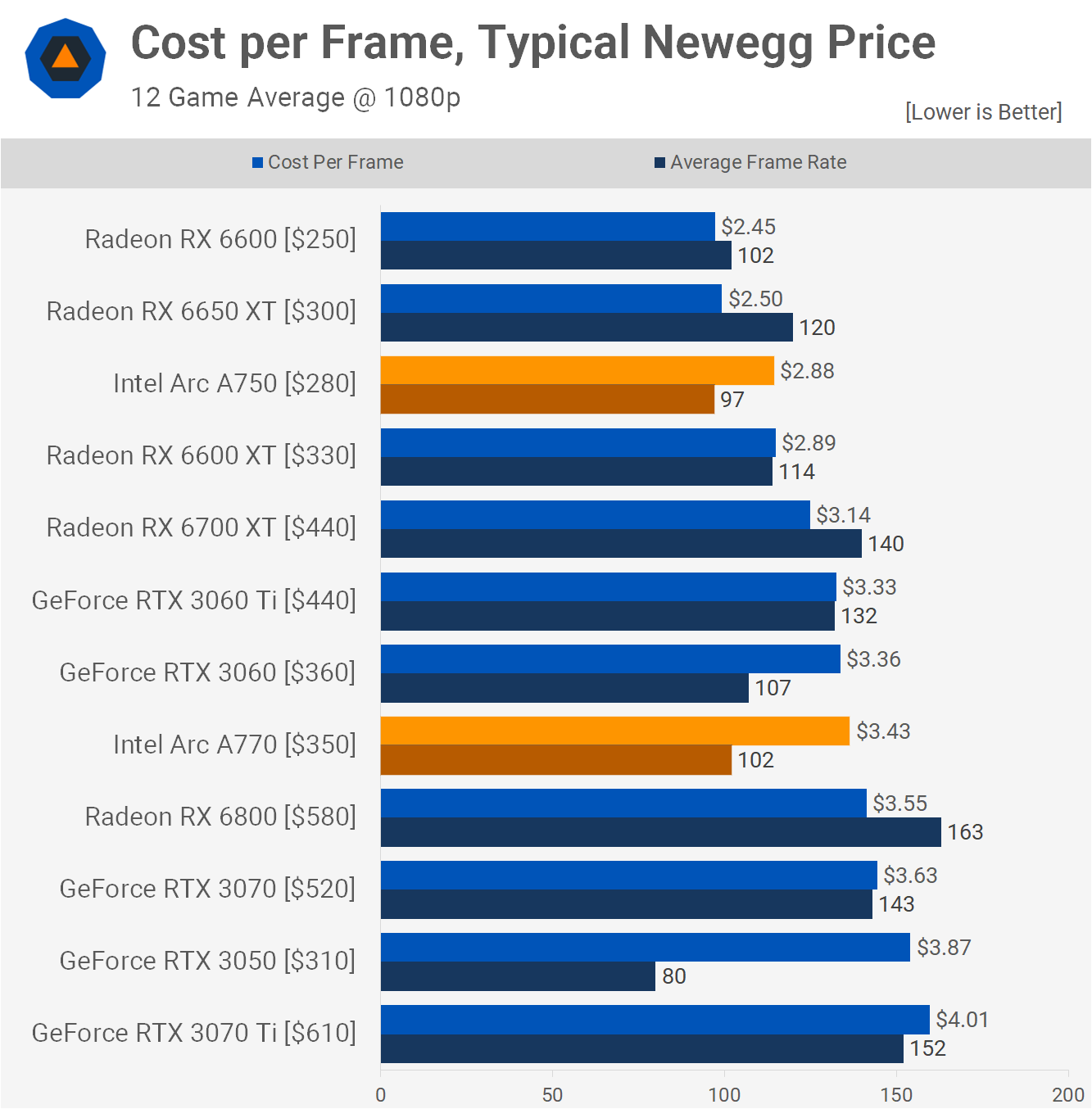

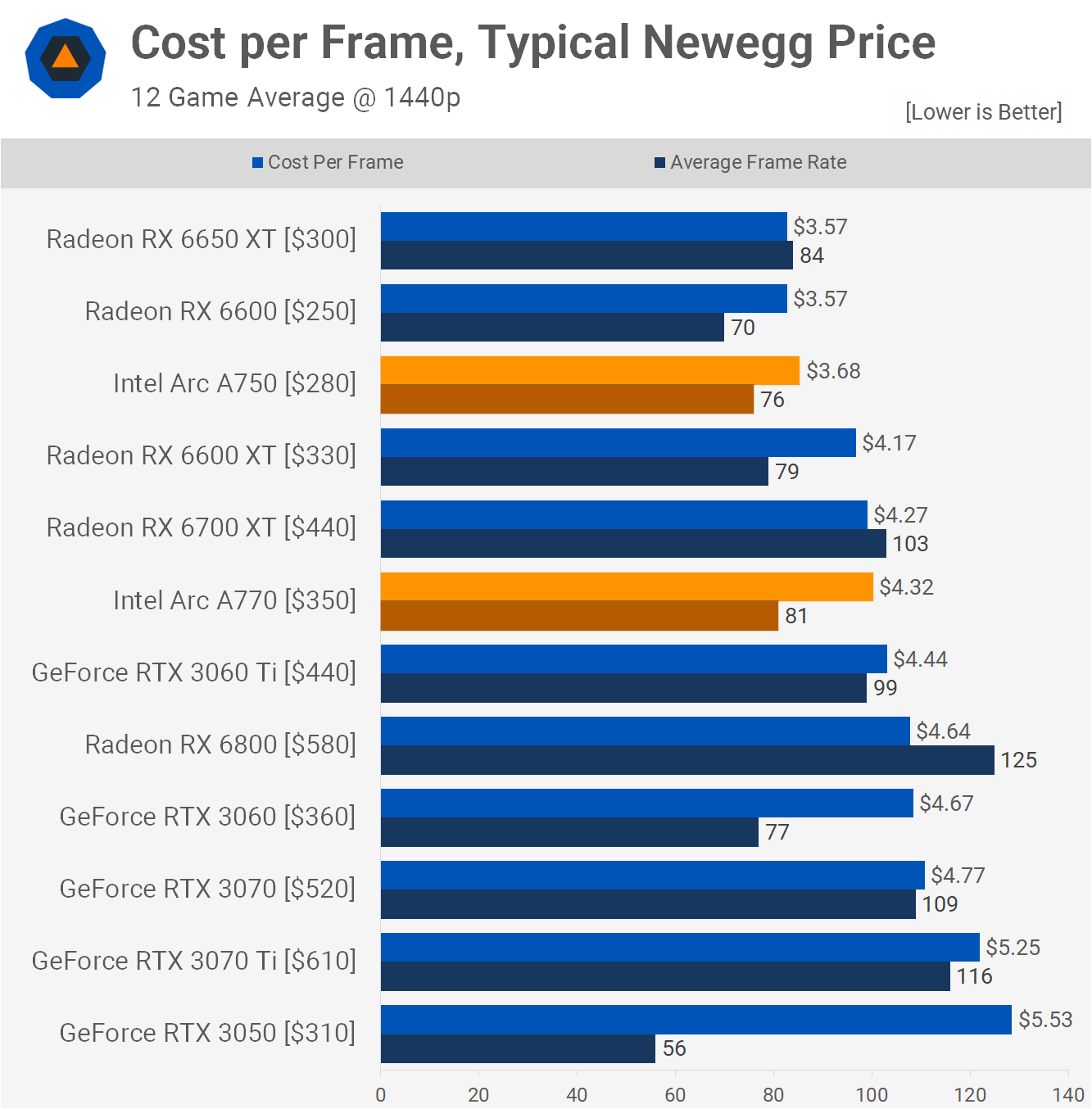

Cost per Frame

There's no better way to illustrate that last point than to look at a simple cost per frame graph, and we'll start with the 1080p data. In terms of price to performance, the A750 is only able to match the 6600 XT, while the A770 does beat out the already bad value RTX 3060 and 3060 Ti, but can only match the Radeon RX 6800 which is 60% faster.

Both should be compared to the 6650 XT and when doing so you learn that the A750 costs 15% more per frame and the A770 is 37% more costly per frame...

At 1440p things look a lot better for Intel, but ultimately not good enough. The Radeon 6650 XT matched the cost per frame of the Radeon 6600 and that meant the A750 was 3% more costly per frame and the A770 21% more costly.

Even the 8GB version of the A770 will come out more expensive per frame than the A750, so in short Intel can't match the 6650 XT at their current MSRPs.
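For reference, cost per frame is simply price divided by average frame rate. Here's a minimal sketch using the Arc MSRPs and the 1080p 12-game averages quoted in this review. The helper names are our own, and because the chart's pricing inputs for competing cards may differ from MSRP, treat this as an illustration of the method rather than a reproduction of the graph.

```python
def cost_per_frame(price_usd: float, avg_fps: float) -> float:
    """Dollars paid per frame of average performance (lower is better)."""
    return price_usd / avg_fps


def premium_pct(cpf: float, reference_cpf: float) -> float:
    """How much more costly per frame a card is than a reference card, in percent."""
    return (cpf / reference_cpf - 1) * 100


# (MSRP in USD, 1080p 12-game average fps) as quoted in this review
cards = {
    "Arc A770 16GB": (350, 102),
    "Arc A750": (290, 97),
}

cpf = {name: cost_per_frame(price, fps) for name, (price, fps) in cards.items()}

for name, value in cpf.items():
    print(f"{name}: ${value:.2f} per frame")

a770_vs_a750 = premium_pct(cpf["Arc A770 16GB"], cpf["Arc A750"])
print(f"A770 16GB costs {a770_vs_a750:.1f}% more per frame than the A750")
```

To compare against any other card, pass its cost per frame as `reference_cpf`, using whatever price you can actually buy it for.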

What We Learned

The Arc A770 and A750 graphics cards genuinely impressed us on numerous occasions during testing and we believe there's true potential for Intel to become a third contender in the GPU space. It's just not going to happen with Alchemist and realistically that was never going to be possible.

The cost per frame graph above nails it – as in nailed the A770 to the point where it's dead on arrival. That seems a bit harsh as we saw impressive performance in quite a few instances given the price, but there were also plenty of cases where it was anywhere from underwhelming to downright terrible.

Thus it's hard to entertain the idea of paying a premium for the Arc A770 or A750 over established Radeon GPUs. If the RTX 3060 were the lone competitor in the sub-$400 market, then the A770 or A750 might be worth a shot, but unfortunately for Intel, AMD has undercut Nvidia by a substantial margin, making GeForce GPUs largely irrelevant in the sub-$400 market.

Out of interest, we asked on Twitter how much cheaper these Intel Arc GPUs would need to be in order for you to purchase one over an AMD or Nvidia graphics card. The general consensus was that Intel needed to come in at least 20% cheaper, so with the 6650 XT easily available for $300, that means the Arc A770 would have to cost no more than $240 for some of you to go for it, and that's a discount of a little over 30%. That's probably not going to happen as Intel would likely be selling them at a significant loss, but that's the reality of the situation.
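The arithmetic behind that target price is straightforward, and here's a minimal sketch of it. The 20% figure is the rough consensus from our poll, the $300 is the 6650 XT price quoted above, and the function names are purely illustrative.

```python
def target_price(competitor_price: float, discount_pct: float) -> float:
    """Price that undercuts competitor_price by discount_pct percent."""
    return competitor_price * (1 - discount_pct / 100)


def required_cut_pct(current_price: float, new_price: float) -> float:
    """Percentage reduction needed to go from current_price to new_price."""
    return (1 - new_price / current_price) * 100


RX_6650_XT_PRICE = 300  # price quoted for the 6650 XT
A770_16GB_MSRP = 350    # A770 Limited Edition MSRP
POLL_DISCOUNT_PCT = 20  # "at least 20% cheaper" consensus

a770_target = target_price(RX_6650_XT_PRICE, POLL_DISCOUNT_PCT)
a770_cut = required_cut_pct(A770_16GB_MSRP, a770_target)

print(f"A770 target price: ${a770_target:.0f}")
print(f"Required cut from MSRP: {a770_cut:.1f}%")
```

That works out to a $240 target and a cut of roughly 31% from the $350 MSRP.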

The Arc A770 needs to cost $240 and the A750 $220. Anything more than that and you might as well buy from an established brand. Granted, we've yet to properly investigate XeSS, but Nvidia has DLSS and AMD offers FSR, both of which have more supported games at present.

We also haven't had time to test ray tracing performance beyond what we saw in F1 2021 and that's certainly something to explore in the weeks to come. That said, we strongly believe ray tracing at this performance tier is mostly irrelevant as you'd have to compromise on other visual quality settings in order to achieve a 60 fps minimum at low resolutions such as 1080p. We have the same opinion of the 6650 XT and RTX 3060.

Unless Intel is willing to slash prices, we can't recommend you take the gamble on either of the Intel Arc GPUs. In this price range you're better off buying the Radeon 6650 XT.