A while back we reviewed the Nvidia RTX 4090 Laptop GPU, taking a comprehensive look at how it fares in the laptop market. In short, it's a powerful but expensive upgrade over the previous fastest laptop GPU, the RTX 3080 Ti Laptop model. Nvidia definitely made impressive strides in terms of performance per watt with the Ada Lovelace architecture.

But there's another aspect of the RTX 4090 laptop GPU we didn't focus on before, and that's how the two RTX 4090 variants compare: the laptop part and the desktop part. As usual, we did note the deceptive naming scheme, but this review is dedicated solely to looking at just how significant the margin is between these GPUs – and why you might want to spend your hard-earned cash on a desktop instead of a laptop this generation for gaming.

Without even benchmarking, it's readily apparent from looking at the spec sheet that the GeForce RTX 4090 desktop and laptop parts are vastly different.

It goes right back to the GPU die itself: the desktop card uses AD102, an impressive 609 sq mm die with 76 billion transistors. Meanwhile, the laptop model uses AD103, the same die as the RTX 4080, which is 379 sq mm in size with 46 billion transistors. This brings with it a substantial reduction in CUDA core count, dropping from a whopping 16,384 to just 9,728 in the laptop model, along with associated reductions in tensor cores, RT cores and L2 cache.

The memory subsystem is also a lot smaller on the laptop model. We get 16GB of GDDR6 memory on a 256-bit bus, versus 24GB of GDDR6X memory on a 384-bit bus on the desktop.

| | GeForce RTX 4090 Laptop GPU 150W | GeForce RTX 4090 Laptop GPU 80W | GeForce RTX 4090 (Desktop) | GeForce RTX 4080 (Desktop) |
| --- | --- | --- | --- | --- |
| SM Count | 76 | 76 | 128 | 76 |
| Shader Units | 9728 | 9728 | 16384 | 9728 |
| RT Cores | 76 | 76 | 128 | 76 |
| Tensor Cores | 304 | 304 | 512 | 304 |
| GPU Core Boost Clock (MHz) | 2040 | 1455 | 2520 | 2505 |
| VRAM Size and Type | 16GB GDDR6 | 16GB GDDR6 | 24GB GDDR6X | 16GB GDDR6X |
| Memory Clock | 18 Gb/s | ? | 21 Gb/s | 22.4 Gb/s |
| Memory Bus Width | 256-bit | 256-bit | 384-bit | 256-bit |
| Memory Bandwidth | 576 GB/s | ? | 1008 GB/s | 717 GB/s |
| TGP | 150W | 80W | 450W | 320W |
| GPU Die | AD103 | AD103 | AD102 | AD103 |
| GPU Die Size | 379 sq.mm | 379 sq.mm | 609 sq.mm | 379 sq.mm |
| Transistor Count | 46 billion | 46 billion | 76 billion | 46 billion |

The desktop graphics card not only has 50% more VRAM, but 75% more memory bandwidth as well.
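For readers who want to check the bandwidth figures, here is a minimal sketch of the usual peak-bandwidth arithmetic (bus width times per-pin data rate, divided by eight bits per byte). The function name `memory_bandwidth_gbs` is our own for illustration, and the inputs are the bus widths and memory clocks from the spec table.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory bus in bits (e.g. 256).
    data_rate_gbps: per-pin data rate in Gb/s (e.g. 18.0).
    """
    return bus_width_bits * data_rate_gbps / 8  # 8 bits per byte


# Inputs taken from the spec table
laptop_4090 = memory_bandwidth_gbs(256, 18.0)
desktop_4090 = memory_bandwidth_gbs(384, 21.0)
desktop_4080 = memory_bandwidth_gbs(256, 22.4)

# Desktop advantage over the laptop part, expressed as a fraction
bandwidth_advantage = desktop_4090 / laptop_4090 - 1
vram_advantage = 24 / 16 - 1

print(f"Laptop 4090:  {laptop_4090:.0f} GB/s")
print(f"Desktop 4090: {desktop_4090:.0f} GB/s")
print(f"Desktop 4080: {desktop_4080:.0f} GB/s")
print(f"Desktop 4090 bandwidth advantage: {bandwidth_advantage:.0%}")
print(f"Desktop 4090 VRAM advantage: {vram_advantage:.0%}")
```

This reproduces the 576 GB/s and 1008 GB/s figures, and the 717 GB/s listed for the desktop RTX 4080 after rounding.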

And because the laptop GPU is power limited to 150-175W in the best cases, versus a whopping 450W for the desktop card, clock speeds are lower across both the GPU core and memory. The GPU has a boost clock about 500 MHz lower, and memory speeds drop from 21 to 18 Gbps.

All things considered, the laptop chip is trimmed to about 60% of the desktop card across all facets, with clock speeds at about 80% of the desktop card's level. Based on this alone, there is no way the laptop variant and desktop variant will perform anywhere close to each other, so it's highly misleading to give both GPUs basically the same name.
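The laptop-to-desktop ratios behind this comparison can be worked out directly from the spec sheet. The snippet below is only a rough sketch using the 150W laptop configuration and the rated boost clocks; sustained clocks in a real laptop will vary with power limits and cooling, and the dictionary keys are our own labels.

```python
# Spec sheet values for the 150W laptop part and the desktop card
laptop = {"shader_units": 9728, "boost_mhz": 2040, "bus_bits": 256, "vram_gb": 16}
desktop = {"shader_units": 16384, "boost_mhz": 2520, "bus_bits": 384, "vram_gb": 24}

# Laptop value as a fraction of the desktop value, per spec
ratios = {key: laptop[key] / desktop[key] for key in laptop}

for key, ratio in ratios.items():
    print(f"{key:>12}: laptop is {ratio:.0%} of desktop")
```

The shader count lands at roughly 59% and the boost clock at roughly 81%, which is where the "about 60%" and "about 80%" figures come from.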

We could maybe, just maybe cut Nvidia some slack if both the laptop and desktop models used the same GPU die, but that is not the case. And with that, it's time to explore performance...

For testing, on the desktop side we're using a high-end gaming system because that's what the majority of people buying an RTX 4090 graphics card will be using. The test system is powered by a Ryzen 9 7950X, 32GB of DDR5-6000 CL30 memory, the MSI MEG X670E Carbon Wi-Fi motherboard, built inside the Corsair 5000D.

On the laptop side we have the MSI Titan GT77, which has the RTX 4090 Laptop GPU configured to a power limit of 150-175W. There's the Core i9-13950HX processor inside, 64GB of DDR5-4800 CL40 memory, plus plenty of cooling capacity to dissipate over 200W of combined CPU and GPU power.

For this review we'll be comparing performance at 1080p, 1440p and 4K, with an external display used for all configurations. That means that on the laptop side the display is connected directly to the GPU for the best possible performance output.

Also, when testing at 1080p we've increased the power limit on the CPU to 75W to best alleviate the potential CPU bottleneck. Meanwhile, on the desktop, everything is a stock configuration with no overclocking.

Gaming Benchmarks

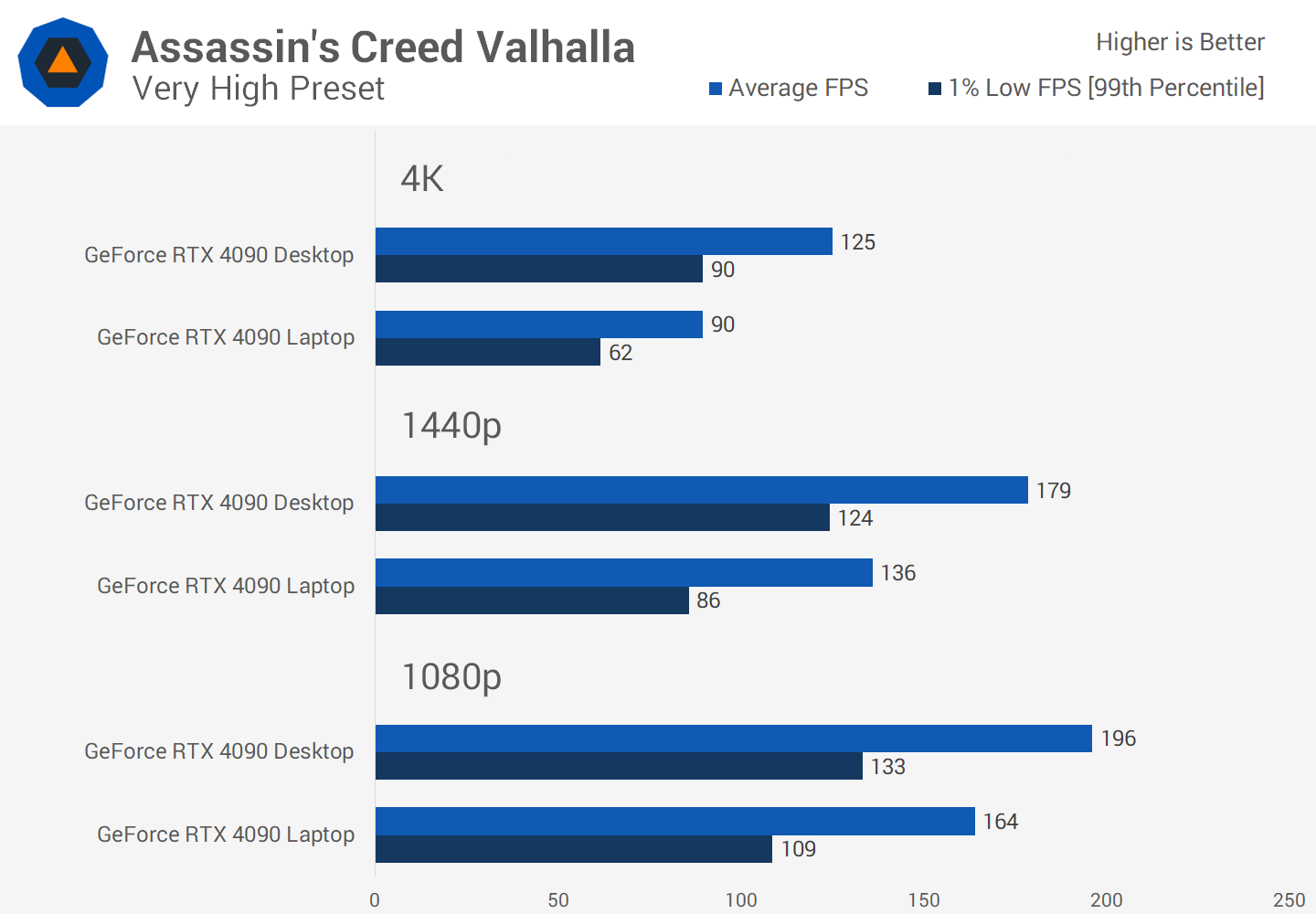

Starting with Assassin's Creed Valhalla at 1080p, we see that the RTX 4090 desktop delivers 20% better performance than the laptop, which is helped by a significant contribution from the faster desktop CPU.

As we get more GPU limited, the margin between the two GPUs grows: there's a 31% difference at 1440p, and 39% at 4K in favor of the desktop graphics card. 1% lows are consistently higher as well, again due to the extra CPU headroom available in the desktop system.

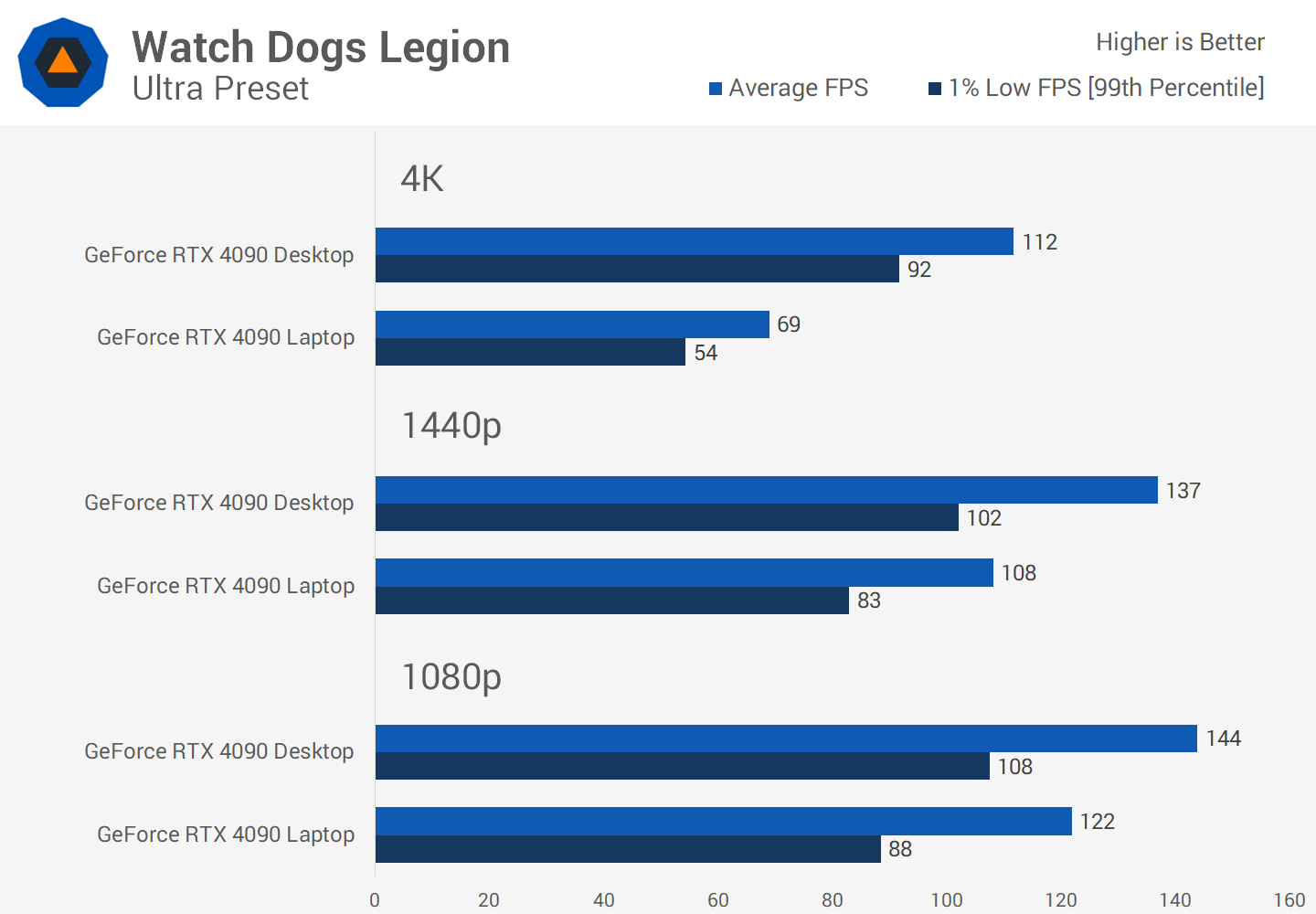

Watch Dogs Legion results are interesting. At 1080p we're looking at similar margins to Assassin's Creed, with the desktop 18 percent ahead. At 1440p it's a smaller margin than seen previously, with the desktop 4090 coming in 27 percent faster, but then at 4K the beefy 450W GPU is able to fully flex its muscles and sit a huge 62 percent faster.

This is the difference between an experience around 70 FPS and around 110 FPS, which is huge for those using a high refresh rate 4K display.

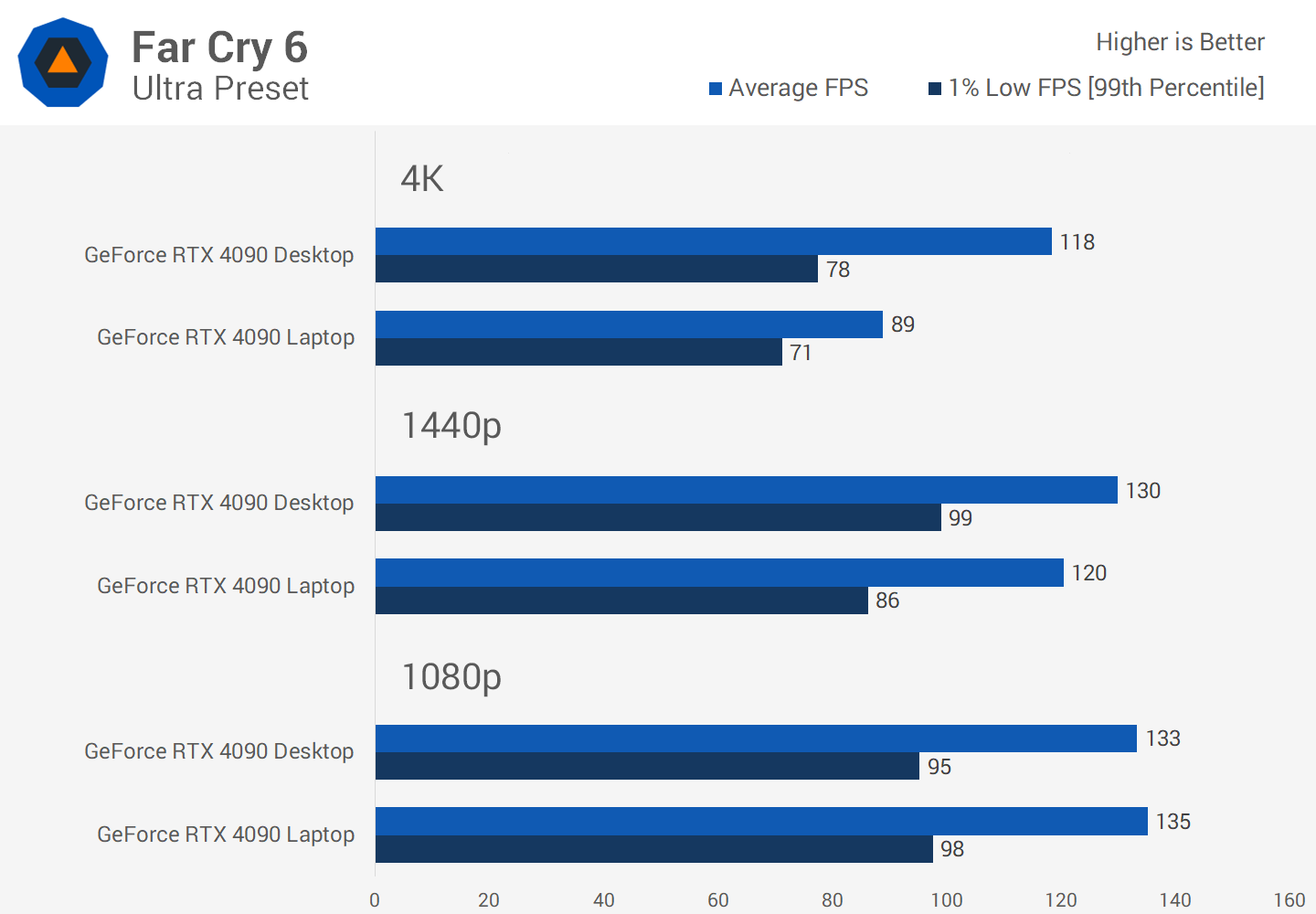

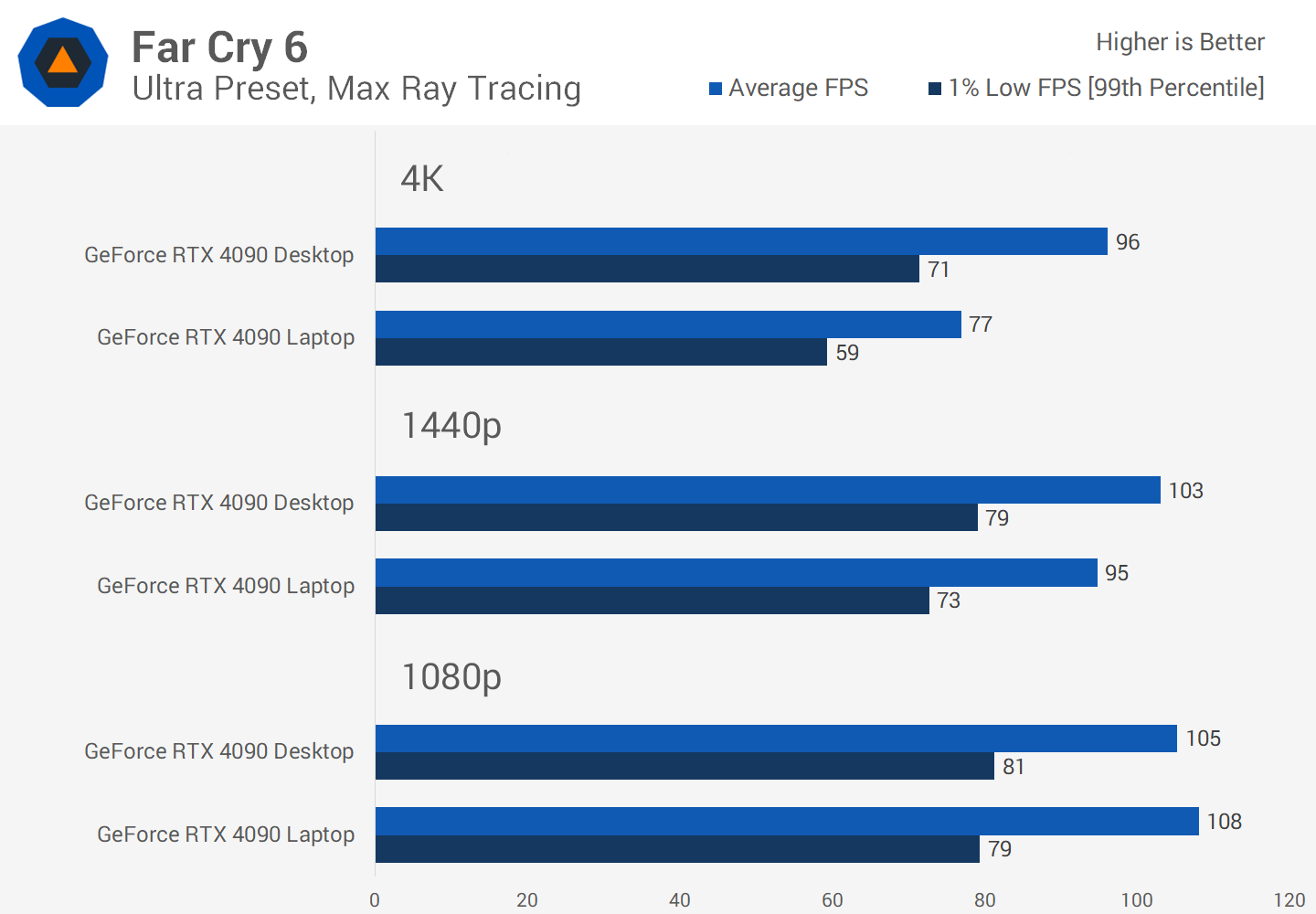

Far Cry 6 is one of the more unusual cases here. At 1080p we see virtually identical performance between the desktop and laptop models because we're fully CPU limited and the Core i9-13950HX is able to play to its strengths.

At 1440p we also don't see much of an advantage for the desktop GPU, coming in just 8 percent faster on average, with 15% higher 1% lows. But then at 4K the margin increases to 33 percent overall. Not the biggest delta but certainly a favorable result for the desktop card.

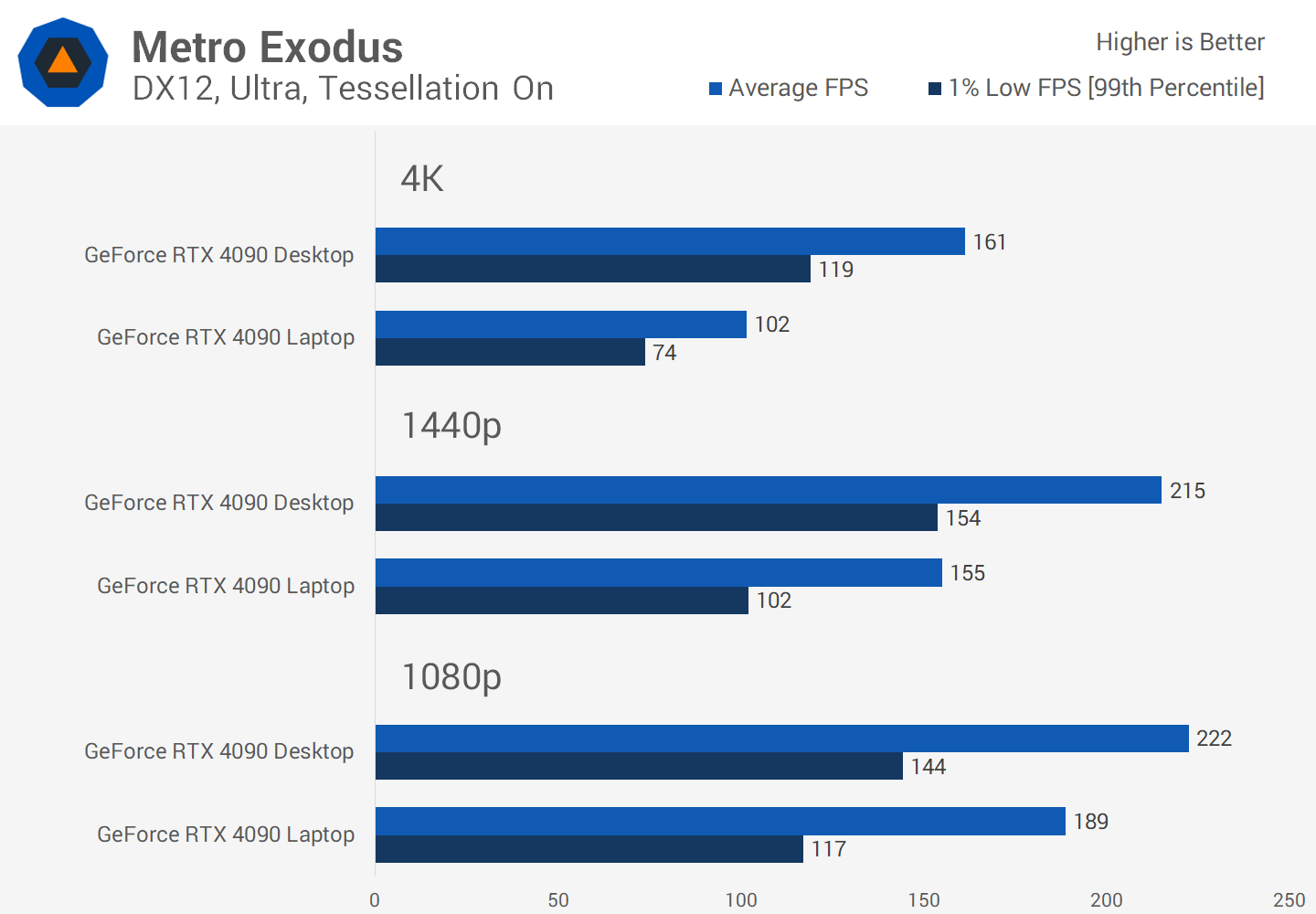

Metro Exodus is a typical showing. At 1080p the margin between the desktop and laptop variants sits at 18 percent, similar to some of the other games seen so far. At 1440p this margin increases to 39% in favor of the desktop card, then 59 percent at 4K – you'd definitely want to be using the desktop model at this high resolution.

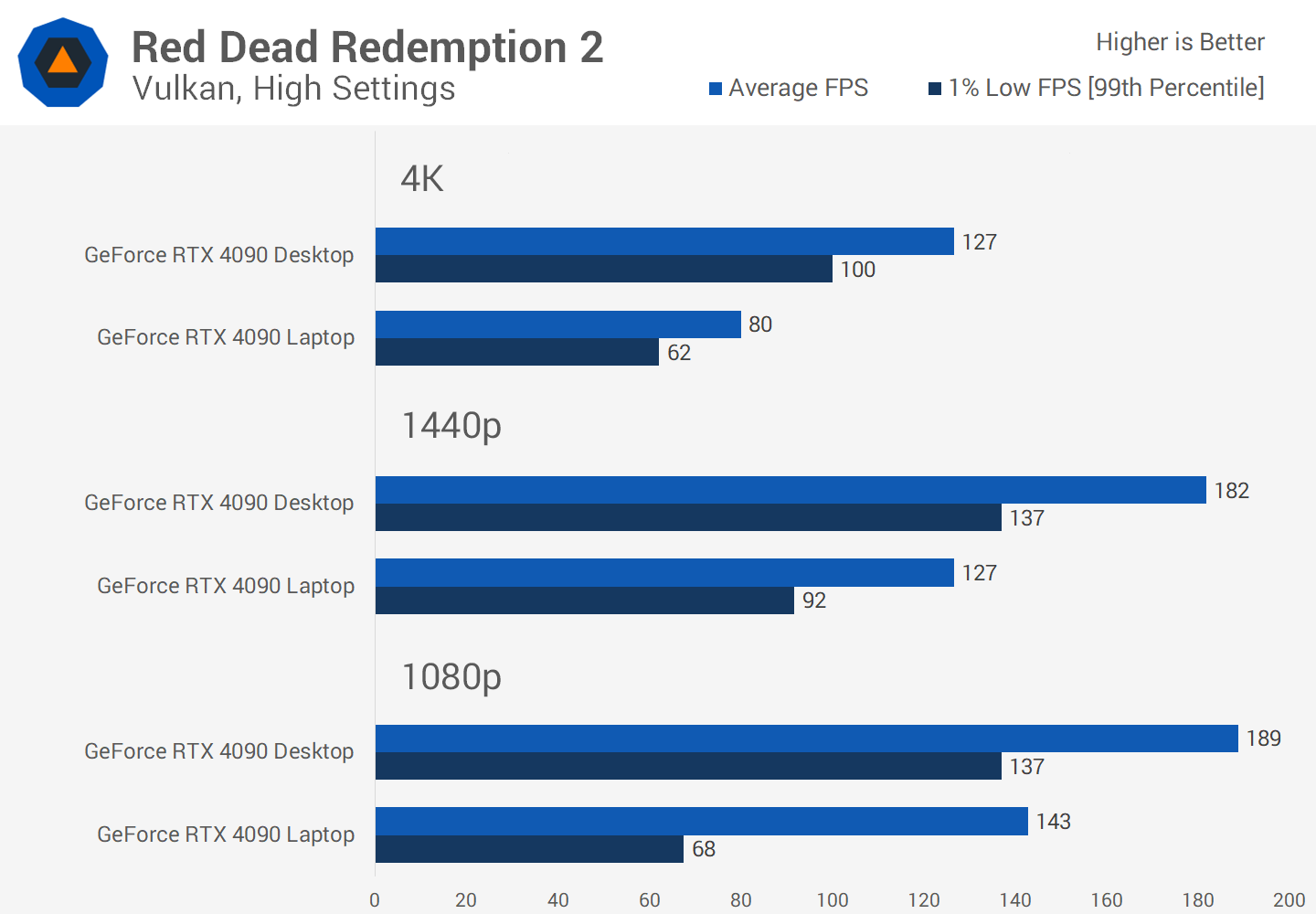

Red Dead Redemption 2 was one of the least CPU limited titles at 1080p, giving the desktop card 32 percent higher performance at the lowest resolution tested. This increased to a 44 percent margin at 1440p, then a 58 percent margin at 4K, again showing the relative slowness of the laptop model when fully GPU limited.

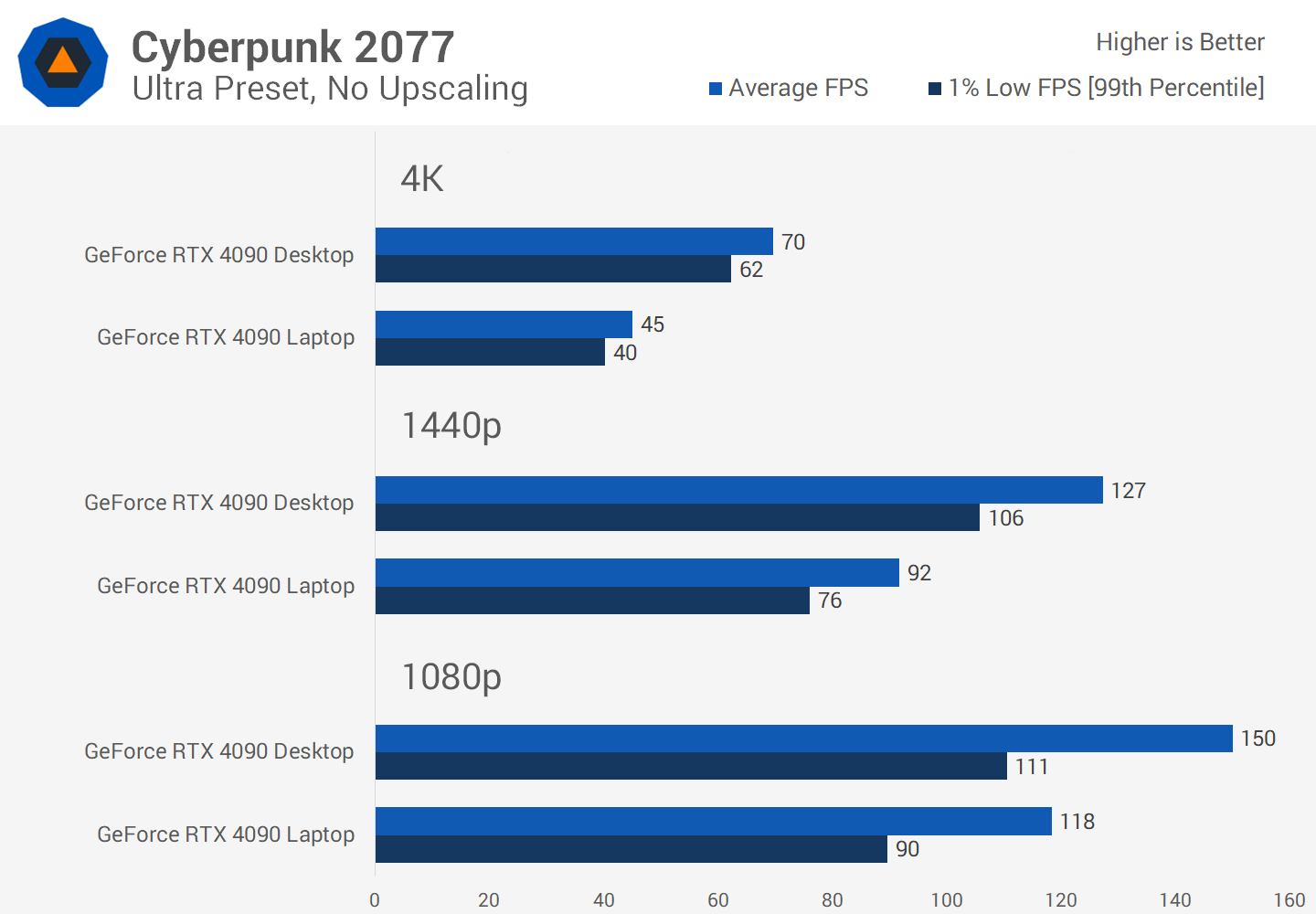

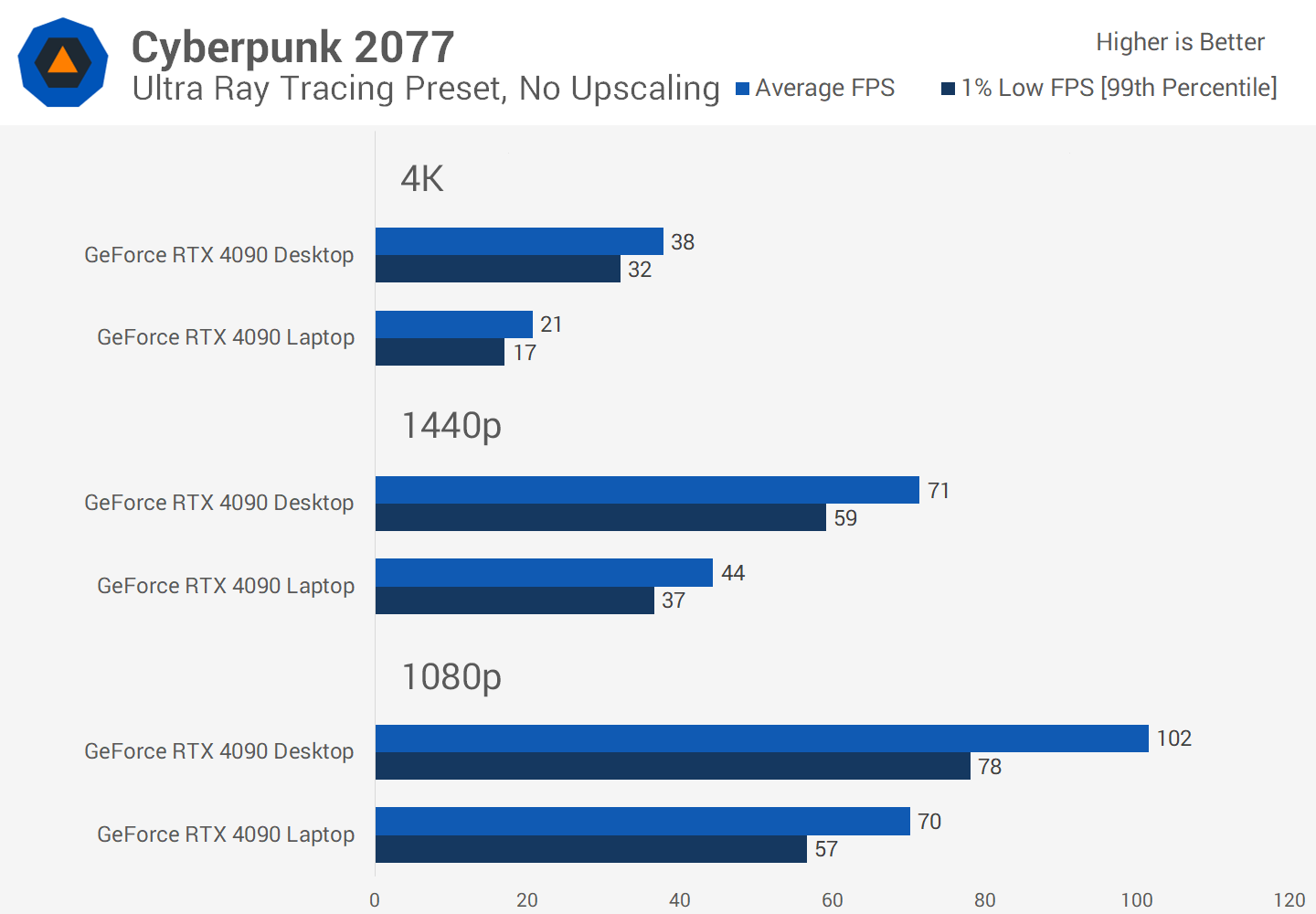

Cyberpunk 2077 using the Ultra preset without ray tracing or upscaling is quite favorable to the desktop RTX 4090. At 1080p it was 27% faster than the laptop GPU, which then grew to 39% at 1440p, and 55% at 4K.

With the frame rates seen here this is quite significant: it's the difference between 45 FPS at 4K on the laptop versus 70 FPS on the desktop – with the desktop being much more playable at that frame rate.

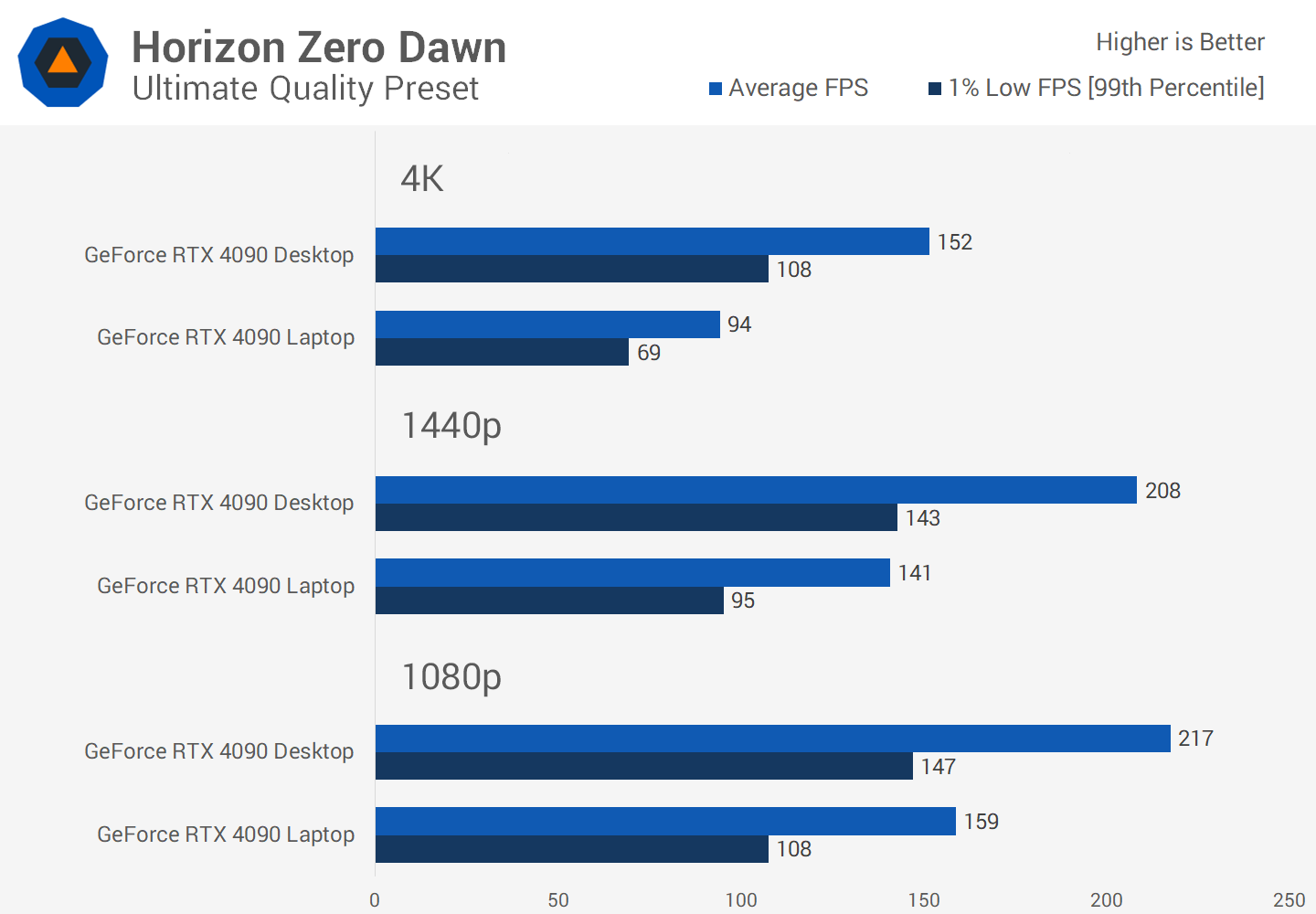

Next up is Horizon Zero Dawn, which showed a 37 percent margin between the two models at 1080p, a 48 percent margin at 1440p and a 61 percent margin at 4K, so at all three resolutions there is a strong performance lead for the desktop card.

Forza Horizon 5 is showing modestly interesting results. It didn't have the lowest margins between the desktop and laptop at 1080p or 1440p; the numbers here are decent, with a 26% lead at 1080p and a 38 percent lead at 1440p for the desktop.

But at 4K the margin is a bit lower than expected, just 41 percent in favor of the desktop model which is lower than the average and a relatively good result for the laptop variant.

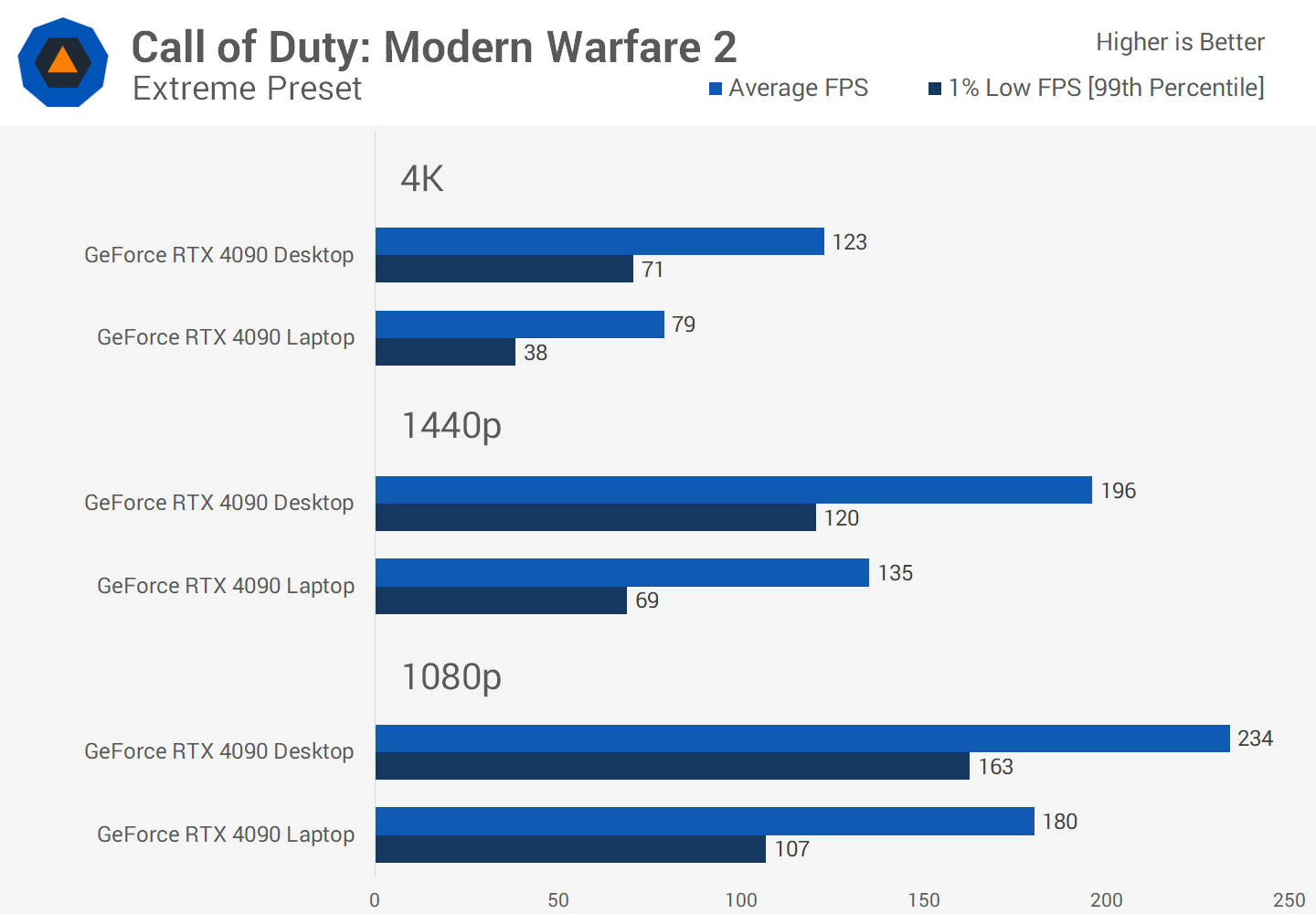

Gamers playing Modern Warfare 2 will definitely want the desktop configuration as it's quite a bit faster at all resolutions using Extreme settings, with similar margins seen using Basic settings. It's 30 percent faster at 1080p, 45 percent faster at 1440p and 55 percent faster at 4K, with much stronger 1% lows at all resolutions as well.

The latency difference between gaming at 80 FPS and 120 FPS is something that I can notice and I'm not really a hardcore multiplayer gamer, but I suspect most serious multiplayer fans would much prefer a desktop setup.
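To put that 80 FPS versus 120 FPS gap into perspective, here is a quick frame time conversion. This is only a simplified illustration: frame time is one component of end-to-end input latency, not the whole picture, and the helper name `frame_time_ms` is ours.

```python
def frame_time_ms(fps: float) -> float:
    """Average time spent on each frame, in milliseconds."""
    return 1000.0 / fps


laptop_frame_time = frame_time_ms(80)
desktop_frame_time = frame_time_ms(120)
saving = laptop_frame_time - desktop_frame_time

print(f"80 FPS:  {laptop_frame_time:.2f} ms per frame")
print(f"120 FPS: {desktop_frame_time:.2f} ms per frame")
print(f"Difference: {saving:.2f} ms per frame")
```

That works out to a little over 4 ms saved on every frame at the higher frame rate.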

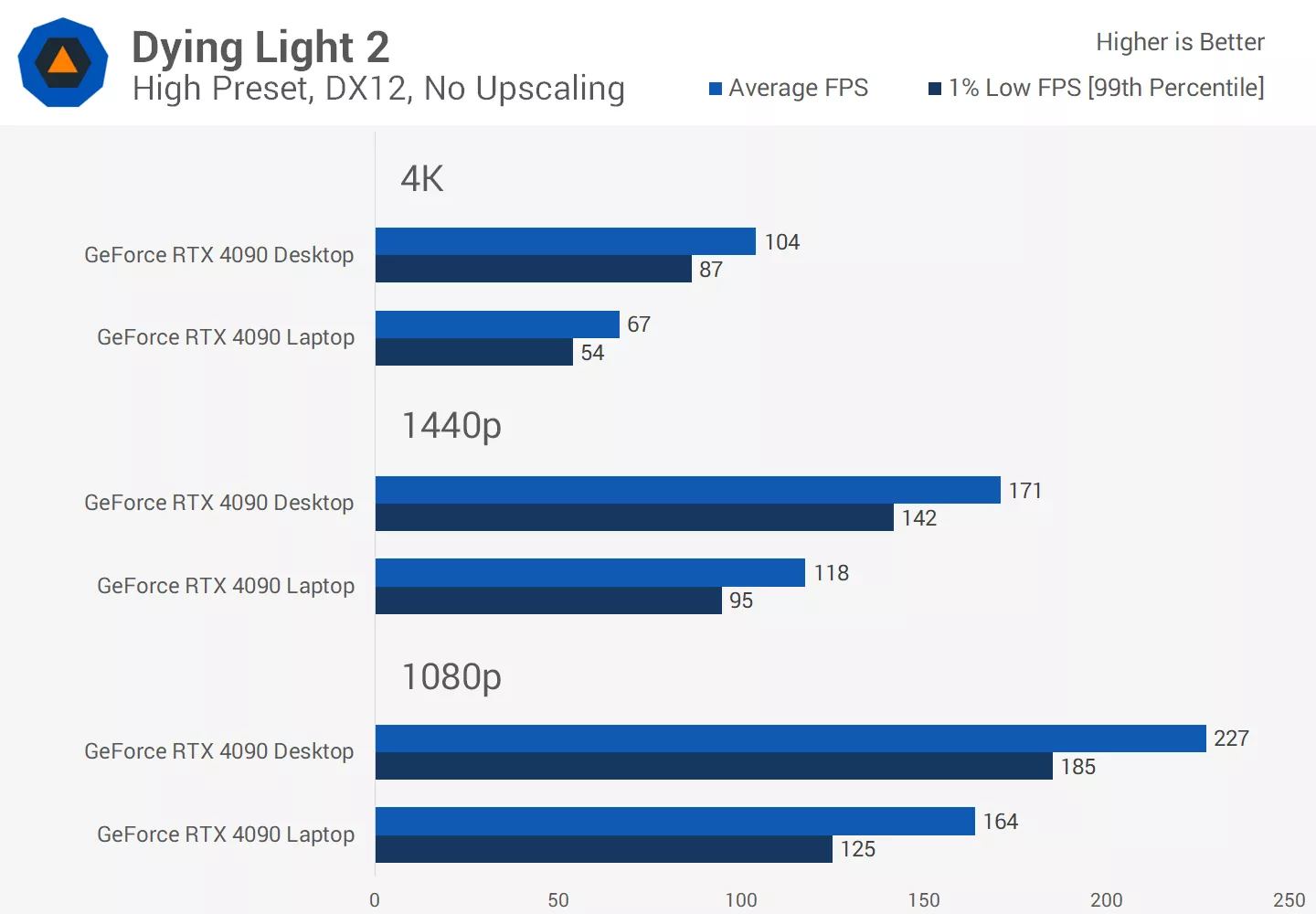

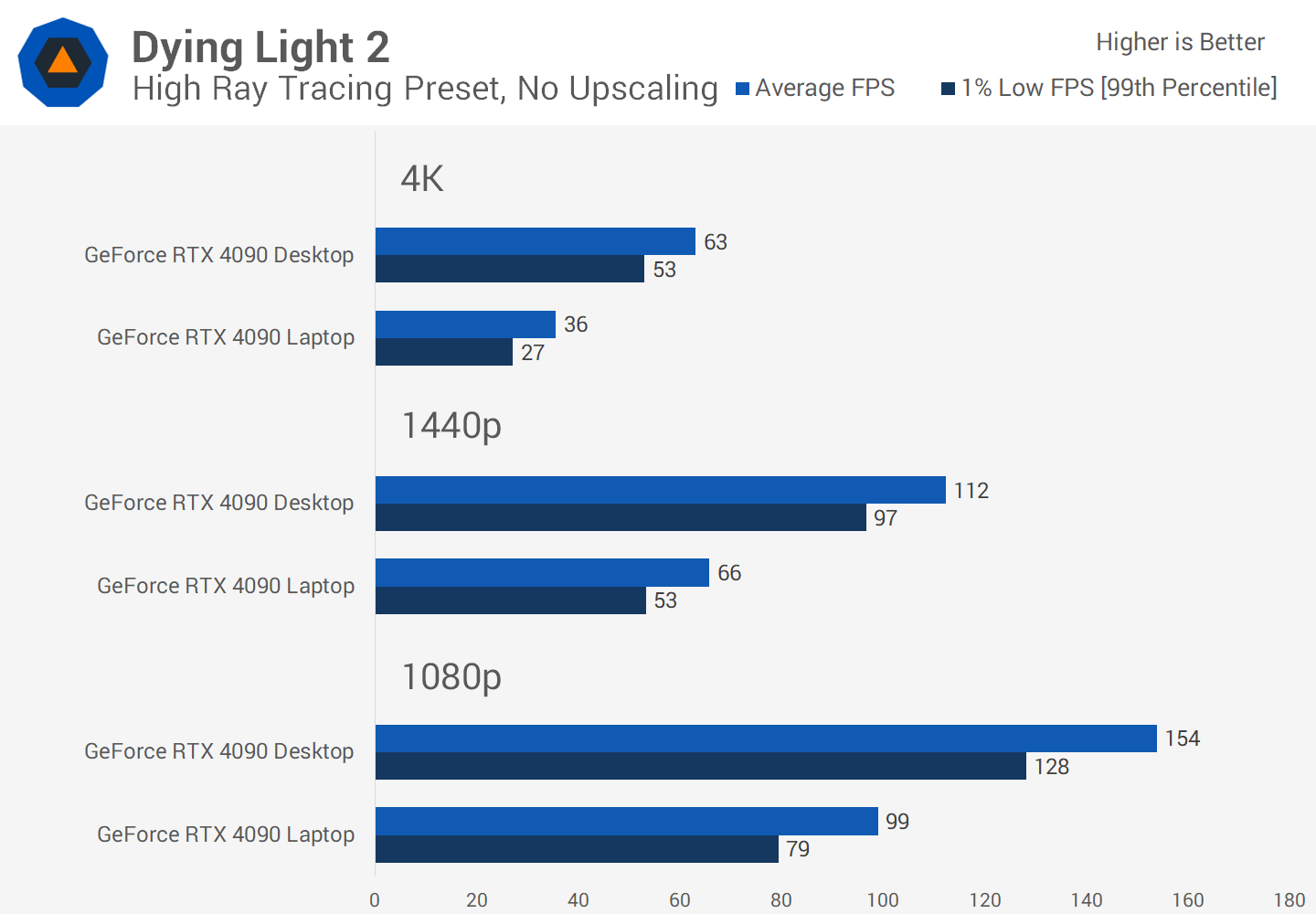

In Dying Light 2 the game was pretty easily GPU bottlenecked most of the time even using rasterization. At 1080p the desktop card was 39 percent faster, a margin that grew to 45% at 1440p and 56 percent at 4K. While the laptop model is playable at 4K using these settings, the desktop is capable of a more high refresh rate experience.

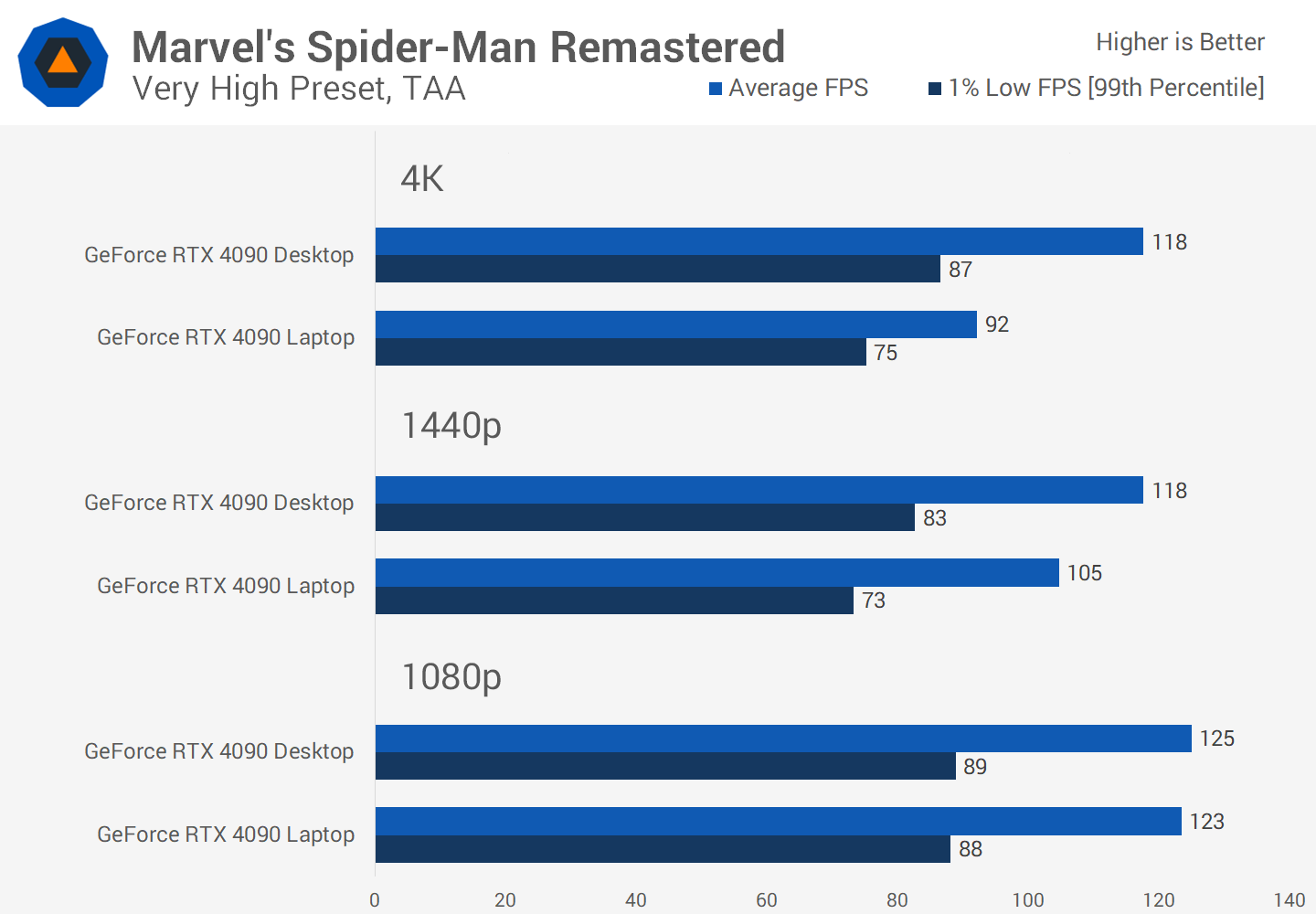

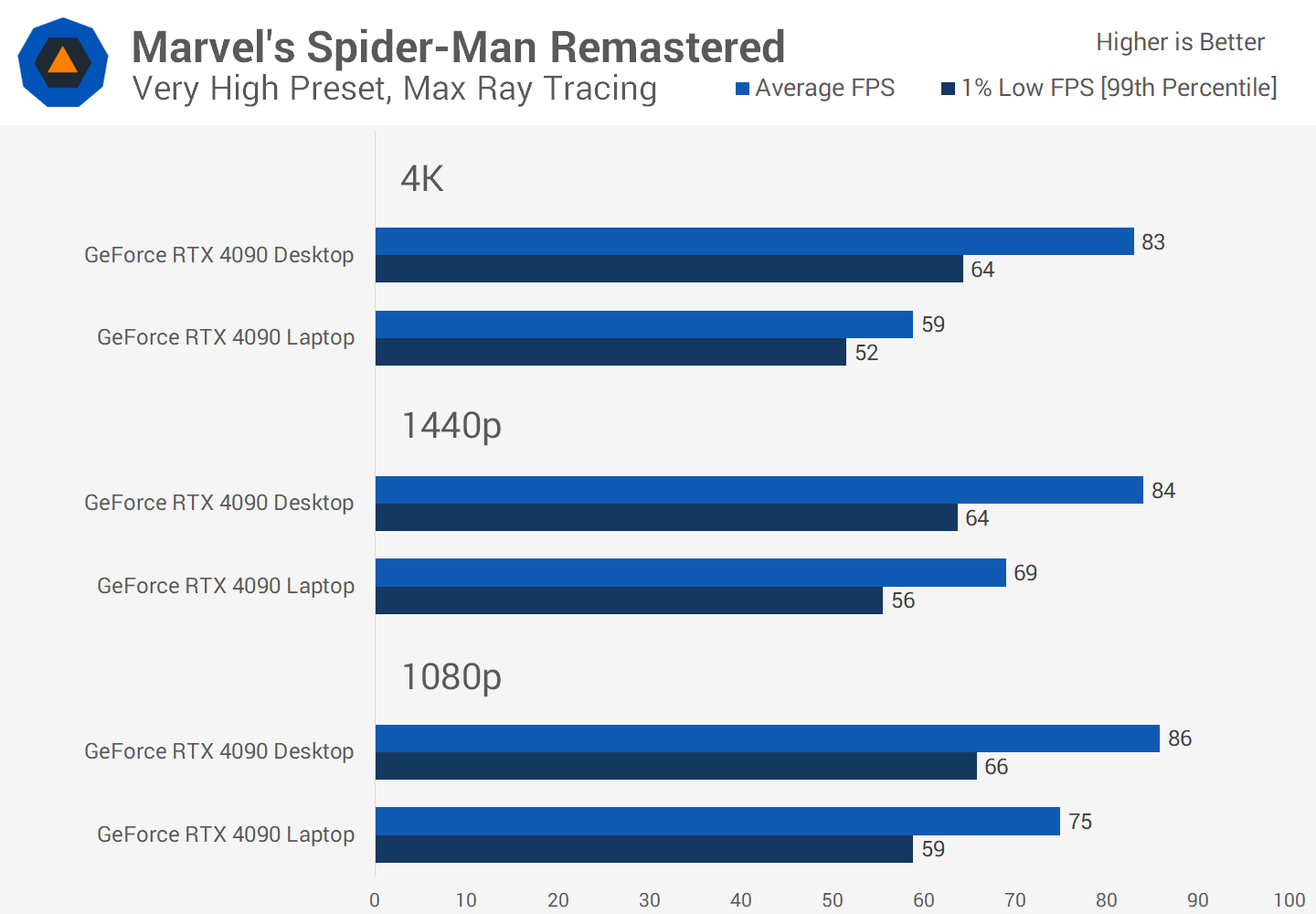

Perhaps the worst showing for the desktop configuration and therefore the best for the laptop was Spider-Man Remastered. At 1080p the game is so CPU bottlenecked there was no substantial performance difference between the desktop and laptop models.

At 1440p the desktop card was only 12 percent faster, and even at 4K it held just a 28 percent margin, which is the slimmest of any rasterized game we tested. The desktop configuration is still preferable, but laptop gamers will be happy to know they are getting a more than reasonable level of performance.

Ray Tracing Performance

The differences between these two GPUs don't change substantially when looking at ray tracing. Far Cry 6, for example, remains very similar between both variants at 1080p and 1440p, while at 4K the desktop configuration is only 25% faster. A very favorable game for the laptop and largely CPU limited on desktop...

In other titles the difference can be massive.

In Cyberpunk 2077 using the ultra ray tracing preset and no upscaling, the RTX 4090 desktop card is 45 percent faster at 1080p, 61 percent faster at 1440p and a whopping 83 percent faster at 4K.

This is the difference between playable and unplayable performance. The laptop model is only good for 21 FPS at 4K, which isn't fixable through upscaling without dropping other quality settings if you are targeting 60 FPS.

The desktop card can do 38 FPS here which is not amazing but still playable with DLSS Balanced settings.

Dying Light 2 with ray tracing also saw huge margins in favor of the desktop card. 55% faster at 1080p, 71% faster at 1440p, and 77% faster at 4K is huge, and completely changes the discussion around what sort of settings are playable.

The laptop GPU delivers an acceptable 65 FPS at 1440p using these settings, but the desktop card can offer similar performance at a higher resolution, or a much higher frame rate at the same resolution. It's a night and day difference in this game.

What isn't a night and day difference is the ray tracing performance in Spider-Man Remastered. While the margins here are larger than without using ray tracing, the desktop card is just 15% faster at 1080p, 22 percent faster at 1440p, and 41 percent faster at 4K.

Ray tracing in this title is extremely CPU intensive which we suspect is reducing the margins somewhat compared to being fully GPU limited, like we saw in Dying Light or Cyberpunk.

Performance Summary

RTX 4090 Laptop vs. RTX 4090 Desktop in 20 Games

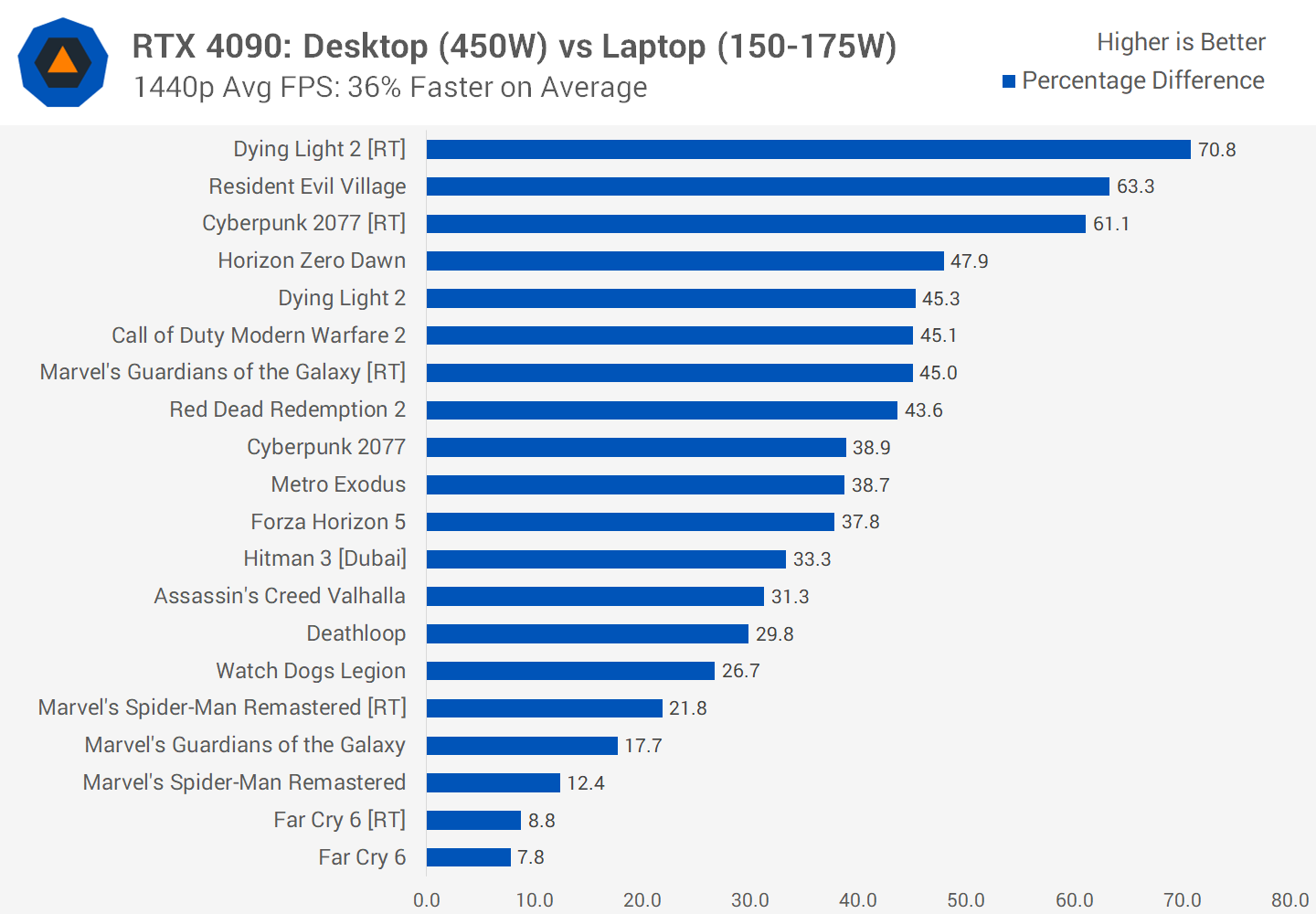

Looking across all game configurations that we tested, including a handful of ray tracing results, the RTX 4090 desktop GPU is obviously the faster variant at 1440p.

On average, the desktop part was 36 percent faster than the laptop running at 150W, although there is a significant spread of results as some games are quite heavily CPU limited, while others are GPU limited.

Far Cry 6 and Spider-Man Remastered, for example, don't benefit significantly from the desktop configuration, while games like Red Dead Redemption 2, Cyberpunk 2077 and Resident Evil Village all benefit hugely from the desktop card.

This margin grows when playing games at 4K as we become almost entirely GPU limited across the suite of games tested.

On average, the desktop configuration offers 56 percent more performance than the laptop card, which is enormous for two GPUs that have the same name.

This includes some especially massive results in Cyberpunk and Dying Light 2 ray tracing, and some less impressive gains in titles like Valhalla and Spider-Man.
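To give a feel for how wide the 4K spread is, the sketch below summarizes only the 15 margins quoted in the text of this review (11 rasterized and 4 ray tracing results). Because the full suite covers 20 configurations, this subset is not expected to reproduce the 56 percent average shown in the chart, and a simple arithmetic mean is our assumption rather than a description of how the chart average was calculated.

```python
from statistics import mean

# Desktop lead at 4K in percent, as quoted in the text (subset of the full suite)
margins_4k = {
    "Assassin's Creed Valhalla": 39,
    "Watch Dogs Legion": 62,
    "Far Cry 6": 33,
    "Metro Exodus": 59,
    "Red Dead Redemption 2": 58,
    "Cyberpunk 2077": 55,
    "Horizon Zero Dawn": 61,
    "Forza Horizon 5": 41,
    "Modern Warfare 2": 55,
    "Dying Light 2": 56,
    "Spider-Man Remastered": 28,
    "Far Cry 6 (RT)": 25,
    "Cyberpunk 2077 (RT)": 83,
    "Dying Light 2 (RT)": 77,
    "Spider-Man Remastered (RT)": 41,
}

smallest = min(margins_4k, key=margins_4k.get)
largest = max(margins_4k, key=margins_4k.get)
average = mean(margins_4k.values())

print(f"Smallest lead: {smallest} ({margins_4k[smallest]}%)")
print(f"Largest lead:  {largest} ({margins_4k[largest]}%)")
print(f"Mean of quoted results: {average:.1f}%")
```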

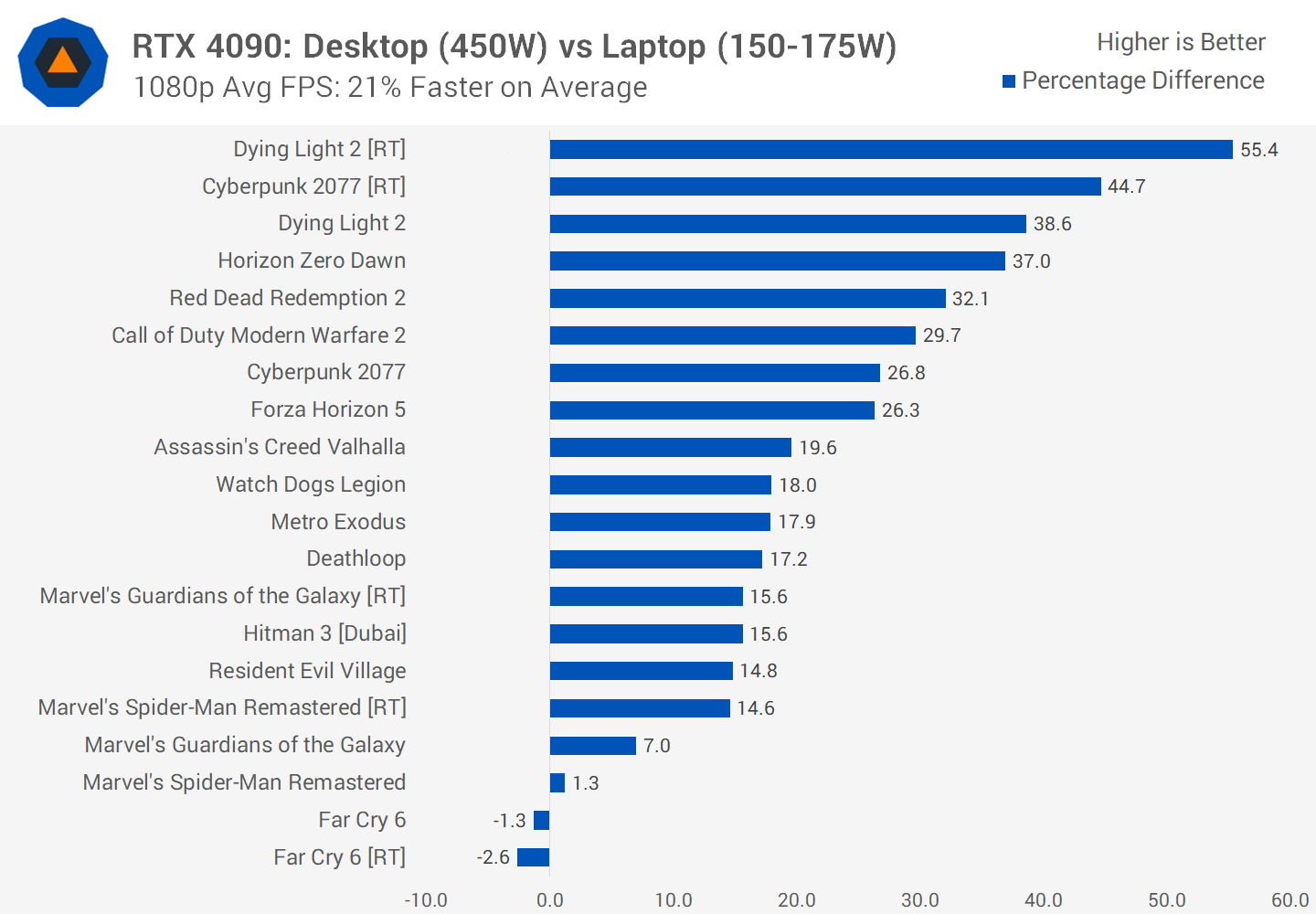

Meanwhile, at 1080p the margins between the desktop and laptop card are much less pronounced. On average, the desktop card was just 21 percent faster – still a significant margin – but this included four test scenarios with a margin less than 10 percent.

Some games are still very GPU demanding at 1080p and they saw the biggest margins in excess of 30 percent, but it's clear in CPU limited scenarios that the difference between a flagship desktop CPU and a flagship laptop CPU is smaller than the difference on the GPU side.

What We Learned

After evaluating the differences between the RTX 4090 for desktops and the RTX 4090 for laptops, it becomes abundantly clear why Nvidia should not have given the laptop model virtually the same name as the desktop model. The laptop variant is substantially slower when GPU limited, to the point where the desktop card offers one to two tiers of extra performance. This could be the difference between a 60 FPS experience at a given resolution, and a 100+ FPS high refresh rate experience.

The margins are particularly large at 4K with ray tracing enabled, something we suspect owners of a flagship GPU might be interested in exploring.

Even in the worst case scenarios for the desktop GPU, like 1080p gaming, the desktop GPU is still faster to a notable degree, and it's only a small handful of titles where you could say the two configurations are tied – largely due to CPU limitations.

Certainly the 4K results are most representative of the real differences between the GPU variants: the laptop model is about 60% the size of the desktop card in terms of hardware configuration, and it delivers 64% of the performance.
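It's worth spelling out how a "percent faster" figure for the desktop converts into the laptop's share of desktop performance, since the two numbers are easy to mix up. A minimal sketch, with `relative_performance` being our own helper name:

```python
def relative_performance(percent_faster: float) -> float:
    """Slower part's performance as a fraction of the faster part.

    percent_faster: how much faster the faster part is, in percent.
    """
    return 1 / (1 + percent_faster / 100)


# Average desktop leads reported in the performance summary
for resolution, lead in (("1080p", 21), ("1440p", 36), ("4K", 56)):
    share = relative_performance(lead)
    print(f"{resolution}: desktop {lead}% faster -> laptop at {share:.0%} of desktop")
```

At 4K, a 56 percent lead for the desktop means the laptop delivers about 64% of the desktop's frame rate. By the same math, a 36 percent desktop lead at 1440p puts the laptop at roughly 74%, which is about 26 percent slower rather than 36 percent slower.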


Of course, it's hardly a fair battle to pit a gaming laptop with about 250W of total power capabilities up against a desktop GPU that alone consumes above 400W in some games – it's completely unrealistic to expect the most powerful desktop card to fit into a laptop form factor.

But while we know it's unrealistic, there are casual buyers out there who may be misled into genuinely believing the desktop and laptop RTX 4090s are effectively identical when that couldn't be further from the truth.

We think Nvidia could simply admit that it's not possible to put their flagship desktop GPU into a laptop, and give the laptop GPU a slightly different name. This should be an RTX 4090M – or even better, the RTX 4080M – because then we would be matching the desktop model in GPU die and have the M suffix to make it clear it's clocked lower and power limited relative to the desktop card.

The reason why we believe Nvidia doesn't do that and is giving the desktop and laptop GPUs the same name, is that it allows laptop vendors to overprice their gaming laptops and put them on a similar pricing tier to a much more powerful desktop.

For example, high-end gaming desktops using the RTX 4090 are priced around $3,500, while the most affordable RTX 4090 gaming laptops are around $3,200 as of writing. Were this laptop GPU actually named the RTX 4080M, it would look a bit silly being priced at $3,500 up against a full RTX 4090 desktop.

We should also note that the margin between desktop and laptop variants of the same GPU (name) has grown over the years. With the GeForce RTX 3080, the desktop card was 33% faster than a 135W laptop variant at 1440p. Now with the RTX 4090, the desktop card is at least 40% faster when not CPU limited at 1440p, and closer to 55% faster at 4K.


That's because the hardware gap between laptop and desktop has been growing as has power consumption: laptops have stayed relatively flat, topping out around 150W on the GPU, while desktop graphics cards have shot up to 450W. It's very hard for a laptop variant to keep up.

Bottom line, the GeForce RTX 4090 desktop is much faster as expected and also a better value than the laptop variant. It is still impressive to a certain degree that the desktop GPU is only 36 percent faster on average at 1440p, while the laptop variant consumes way less than half the power. The RTX 4090 Laptop is clearly far more efficient, while the desktop card is running well outside the efficiency window at ridiculous levels of power. You have to commend Nvidia for delivering a huge uplift in performance per watt in Ada versus Ampere, but it would have been nicer if it was priced more appropriately.
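As a back-of-the-envelope illustration of that efficiency gap, the sketch below combines the average desktop leads from our summary with the rated TGP of each part (150W and 450W). Rated TGP is an assumption standing in for measured power draw, and actual consumption varies by game and resolution, so treat the output as a rough estimate rather than a measurement.

```python
def laptop_efficiency_advantage(desktop_lead_percent: float,
                                laptop_watts: float,
                                desktop_watts: float) -> float:
    """Laptop performance per watt relative to the desktop (1.0 means equal)."""
    laptop_perf = 1 / (1 + desktop_lead_percent / 100)  # desktop performance = 1.0
    laptop_perf_per_watt = laptop_perf / laptop_watts
    desktop_perf_per_watt = 1.0 / desktop_watts
    return laptop_perf_per_watt / desktop_perf_per_watt


# Rated TGP values from the spec table, used as a stand-in for real power draw
at_1440p = laptop_efficiency_advantage(36, laptop_watts=150, desktop_watts=450)
at_4k = laptop_efficiency_advantage(56, laptop_watts=150, desktop_watts=450)

print(f"1440p: laptop delivers about {at_1440p:.1f}x the performance per watt")
print(f"4K:    laptop delivers about {at_4k:.1f}x the performance per watt")
```

Under these assumptions the laptop GPU comes out at roughly twice the performance per watt of the desktop card at either resolution.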

