PC gamers have been hearing a lot about VRAM as of late, a topic that has stirred up a lot of controversy over the past few weeks. For the purpose of testing VRAM usage in games, we are taking an updated look at the GeForce RTX 3070 and Radeon RX 6800 GPUs in recently released titles to see what's what.

But before we get into all the testing, here's some backstory...

Back in late 2020, Nvidia released the GeForce RTX 3070 for $500, and at the time this was an impressively fast GPU. We came away impressed, stating in our day-one review that overall the RTX 3070 was a great value product, though we cautioned gamers that AMD was about to release its direct competitor in a matter of weeks. That competition turned out to be the Radeon RX 6800 and the 6700 XT: the former was $80 more expensive than the GeForce, while the 6700 XT was $20 cheaper.

We also noted that the GeForce RTX 3070 was the new and much more affordable RTX 2080 Ti, with the exception of the missing 3GB of VRAM – and VRAM was something we noted several times in that review.

The concern was that 8GB at this performance tier might not age as well, but we were impressed with what the RTX 3070 was delivering at the $500 price point, so it was a very positive review overall.

A few weeks later we received AMD's Radeon RX 6800 and we noted this at the end of our review:

"As good as the RTX 3070 performance is, we feel that even for gaming at 1440p, that 8GB VRAM buffer is going to be less than ideal in the not so distant future. It's perfectly acceptable on mainstream bound $300 (or cheaper) cards, but for $500, you could do a little better."

At the time ray tracing support in games was still limited and most of the few examples were quite poor. DLSS 2.0 support was also limited, though we noted that was a really nice feature.

We concluded the Radeon review by saying "for an extra $80, there's now the option of the Radeon RX 6800 which packs twice as much VRAM. Despite costing 16% more, the Radeon is already offering gamers around 16% more performance on average."
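For anyone who wants to check that math, here's a minimal Python sketch of the value comparison. The $500 and $580 launch prices and the ~16% performance uplift come from the reviews quoted above; the function names are hypothetical and purely illustrative.

```python
def percent_more(a: float, b: float) -> float:
    """Return how much larger a is than b, as a percentage of b."""
    return (a - b) / b * 100.0


def relative_value(perf_gain_pct: float, price_premium_pct: float) -> float:
    """Performance per dollar of the pricier card relative to the cheaper one (1.0 = parity)."""
    return (1 + perf_gain_pct / 100.0) / (1 + price_premium_pct / 100.0)


RTX_3070_PRICE = 500.0  # launch price cited in the article
RX_6800_PRICE = 580.0   # $80 more, per the article

premium = percent_more(RX_6800_PRICE, RTX_3070_PRICE)
value = relative_value(16.0, premium)  # 16% average performance uplift, per the review
print(f"Price premium: {premium:.0f}%")
print(f"Relative performance per dollar: {value:.2f}")
```

With a 16% price premium and a 16% performance uplift, performance per dollar comes out at parity, which is why the extra VRAM was the deciding factor in that conclusion.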

A month later we provided a 40 game benchmark comparing the two GPUs and there was a long conclusion where we voiced concern about the more limited VRAM buffer of the RTX 3070, commenting that "in two years or so, the Radeon 6800 may be better equipped to deliver better image quality simply because it can take advantage of massive texture packs, however at present the GeForce does well enough in a wide majority of scenarios."

But those were not the only points of contention. The GeForce was less expensive – though GPUs in general were not widely available then – and DLSS was a key selling point, while FSR was not even part of the picture.

But here we are, two and a half years since the release of the RTX 3070 and in the past few months the cracks have started to show. That's not to say the 3070 hasn't been an excellent graphics card, and for the most part it will continue to be one, but it's now more evident that the Radeon 6800 is aging better, and that's what we'll be looking at today.

All of today's testing was conducted on the AM5 platform using the Ryzen 7 7800X3D with 32GB of DDR5-6000 CL30 memory using the latest display drivers. There's a lot to go over, so let's get into it...

Benchmarks

The Last of Us

Let's start with everyone's favorite well-optimized game, The Last of Us Part 1. It's fair to say there is some optimization work that can be done here, probably more on the CPU usage side of things though, and some work on texture quality at the lower settings needs to be done, as it really only looks great on ultra and high, but at least performance scales well as you lower settings.

That said, if you have 12GB or more VRAM, 32GB of system memory and a decent processor it will play smoothly using the ultra settings – for the most part – and we found the campaign very enjoyable using the Radeon 6800 on our 7800X3D test system.

The Last of Us Part 1 is yet another new game that doesn't play nice with 8GB graphics cards when cranking up the visuals. It's not a one-off, and we believe we're going to see more examples of this before year's end.

We also believe if everyone had 16GB of VRAM there would be very few performance complaints. Maxed out, the RX 6800 is buttery smooth... just look at this live frame time graph, it's a thing of beauty.

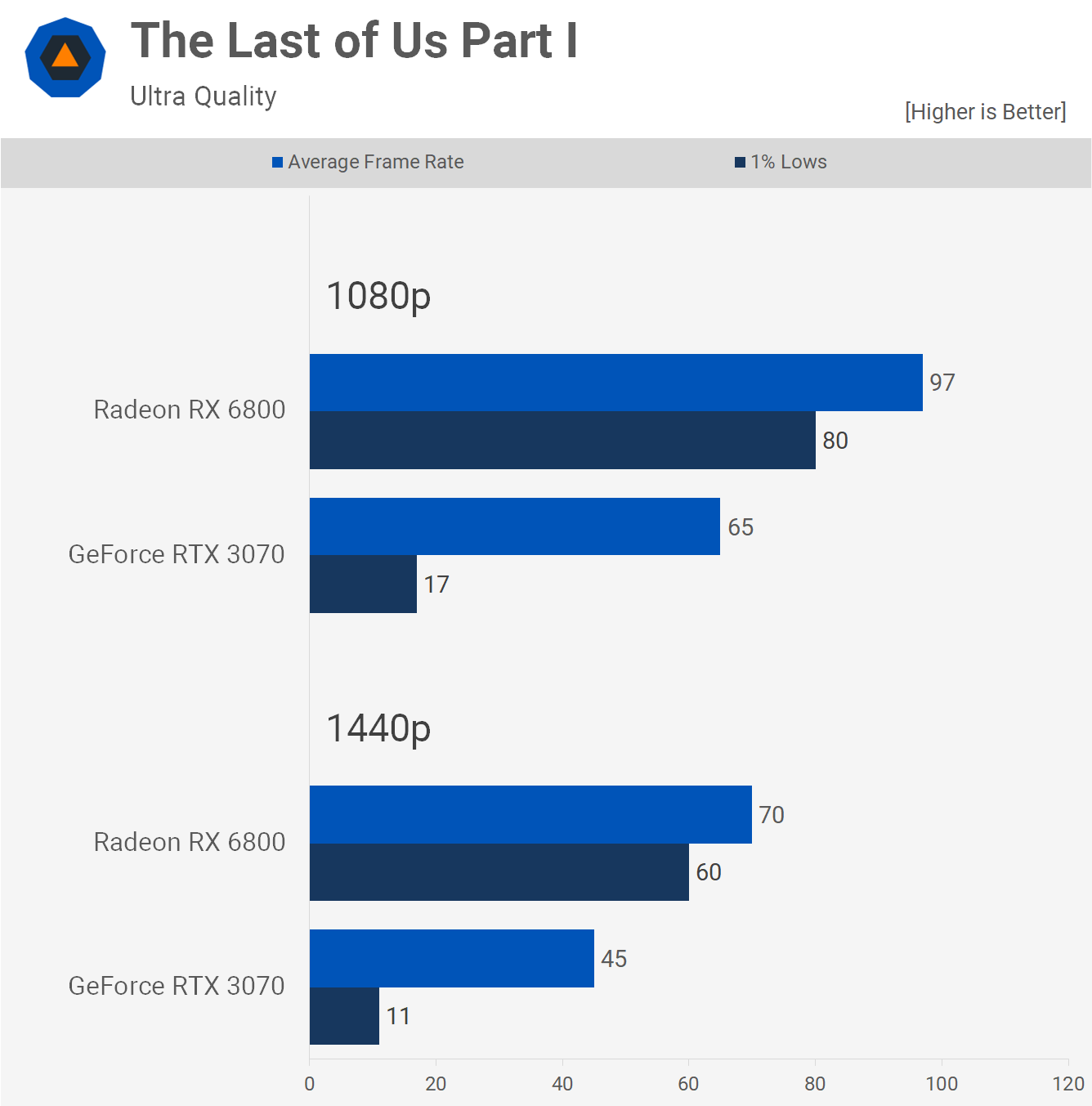

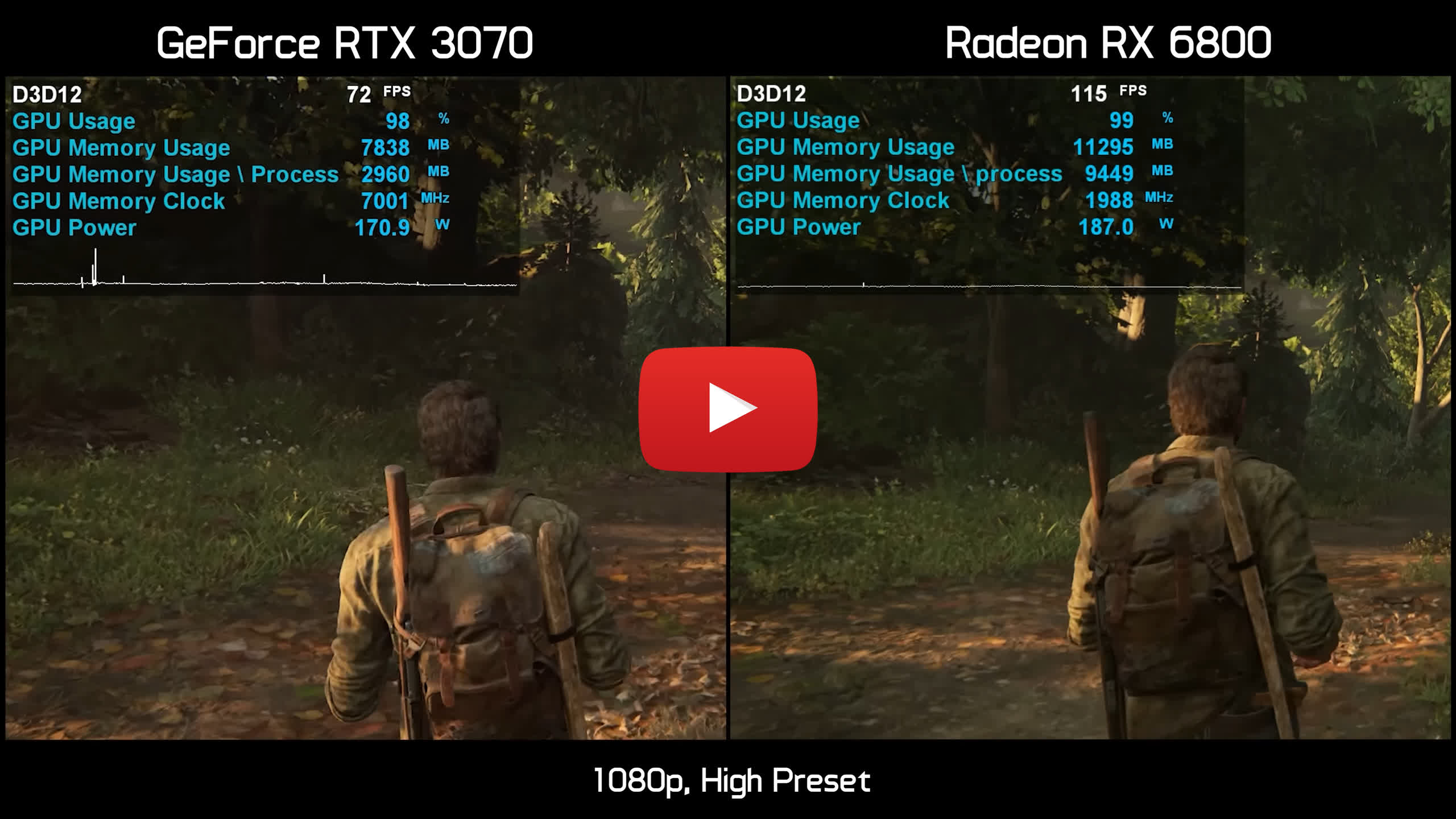

Comparing the RTX 3070 and RX 6800 using the ultra quality preset isn't even a comparison: the RX 6800 can deliver smooth, playable performance, even at 1440p, while the RTX 3070 is a stuttery mess in this title. Both GPUs completed the same benchmark pass following a near identical path, but the footage is out of sync due to the extreme stuttering faced by the 3070. It's a remarkable difference: not only is the RX 6800 much faster in general, but the frame time performance is night and day.

For better image quality comparisons, check out the Hardware Unboxed video below:

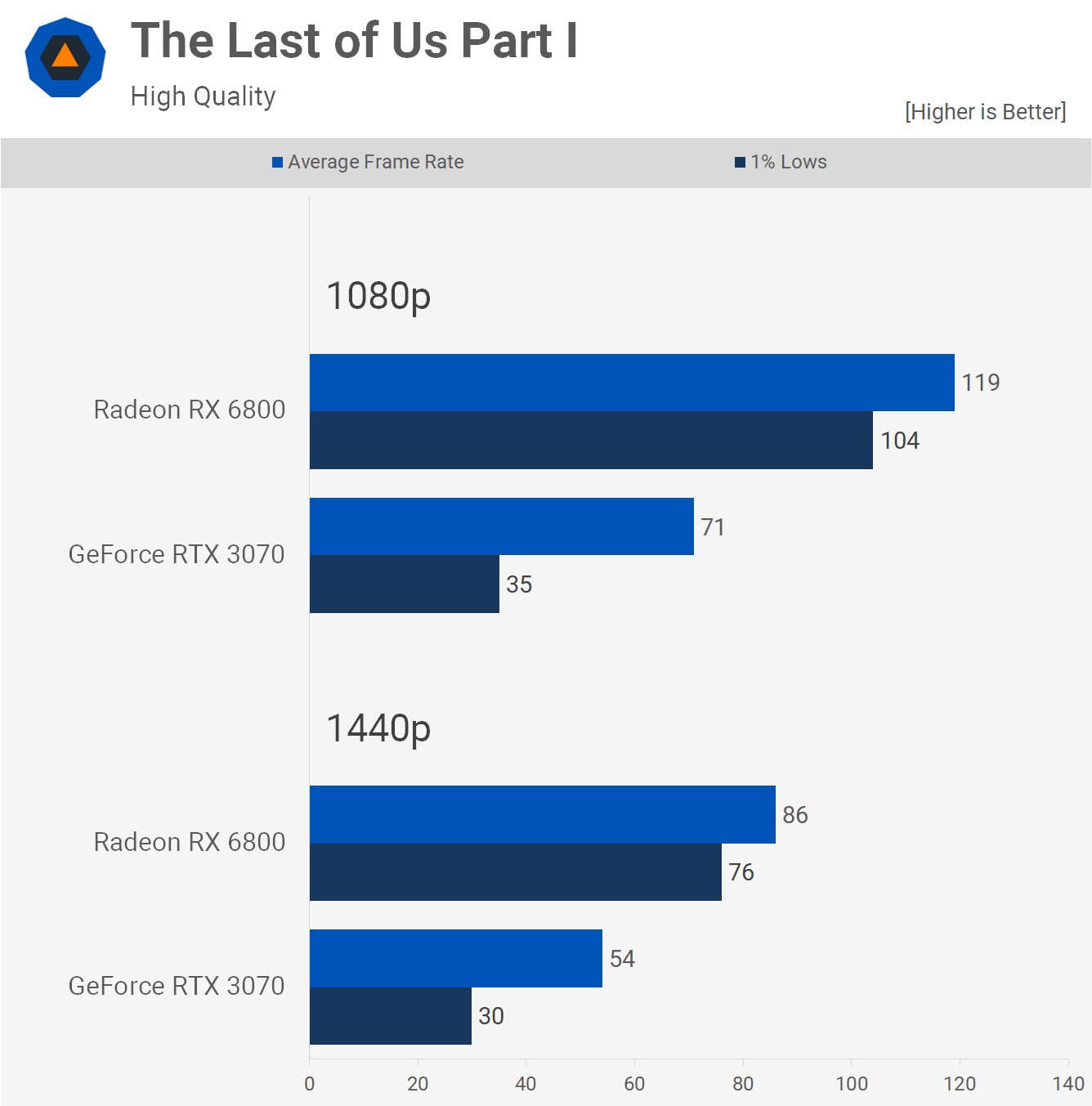

But what if we dial down the quality preset to 'high'? Now the RTX 3070 appears to work well, though there were still noticeable stutters at 1440p. This is reflected in our graph: the disparity between the 1% lows and average frame rate is 25% for the RX 6800, but 46% for the RTX 3070. So ideally you'd want to run 8GB cards like the RTX 3070 using medium at 1440p and high at 1080p, leaving the Radeon 6800 to deliver not only superior performance but also superior image quality with the ultra preset.
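For readers unfamiliar with the metric, here's a rough sketch of how an average frame rate and a 1% low can be derived from a list of frame times. This is an assumption about the general approach, not necessarily the exact method behind these charts: the 1% low is taken as the average frame rate over the slowest 1% of frames, the gap is expressed relative to the average, and the frame times are made up for illustration.

```python
def average_fps(frame_times_ms: list[float]) -> float:
    """Average frame rate: total frames divided by total time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms: list[float]) -> float:
    """Average frame rate across the slowest 1% of frames (at least one frame)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    return average_fps(slowest_first[:count])


def gap_pct(avg: float, low: float) -> float:
    """How far the 1% low sits below the average, as a percentage of the average."""
    return (avg - low) / avg * 100.0


# Hypothetical capture: 990 smooth frames at 10 ms plus 10 hitches at 40 ms.
frame_times = [10.0] * 990 + [40.0] * 10

avg = average_fps(frame_times)
low = one_percent_low_fps(frame_times)
print(f"avg {avg:.1f} fps, 1% low {low:.1f} fps, gap {gap_pct(avg, low):.0f}%")
```

A handful of long hitches barely moves the average but drags the 1% low down sharply, which is why a wide gap between the two tends to show up as visible stutter.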

Now here's a quick look at how these GPUs compare at 1440p using the ultra preset and we saw a few interesting things here. Other than stuttering, the RTX 3070 would often crash under these conditions.

Interestingly, on one occasion we loaded up the game on the RTX 3070, tried playing at 1440p with the ultra settings, and found that the game didn't crash. But something was off. The frame rate was ~20% better for the 3070, and we had changed nothing. The reason quickly became obvious: trees weren't rendering properly, and there were still some frame stuttering issues. So running out of VRAM causes all kinds of issues for performance, stability, and image quality.

Hogwarts Legacy

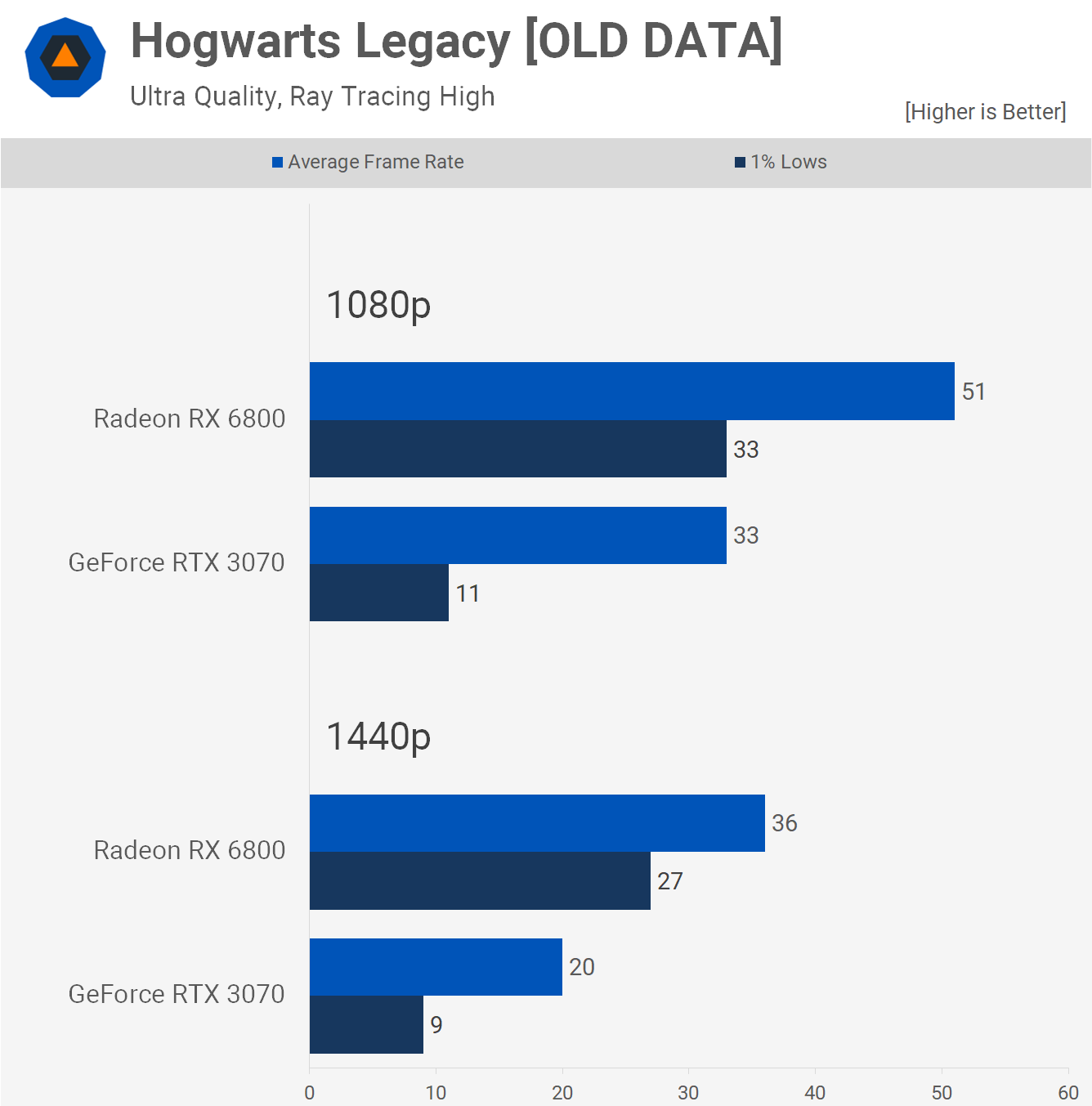

Next up we have Hogwarts Legacy and after initially gathering our footage and benchmarks for this article, we had to go back and update everything as it seems the game now handles running out of VRAM like most games do: missing textures. A week ago, the RTX 3070 was a stuttery mess leading to unplayable performance and very low FPS figures.

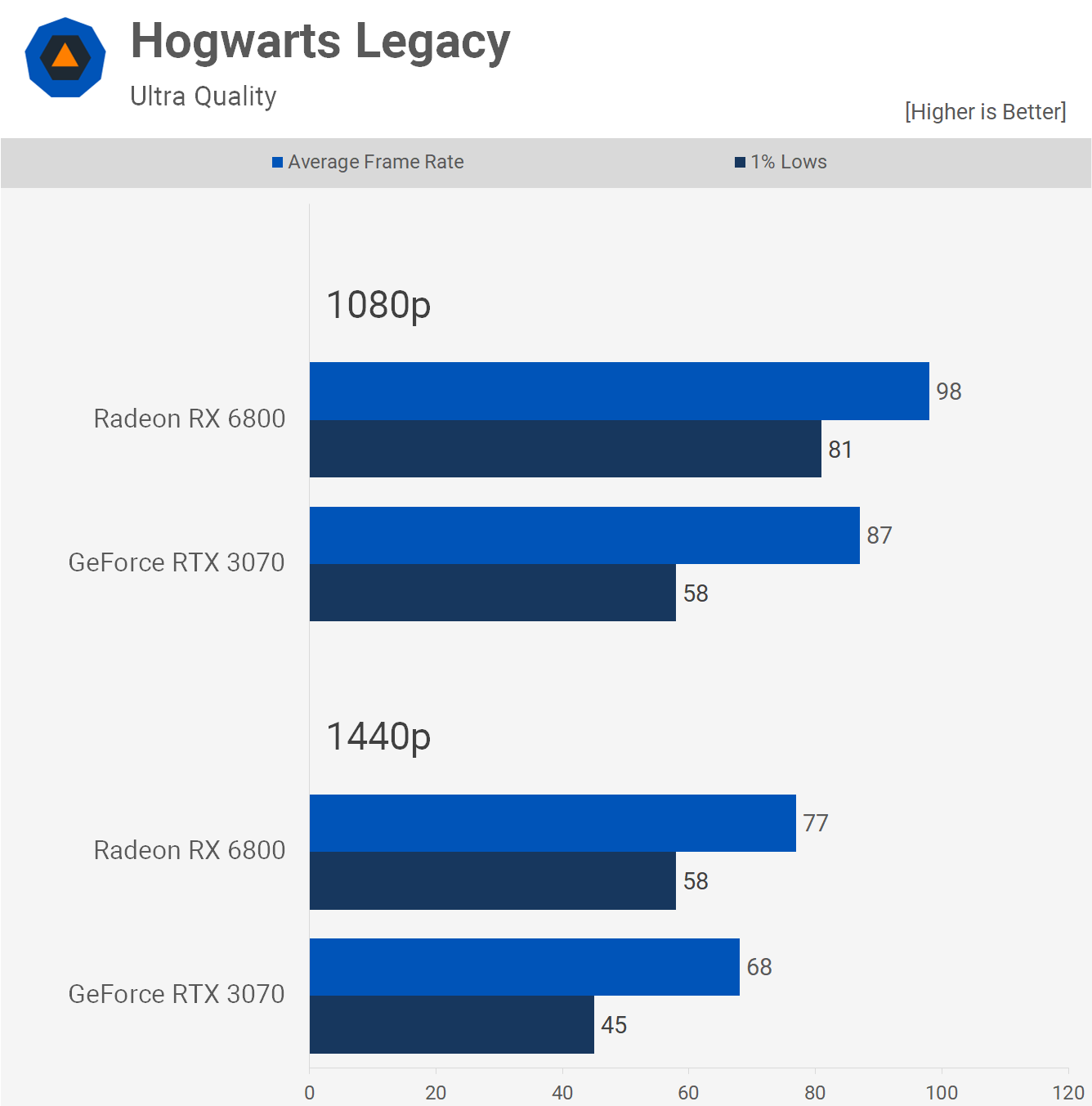

After a recent patch, the RTX 3070 is now just ~6% slower than the RX 6800 and frame time performance looks much improved, so has Hogwarts Legacy fixed this on 8GB graphics cards? Well, not so fast. Let's take a look at what's going on.

If you only look at performance, it looks similar between these two graphics cards, and initially the RTX 3070 even appears to be faster – it's not the exact same pass, and our results are based on a three-run average, but spot checking the frame rate counter up to this point gives you the impression that they're similar.

With the RTX 3070 we could notice the occasional jarring frame stutter, but the bigger issue is the image quality. The 3070 is constantly running out of VRAM, and when it does the game no longer runs into crippling stuttering that lasts multiple seconds. Instead, all the textures magically disappear and then reappear at random. What you're left with is a flat, horrible looking image and this occurs every few seconds. Even when standing completely still, the RTX 3070 keeps cycling textures in and out of VRAM as it simply can't store everything required for a given scene.

But what about DLSS?

If we enable upscaling (DLSS and FSR quality modes) for the RTX 3070 and the Radeon 6800, frame rate performance certainly improves, but unfortunately the RTX 3070 just doesn't have enough VRAM, even when upscaling from a 720p image, and we still see textures constantly popping in and out. Performance is also much worse on the 3070 than on the 6800, and we're still seeing stuttering. It's just a mess.
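As a rough illustration of where that 720p figure comes from: the quality modes of DLSS and FSR render at about two-thirds of the output resolution per axis (a 1.5x scale factor). The sketch below works out the internal render resolution. The function name and the rounding are our own assumptions, and it says nothing about how much VRAM a given game saves, since texture pools are generally sized by the texture quality setting rather than the render resolution.

```python
QUALITY_SCALE = 1.5  # per-axis scale factor for DLSS / FSR quality modes


def internal_resolution(width: int, height: int, scale: float = QUALITY_SCALE) -> tuple[int, int]:
    """Return the approximate render resolution before upscaling to width x height."""
    return round(width / scale), round(height / scale)


# Output resolutions tested in this article.
for name, (w, h) in {"1080p": (1920, 1080), "1440p": (2560, 1440)}.items():
    rw, rh = internal_resolution(w, h)
    print(f"{name}: renders at roughly {rw}x{rh}")
```

At a 1080p output this works out to 1280x720, which is the 720p image mentioned above.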

The next step is to dial down the quality preset to high, with high ray tracing. After all, we don't typically recommend the ultra settings in most games. But even here the RTX 3070 suffers from regular frame stutter and in some instances severe frame stuttering.

Dropping down to the medium preset with ray tracing still set to high is a much improved experience with the RTX 3070, and texture quality is no longer an issue, though there is still the occasional frame stutter as VRAM usage is right on the edge and occasionally spills over.

So at best with RT enabled the RTX 3070 is a medium preset card, while the Radeon 6800 can go all the way up to ultra without any issues, though the frame rate isn't exactly impressive. Comparing the medium and ultra presets we see that in terms of image quality they aren't night and day different at 1080p, but the RX 6800 is clearly superior with sharper and more detailed textures, along with better lighting and shadows.

This is a shocking result that is only alleviated by lowering quality settings so as not to overflow the GeForce's VRAM capacity.

We told you back in late 2020 that the RTX 3070 was the GPU to buy if you cared about ray tracing performance and image quality, and at times even mocked the weak RT performance of RDNA 2 GPUs, such as the Radeon 6800. To find the AMD GPU now offering vastly superior performance and image quality with RT effects enabled in a modern title is shocking to say the least.

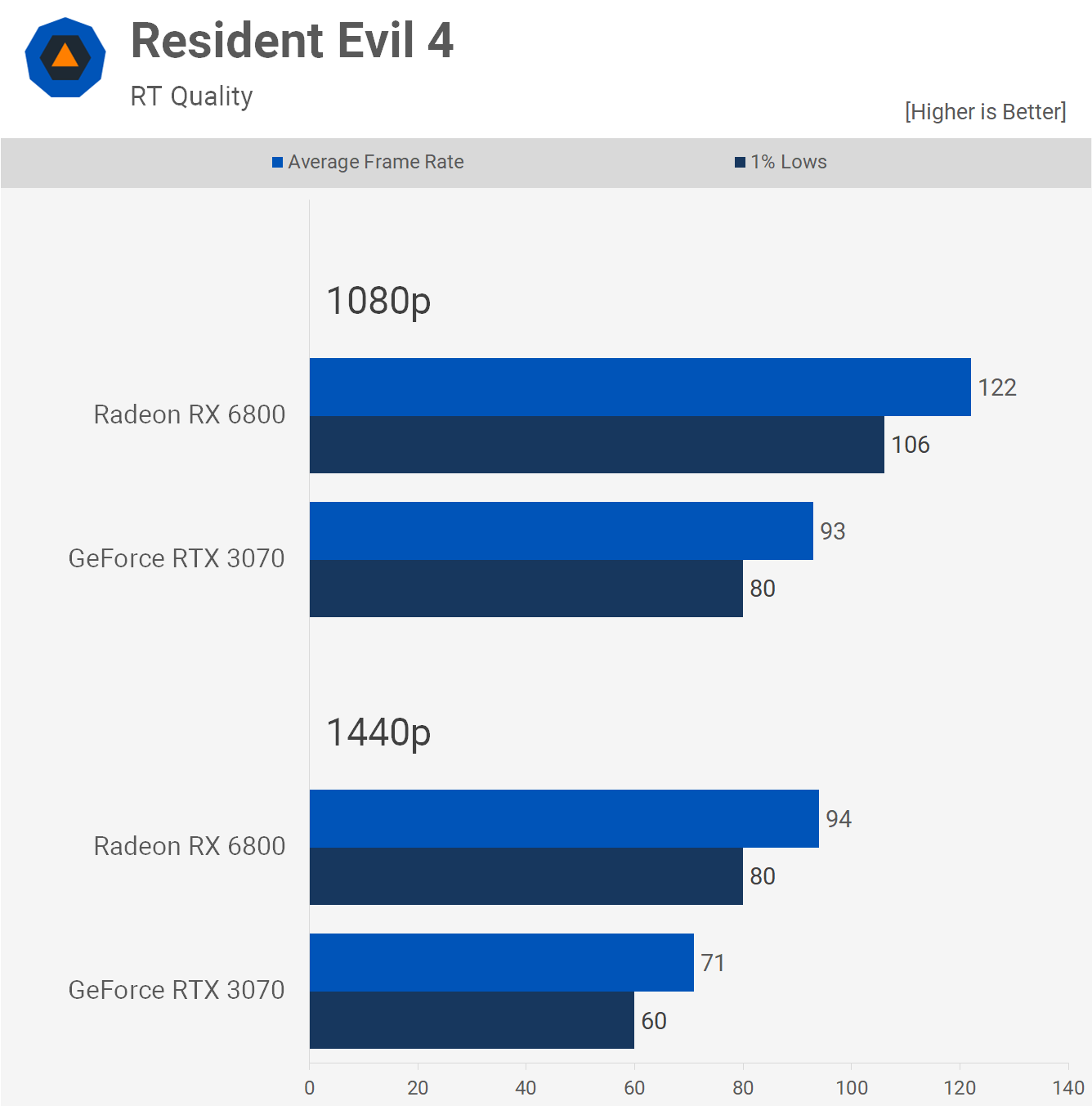

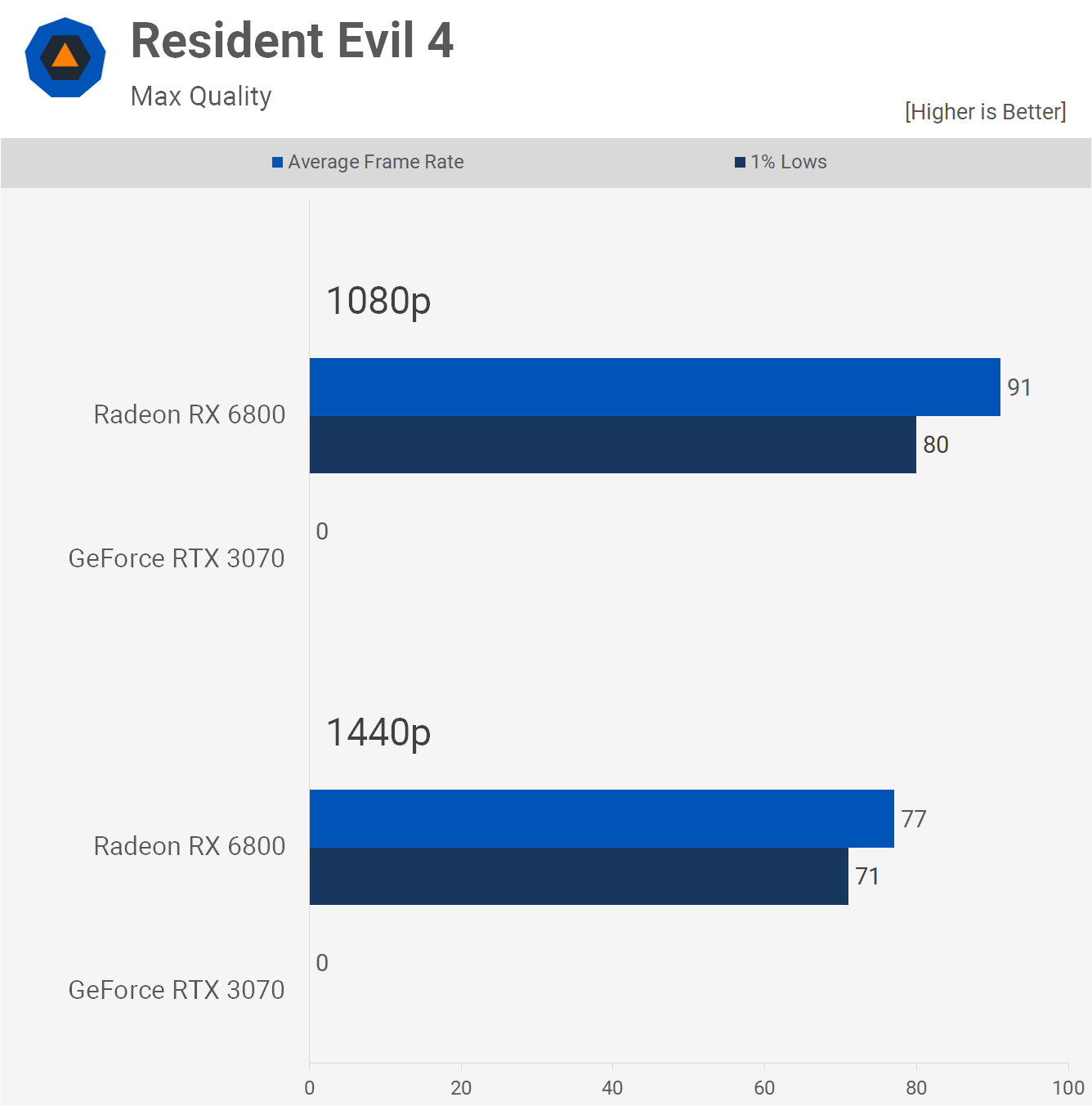

Resident Evil 4

Another new game we have to check out is Resident Evil 4. We used the ray tracing preset, which sets texture quality to the 1GB VRAM option. That isn't an issue for the GeForce RTX 3070, and as a result both GPUs delivered excellent performance.

However, textures do look better as you increase the texture capacity, not worlds better, but they do look better. Sadly though going above 1GB textures with ray tracing enabled breaks the RTX 3070 and will cause the game to crash.

Now here's a look at how the two compare with ray tracing disabled, but with the 8GB textures enabled at 1080p. As you can see, the RTX 3070 is considerably slower than the RX 6800, but worse than that, it suffers from stuttering issues and they occur fairly regularly, whereas the RX 6800 and its 16GB VRAM buffer is nice and smooth.

If we enable ray tracing but manually limit the RTX 3070 to 1GB textures, while leaving the Radeon 6800 on 8GB, we find that not only is the Radeon GPU much faster, but frame time performance is also better.

Generally speaking though, the RTX 3070 is okay under these conditions, but the Radeon 6800 is much faster, smoother and does offer slightly better image quality with ray tracing enabled.

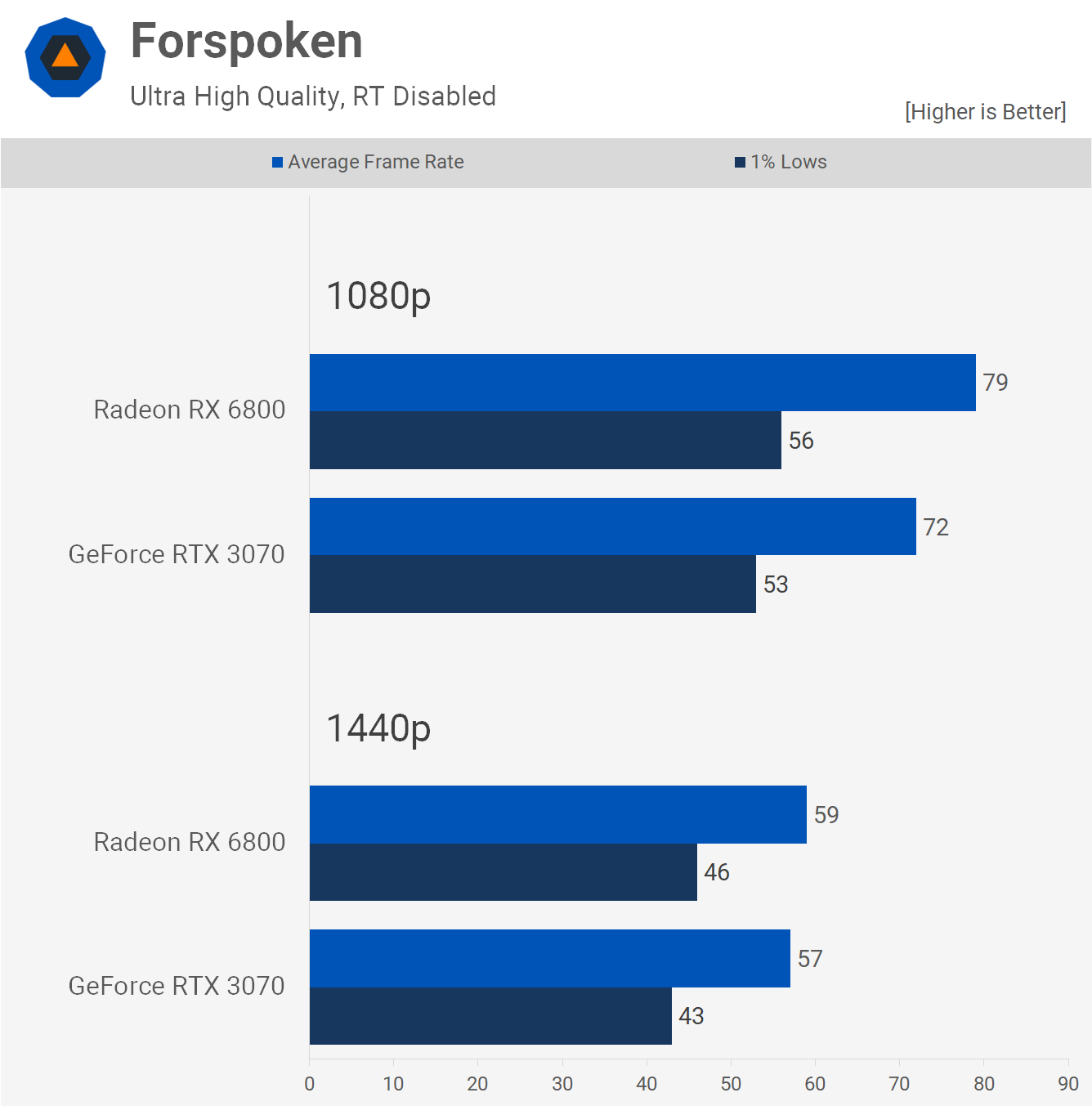

Forspoken

Forspoken wasn't exactly well received and we'd say rightfully so, though like The Last of Us Part 1, it might have fared better if most gamers had more than 8GB of VRAM, which sadly in 2023 they don't.

We'll show you why in a minute, but first let's look at the graphs, which we're sad to say are actually misleading.

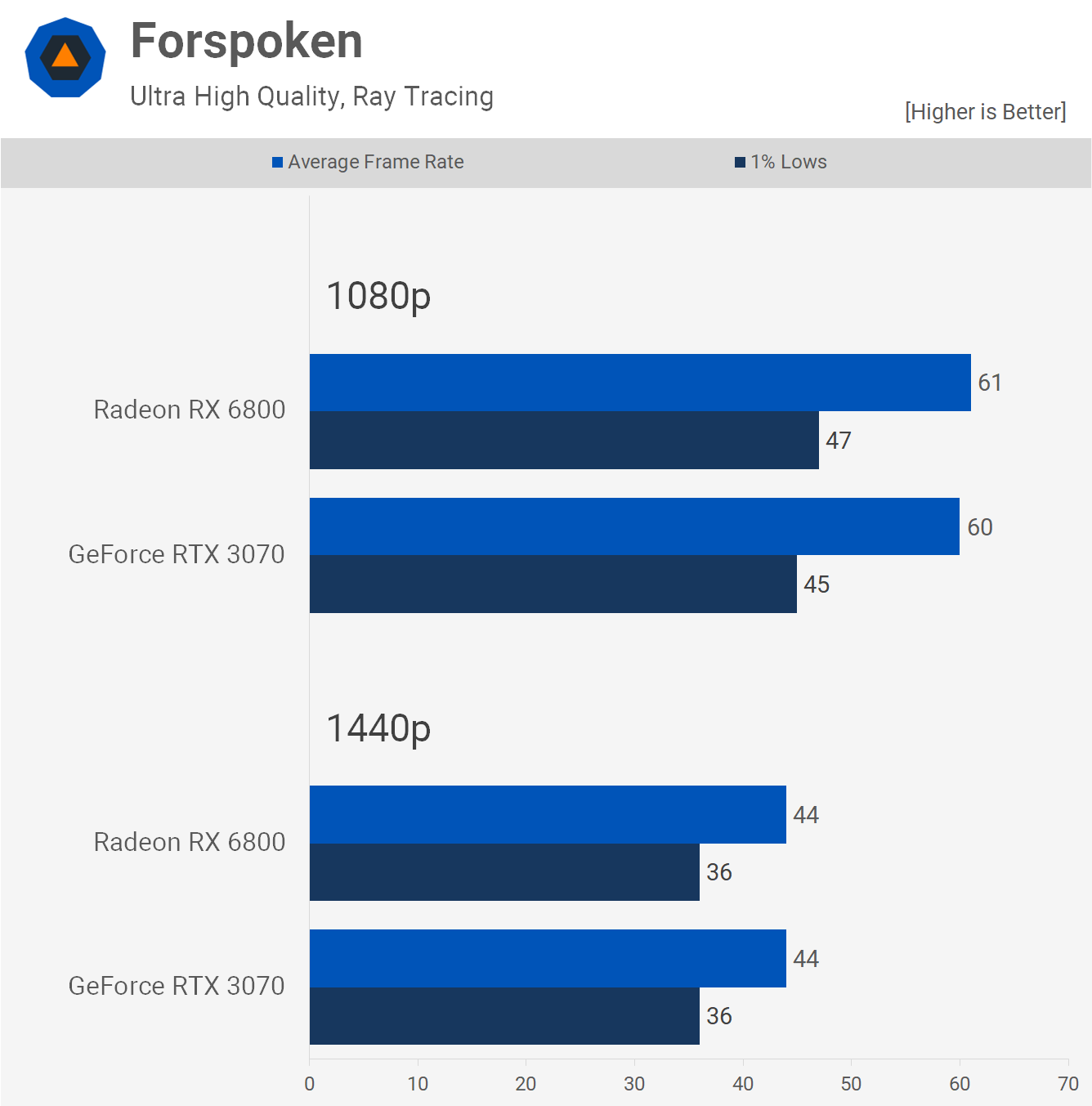

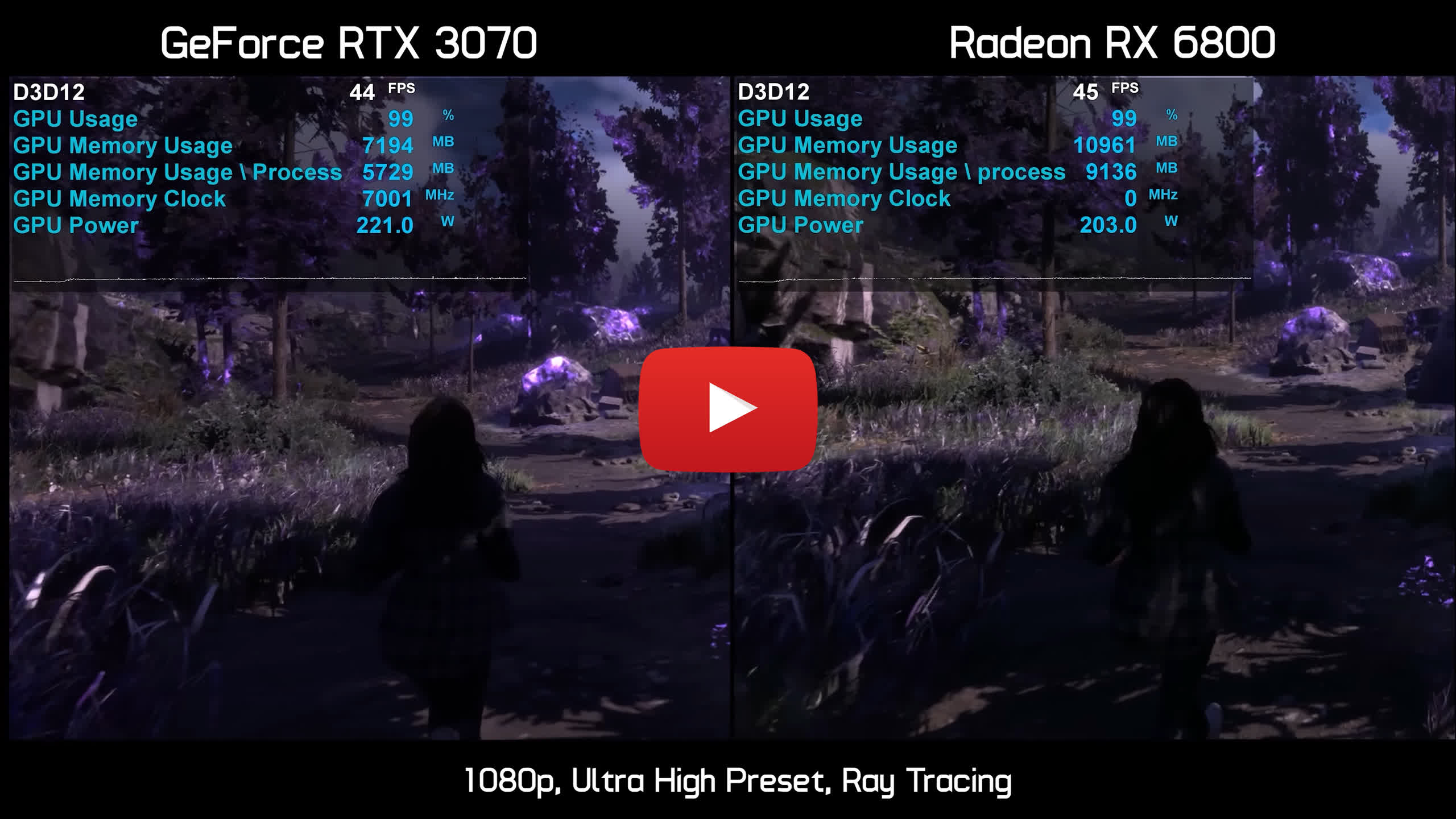

Using the ultra high preset with ray tracing disabled the Radeon 6800 was 10% faster than the RTX 3070 at 1080p and 4% faster at 1440p, and then with ray tracing enabled they're basically neck and neck, but there is more to this story.

So here's how things look with ray tracing disabled using the ultra high preset, frame rates are decent, not amazing but probably good enough for most when it comes to single player games. As we saw in the bar graph the RX 6800 is a little faster here, but overall not much in it.

Now with RT enabled the RX 6800 and RTX 3070 were neck and neck in terms of FPS performance, but there's something going on here. Yes, once again the 8GB VRAM buffer of the RTX 3070 isn't enough once we enable ray tracing, but rather than stutter like mad, crash, or perform poorly, it just fails to load textures at all, leaving you with this blurry mess.

Admittedly, when running around the low texture quality of the RTX 3070 isn't very noticeable, but the second you stop to look at anything it's awful. We must wonder just how many owners of an 8GB graphics card, like the RTX 3070, went for the ultra high preset and were appalled by how bad the game looked, not realizing textures aren't loading correctly due to a lack of memory.

The game certainly looks worlds better on the Radeon RX 6800. Mind you, we're not saying it's an amazing looking game or that it couldn't be better optimized. We're merely commenting that in its current condition the RX 6800 works really well and the RTX 3070 doesn't, and this has been true for all the new games we've looked at so far.

A Plague Tale: Requiem

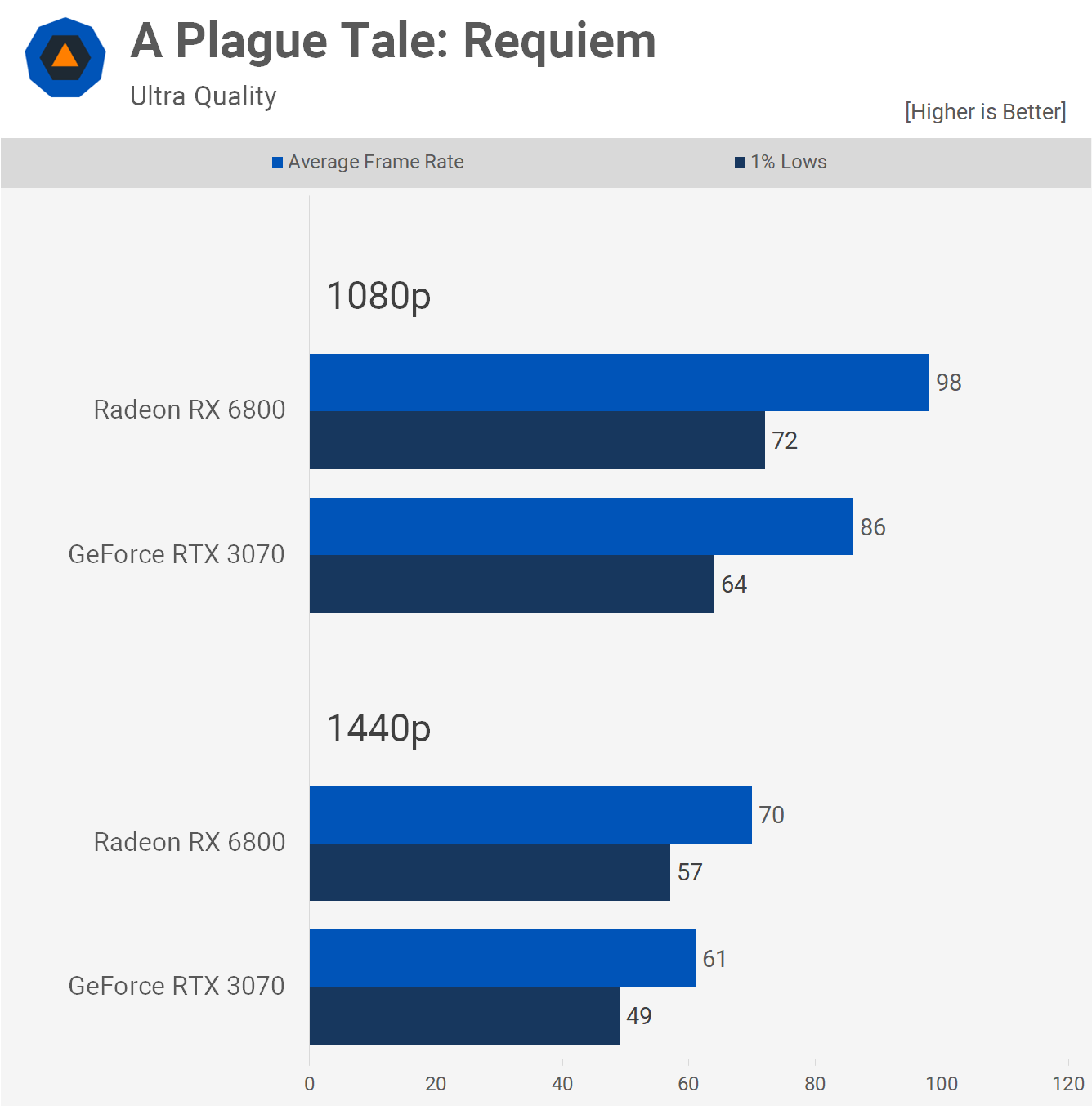

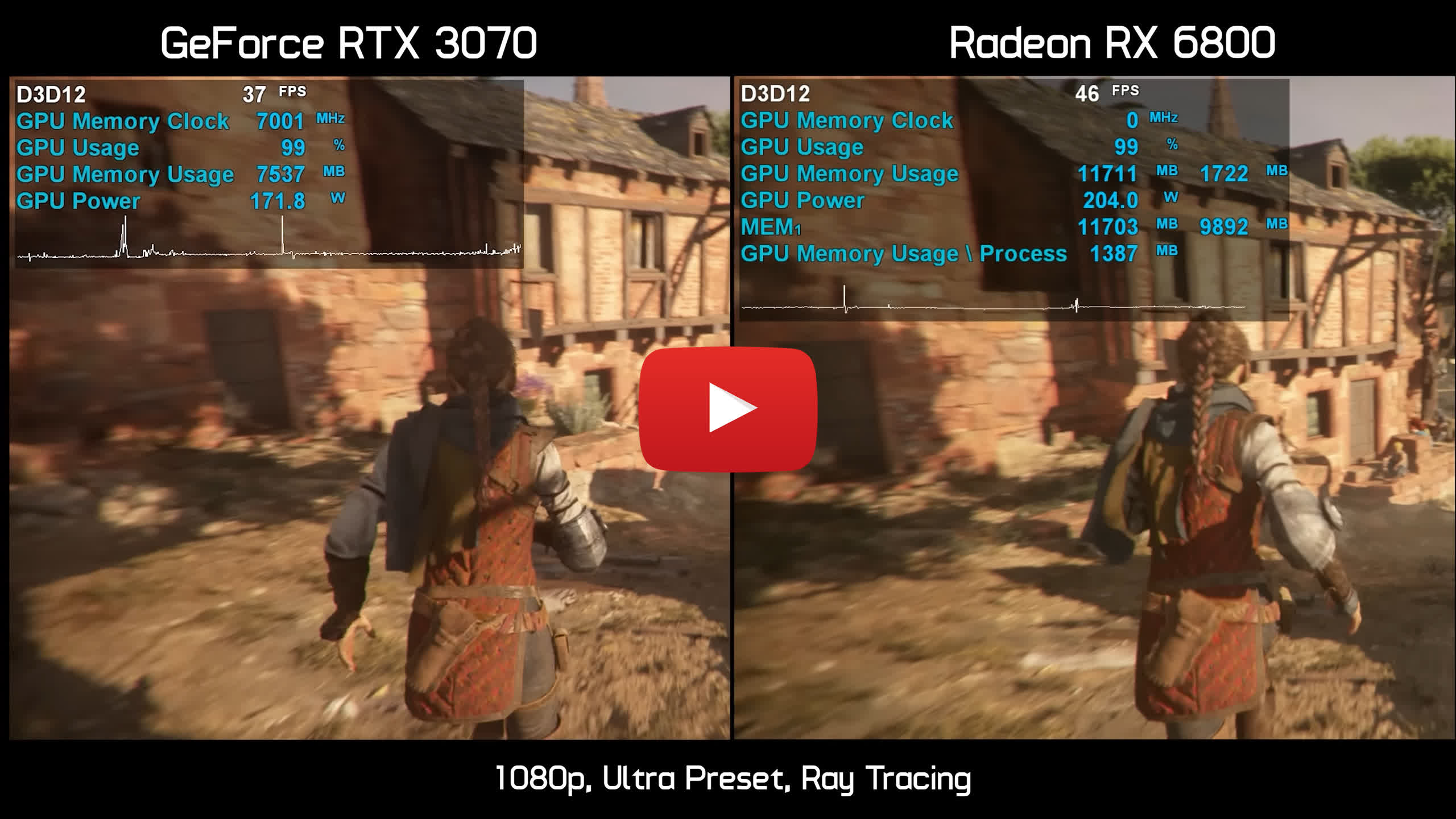

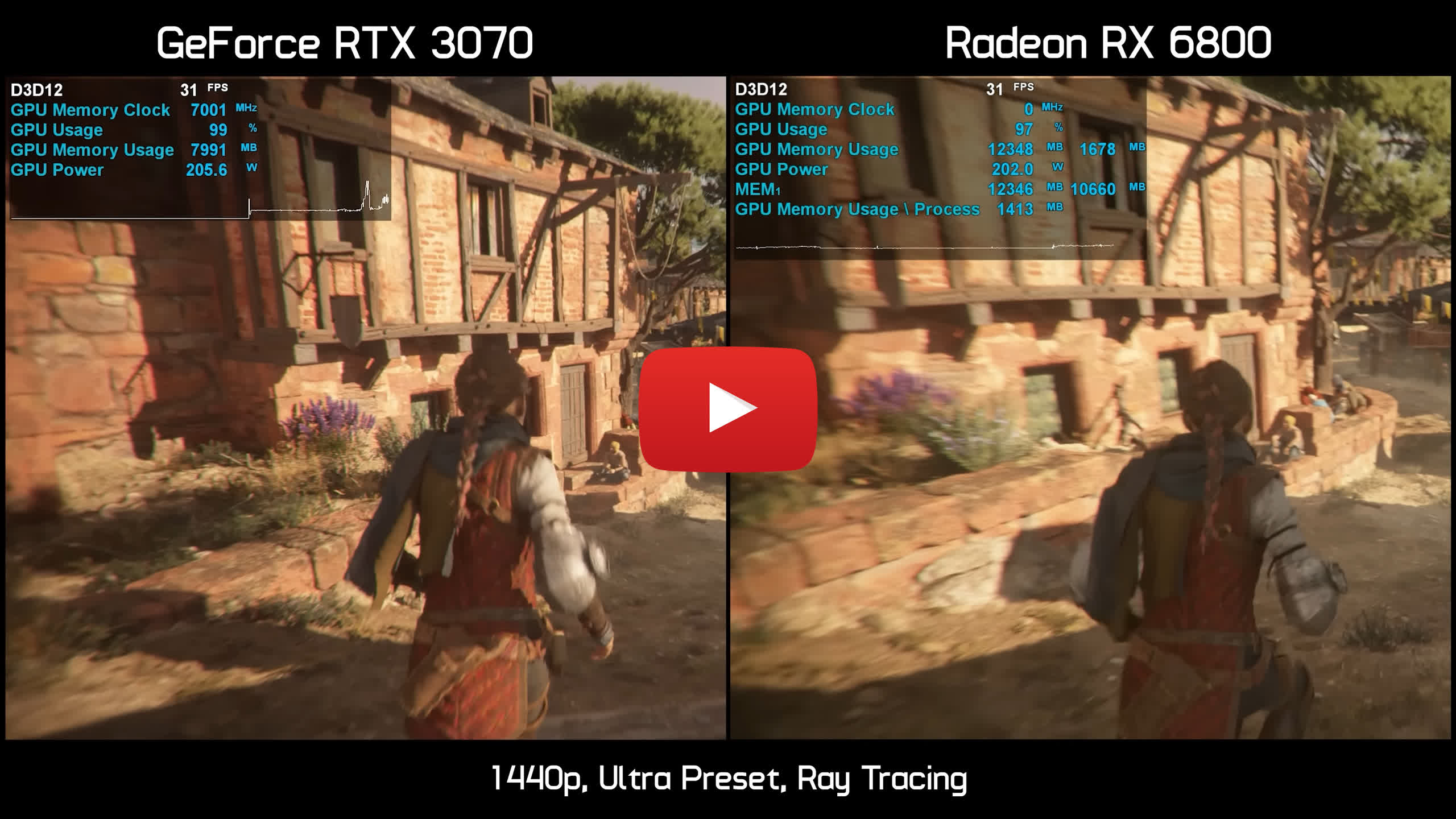

Now let's move on to one of the best looking games released in the past year, A Plague Tale: Requiem, and we'll start with the ultra quality settings. Here the Radeon 6800 is ~14% faster than the RTX 3070, which isn't a bad result for the GeForce GPU as the RX 6800 was 16% more expensive at launch, so let's call this a draw.

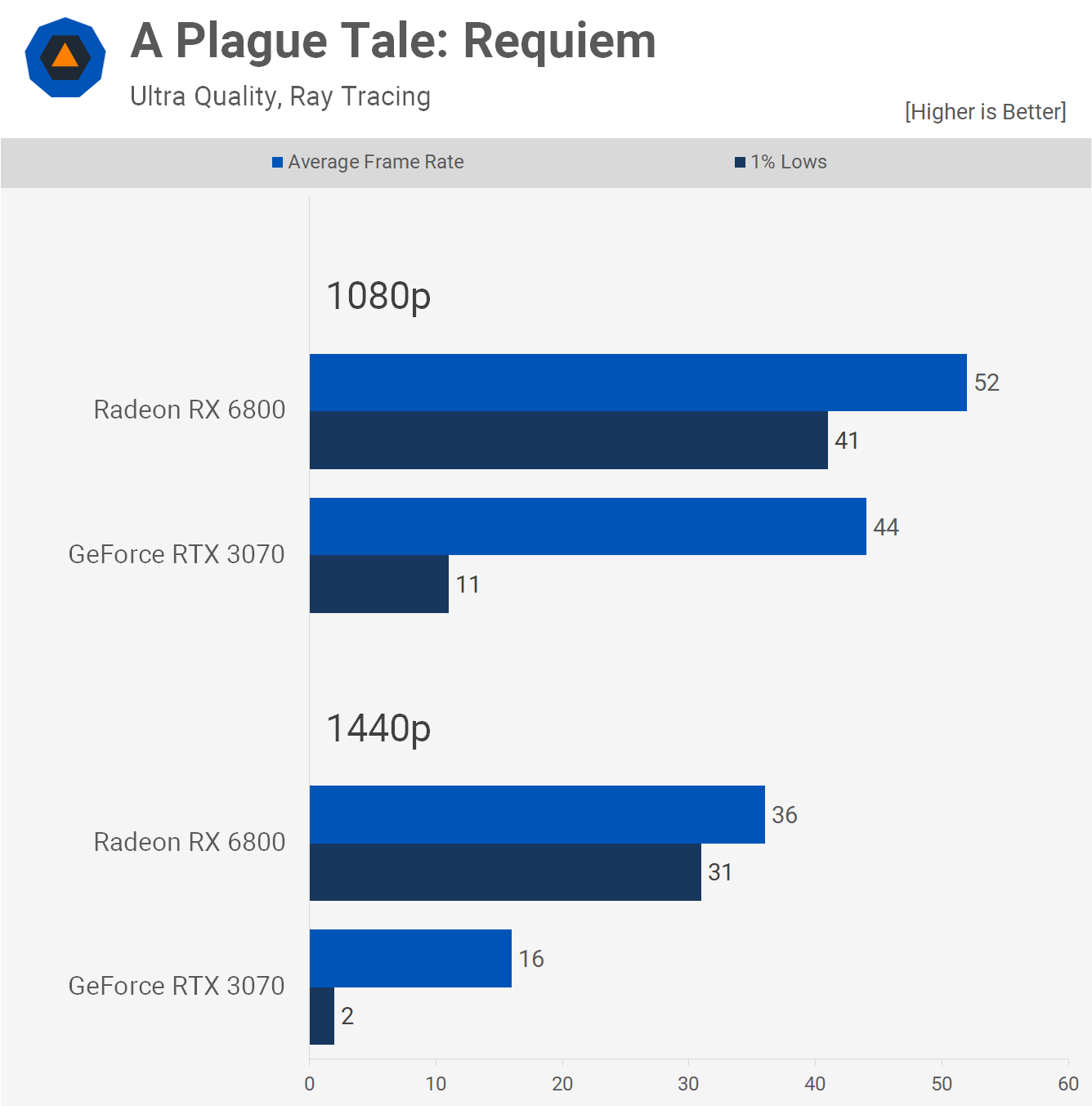

That said, the game supports ray tracing and we know GeForce owners love ray tracing, so let's give that a whirl shall we...

Oh, that doesn't look good. The Radeon RX 6800 averaged 52 fps at 1080p with 1% lows of 41 fps, which is very playable, but the RTX 3070 suffered from extreme frame stutters, and of course things only got worse, or rather completely broken, at 1440p for the GeForce GPU. So let's go see what that looked like.

First here's the 1080p pass, and right away you can see frame time issues on the RTX 3070 whereas the RX 6800 is very smooth. We wouldn't say performance overall was amazing from the Radeon GPU, but it was smooth and certainly very playable, especially given this is a single player title. Meanwhile the RTX 3070 suffered from regular frame hitches and at times this made it very difficult to control the character.

The real fun begins when we increase the resolution to 1440p. Again, a console-like 30 fps on the RX 6800 is hardly cause for excitement, but thanks to that 16GB VRAM buffer it is possible to play the game under these conditions. The GeForce 3070 and its measly 8GB buffer, on the other hand, is completely unplayable and at times it's nearly impossible to control the character. There were times at 1440p where the game completely paused for multiple seconds.

Even if we use the balanced resolution upscaling option the RTX 3070 is still broken, whereas the RX 6800 is now very usable, and delivers an enjoyable experience. The RTX 3070 starts off looking okay, but it doesn't take long for that limited VRAM buffer to fill up and then things go to hell in a handbasket real fast. We're dropping down below 20 fps with horrible frame time performance and things never recover; if anything they just continue to get worse. So we have yet another new game that supports ray tracing, but manages to play better on the Radeon thanks to its much larger 16GB VRAM buffer.

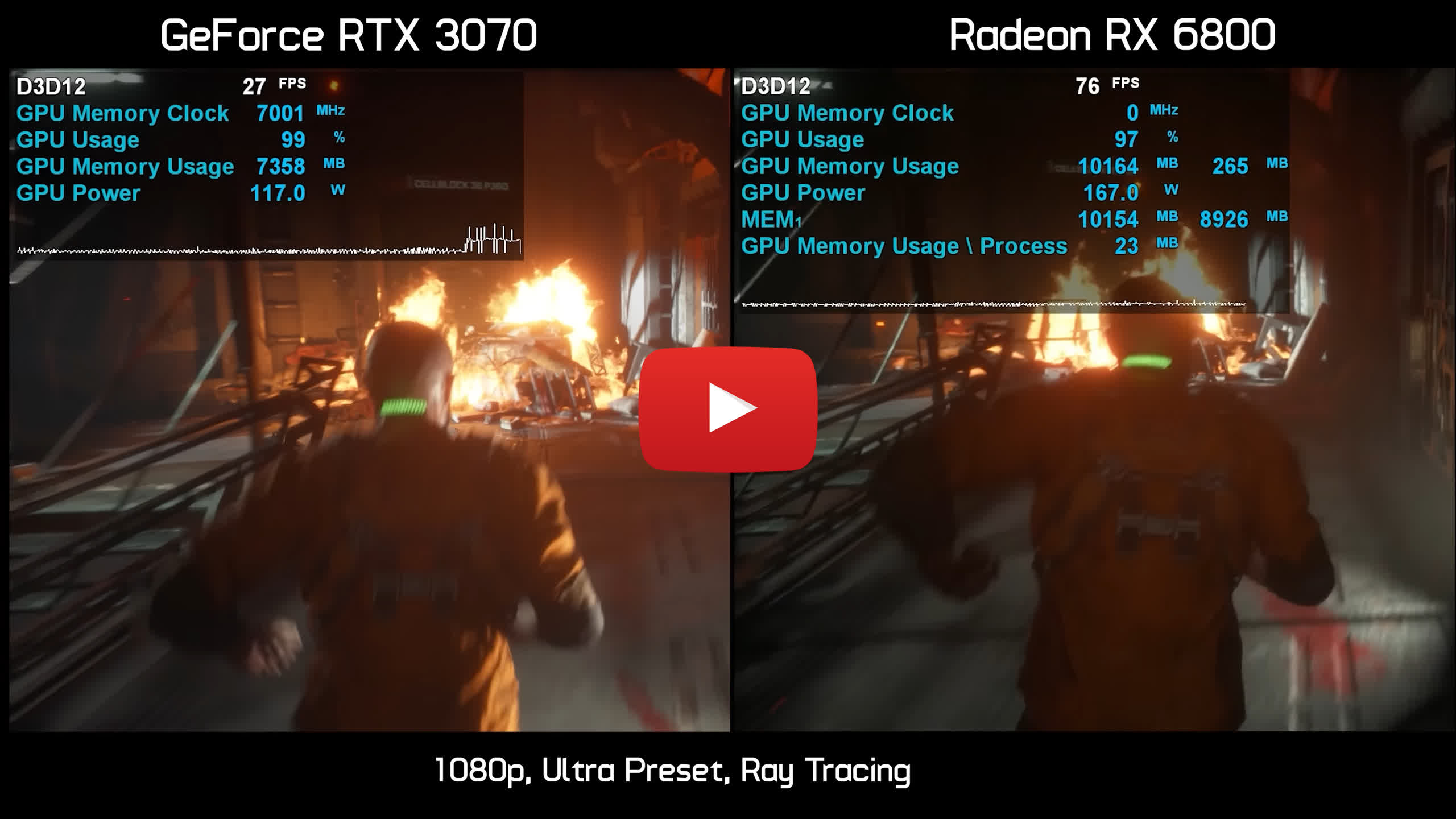

The Callisto Protocol

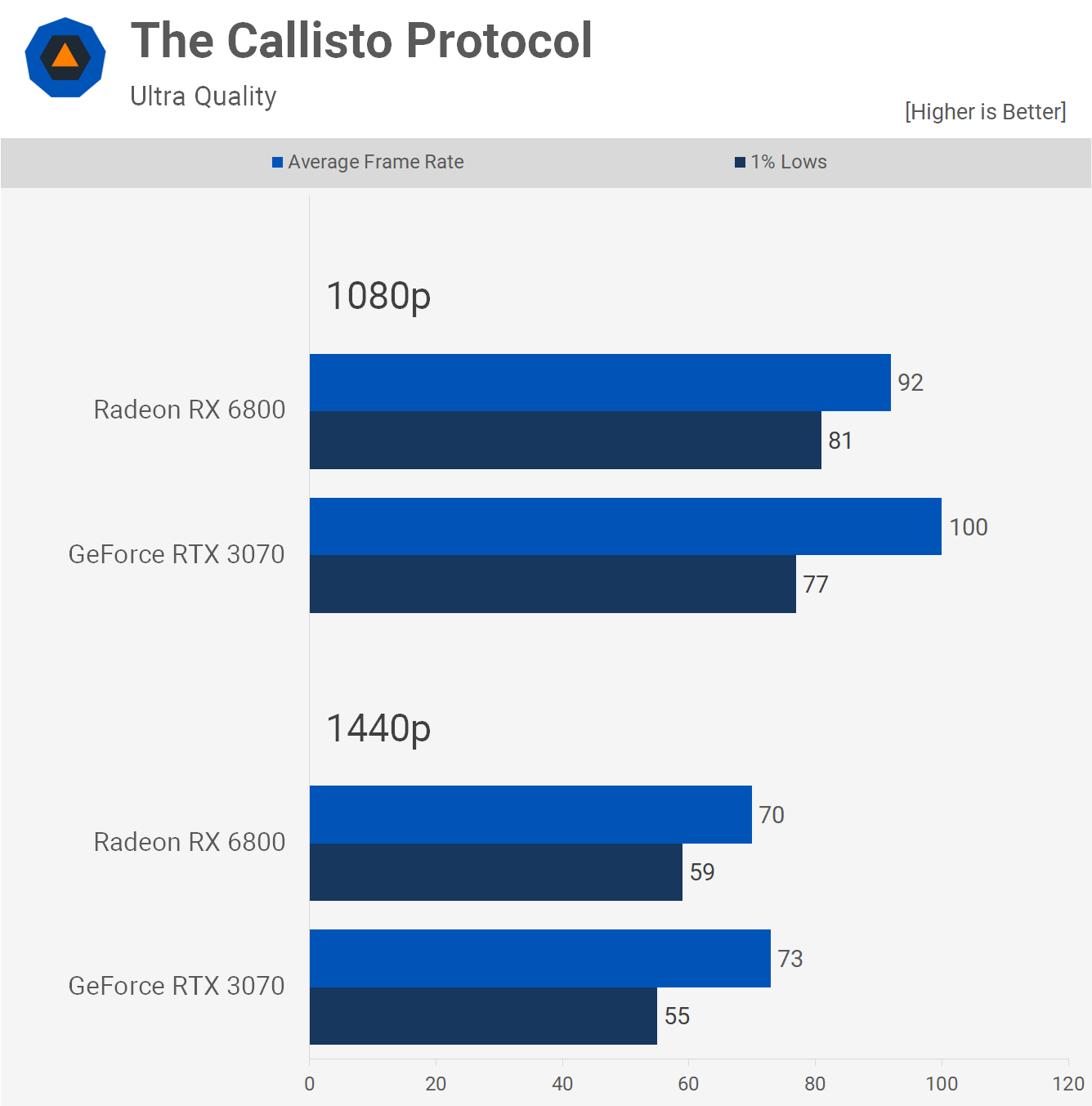

Next up we have The Callisto Protocol running at ultra quality with ray tracing turned off. The RTX 3070 was 9% faster than the RX 6800 at 1080p and 4% faster at 1440p, though in both instances the Radeon GPU delivered slightly better 1% lows.

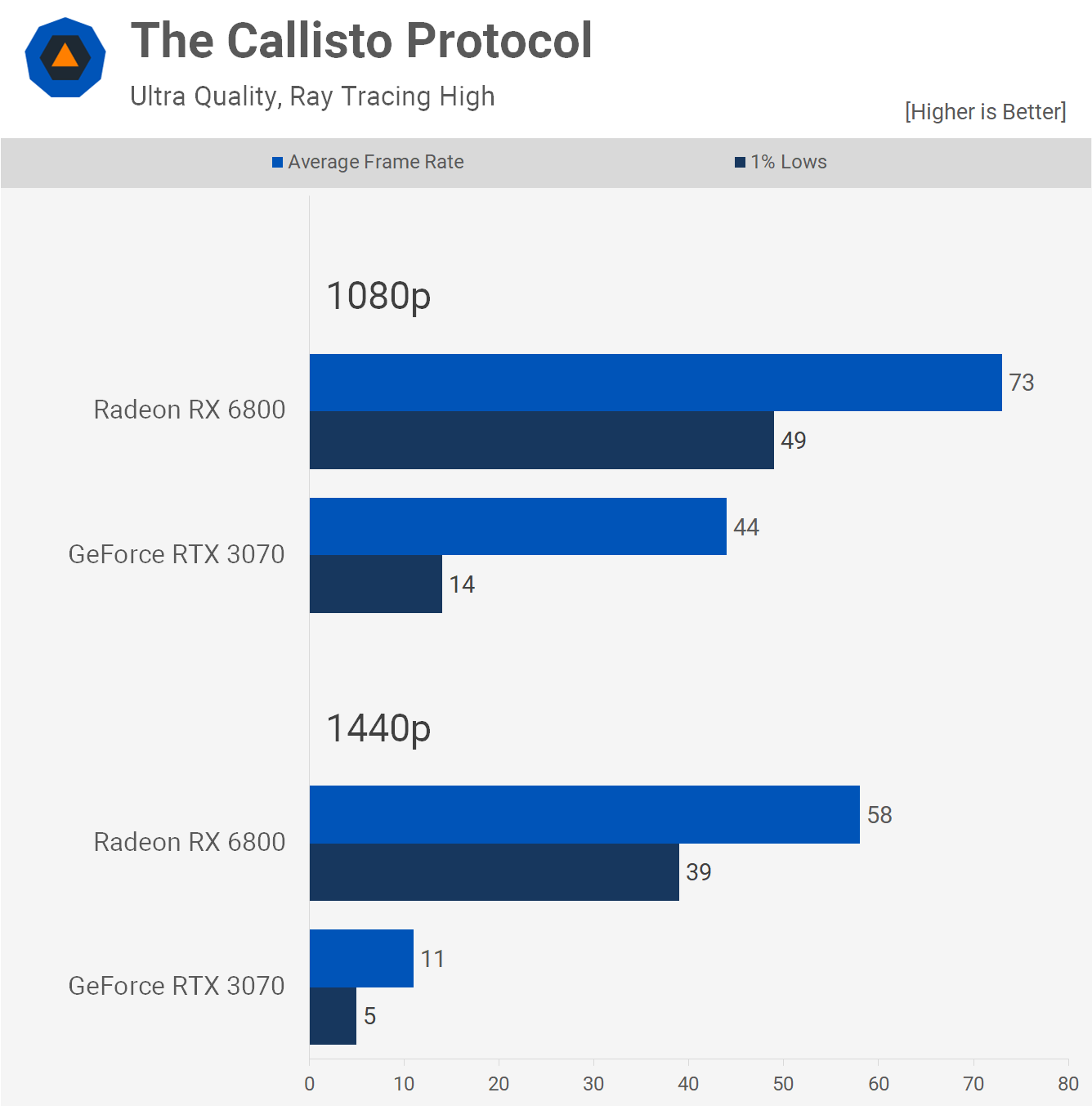

As we've seen a number of times now, the issues for the RTX 3070 start when we enable ray tracing. You could claim that the ultra quality preset is too much, but you'd also have to ignore the fact that the Radeon 6800 is good for 73 fps, while the 3070 is unusable thanks to its 8GB VRAM buffer. Worse still for the GeForce, the 6800 is still able to deliver highly playable performance at 1440p.

Here's a look at how these GPUs play at 1080p and it's interesting to note that the RTX 3070 plays quite well for about the first 30 seconds, but over that time VRAM usage climbs and eventually maxes out the card, resulting in constant frame stuttering.

Then at 1440p there is no buffer period: performance on the GeForce GPU is broken from the get go. This is a huge shame because had the RTX 3070 been given 16GB of VRAM it would be matching or possibly even beating the performance of the RX 6800. And this is the takeaway here: we're not going out of our way to break the RTX 3070, we're instead pointing out that had it been given more VRAM it would be able to deliver perfectly playable performance under almost all conditions in this late 2022 game release.

Better Results

Warhammer 40,000: Darktide

So we've shown you some new games where the GeForce RTX 3070 falls in a heap, but there are still plenty of new games where it works well, so let's look at that and we'll start with Warhammer 40,000: Darktide.

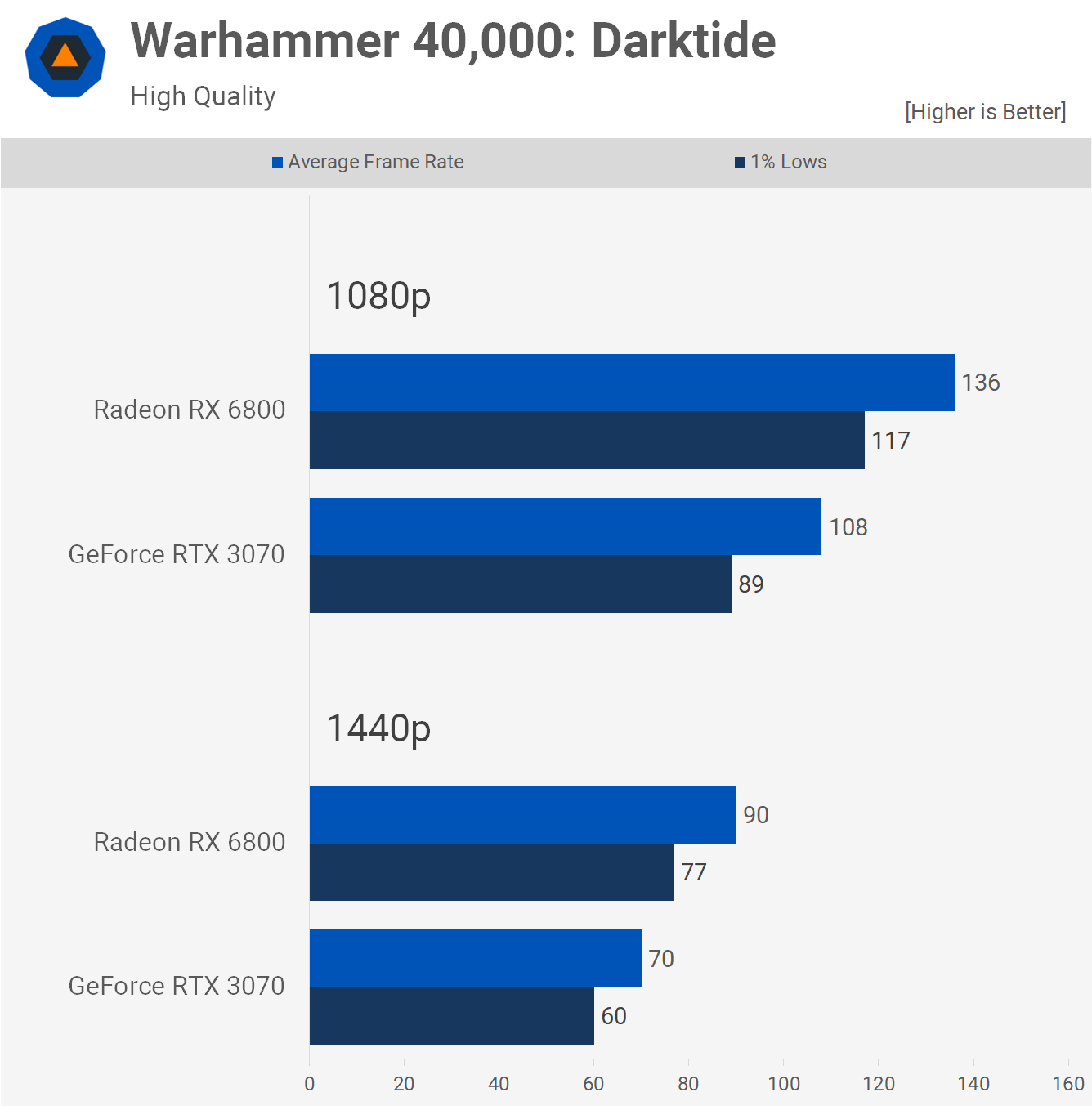

This is a new game that runs well on both GPUs and here we see with the high quality preset that the RX 6800 is 26% faster at 1080p and 29% faster at 1440p. A big win for the Radeon GPU, but again the RTX 3070 was certainly capable of delivering playable performance.

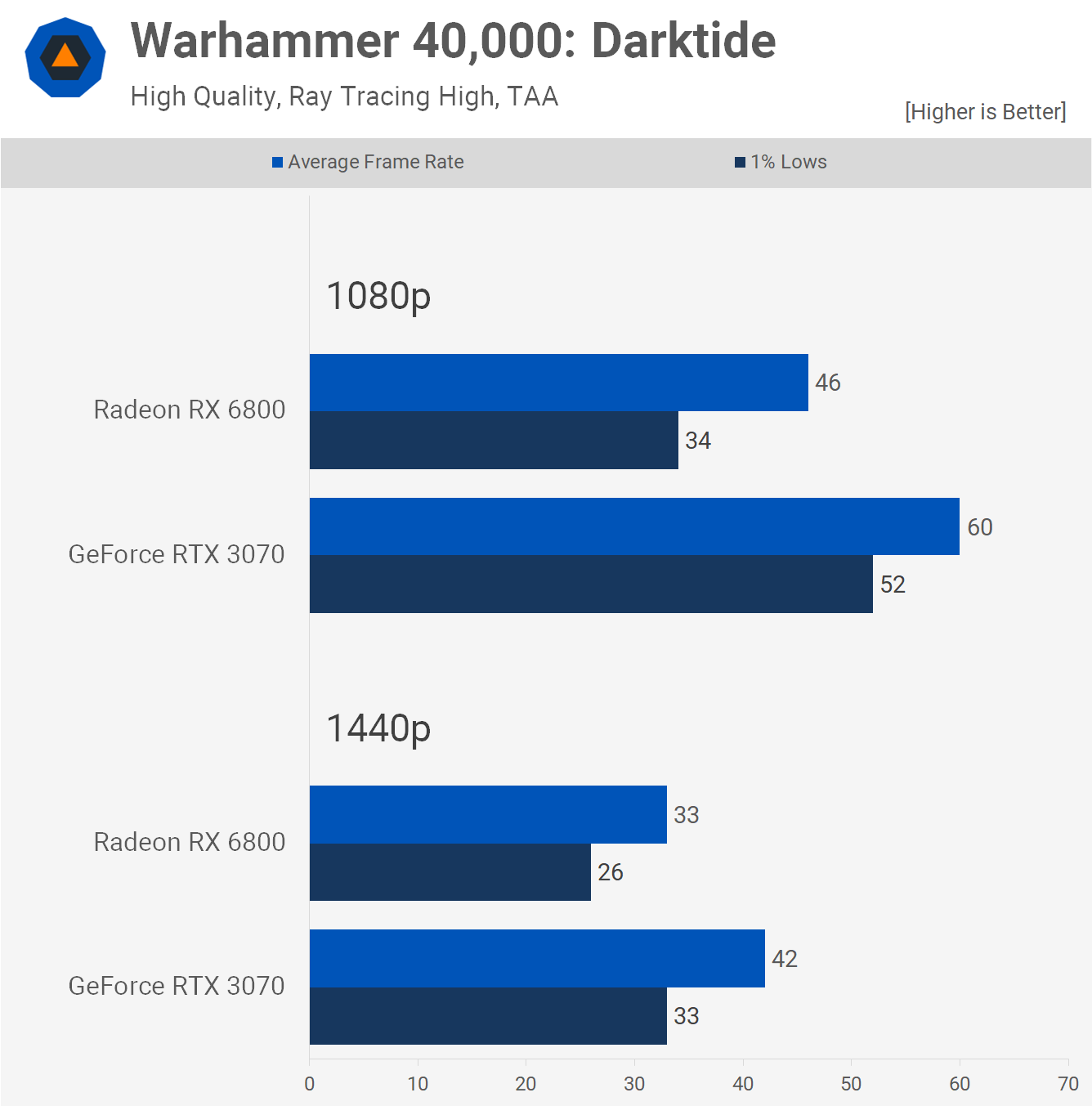

In fact, if we enable ray tracing the RTX 3070 makes a mockery of the Radeon, offering 30% greater performance at 1080p while also achieving 60 fps. This is more what you'd expect to see when comparing these two GPUs with ray tracing enabled.

Call of Duty Modern Warfare II

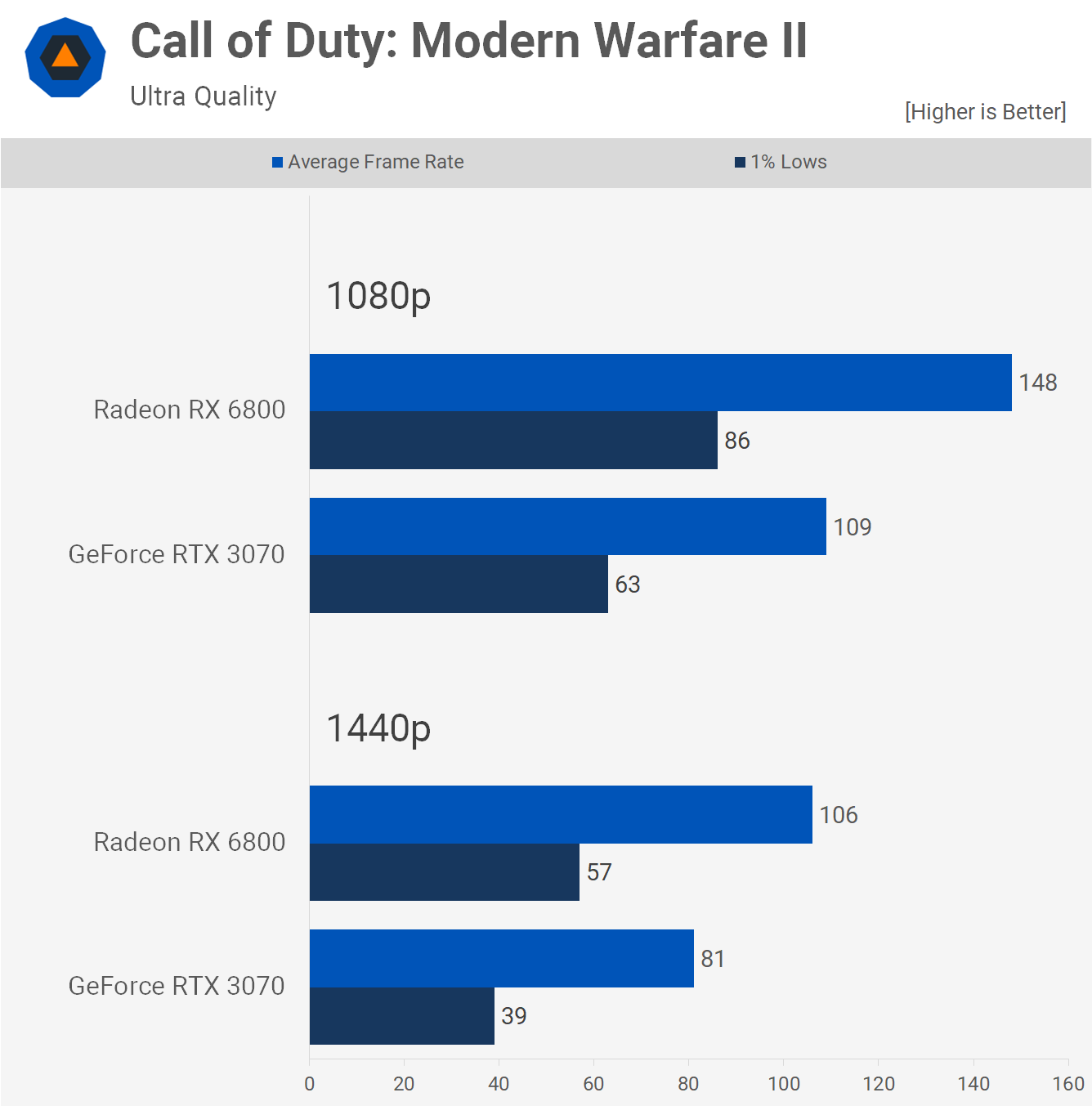

The GeForce RTX 3070 also works quite well in Call of Duty Modern Warfare II, delivering well over 60 fps at 1080p, and this is with the ultra quality preset. Those playing the multiplayer modes will likely opt for lower quality visuals for a competitive advantage and that will reduce VRAM requirements further.

Still, the RX 6800 was 36% faster at 1080p and 31% faster at 1440p.

Dying Light 2

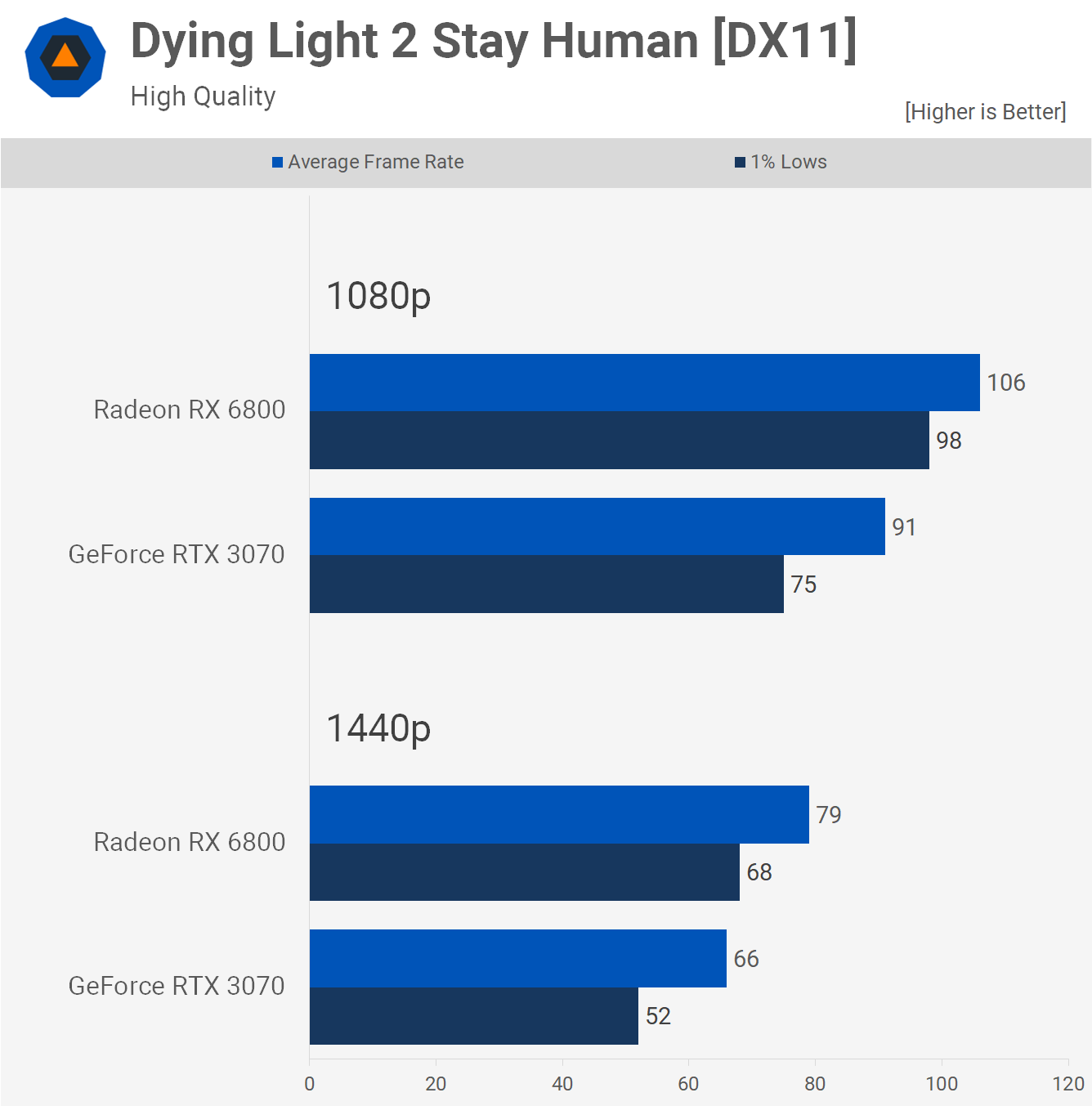

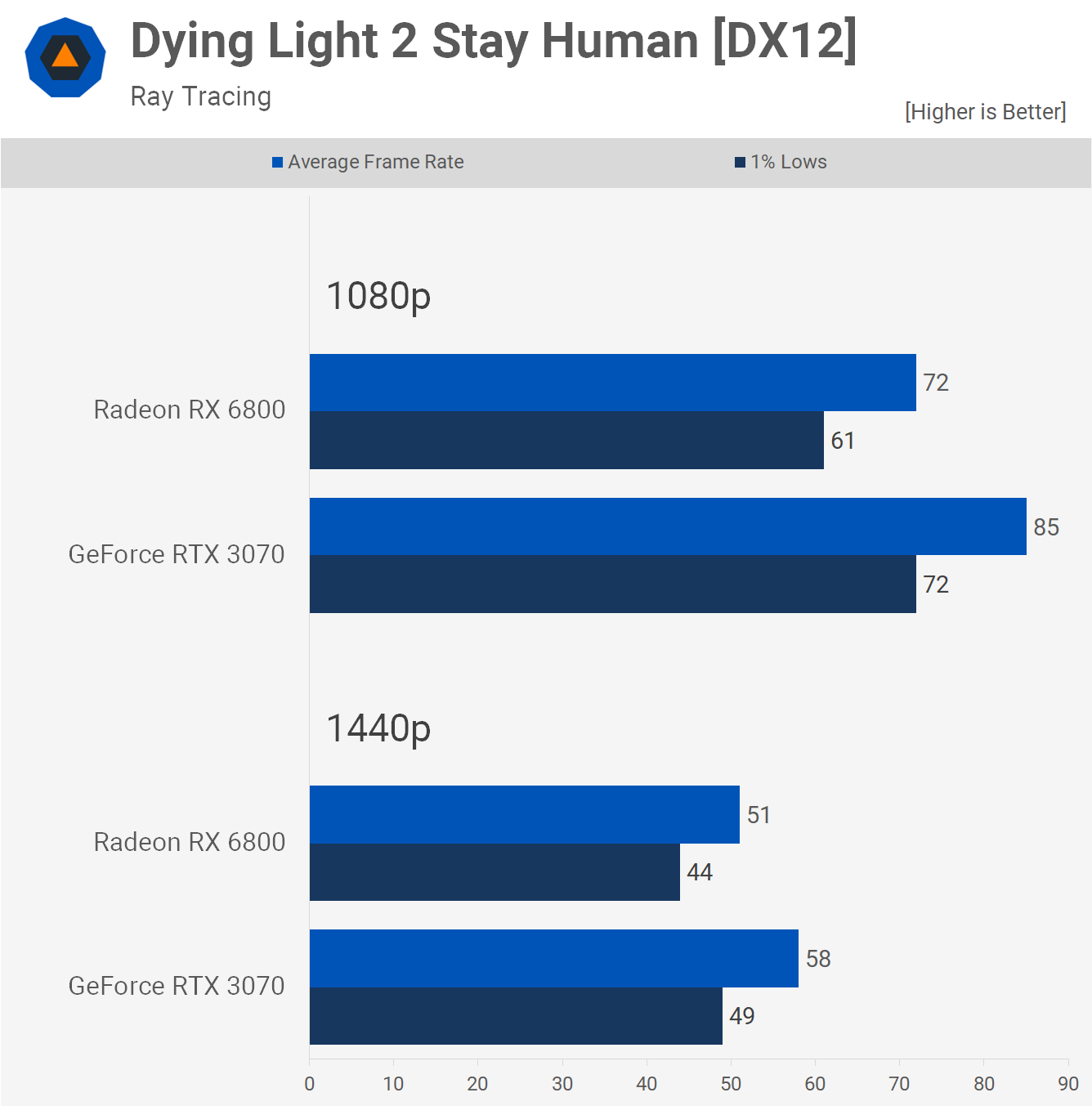

Dying Light 2 using the high preset sees the Radeon RX 6800 delivering 16% greater performance at 1080p and 20% at 1440p. So clear wins for the Radeon GPU, but this game does support ray tracing so let's enable that.

Using the high quality ray tracing preset hands the RTX 3070 an advantage, though it has to be said that although the RX 6800 is 15% slower at 1080p, the performance overall is very good and certainly playable on the Radeon GPU.
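A quick note on reading these percentages: "X% slower" and "X% faster" are not interchangeable, because each is measured against a different baseline. The small sketch below converts one into the other; the helper name is hypothetical, and the 15% input is the 1080p figure quoted above.

```python
def slower_to_faster(slower_pct: float) -> float:
    """If card A is slower_pct% slower than card B, return how much faster B is than A, in percent."""
    return (1.0 / (1.0 - slower_pct / 100.0) - 1.0) * 100.0


# RX 6800 is 15% slower than the RTX 3070 at 1080p with ray tracing, per the article.
advantage = slower_to_faster(15.0)
print(f"RTX 3070 advantage: {advantage:.1f}%")
```

So a 15% deficit for the Radeon corresponds to a lead of a little under 18% for the GeForce when measured from the Radeon's result.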

Dead Space

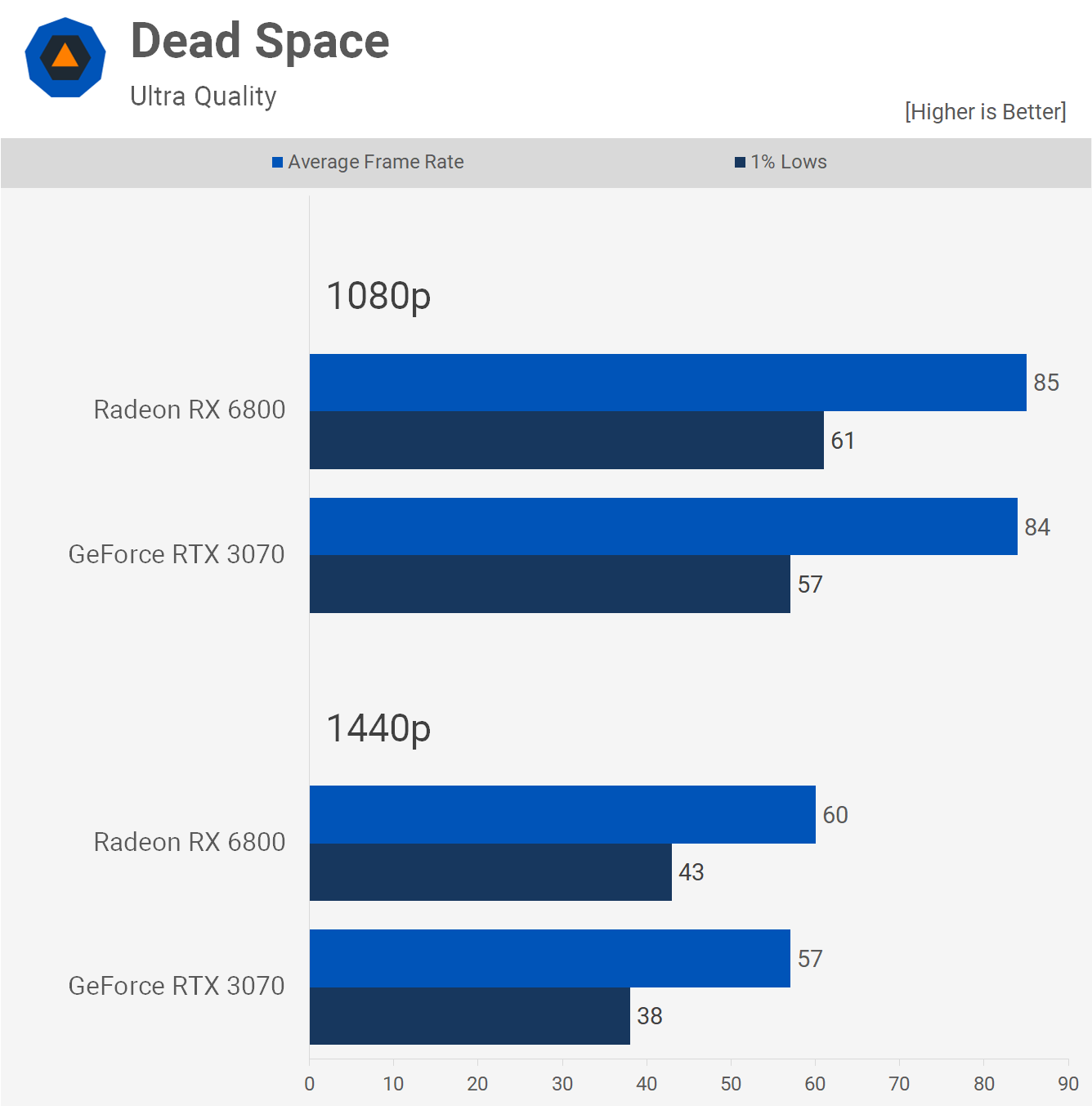

The Dead Space remake ran well on both GPUs using the ultra quality preset, with over 80 fps at 1080p and around 60 fps at 1440p. The RTX 3070 did manage to match the RX 6800 here, so that's a great result for the previous generation $500 GPU.

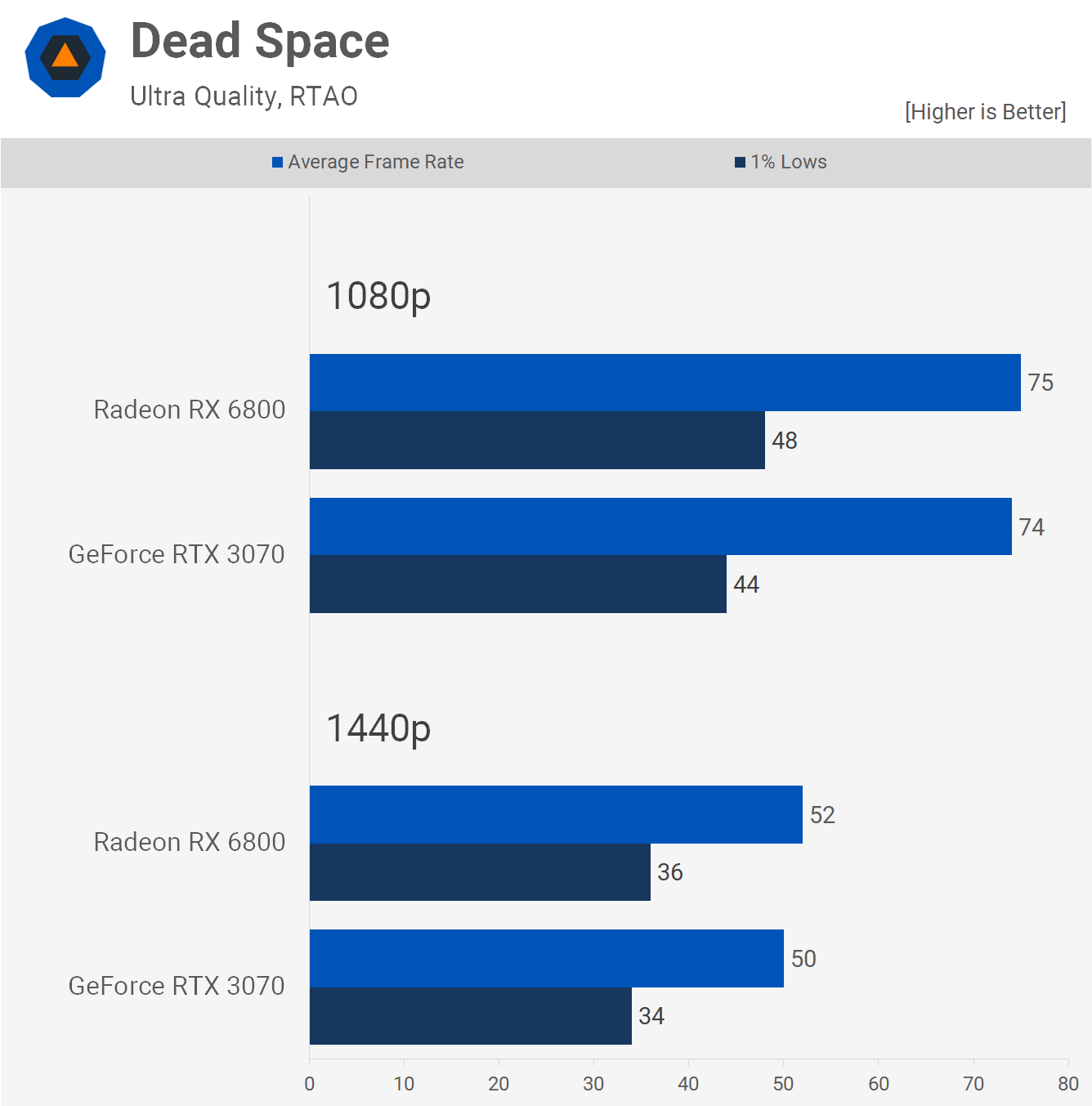

With the game's ray tracing enabled we only see a minor hit to performance and again the RX 6800 and RTX 3070 are evenly matched.

Fortnite

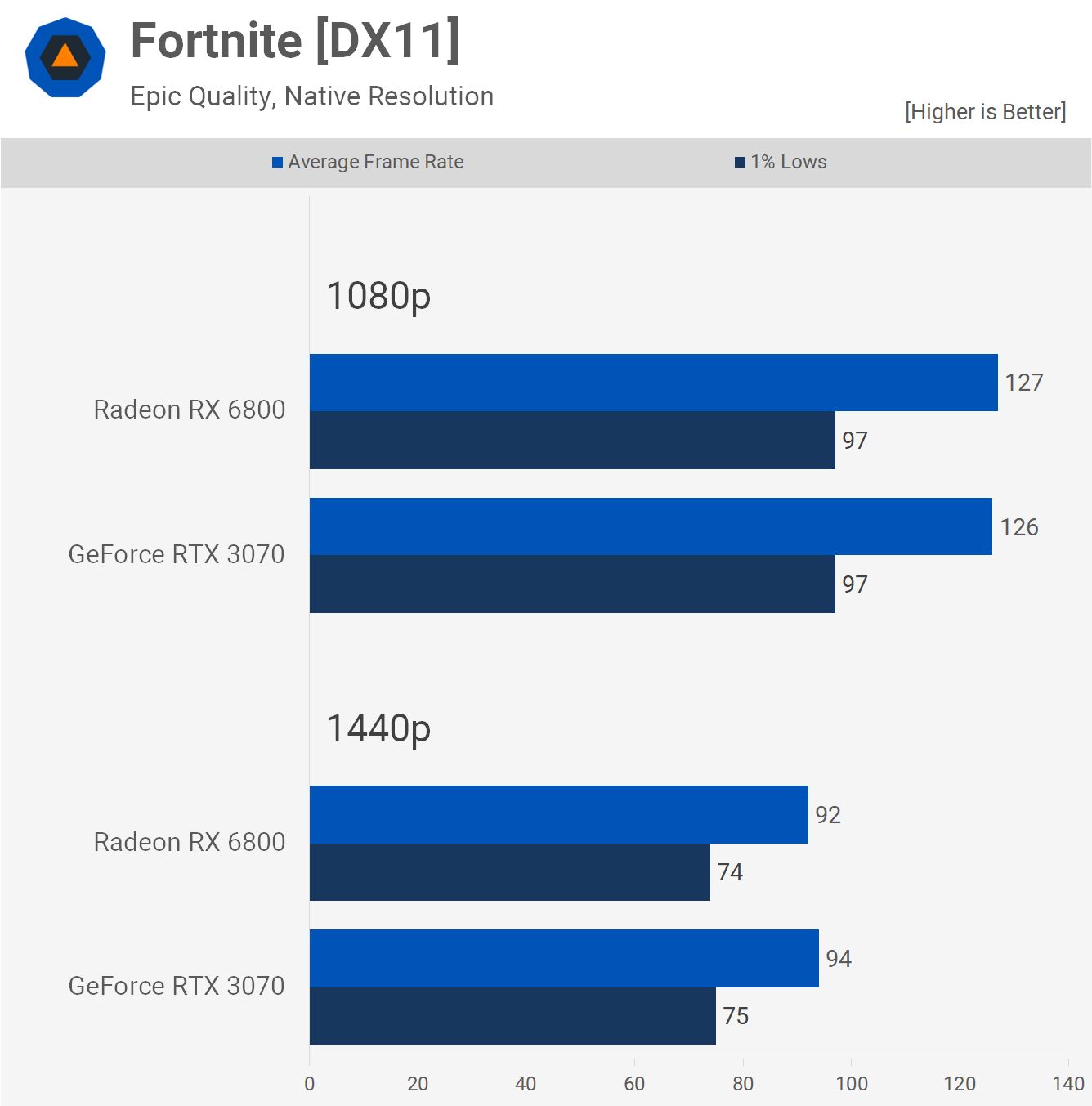

Fortnite, powered by Unreal Engine 5, shows similar performance between these two GPUs using the game's DirectX 11 implementation and with the Epic quality preset we're looking at strong performance at both tested resolutions.

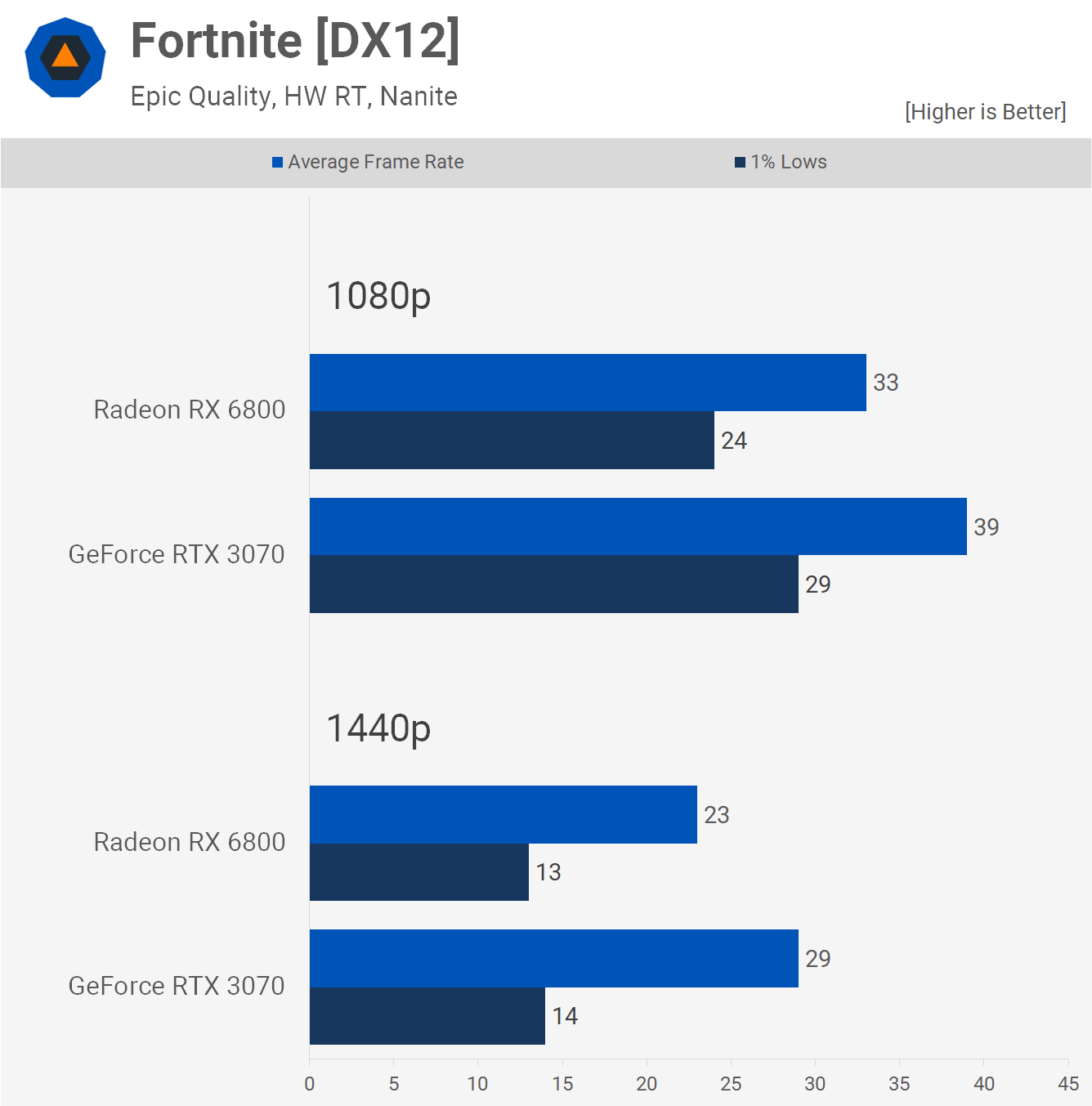

Enabling hardware ray tracing with Nanite and Lumen handed the RTX 3070 a performance advantage, though without the aid of upscaling neither GPU was particularly impressive.

Halo and Other Titles Tested...

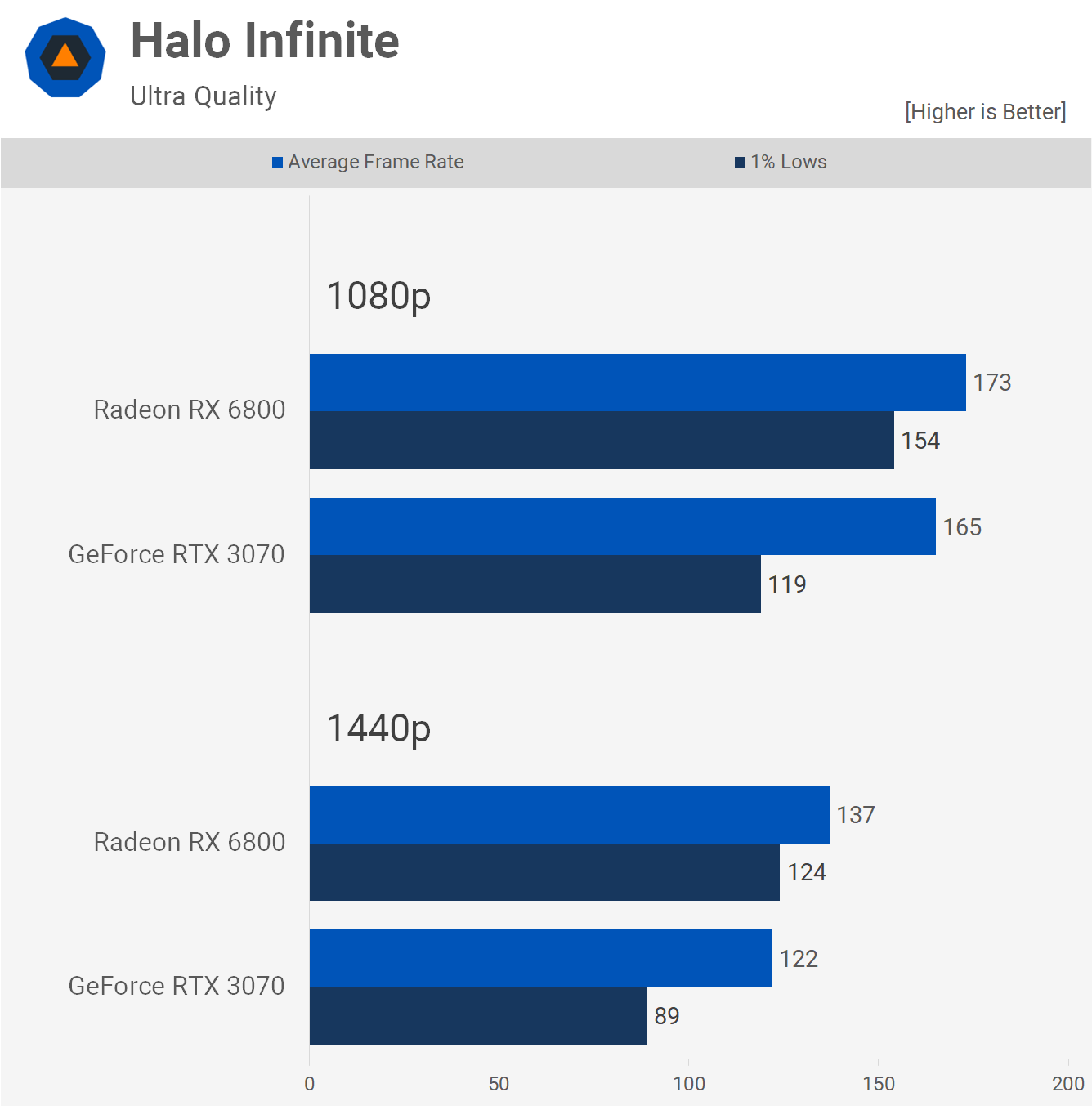

Halo Infinite is a game whose performance we've seen many complaints about, but we've got to say in our testing the RTX 3070 seemed to work pretty well.

Although the 1% lows are much lower than what we see from the RX 6800, performance appeared good and didn't suffer from noticeable stuttering. It's likely this title is right on the edge with VRAM usage on 8GB cards.

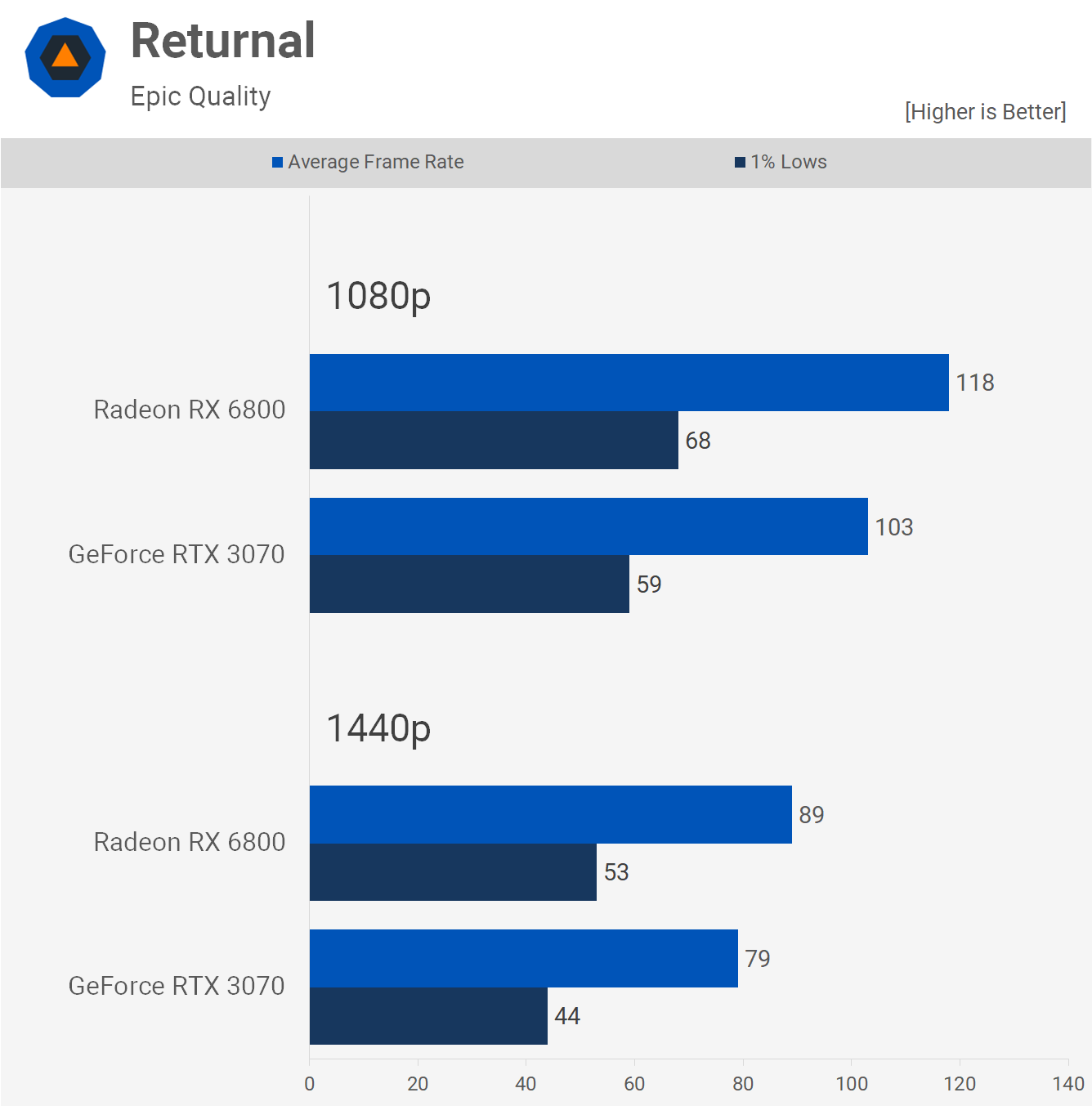

We haven't had time to get into Returnal yet, so we're just using the built-in benchmark for these results and that's generally something we try to avoid. But for now this will have to do. Here we can see using the Epic preset that the RX 6800 is ~14% faster than the RTX 3070, making them pretty neck and neck in terms of value.

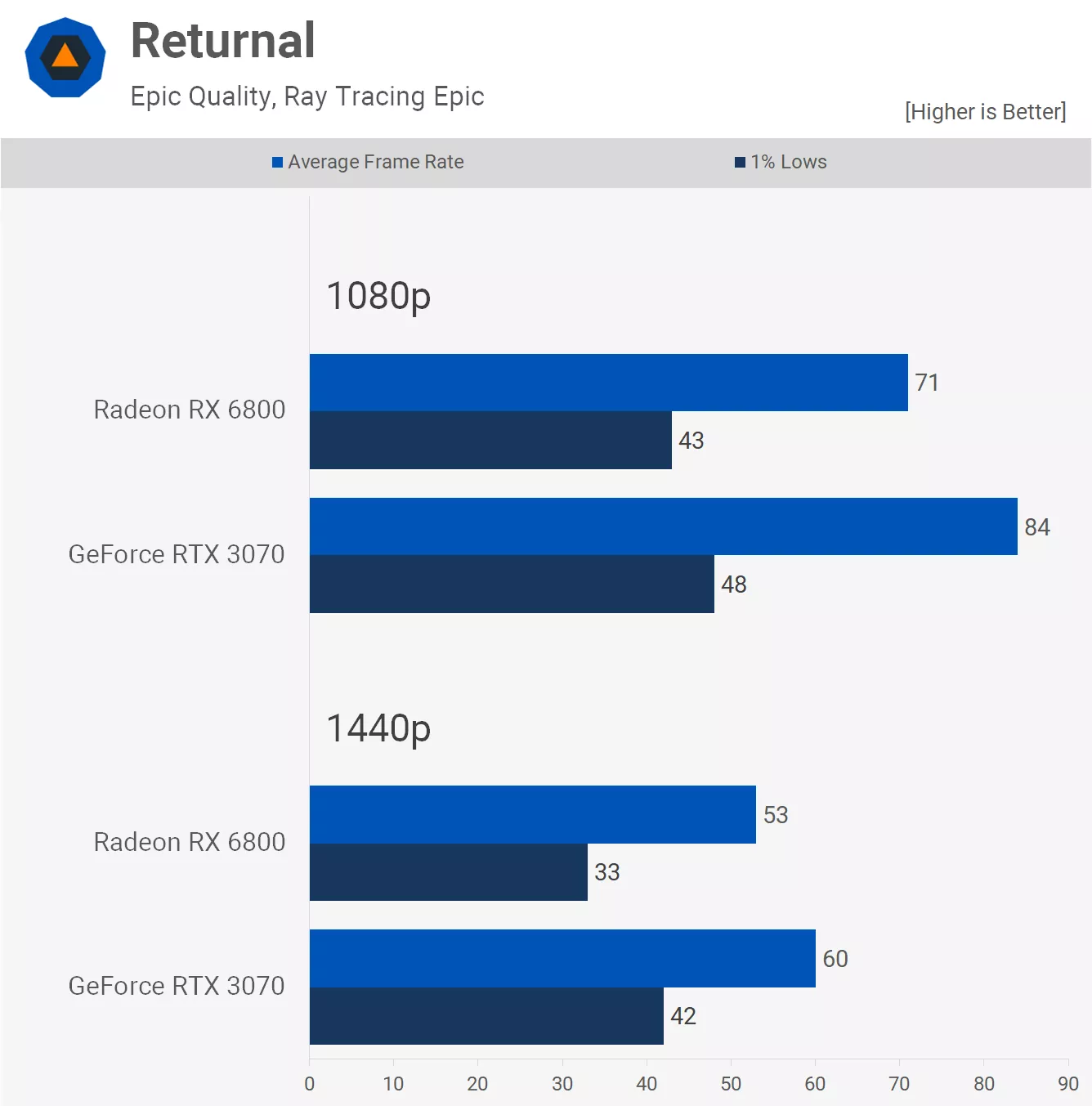

However, when we enable ray tracing the RTX 3070 overtakes the RX 6800, providing an additional 18% performance at 1080p and 13% at 1440p. So again, this is more what we'd typically expect to see when comparing the ray tracing performance of the RTX 3070 and Radeon 6800.

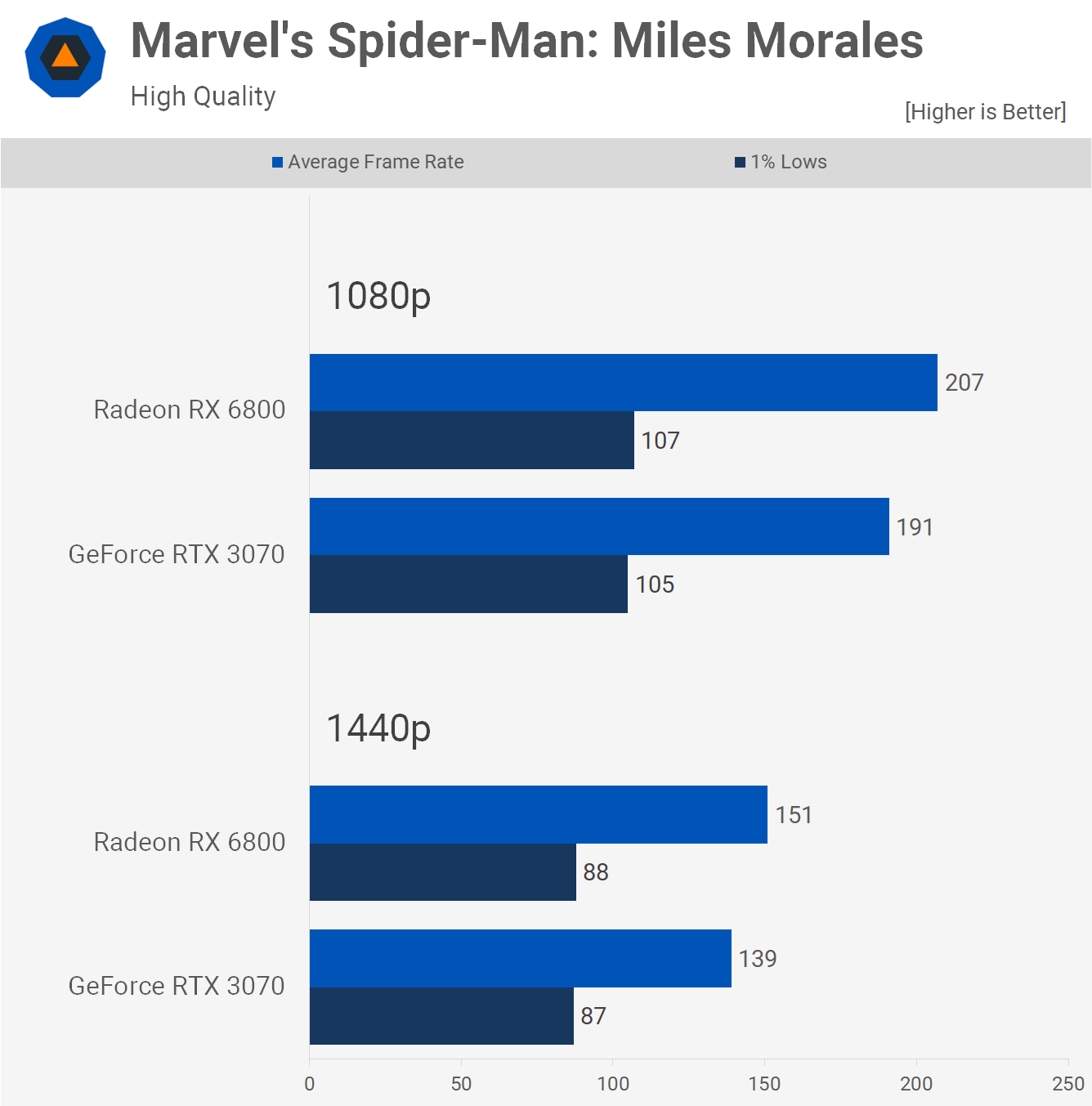

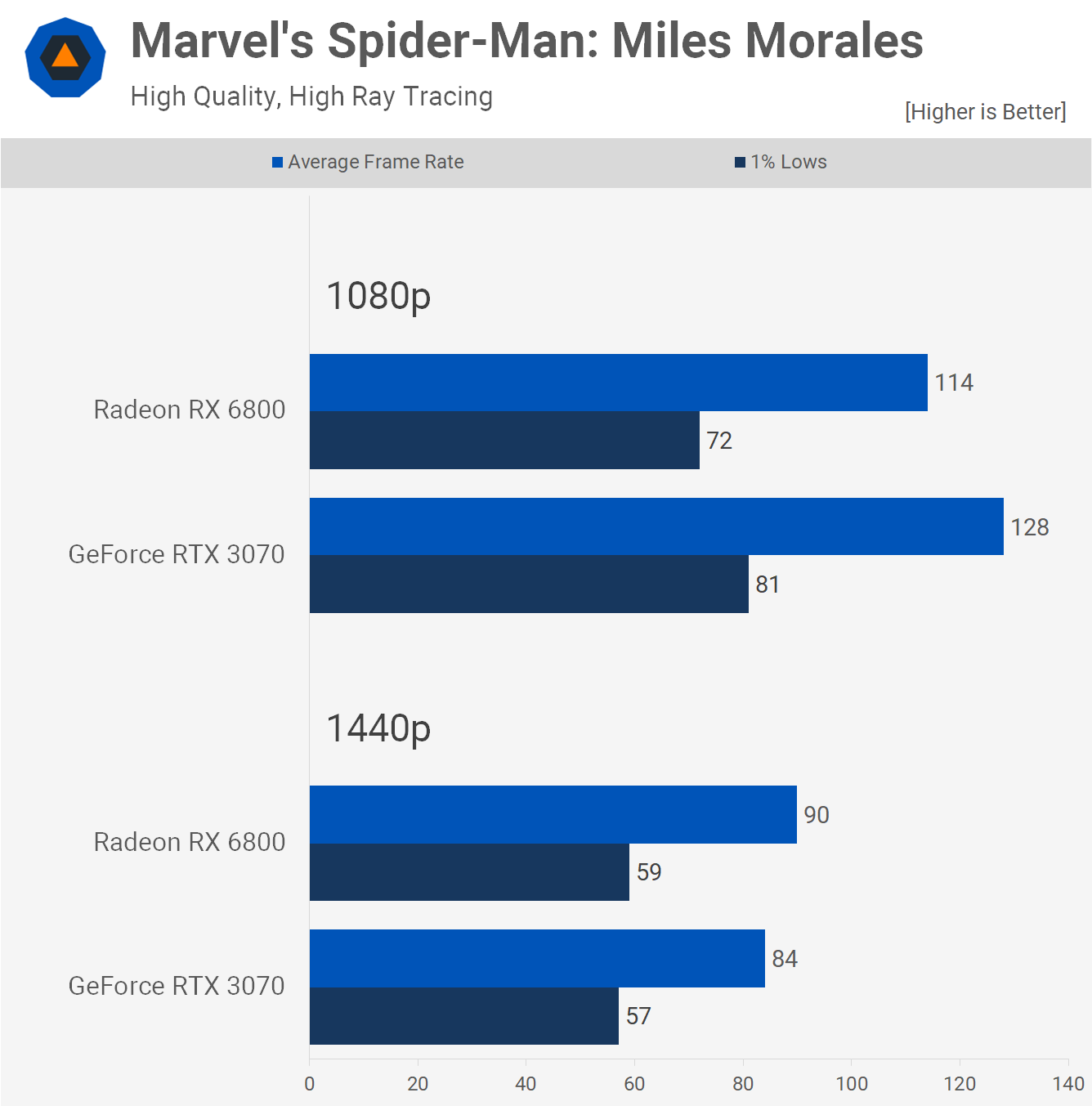

Spider-Man is probably the best example of a well optimized game on the GPU front, and here we see even with ray tracing enabled on its second highest level, along with the second highest visual quality preset, both GPUs were able to render well over 100 fps at 1080p, and we're looking at well over 60 fps at 1440p. Interestingly, the RTX 3070 was 12% faster at 1080p but 7% slower at 1440p, but overall both GPUs delivered excellent performance in this title.

Definitive Proof

After today's testing we believe this is definitive proof that 8GB of VRAM is no longer sufficient for high-end gaming. To be clear, we're not talking about a single outlier in The Last of Us Part 1. There are a number of new AAA titles that will break 8GB graphics cards, and you can expect many more before year's end, and of course, into the future.

That doesn't mean all 8GB graphics cards are now useless or obsolete, or that all graphics cards released in the last few years should have had more than 8GB of VRAM. Rather, we're seeing clear evidence that 8GB graphics cards are shifting towards the low-end, and therefore you can now consider 8GB of VRAM entry-level.

This leads to a less-than-ideal situation for recently released products with just 8GB of VRAM. This is particularly unfortunate for Nvidia's GeForce 30 series generation which saw the release of the $500 RTX 3070 and $600 RTX 3070 Ti with just 8GB of VRAM.

The $400 RTX 3060 Ti is in the same boat, and we expect the $330 RTX 3060 with its 12GB buffer to age much better. The only 8GB Ampere-based GPU should have been the RTX 3050, which is low-end.

It's the same story with AMD's Radeon RX 6650 XT and 6600 XT, though fortunately for most of its life the 6650 XT has been selling well below the $400 MSRP, and has been retailing for less than $300 for some time now. Meanwhile, the Radeon RX 6600 which we've been recommending ever since it was discounted to just over $200, probably gets away with having just 8GB of VRAM as it's a mainstream offering, not high-end.

Even today you can expect to pay ~$500 for an RTX 3070 and that's a ludicrous amount of money for an already outdated product. For the same money you can buy the Radeon RX 6800, and while we're not enthused about that product at $500, it's a significantly better buy than the 3070.

To repeat ourselves, graphics cards with 8GB of VRAM are still very usable, but 8GB is now an entry-level capacity, especially when it comes to playing the latest and greatest AAA titles.

For multiplayer gamers, the RTX 3070 and other high-end 8GB graphics cards will continue to deliver the goods, as games like Warzone, Apex Legends, Fortnite and so on are typically played with competitive quality settings which heavily reduce VRAM consumption.

As for the Radeon RX 6800 vs. GeForce RTX 3070 battle, it's becoming increasingly clear that we were right to caution readers about spending so much money on a graphics card with just 8GB of VRAM. And we'll be honest, we didn't think we would end up being quite this right, nor did we expect that we would be faced with a situation where the Radeon 6800 was delivering better ray tracing performance than the RTX 3070. That's pretty crazy.

It's also somewhat disappointing when you realize that in just about every ray tracing-enabled scenario we covered in this review, had the RTX 3070 been paired with 16GB of VRAM, it would have been faster than the Radeon.

But the goal here is not a big long "I told you so, r/Nvidia," rather it's an attempt to raise awareness, educate gamers about what's going on, and encourage them to demand more from Nvidia and AMD.

Moving forward, 8GB of VRAM should be reserved for sub-$200 products, or ideally sub-$100 graphics cards. The 12GB VRAM mark should become the new entry point, 16GB at the mid range, and 24GB beyond that. This is what gamers should now be demanding from GPU makers.