After years of waiting, the day has finally arrived: Cyberpunk 2077 is here. CD Projekt Red's long-awaited title is easily the biggest release of the year. The game was announced back in 2012, and since then multiple teasers and trailers have kept the hype growing. So, has the wait been worth it? Based on the Steam reviews alone, we'd say the answer is yes.

Cyberpunk has received mostly positive reviews in massive numbers, and with over one million concurrent players on Steam in the first 24 hours, it has already smashed Fallout 4's record for the most players in a single-player title. When preloads went live, Steam saw its biggest bandwidth peak since PUBG, reaching 23.5 terabytes per second.

So Cyberpunk 2077 is massively popular and appears to be a huge success, both in terms of sales and the quality of the game. We'll therefore be dedicating a fair amount of time to checking this one out. This first feature will cover Cyberpunk 2077 performance with all current and previous generation GPUs from AMD and Nvidia.

Then in the coming days, we'll be providing a detailed look at ray tracing and DLSS performance, as well as some in-game quality comparisons. A possible third article will look at older GPU generations and CPU benchmarks as well. So plenty more content to come.

Follow up: Cyberpunk 2077 DLSS + Ray Tracing Benchmark

For this GPU benchmark round we're using version 1.03 of the game, the launch version with the day-one patch applied, which we waited for before we began testing. The game does not feature a built-in benchmark, which is fine as we typically prefer to measure in-game performance. We're testing in 'Little China' using the 'Streetkid' lifepath. This means testing takes place in the most demanding section of the game, in the city while it's raining.

As for quality settings, we've tested using both the 'medium' and 'ultra' quality presets at 1080p, 1440p and 4K. In total, we tested 25 graphics cards comprising current and previous generation GPUs. Do note the game was reset when changing quality presets. So in order to create this article we've made nearly 500 benchmark runs, each lasting 60 seconds. Forget about sleep... "All work and no play makes Steve a dull boy."
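To put the size of that test matrix in perspective, here's a rough sketch of the arithmetic in Python. The card, preset and resolution counts come from the text above. The variable names are ours, and the total of 500 is just the rounded "nearly 500" figure, so treat the outputs as approximate upper bounds rather than exact numbers.

```python
# Rough arithmetic behind the test matrix described above.
cards = 25                                  # graphics cards tested
presets = ["medium", "ultra"]               # quality presets
resolutions = ["1080p", "1440p", "4K"]      # test resolutions

# Unique card / preset / resolution combinations.
configs = cards * len(presets) * len(resolutions)

total_runs = 500     # "nearly 500" in the text, so this is an upper bound
run_seconds = 60     # length of each benchmark pass

runs_per_config = total_runs / configs
capture_hours = total_runs * run_seconds / 3600

print(f"{configs} configurations")
print(f"about {runs_per_config:.1f} runs per configuration")
print(f"up to {capture_hours:.1f} hours of pure capture time")
```

That works out to roughly three passes per configuration, before counting game restarts, driver swaps and card changes.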

Cyberpunk 2077 has been a pleasure to test with, though. In all of those runs, the game crashed to desktop just a single time, which is highly stable for a new video game. It's also fast to load, the menu system is well-conceived, and switching between resolutions is a breeze. If only all games were this well polished.

The test system was configured with a Ryzen 9 5950X with 32GB of DDR4 memory on the MSI X570 Unify motherboard, and of course we've used the latest display drivers available from AMD and Nvidia.

During the week prior to the game's release, we ran a poll asking what minimum sustained frame rate you'd accept in a game like Cyberpunk 2077 considering the stunning visuals. An overwhelming number of you answered 60 fps or better. Over 60K readers/viewers responded to the poll: only 7% voted that 45 fps was acceptable, and a mere 4% were willing to settle for a console-like 30 fps experience. With that in mind, let's get into the results.

Benchmarks

Ultra Settings

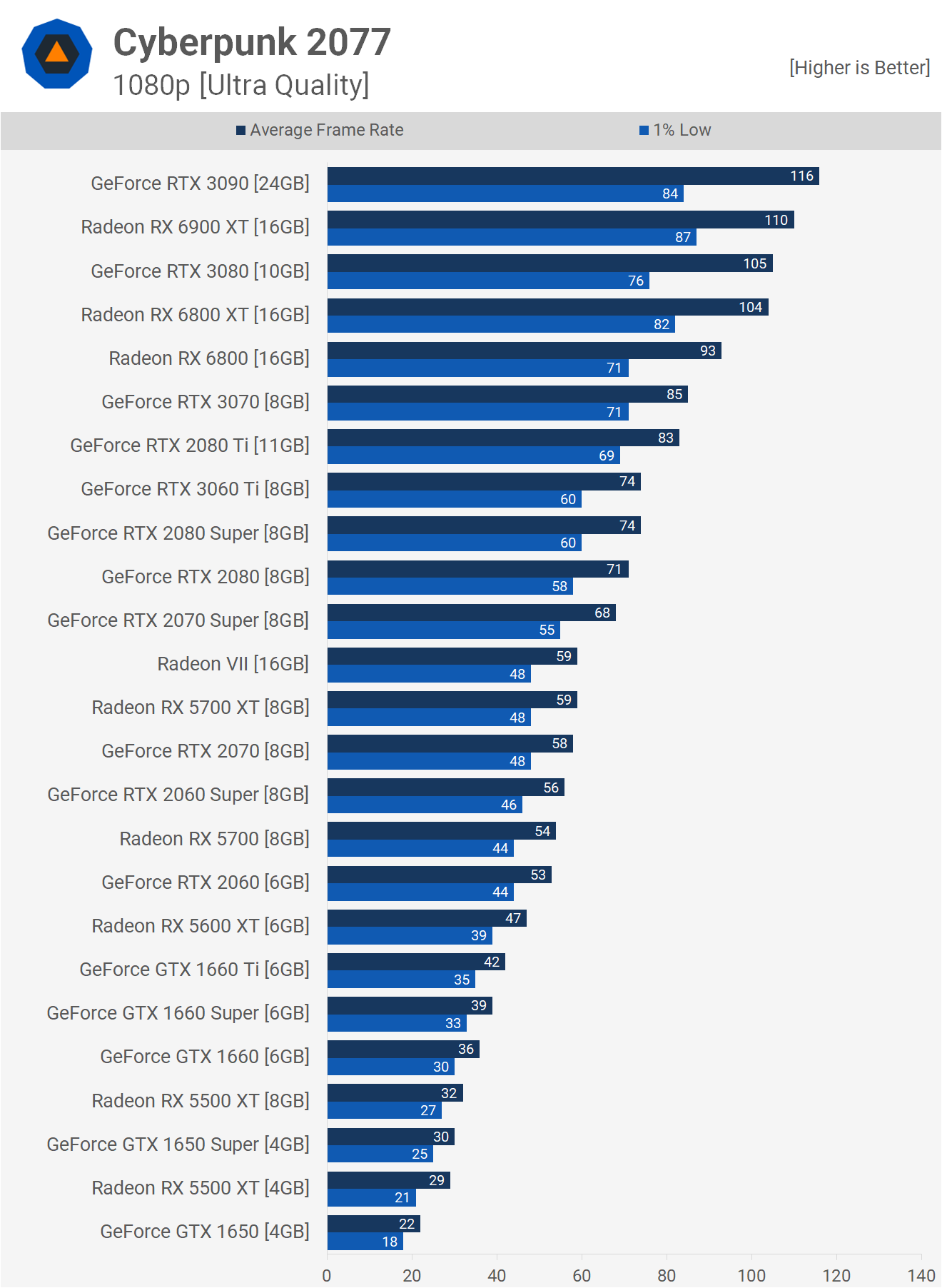

Starting with the 1080p Ultra results, we see that for the desired 60 fps experience you'll require a Radeon VII, RX 5700 XT or a GeForce RTX 2070, and even then we're talking about almost 60 fps on average with 1% lows below 50 fps.
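For readers unfamiliar with the two metrics quoted throughout, here's a minimal Python sketch of how an average frame rate and a 1% low can be derived from a list of frame times. This is one common definition (the fps implied by the slowest 1% of frames), not necessarily the exact method used for these charts, and the function names and sample data are made up for illustration.

```python
def average_fps(frame_times_ms):
    """Average fps over a run: frames rendered divided by elapsed seconds."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def one_percent_low_fps(frame_times_ms):
    """Fps implied by the mean frame time of the slowest 1% of frames.

    Assumption: other tools define "1% low" differently, for example
    as the 99th percentile frame time.
    """
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)   # at least one frame
    worst = slowest_first[:count]
    return 1000.0 / (sum(worst) / len(worst))


# Made-up capture: 990 frames at 16 ms plus 10 slow frames at 25 ms.
sample = [16.0] * 990 + [25.0] * 10
print(round(average_fps(sample), 1))
print(round(one_percent_low_fps(sample), 1))
```

The point of the second number is that a handful of slow frames barely moves the average but shows up clearly in the 1% low, which is why a card can average close to 60 fps while still dipping below 50.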

For a more consistent 60 fps experience you'll want an RTX 2080, 2080 Super, 3060 Ti or the new Radeon RX 6800 from AMD.

If you're willing to settle for 40 to 50 fps, anything from the GTX 1660 Ti up to the RX 5700 will work. Obviously Cyberpunk 2077 is a very demanding game as it's a visually impressive open-world game, so the numbers you see here aren't unexpected, and of course, we'll be looking at performance using the medium quality preset soon.

Before we move on to the 1440p test results, it's interesting to note that even though Cyberpunk 2077 is an Nvidia-sponsored title and has been highly optimized to take advantage of Nvidia's hardware (at least the PC version has), the game runs very well on Radeon GPUs at launch, which is great. The Radeon RX 5700 XT, for example, is able to edge out the RTX 2070 and 2060 Super. While it has been matching the 2070 Super in most new AAA titles, the fact that it still beats its main competitor, the 2060 Super, is a good result.

The RX 6800 also beats the RTX 3070 by a 9% margin, and although it does cost 16% more at MSRP, that's still a good result in an Nvidia-sponsored title. We're also seeing a situation at 1080p where the 6800 XT beats the RTX 3080.
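As a quick value check on those two percentages, here's a small Python sketch that turns a performance lead and a price premium into relative performance per dollar. The helper name is ours, and it only uses the 9% and 16% figures quoted above, so it says nothing about real-world street pricing.

```python
def relative_value(perf_gain, price_premium):
    """Performance per dollar of card A relative to card B.

    Both arguments are fractions, e.g. 0.09 for a 9% lead.
    A result below 1.0 means card A delivers less performance per dollar.
    """
    return (1 + perf_gain) / (1 + price_premium)


# RX 6800 vs RTX 3070: 9% faster, 16% more expensive at MSRP.
rx6800_vs_rtx3070 = relative_value(perf_gain=0.09, price_premium=0.16)
print(f"{rx6800_vs_rtx3070:.2f}")
```

On those numbers the RX 6800 lands at roughly 0.94, or about 6% less performance per dollar than the RTX 3070 in this test at MSRP.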

Now for those of you hoping to play Cyberpunk 2077 at 1440p with the visual quality settings cranked up, while also achieving 60 fps, you'll want to make sure you have at least a Radeon RX 6800 and in today's market that's not an easy product to find, as is the case for all the GPUs towards the top of this graph.

Here the Radeon 6800 XT slips behind the RTX 3080, though it was just 5% slower. The new 6900 XT was able to match the RTX 3080. Then the super expensive RTX 3090 was 11% faster than the 3080, a pretty typical performance uplift at this resolution, and that made Nvidia's flagship graphics card good for 81 fps on average.

For those of you comfortable with a 40-50 fps experience, the new GeForce RTX 3060 Ti will suffice, or the previous generation flagship RTX 2080 Ti. From AMD you'll need a Radeon VII for just 40 fps on average, while the 5700 XT dropped down to 36 fps.

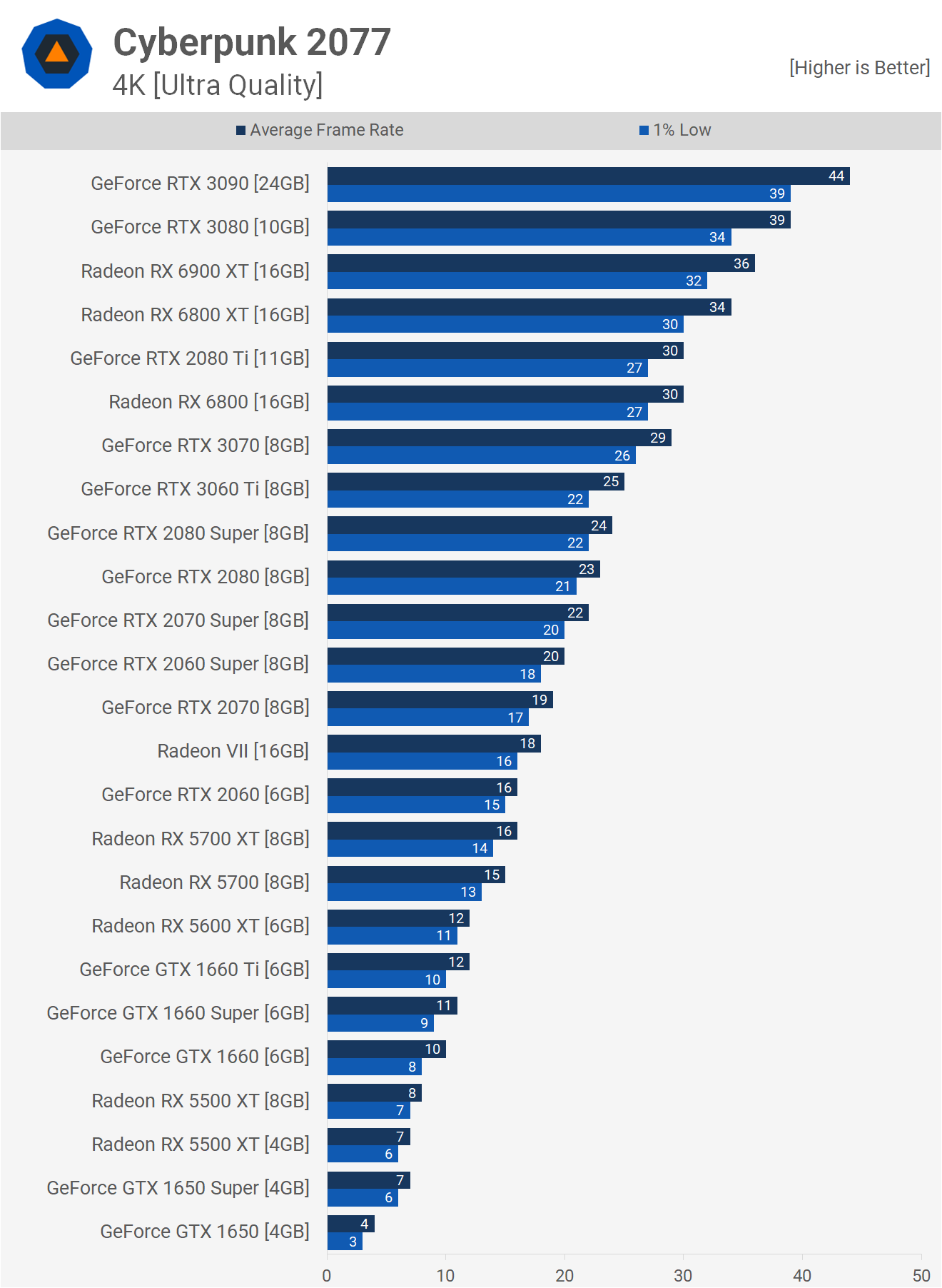

At 4K, a 60 fps experience using ultra quality settings simply isn't possible right now, as even the RTX 3090 was good for just 44 fps on average. This is how we played the game initially and while it did look visually stunning, aiming wasn't a fun experience. For us this frame rate isn't really playable, or at least not to a degree where we find ourselves enjoying the game. Of course, GeForce RTX owners will want to enable DLSS for a (much) better experience, but as we noted, we'll be covering that in more detail in our follow-up article.

AMD has yet to release their DLSS-like technology, so short of downscaling with image sharpening, there's no way to get that 4K image quality with playable frame rates using a Radeon GPU.

Ultra vs. Medium Quality

Before we move into the medium quality testing, we should mention that comparing image quality between the medium and ultra presets at 4K using the RTX 3090, we honestly couldn't tell the difference in some areas of the game. In others, ultra does offer slightly better shadow quality and lighting effects, but it's subtle and you have to be looking for those differences.

The section of the video shown here belongs to the benchmark pass, as this is obviously highly relevant to the numbers being shown. In short though, you're looking at around a 40% performance uplift with the medium preset for a minor downgrade in image quality, at least in our opinion.

Medium Settings

Switching to the medium quality preset at 1080p, we see that for 60 fps you can get away with a GTX 1660 Ti or Radeon RX 5600 XT, which is a surprisingly low requirement, though we are only at 1080p. Still, under these conditions you can get over 40 fps with a GTX 1650 Super or 5500 XT, both of which were underwhelming previous-generation budget GPUs.

The 5700 XT performed better relative to the GeForce competition using these dialed down quality settings, sitting directly between the 2060 Super and 2070 Super.

The newer RTX 3060 Ti and RX 6800 were faster again as you'd expect, pushing well over 100 fps and getting up around 120-125 fps. Personally, we find 90 fps ideal, as input feels good at that frame rate, so the 5700 XT works well. The point is, though, that with these dialed-down but still visually appealing quality settings, the game plays very well at 1080p on rather modest hardware.

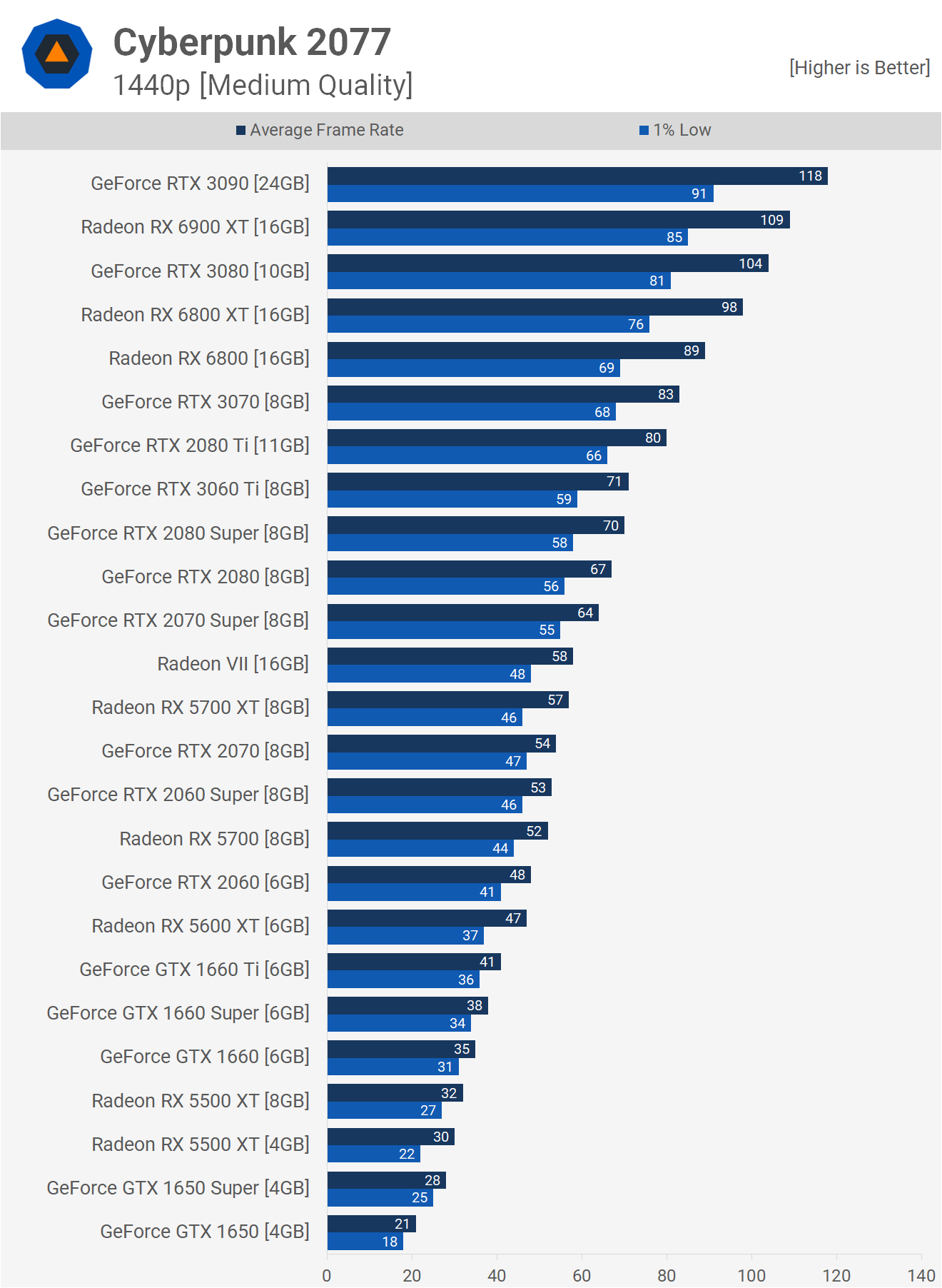

Moving to 1440p means gamers will require at least an RTX 2070 Super for 60 fps with the medium quality settings. The Radeon VII and 5700 XT fall just short, though they're passable for someone after 60 fps on average. The new RTX 3060 Ti offers a much better experience, though the gains over the previous-gen $400 part, the 2060 Super, are disappointing.

In our RTX 3060 Ti review we found the GPU to be 50% faster than the 2060 Super on average, yet here it's just 34% faster and that would have been one of the weakest margins we saw in our review covering 18 new and popular titles. The RX 6800 does a little better relative to the card it's replacing, the 5700 XT, offering 56% more performance and in our day one review featuring 18 games, it was on average 52% faster.

Finally at 4K, it's possible to push up over 60 fps and even achieve close to a 60 fps 1% low when using an RTX 3090. The RTX 3080 was also good for 60 fps on average, as was the Radeon RX 6900 XT, while the 6800 XT managed just over 50 fps.

For the RTX 3070 and slower GPUs, we'd recommend dialing the resolution back to 1440p for a much smoother gaming experience.

Preset Scaling

Here's a look at preset scaling performance with the 6800 XT and RTX 3080 at 1440p. Both saw a 42% performance uplift when going from ultra to medium. As we just saw when looking at a wide range of GPUs, that kind of performance uplift makes a huge difference to the lower-end models.
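To show what a 42% uplift means in frame-rate terms, here's a short Python sketch. The helper names are ours. Applying the 1440p scaling figure to the RTX 3090's 44 fps 4K ultra average is purely an illustrative assumption, since scaling can differ between resolutions and cards.

```python
def uplift_percent(baseline_fps, new_fps):
    """Percentage gain of new_fps over baseline_fps."""
    return (new_fps / baseline_fps - 1) * 100


def apply_uplift(baseline_fps, percent):
    """Frame rate after applying a percentage uplift to a baseline."""
    return baseline_fps * (1 + percent / 100)


# 44 fps is the RTX 3090's 4K ultra average quoted earlier; 42% is the
# 1440p ultra-to-medium uplift. Combining them is an estimate, not a result.
estimate = apply_uplift(44, 42)
print(f"{estimate:.1f} fps")
```

The estimate lands a little above 60 fps, which lines up with the medium preset pushing the RTX 3090 past 60 fps at 4K.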

The visual difference between high and ultra is minimal, at least in the sections of the game we've compared. Medium seems like a great compromise though, and then you have low, which is noticeably worse than medium. The game still looks good on the low setting however, so those of you with older or budget GPUs are in for a real treat even at lower quality settings. This is something we may end up benchmarking with older GPUs later on if there's enough interest.

What We Learned

That's how Cyberpunk 2077 performs on modern GPUs, and we've gotta say given how visually breathtaking the game is, the performance is justified for an open-world title. If you're willing to compromise ever so slightly on image quality with the medium preset, then the game becomes very playable on surprisingly mid-range hardware like the Radeon RX 5700 XT or RTX 2070. Granted, these are still $400 GPUs, but the 5700 XT is very reasonable given what we're seeing here.

With the new wave of GPUs hitting shelves, of course, it's the RTX 3060 Ti you want to buy at this price point, which achieves a comfortable 71 fps at 1440p with the medium quality settings. Again, remember we'll look at how the 3060 Ti handles ray tracing with and without DLSS in our follow up benchmark review, along with many more GPUs.

We're also keen to look at CPU performance as the game appears to be very demanding in that regard. For example, we saw up to 40% utilization with the 16-core Ryzen 9 5950X, and out of interest we quickly tried the 6-core Ryzen 5 3600 as that's a very popular processor. We're happy to report gameplay was still smooth, though utilization was high, with physical core utilization up around 80%.

RAM usage isn't extreme in Cyberpunk 2077, and when not constrained by VRAM, you're looking at around 7 to 8GB of system memory used by the game, which is reasonable and it means you should be fine for a smooth experience with 16GB of RAM. The section of the game we tested saw VRAM usage peak at 6.5GB at 1080p, 7GB at 1440p, and 8GB at 4K, but expect those figures to increase with ray tracing enabled.
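If you want to sanity check your own card against those VRAM peaks, a lookup like the following Python sketch does the job. The table holds only the three figures quoted above, the function name is ours, and it's a rough guide at best: the peaks come from one section of the game without ray tracing, and exceeding them doesn't automatically make the game unplayable.

```python
# Peak VRAM use observed in the tested section, in GB (figures from the text).
PEAK_VRAM_GB = {"1080p": 6.5, "1440p": 7.0, "4K": 8.0}


def resolutions_within_vram(card_vram_gb):
    """Resolutions whose observed peak fits in the given amount of VRAM."""
    return [res for res, peak in PEAK_VRAM_GB.items() if peak <= card_vram_gb]


print(resolutions_within_vram(6))   # a 6GB card
print(resolutions_within_vram(8))   # an 8GB card
```

By this measure an 8GB card covers all three resolutions, while a 6GB card falls just short of the observed peak even at 1080p.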

Overall, Cyberpunk 2077 is a great looking next generation game that appears to scale very well to suit a wide range of hardware. If you're not satisfied with the performance your old GPU is delivering, then hopefully this article has been helpful in steering you towards your next upgrade, presumably some time next year when we'll actually be able to buy graphics cards again.