Following up on the mini-test we ran for PlayerUnknown's Battlegrounds back in June, we thought it was about time to check where the game's performance stands after countless updates, not to mention all the hardware released along the way.

At the time, we compared graphics card performance using various presets and concluded that the best-value combo for 60fps at 1080p was the Ryzen 5 1400 and GTX 1060. Since then, updates to the game have supposedly addressed its poor optimization, including a patch about four months ago that was said to improve CPU utilization by allowing the game to use six or more cores.

That being the case, we thought it would be interesting to focus on CPU performance this time. Today's testing covers all of the 8th-gen Intel Core series CPUs, all of the Ryzen CPUs and a few 7th-gen Core models, giving us results for 16 different processors at 1080p using the very low, medium and ultra quality presets.

The chips were paired with a GeForce GTX 1080 Ti running the 388.43 driver, and for those interested we've also included CPU utilization figures for all 16 CPUs. All unlocked Intel CPUs and all Ryzen CPUs were tested with DDR4-3200 CL14 memory, while the locked Intel CPUs used DDR4-2400 CL14 memory. So, for example, the Core i3-8350K was tested with DDR4-3200 but the Core i3-8100 with DDR4-2400.

For testing, we walked through the town of Pochinki for 30 seconds, which is more than enough time to gather the data we need. We cut the pass from the normal 60 seconds to 30 seconds to reduce how often we were killed before completing it: Pochinki is a high-loot area, so it's high risk but high reward for players seeking good gear.

The test starts and ends at the exact same point every time and the results are based on an average of three runs. Let's check them out...
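For readers who want to produce this kind of result from their own frame-time logs, here is a minimal Python sketch that turns each pass into an average frame rate and a 1% low, then averages the three passes. It is an illustration under stated assumptions, not the tooling behind these charts: the function names are hypothetical, and the 1% low is assumed to be the average frame rate of the slowest 1% of frames, one common definition that the article does not confirm.

```python
from statistics import mean

# ASSUMPTION: "1% low" = average fps of the slowest 1% of frames.
# The article does not state which definition its tooling used.

def pass_metrics(frame_times_ms: list[float]) -> tuple[float, float]:
    """Return (average fps, 1% low fps) for one benchmark pass."""
    total_s = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / total_s  # frames divided by elapsed time
    # Take the slowest 1% of frames (at least one frame).
    slowest = sorted(frame_times_ms, reverse=True)
    n = max(1, len(slowest) // 100)
    low_fps = 1000.0 / mean(slowest[:n])
    return avg_fps, low_fps

def averaged_result(passes: list[list[float]]) -> tuple[float, float]:
    """Average each metric across all passes (three in this article)."""
    metrics = [pass_metrics(p) for p in passes]
    return mean(m[0] for m in metrics), mean(m[1] for m in metrics)

if __name__ == "__main__":
    # Hypothetical frame times, not data from this article:
    # 99 frames at 10 ms plus one 20 ms hitch.
    run = [10.0] * 99 + [20.0]
    print(averaged_result([run, run, run]))  # about 99.0 fps average, 50.0 fps 1% low
```

Dividing frame count by elapsed time, rather than averaging per-frame fps values, keeps long frames properly weighted in the average.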

Benchmarks

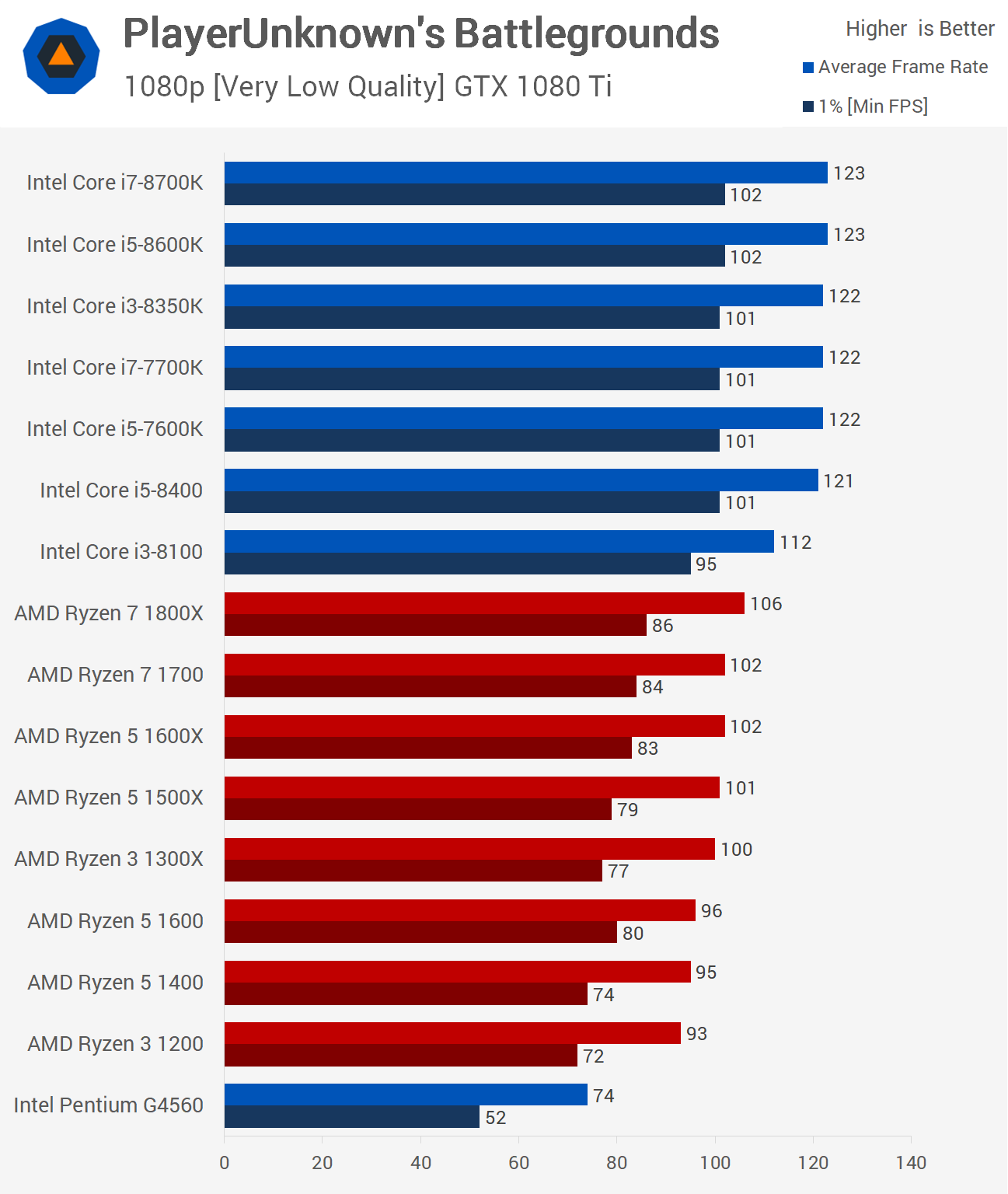

First up are the 'very low' quality results. With every visual quality setting at its lowest value, the GTX 1080 Ti should no longer be the performance-limiting component. Even so, we're clearly seeing a GPU bottleneck with the majority of the 7th and 8th-gen Core processors.

Even at these settings, the GTX 1080 Ti is only good for about 120fps on average, with dips to around 100fps.

Previously, when testing with the ultra quality preset, the 7700K and R5 1600 delivered the same performance. Here, however, the 7700K is 20% faster than the 1600X, as the Ryzen CPUs appear to struggle in comparison. Of course, with well over 60fps at all times the Ryzen CPUs still provided playable performance, but in a game that claims to support high core count CPUs, the results are disappointing.

Shockingly, the Ryzen 7 1800X was just 14% faster than the Ryzen 3 1200 in average frame rate and just 6% faster than the 1300X. This suggests that the game makes poor use of higher core count CPUs and prefers core frequency over core count.
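The "X% faster" comparisons are ratios of frame rates. The sketch below assumes the usual definition, (fps_a / fps_b - 1) * 100, and shows how two published gaps that share a CPU can be chained to estimate a third. The helper names are hypothetical, and because the published percentages are rounded, any chained figure is only approximate.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster A is than B, in percent: (A / B - 1) * 100."""
    return (fps_a / fps_b - 1.0) * 100.0

def implied_gap(a_over_c_pct: float, a_over_b_pct: float) -> float:
    """If A is x% faster than C and y% faster than B, return how much faster B is than C."""
    return ((1 + a_over_c_pct / 100) / (1 + a_over_b_pct / 100) - 1) * 100

# A = 1800X, B = 1300X, C = 1200, using the percentages quoted in the text.
print(f"{implied_gap(14, 6):.1f}")   # 7.5 (very low preset)
print(f"{implied_gap(25, 16):.1f}")  # 7.8 (medium preset)
```

By this estimate, the 1300X led the 1200 by a similar margin at both presets.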

It appears a quad-core is sufficient, and it doesn't necessarily need HT or SMT support. However, a dual-core with HT isn't enough for optimal performance, as the Pentium G4560 shows: it was considerably slower than any other CPU tested. It was still playable, though, and would make a good pairing with a budget-minded $100 graphics card.

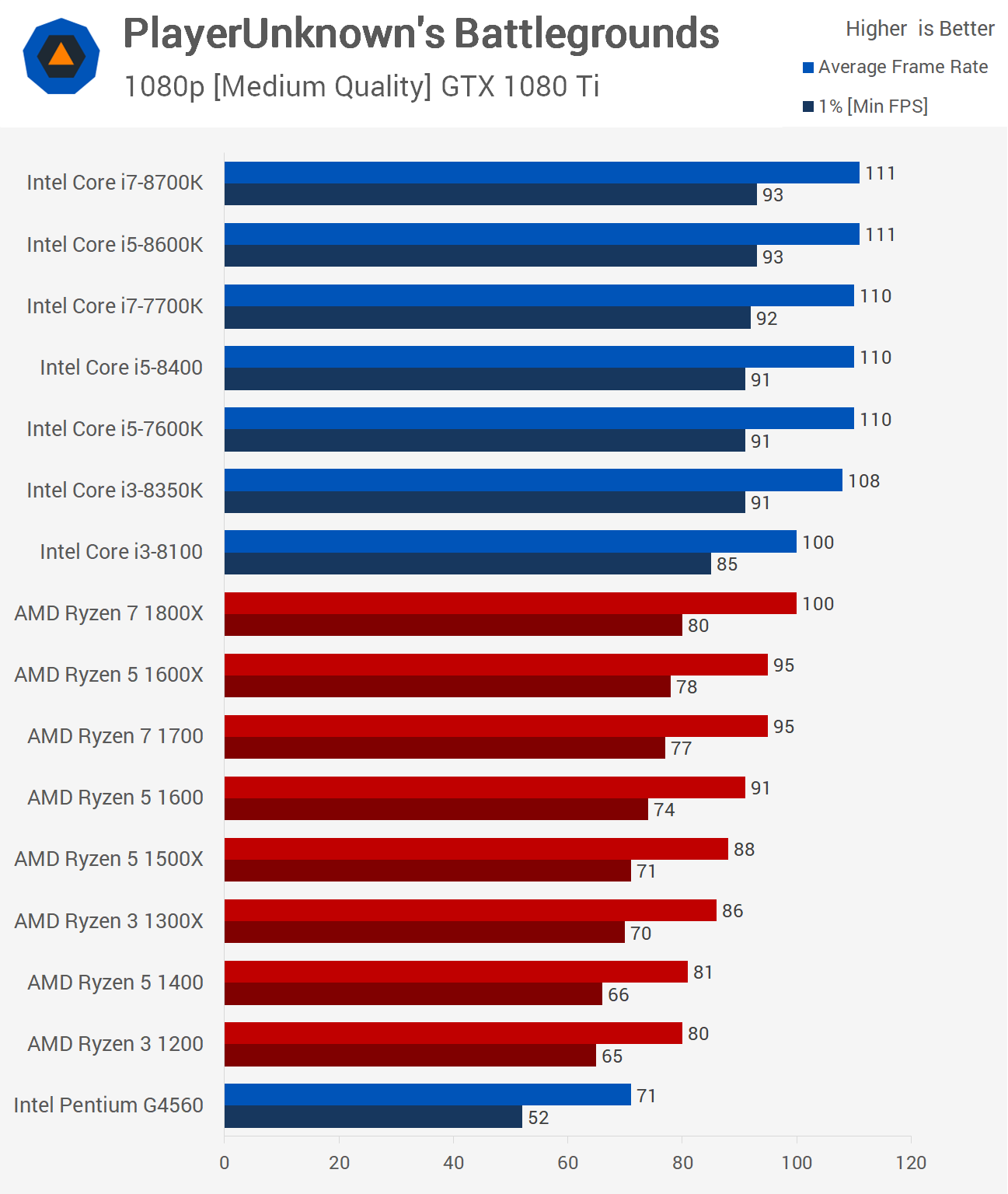

Stepping up to the medium quality preset reduces the GTX 1080 Ti's performance by around 10% with the fastest CPUs tested.

There's a little reshuffling among the Ryzen CPUs, and the models with more cores now fare better than they did at the very low preset. The 1800X is 25% faster than the R3 1200 and 16% faster than the 1300X. So the medium quality settings appear to place more load on the CPU, though this wasn't apparent when monitoring CPU utilization, as the overall usage figures were much the same.

Again, most of the Intel CPUs hit the limits of the GTX 1080 Ti, so it's likely the 8 and 12-thread models could go faster still.

Finally we have the ultra quality preset results, and here we see very little change from medium. For the most part a few frames are dropped, though the Intel quad-cores are the biggest losers. The 1% low results for the Core i3-8100 and 8350K dropped by around 15%, whereas the Ryzen 5 1500X and Ryzen 3 1300X, for example, were just 8% slower.

Quality Presets

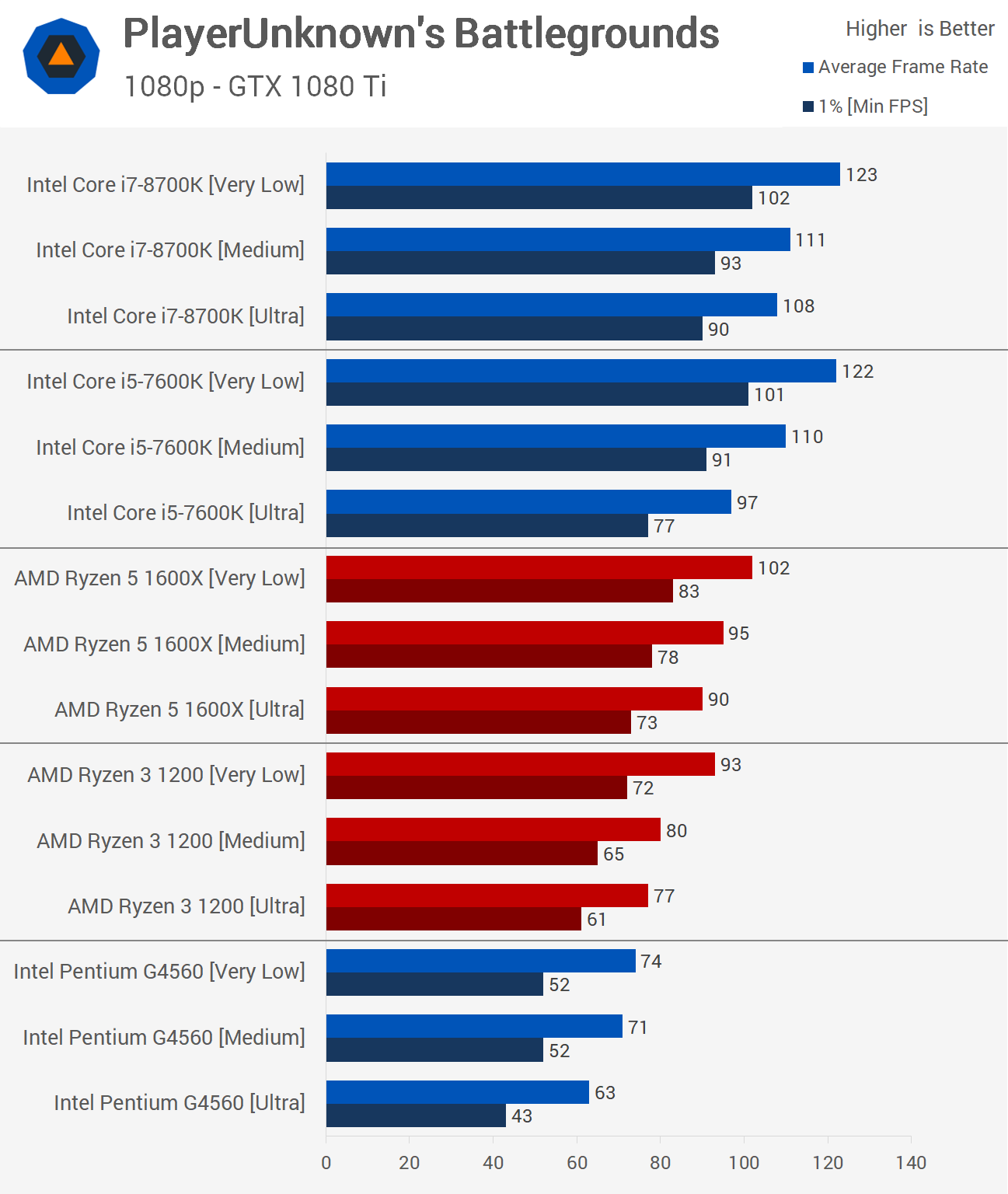

So far the results seem somewhat all over the place, which is something we often see with poorly optimized games. This graph gives us a better look at what's going on. The Core i7-8700K drops 10% from very low to medium and then just 3% from medium to ultra.

The Core i5-7600K, on the other hand, falls 10% from very low to medium and then a further 12% from medium to ultra. That's interesting: the ultra quality preset certainly hurts the quad-core more, but then the Ryzen 3 1200 results are more in line with what we saw from the 8700K, which is confusing to say the least. The 1600X, meanwhile, shows fairly consistent scaling across the three quality presets.
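Because each percentage is measured against the preceding preset, the drops compound rather than add. A small sketch, using a hypothetical helper, of the cumulative drop from very low to ultra based on the percentages quoted above (subject to rounding in those figures):

```python
from math import prod

def total_drop(step_drops_pct: list[float]) -> float:
    """Cumulative percentage drop after applying each step's drop in sequence."""
    remaining = prod(1 - d / 100 for d in step_drops_pct)  # fraction of fps left
    return (1 - remaining) * 100

print(f"8700K: {total_drop([10, 3]):.1f}%")   # 8700K: 12.7%
print(f"7600K: {total_drop([10, 12]):.1f}%")  # 7600K: 20.8%
```

So, going by these rounded figures, the quad-core loses roughly a fifth of its very low frame rate at ultra, versus about an eighth for the 8700K.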

The Pentium G4560 is different again, showing similar results with the very low and medium presets but then dropping quite a bit with the ultra preset. Overall, the Core i5-7600K and Ryzen 5 1600X are the only CPUs to show consistent scaling.

CPU Utilization

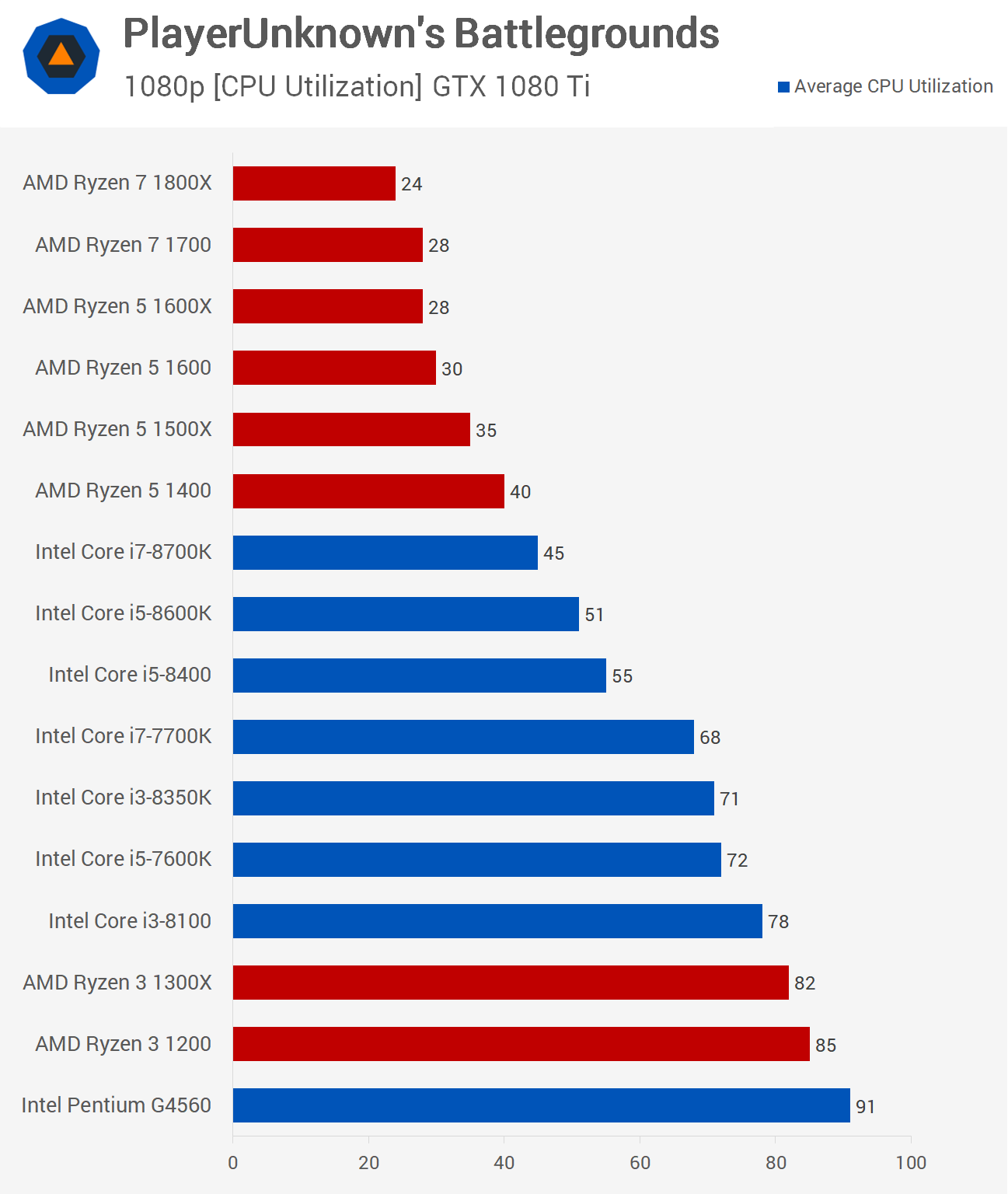

Yet more interesting results. This graph shows the average CPU utilization recorded over the 30-second pass, not the peak. The G4560, for example, at times hit 100% but also dropped to around 80%, giving an average of 91% for the entire test.
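To make the average-versus-peak distinction concrete, here is a minimal sketch that summarizes utilization samples logged at a fixed interval during one pass. The samples are hypothetical and the sampling scheme is an assumption; the article doesn't say how often its tool logged utilization.

```python
from statistics import mean

def summarize(samples_pct: list[float]) -> dict[str, float]:
    """Summarize CPU utilization samples logged at a fixed interval over one pass."""
    return {
        "average": mean(samples_pct),
        "peak": max(samples_pct),
        "minimum": min(samples_pct),
    }

# Hypothetical samples (not the article's data) that peak at 100% and dip to 80%.
samples = [100.0, 90.0, 80.0, 90.0, 100.0, 80.0]
print(summarize(samples))  # {'average': 90.0, 'peak': 100.0, 'minimum': 80.0}
```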

What's interesting to note here is how heavily under-utilized the Ryzen 5 and 7 series CPUs are. The Ryzen 5 1500X, for example, has four cores and eight threads that clock up to 3.7GHz depending on load. With fewer threads and lower clocks, you'd expect it to show the higher utilization figure. Yet the Core i7-8700K, which packs six cores, 12 threads and a minimum operating frequency of 3.7GHz, actually saw a higher utilization figure, and quite a bit higher at that.

AMD's own six-core/12-thread Ryzen 5 1600X saw an average utilization figure of just 28%, considerably lower than the 45% seen with the 8700K. You would expect a lower-clocked CPU with the same core count to show higher utilization, not drastically lower, so there is clearly an optimization issue with the Ryzen CPUs.
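The comparisons above rest on how an overall utilization figure relates to thread count. As an illustration only, here is a sketch assuming the overall figure is a plain mean across all logical processors (a common convention, but an assumption here, since the article doesn't name its monitoring tool). It shows why the same absolute load reads lower on a CPU with more threads, which is why a 12-thread chip would normally be expected to post the lower figure.

```python
def overall_utilization(per_thread_pct: list[float]) -> float:
    """Overall CPU figure as the mean across logical processors (assumed convention)."""
    return sum(per_thread_pct) / len(per_thread_pct)

# Same hypothetical load on both chips: three threads fully busy, the rest idle.
eight_threads = [100.0] * 3 + [0.0] * 5
twelve_threads = [100.0] * 3 + [0.0] * 9
print(overall_utilization(eight_threads))   # 37.5
print(overall_utilization(twelve_threads))  # 25.0
```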

Conclusions

It's been six months since we last tested PlayerUnknown's Battlegrounds, and it's safe to say the game still needs further optimization. Shoddy Ryzen support aside, even the Core i7-8700K and GTX 1080 Ti combo was very underwhelming: an average of 123fps at 1080p using the minimum quality settings is pathetic.

To put that result into context, the same combo pushes over 220fps in Battlefield 1 on medium settings, 200fps in Total War: Warhammer II, 240fps in F1 2017, 260fps in Rainbow Six Siege, 220fps in Call of Duty WWII, 250fps in DiRT 4, and the list goes on. And again, all of those games were running on medium rather than the minimum quality preset.

To anyone arguing that PUBG is an open-world shooter and therefore hammers the CPU: the very low quality results show this simply isn't the case. Quad-core and higher Intel chips hit a GPU bottleneck at just 120fps, while the Ryzen CPUs were heavily under-utilized.

One thing seems clear: if you're a massive PUBG fan or you're building a PC solely to play this title, something like the Core i3-8100 or 8350K will offer you the most bang for your buck. We'd normally never recommend the 8350K, but PUBG makes it a valid choice.

We've yet to test older CPUs, but chances are anything back to the Core i5-2500K will play the game just fine, provided a mild overclock is applied. It was surprising to find so little difference in frame rate between the very low and ultra quality settings when using a high-end GPU. Visually, though, there's a massive difference.