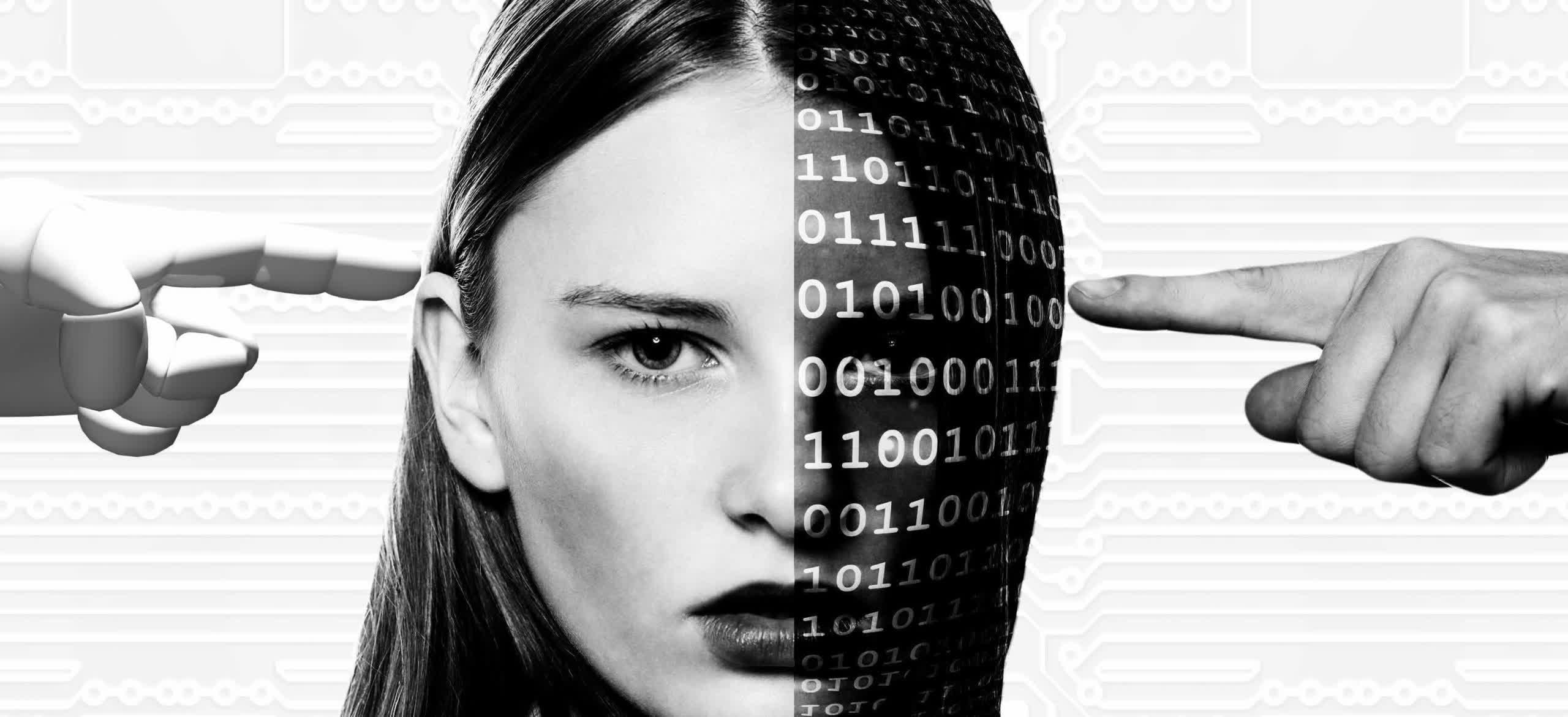

Better late than never: The AI boom has been anything but uncontroversial, with copyright lawsuits popping up everywhere. Experts have also raised concerns about the technology's rapid growth and its use in spreading disinformation. Congress has finally stepped in with a proposal to create a legal definition and framework addressing these concerns.

Senators just introduced new legislation called the "Content Origin Protection and Integrity from Edited and Deepfaked Media Act" (COPIED Act). The cringeworthy mouthful of a bill looks to outlaw the unethical use of AI-generated content and deepfake technology. It also aims to regulate the use of copyrighted material in training machine learning models.

Proposed and sponsored by Democrats Maria Cantwell of Washington and Martin Heinrich of New Mexico, along with Republican Marsha Blackburn of Tennessee, the legislation aims to establish enforceable transparency standards in AI development. Should the bill pass, the senators intend to task the National Institute of Standards and Technology (NIST) with developing sensible transparency guidelines.

"The bipartisan COPIED Act, I introduced with Senator Blackburn and Senator Heinrich, will provide much-needed transparency around AI-generated content," said Senator Cantwell.

COPIED Act via Senate Commerce Committee

The legislation also aims to curb the unauthorized use of data to train models. Training data acquisition currently sits in a legal gray area. Over the last several years, we have seen arguments and lawsuits over the legality of scraping public-facing online information. Clearview AI is likely the biggest abuser of this practice, but it's not alone. Others, including OpenAI, Microsoft, and Google, have struggled to walk the extremely fine line between legal and ethical when it comes to training their systems on copyrighted material.

"Artificial intelligence has given bad actors the ability to create deepfakes of every individual, including those in the creative community, to imitate their likeness without their consent and profit off of counterfeit content," said Senator Blackburn. "The COPIED Act takes an important step to better defend common targets like artists and performers against deepfakes and other inauthentic content."

The senators feel that clearly defining what is and is not acceptable in AI development is vital to protecting citizens, artists, and public figures from the harm that misuse of the technology could cause, particularly through deepfakes. Deepfake porn has been an issue for years now, and the tech has only gotten better. It is telling that Congress ignored the issue until now. The proposal comes only months after someone used voice-cloning tech to impersonate President Biden in robocalls telling people not to vote.

"Deepfakes are a real threat to our democracy and to Americans' safety and well-being," said Senator Heinrich. "I'm proud to support Senator Cantwell's COPIED Act that will provide the technical tools needed to help crack down on harmful and deceptive AI-generated content and better protect professional journalists and artists from having their content used by AI systems without their consent."

The usual suspects have spoken out in favor of the bill. The Nashville Songwriters Association International, SAG-AFTRA, the National Music Publishers' Association, the RIAA, and several broadcast and newspaper organizations have issued statements applauding the effort. Meanwhile, Google, Microsoft, OpenAI, and other AI purveyors have remained oddly silent.

US Senate introduces groundbreaking bill to set up legal framework for ethical AI development