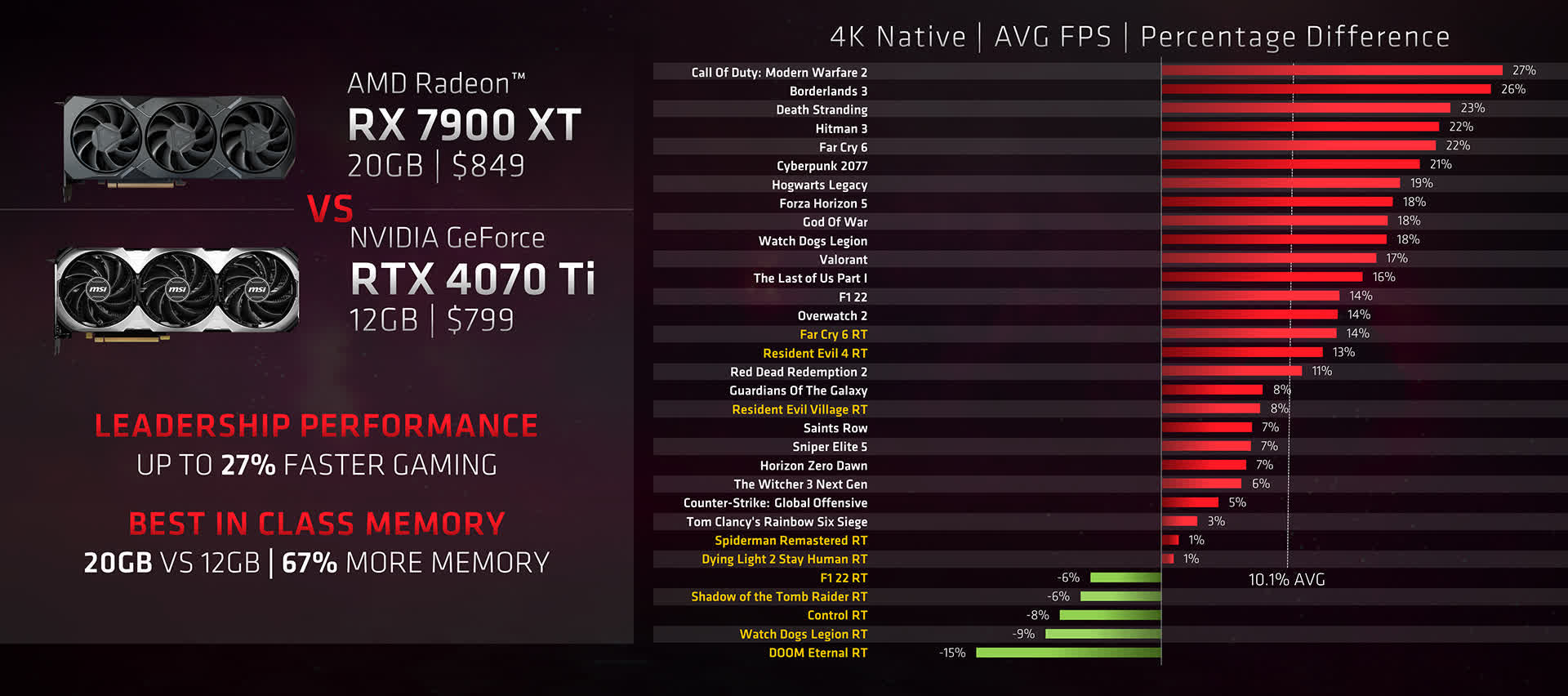

Even if you're just a casual follower of tech news, you can't have missed that the amount of video memory (VRAM) on a graphics card is a hot topic right now. We've seen indications that 8 GB may not be sufficient for the latest games, especially when played at high resolutions and with graphics quality set to high or maximum. AMD has also been emphasizing that its cards boast significantly more VRAM than Nvidia's, and the latter's newest models have been criticized for having only 12 GB.

So, are games really using that amount of memory, and if so, what exactly is it about the inner workings of these titles that requires so much? Let's lift the hood of modern 3D rendering and take a look at what's really happening within these graphics engines.

Games, graphics, and gigabytes

Before we delve into our analysis of graphics, games, and VRAM, it might be worth your time to quickly review the basics of how 3D rendering is typically done. We've covered this before in a series of articles, so if you're not sure about some of the terms, feel free to check them out.

We can summarize it all by simply stating that 3D graphics is 'just' a lot of math and data handling. In the case of data, it needs to be stored as close to the graphics processor as possible. All GPUs have small amounts of high-speed memory built into them (a.k.a. cache), but this is only large enough to store the information for the calculations taking place at any given moment.

There's far too much data to store all of it in the cache, so the rest is kept in the next best thing – video memory or VRAM. This is similar to the main system memory but is specialized for graphics workloads. Ten years ago, the most expensive desktop graphics cards housed 6 GB of VRAM on their circuit boards, although the majority of GPUs came with just 2 or 3 GB.

Today, 24 GB is the highest amount of VRAM available, and there are plenty of models with 12 or 16 GB, though 8 GB is far more common. Naturally, you'd expect an increase after a decade, but it's a substantial leap.

To understand why it's so much, we need to understand exactly what the RAM is being used for, and for that, let's take a look at how the best graphics were created when 3 GB of VRAM was the norm.

Back in 2013, PC gamers were treated to some truly outstanding graphics – Assassin's Creed IV: Black Flag, Battlefield 4 (below), BioShock Infinite, Metro: Last Light, and Tomb Raider all showed what could be achieved through technology, artistic design, and a lot of coding know-how.

The fastest graphics cards money could buy were AMD's Radeon HD 7970 GHz Edition and Nvidia's GeForce GTX Titan, with 3 GB and 6 GB of VRAM, and price tags of $499 and $999, respectively. A really good monitor for gaming might have had a resolution of 2560 x 1600, but the majority were 1080p – the bare minimum these days, but perfectly acceptable for that period.

To see how much VRAM these games were using, let's examine two of those titles: Black Flag and Last Light. Both games were developed for multiple platforms (Windows PC, Sony PlayStation 3, Microsoft Xbox 360), although the former appeared on the PS4 and Xbox One a little after the game launched, and Last Light was remastered in the following year for the PC and newer consoles.

In terms of graphics requirements, these two games are polar opposites; Black Flag is a fully open-world game with a vast playing area, while Metro: Last Light features mostly narrow spaces and is linear in theme. Even its outdoor sections are tightly constrained, although the visuals give the impression of a more open environment.

With every graphics setting at its maximum value and the resolution set to 4K (3840 x 2160), Assassin's Creed IV peaked at 6.6 GB in urban areas, whereas out on the open sea, the memory usage would drop to around 4.8 GB. Metro: Last Light consistently used under 4 GB and generally varied by only a small amount of memory, which is what you'd expect for a game that's effectively just a sequence of corridors to navigate.

Of course, few people were gaming in 4K in 2013, as such monitors were prohibitively expensive and aimed entirely at the professional market. Even the very best graphics cards were not capable of rendering at that resolution, and increasing the GPU count with CrossFire or SLI wouldn't have helped much.

Dropping the resolution down to 1080p brings it closer to what PC gamers would have been using in 2013, and it had a marked effect on the VRAM use – Black Flag dropped down to 3.3 GB, on average, and Last Light used just 2.4 GB. A single 32-bit 1920 x 1080 frame is just under 8 MB in size (4K is nearly 32 MB), so how does decreasing the frame resolution result in such a significant decrease in the amount of VRAM being used?
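As a quick sanity check on those frame sizes, the arithmetic can be sketched in a few lines of Python. The helper name `frame_bytes` is ours, purely for illustration, and the results are expressed in binary megabytes (MiB), which is why a 1080p frame lands just under 8.

```python
MIB = 1024 * 1024


def frame_bytes(width: int, height: int, bits_per_pixel: int = 32) -> int:
    """Size in bytes of one uncompressed frame at the given resolution."""
    return width * height * bits_per_pixel // 8


for name, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
    print(f"{name}: {frame_bytes(w, h) / MIB:.2f} MiB")
```

Either way, a single frame is tiny next to the gigabytes being reported, so the final frame buffer on its own cannot account for the difference.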

The answer lies deep in the hugely complex rendering process that both games undergo to give you the visuals you see on the monitor. The developers, with support from Nvidia, went all-out for the PC versions of Black Flag and Last Light, using the latest techniques for producing shadows, correct lighting, and fog/particle effects. Character models, objects, and environmental structures were all made from hundreds of thousands of polygons, all wrapped in a wealth of detailed textures.

In Black Flag, the use of screen-space reflections alone requires five separate buffers (including color, depth, ray marching output, and reflection blur result), and six are required for the volumetric shadows. Since the best target platforms, the PS4 and Xbox One, had only half of their total 8 GB of RAM available for the game and rendering, these buffers were at a much lower resolution than the final frame buffer, but they all add to the VRAM use.
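To show why a stack of intermediate buffers makes VRAM use so sensitive to resolution, here is a rough sketch. The list of render targets, their scale factors, and their pixel formats are hypothetical and are not taken from either game; the point is only that every resolution-dependent buffer shrinks together when the output resolution drops.

```python
# Hypothetical render targets, NOT taken from any real engine:
# (name, per-axis scale relative to the output resolution, bytes per pixel)
RENDER_TARGETS = [
    ("final color", 1.0, 4),
    ("depth", 1.0, 4),
    ("normals", 1.0, 8),
    ("reflection ray march", 0.5, 8),
    ("reflection blur", 0.5, 8),
    ("volumetric shadows", 0.25, 4),
]


def render_target_bytes(width: int, height: int, targets=RENDER_TARGETS) -> int:
    """Total bytes needed for every render target at a given output resolution."""
    total = 0
    for _name, scale, bytes_per_pixel in targets:
        total += int(width * scale) * int(height * scale) * bytes_per_pixel
    return total


ratio = render_target_bytes(3840, 2160) / render_target_bytes(1920, 1080)
print(f"4K needs {ratio:.1f}x the render target memory of 1080p")
```

Textures and meshes are not tied to the output resolution in the same way, which would explain why the overall VRAM figures fall by much less than the pixel count does.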

The developers had similar memory restrictions for the PC versions of the games they worked on, but a common design choice back then was to allow graphics settings to exceed the capabilities of graphics cards available at launch. The idea was that users would return to the game when they had upgraded to a newer model, to see the benefits that progress in GPU technology had provided them.

This could be clearly seen when we looked at the performance of Metro: Last Light ten years ago – using Very High quality settings and a resolution of 2560 x 1600 (about 11% more pixels than 1440p), the $999 GeForce GTX Titan averaged a mere 41 fps. Fast forward one year and the GeForce GTX 980 was 15% faster in the same game, but cost just $549.

So what about now – exactly how much VRAM are games using today?

The devil is in the detail

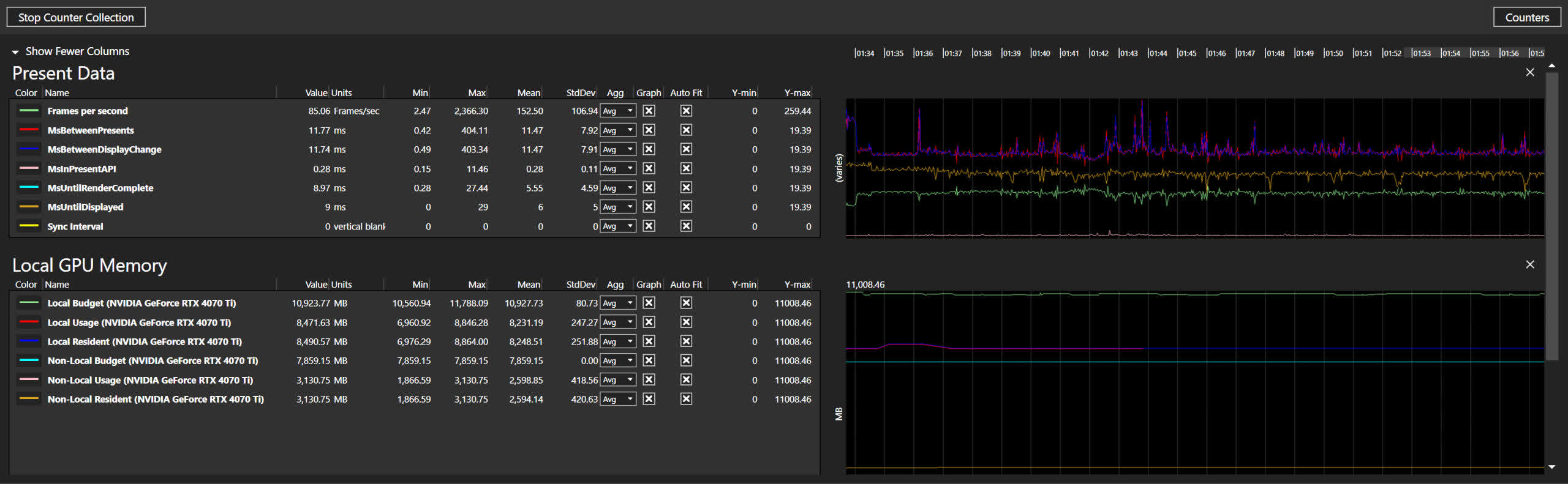

To accurately measure the amount of graphics card RAM being used in a game, we utilized Microsoft's PIX, a debugging tool for developers using DirectX that can collect data and present it for analysis. One can capture a single frame, break it down into every single command issued to the GPU, and see how long each takes to process and what resources are used, but we were just interested in capturing RAM metrics.

Most tools that record VRAM usage actually just report the amount of local GPU memory allocated by the game and consequently the GPU's drivers. PIX, on the other hand, records three – Local Budget, Local Usage, and Local Resident. The first one is how much video memory has been made available for a Direct3D application, which constantly changes as the operating system and drivers juggle it about.

Local Resident is how much VRAM is taken up by so-called resident objects, but the value we're most interested in is Local Usage. This is a record of how much video memory the game is trying to use. Games have to stay within the Local Budget limit, otherwise all kinds of problems will occur, the most common being that the program halts momentarily until there's enough budget again.

In Direct3D 11 and earlier, memory management was handled by the API itself, but with version 12, everything memory related has to be done entirely by the developers. The amount of physical memory needs to be detected first, then the budget set accordingly, but the significant challenge is ensuring that the engine never ends up in a situation where it's exceeded.

And in modern games, with vast open worlds, this requires a lot of repeated analysis and testing.
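To illustrate the kind of bookkeeping this implies, here is a minimal sketch of a budget tracker that evicts the least recently used resources before admitting a new one. It is a toy model in Python, not Direct3D 12 code: the `VramBudget` class, its `touch` method, and the eviction policy are all assumptions made for illustration, and real engines use far more elaborate schemes.

```python
from collections import OrderedDict


class VramBudget:
    """Toy residency tracker that evicts least-recently-used resources."""

    def __init__(self, budget_bytes: int):
        self.budget = budget_bytes
        self.usage = 0
        self.resident = OrderedDict()  # name -> size, least recently used first

    def touch(self, name: str, size: int) -> list:
        """Mark a resource as needed; return the names evicted to make room."""
        if size > self.budget:
            raise MemoryError(f"{name} can never fit in the budget")
        if name in self.resident:
            self.resident.move_to_end(name)  # now the most recently used
            return []
        evicted = []
        # Free the oldest resources until the new one fits inside the budget.
        while self.usage + size > self.budget:
            old_name, old_size = self.resident.popitem(last=False)
            self.usage -= old_size
            evicted.append(old_name)
        self.resident[name] = size
        self.usage += size
        return evicted


budget = VramBudget(budget_bytes=100)
budget.touch("terrain", 60)
budget.touch("character", 30)
print("evicted:", budget.touch("city block", 40))
print("usage:", budget.usage, "of", budget.budget)
```

The important property is that usage is checked before a resource is admitted, so the tracker never reports more memory in use than the budget allows.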

For all the titles we examined, every graphics quality and details variable was set to its maximum values (ray tracing was not enabled), and the frame resolution was set to 4K, with no upscaling activated. This was to ensure that we saw the highest memory loads possible, under conditions that anyone could repeat.

A total of three runs were compiled for each game, with every run being 10 minutes long; the game was also fully restarted between runs to ensure that the memory was properly flushed. Finally, the test system comprised an Intel Core i7-9700K, 16 GB of DDR4-3000, and an Nvidia GeForce RTX 4070 Ti, and all games were loaded from a 1 TB NVMe SSD on a PCIe 3.0 x4 bus.

The results below are the arithmetic means of the three runs, using the average and maximum local memory usage figures as recorded by PIX.

| Game | Average VRAM use (GB) | Peak VRAM use (GB) |
| --- | --- | --- |
| Far Cry 6 | 5.7 | 6.2 |
| Dying Light 2 | 5.9 | 6.2 |
| Assassin's Creed Valhalla | 7.4 | 7.5 |
| Marvel's Spider-Man Remastered | 8.0 | 8.2 |
| Far Cry 6 (HD textures enabled) | 8.0 | 8.4 |
| Doom Eternal | 7.5 | 8.2 |
| Cyberpunk 2077 | 7.7 | 8.9 |
| Resident Evil 4 | 8.9 | 9.1 |
| Hogwarts Legacy | 9.7 | 10.1 |
| The Last of Us Part 1 | 11.8 | 12.4 |

Doom Eternal, Resident Evil 4, and The Last of Us are similar to Metro: Last Light, in that the playing areas are tightly constrained, whereas the others are open-world. Depending on the size of the levels, the former group can potentially store all of the required data in VRAM, while the open-world games have to stream data because the total world is just too big to store locally.

That said, data streaming is quite common in today's games, regardless of genre, and Resident Evil 4 uses it to good effect. Despite that game's menu screen warning us that maximum quality settings would result in us running out of VRAM, no such problems were encountered in actual gameplay. Memory usage was pretty consistent across the majority of the games tested, especially Resident Evil 4. Hogwarts Legacy was the exception, varying so much at times that we thought PIX was broken.

Taking the results from Assassin's Creed Valhalla and comparing them with Black Flag, the obvious question to ask is what is contributing to the roughly 1.5 times increase in VRAM use? The answer to this question, and the one for this entire article, is simple – detail.

For its time, Black Flag was the cutting edge for graphical fidelity, in open-world games. But while the latest saga in the never-ending AC series still uses lots of the same rendering techniques, the amount of additional detail in Valhalla, and in the majority of today's games, is vastly higher.

Character models, objects, and the environment as a whole are constructed from considerably more polygons, increasing the accuracy of smaller parts. Textures contain a lot more detail too – for example, cloth in Valhalla displays the weave of the material, and the only way that this is possible is by using higher-resolution textures.

This is especially noticeable in the textures used for the landscape, allowing for the ground and walls of buildings to look richer and more life-like. To enhance the realism of foliage, textures that have transparent sections to them (such as grass, leaves, ferns, etc.) are much larger in size, which aids in the blending with objects behind them.
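To put some numbers on what higher-resolution textures cost, here is a small estimate of texture memory. It is a sketch under simplifying assumptions: the function name `texture_bytes` is ours, 4 bytes per texel stands for uncompressed 8-bit RGBA, and 1 byte per texel approximates a block-compressed format such as BC7, ignoring the padding that block compression adds to the smallest mip levels.

```python
MIB = 1024 * 1024


def texture_bytes(width: int, height: int, bytes_per_texel: float,
                  mipmaps: bool = True) -> int:
    """Approximate size of a 2D texture, optionally with a full mip chain."""
    total = 0.0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, down to 1 x 1.
        w, h = max(1, w // 2), max(1, h // 2)
    return int(total)


for size in (2048, 4096):
    raw = texture_bytes(size, size, 4) / MIB
    packed = texture_bytes(size, size, 1) / MIB
    print(f"{size} x {size}: {raw:.1f} MiB uncompressed, "
          f"{packed:.1f} MiB at 1 byte per texel")
```

Doubling a texture's width and height quadruples its footprint, and the mip chain adds roughly another third on top, so a material built from several such textures grows very quickly.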

And it's not just about textures and meshes – Valhalla looks better than Black Flag because the lighting routines are using higher-resolution buffers, and more of them, to store intermediate results. All of which simply adds to the memory load.

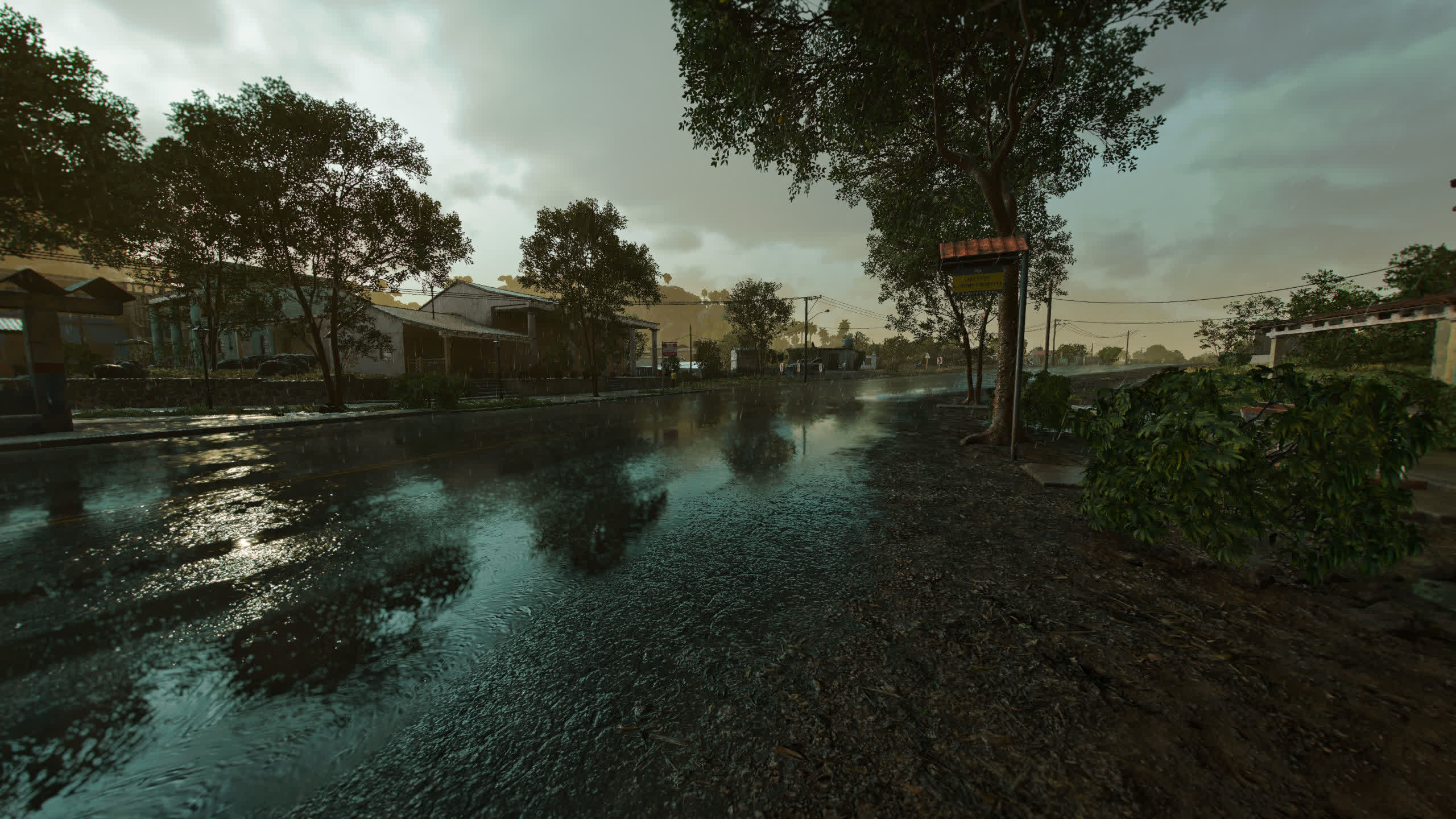

The Last of Us is more recent than Valhalla and is another example of how greater detail is achieved through the use of more polygons, bigger textures, better algorithms, and so on. However, the amount of VRAM taken up by all of this is on another level entirely: 60% more than Valhalla, on average.

The above screenshot highlights the level of detail in its textures. In particular, pay close attention to the cracks in the asphalt, weathering, and plant growth – the overall material has an almost photographic level of realism.

Other aspects are less impressive. The clothing on the characters looks perfectly okay, especially when the characters aren't up close to the camera, but it's nowhere near as outstanding as the environment and buildings.

And then there's Hogwarts Legacy. It's clearly not a game rooted in reality, so one can't expect the graphics to have quite the same fidelity as The Last of Us, but what the title offers in terms of rich detail in the world you play in, it greatly lacks in terms of texture resolution.

The VRAM load for this particular screenshot hovered a little under 9.5 GB and during our testing, there were numerous times when the Local Usage figures exceeded the Local Budget, resulting in lots of stuttering.

But as high as that amount might seem, especially for such basic-looking graphics, it can be easily made far, far worse.

Better lighting demands even more memory

Hogwarts Legacy, like many of the games tested for this article, provides the option to use ray tracing to determine the end result for reflections, shadows, and ambient occlusion (a basic part of global illumination, that ignores specific light sources).

In contrast, the latest version of Cyberpunk 2077 now offers the option to use path tracing and a special algorithm called spatiotemporal reservoir resampling for the majority of the lighting within the game.

All of this rendering trickery comes at a cost, and not just with the amount of processing the GPU has to do. Ray tracing generates huge amounts of additional data, in the form of bounding volume hierarchies, as well as even more buffers, that are required to work out exactly what the rays are interacting with.
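For a feel of how quickly a bounding volume hierarchy can grow, here is a back-of-the-envelope estimate. It assumes a plain binary tree with one triangle per leaf, which gives 2N - 1 nodes for N triangles, and a hypothetical 32-byte node (a bounding box of six 32-bit floats plus two 32-bit indices). Actual GPU drivers use their own, typically wider and compressed, layouts, so treat this as an illustration rather than a measurement.

```python
def bvh_bytes(triangle_count: int, node_bytes: int = 32) -> int:
    """Estimated size of a binary BVH with one triangle per leaf.

    A full binary tree with N leaves has N - 1 interior nodes, so 2N - 1
    nodes in total. The 32-byte default node size is an assumption.
    """
    if triangle_count < 1:
        return 0
    return (2 * triangle_count - 1) * node_bytes


for triangles in (1_000_000, 10_000_000):
    print(f"{triangles:,} triangles: {bvh_bytes(triangles) / 1024**2:.0f} MiB")
```

This covers only the hierarchy itself; the extra buffers needed to work out what the rays hit come on top of it.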

Using 4K and the same graphics settings as before, but this time with every ray tracing option enabled or set to its maximum value, the VRAM loads change considerably.

| Game (RT enabled) | Average VRAM use (GB) | Peak VRAM use (GB) |
| --- | --- | --- |
| Dying Light 2 | 7.4 | 8.2 |
| Doom Eternal | 8.3 | 9.5 |
| Marvel's Spider-Man Remastered | 9.2 | 9.6 |
| Resident Evil 4 | 9.0 | 9.7 |
| Far Cry 6 (HD textures) | 9.9 | 10.7 |
| Cyberpunk 2077 (Overdrive) | 12.0 | 13.6 |
| Hogwarts Legacy | 12.1 | 13.9 |
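Putting the two tables side by side makes the cost of ray tracing easier to see. The short script below uses only the average figures reported in this article; the helper name `rt_overhead` is ours.

```python
# Average VRAM use in GB at 4K, copied from the two tables:
# (ray tracing off, ray tracing on)
AVERAGE_GB = {
    "Dying Light 2": (5.9, 7.4),
    "Doom Eternal": (7.5, 8.3),
    "Marvel's Spider-Man Remastered": (8.0, 9.2),
    "Resident Evil 4": (8.9, 9.0),
    "Far Cry 6 (HD textures)": (8.0, 9.9),
    "Cyberpunk 2077": (7.7, 12.0),
    "Hogwarts Legacy": (9.7, 12.1),
}


def rt_overhead(raster_gb: float, rt_gb: float) -> tuple:
    """Return the extra VRAM in GB and the increase as a whole percentage."""
    extra = rt_gb - raster_gb
    return round(extra, 1), round(100 * extra / raster_gb)


for game, (raster, rt) in AVERAGE_GB.items():
    extra_gb, percent = rt_overhead(raster, rt)
    print(f"{game}: +{extra_gb} GB ({percent}% more)")
```

The spread is wide: Resident Evil 4 barely moves, while Cyberpunk 2077 in its Overdrive mode needs over 4 GB more on average.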

Cyberpunk 2077, Spider-Man, and Dying Light 2 are really the only games that show any appreciable benefit to using ray tracing, although how much one is willing to sacrifice performance to achieve this is another topic entirely. In the other titles, the impact of ray tracing is far more muted – Far Cry 6 sometimes looks very good in specific scenarios, with it enabled, but Resident Evil 4 and Hogwarts Legacy show no appreciable benefit from its use.

In RE4, it doesn't really hit the frame rate too much, but the impact in Cyberpunk 2077 and Hogwarts Legacy was extreme. In both titles, the Local Usage consistently outran the Local Budget, resulting in the GPU's drivers constantly trying to make space for the data that must stay in the VRAM. These games stream assets all the time in normal gameplay, so in theory, it shouldn't be a problem.

But when the GPU carries out a shader routine that requires a texture or render target sample, the first place it will look for that data is the first level of cache. If it's not there, then the processor will work through the remaining levels, and ultimately the VRAM, to find it.

When all these searches result in a miss, then the data has to be copied across from the system memory into the graphics card, and then into the cache. All of this takes time, so the GPU will just stall the shader instruction until it has the required sample. Either the game will just pause momentarily until the routine is complete, or abandon it altogether, leading to all kinds of glitches.
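The lookup order described above can be modeled with a few dictionaries. This is a deliberately simplified toy: the two cache levels, the function name `fetch`, and the absence of any capacity limits or timing are all assumptions, and real GPUs may have more levels and far more complex behavior.

```python
def fetch(address, l1, l2, vram, system_ram):
    """Return (data, level that served the request).

    Levels are searched from fastest to slowest. On a miss, the data is
    copied into the faster levels so the next request is served sooner.
    """
    for level_name, level in (("L1", l1), ("L2", l2), ("VRAM", vram)):
        if address in level:
            data = level[address]
            break
    else:
        # Missed everywhere on the card: the slowest path, via system memory.
        level_name = "system RAM"
        data = system_ram[address]
        vram[address] = data
    l2[address] = data
    l1[address] = data
    return data, level_name


l1, l2, vram = {}, {}, {}
system_ram = {"rock_texture": b"texels"}

print(fetch("rock_texture", l1, l2, vram, system_ram)[1])
print(fetch("rock_texture", l1, l2, vram, system_ram)[1])
```

The first request has to go all the way out to system memory, while the repeat is served from the first cache level. When VRAM is over budget, resident data keeps getting pushed out, so the slow path is taken again and again.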

In the case of Hogwarts Legacy, the rendering engine would simply pause, and not just for a few milliseconds. Applying ray tracing at 4K on maximum settings, with a 12 GB graphics card, essentially made it totally unplayable. Cyberpunk 2077 managed this problem far better, and very few pauses took place, though with the game running at sub-10 fps all the time, it was somewhat of a Pyrrhic victory for the engine.

So our testing has shown that modern games are indeed using a lot of VRAM (the ones we examined are, at least), and depending on the settings used, it's possible to force the game into a situation where it tries to use more local memory than it has available.

Settings are the savior

At this point, one might rightly argue that all these figures are somewhat one-sided – after all, how many people will have PCs that can play games at 4K on maximum settings or even want to? Decreasing the resolution to 1080p does reduce the amount of VRAM being used, but depending on the game, it might not always make much of a difference.

Taking the worst VRAM offender, The Last of Us, repeating the testing at 1080p saw an average memory usage of 9.1 GB and a peak of 9.4 GB – that's around 3 GB lower than at 4K. We also found that the same figures could be achieved by using the higher resolution but setting all of the texture quality variables to Low.

Combining 1080p with Low textures reduced the VRAM load even further, with the average falling to 6.4 GB and the peak to 6.6 GB. Identical results were obtained using 4K and the Low graphics preset (shown above), whereas the Medium preset used 7.3 GB on average (7.7 GB max).

Doing the same 4K + Low preset combination in Cyberpunk 2077 resulted in 6.3 GB being used, and the other titles were easy to drop well below 8 GB, using combinations of low frame and texture resolutions, while still retaining high levels of lighting and shadows.

Upscaling can also reduce the VRAM load but results depend heavily on the game. For example, using 4K Ultra preset + DLSS Performance in The Last of Us dropped the average usage from 11.8 GB down to 10.0 GB, whereas running Cyberpunk 2077, with the same upscaling option, lowered the peaks from 8.9 GB to 7.7 GB. In that game, using FSR 2.1 Balanced gave us a maximum VRAM usage of 8.2 GB, though the Ultra Performance setting was barely lower at 8.1 GB.
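To see what an upscaler changes, it helps to work out the internal resolution the game actually renders at. The sketch below uses the per-axis scale factors AMD publishes for the FSR 2 quality modes; DLSS Performance likewise renders at half the output resolution on each axis, but its other modes use slightly different factors. The function name `render_resolution` is ours.

```python
# Per-axis scale factors for FSR 2 quality modes (output size / render size).
UPSCALE_FACTORS = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}


def render_resolution(out_width: int, out_height: int, mode: str) -> tuple:
    """Internal render resolution for a given output resolution and mode."""
    factor = UPSCALE_FACTORS[mode]
    return round(out_width / factor), round(out_height / factor)


for mode in UPSCALE_FACTORS:
    w, h = render_resolution(3840, 2160, mode)
    print(f"4K {mode}: renders at {w} x {h}")
```

A 4K output in Performance mode is therefore rendered at 1080p internally. One plausible reason the VRAM savings are smaller than simply running at 1080p is that only the buffers rendered at the internal resolution shrink, while textures and anything produced at the output resolution stay the same size.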

With ray tracing, getting the memory load down was just as easy. In Cyberpunk 2077, using 1080p with DLSS Performance enabled and maximum RT Overdrive settings, the mean VRAM usage was 7 GB, with the peak just 0.5 GB higher. Admittedly, it didn't look very nice like this, with lots of ghosting in the frame when in motion. Increasing the resolution to 1440p helped remove most of the artifacts, but the VRAM load rose to 7.5 GB on average, with a maximum of 8 GB being recorded.

Hogwarts Legacy, though, was still heavy on the RAM at 1080p + DLSS Performance when using maximum quality settings and ray tracing – the average usage was 8.5 GB, and the peak was just a fraction under 10 GB.

One of the most recent games tested also happened to be the easiest to fit into a required memory budget – Resident Evil 4. Using FSR upscaling and knocking a couple of the quality settings down one notch from their maximum values was all it took to produce a 4K output and keep the VRAM usage well under 8 GB.

We keep mentioning 8 GB because GPU manufacturers have generally stuck with this amount of local memory, on the majority of their products, for a number of years. The first models to be furnished with that amount of VRAM were AMD's Radeon R9 390 from 2015 and Nvidia's GeForce GTX 1080, released in 2016 (though the 2015 GeForce GTX Titan X had 12 GB). Nearly eight years later, it's still the standard amount for low-end and mid-range models.

At this point, it's important that we draw your attention to the fact that games will try to budget a significant portion of the VRAM present (we saw figures ranging from 10 to 12 GB), so all of the memory usage figures you've just seen would be different if the budget was lower or higher.

Unfortunately, this isn't something we can alter ourselves, other than using different graphics cards, but a well-coded game should use the available video memory within the physical limits, and never exceed them. However, as we have seen in some cases, this can occur, butchering the performance when it takes place.

It's easy enough to get everything to fit in the memory available, as long as you're willing to play around with the settings. You might not like how the game looks when scaled down in that manner, of course. However, we still haven't fully answered the primary purpose of this article – just why are modern games seemingly asking more of a PC's VRAM than ever before?

The consoles conflict

All of the games we've looked at in this article were developed for multiple platforms and understandably so. With millions of people around the globe owning the latest or last generation of consoles, making an expensive blockbuster of a game for just one platform typically isn't financially viable.

Two years ago, Microsoft and Sony released their 'next gen' gaming consoles – the Xbox Series X and S, and the PlayStation 5. Compared to their predecessors, they look utterly modern under the hood, as both have an 8-core, 16-thread CPU and a graphics chip that wouldn't look out of place in a decent gaming PC. The most important update, with regard to the topic being examined here, was the increase in RAM.

Unlike desktop PCs, consoles use a unified memory structure, where both the CPU/system and GPU are hard-wired to the same collection of RAM chips. In the previous round of consoles, both Microsoft and Sony equipped their units with 8 GB of memory, with the exception of the Xbox One X, which sported 12 GB. The PS5 and Xbox Series X (shown above) both use 16 GB of GDDR6, although it's not configured the same in the two machines.

Games can't access all of that RAM, as some of it is reserved for the operating system and other background tasks. Depending on the platform, and what settings the developer has gone with, around 12 to 14 GB is available for software to use. It might not seem like a huge amount but that's more than double what was available with the likes of the PS4 and Xbox One.

But where PCs keep the meshes, materials, and buffers in VRAM, and the game engine (along with a copy of all the assets being used) in the system memory, consoles have to put everything into the same body of RAM. That means textures, intermediate buffers, render targets, and executing code all take their share of that limited space. In the last decade, when there was just 5 GB or so to play with, developers had to be extremely careful at optimizing data usage to get the best out of those machines.

This is also why it seems like games have just suddenly leaped forward in memory loads. For years, they were created with very tight RAM restrictions but as publishers have started to move away from releasing titles for the older machines, that limit is now two to three times higher.

Previously, there was no need to create highly detailed assets, using millions of polygons and ultra-high-resolution textures. Downscaling such objects to the point where they could be used on a PS4, for example, would defeat the purpose of making them that good in the first place. So everything was created at a scale that was absolutely fine in native form for PCs at that time.

This process is still done now, but the PS5 and Xbox Series X also come with modern storage technologies that greatly speed up the transfer of data from the internal SSD to the shared RAM. It's so fast that data streaming is now a fundamental system in next-gen console titles and, along with the vastly more capable CPU and GPU, the performance bar that limits how detailed assets can be is now far higher than it has ever been.

Thus, if a next-gen console game is using, say, 10 GB of unified RAM for meshes, materials, buffers, render targets, and so on, we can expect the PC version to be at least the same. And once you add in the expected higher detail settings for such ports, then that amount is going to be much larger.

A bigger future awaits us

So, as more console conversions and multi-platform games hit the market, does this mean owners of 8 GB graphics cards are soon going to be in trouble? The simple answer is that nobody outside of the industry knows with absolute certainty. One can make some educated guesses, but the real answer to this question will likely be multifaceted, complex, and dependent on factors such as how things transpire in the graphics card market and how game developers manage PC ports.

With the current generation of consoles still in the flush of their youth, but getting ever closer to the middle years of their lifespan, we can be certain that more games will come out that will have serious hardware requirements for the Windows versions. Some of this will be down to the fact that, over time, developers and platform engineers learn to squeeze more performance from the fixed components, utilizing ever more console-specific routines to achieve this.

Since PCs are unable to be handled in exactly the same way, more generalized approaches will be used and there will be a greater onus on the developers to properly optimize their games for the myriad of hardware combinations. This can be alleviated to a degree by using a non-proprietary engine, such as Unreal Engine 5, and letting those developers manage the bulk of coding challenges, but even then, there's still a lot of work left for the game creators to get things just right.

For example, in version 1.03 of The Last of Us, we saw average VRAM figures of 12.1 GB, with a peak of 14.9 GB at 4K Ultra settings. With the v1.04 patch, those values dropped to 11.8 and 12.4 GB, respectively. This was achieved by improving how the game streams textures and while there's possibly more optimization that can be done, the improvement highlights how much difference a little fine-tuning on a PC port can make.

Another reason why hardware requirements and VRAM loads are probably going to increase beyond 8 GB is that gaming is responsible for selling an awful lot of desktop PCs and heavily supports a lot of the discrete component/peripheral industry. AMD, Intel, Nvidia, and others all spend prolifically on game sponsorship, though sometimes it involves nothing more than an exchange of funds for the use of a few logos.

In the world of graphics cards, though, such deals are typically centered around promoting the use of a specific feature or new technology. The latest GPU generations from AMD, Intel, and Nvidia sport 12 GB or more of VRAM, but not universally so, and it will be years before the PC gaming userbase is saturated with such hardware.

The point where console ports start consistently requiring 8 GB as a minimum requirement, on the lowest settings, is probably a little way off, but it's not going to be a decade in the future. GPU vendors would prefer PC gamers to spend more money and purchase higher-tier models, where the profit margins are much larger, and will almost certainly use this aspect of games as a marketing tool.

There are other, somewhat less nefarious, factors that will also play a part – development teams inexperienced in doing PC ports or using Unreal Engine, for example, and overly tight deadlines set by publishers desperate to hit sales targets before a financial quarter all result in big budget games having a multitude of issues, with VRAM usage being the least of them.

We've seen that GPU memory requirements are indeed on the rise, and it's only going to continue, but we've also seen that it's possible to make current games work fine with whatever amount one has, just by spending time in the settings menu. For now, of course.

Powerful graphics cards with 8 GB are probably going to feel the pinch, where the VRAM amount limits what the GPU can ultimately cope with, in the next couple of seasons of game releases. There are exceptions today, but we're a little way off from it becoming the norm.

And who knows – in another decade from now, we may well look back at today's games and exclaim 'they only used 8 GB?!'