We're certain many of you already have a solid understanding of how we perform CPU benchmarks, but as new people develop interest in PC tech and start to read our content, or content similar to ours, there are a few basic test methods that need explaining. Although we do our best to explain this with some consistency as we review new hardware, it's difficult to keep at it every time without getting derailed from the subject matter.

Recently we've seen a growing number of comments and complaints about how we test hardware in our reviews, namely CPU reviews that look at gaming performance. In these reviews we typically focus on low resolution testing (1080p), as this helps alleviate potential GPU bottlenecks, allowing us to look more closely at actual CPU performance.

That being the goal, we also want to use the most powerful gaming GPU possible, and today that's the GeForce RTX 4090. This combination of a powerful GPU and a low resolution means that in most instances we will be limited by the CPU, and are therefore able to compare gaming performance between CPUs without some other system limitation skewing the results.
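The reasoning above can be sketched with a deliberately simplified model, in which the delivered frame rate is capped by whichever of the CPU or GPU is slower. This is only an approximation (real games rarely behave as a strict minimum), and the function names and every fps figure below are hypothetical, chosen purely for illustration.

```python
def delivered_fps(cpu_limit: float, gpu_limit: float) -> float:
    """Simplified bottleneck model: the slower component sets the frame rate."""
    return min(cpu_limit, gpu_limit)


def margin_pct(faster: float, slower: float) -> float:
    """Percentage by which `faster` exceeds `slower`."""
    return (faster / slower - 1.0) * 100.0


# Hypothetical CPU-side ceilings for two processors in one game.
cpu_a_limit, cpu_b_limit = 150.0, 100.0

# Hypothetical GPU-side ceilings: a mid-range card and a flagship at 1080p.
for gpu_name, gpu_limit in [("mid-range GPU", 90.0), ("flagship GPU", 300.0)]:
    fps_a = delivered_fps(cpu_a_limit, gpu_limit)
    fps_b = delivered_fps(cpu_b_limit, gpu_limit)
    print(f"{gpu_name}: {fps_a:.0f} vs {fps_b:.0f} fps, "
          f"margin {margin_pct(fps_a, fps_b):.0f}%")
```

In this made-up case the mid-range card makes both CPUs look identical, and only the flagship card exposes the 50% gap built into the example.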

The pushback to this approach is the claim that it's "unrealistic": no one is going to use an RTX 4090 at 1080p, and certainly no one is going to do so with a mid-range to low-end CPU. While that's probably true, it misses the point entirely.

At this stage we've basically heard every argument there is for why low resolution testing with a high-end GPU is misleading or pointless, and we're here to tell you in no uncertain terms that they're all wrong. But of course, we're not simply expecting you to take our word for it. Instead we've spent some time benchmarking in an effort to gather enough data to make it clear why we, and most other reviewers, test the way we do.

First we went back and tested some older CPUs with a range of graphics cards of varying performance. Part of the reason we want to know how powerful a CPU is for gaming is not just to see what it offers today, but how it might stack up a few years from now.

The Intel Core i7-8700K was released back in October 2017 for $360, and at the time it was the fastest mainstream desktop CPU for gaming. Six months later, AMD released the Ryzen 5 2600X for $230, and it was one of the better value gaming CPUs on the market then. Both packed six cores, but occupied very different price points with the Core i7 costing ~60% more.

At the time, the argument some liked to make was that the $700 GeForce GTX 1080 Ti wasn't a good GPU to test the ~$200 Ryzen 5 processor because it's too expensive, claiming that the $400 GTX 1070 would be a far more realistic and suitable pairing. So let's take a popular and demanding 2017 title and run a few tests... Assassin's Creed Origins instantly came to mind.

Benchmarks Using Older CPUs

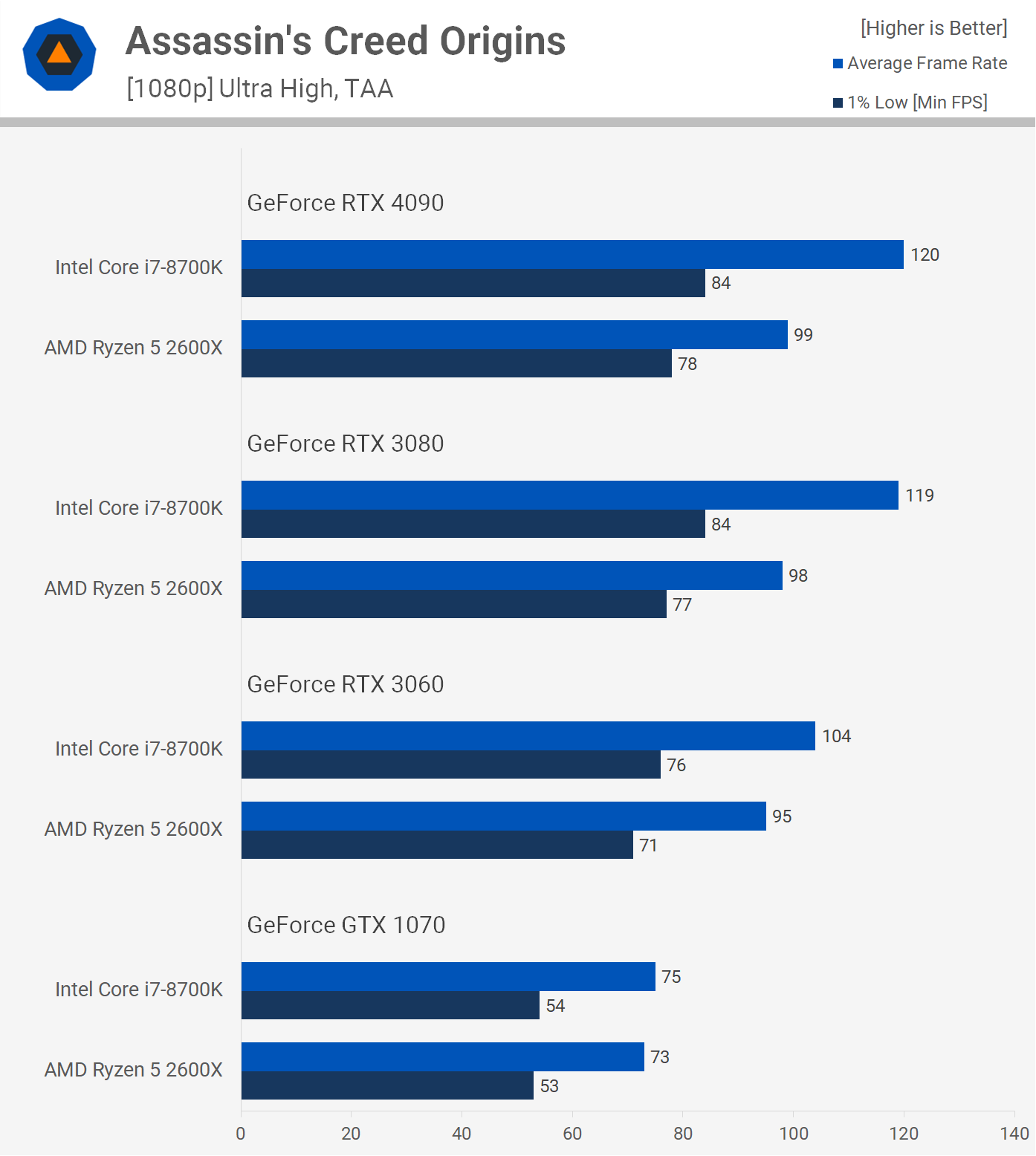

Assassin's Creed Origins (1080p Ultra High)

If we were to compare the 8700K and 2600X at 1080p with the Ultra High preset using the GTX 1070, we'd find that both CPUs are capable of around 70 fps and that's certainly very playable performance for such a title. This would have led us to believe that the 8700K was just 3% faster than the 2600X, less than half the margin we found when using the GeForce GTX 1080 Ti back in 2017.

Our 1080 Ti data was similar to what we see today with the RTX 3060, which five years later can basically be considered entry-level performance, ignoring terrible products such as the RTX 3050 and Radeon RX 6500 XT.

Then if you were to use the more modern GeForce RTX 3080, the margin balloons out to 21% in favor of the Core i7 processor, and this appears to be the limit for both CPUs as the margin doesn't change with a significantly more powerful GPU like the RTX 4090.

That means in this title the Core i7-8700K is around 20% faster than the Ryzen 5 2600X, and those who held onto their CPU for five years and upgraded to an RTX 3080 as early as two years ago would have enjoyed considerably more performance had they purchased the Core i7.

Of course, we're not saying the 8700K was the better purchase. It was a more expensive CPU, and the Ryzen 5 was the better value offering (with a far superior upgrade path to the 5800X3D). We're not making this discussion about the 8700K and 2600X; both parts had their place depending on your preferences. But they serve as perfect examples: they demonstrate why using the GTX 1080 Ti, which provided margins similar to those shown with the RTX 3060, gave us a better idea of how they'd stack up in this title with future GPUs.

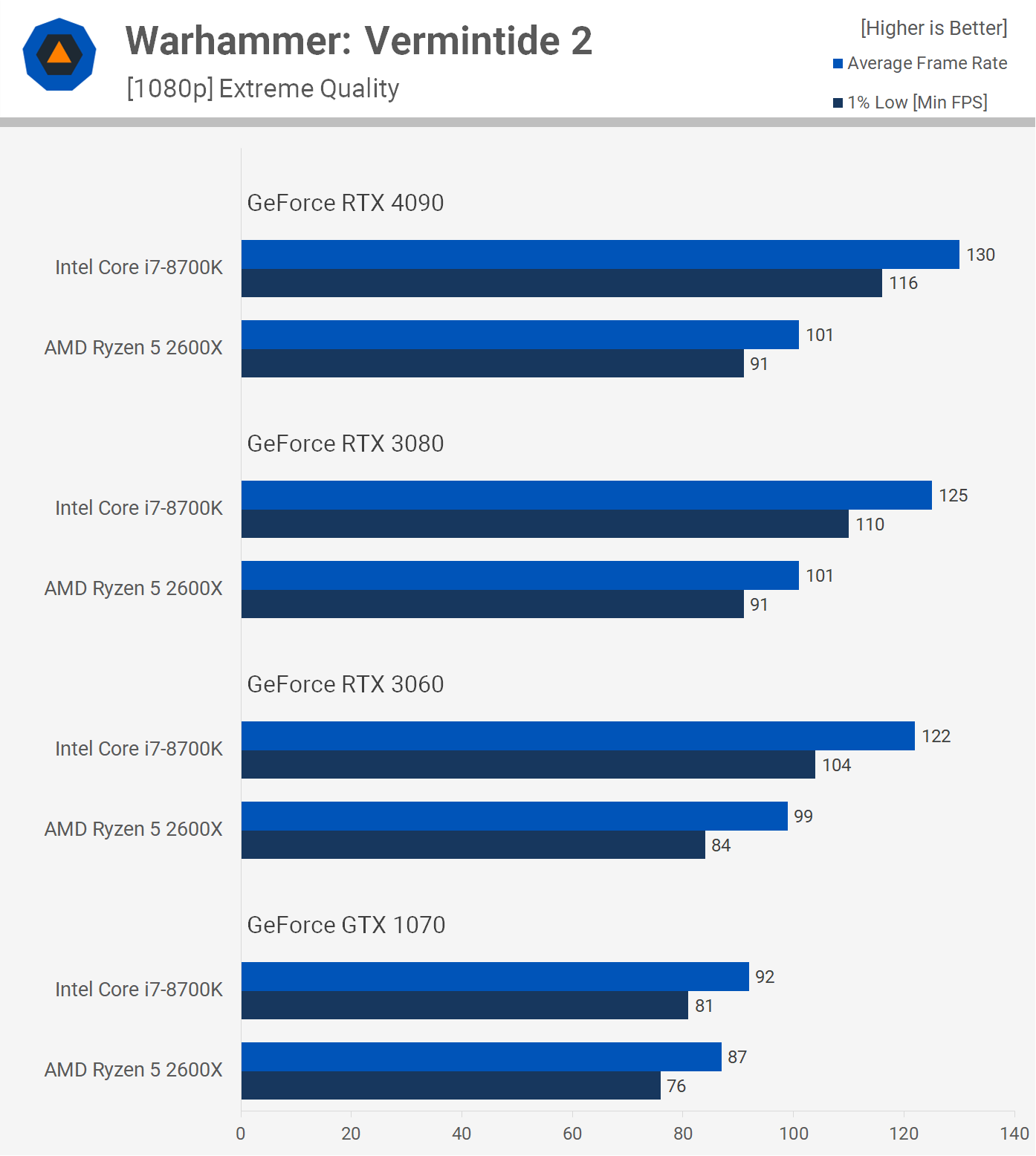

Warhammer: Vermintide 2 (1080p Extreme)

Another game we tested with back then was Warhammer: Vermintide 2, and it's a game we still often test with today. Using the GeForce GTX 1070 we saw that the Core i7-8700K was on average 6% faster than the Ryzen 5 2600X, a nice little performance boost. But with the Intel CPU costing ~60% more, that would have looked like a laughably poor result had we tested with the GTX 1070 at the time.

Using the medium quality preset we found back then that the Core i7-8700K was 17% faster on average, which is not miles off what we're seeing here with the RTX 3060, which provided a 23% margin between the two CPUs. The margin was much the same with the GeForce RTX 3080, and grew to 29% with the RTX 4090.

It's clear, then, that the GeForce GTX 1080 Ti tested at 1080p gave us a much better indication (over five years ago) of how things might look in the future than a more mid-range product like the GTX 1070 would have.

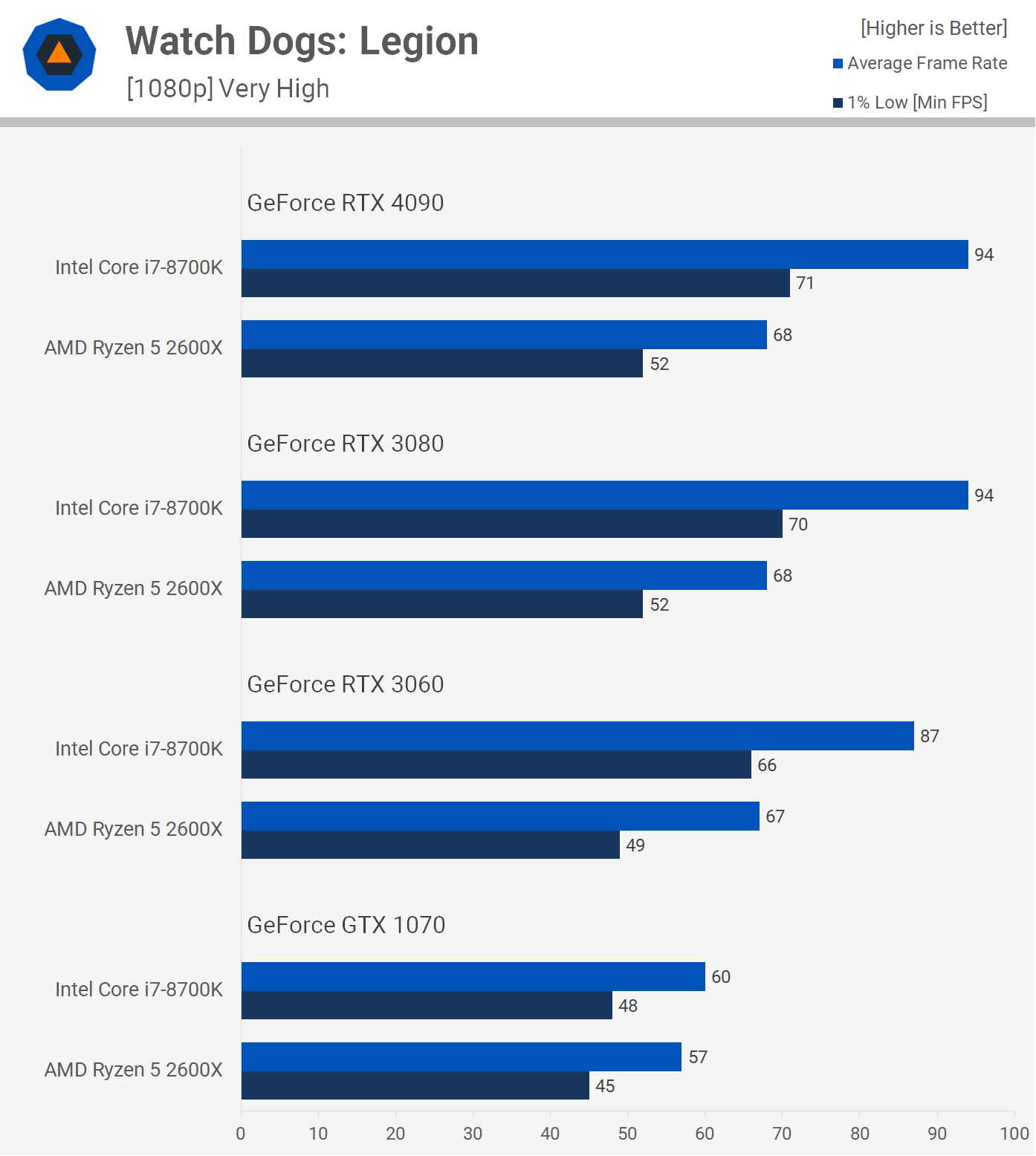

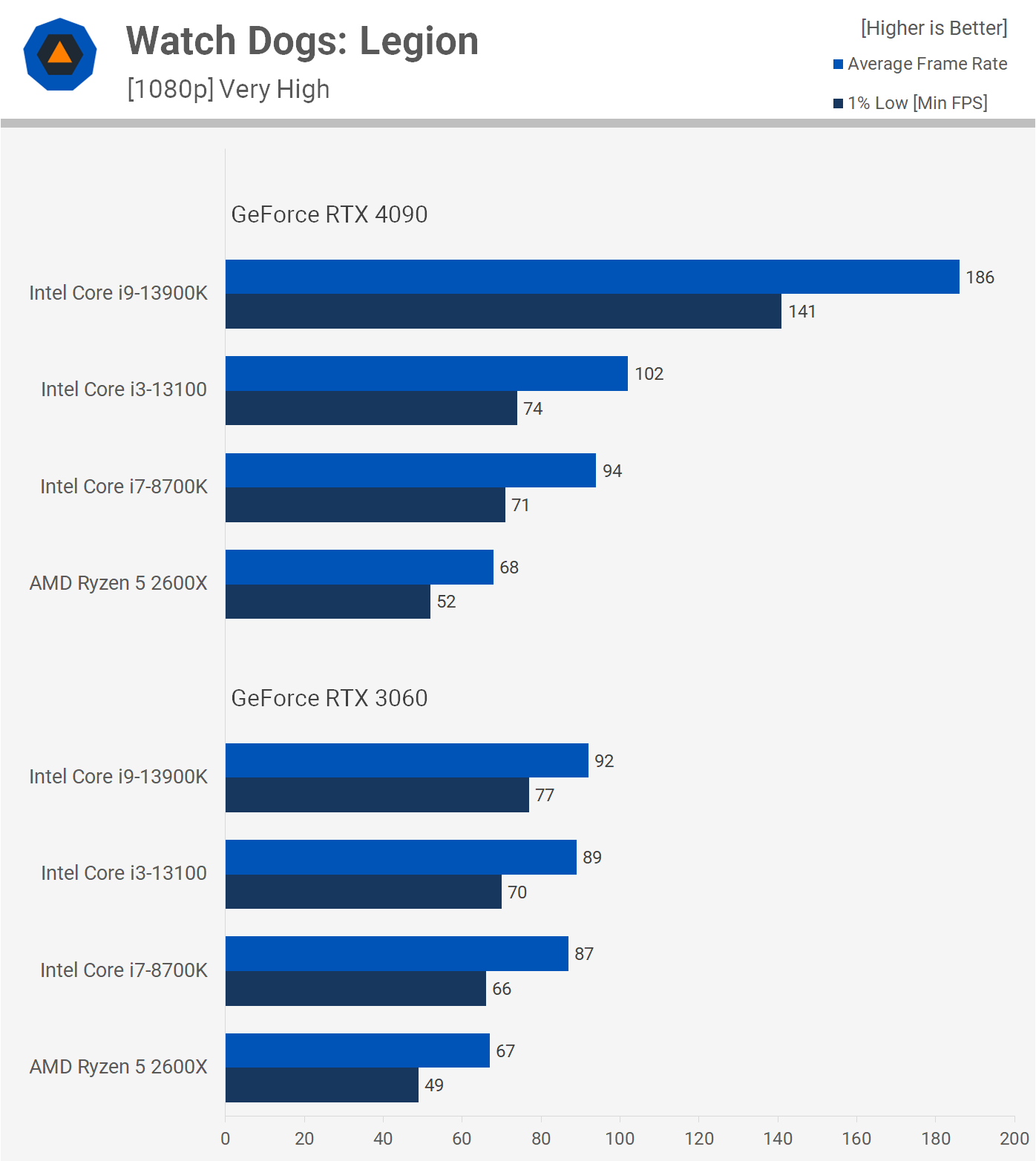

Watch Dogs: Legion (1080p Very High)

Now let's use some more modern games, like Watch Dogs Legion, released in 2020. Once again we see that the mid-range GeForce GTX 1070 only provided the Intel CPU with a 5% win, going from 57 to 60 fps. Swapping out the GTX 1070 for the RTX 3060, which is newer but still relatively low-end by today's standards, we see that the margin blows up to 30% in favor of the 8700K.

Going from the RTX 3060 to the RTX 3080 increased that margin to 38% and this ultimately is the effective performance difference between these two CPUs in Watch Dogs Legion, as the margin failed to grow with the more powerful GeForce RTX 4090.

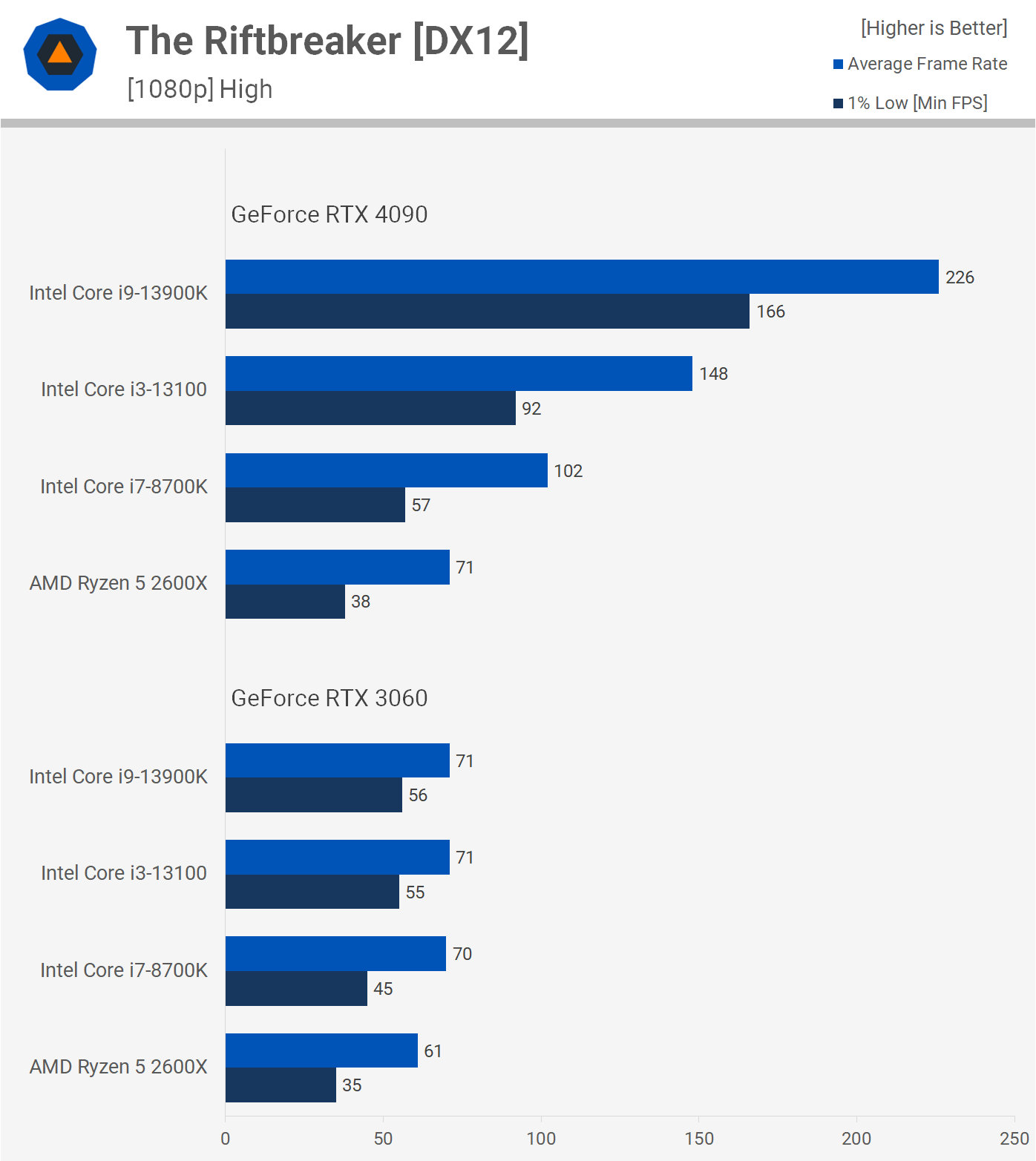

The Riftbreaker is a late 2021 release and it's very CPU demanding. Even so, using the GeForce GTX 1070 saw the Core i7-8700K just 4% faster than the Ryzen 2600X when comparing the average frame rate, at around 50 fps.

Upgrading to the RTX 3060 saw that margin more than triple, to 15%. But even the RTX 3060 heavily masks the true margin: the GeForce RTX 3080 enabled the 8700K to produce 44% more performance, and this is the actual performance difference between these two CPUs, as we saw the same margin when using the mighty RTX 4090.

Benchmarks Using Current-gen CPUs

Hopefully those were eye-opening results for those of you pushing for GPU-limited CPU testing. Also keep in mind that there are many types of gamers who read our content. Some of you only play single player games and therefore heavily prioritize visuals over FPS. In that instance 60 fps might be the target, in which case the Ryzen 5 2600X will mostly keep you happy even in 2023.

But others might enjoy competitive multiplayer shooters where 120 fps might be the minimum acceptable frame rate, with a target closer to 200 fps or higher. The point is, the games you play aren't the same games everyone plays, and the way you like to play them isn't the way everyone likes to play them. For example, I personally target a minimum of 90 fps, as this is the highest input delay I'll accept when playing most games, even single player titles. In Watch Dogs Legion that means I'd be better served by the i7-8700K than by the 2600X.

Another point worth taking into consideration is that resolution is irrelevant when talking about performance targets. Remember this isn't a GPU benchmark, it's a CPU benchmark.

So let's say you are a somewhat serious multiplayer gamer: perhaps you play Warzone 2 and you like at least 200 fps, which for a competitive shooter is a reasonable performance requirement. It doesn't matter if you're gaming at 1080p, 1440p, 4K or any other resolution. It's presumed that you'll either bring enough GPU firepower to hit your desired frame rate at your chosen resolution, or you'll lower quality settings. In other words, if you require 200 fps, the CPU has to be able to facilitate 200 fps whether you're gaming at 1080p or 4K.

In the examples we just saw, the Ryzen 5 2600X can't push past 70 fps in Watch Dogs Legion, no matter how powerful your GPU is.
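To make the performance-target argument concrete, here is a minimal sketch. It assumes the roughly 70 fps ceiling quoted above for the Ryzen 5 2600X in Watch Dogs Legion. The function name and the list of targets are illustrative: 60 and 90 fps echo the targets mentioned earlier, and 200 fps is the competitive-shooter figure, applied to this game only as an example. Note that resolution does not appear as an input at all.

```python
def cpu_can_hit(target_fps: float, cpu_limit_fps: float) -> bool:
    """True if the CPU ceiling is high enough for the target.

    Resolution is deliberately not a parameter: a faster GPU or lower
    settings can raise the GPU-side limit, but not the CPU-side one.
    """
    return cpu_limit_fps >= target_fps


# Approximate CPU ceiling taken from the Watch Dogs Legion results above.
RYZEN_2600X_LIMIT = 70.0

results = {target: cpu_can_hit(target, RYZEN_2600X_LIMIT)
           for target in (60, 90, 200)}

for target, ok in results.items():
    verdict = "reachable with enough GPU" if ok else "out of reach on this CPU"
    print(f"{target} fps target: {verdict}")
```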

Watch Dogs: Legion (1080p and 4K Very High)

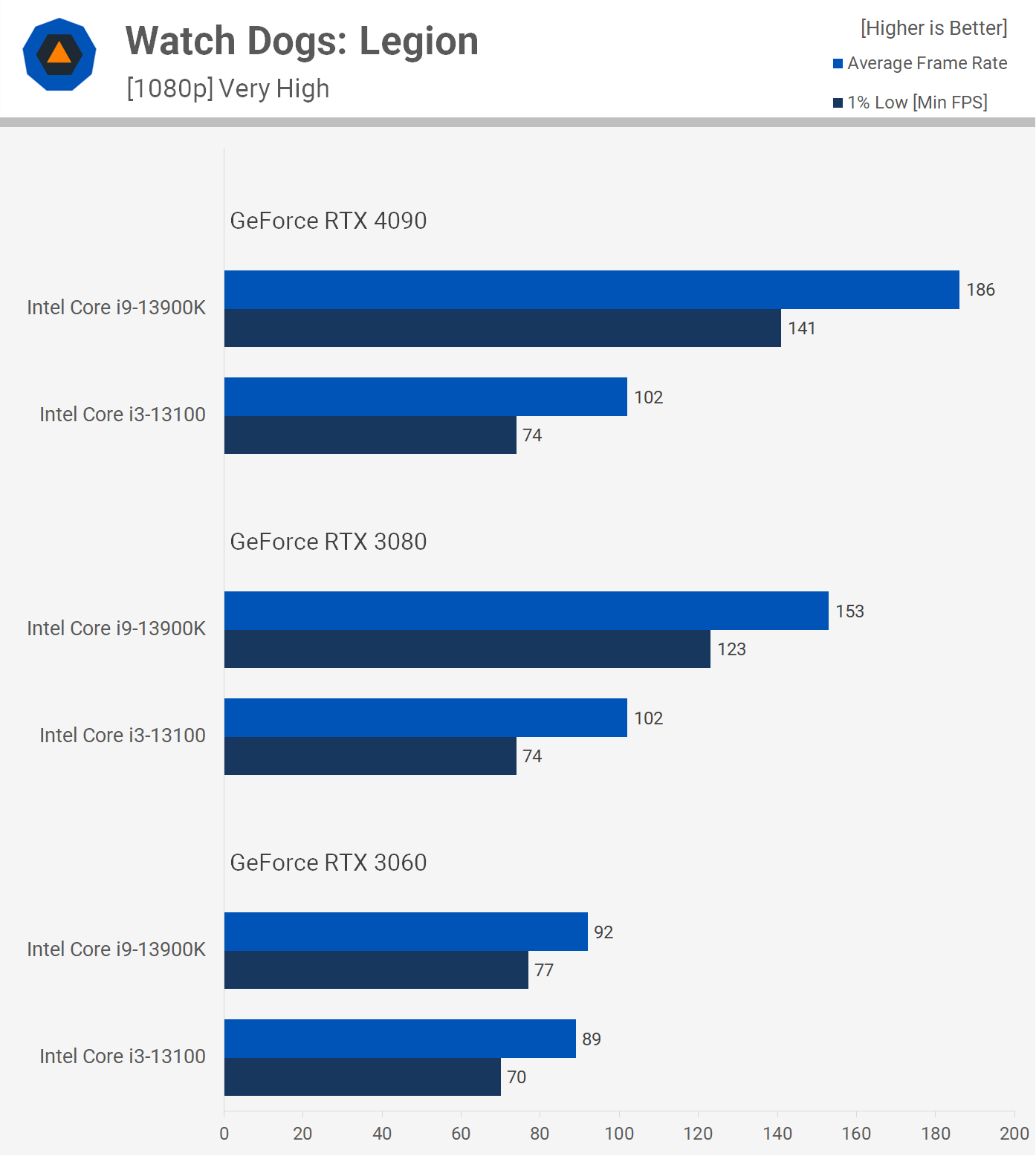

So what about another example using modern PC hardware? For this we want a more extreme case, so let's compare the $150 Core i3-13100 to the $610 Core i9-13900K. We also want to look at 4K performance, as that's something that's been coming up a lot in response to our CPU benchmarks. For some reason some readers believe 4K data is useful for CPU benchmarking, so let's address that...

Testing Watch Dogs Legion at 1080p, using what is arguably a more "suitable" GPU for the Core i3-13100 in the GeForce RTX 3060, the 13900K is a mere 3% faster when comparing the average frame rate. Is that useful data? In our opinion it's not, but let's dig a little deeper.

Now, when using the GeForce RTX 3080, the Core i9-13900K is 50% faster than the 13100 – 50%!!

That's a massive performance difference. Therefore, this data is far more useful as it not only gives a better indication of the true difference in performance between the CPUs, but it actually tells you what the i3-13100's limits are in this game.

This is further reinforced by testing with an even faster GPU. The RTX 4090 still saw the 13100 limited to 102 fps. However, the 13900K gained a further 22% performance, hitting 186 fps, making it a staggering 82% faster than the 13100. That's quite a different story from the 3% uplift we saw using the RTX 3060.
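The percentages quoted here follow directly from the frame rates. As a quick check, the sketch below recomputes them from the 102 fps and 186 fps averages given above. The 13900K's RTX 3080 result is not stated in the text, so it is back-calculated from the "further 22%" gain and should be read as an estimate; the sketch also assumes the 13100 sat at the same 102 fps with the RTX 3080. The helper name is illustrative.

```python
def margin_pct(faster: float, slower: float) -> float:
    """Percentage by which `faster` exceeds `slower`."""
    return (faster / slower - 1.0) * 100.0


i3_13100_fps = 102.0     # average with the RTX 4090 at 1080p (from the text)
i9_13900k_4090 = 186.0   # average with the RTX 4090 at 1080p (from the text)

# Estimate: the 13900K gained "a further 22%" going from the 3080 to the 4090.
i9_13900k_3080 = i9_13900k_4090 / 1.22

print(f"RTX 4090 margin: {margin_pct(i9_13900k_4090, i3_13100_fps):.0f}%")
print(f"RTX 3080 margin (estimated): "
      f"{margin_pct(i9_13900k_3080, i3_13100_fps):.0f}%")
```

This gives roughly 82% for the RTX 4090 and just under 50% for the RTX 3080, in line with the figures quoted.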

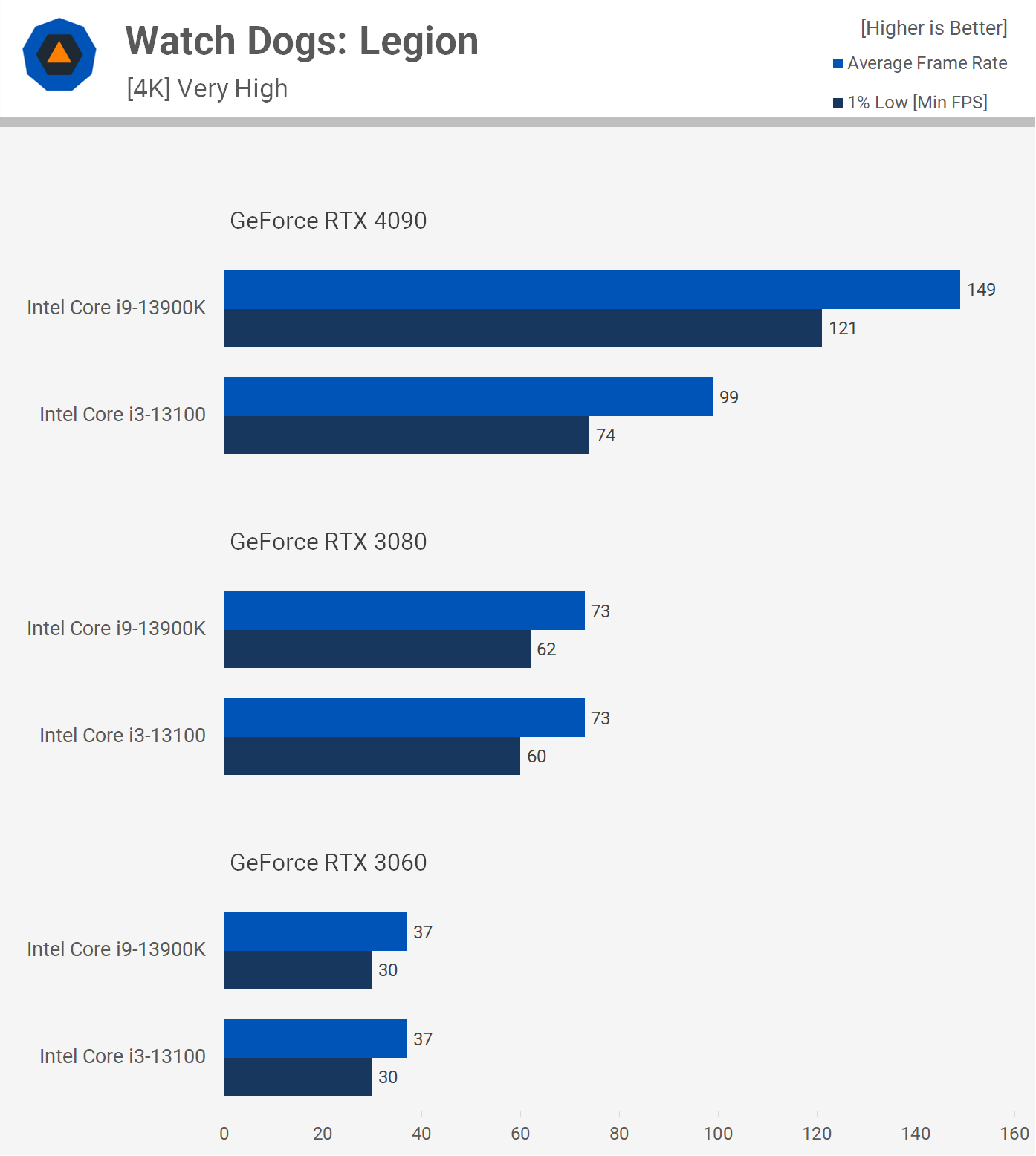

What about 4K testing? Here we saw no difference in performance between these two CPUs when using the RTX 3060 and RTX 3080, as the data is heavily limited by the GPU.

As we saw at 1080p, the 13100 had no issue driving 102 fps on average with 1% lows of 74 fps, both well above what the RTX 3060 and 3080 can drive at 4K. We only start to run into the limits of the 13100 at 4K when using the RTX 4090, which limited performance to 99 fps whereas the 13900K was good for 149 fps, a 51% increase for the Core i9 and remarkably similar to the margins seen with the RTX 3080 at 1080p.

There are several issues with this data beyond what we've already shown. For example, if this was a title where it made sense to chase big frame rates for competitive gameplay, limiting the 13900K to 149 fps with the RTX 4090 at 4K doesn't show you the full throughput capabilities of this CPU.

Again, if you simply wanted 200 fps here, you wouldn't settle for 150 fps. You'd lower the visual quality settings in the hope that your CPU could allow the GPU to go further. Testing at 1080p shows that the 13900K in its current configuration fell just short of 200 fps, though it's unclear if this is a CPU or GPU limit without further testing. The point is that we're closer to the limit here, and if this was a competitive multiplayer title we would have tested with a lower quality preset.

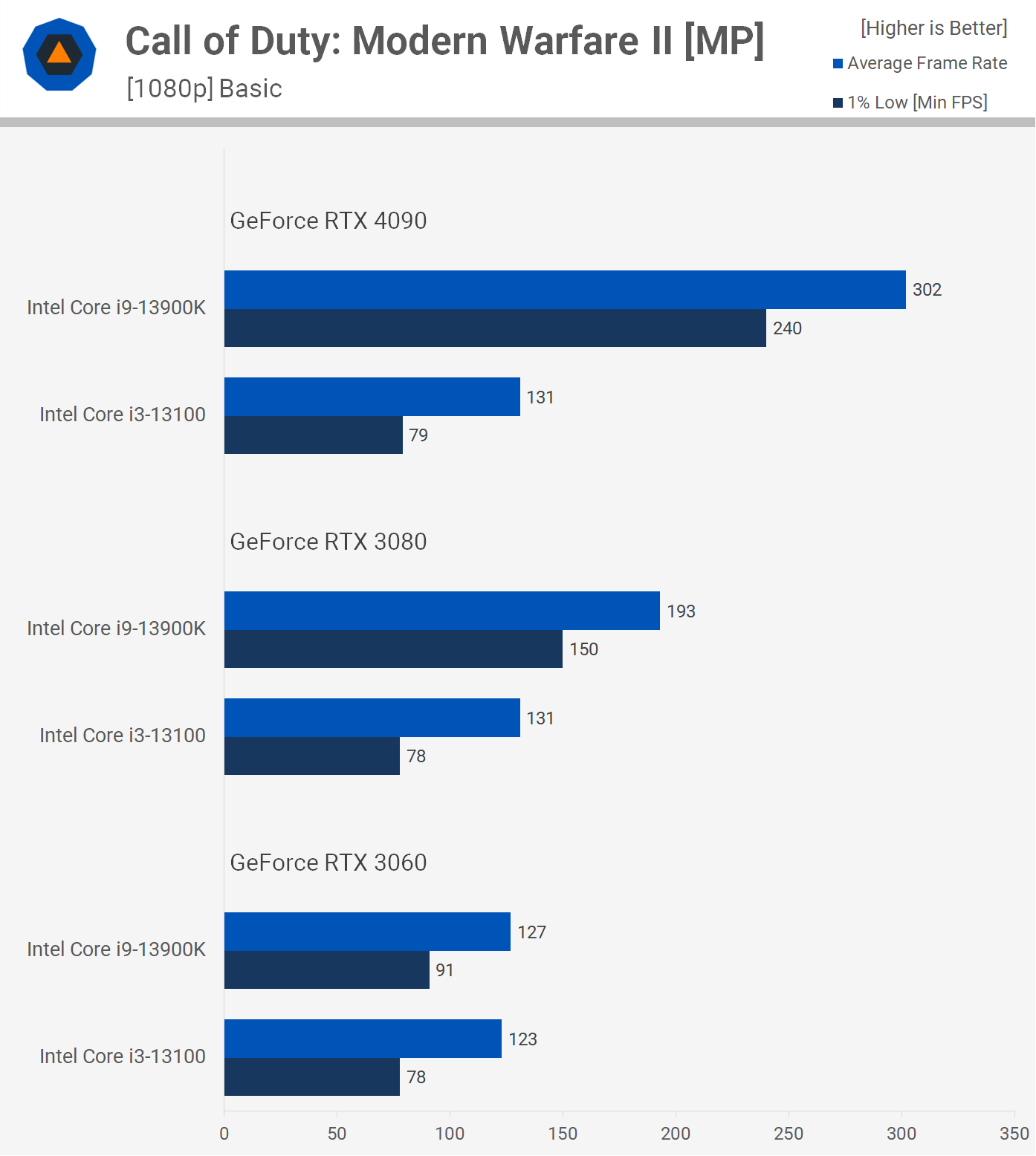

Call of Duty: Modern Warfare II (1080p and 4K)

Speaking of competitive multiplayer titles using lower quality settings, here's Call of Duty Modern Warfare II using the basic quality preset. Armed with the RTX 3060 both CPUs allowed for over 120 fps on average, though the 1% lows of the i3-13100 took a hit. Still, looking at the average frame rate we see that the Core i9-13900K is just 3% faster than the Core i3.

The situation changes dramatically when we swap out the RTX 3060 for the 3080. Now the 13900K is 47% faster hitting 193 fps, while the 13100 saw no improvement to 1% lows with a mild 7% boost to the average frame rate.

But we're nowhere near the limits of the 13900K as the Core i9 processor spat out an impressive 302 fps when paired with the RTX 4090, making it over 2x faster than the 13100, a 130% improvement here. Again, we're able to confirm the limits of the 13100 at 130 fps, so we know exactly what this part is capable of, something we couldn't determine with the RTX 3060.

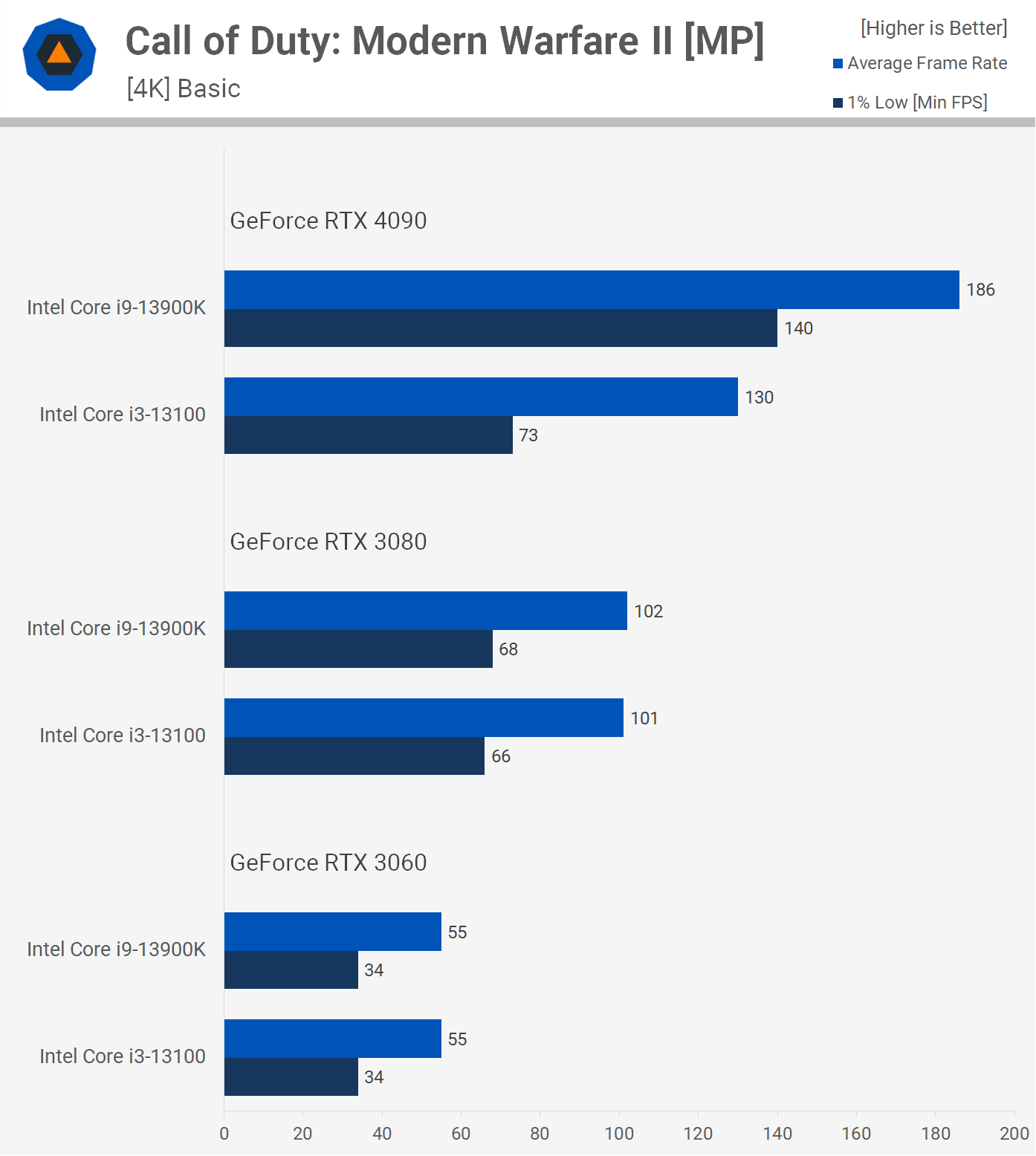

Now here's a look at the 4K data and you can see why these numbers are unhelpful and completely pointless. The GeForce RTX 3060 limited both CPUs to 55 fps, while the RTX 3080 limited performance to just over 100 fps and we know the 13100 is good for up to 130 fps, as seen in the 1080p testing.

To see any difference in performance we require the GeForce RTX 4090 and this reveals a 43% performance advantage for the 13900K, so a substantial uplift, but also not the true difference.

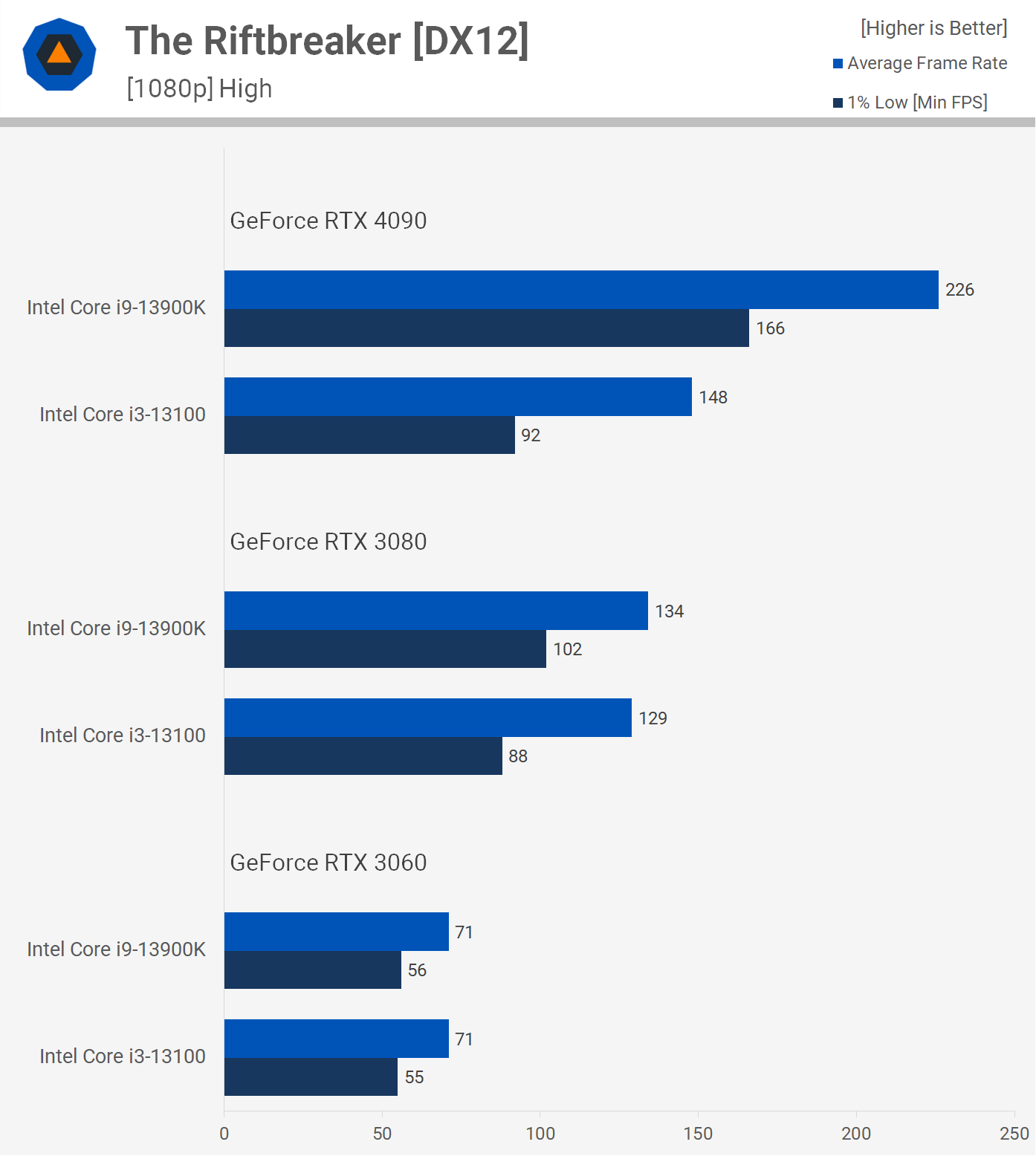

The Riftbreaker (1080p and 4K)

The last game we have is The Riftbreaker and you're probably starting to see a pattern emerge. Using the RTX 3060 tells us nothing useful other than the fact that the RTX 3060 can only render 71 fps on average at 1080p in this title, using the high quality settings. The RTX 3080 data is slightly more useful and while it does indicate that the 13100 is perfectly adequate here, it doesn't tell us which CPU is best for going well beyond 120-130 fps, or which CPU will end up faster in the future.

For that, the GeForce RTX 4090 has some concrete data for us.

Here the 13900K is 53% faster, hitting 226 fps, while the 13100 maxed out at 148 fps. That's still an impressive result for the Core i3-13100, and if all you require is ~140 fps, then the Core i3 will likely work. It took the RTX 4090 at 1080p to answer that question.

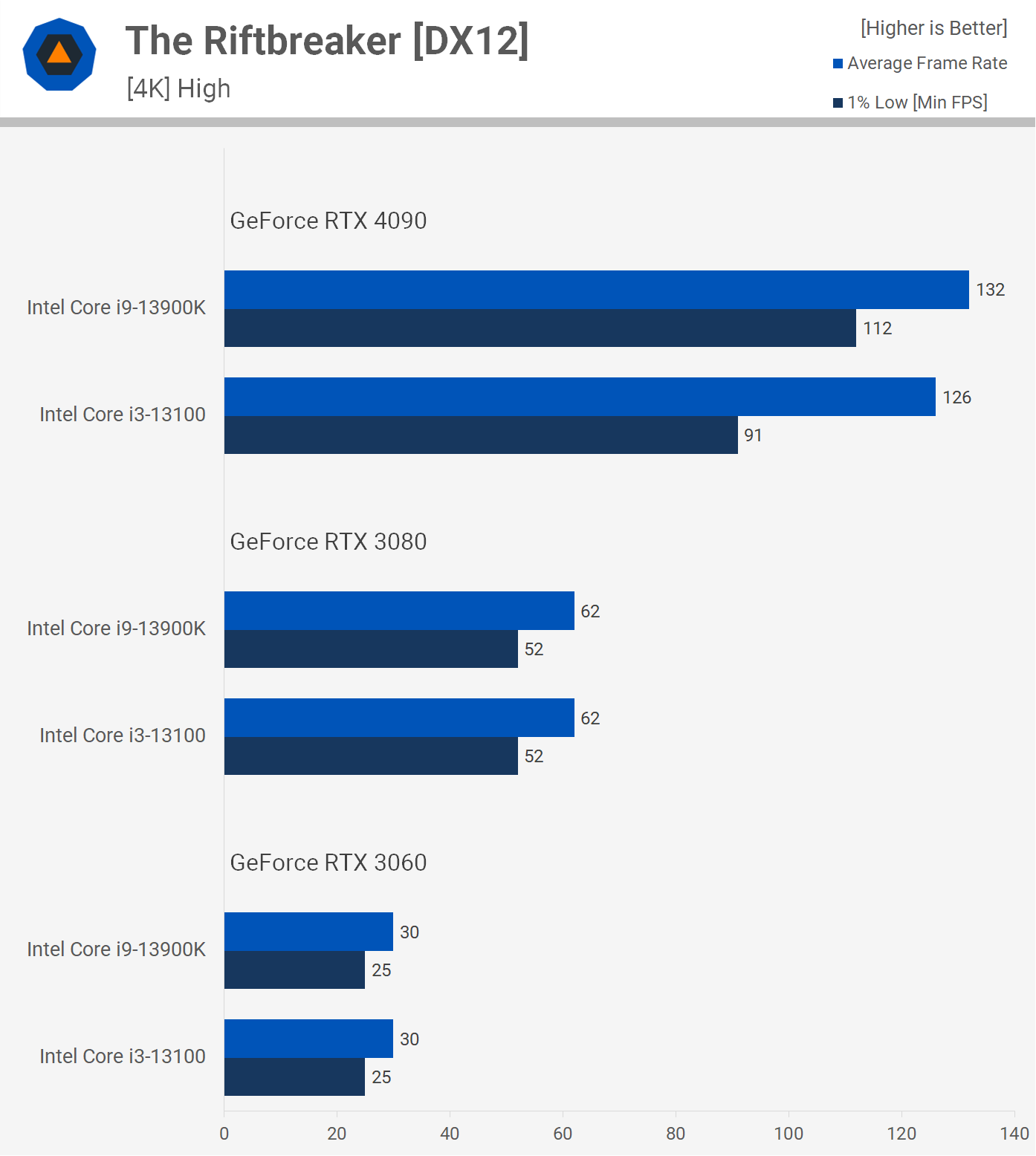

Then as we've come to learn, the more GPU limited 4K data masks the real performance difference between Core i3 and Core i9 processors, and even the 23% improvement to the 1% lows for the 13900K when armed with the RTX 4090 is a far cry from the real margin.

Mixed Data (13th-gen vs 8th-gen CPUs)

Lastly, here's some mixed data, comparing the new 13th-gen Intel CPUs with the older Core i7-8700K and Ryzen 2600X. Let's say you owned a Core i7-8700K and you're looking at retiring it. Granted, you will want something that offers substantially more performance to justify the investment.

Testing Watch Dogs Legion using the RTX 3060 at 1080p shows a mere 6% uplift from the 8700K to the 13900K. That's seriously disappointing and would have you wondering how to go about boosting performance. However, under the same conditions but with the RTX 4090, we see that the 13900K is actually 98% faster than the 8700K (basically twice the performance), and the CPU upgrade will enable a truly high refresh rate experience, assuming you have the GPU power to unlock 186 fps in this title, or are willing to lower visual quality settings to make such a performance target possible with a lesser graphics card.

It's a similar story in Modern Warfare II. Using the RTX 3060, the 13900K looks much like the Core i3-13100 or Core i7-8700K, all at ~120-130 fps. However, swapping out the RTX 3060 for the RTX 4090 reveals the true CPU performance difference, and now the 13900K shows a 113% boost over the 8700K.

The Riftbreaker shows more of the same. Using the RTX 3060, the 13900K matched the average frame rate of the 8700K, although 1% lows were 24% higher, with the improved IPC and DDR5 memory bandwidth likely playing a key role here. Either way, if we look at the average frame rate there isn't much to report when using the RTX 3060.

Switching to the RTX 4090 changes everything and even the i3-13100 is offering big performance gains over the 8700K. The 13900K is also 122% faster than the 8700K when comparing the average frame rate and a staggering 191% faster when looking at the 1% lows.

How We Test, Explained

Hopefully by this point you have a more complete understanding of why testing CPU performance with a strong GPU bottleneck is a bad idea, and significantly more misleading than the well-established testing methods used by almost all tech media outlets. We get why readers want to see more realistic testing, which is why we stick to commonly used resolutions such as 1080p instead of 720p, as well as quality presets that make sense for a given title.

We also understand why readers want to see what kind of performance upgrade a new CPU will provide for their specific configuration, but you can easily work that out, with just a bit of digging.

For that you have to work out how much performance your graphics card will deliver in a chosen game, when not limited by CPU performance. Look at GPU reviews and check out the performance at your desired resolution, then cross reference that with CPU data from the same game.

The RTX 3060, for example, can deliver around 90 fps in Watch Dogs Legion at 1080p, but the Ryzen 5 2600X is only good for around 70 fps, so upgrading to the Core i3-13100 would net you around 30% more performance in this case.
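That cross-referencing step can be sketched as a small calculation. It takes the approximate figures used in this article for Watch Dogs Legion at 1080p (about 90 fps for the RTX 3060, the roughly 70 fps ceiling noted earlier for the Ryzen 5 2600X, and 102 fps for the Core i3-13100) and assumes the simplified rule that the slower component sets the frame rate. The function names are illustrative.

```python
def expected_fps(gpu_fps: float, cpu_fps: float) -> float:
    """Simplified estimate: the slower of the two components sets the pace."""
    return min(gpu_fps, cpu_fps)


def upgrade_gain_pct(gpu_fps: float, old_cpu_fps: float, new_cpu_fps: float) -> float:
    """Estimated gain from swapping the CPU while keeping the same GPU."""
    before = expected_fps(gpu_fps, old_cpu_fps)
    after = expected_fps(gpu_fps, new_cpu_fps)
    return (after / before - 1.0) * 100.0


rtx_3060_fps = 90.0   # from a GPU review, not CPU limited (approximate)
r5_2600x_fps = 70.0   # from CPU data for the same game (approximate)
i3_13100_fps = 102.0  # from CPU data for the same game

gain = upgrade_gain_pct(rtx_3060_fps, r5_2600x_fps, i3_13100_fps)
print(f"Estimated gain on an RTX 3060: {gain:.0f}%")
```

The result lands a little under 30%. After the upgrade the GPU becomes the limit in this sketch, so a CPU faster than the 13100 would add nothing more on this card.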

Meanwhile, the argument that no one would ever pair a Core i3-13100 with a GeForce RTX 4090 is simply shortsighted and, of course, misses the point entirely. That's because, based on polling our readers, we know that most of you upgrade your graphics card ~3 times before upgrading your CPU. In other words, most of you don't upgrade your CPU every time you make a GPU upgrade, and most of you only upgrade your GPU when you're getting at least 50% more performance.

So while Core i3-13100 shoppers today might be looking at RTX 3060 or RTX 3070 levels of GPU performance, a 50% jump in performance would land RTX 3060 owners at around an RTX 3080, while 3070 owners would be looking more at an RTX 4070 Ti. That's a problem, as the 13100 was a great pairing for the 3060 in our testing, but almost always heavily limited the RTX 3080, especially for those targeting 140 fps or more.
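Here is a rough projection of that upgrade path, using Watch Dogs Legion at 1080p as the example. The CPU ceilings (102 fps and 186 fps) and the roughly 90 fps RTX 3060 figure come from this article. Treating a "50% faster" GPU as exactly 1.5x the RTX 3060 result is an assumption, as is the simplified model in which the slower component sets the frame rate. Names are illustrative.

```python
CPU_LIMITS = {"Core i3-13100": 102.0, "Core i9-13900K": 186.0}

current_gpu_fps = 90.0                  # RTX 3060, approximate
future_gpu_fps = current_gpu_fps * 1.5  # assumed 50% faster replacement


def realized_gain_pct(cpu_limit: float, old_gpu: float, new_gpu: float) -> float:
    """How much of a GPU upgrade actually shows up on screen."""
    before = min(cpu_limit, old_gpu)
    after = min(cpu_limit, new_gpu)
    return (after / before - 1.0) * 100.0


gains = {cpu: realized_gain_pct(limit, current_gpu_fps, future_gpu_fps)
         for cpu, limit in CPU_LIMITS.items()}

for cpu, gain in gains.items():
    print(f"{cpu}: {gain:.0f}% more performance from the GPU upgrade")
```

Under these assumptions the Core i3 owner sees only about 13% from a GPU that is 50% faster, while the Core i9 owner sees the full 50%.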

Ultimately, you want to isolate the component you're testing, so you can show what it's really capable of, and we as reviewers are doing this using real-world testing. The idea isn't to upsell you on a Core i9-13900K from a Core i3-13100, but rather to accurately show you what both products are capable of, allowing you to make an informed buying decision.

Another scenario is that you have two CPU options at the same price point, let's say two CPUs that cost $200. Testing those CPUs with a GPU or resolution that results in GPU limited testing would almost certainly lead you to believe that both CPUs deliver a similar level of performance, so it won't matter which one you buy. But in reality one could be significantly faster than the other for gaming, let's say 50% faster...

So when it comes time to upgrade your GPU, one CPU will offer no extra performance in games that are CPU limited, while the other CPU will enable big 50% performance gains. There isn't a single scenario where heavily GPU limited CPU benchmarking makes sense, or is useful on its own.

And that's going to do it for this explainer. Hopefully it helps those of you who were confused about why reviewers test the way they do to better understand the methods involved, and to make better sense of CPU reviews. That was the goal all along.