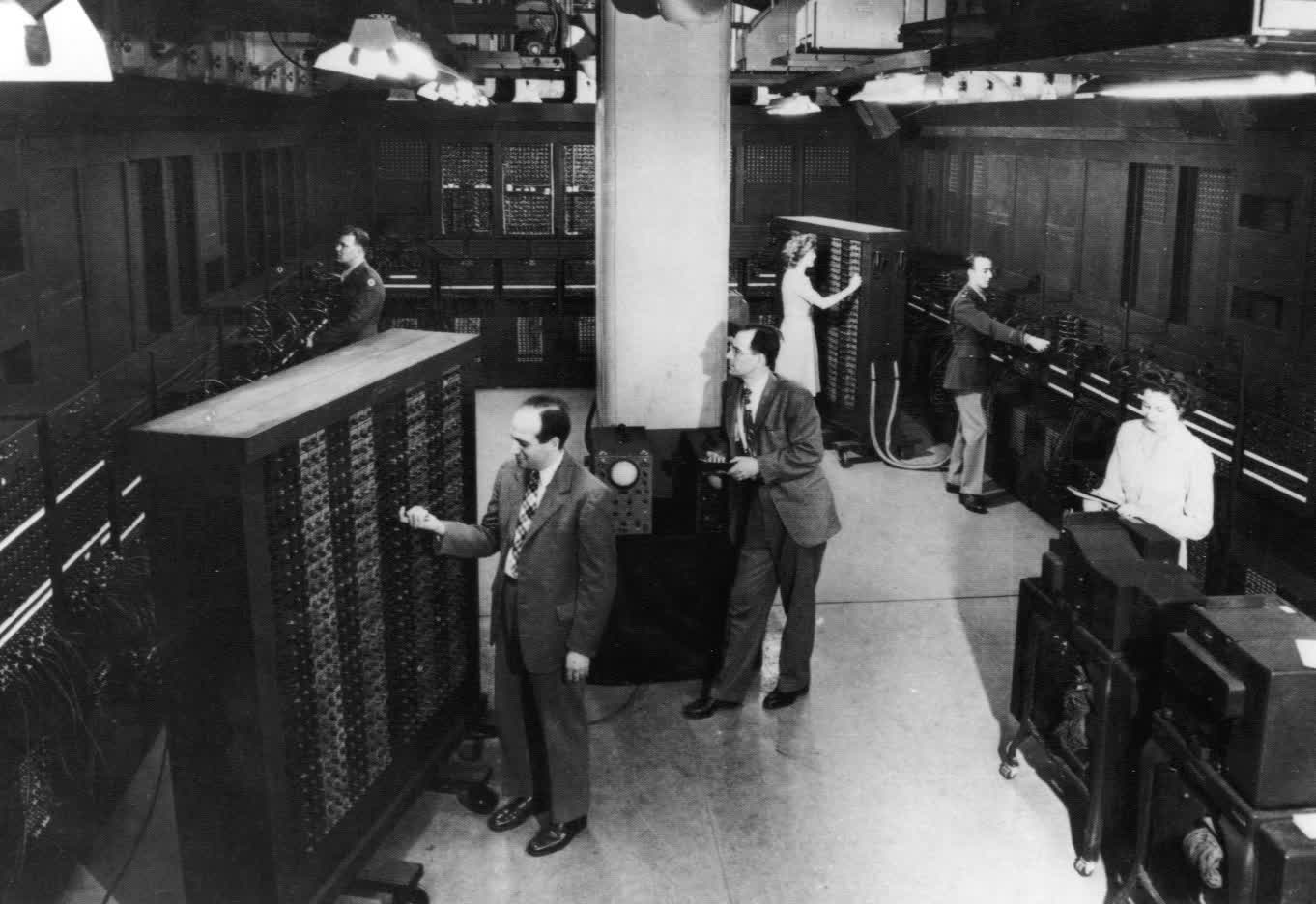

The Electronic Numerical Integrator and Computer, or ENIAC as it is commonly known, is a lasting product of the Second World War. It is generally credited with starting the modern computing age, even though its original purpose was far more modest: it was intended as a ballistics calculator for the war effort.

The 30-ton computer consumed 160 kilowatts of electrical power, took up more than 1,800 sq feet (167 sq meters), and contained more than 17,000 vacuum tubes. It could perform 5,000 additions, 357 multiplications, or 38 divisions per second, an unprecedented feat at the time. However, its true advantage and novelty was that it was the first programmable, general-purpose electronic computer, and could therefore be used for tasks well beyond its original purpose.


A programmable environment opens the door for innovators across different fields to take advantage of the underlying computing fabric. Roughly four decades passed between the invention of the vacuum tube and the completion of the ENIAC; however, the realization of a programmable system paved the way for humans to reach the moon, for a myriad of medical techniques and technologies, and for an unprecedented turnaround time in vaccine development.

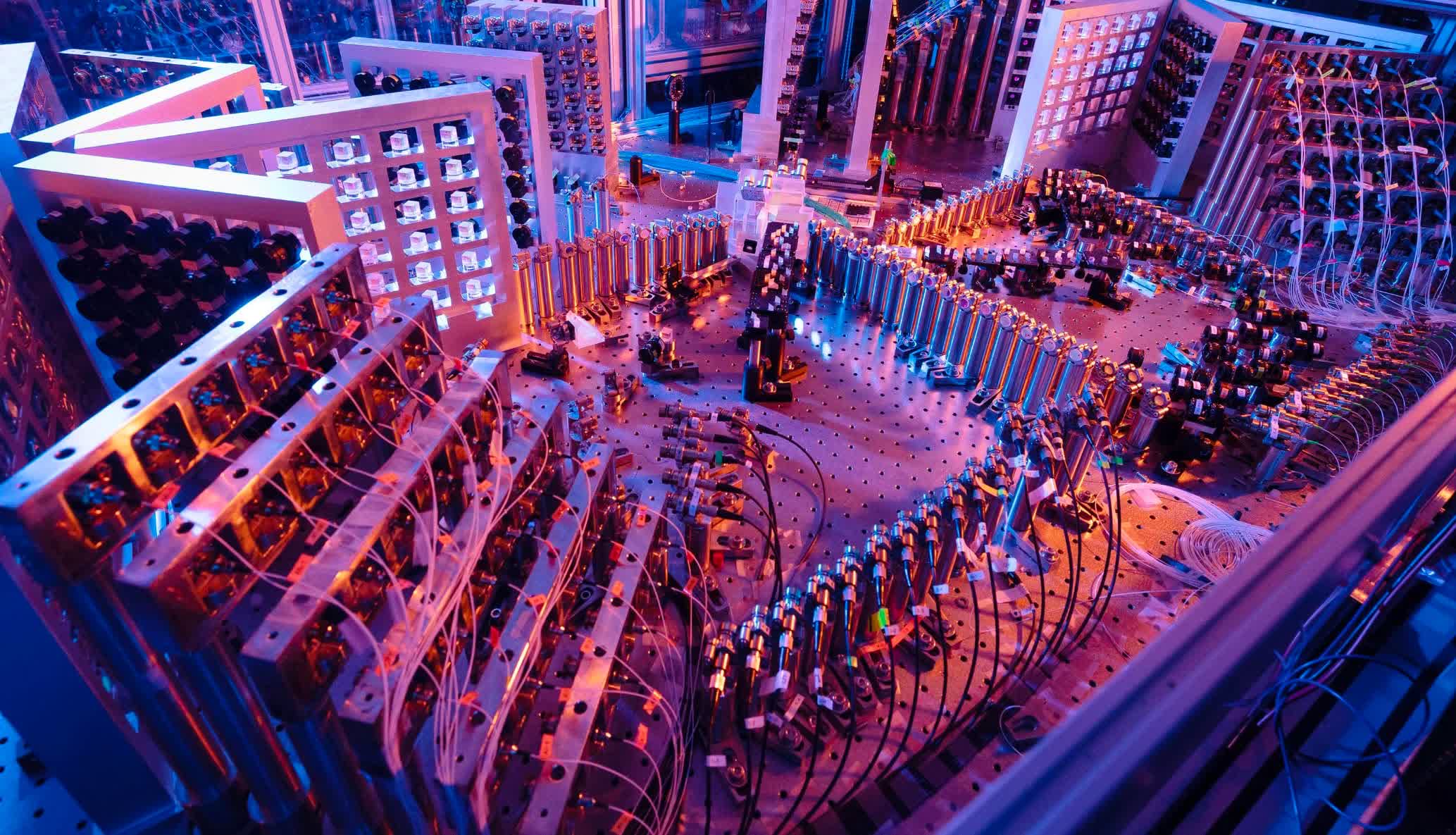

Quantum computing "systems" are still in development, and as such the entire system paradigm is in flux. While the race to quantum supremacy amongst nations and companies is picking up pace, it's still too early to call it a "competition."

There are only a few potential qubit technologies deemed practical, the programming environment is nascent with abstractions that have still not been fully developed, and there are relatively few (albeit extremely exciting) quantum algorithms known to scientists and practitioners. Part of the challenge is that it is very difficult and nearly impractical to simulate quantum applications and technology on classical computers – doing so would imply that classical computers have themselves outperformed their quantum counterparts!

Nevertheless, governments are pouring funding into this field to help push humanity to the next big era in computing. The past decade has shown impressive gains in qubit technologies, quantum circuits and compilation techniques are being realized, and the progress is leading to even more (good) competition towards the realization of full-fledged quantum computers.

ICYMI: What is Quantum Computing?

In our first article of this two-part series, we focused on the physical aspects that make quantum computing fundamentally alluring to researchers today, and the potential technical and societal benefits that make it a worthy investment.

In this article, we'll focus on the quantum computing stack, exploring the recent developments in qubit technologies, how they can be programmed for computations, and the challenges and open questions in the field.

Let's dive right in!

Recap of Fundamentals

In the first part of this series, we discussed various aspects which make classical computing fundamentally different from quantum computing. We encourage you to go over that article for more details, but a few basic takeaways are:

- Quantum bits (qubits) are not binary: they can exist in a superposition of both the 0 and 1 values, and thus are much more expressive than a classical digital bit.

- Qubits exhibit a property known as entanglement. This property means that two (or more) qubits are intrinsically associated with one another. Einstein famously described this as "spooky action at a distance."

- Qubits decohere over time. In other words, maintaining the value of qubits is a fundamental challenge for the realization of the fault-tolerant (FT) quantum systems of the future.
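The first two takeaways can be made concrete with a small state-vector sketch. It assumes the standard textbook picture, in which a qubit is a length-2 vector of complex amplitudes and the probability of each measurement outcome is the squared magnitude of its amplitude. The variable names are illustrative and do not come from any particular quantum library.

```python
import numpy as np

# Basis states |0> and |1> written as amplitude vectors.
zero = np.array([1, 0], dtype=complex)
one = np.array([0, 1], dtype=complex)

# An equal superposition: (|0> + |1>) / sqrt(2).
plus = (zero + one) / np.sqrt(2)
probs_single = np.abs(plus) ** 2  # probabilities of reading 0 and 1

# A two-qubit entangled (Bell) state: (|00> + |11>) / sqrt(2).
bell = (np.kron(zero, zero) + np.kron(one, one)) / np.sqrt(2)
probs_pair = np.abs(bell) ** 2  # outcome order: 00, 01, 10, 11

print(probs_single)
print(probs_pair)
```

In the Bell state, only the outcomes 00 and 11 have nonzero probability, so reading one qubit fixes what the other will read. That correlation is what the entanglement takeaway refers to.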

Given these physical building blocks, what types of technologies can actually take advantage of these properties?

Qubit Technologies

Designing qubits for a quantum computer is no simple task. Quantum systems require very strict isolation of particles and an ability to manipulate complex physical systems to a level of precision never attempted before.

There are a few competing technologies that have come up in recent years, including trapped ion qubits, superconducting qubits, semiconductor spin qubits, linear optics, and Majorana qubits. The general philosophy of qubit design can be summarized by the following few points (called the DiVincenzo criteria):

- Well-characterized qubits for a scalable system

- Ability to initialize qubits (for computation)

- Stability of qubits (i.e., long decoherence times)

- Support for a universal instruction set for arbitrary computation

- Ability to measure qubits (i.e., readout in computational basis)

Interestingly, these goals are in tension with one another – as if quantum computing weren't complex enough! Specifically, initializing a qubit and performing computations on it require interacting with the system, which inherently breaks the isolation needed to keep a qubit stable. This is one reason why it is fundamentally difficult to build a quantum computer.

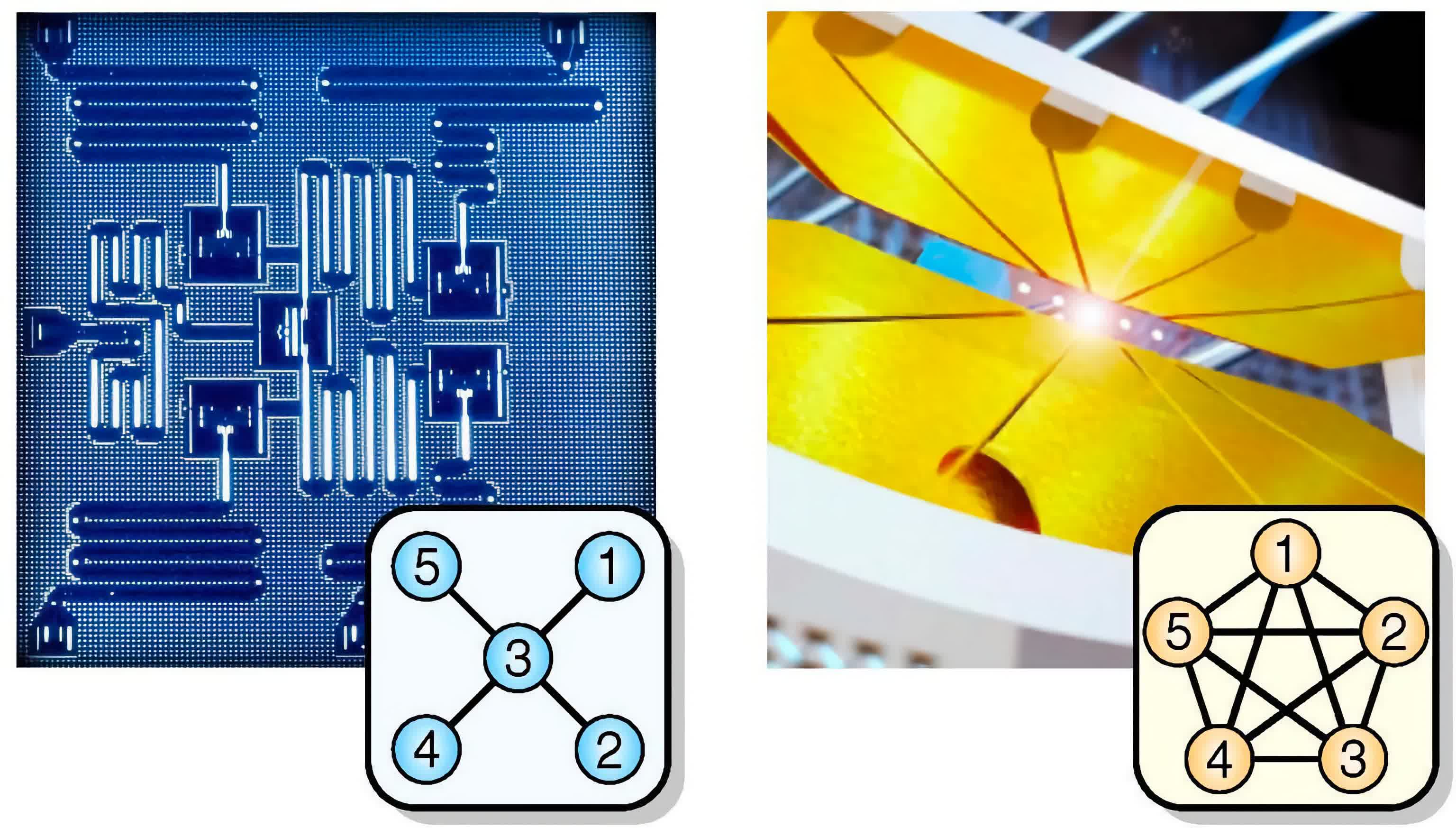

Nevertheless, the two most promising candidates for NISQ-era (noisy intermediate-scale quantum) qubits have been the trapped-ion qubit and the superconducting qubit.

Trapped-Ion Qubits

A trapped ion qubit operates on atoms. Since such a qubit uses the properties of atomic ions, it can naturally leverage the quantum mechanical properties via internal energy levels of the atoms. Common ions such as Ca+, Ba+, Yb+ (amongst many others) can be used.

Conceptually, the idea is to specify two atomic energy levels of the ion, and denote those as the 0 and 1 energy levels. The choice of the two levels determines how the qubit is controlled: a large separation between the energy levels (e.g., 10^15 Hz, around the frequency of light) means the use of laser beams to excite ions and move them from one state to another. These are called optical qubits.

Hyperfine qubits, on the other hand, have a smaller energy separation (around 10^10 Hz). This separation falls in the microwave frequency range, and thus the qubit can be controlled via microwave pulses. The advantage of microwave-controlled single-qubit gates is their lower error rate (10^-6), while the disadvantage is that it is difficult to focus on individual ions due to the large wavelength.
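To see why the wavelength matters, it helps to convert the two frequencies quoted above into wavelengths using the relation wavelength = c / frequency. This is a back-of-the-envelope sketch: the function name is illustrative, and the frequencies are the round figures from the text rather than values for a specific ion.

```python
# Speed of light in vacuum, in meters per second.
C = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Return the wavelength (in meters) of radiation at the given frequency."""
    return C / frequency_hz


optical = wavelength_m(1e15)    # optical-qubit scale
hyperfine = wavelength_m(1e10)  # hyperfine-qubit scale

print(f"optical:   {optical * 1e9:.0f} nm")
print(f"hyperfine: {hyperfine * 1e2:.1f} cm")
```

The optical wavelength comes out at a few hundred nanometers, while the microwave wavelength is a few centimeters, five orders of magnitude larger. Since neighboring ions in a trap typically sit only micrometers apart, a laser beam can be focused on a single ion far more easily than a microwave field can.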

How can you stabilize an ion and use it for computation? As the name implies, you need to "trap it" to keep it in place to control it. This can be done by applying an electromagnetic field in a particular way (an RF Paul trap) to freeze the ion at a saddle point. Once "trapped", the ions need to be physically cooled to reduce vibrations and decoherence. As you might imagine, such a setup would need many components, including lasers, control electronics, liquid helium for cooling, and an extreme level of precision during operation.

Trapped-ion qubits have been gaining quite the fanfare recently. On October 1, 2021, IonQ (a University of Maryland spin-out firm) was publicly listed on the NYSE. While the theoretical foundations have been around since 1995, only recently has their implementation really taken off.

Additionally, their lower error rates make them a compelling candidate for the qubit of choice during the NISQ era. While this is all promising, trapped-ion qubits do have some drawbacks, the biggest of which is that they are slower than superconducting qubits. That speed gap may matter when errors need to be detected and corrected in real time. Additionally, there are limits to how many ions can fit in a single trap and be made to interact. None of this detracts from the promise of trapped-ion qubits.

Superconducting Qubits

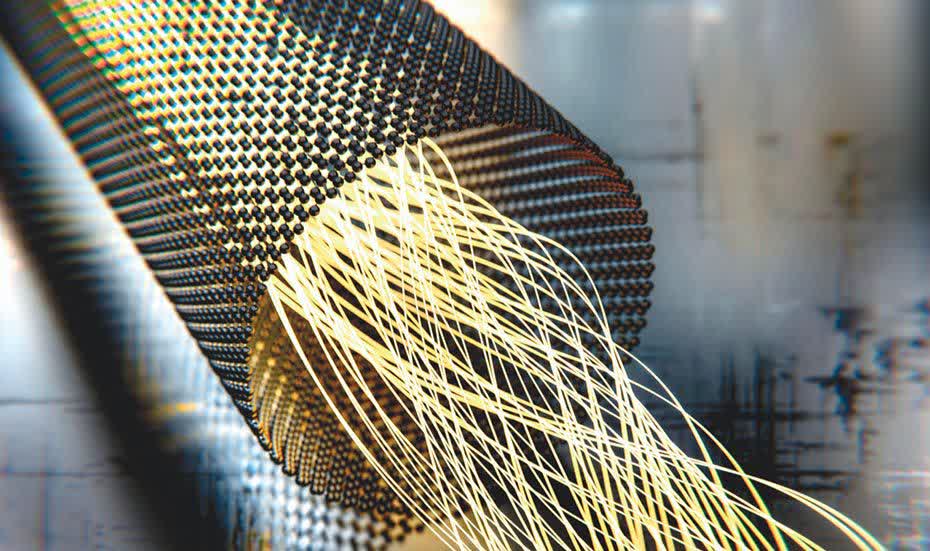

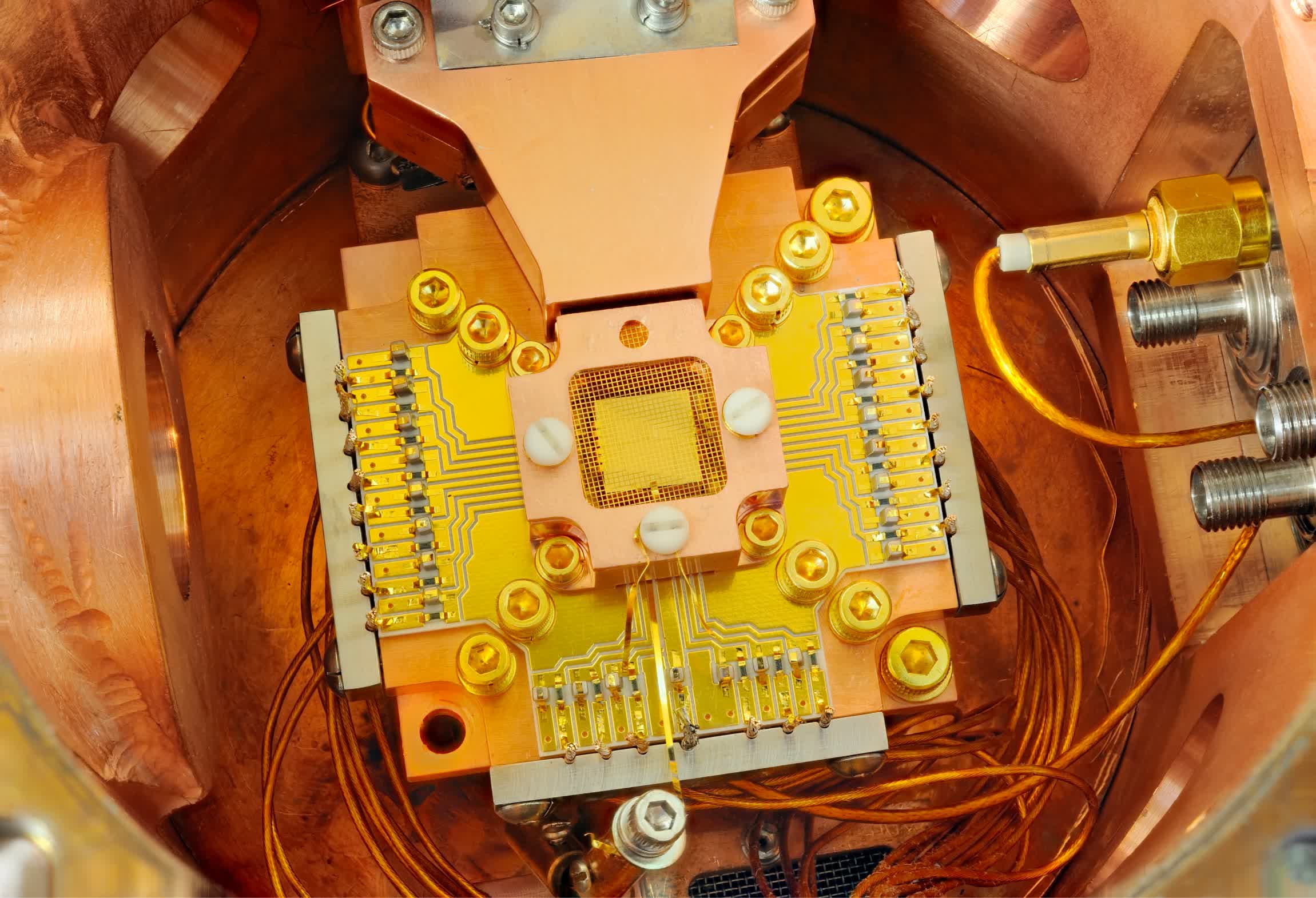

In contrast to trapped-ion qubits, a superconducting qubit is implemented with lithographically printed circuit elements. Essentially, these are "artificial atoms" with desired quantum mechanical properties. Until the recent rise of trapped-ion qubit technology, superconducting qubits attracted the most industrial attention, since they more closely follow existing integrated circuit technology.

A superconducting qubit revolves around an electrical circuit element called a Josephson junction, which is essentially an insulator in between two superconductors. Below a critical temperature, the superconductor's resistance drops to zero as its electrons bind together into pairs known as Cooper pairs.

Individual electrons have a spin of ±½ (particles of this kind are known as fermions), while Cooper pairs have a total spin of 0 (bosons). In a Josephson junction, Cooper pairs can quantum tunnel, giving rise to the discrete energy levels needed to make a qubit. The number of Cooper pairs tunneling across the junction relates to the quantum state. There are multiple types of superconducting qubits, including charge qubits, flux qubits, and phase qubits, which differ in their circuit designs and (in turn) in their operation and the physical mechanisms used to implement, control, and measure a qubit.

Superconducting qubits have opened the door for various technologies, including silicon-based spin qubits, and have had industrial backing for longer than trapped-ion qubits. There is no clear victor in the technology space at this point: each tech has its own advantages with various backers. At the same time, fundamental limitations will help push more innovation on all fronts to identify ideal qubits for future quantum computing systems.

Quantum Gates

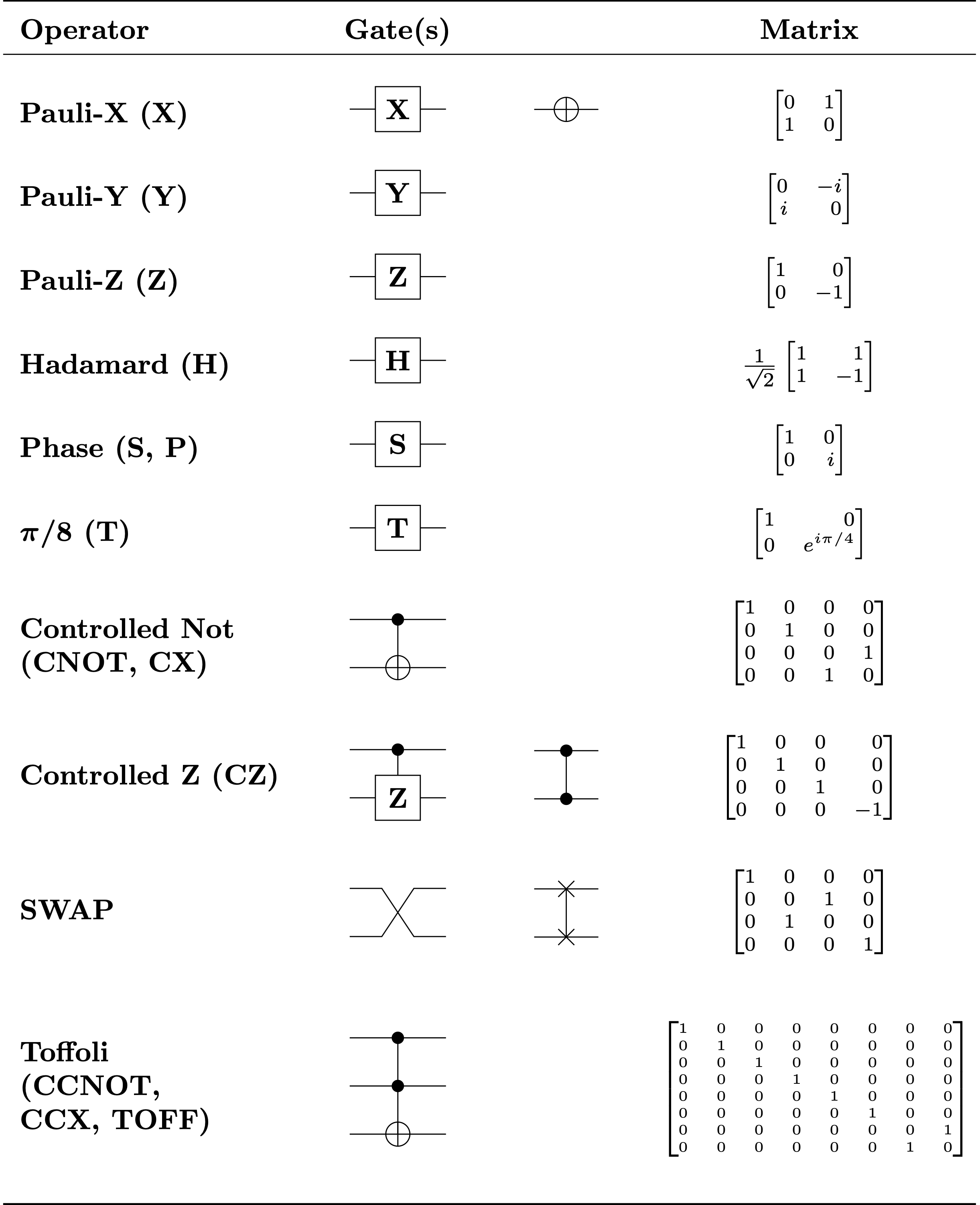

Depending on the qubit technology, quantum logic gates (or more precisely, qubit operations or quantum instructions) require different physical operations to process information. A quantum gate is fundamentally a logical transformation represented by a unitary matrix.

Recall that while classical computations operate under the laws of Boolean algebra, quantum computations operate under the rules of linear algebra. Thus, a transformation/gate is essentially an operation changing a qubit's state into another state, which can be interpreted based on the superposition of its 0 and 1 value.
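A short sketch makes the linear algebra view tangible. It writes two well-known single-qubit gates (the quantum NOT, usually called X, and the Hadamard gate) as 2x2 matrices, checks that they are unitary, and applies them to a qubit by matrix-vector multiplication. The helper `is_unitary` is an illustrative name, not part of any library.

```python
import numpy as np

# Two common single-qubit gates written as 2x2 matrices.
X = np.array([[0, 1], [1, 0]], dtype=complex)                # quantum NOT
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)  # Hadamard


def is_unitary(gate: np.ndarray) -> bool:
    """A matrix is unitary if its conjugate transpose is its inverse."""
    return np.allclose(gate.conj().T @ gate, np.eye(gate.shape[0]))


zero = np.array([1, 0], dtype=complex)  # the |0> state

flipped = X @ zero          # |0> becomes |1>
superposed = H @ zero       # |0> becomes (|0> + |1>) / sqrt(2)
restored = H @ superposed   # applying H a second time returns |0>

print(is_unitary(X), is_unitary(H))
print(np.abs(superposed) ** 2)
```

Applying the Hadamard gate twice returns the original state, a small preview of the reversibility discussed further on.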

One unique aspect of quantum gates (which sometimes makes them difficult to understand) is that they do not fit the classical concept of a Von Neumann architecture. John von Neumann was a mathematician working on the Manhattan Project in the 1940s when he heard about the ENIAC development. He described a way to make such machines much faster to put to work, by introducing the concept of a stored-program design, in which the program is held in memory rather than wired into the machine. In modern nomenclature, this practically means separating the memory (for example, where a program is stored) from the compute units (where information is processed). Such a separation of concerns was instrumental in making machines more productive from a human standpoint – debugging time can shift to writing better programs, and computer architects can focus (almost independently) on improving each of the memory and compute structures for better performance.

A quantum architecture, however, does not have such a simple separation, since the "compute" occurs by physical transformations on the qubit "memory," and is fundamentally tied to the technology. While that might seem a bit foreign to a traditional computer programmer, it does come with a unique perk: quantum computers can heavily leverage reversible computations.

A reversible operation is one where the transition function that maps old computational states to new ones is a one-to-one function. In other words, knowing the output logic states uniquely determines the input logic states of the computational operation. For example, a NOT gate is a reversible function operating on one bit (or qubit). By extension, a controlled-NOT (or CNOT) gate uses two logical bits/qubits, where one bit (the control) determines whether the NOT is applied to the other (the target). As an analogy, a CNOT gate can be thought of as a reversible XOR gate. Adding one more control bit/qubit introduces the Toffoli gate, where the target is flipped only when both control bits are 1.
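The one-to-one property is easy to check by brute force on classical bits. The sketch below models CNOT and Toffoli as functions on 0/1 values (ignoring superposition entirely) and tests whether every distinct input produces a distinct output. The function names are illustrative.

```python
from itertools import product


def cnot(control: int, target: int) -> tuple[int, int]:
    """Flip the target when the control is 1 (target becomes control XOR target)."""
    return control, target ^ control


def toffoli(c1: int, c2: int, target: int) -> tuple[int, int, int]:
    """Flip the target only when both controls are 1."""
    return c1, c2, target ^ (c1 & c2)


def xor_gate(a: int, b: int) -> tuple[int]:
    """Plain XOR keeps one output bit, so the inputs cannot be recovered."""
    return (a ^ b,)


def is_reversible(gate, n_bits: int) -> bool:
    """A gate is reversible if distinct inputs always give distinct outputs."""
    inputs = list(product((0, 1), repeat=n_bits))
    outputs = {gate(*bits) for bits in inputs}
    return len(outputs) == len(inputs)


print(is_reversible(cnot, 2), is_reversible(toffoli, 3), is_reversible(xor_gate, 2))
```

CNOT keeps the control bit alongside the XOR result, which is exactly what makes it invertible where plain XOR is not. Applying CNOT or Toffoli twice in a row restores the original inputs.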

How is this useful and relevant to quantum computing? Well, the Toffoli gate is universal for reversible computation, meaning that you can implement any (possibly non-reversible) Boolean logic circuit solely using Toffoli gates, given a few extra helper bits. In classical computing, this is similar to the role of the NAND gate (Not-AND), and its generalizability is a reason why we can program a computer today to perform practically any computation (and perform millions of them very quickly).
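The link between Toffoli and NAND can be shown in a few lines. If the target bit of a Toffoli gate is preset to 1, the target output is 1 XOR (a AND b), which is NAND. The sketch below works on classical 0/1 values only; the preset helper bit and the reuse of inputs in `not_` and `or_` are simplifying assumptions (a fully reversible circuit would track those extra bits explicitly).

```python
def toffoli(c1: int, c2: int, target: int) -> tuple[int, int, int]:
    """Flip the target only when both controls are 1."""
    return c1, c2, target ^ (c1 & c2)


def nand(a: int, b: int) -> int:
    """NAND from a single Toffoli whose target bit is preset to 1."""
    return toffoli(a, b, 1)[2]


# Other Boolean gates built only from the Toffoli-based NAND.
def not_(a: int) -> int:
    return nand(a, a)


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, nand(a, b), and_(a, b), or_(a, b))
```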

But why stop at reversible computations? Another important element of quantum gates is their association with randomized computations. Many classical algorithms take advantage of randomization, since the natural world behaves unpredictably and (to a certain extent) statistically. Quantum computing has that baked in already, as randomness is a fundamental property closely linked to superposition.

In other words, to get an answer out of a quantum system, you need to run and measure the computation many times (each run has inherent randomness due to atomic properties), and your output is a probability distribution built from the samples you collected. While it seems like a new model of computing, it is actually closer to the fundamental nature of the world, as very few things in the world are certain (with the exception of death and taxes, as Benjamin Franklin said in 1789).
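A classical stand-in shows what this sampling looks like in practice. The sketch below assumes a qubit that reads 1 with a fixed probability (0.5, as for an equal superposition) and uses Python's pseudo-random generator in place of genuine quantum randomness. The function name and the shot count are illustrative choices.

```python
import random
from collections import Counter


def sample_measurements(prob_one: float, shots: int, seed: int = 0) -> Counter:
    """Simulate measuring a qubit `shots` times; each shot yields 0 or 1."""
    rng = random.Random(seed)
    return Counter(1 if rng.random() < prob_one else 0 for _ in range(shots))


shots = 10_000
counts = sample_measurements(prob_one=0.5, shots=shots)
estimate = counts[1] / shots  # estimated probability of reading 1

print(counts, estimate)
```

A single shot reveals only a 0 or a 1. The underlying probability emerges only from the histogram of many shots, and the estimate tightens as the number of shots grows.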

Other popular quantum gates (not discussed here but highly relevant) are the Hadamard gate, the controlled Hadamard gate, the Pauli gates, the SWAP gate, the controlled SWAP gate, and others (do you see a pattern?). While these are important for various algorithms, the main takeaway is that these gates are essentially linear algebra operations, and can be used to transform the state of a qubit for desired computations.
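The pattern is that any gate can be given a "controlled" version by adding a control qubit. In matrix form this is a block matrix: the identity when the control is 0, and the original gate when the control is 1. The sketch below assumes the control is the first (leftmost) qubit in the basis ordering, and `controlled` is an illustrative helper rather than a library function.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
SWAP = np.array(
    [[1, 0, 0, 0], [0, 0, 1, 0], [0, 1, 0, 0], [0, 0, 0, 1]], dtype=complex
)


def controlled(gate: np.ndarray) -> np.ndarray:
    """Return the controlled version of `gate` (control = first qubit)."""
    dim = gate.shape[0]
    out = np.eye(2 * dim, dtype=complex)  # control = 0: do nothing
    out[dim:, dim:] = gate                # control = 1: apply the gate
    return out


CNOT = controlled(X)          # controlled NOT
CH = controlled(H)            # controlled Hadamard
CSWAP = controlled(SWAP)      # controlled SWAP (also called the Fredkin gate)
TOFFOLI = controlled(CNOT)    # doubly controlled NOT

print(CNOT.real.astype(int))
```

Because the result is again a unitary matrix, the construction can be repeated, which is how the Toffoli gate arises from CNOT.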

Quantum Compiling

So far, we've been going "up the stack" in describing a quantum computing system: quantum physical properties can be captured with qubits, which in turn can be operated on with various logic gates for information processing, with the high-level objective of implementing a quantum algorithm. The connection between the high-level quantum algorithm and the low-level quantum hardware requires the realization of a quantum compiler. A quantum compiler is a series of transformations and optimizations on a quantum intermediate representation (QIR) of a program.

Let's unpack that jargon a bit.

In the classical sense, a compiler is a piece of software that is tasked with transforming a high-level programming language (such as C++, Python, etc.) into a machine-defined instruction set architecture or ISA (such as x86, ARM, Power, RISC-V, etc.). The ISA forms the contract between the programmer and the machine, allowing users to write code which a compiler then "translates" for the machine to understand. The machine then executes those instructions, using the compiled "recipe," to run the program.

The instruction set of a quantum computer is made up of the gates described above: CNOT gates, Hadamard gates, "Clifford+T" gates, and other operations on qubits. However, a quantum compiler's job is more complex than just "translating" a higher-level language (examples include cQASM, Quil, and Q#) into a series of gates. It also has to take into consideration the quantum physics of the underlying computations.
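One small piece of that translation step can be checked directly. Suppose, as an assumption for illustration, that the hardware supports CNOT natively but not SWAP. A compiler can then rewrite each SWAP as three CNOTs with alternating control and target, a standard identity. The sketch verifies the identity with matrices, using the basis order 00, 01, 10, 11 with qubit 0 on the left.

```python
import numpy as np

# CNOT with qubit 0 as control and qubit 1 as target.
CNOT_01 = np.array(
    [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]], dtype=complex
)
# CNOT with qubit 1 as control and qubit 0 as target.
CNOT_10 = np.array(
    [[1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0], [0, 1, 0, 0]], dtype=complex
)
# The gate we want to "compile": exchange the two qubits.
SWAP = np.array(
    [[1, 0, 0, 0], [0, 0, 1, 0], [0, 1, 0, 0], [0, 0, 0, 1]], dtype=complex
)

# Three CNOTs in sequence; matrix products compose right to left.
compiled = CNOT_01 @ CNOT_10 @ CNOT_01

print(np.allclose(compiled, SWAP))
```

The price of the rewrite is circuit length: one logical instruction becomes three physical ones, each with its own chance of error.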

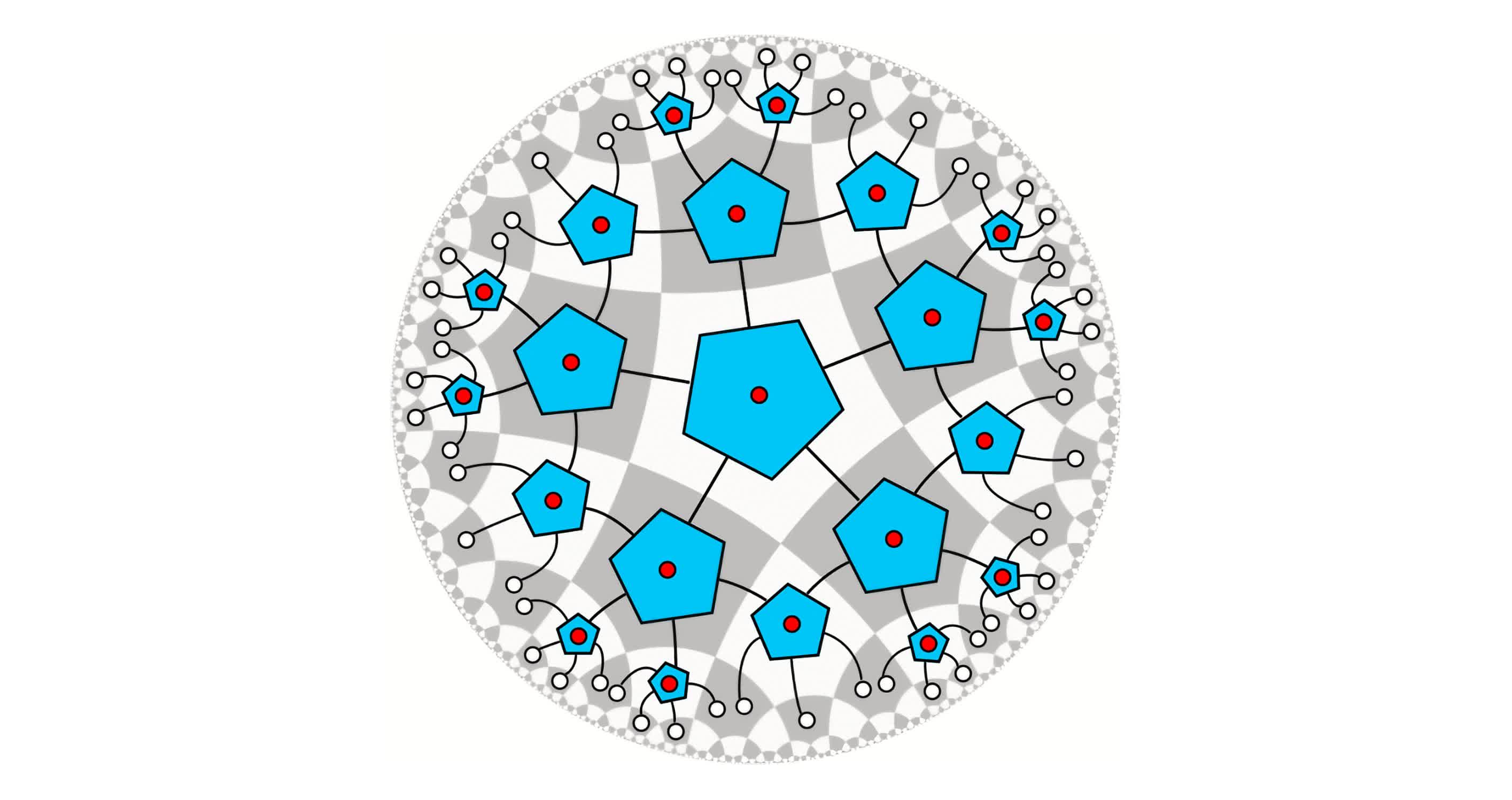

For example, qubits can be entangled, and so their interactions need to be scheduled accordingly. Qubits also decohere over time; thus you need an optimal configuration to reduce (and take into consideration) the noisy nature of the operations. Interactions between qubits might also be limited by the underlying technology: not all qubits can interact with one another physically, and thus the compiler needs to be aware of hardware constraints when implementing an algorithm. In case this didn't seem like enough work for a quantum compiler, a highly remarkable phenomenon known as quantum teleportation increases the complexity further. Quantum teleportation leverages entanglement to transfer information across "far away" qubits. While seemingly far-fetched, this feature can help reduce communication costs via scheduling, but of course, it needs to be properly managed by the system.
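The hardware connectivity constraint can be illustrated with a toy routing pass. The sketch assumes a hypothetical device whose qubits sit in a line and can interact only with their immediate neighbors. To run a CNOT between distant qubits, it inserts SWAP gates that walk the control toward the target. Real compilers use far more sophisticated mapping heuristics; the function name and gate tuples here are illustrative only.

```python
def route_cnot(control: int, target: int, layout: list[int]) -> list[tuple]:
    """Emit gates realizing CNOT(control, target) on a linear chain.

    `layout[p]` is the logical qubit currently stored at physical position p.
    Only neighboring positions may interact, so the control is swapped
    toward the target until the two are adjacent. `layout` is updated in
    place, and emitted gates refer to physical positions.
    """
    gates = []
    pc, pt = layout.index(control), layout.index(target)
    step = 1 if pt > pc else -1
    while abs(pt - pc) > 1:
        nxt = pc + step
        gates.append(("SWAP", pc, nxt))
        layout[pc], layout[nxt] = layout[nxt], layout[pc]
        pc = nxt
    gates.append(("CNOT", pc, pt))
    return gates


layout = [0, 1, 2, 3]
gates = route_cnot(0, 3, layout)
print(gates)   # two SWAPs followed by one CNOT
print(layout)  # logical qubit 0 now sits next to logical qubit 3
```

Every inserted SWAP lengthens the circuit, which is why the compiler's choice of initial qubit placement matters so much on noisy hardware.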

If this all seems too much to comprehend at once, you're not alone! Quantum compiling is a hot research area precisely because of all the complexities involved. While classical computer systems have been designed with nice abstractions enabling innovations at separate levels in the computing stack, the quantum computing "system" is very much still in flux.

To quote Dr. Yongshan Ding and Dr. Fred Chong from the University of Chicago, "the key to successful execution of quantum algorithms on NISQ devices is to selectively share information across layers of the stack, such that programs can use the limited qubits most efficiently." Quantum compilation aims to do that for us; however, it is still very much at a nascent phase itself.

Fault Tolerance and Noise Mitigation

Today, quantum computing is very much synonymous with fault tolerance and noise mitigation – it is quite literally in the name: noisy intermediate-scale quantum (NISQ) computers.

Most qubits in a system are being used to counteract the decoherence of the quantum system, and compensate physically and algorithmically for computing faults.

The primary metric used to measure how long a quantum state can last is aptly called the coherence time. For reference, coherence times today vary widely by technology: from fractions of a millisecond for typical superconducting qubits to minutes, and in record-setting experiments roughly one hour, for trapped-ion qubits. Imagine if each bit in your current processor randomly flipped its value every hour (or every millisecond)... getting anything (useful) done would be quite impractical!

That said, given the processing power of a quantum bit, you can actually perform many computations (i.e., qubit transformations) within such a time frame, but it is much less feasible to execute long-running algorithms exhibiting quantum supremacy.

One of the common techniques today to mitigate the natural decoherence of qubits during computation is to use redundancy, much like classical systems. Specifically, quantum error correction codes (QECC) essentially mirror modern ECC, where additional (qu)bits are expended to detect if an error occurred and to correct it. A common quantum error correcting code is the 9-qubit Shor code (or simply, the Shor code), where 1 logical qubit is encoded using 9 physical qubits and an arbitrary error on any single physical qubit can be corrected. In other words, for every qubit in your system doing "useful" computations, you'd need an extra 8 to make sure it is operating properly.
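The full Shor code is too long for a short example, so the sketch below shows a simpler relative: the 3-qubit bit-flip code, one of the two building blocks that the Shor code combines. It protects against a single bit flip only, not arbitrary errors. For brevity, the error syndrome is read directly off the simulated state vector, whereas real hardware would measure it through extra helper qubits. All function names are illustrative.

```python
import numpy as np


def encode(a: complex, b: complex) -> np.ndarray:
    """Encode the qubit a|0> + b|1> as a|000> + b|111>."""
    state = np.zeros(8, dtype=complex)
    state[0b000], state[0b111] = a, b
    return state


def apply_x(state: np.ndarray, qubit: int, n: int = 3) -> np.ndarray:
    """Flip `qubit` (0 = leftmost) in every basis state of the vector."""
    out = np.zeros_like(state)
    mask = 1 << (n - 1 - qubit)
    for idx, amp in enumerate(state):
        out[idx ^ mask] = amp
    return out


def syndrome(state: np.ndarray) -> tuple[int, int]:
    """Parities of qubit pairs (0,1) and (1,2).

    Both surviving basis states share the same parities, so the syndrome
    locates a flip without revealing the amplitudes a and b.
    """
    idx = int(np.flatnonzero(np.abs(state) > 1e-12)[0])
    q0, q1, q2 = (idx >> 2) & 1, (idx >> 1) & 1, idx & 1
    return q0 ^ q1, q1 ^ q2


# Syndrome -> qubit to flip back (None means no error detected).
LOOKUP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(state: np.ndarray) -> np.ndarray:
    """Undo a single bit flip identified by the syndrome."""
    flipped = LOOKUP[syndrome(state)]
    return state if flipped is None else apply_x(state, flipped)


original = encode(0.6, 0.8)
damaged = apply_x(original, 1)  # a bit-flip error hits the middle qubit
print(syndrome(damaged), np.allclose(correct(damaged), original))
```

Even this reduced code spends three physical qubits per logical qubit, which gives a feel for why the overhead of the 9-qubit Shor code is considered a cost worth paying only when errors would otherwise dominate.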

QECC isn't the only way to address faults in a quantum system. Another method is randomized compiling. The idea is to insert random gates into a quantum circuit, and average over many of those independently sampled random circuits. While the effect of noise on the individual circuit may be different, the expected noise on multiple random circuits is tailored into a stochastic form. Essentially, you can use clever math to compensate while incorporating error directly in the computations.
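The "tailoring" of noise can be demonstrated on a single qubit. The sketch below is a simplified stand-in for randomized compiling: it takes a hypothetical coherent error (a small unwanted rotation about the X axis, with an arbitrary angle of 0.2 radians), surrounds it with each of the four Pauli gates in turn, and averages the results. The average behaves like a plain random bit flip. In a real compiler the random gates are merged into neighboring gates of the circuit rather than applied around an idle qubit as done here.

```python
import numpy as np

I = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
PAULIS = [I, X, Y, Z]

theta = 0.2  # hypothetical over-rotation angle, in radians
c, s = np.cos(theta / 2), np.sin(theta / 2)
U_ERR = c * I - 1j * s * X  # coherent error: rotation about the X axis


def coherent(rho: np.ndarray) -> np.ndarray:
    """The raw noisy operation acting on a density matrix."""
    return U_ERR @ rho @ U_ERR.conj().T


def twirled(rho: np.ndarray) -> np.ndarray:
    """Average of the noisy operation sandwiched between random Paulis."""
    total = np.zeros((2, 2), dtype=complex)
    for P in PAULIS:
        V = P @ U_ERR @ P  # Pauli before, error, same Pauli after
        total += V @ rho @ V.conj().T
    return total / len(PAULIS)


def stochastic(rho: np.ndarray) -> np.ndarray:
    """A random bit flip applied with probability sin^2(theta / 2)."""
    return c**2 * rho + s**2 * (X @ rho @ X)


rho = np.array([[0.7, 0.2 - 0.1j], [0.2 + 0.1j, 0.3]], dtype=complex)
print(np.allclose(twirled(rho), stochastic(rho)))
print(np.allclose(coherent(rho), stochastic(rho)))
```

Stochastic errors of this kind accumulate more slowly and more predictably over a long circuit than coherent ones, which is the practical benefit of the averaging.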

The quantum compiler can also perform various mapping smarts to account for errors, such as minimizing cross-talk between qubits during hardware circuit translation, recalibration of the system error rate and remapping qubits, and minimizing the circuit length to "speed up" the computations before decoherence takes over.

All these techniques exist because of the imprecise control of quantum hardware and natural decoherence of states. While many of these techniques are specific to the NISQ era, a major goal is to better understand errors and mitigate them for the eventual Fault Tolerant (FT) era of quantum computers.

Many believe that the FT era is when quantum supremacy can actually be realized for many applications.

Summary and Takeaways

We've come a long way in the development of quantum computers, with many companies and research facilities pushing the envelope in fully capturing the quantum properties of our universe. While many of the foundational theories are now being implemented, full quantum "systems" remain in active development.

Competing qubit technologies, noisy systems and decoherence, and an incredible "do-all" compiler are still challenges in the race to quantum supremacy. Furthermore, the NISQ era we are currently experiencing and the future FT era might look vastly different. The quantum computing "stack" is still in early development, and many new and exciting quantum technologies are sure to arrive in the near future.

While quantum computing has not taken the world by storm (yet), one can imagine the benefits and consequences of having quantum computers at our disposal. While the foundations of quantum mechanics are nearly a century old, researchers and practitioners are only now beginning to actually design these systems and solve many fascinating research and engineering challenges. With many players now invested in the quantum race, the number of qubits in a system is increasing practically every other day, and it will only be a matter of time before truly fault-tolerant systems are a reality.