Nvidia's deep learning super sampling, or DLSS, is one of the most highly anticipated features of RTX graphics cards. We previewed it months ago, but until support arrived in shipping titles, there was only so much we could learn about it beyond looking at demos and what Nvidia claims will be possible with it.

DLSS finally made its way to both Battlefield V and Metro Exodus this month, and as usual, we'll be going through a full visual and performance breakdown in this article. For now we're sticking with Battlefield V in this investigation because both Nvidia and developer 4A Games have said the DLSS implementation in Metro Exodus still needs some polish.

When we looked at DLSS back in September, there were only two demos Nvidia had for the launch of their RTX GPUs. The demos weren't particularly great as they were canned benchmarks, which we felt would give Nvidia's DLSS neural network an unrealistic advantage at optimizing the image quality, compared to a dynamic game environment. However, we still discovered back then that DLSS performed roughly the same as reducing the image from 4K to 1800p, while providing roughly the same image quality as 1800p.

DLSS has also been available in a real game for a little while: Final Fantasy XV. But because that game has a terrible anti-aliasing implementation, we decided it was not a good test bed and not reflective of most other decent games. Battlefield V and Metro Exodus, by contrast, both have good anti-aliasing, which allows an excellent comparison between DLSS and a high quality native image.

In all DLSS games released so far, the feature is locked down, preventing you from simply enabling it with any combination of settings or resolutions. In the case of Battlefield V, you must have DXR reflections switched on to enable DLSS, so there is no option to use DLSS without ray tracing. That's already disappointing, because we feel most gamers should play with ray tracing switched off. So if you simply wanted to use DLSS to boost performance with ray tracing off, that is not possible.

But it's locked down further, on a GPU by GPU basis. If you're playing at 4K, all RTX cards can access DLSS. However if you're a 1440p gamer, the option is only available for the RTX 2080 and below. At 1080p, only the RTX 2060 and 2070 can use DLSS. And there are similar limitations with Metro Exodus.

According to Nvidia, the reason for this restriction is that activating the neural network for DLSS takes a fixed amount of time for each frame. As your performance level increases, DLSS begins to occupy a proportionally higher percentage of the rendering time, up to a point where for fast GPUs, it takes longer to process DLSS than it does for the native frame. So Nvidia has made the choice to block users from activating DLSS in situations where the performance uplift is negligible, or in some cases even worse than just native rendering.
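To illustrate why a fixed per-frame cost hurts fast GPUs more, here is a minimal sketch of that reasoning. The numbers are assumptions chosen purely for illustration, not measurements or Nvidia figures: we assume a hypothetical 3 ms DLSS cost per frame, and that the lower-resolution render takes half the time of the native frame.

```python
# Illustrative model only: the 3 ms DLSS cost and the 0.5 render-time
# fraction are made-up numbers, not measurements or Nvidia figures.
DLSS_COST_MS = 3.0
LOWRES_FRACTION = 0.5


def fps_with_dlss(native_fps, dlss_cost_ms=DLSS_COST_MS,
                  lowres_fraction=LOWRES_FRACTION):
    """Frame rate with DLSS on, given the native frame rate."""
    native_ms = 1000.0 / native_fps
    # Cheaper low-resolution render plus a fixed reconstruction cost.
    dlss_ms = native_ms * lowres_fraction + dlss_cost_ms
    return 1000.0 / dlss_ms


def break_even_fps(dlss_cost_ms=DLSS_COST_MS,
                   lowres_fraction=LOWRES_FRACTION):
    """Native frame rate above which DLSS no longer helps in this model."""
    return 1000.0 * (1.0 - lowres_fraction) / dlss_cost_ms


for native_fps in (40, 100, 200):
    with_dlss = fps_with_dlss(native_fps)
    change = (with_dlss / native_fps - 1.0) * 100.0
    print(f"native {native_fps} FPS -> DLSS {with_dlss:.1f} FPS ({change:+.0f}%)")

print(f"break-even at about {break_even_fps():.0f} FPS native")
```

Under these assumed numbers, the gain shrinks as the native frame rate rises and turns negative past the break-even point, which is the behavior Nvidia describes.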

This definitely prevents DLSS from being that one-click performance improving feature Nvidia advertised it as. Many popular configurations, especially those that deliver high-framerate gameplay like the RTX 2080 Ti at 1440p, can't benefit from DLSS.

Before we jump into the image quality comparisons, we wanted to go over the performance results first. We tested Battlefield V with a Core i9-9900K rig, and for this initial batch of 4K testing we used a GeForce RTX 2080 Ti with all settings set to Ultra.

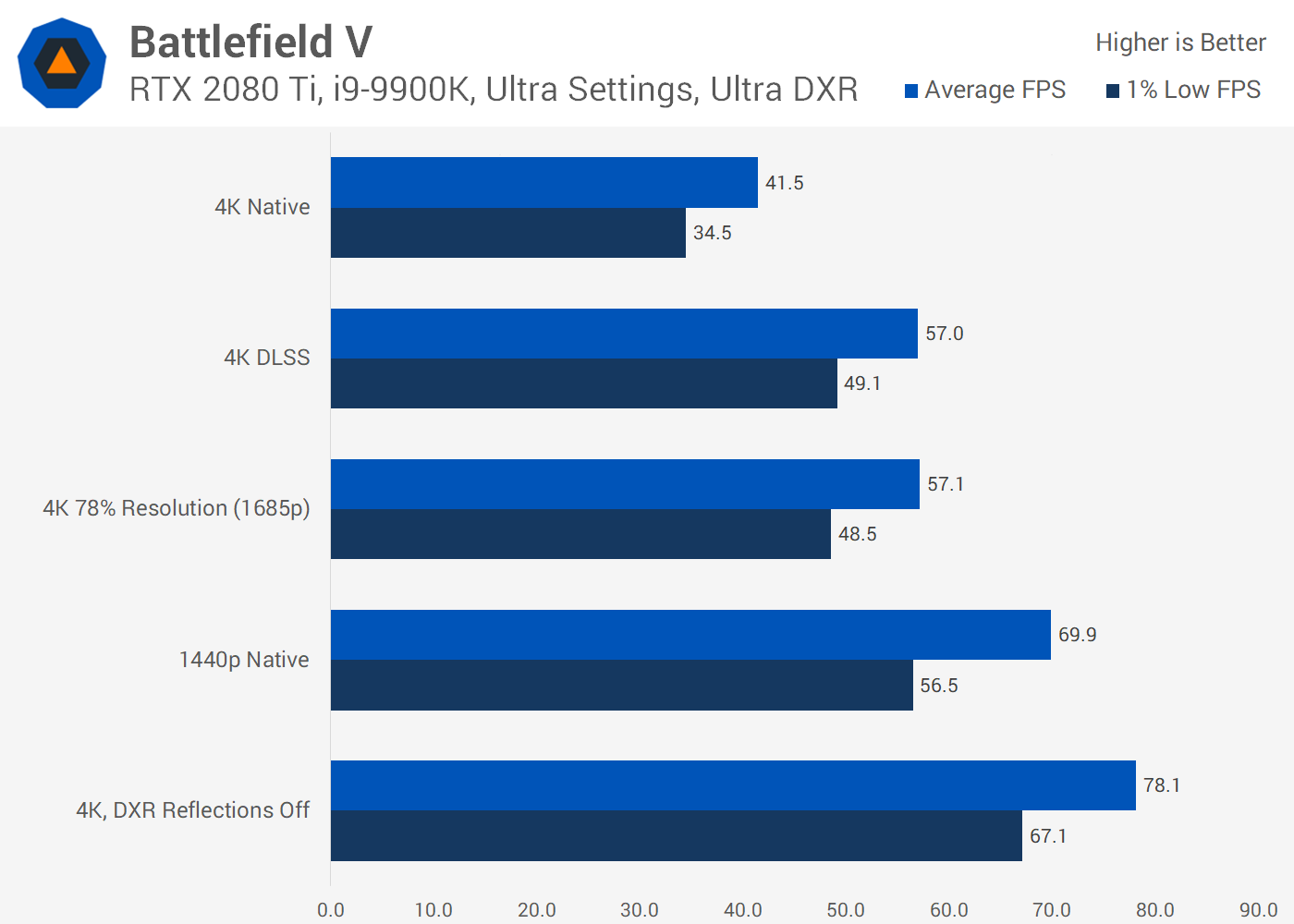

Testing at 4K with the RTX 2080 Ti

Above you have the performance at native 4K, then performance at 4K with DLSS enabled, and native 1440p. Crucially, we've also added in the performance with the game set to 4K but with a 78% render scale. As you can see, the performance of 4K at 78% resolution, which equates to a resolution of about 2995 x 1685, is roughly the same as 4K DLSS. This will come into play for the quality comparison. Then there's also performance with ray tracing and DLSS disabled.
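For reference, the arithmetic behind that render resolution can be sketched as follows. This assumes the resolution scale is applied to each axis, which is consistent with the 2995 x 1685 figure; the function names are our own for illustration.

```python
def scaled_resolution(width, height, scale):
    """Render resolution when a scale factor is applied to each axis."""
    return round(width * scale), round(height * scale)


def pixel_fraction(scale):
    """Fraction of the native pixel count that is actually rendered."""
    return scale * scale


print(scaled_resolution(3840, 2160, 0.78))  # (2995, 1685)
print(f"{pixel_fraction(0.78):.0%} of native pixels")  # 61% of native pixels
```

Because the scale applies to both axes, a 78% scale means the GPU renders only about 61% of the pixels of native 4K.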

Compared to native 4K rendering, which with Ultra DXR reflections is only a 40 FPS experience, DLSS improves performance by 37 percent looking at average framerates. That's definitely a sizable improvement, and again, it's the same as rendering at a 78% resolution scale. However it doesn't bring the game running at 4K with DXR back up to the performance level of the game running without Ultra ray tracing.

Switching off DXR led to an 88% performance improvement. This is Ultra DXR not Low, but our previous testing has shown the performance gain going from Low to Off to still be around 50%.
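To put those percentages side by side, here is a rough sketch that applies both uplifts to the same baseline. It assumes the rounded 40 FPS figure quoted above for native 4K with Ultra DXR, so the outputs are approximations rather than our measured results.

```python
# Approximate native 4K + Ultra DXR average quoted in the text (rounded).
BASELINE_FPS = 40.0


def apply_uplift(fps, uplift_percent):
    """Frame rate after a percentage performance improvement."""
    return fps * (1.0 + uplift_percent / 100.0)


def frame_time_ms(fps):
    """Average time per frame in milliseconds."""
    return 1000.0 / fps


configs = {
    "4K native, DXR Ultra": BASELINE_FPS,
    "4K DLSS, DXR Ultra": apply_uplift(BASELINE_FPS, 37),
    "4K native, DXR off": apply_uplift(BASELINE_FPS, 88),
}

for name, fps in configs.items():
    print(f"{name}: ~{fps:.0f} FPS, ~{frame_time_ms(fps):.1f} ms per frame")
```

On that rounded baseline, DLSS lands at roughly 55 FPS while simply disabling DXR lands at roughly 75 FPS, which is why DLSS does not recover the cost of Ultra ray tracing.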

But the real kicker is the visual quality comparison. We'll start with native 4K versus 4K DLSS. Across all the scenes we tested, there is a severe loss of detail when switching on DLSS. Just look at the trees in this scene at native 4K: they're exactly what you'd expect from a 4K presentation - sharp, clean, high detail on both the foliage and trunk textures.

With DLSS, it's like a strong blur filter has been applied. Texture detail is completely wiped out; in some cases it's like you've loaded a low texture mode, while some of the fine branch detail has been blurred away or even thickened.

Of course, this is to be expected. DLSS was never going to provide the same image quality as native 4K, while providing a 37% performance uplift. That would be black magic. But the quality difference comparing the two is almost laughable, in how far away DLSS is from the native presentation in these stressful areas.

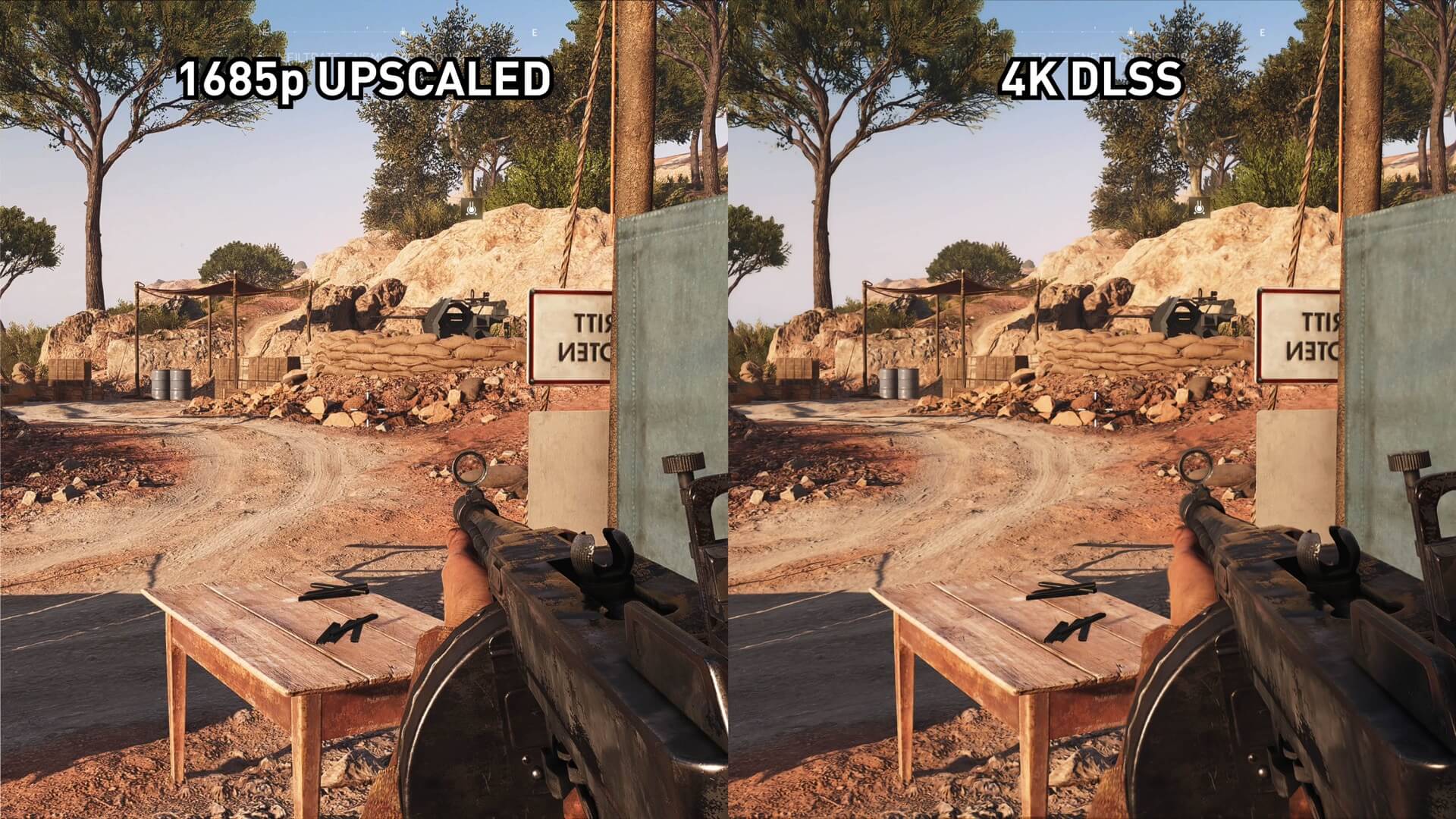

It gets worse though, and we'll switch to a different scene for this one. Here is a comparison between DLSS and our 78 percent resolution scale, roughly 1685p, which we found to perform roughly the same as DLSS. It's a complete non-contest. The 1685p image destroys the DLSS image in terms of sharpness, texture detail, clarity, basically everything.

Just look at the quality difference between these two areas, when zoomed in. The 78% scaled image preserves the fine detail on the rocks, the sign, the sandbags, the cloth, the gun, everywhere! With DLSS, everything is blurred to the point that this detail is lost. And we're not talking about a situation where DLSS is noticeably better at anti-aliasing, the 1685p image is already using Battlefield's TAA implementation which is quite good.

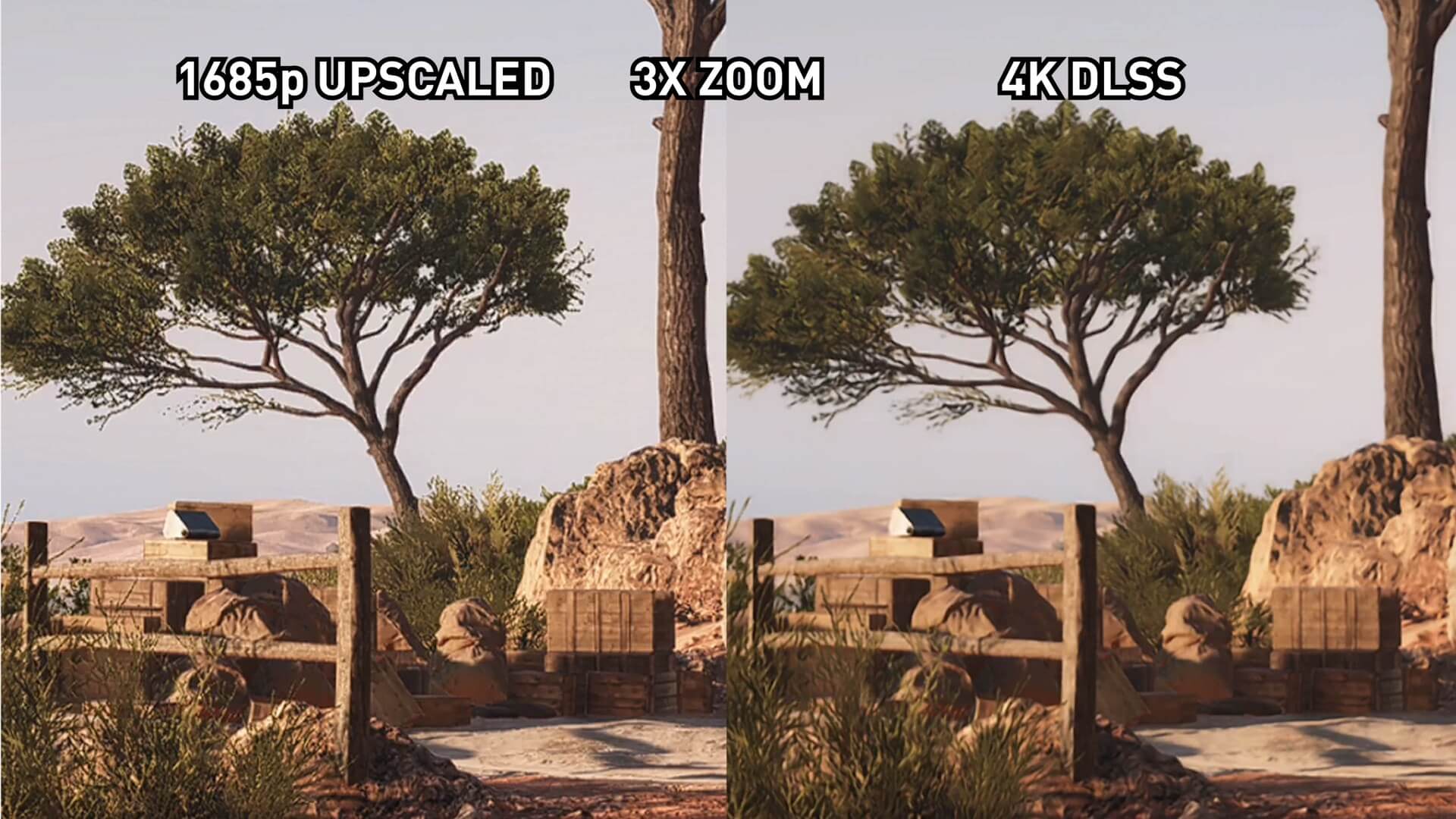

There are some instances where DLSS is smoother: looking at extremely fine detail when zoomed in reveals less aliasing in the thin tree branches, but this has come at the cost of a complete loss of detail in the foliage and everywhere else. And we only spotted this when zoomed in. Looking at the full image on a regular 4K PC monitor, it's hard to tell the difference in aliasing because the pixel count is already very high, and the non-DLSS presentation with TAA removes most of the key artifacts you'd spot without anti-aliasing. This leaves DLSS looking like Vaseline has been smeared on the display.

That last scene was particularly bad for DLSS, but there's a common theme throughout the areas we tested. And we're not even talking about DLSS versus native 4K here, we're talking about DLSS versus a 1685p image upscaled to 4K, both of which deliver the same performance. 1685p is a little behind native 4K in terms of sharpness as you'd expect from upscaling, but DLSS is miles behind either of them.

In some situations we wouldn't even say DLSS is superior to a 1440p image upscaled to 4K. 1440p sees a further loss of fine detail to the image, and in some environments DLSS can restore that detail and smooth out any jagged artefacts. However the DLSS image is still very soft and blurred, often with lower texture detail. It depends on the environment and it's definitely a lot closer than some of our previous comparisons, but a lot of the same problems remain. It gets closer again if you downscale the 4K DLSS image to native 1440p and do the comparison at that resolution, rather than upscaling both to 4K, but DLSS isn't clearly better.

Battlefield V's regular TAA implementation is very good, too, and doesn't blur out the image like TAA can do in other games. Final Fantasy XV for example, is quite blurry with TAA enabled. Compare TAA to DLSS in that game, and it's similar. But Battlefield V is sharper overall, and DLSS simply can't match up. In fact, the native and scaled images completely obliterate the blurry mess that is DLSS.

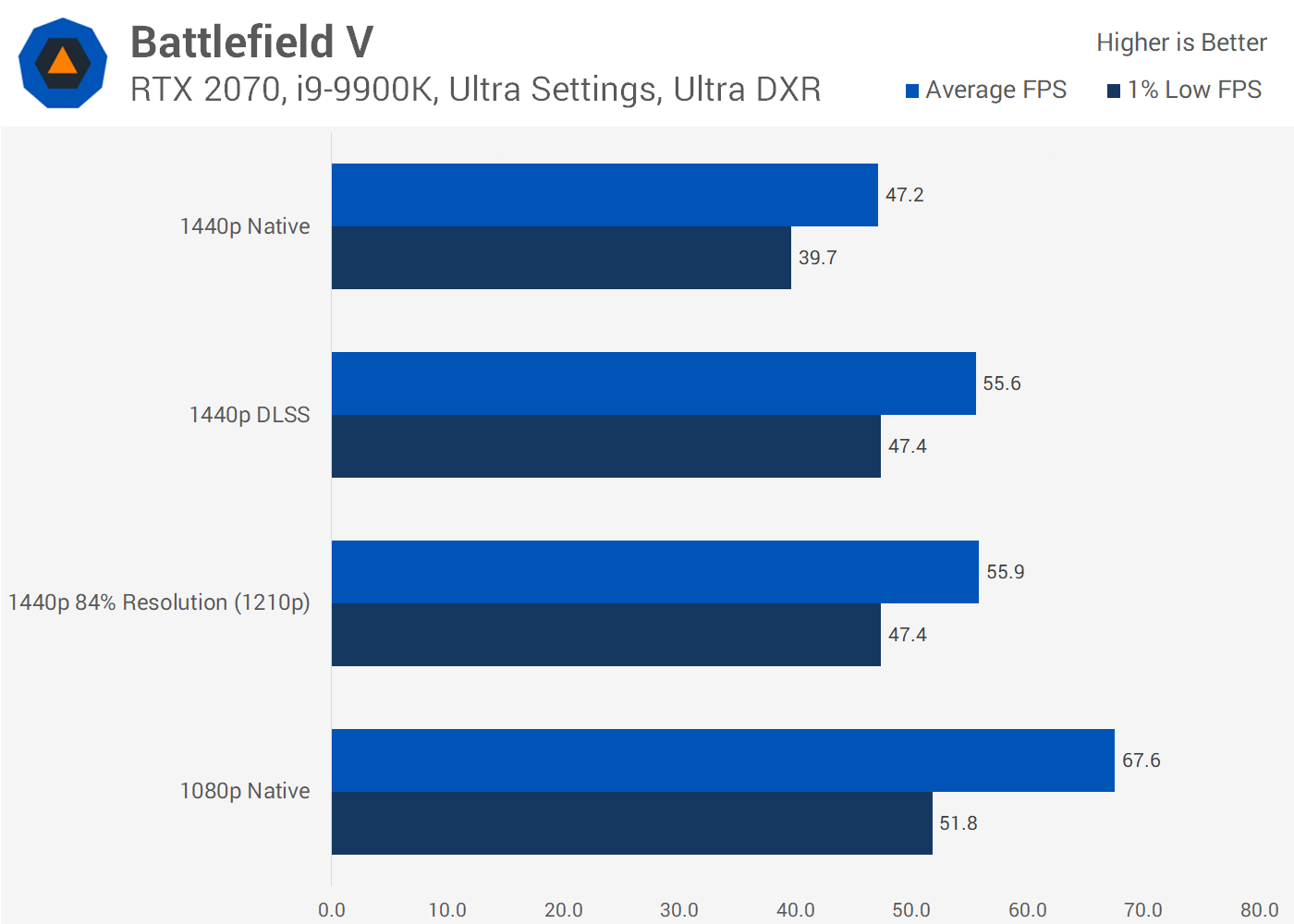

Testing with the RTX 2070 at 1440p

We ran some brief tests at 1440p as well, this time with an RTX 2070. Here DLSS performs more in line with an 84% resolution scale, so roughly 2150 x 1210, delivering a modest 18% performance improvement over native 1440p.

Part of this is due to 1440p performing better on the RTX 2070 than 4K does on the 2080 Ti with Ultra DXR. The other part is the RTX 2070's more limited RT and Tensor core resources.

We're seeing most of the same problems at 1440p as we did at 4K. The DLSS image is softer and blurrier compared to upscaling a 1210p image to 1440p. In some areas there is less aliasing, but that advantage is not worth it when the overall image is so blurry. DLSS is an improvement in some areas over native 1080p, but it's not a definitive victory.

There's no other way to put it: DLSS sucks right now.

From what we can see in Battlefield V, as well as Metro Exodus, which is still an early implementation, DLSS is complete garbage and a huge waste of time. A lot of people hated on ray tracing and the implementations we've seen so far, but to me, DLSS is by far the worse of the two key RTX features.

Right now, DLSS provides much worse image quality than upscaling from a resolution that provides equivalent performance (Nvidia has even acknowledged it's not good right now, though with less straightforward adjectives). For 4K gamers with a 2080 Ti, our upscaled 1685p image looked far better than DLSS, while providing an equivalent performance uplift over native 4K. At 1440p, upscaling from 1210p also looked better than DLSS.

This is a significantly worse outcome than our initial DLSS investigation. Previously, in an optimal canned benchmark, DLSS looked and performed like an 1800p image. So it provided no benefit. Here, DLSS looks worse than a 1685p image, at the same performance. So enabling DLSS in a real-world game like Battlefield V is actually worse than using simple resolution upscaling that's been available in games for decades.

And that's why DLSS is complete rubbish. At least with ray tracing we're getting a superior image to what we had before, at an admittedly high performance cost. With DLSS, we're not gaining anything in either the performance or visuals department compared to traditional techniques.

This is what we feared when DLSS was first demonstrated. While not all that impressive in canned demos either, we assumed having a repeatable benchmark run with the exact same frames in each run would present the absolute best case scenario for the AI-based reconstruction DLSS uses. The AI could just train itself on high resolution samples of the frames it will then reconstruct later.

But games are far more dynamic than this. It's impossible at this stage to train a neural network for every single frame a game could output. So when DLSS is paired with a real-world game, not just a demo, it completely falls apart trying to reconstruct frames it's never seen before. Because this is a neural network that is constantly learning, yes, DLSS could improve over time. But the gap between DLSS and our equivalent upscaled images is so large that who knows how long it will take for DLSS to catch up, if it's even possible.

There are times where the comparison between DLSS on and off will be more favorable, even in dynamic games. Titles that have bad anti-aliasing implementations, such as Final Fantasy XV or more recent games like Resident Evil 2 with TAA, are already quite blurry. But many modern titles have been optimizing TAA to look better than ever, preserving more detail, and there DLSS can't keep up, with Battlefield V being a prime example.

And this is without mentioning all the limitations that come with DLSS, including limited resolution support depending on the GPU you have, and for Battlefield V, the inability to use DLSS without ray tracing. On top of that, Nvidia admits it's useless for high frame rate gaming, unlike resolution upscaling which works across everything.

As it stands today, DLSS in Battlefield V is so bad we think it should be removed from the game. Having the setting there might tempt gamers into using it when at best it provides no benefit, and at worst it's degrading the experience. Only when DLSS is at least equivalent to resolution scaling should the feature be reintroduced into the game.

But let's be clear, gamers and RTX owners were promised something entirely different: ray tracing with nearly no performance hit thanks to DLSS, which could even improve graphics quality. That is not what we have received in the few titles that support either feature.

Unfortunately, DLSS was a feature that people were thinking would be the best of the RTX features, judging by the way Nvidia was selling it. The claims amounted to a free performance uplift thanks to the power of AI. But the reality is so far from this, it's laughable.