Recently we've been checking out previous-gen graphics cards that you can buy used at "decent" prices, for those who want a GPU for gaming but don't want to pay inflated prices. It's a valid trade-off for many, so we've revisited the likes of the GeForce GTX 1070, GTX 1060 6GB, and Radeon RX 580, running the latest drivers and today's games to see how they do.

Today we're taking a look at the 3GB version of the GeForce GTX 1060, which sells for ~$200-250 on eBay, about $100 less than what you can expect to pay for the 6GB model. A second reason to put together this test is that we want to see how a graphics card with just 3GB of VRAM is getting on in 2021. That alone should be quite interesting.

As a quick refresher, the original GTX 1060 6GB was released back in July 2016, which is 5 years ago. Hot on the heels of that release, a month later, Nvidia released the controversial 3GB version. The reason for the controversy was Nvidia doing Nvidia things: the 3GB version of the GTX 1060 sounded like it'd be the same as the original but with half as much VRAM. But if you made that assumption, you would be wrong.

In addition to the VRAM difference, Nvidia also went with defective silicon supporting 1152 cores, rather than the full 1280 cores used by the 6GB version, which works out to a 10% reduction in core count. Based on our testing 5 years ago, when not limited by VRAM the 3GB model was ~7% slower, but we did see an example with Mirror's Edge Catalyst where it was up to 41% slower due to insufficient memory, so it will be interesting to see how the two GPUs compare today.
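As a quick sanity check on that core count figure, here's a minimal Python sketch. The core counts come from the text above, while the function name is purely our own choice for illustration.

```python
# Core counts quoted in the article.
FULL_CORES = 1280  # GTX 1060 6GB
CUT_CORES = 1152   # GTX 1060 3GB


def percent_reduction(full: int, cut: int) -> float:
    """Return how much smaller `cut` is than `full`, as a percentage."""
    return (full - cut) / full * 100


print(f"{percent_reduction(FULL_CORES, CUT_CORES):.0f}% fewer cores")
```

That lines up with the 10% figure, and it's also why a gap of roughly 7-10% is what you'd expect to see whenever the 3GB card isn't being held back by its memory.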

Pascal was an epic release from Nvidia back in the day, but they did have a few bad apples like this one and the GT 1030 DDR4 version. But all that drama is now in the past, and what we have in the present is a second hand 3GB GTX 1060 that we're going to re-test.

If you're thinking of buying a used graphics card as a make-do solution until RTX 3070s don't cost more than your first car, you probably want to know how it performs in today's games, so let's go find out.

For comparison we have the GTX 1060 6GB, with both cards tested in 15 games at 1080p and 1440p using a range of quality settings. All testing was conducted on our Ryzen 9 5950X test system using 32GB of DDR4-3200 CL14 memory and the latest available display drivers, so the results are going to be entirely GPU limited.

Gaming Benchmarks

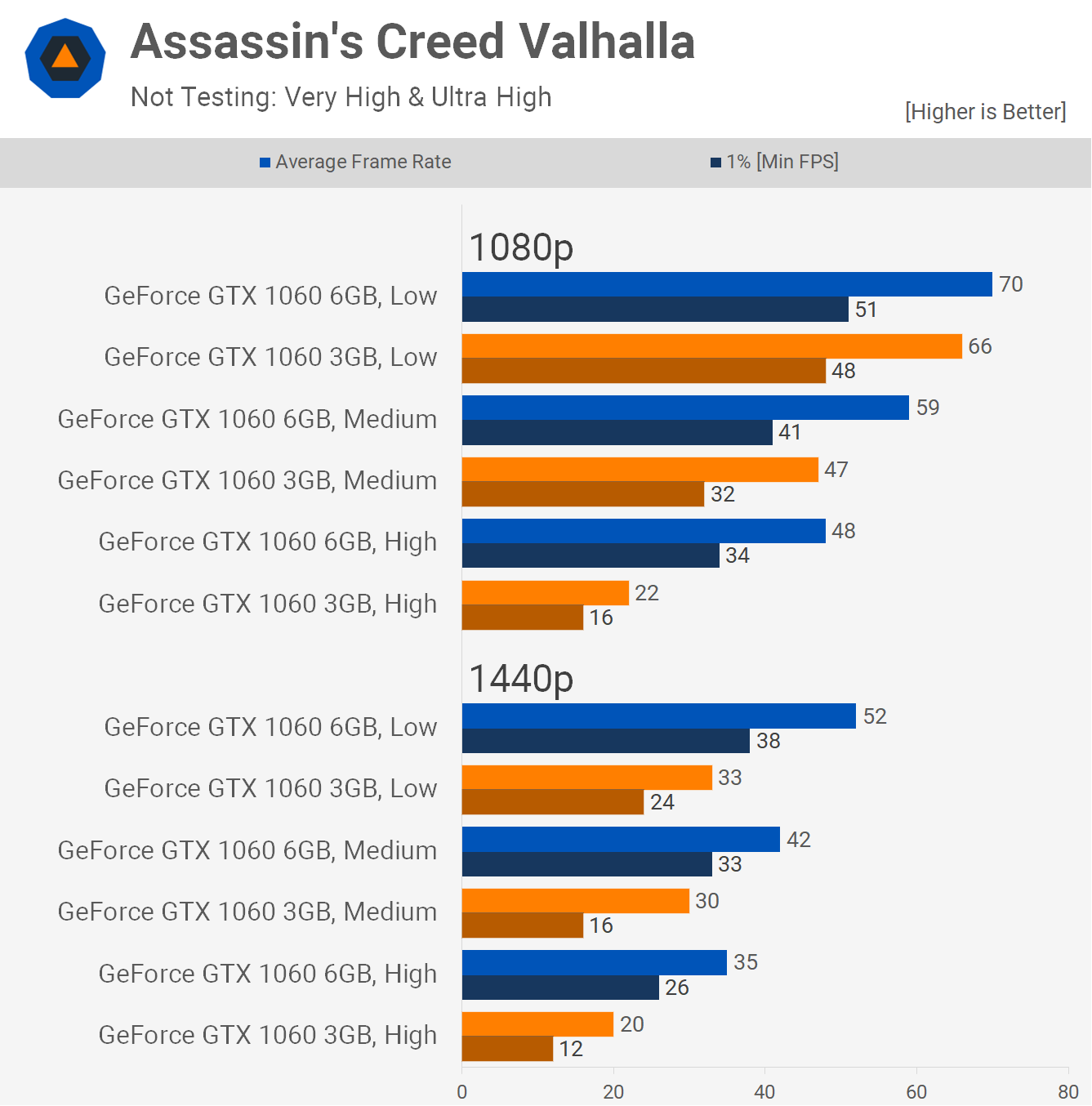

Starting with Assassin's Creed Valhalla we see that the 3GB model is 6% slower at 1080p using the low quality settings, which is what you'd expect when not exceeding the VRAM buffer. However, by using the medium quality preset we start to run into trouble as that margin swells to 20%, dropping the average frame rate from 59 fps down to 47 fps.

Things do get worse with the high quality preset as the 3GB model drops by a huge 54% margin, going from a playable 48 fps to a stuttery mess with just 22 fps.
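For anyone wanting to check our margins, the "percent slower" figures are simply the frame rate deficit relative to the 6GB card. Below is a rough Python sketch using the Valhalla 1080p averages quoted above. The helper name and dictionary layout are our own assumptions for illustration, not part of any benchmarking tool.

```python
def percent_slower(reference_fps: float, test_fps: float) -> float:
    """Deficit of `test_fps` relative to `reference_fps`, as a percentage."""
    return (reference_fps - test_fps) / reference_fps * 100


# (6GB fps, 3GB fps) averages quoted in the text for 1080p.
valhalla_1080p = {
    "medium": (59, 47),
    "high": (48, 22),
}

for preset, (fps_6gb, fps_3gb) in valhalla_1080p.items():
    print(f"{preset}: {percent_slower(fps_6gb, fps_3gb):.0f}% slower")
# medium: 20% slower
# high: 54% slower
```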

This means you'll want to keep texture quality at the lowest possible value when tuning quality settings and it'll have to be at 1080p, because at 1440p there's really no way to make the 3GB model work.

We're looking at a 37% performance reduction using the low quality settings at 1440p, so medium and high are obviously a no go. In terms of value, the 6GB model is worth paying ~50% more for if you predominantly play games like Assassin's Creed Valhalla as it nets you around 50% more performance, but more crucially, it will enable playable performance.
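One thing worth keeping in mind with value comparisons like this is that "X% slower" and "Y% faster" aren't the same number. The sketch below, which is only back-of-the-envelope math on figures already quoted in this article, converts the 37% deficit into the 6GB card's advantage and sets it against the roughly $100 premium over a $200-250 3GB card. The function names are hypothetical, and the results are derived estimates rather than measured values.

```python
def faster_from_slower(pct_slower: float) -> float:
    """Convert 'B is X% slower than A' into 'A is Y% faster than B'."""
    return (1 / (1 - pct_slower / 100) - 1) * 100


def price_premium(base_price: float, extra: float) -> float:
    """Extra cost as a percentage of the cheaper card's price."""
    return extra / base_price * 100


# 37% slower at 1440p low (from the text) means the 6GB card is this much faster:
print(f"6GB advantage: ~{faster_from_slower(37):.0f}%")

# eBay price range for the 3GB card and the ~$100 premium, both from the text.
for price_3gb in (200, 250):
    print(f"${price_3gb} 3GB card: 6GB costs ~{price_premium(price_3gb, 100):.0f}% more")
```

By this rough math, a 37% deficit means the 6GB card is close to 60% faster for a 40-50% premium, so if anything the "around 50%" figure is on the conservative side.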

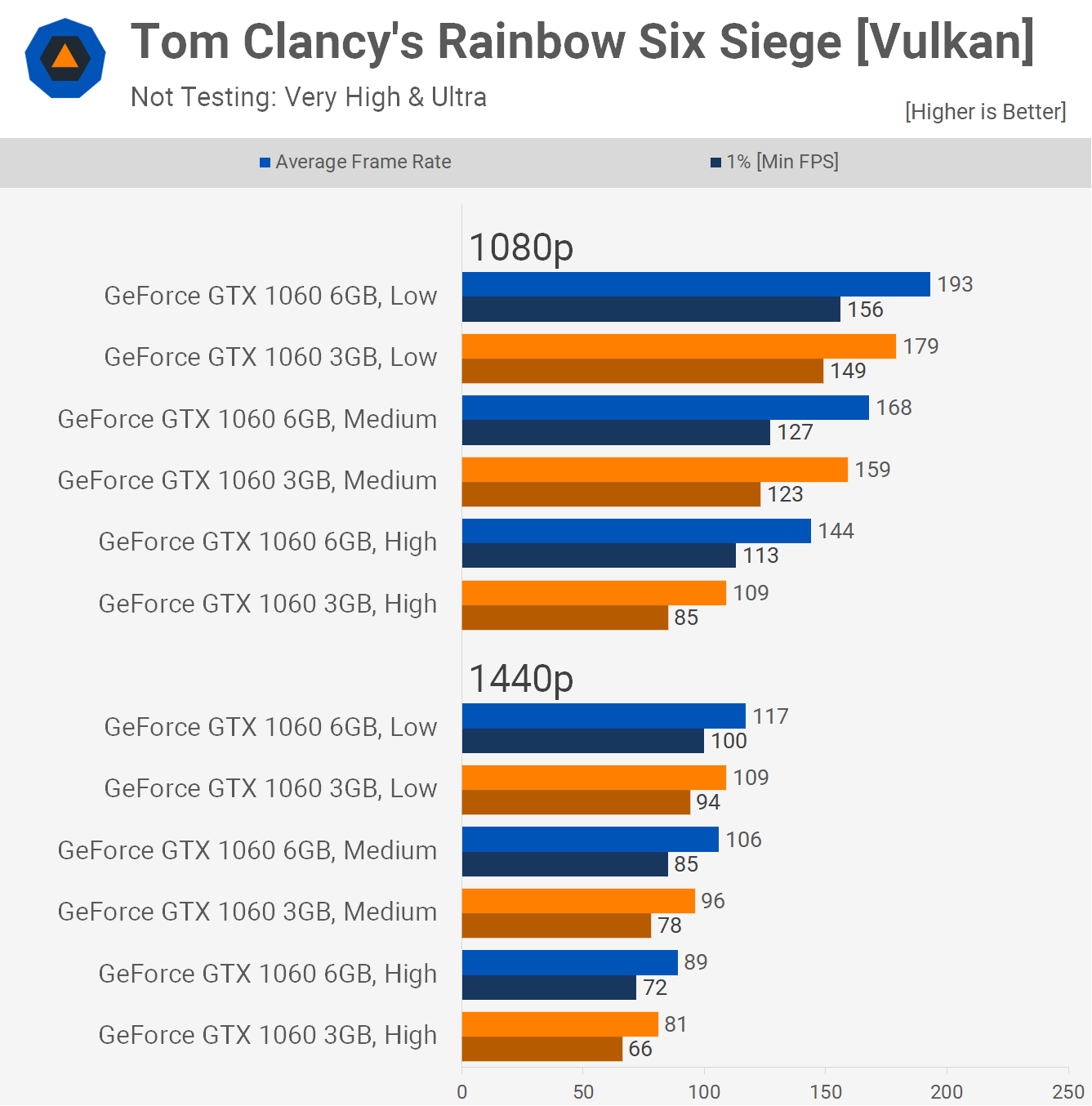

Tom Clancy's Rainbow Six Siege results are also interesting, though here the 3GB frame buffer is sufficient for the most part. For example, at 1080p low settings, we see a 7% drop and then just 5% with medium. However, we're looking at a far more significant 24% drop off using high, though the game is still playable.

Given the reduction in performance for the 3GB model when using the high quality preset at 1080p, you might expect the 1440p data to be a disaster, but it's not. Using the same quality setting the 3GB model was just 9% slower, dropping from 89 fps to 81 fps. We believe this is because memory capacity is no longer the card's greatest performance limitation, and the VRAM shortfall isn't severe enough to completely tank performance.

In other words, the 3GB model does reasonably well here and was certainly able to deliver playable performance.

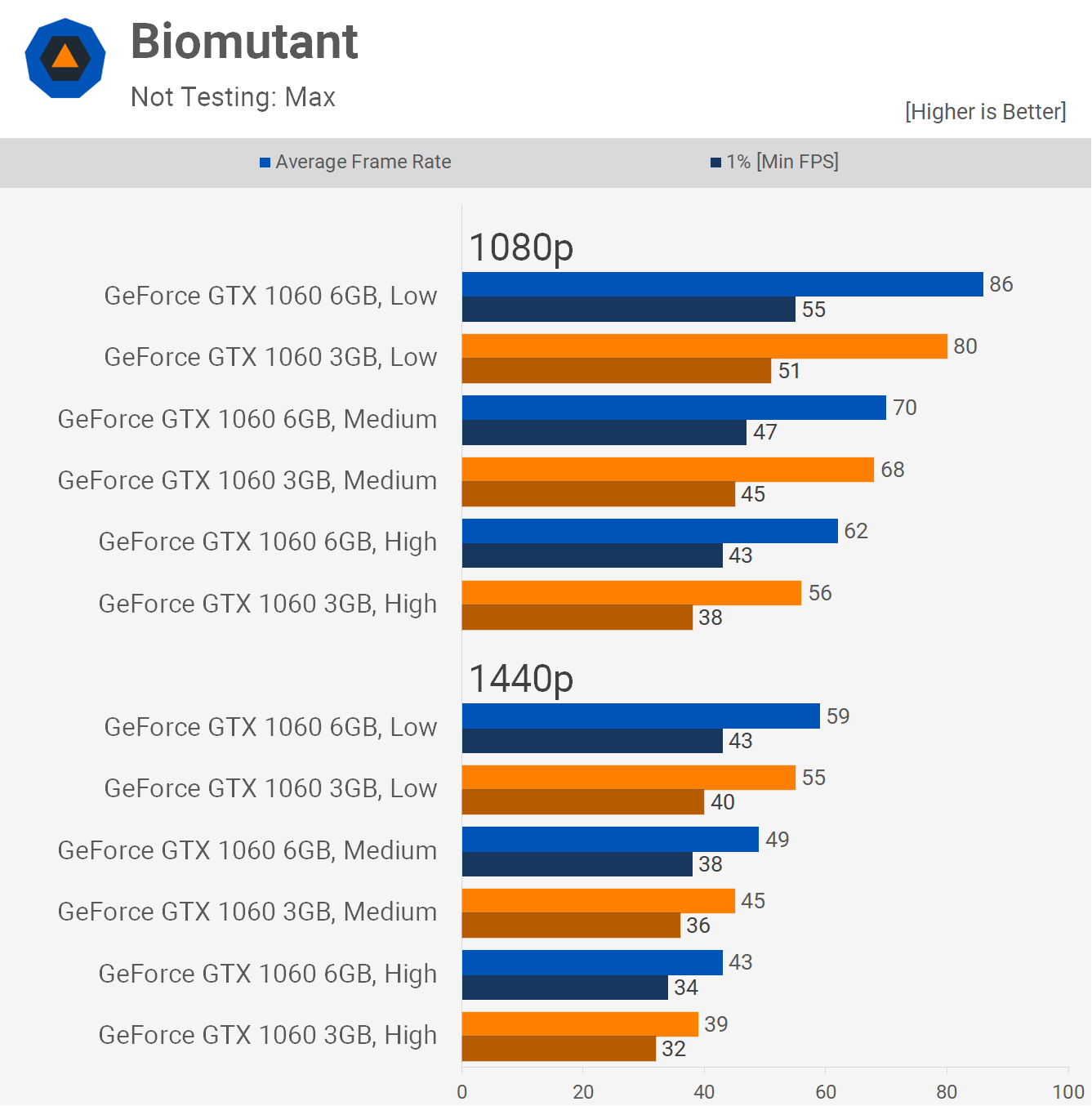

Biomutant doesn't run into any issues with the 3GB GTX 1060 using the low, medium and high quality presets, though be aware we're not testing with the maximum quality preset.

That being the case the 3GB model is 7-10% slower than the 6GB version, and that's what you'd typically expect to see when not limited by memory capacity.

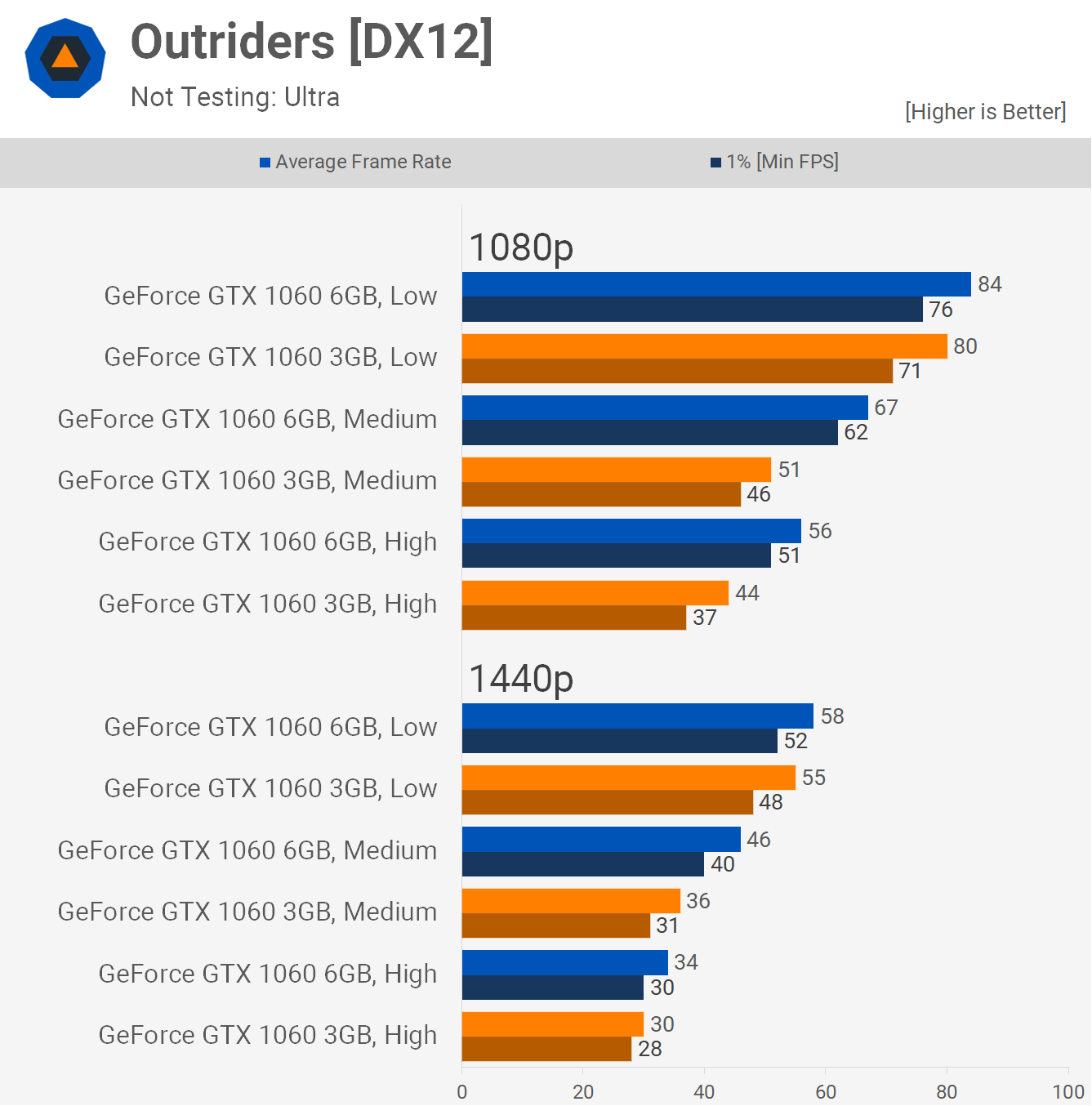

The margins are all over the place in Outriders. At 1080p using the low preset the 3GB model is 5% slower, and that's a best case scenario. However, turning the quality settings up a single notch saw the margin open up to 24% as the 3GB model dropped down to just 51 fps whereas the 6GB card was good for 67 fps.

Quite interestingly, as we move to 1440p the 3GB model is still 5% slower using low and 22% slower with medium, but then just 12% slower using high. As we saw in Rainbow Six Siege, it's possible to get to a point where running out of VRAM is no longer the primary performance limitation; instead it's raw GPU performance, and we see this with the high quality preset.

You'd expect the 22% margin seen with the medium preset to be mirrored or extended with high, but instead it's reduced to just 12% and that's because GPU shader performance becomes the primary limitation.

We see more evidence of this behavior with Horizon Zero Dawn. You'd expect the limited VRAM buffer of the 3GB model to have the least amount of impact using the lowest quality preset, which in this case is 'favour performance'. But here we're looking at a 21% drop in performance for the 3GB model, and at least half of that figure can be attributed to the VRAM buffer.

Using the higher quality 'original' preset the margin is reduced to 17%, and then with 'favour quality', which is the second highest quality preset, the margin is reduced to just 12%, which isn't far off what you'd expect to see when not limited by VRAM capacity.

The truth is the VRAM buffer of the 3GB model was exceeded under all three test conditions; it just so happened that it was the primary performance limitation when using the lowest quality preset.

Preset scaling behavior is more what you'd expect to see in Shadow of the Tomb Raider when running out of VRAM. At 1080p the 3GB model was 5% slower using the lowest and low quality presets. But using medium kills the 3GB 1060 as performance drops off to the tune of 39%, slashing frame rates from 61 fps to just 37 fps.

Had VRAM capacity not been an issue, as was the case with the low and lowest settings, the 3GB model would have delivered around 58 fps.
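That 58 fps estimate comes from applying the 5% gap seen at the low and lowest presets to the 6GB card's medium result. Here's a small Python sketch of the arithmetic. It assumes the unconstrained gap stays at 5% with the medium preset, which is our assumption rather than something we measured, and the names are made up for the example.

```python
def projected_fps(reference_fps: float, pct_slower: float) -> float:
    """Frame rate of a card that trails the reference by `pct_slower` percent."""
    return reference_fps * (1 - pct_slower / 100)


FPS_6GB_MEDIUM = 61    # 6GB card, 1080p medium (from the text)
FPS_3GB_MEDIUM = 37    # 3GB card, 1080p medium (from the text)
UNCONSTRAINED_GAP = 5  # % deficit at low/lowest, assumed to carry over to medium

expected = projected_fps(FPS_6GB_MEDIUM, UNCONSTRAINED_GAP)
print(f"Projected without a VRAM limit: ~{expected:.0f} fps")
print(f"Shortfall attributed to VRAM: ~{expected - FPS_3GB_MEDIUM:.0f} fps")
```

In other words, roughly 21 fps of the drop at medium can be pinned on the 3GB buffer rather than the reduced core count.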

Then at 1440p we find the 3GB model is 8% slower using the lowest preset, 13% slower with low and 29% slower using medium.

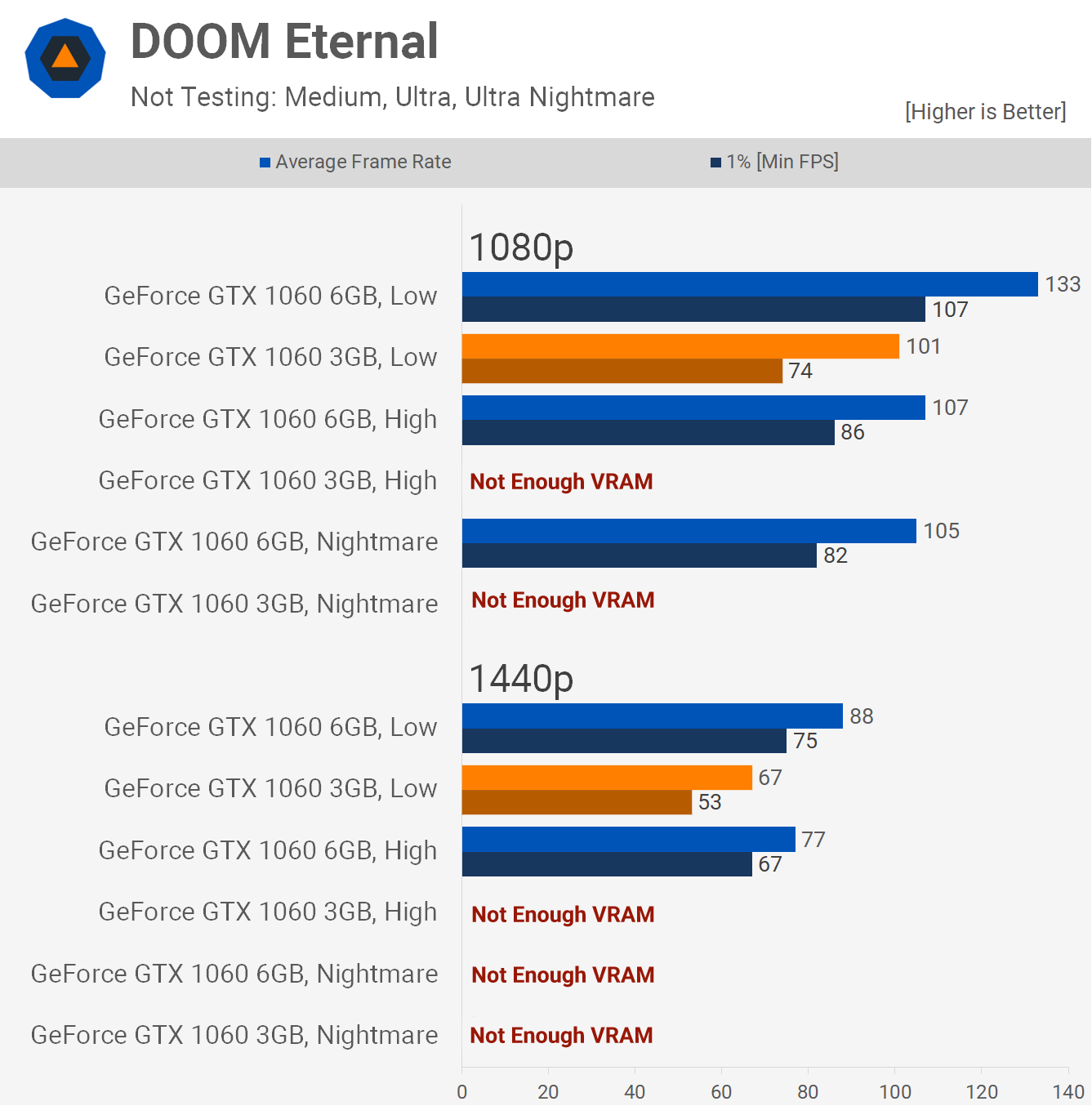

Doom Eternal doesn't allow you to exceed the VRAM buffer, so it's not possible to test certain quality settings. Worse still, even with the low quality preset, the buffer is already maxed out and as a result the 3GB card trailed the 6GB version by a 25% margin at 1080p and 24% at 1440p.

The game was still playable at both resolutions, so there is that, but you're limited to low quality settings with this model.

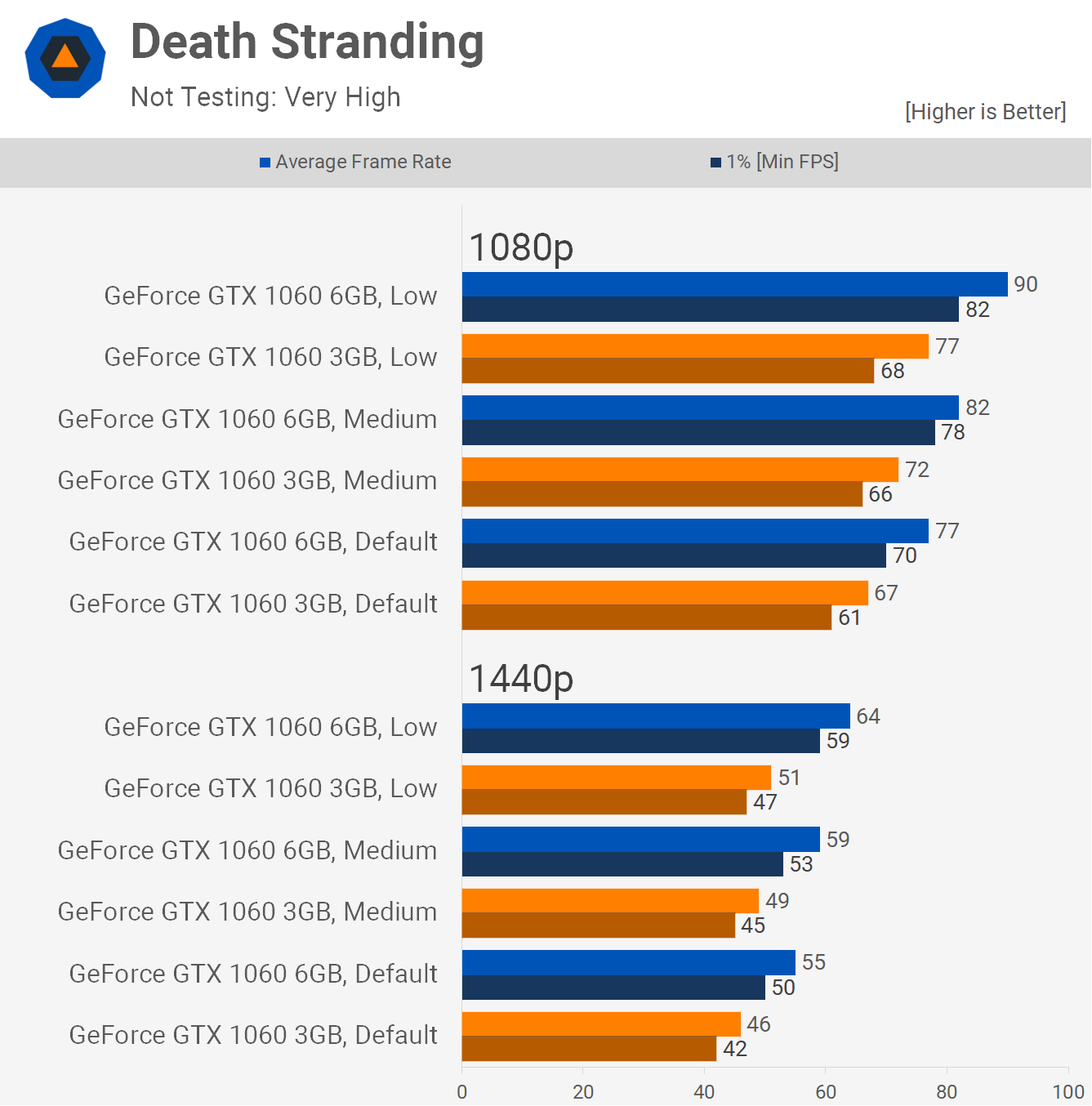

The 3GB GTX 1060 was consistently 14% slower than the 6GB model in Death Stranding at 1080p, which is a larger margin than you'd expect to see, so perhaps there are some memory limitations creeping into these results. That certainly appears to be the case at 1440p.

Here the 3GB model was between 16% and 20% slower, and that's due to the limited VRAM buffer as it should only be up to 10% slower. This is an issue for those wanting to play Death Stranding at 1440p, as it meant dropping down from almost 60 fps using the medium setting with the 6GB model to just shy of 50 fps with the 3GB version, and you will certainly notice that difference.

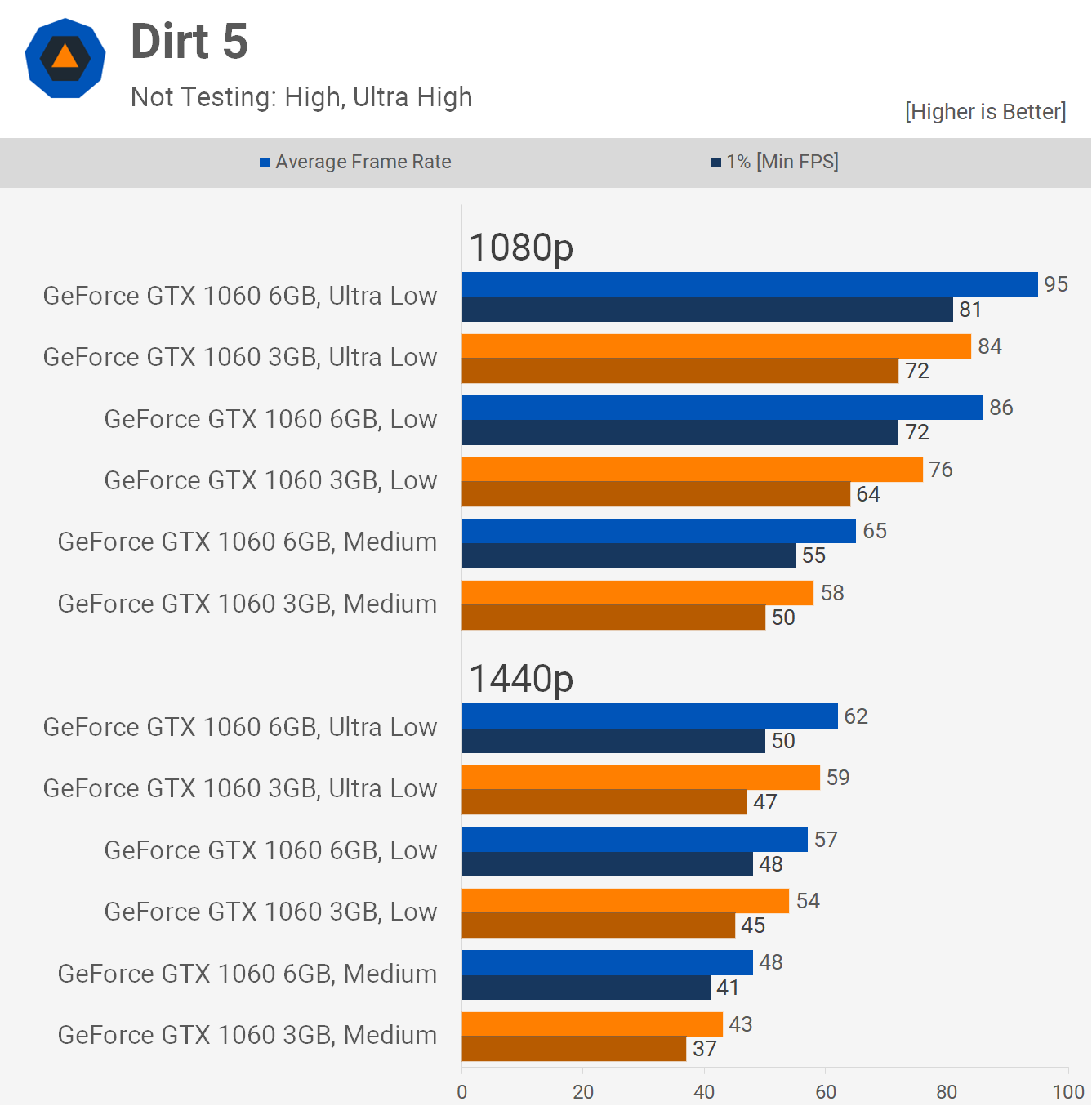

Moving on to Dirt 5, the GTX 1060 3GB was 12% slower at 1080p using the ultra low quality setting, then 11% slower with low and medium. So pretty similar scaling across those three presets at 1080p. Then at 1440p we see a 5% reduction in performance for the 3GB model using the ultra low and low presets, and then a 10% drop with medium, so for the most part the 3GB model works fine in Dirt 5 using the lower quality settings.

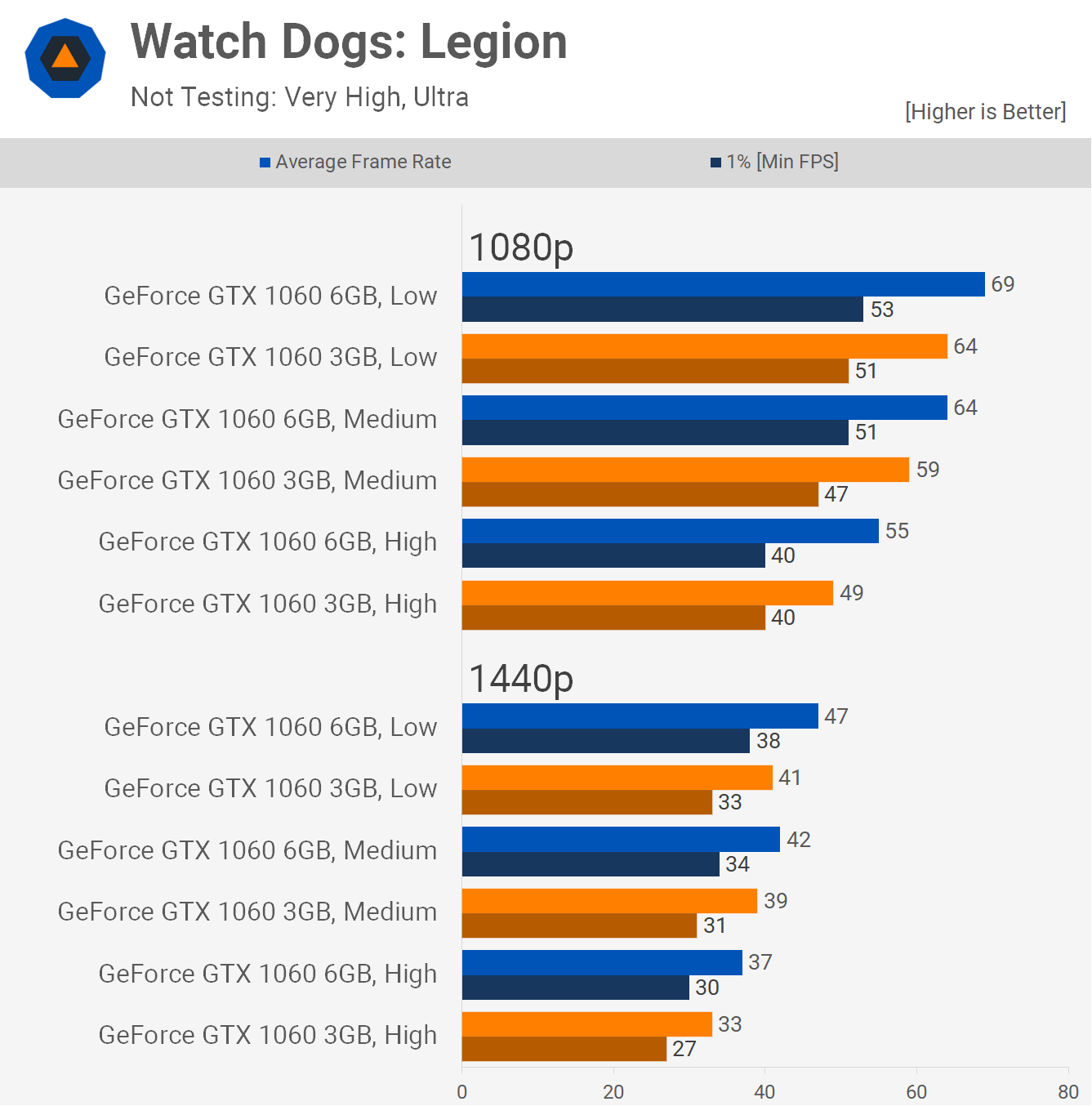

For testing Watch Dogs: Legion with these older products we've ignored the very high and ultra quality presets as these GPUs aren't powerful enough. That being the case, the 3GB 1060 does reasonably well, with performance typically 7-13% slower than the 6GB model.

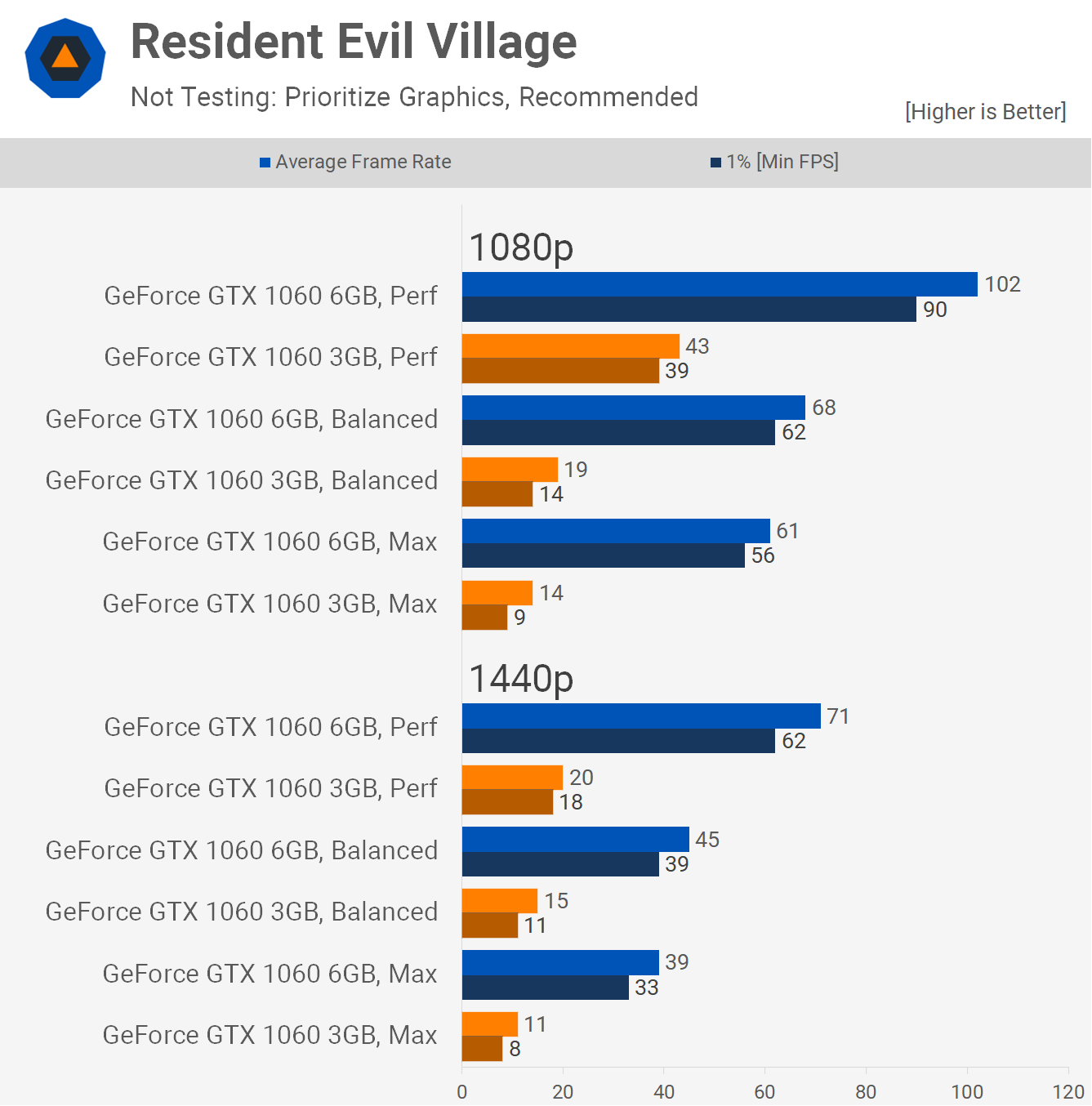

These results are a bit of an eye opener. Playing Resident Evil Village with a 3GB 1060 is extremely difficult: short of using the lowest quality preset at 1080p, you really can't, and it's not possible to achieve a 60 fps experience. This is an example of a game that makes the 3GB 1060 obsolete.

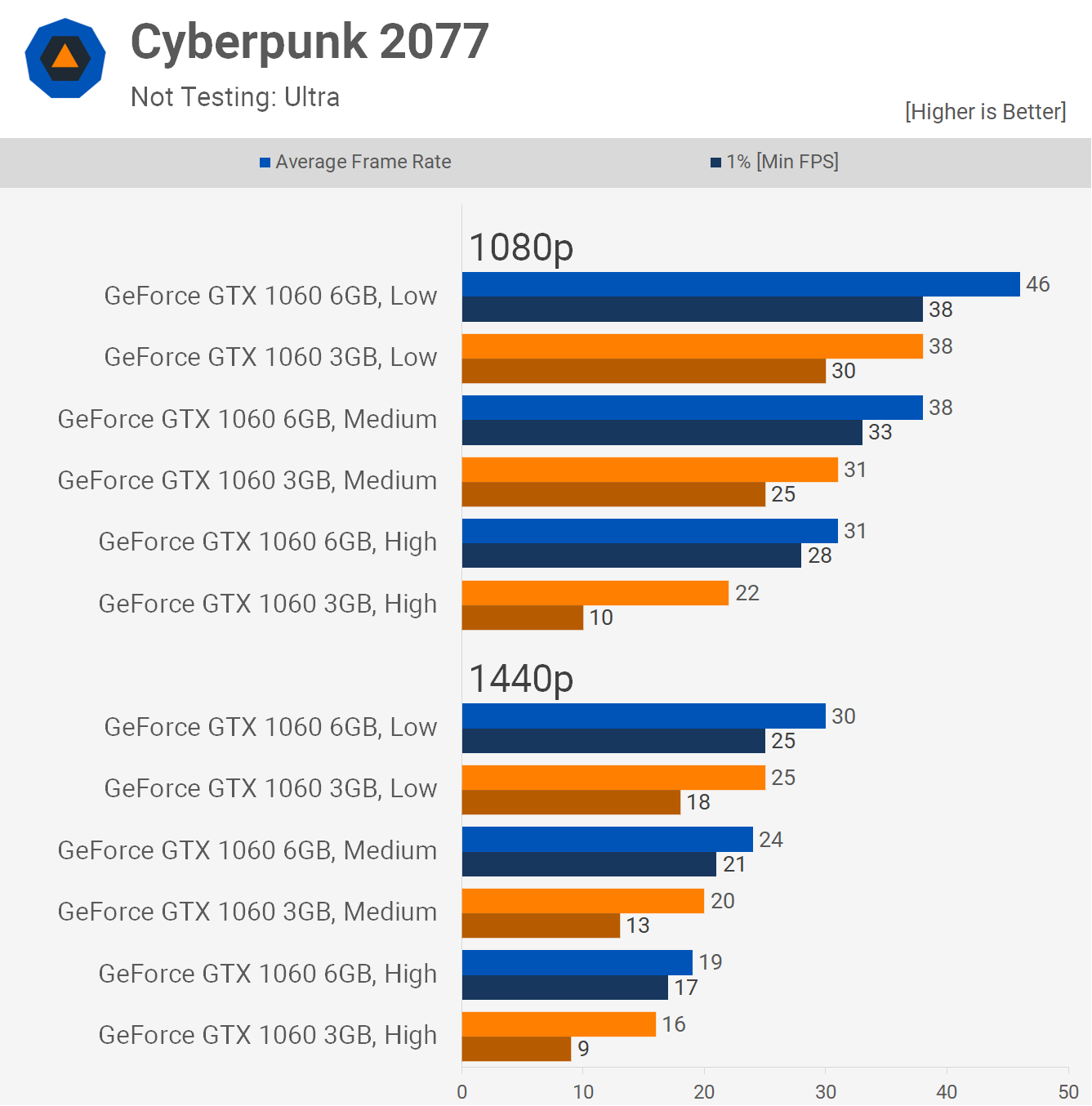

Another game that makes the 3GB 1060 obsolete is Cyberpunk 2077. Here it's not even possible to achieve 40 fps using the absolute lowest quality settings at 1080p. The 6GB model isn't exactly amazing either, but with over 20% greater performance it's at least playable.

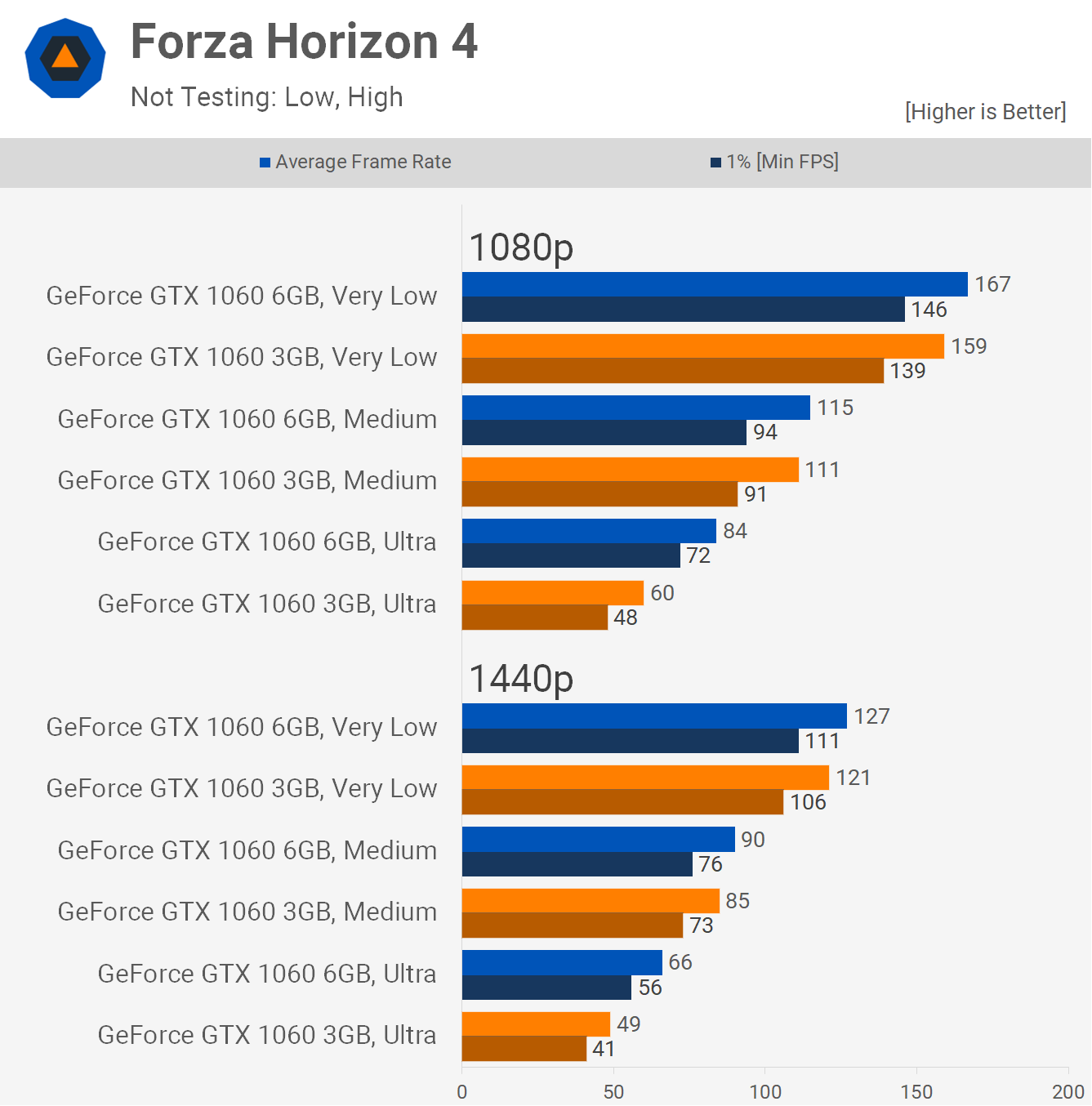

Performance in Forza Horizon 4 for the 3GB model is quite good and using the lowest quality preset at 1080p allowed for 159 fps, just 5% fewer than the 6GB version. We see similar scaling with the medium quality settings and then a large drop using ultra where the 3GB card was almost 30% slower. Similar scaling was witnessed at 1440p.

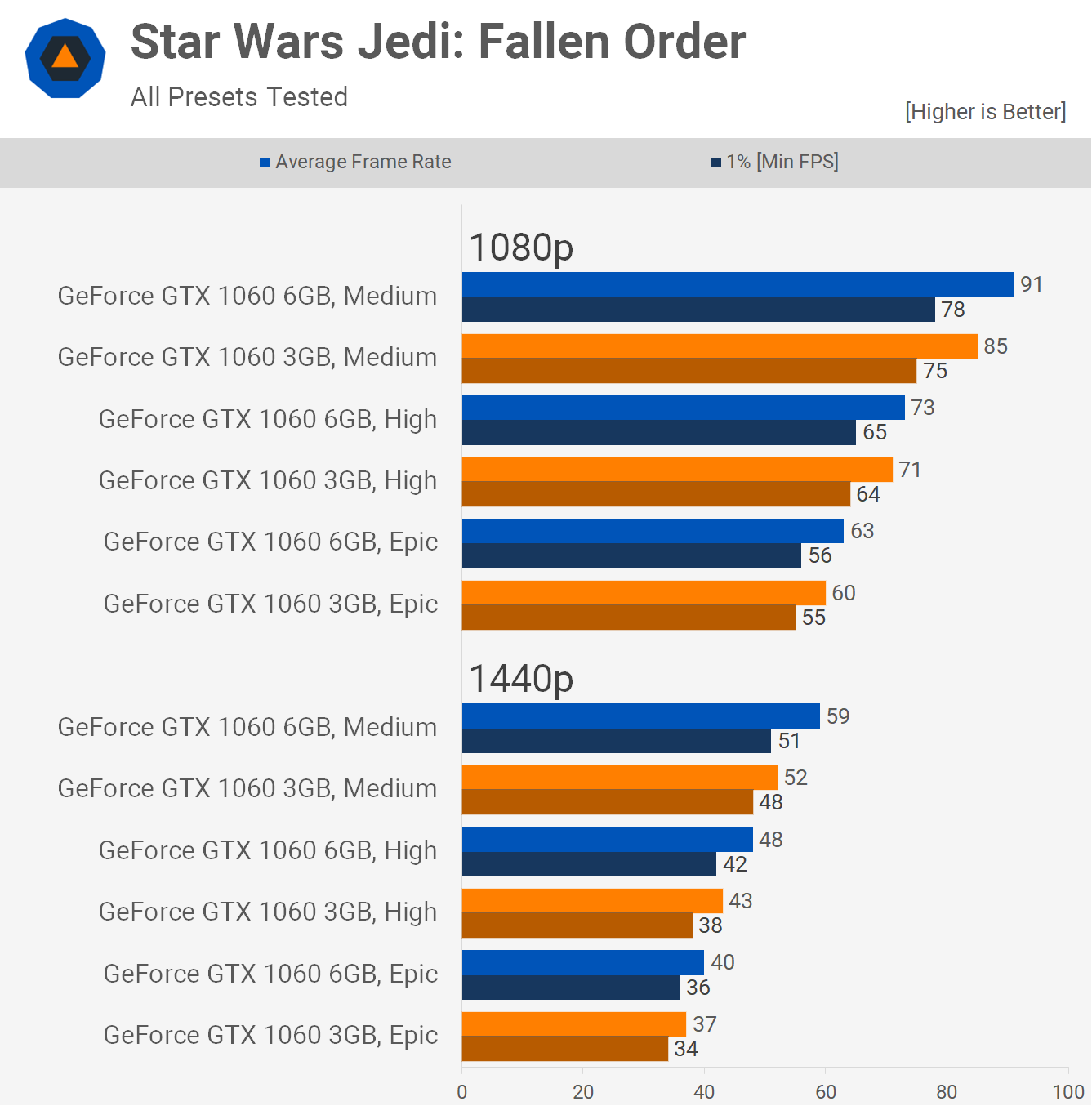

Surprisingly, Star Wars Jedi: Fallen Order plays perfectly fine on the 3GB 1060, even when using the highest quality settings, though you'll want to stick to 1080p as there's simply not enough GPU power here for 1440p gaming, at least with the Epic preset enabled.

At 1080p the 3GB version was typically 5-7% slower and then up to 12% slower at 1440p, but overall decent performance in this title.

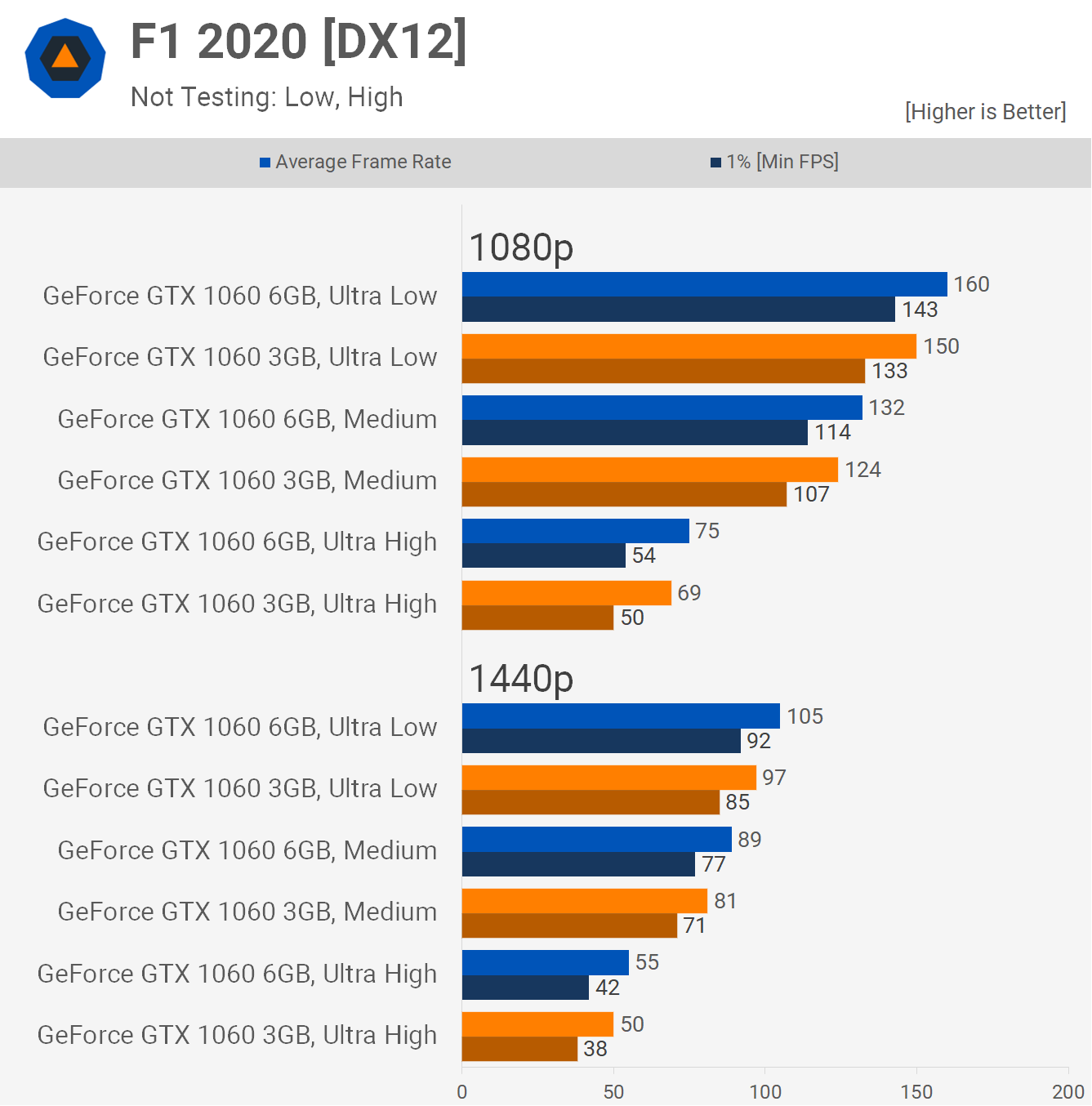

Last up we have F1 2020 and no surprises, the 3GB 1060 plays fine, trailing the 6GB model by a 6-8% margin. As a result, the 1080p performance was highly playable, even with the maximum quality preset and really you could get away with running this card at 1440p.

Average Framerates

Before our wrap up, below is a look at the 15 game average and, as you can see, the 3GB version of the GTX 1060 is faring much worse than it did a few years ago. It's no longer 7% slower on average at 1080p. In fact, using the lowest quality settings, it was on average 14% slower, then 20% slower using medium settings, and a whopping 32% slower with high quality settings.

It's a similar but slightly worse story at 1440p as the 3GB model trailed by a 20% margin using low, 22% with medium and 23% using high. The margins using the high preset at 1440p are actually better for the 3GB card when compared to what we see at 1080p, and this is down to the fact that the GTX 1060 becomes more limited by its own raw performance.

Wrap Up: 3GB of VRAM in 2021?

It's been a few years since we last looked at the 3GB GTX 1060 and it's been interesting to see how it tackles the latest and greatest games. We knew upfront it wasn't going to be pretty when using higher quality settings, but we also expected it to fail less than it did with lower quality settings.

Games such as Resident Evil Village and Cyberpunk 2077 are complete write offs, but we also saw large performance drops in games like Doom Eternal, Death Stranding, and Horizon Zero Dawn.

Then of course, there are many games where the 3GB buffer is a non-issue and highly popular esports titles like Rainbow Six Siege run just fine. If you're a competitive gamer using an older and slower graphics card, think GTX 750 Ti, there's certainly value in upgrading to a 3GB GTX 1060 as that will net you quite a bit of extra performance. This is even more attractive when you consider it's one of the cheapest graphics cards available on the second hand market right now.

Ultimately though, my advice would be to hold out, wait for the industry to improve, and buy a more modern graphics card at a reasonable price. We have no idea how long it will take before we're back to "normal" though. So if you're desperate to get gaming and don't want to break the bank, this is one of the better options available right now, especially for esports-type gamers.

On a side note, we expect this testing will open up the discussion regarding newer GeForce GPUs, such as the RTX 3070 which comes with 8GB of VRAM, whereas competing parts like the RX 6800 pack as much as 16GB.

In the case of the 3GB vs 6GB battle, it took at least 3 years before the lower capacity model started to regularly run into issues and about 5 years before it was unusable in some games. You could argue that 3GB of VRAM wasn't considered "a lot" back in 2016, and perhaps 4GB vs 8GB would be a more valid comparison, but at the end of the day I think the results would be much the same.

We can probably agree that in 2016, 4GB of VRAM was considered mid-range at best, and probably more suitable for a lower end product. Meanwhile, 8GB was high-end, with the top products of the time, at least on Nvidia's side, receiving 11GB and 12GB buffers.

Fast forward to today, we're now 5 years into the future from when Pascal launched. Is 8GB of VRAM still suitably high-end or mid-range even? I think not, and AMD is arguably on the right track when it comes to memory buffers... 16GB or more at the high-end, 8-16GB at the mid-range, and then 6 to 8GB at the low-end. I do believe we're past the point where 4GB buffers are useful.

Right now the RTX 3070 works fine and is considered a mid to high-end GPU offering, but you have to wonder how an 8GB buffer will get on in a few years' time. Of course, you can always turn down stuff like textures to reduce VRAM load and that's a perfectly acceptable compromise to make on low-end and even some mid-range products, but probably not for $500+ graphics cards.

The RTX 3070 vs. RX 6800 battle is complicated and can't simply be decided by measuring VRAM buffers. The GeForce GPU has a number of strengths such as more mature ray tracing support, a growing list of DLSS supported titles, and Nvidia's encoder. But if it becomes heavily VRAM constrained in a few years, all those advantages go out the window and the 3070 becomes a gimped product.

If you wish to hold onto your graphics card for 3-5 years, I'd expect the RX 6800 to end up being a much better investment for you, but if you upgrade every 2-3 years the RTX 3070 might offer more value in the short term thanks to features such as DLSS, if it's supported in the games you play.