A few weeks ago we did a horrible thing. A horrible, biased, and frankly unforgivable thing. At least that's what Steve was told by well-informed folks over on Reddit... we benchmarked the RTX 4070 Ti using FSR in 6 of the 50 games we tested. That's right, we used FSR. Granted, one of those games enables FSR by default, so we can only be directly blamed for this horrible thing in 5 of the 50 games tested, but that's no excuse.

Sarcasm aside, what we thought was a reasonable and fair approach to tackling an issue kicked off a heated controversy that escalated to abusive levels on Reddit, with countless users making claims about things they have little or no knowledge of. The good news is that we can now set the record straight. We're sure that won't stop this sort of thing from happening again, but it will at least give the rest of you some useful benchmark data.

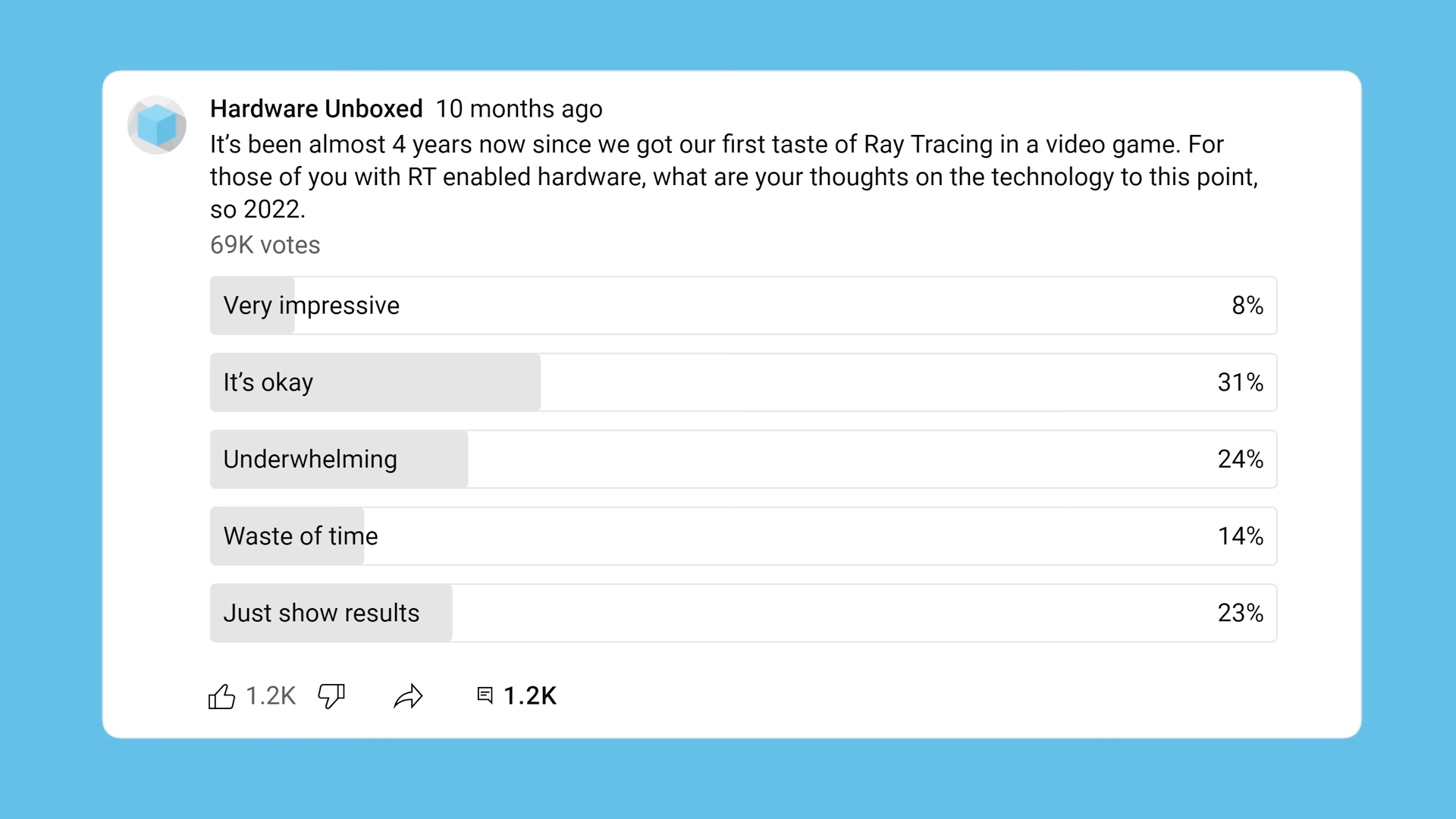

A bit of back story first. When we recently compared the GeForce RTX 4070 Ti and Radeon RX 7900 XT head to head in 50 games, we decided to include a lot of ray tracing-enabled results, as games using these effects have continued to gain ground, making ray tracing an important aspect of GPU performance. That's despite the fact that every time we poll your interest in ray tracing, the data comes back looking bleak for the technology: it seems most of you still don't find the visual upgrade significant enough to warrant the massive performance hit, but that's a separate matter.

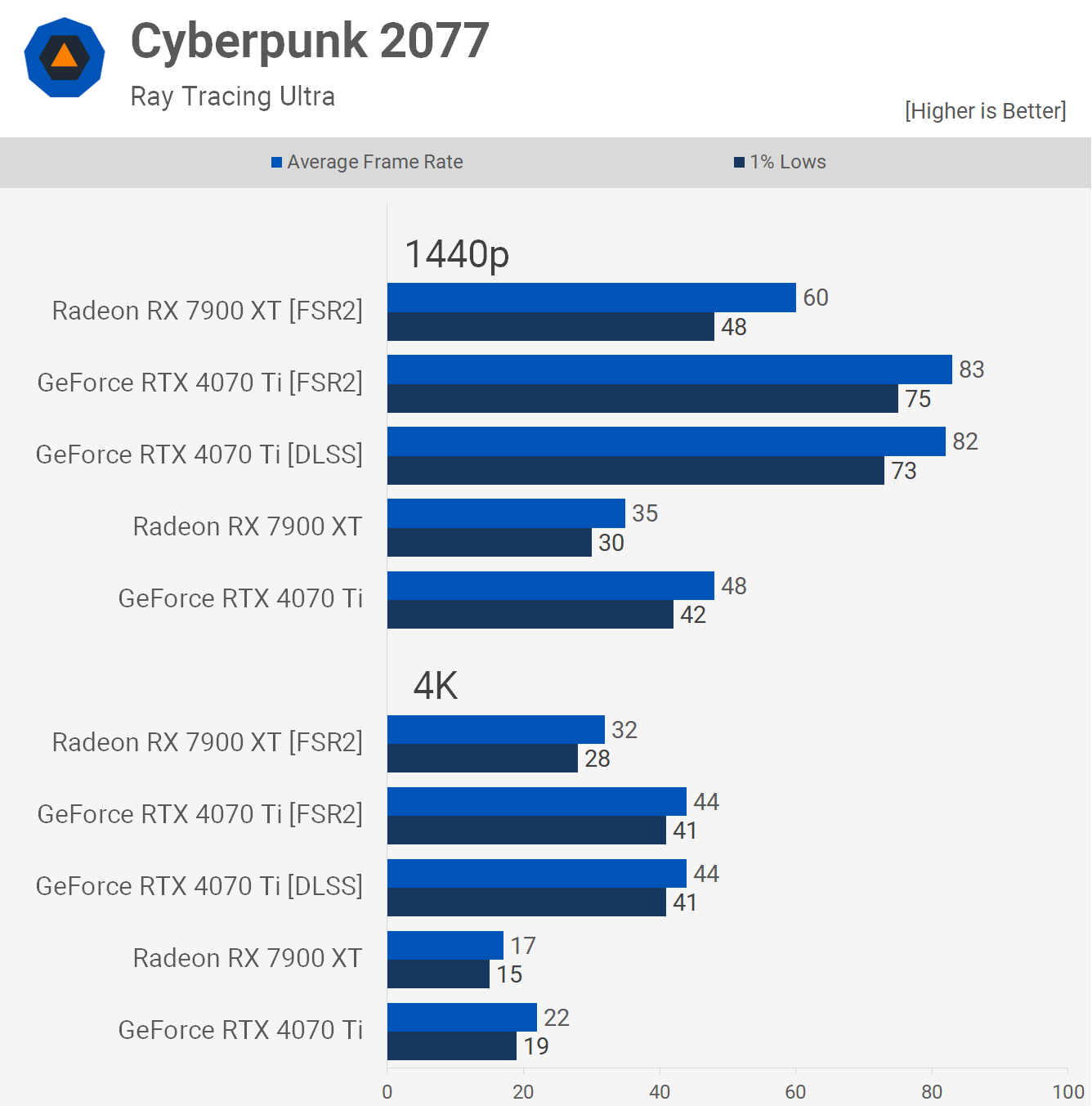

In our testing, a few games such as Cyberpunk 2077 made a good showcase for ray tracing. In this specific example, frame rates dipped well below 30 fps on the RTX 4070 Ti at 4K, but with upscaling we could get over 40 fps. Since we don't expect anyone to game at 17 fps, we felt the upscaling data would be more useful, even if it doesn't truly show 4K performance.

For a better representation of image quality comparisons, check out the HUB video below:

The next decision we faced was which upscaling technology to use. The options were to test GeForce RTX GPUs with DLSS and everything else with FSR, or, to keep things as apples-to-apples as possible, to test everything using FSR.

Given that we're trying to accurately compare frame rate performance, we always like to keep testing conditions as similar as possible. In fact, our main concern was to avoid handing AMD a potential performance advantage. We know that DLSS is the superior upscaling technology, generally providing better image quality, and we also know that GeForce GPUs tend to deliver much the same frame rates with DLSS and FSR.

In other words, we didn't want to test any game where FSR looked noticeably worse than DLSS while also giving the Radeon GPU a frame rate advantage. To avoid all of those potential issues, we felt that testing both GPUs with the same open-source upscaling technology was the best option.

After the RTX 4070 Ti vs. Radeon 7900 XT shootout went live, we saw many comments complaining that the test was biased because we didn't use DLSS, even though the article stated that DLSS is superior to FSR in terms of image quality and explained that we used FSR for benchmarking to keep the comparison as apples-to-apples as possible.

On the HUB community page, I made a post addressing some of the misconceptions around the topic and ran a poll. Thankfully, the majority of viewers agreed with our decision, though the results were still fairly split. That post then led to multiple Twitter threads essentially calling us out for using FSR on GeForce GPUs. It was troubling to see low-effort posts with no evidence or real information getting upvoted, while those explaining both sides of the story were heavily downvoted.

The primary argument (or rather, assumption) from those who opposed our choice to use FSR was that DLSS is accelerated by Nvidia's Tensor cores and will therefore be faster than FSR on GeForce RTX GPUs. Mind you, beyond Nvidia's own marketing material, there's no evidence that this translates into a frame rate advantage. Search YouTube for something like "RTX 3080 FSR vs DLSS", or the equivalent for any other popular GeForce GPU, and you'll find plenty of content comparing the two upscaling methods across various games, showing virtually identical frame rates.

We have, of course, done our own in-house testing. With no actual evidence to support complainers' claims, we did what we always do, and got benchmarking. So let's go take a look at that...

Benchmarks

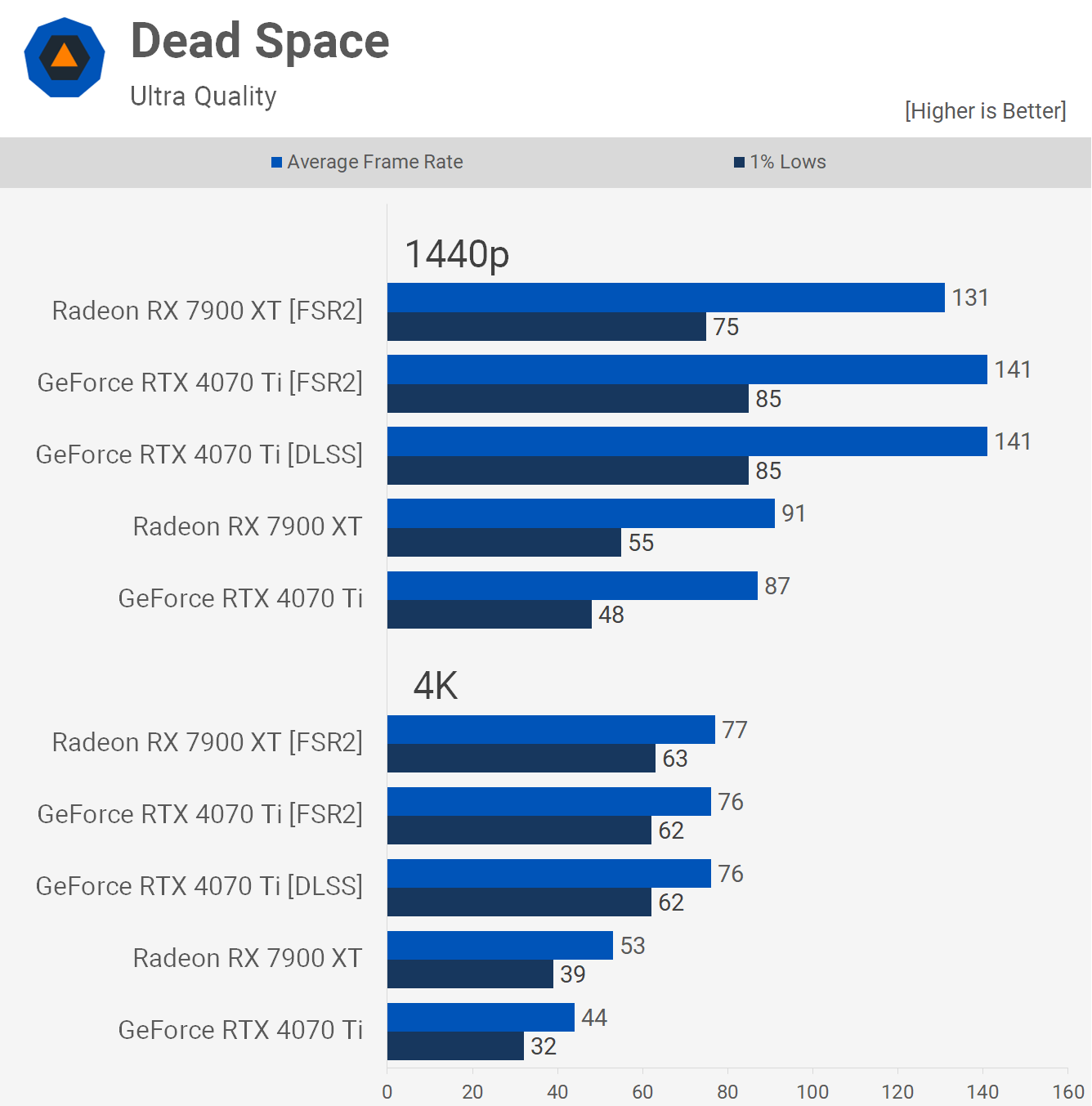

Here's a look at DLSS quality mode vs. FSR quality mode in Dead Space. Please note that we've used each technology's 'quality' mode for all of our testing. As you can see in this example, there's no frame rate difference between the two upscaling methods: the RTX 4070 Ti delivered 141 fps using either technology at 1440p and 76 fps at 4K.

As for scaling, at 4K the Radeon 7900 XT saw a 45% increase over native rendering when using FSR, whereas the GeForce RTX 4070 Ti saw a much larger 73% increase.
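For anyone wondering how these scaling figures are derived: the uplift is the upscaled frame rate divided by the native frame rate, minus one, expressed as a percentage. Here's a minimal Python sketch of that arithmetic; the function name `uplift_percent` and the frame rates in the example are hypothetical, chosen for illustration rather than taken from our charts.

```python
def uplift_percent(native_fps: float, upscaled_fps: float) -> float:
    """Percentage frame rate gain from enabling upscaling, relative to native rendering."""
    if native_fps <= 0:
        raise ValueError("native_fps must be positive")
    return (upscaled_fps / native_fps - 1.0) * 100.0


# Hypothetical example (not our data): 40 fps natively, 58 fps with upscaling enabled
print(f"{uplift_percent(40, 58):.0f}%")  # 45%
```

A 45% uplift simply means the upscaled result runs at 1.45x the native frame rate; the figure says nothing about image quality.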

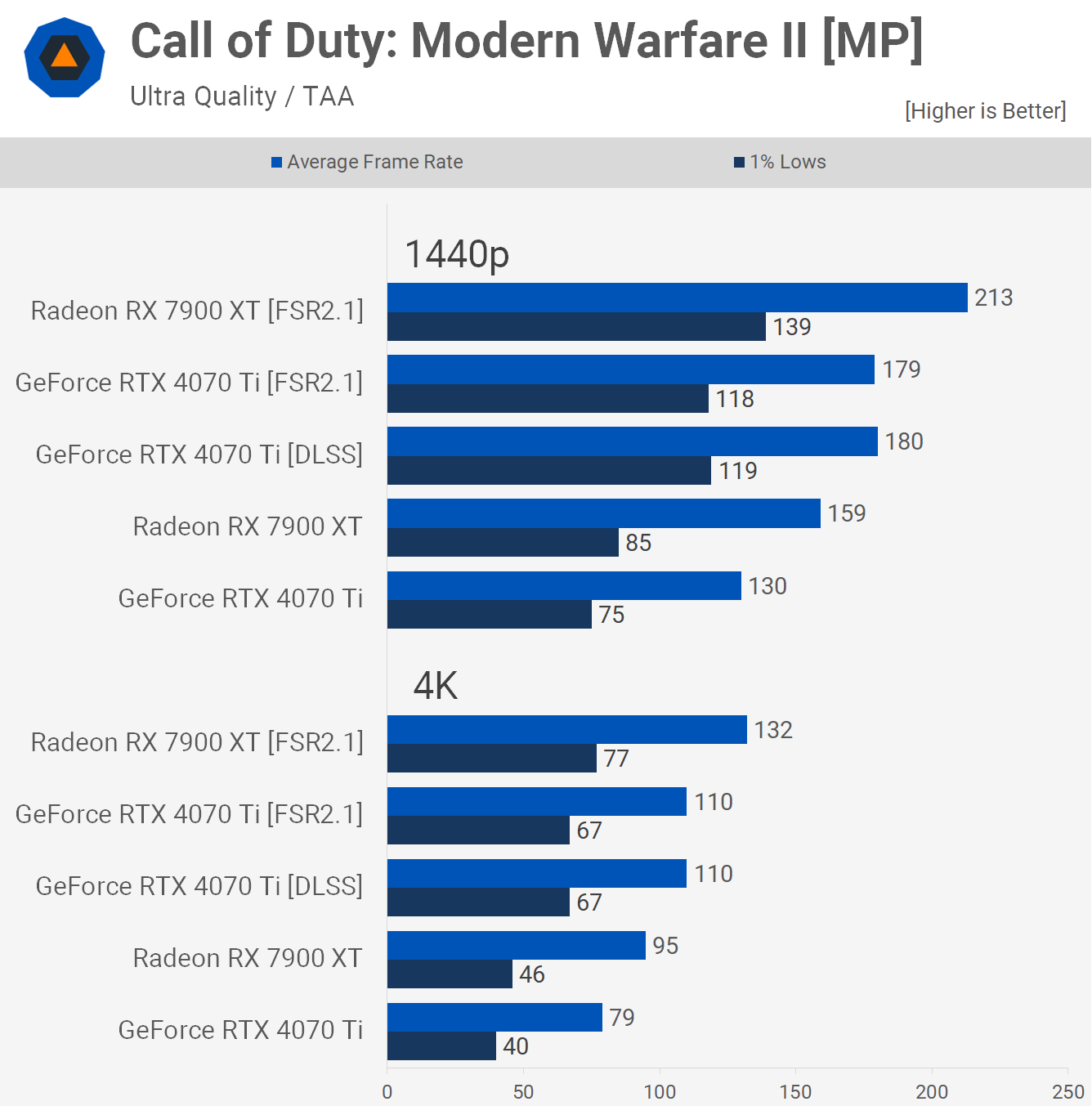

It's the same story in Call of Duty: Modern Warfare II, and since we're using the built-in benchmark, anyone with a Radeon or GeForce GPU can easily verify this data.

The RTX 4070 Ti provided the same result using FSR and DLSS at both tested resolutions.

As for scaling, the 4070 Ti saw a 38% increase when using FSR at 1440p and the 7900 XT a 34% increase. Then at 4K both the 4070 Ti and 7900 XT saw a 39% increase.

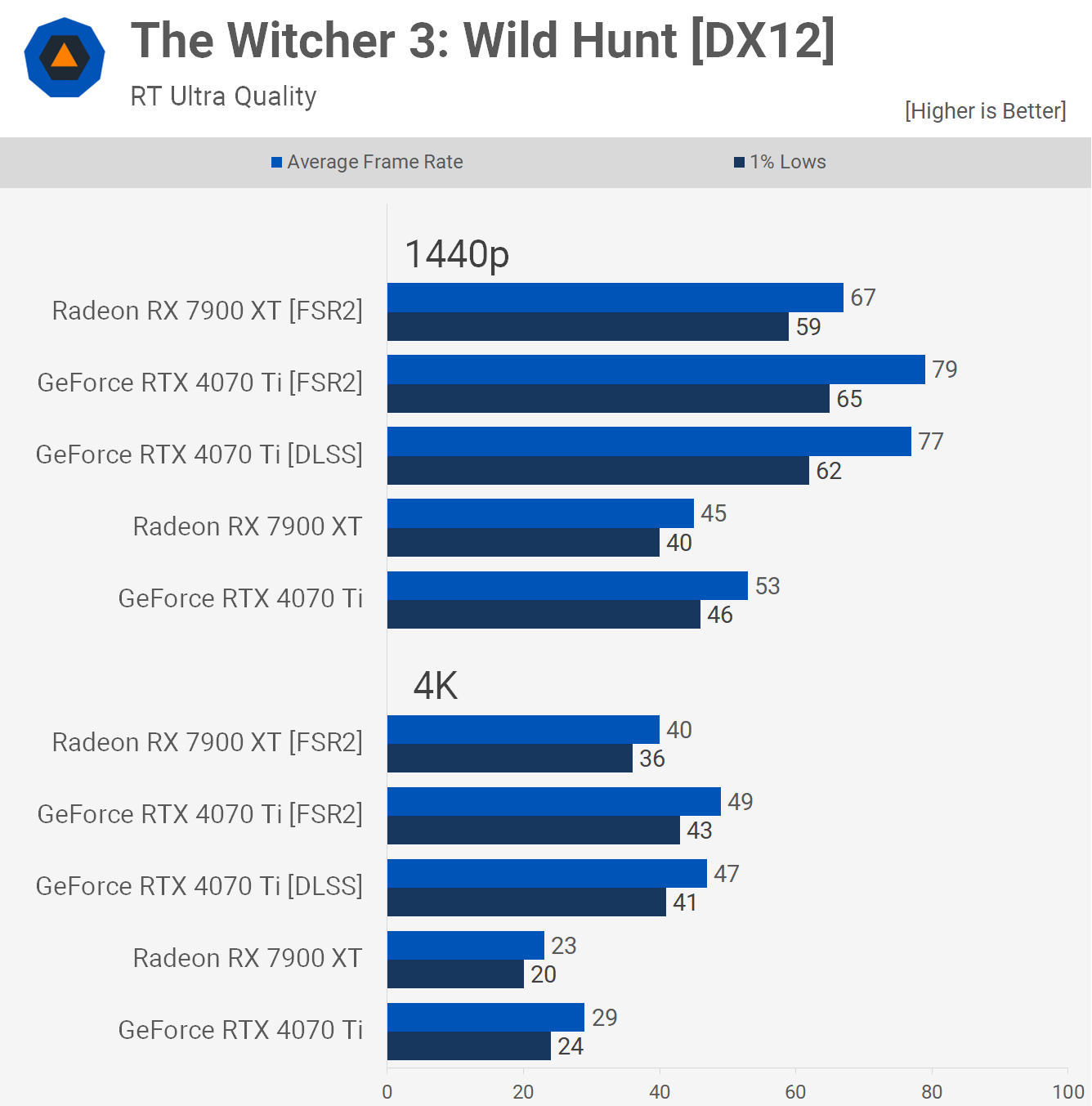

Next we have The Witcher 3, where the RTX 4070 Ti was 2% to 4% faster using FSR as opposed to DLSS. Not exactly a significant difference, but in this example FSR was the faster option.

The Radeon 7900 XT saw a 49% increase when using FSR at 1440p and 74% at 4K, whereas the 4070 Ti saw a 49% increase at 1440p and then 69% at 4K, so a slightly larger uplift for the Radeon GPU at 4K.

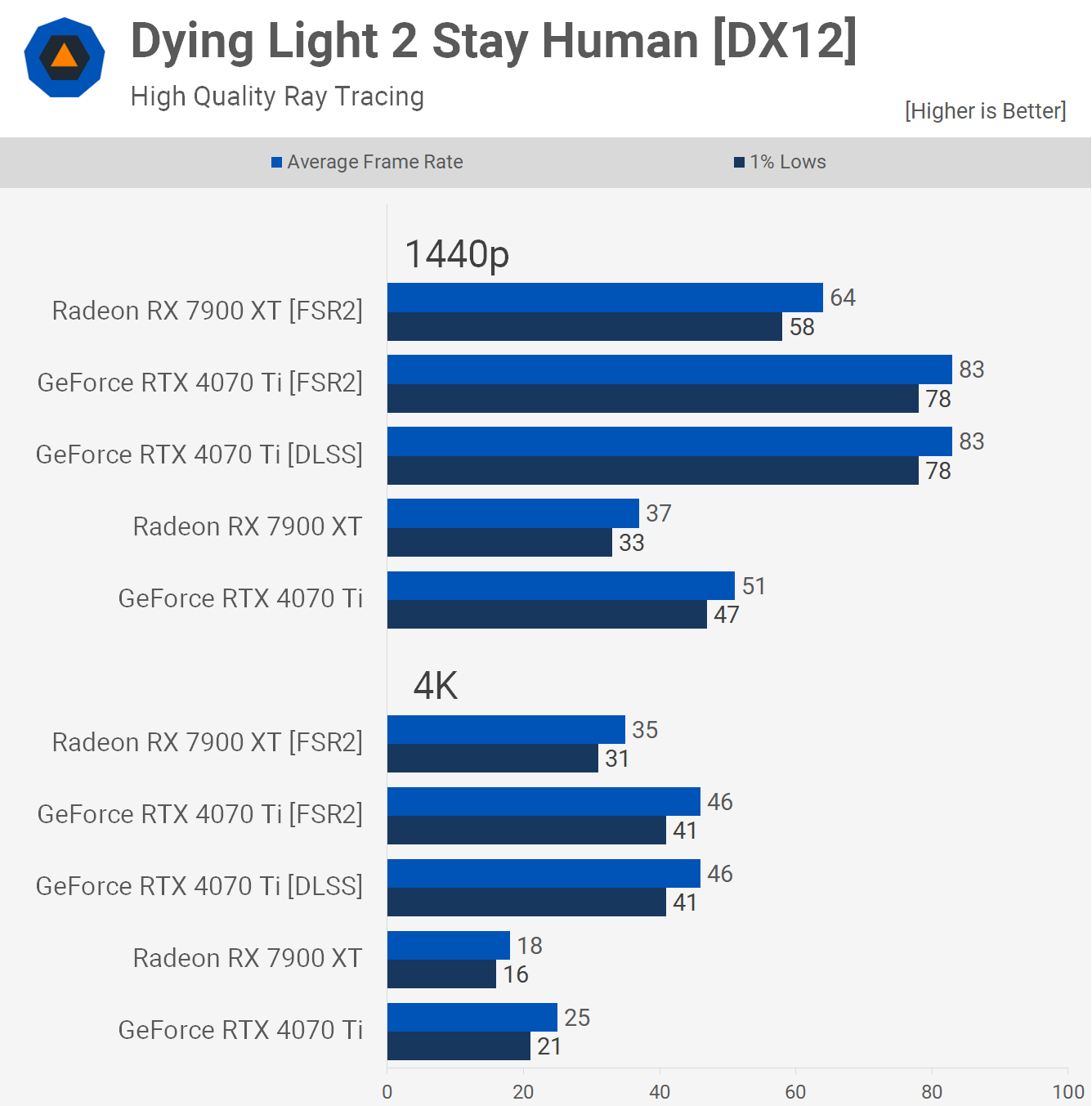

Dying Light 2 Stay Human was tested using the high quality ray tracing preset, as this enables DX12.

Here the RTX 4070 Ti saw the exact same level of performance using either FSR or DLSS. Scaling was also slightly better for the Radeon GPU at both tested resolutions.

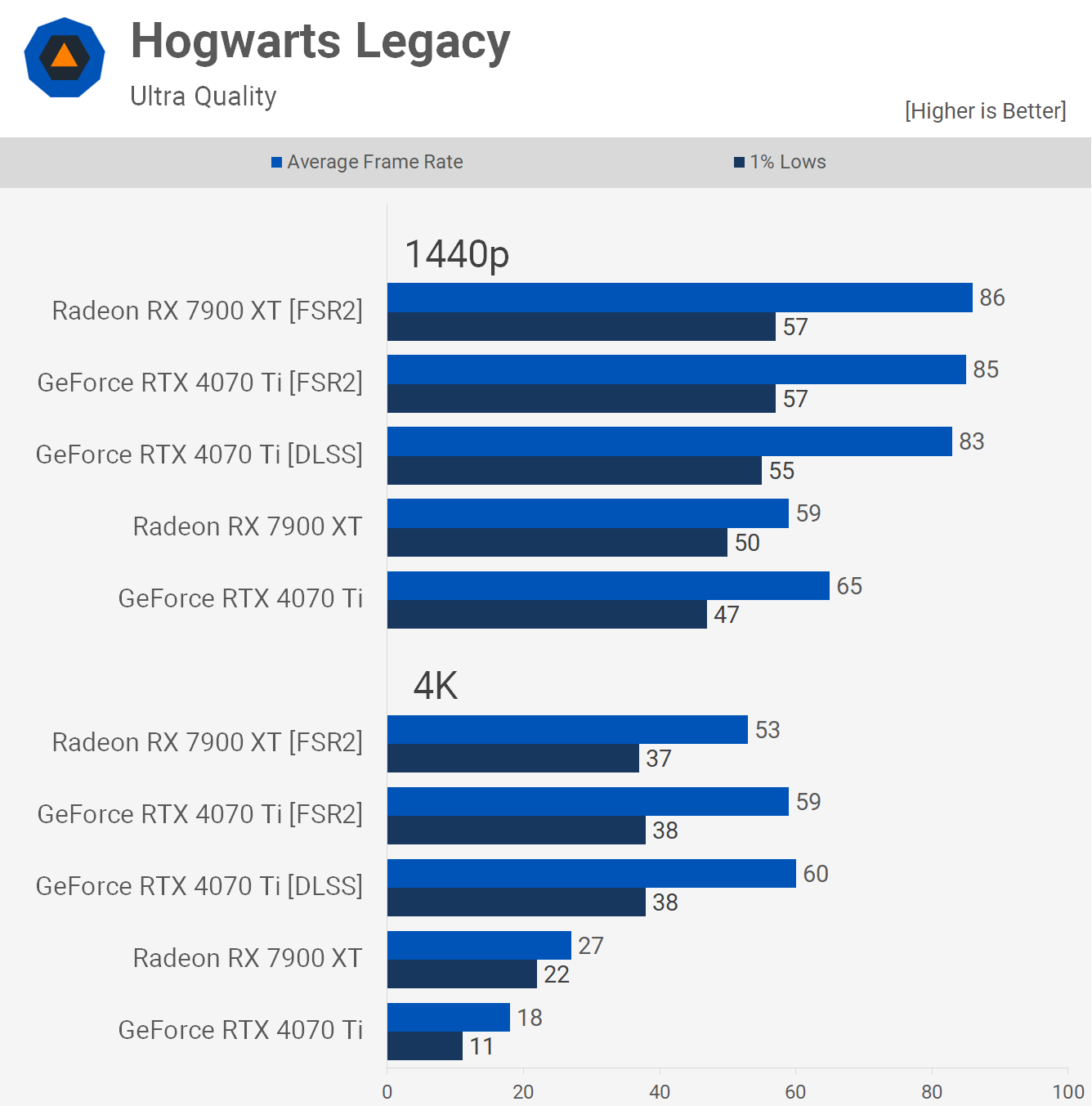

Hogwarts Legacy also delivered the same level of performance on the 4070 Ti with either FSR or DLSS, with no more than a 2% discrepancy.

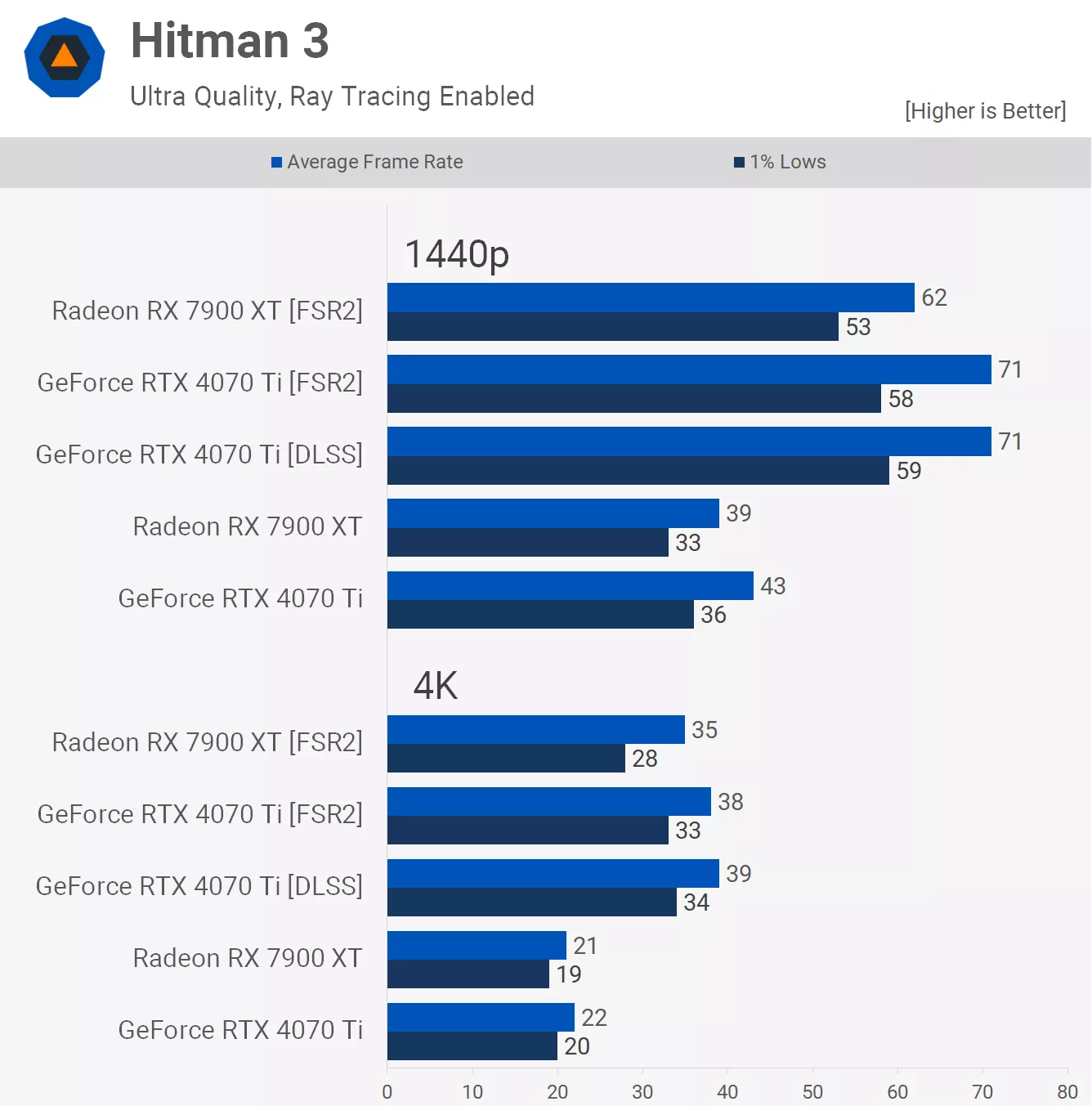

Hitman 3 also showed no change in performance with either upscaling method on the RTX 4070 Ti: both delivered 71 fps at 1440p and 38-39 fps at 4K.

The performance uplift when using FSR was also similar for both GPUs.

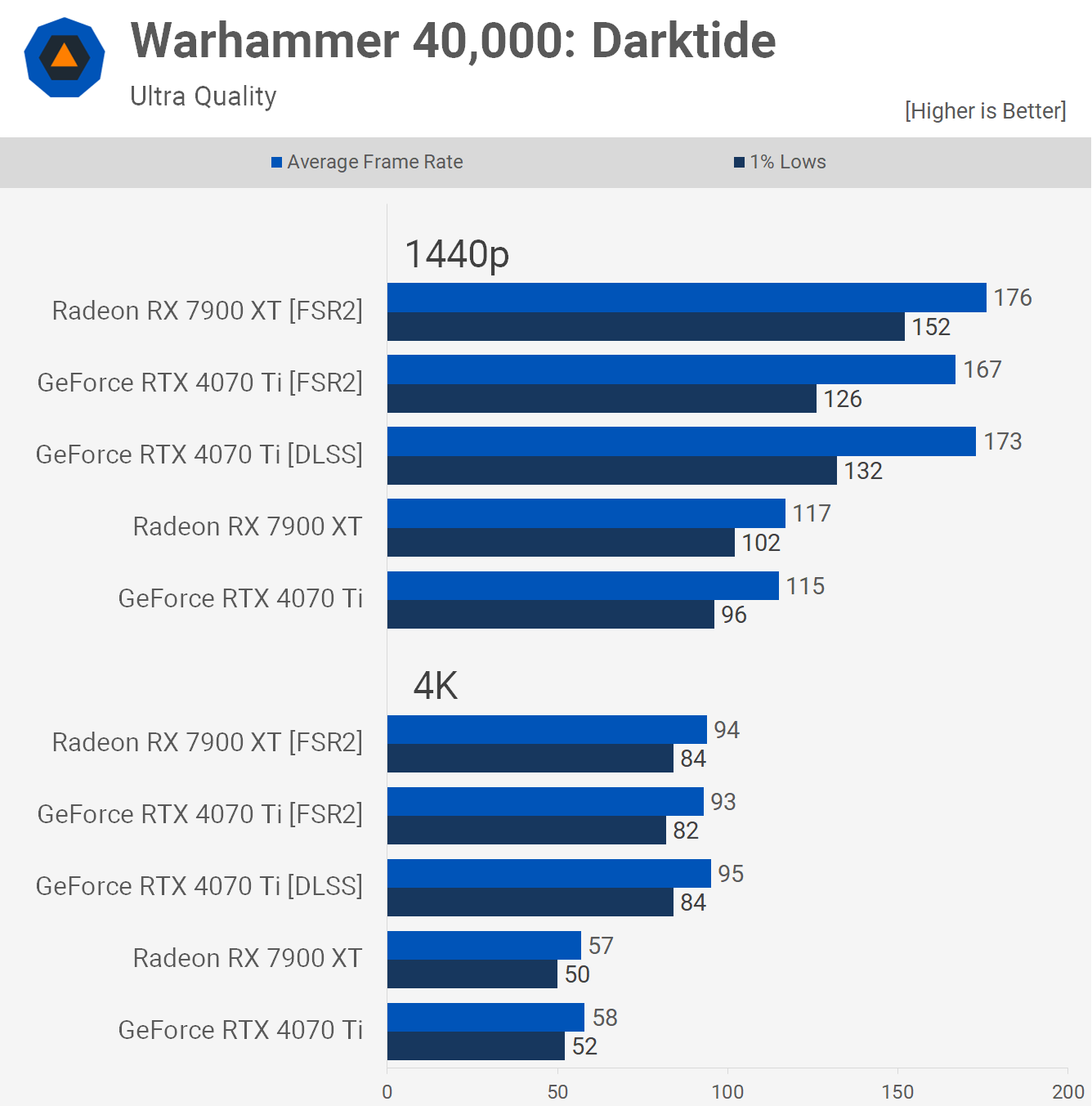

Warhammer 40,000: Darktide runs slightly better with DLSS, with the RTX 4070 Ti 4% faster at 1440p and 2% faster at 4K. The margins aren't big, but this is the first game where DLSS is consistently a few frames faster than FSR.

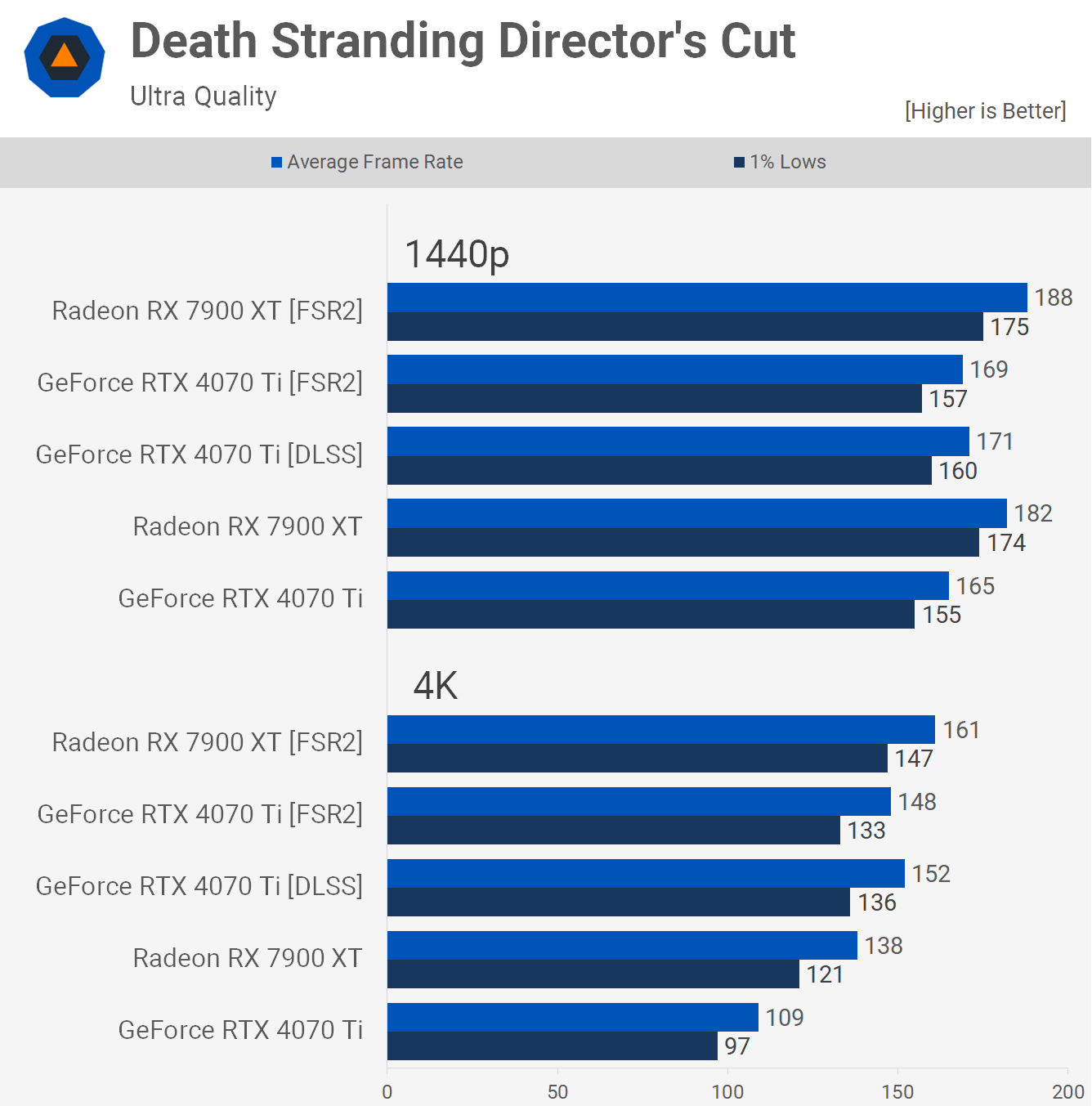

We also found DLSS to be a few frames faster in Death Stranding, though in this case we're talking about a 1-3% difference in performance, which we don't find statistically significant.

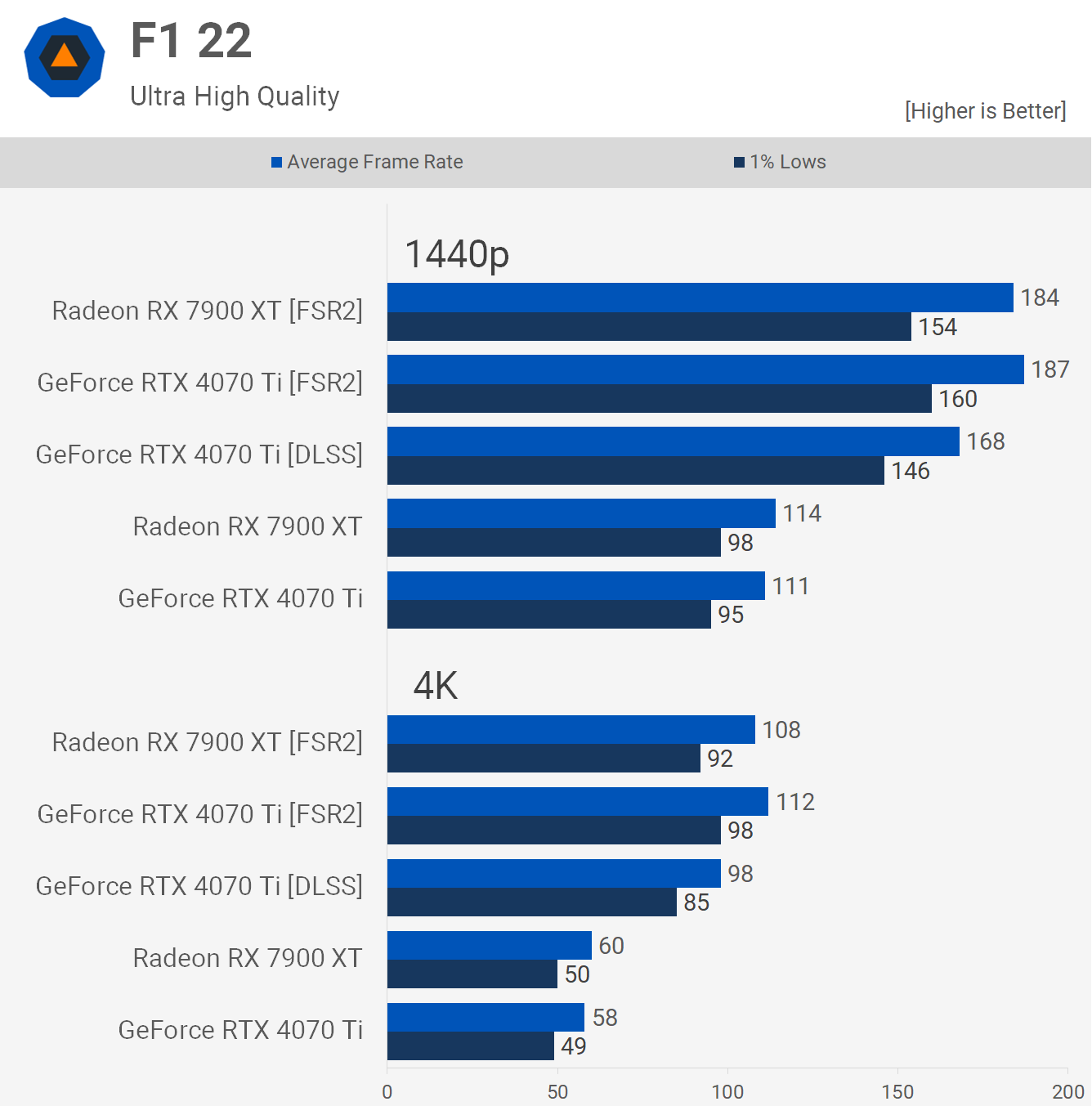

The F1 22 results are surprising: here FSR was 11% faster than DLSS for the RTX 4070 Ti at 1440p and 14% faster at 4K. These results were recorded using the built-in benchmark with the ultra high quality preset and are based on a 3-run average.

In this example, testing the 4070 Ti using DLSS would place it at a disadvantage on a benchmark graph. That said, while we did test F1 22 with ray tracing enabled in our big head-to-head benchmark, we didn't use upscaling of any sort, as frame rates were already quite high, at least at 1440p.
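To illustrate how a multi-run average turns into a "percent faster" figure, here's a minimal Python sketch. The per-run values below are hypothetical, not our F1 22 data, and comparing the gap against the run-to-run spread is a simple sanity-check heuristic we've chosen for illustration, not a formal statistical test.

```python
from statistics import mean


def percent_faster(a_fps: float, b_fps: float) -> float:
    """How much faster result a is than result b, as a percentage of b."""
    return (a_fps / b_fps - 1.0) * 100.0


def spread_percent(runs: list[float]) -> float:
    """Run-to-run spread (max minus min) as a percentage of the mean."""
    return (max(runs) - min(runs)) / mean(runs) * 100.0


# Hypothetical per-run averages for one GPU at one resolution (illustrative only)
fsr_runs = [111.0, 110.0, 112.0]
dlss_runs = [100.0, 99.0, 101.0]

fsr_avg, dlss_avg = mean(fsr_runs), mean(dlss_runs)
gap = percent_faster(fsr_avg, dlss_avg)
noise = max(spread_percent(fsr_runs), spread_percent(dlss_runs))

print(f"FSR {fsr_avg:.1f} fps, DLSS {dlss_avg:.1f} fps, FSR ahead by {gap:.1f}%")
print("gap exceeds run-to-run spread" if gap > noise else "gap within run-to-run spread")
```

A gap of a few percent that sits inside the run-to-run spread is hard to call meaningful, whereas a double-digit gap well outside it is harder to dismiss.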

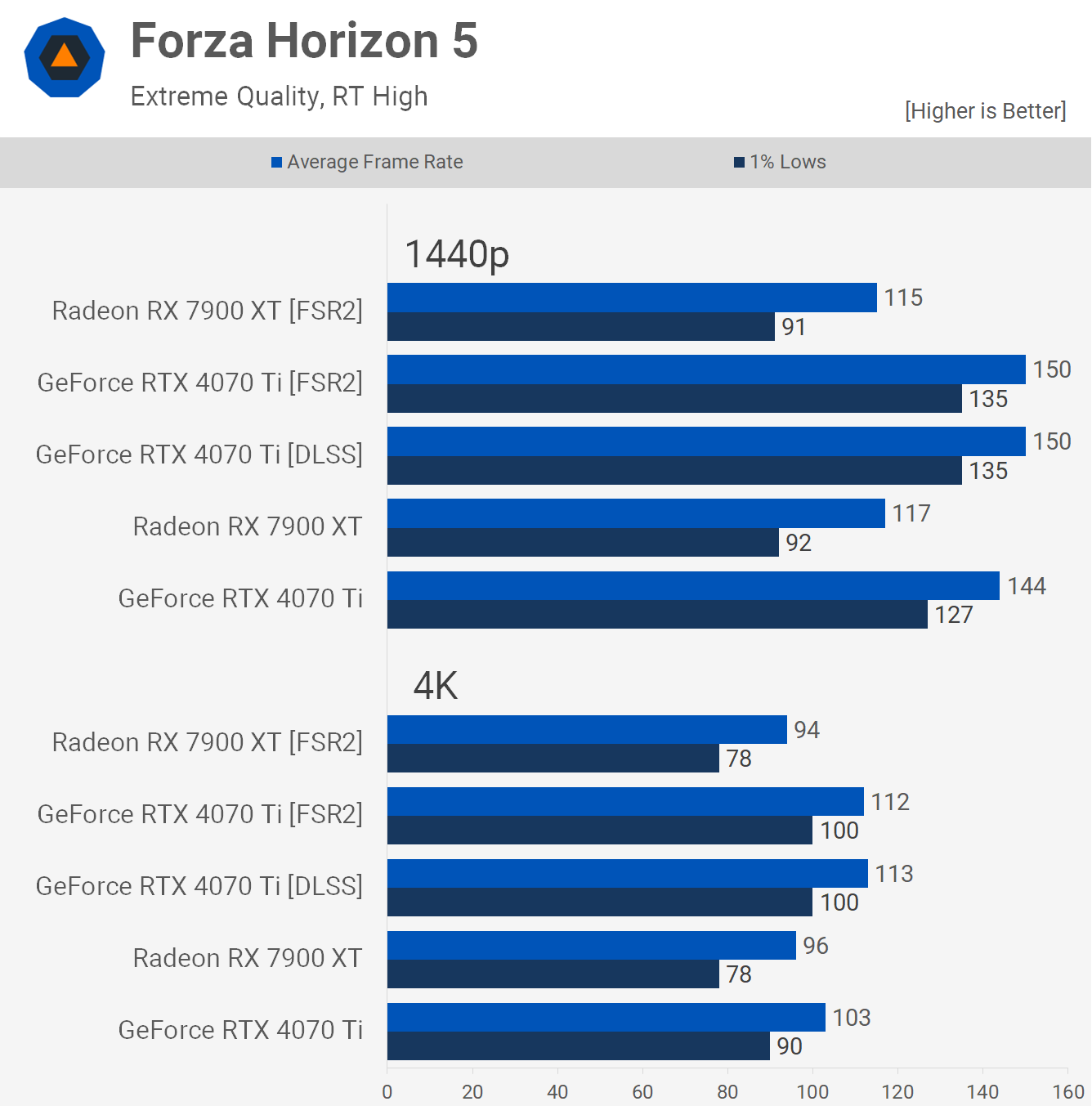

Forza Horizon 5 produced a very typical set of results for this test: FSR and DLSS delivered identical frame rates. Oddly, though, FSR seems broken on the Radeon 7900 XT in this title, as we saw no performance uplift when using it. Forza Horizon 5 has proven to be a very poor title for RDNA 3, which we suspect is down to a driver issue.

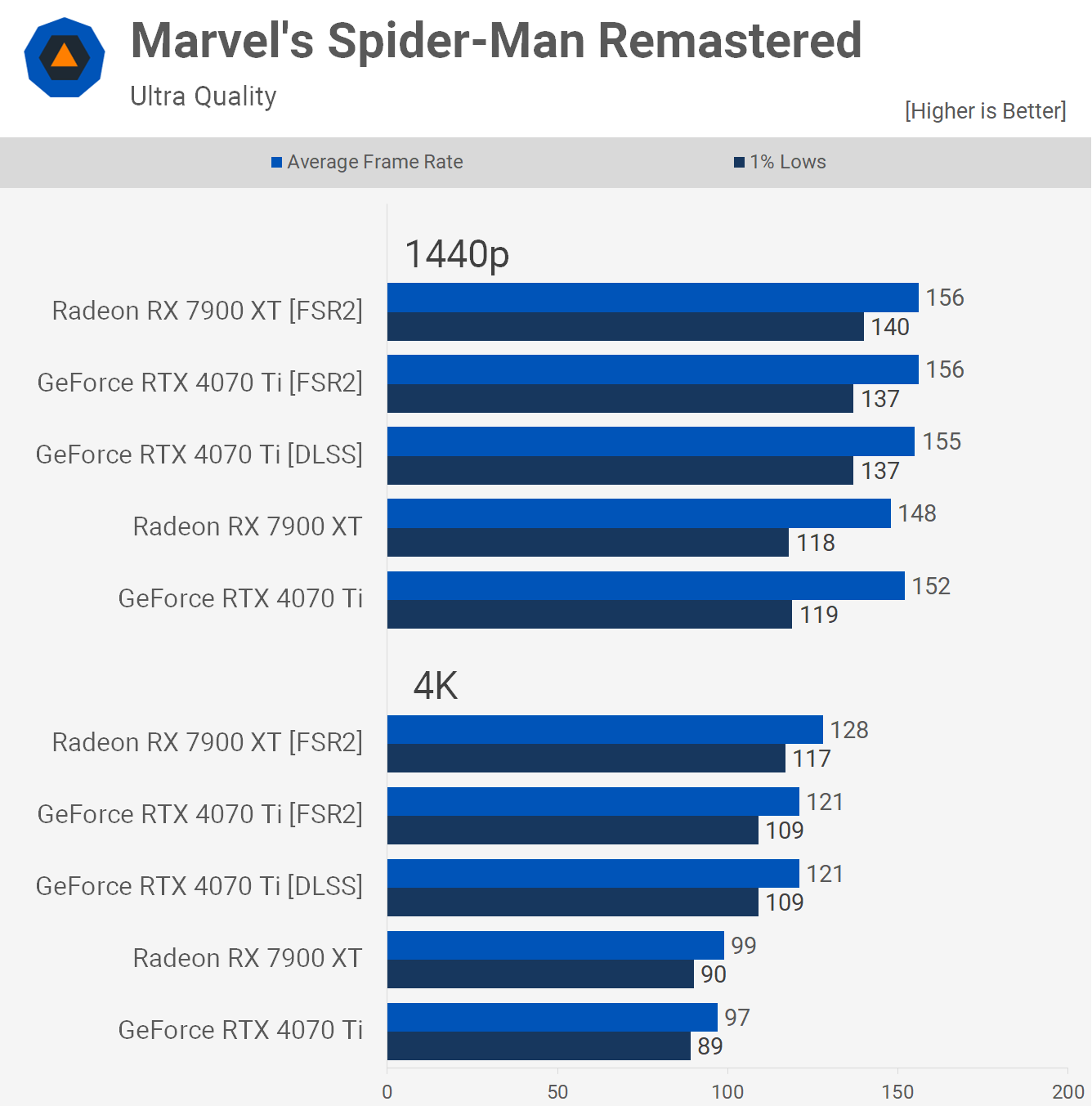

Moving on to Spider-Man Remastered, we have yet another example where FSR and DLSS provided identical results at both tested resolutions.

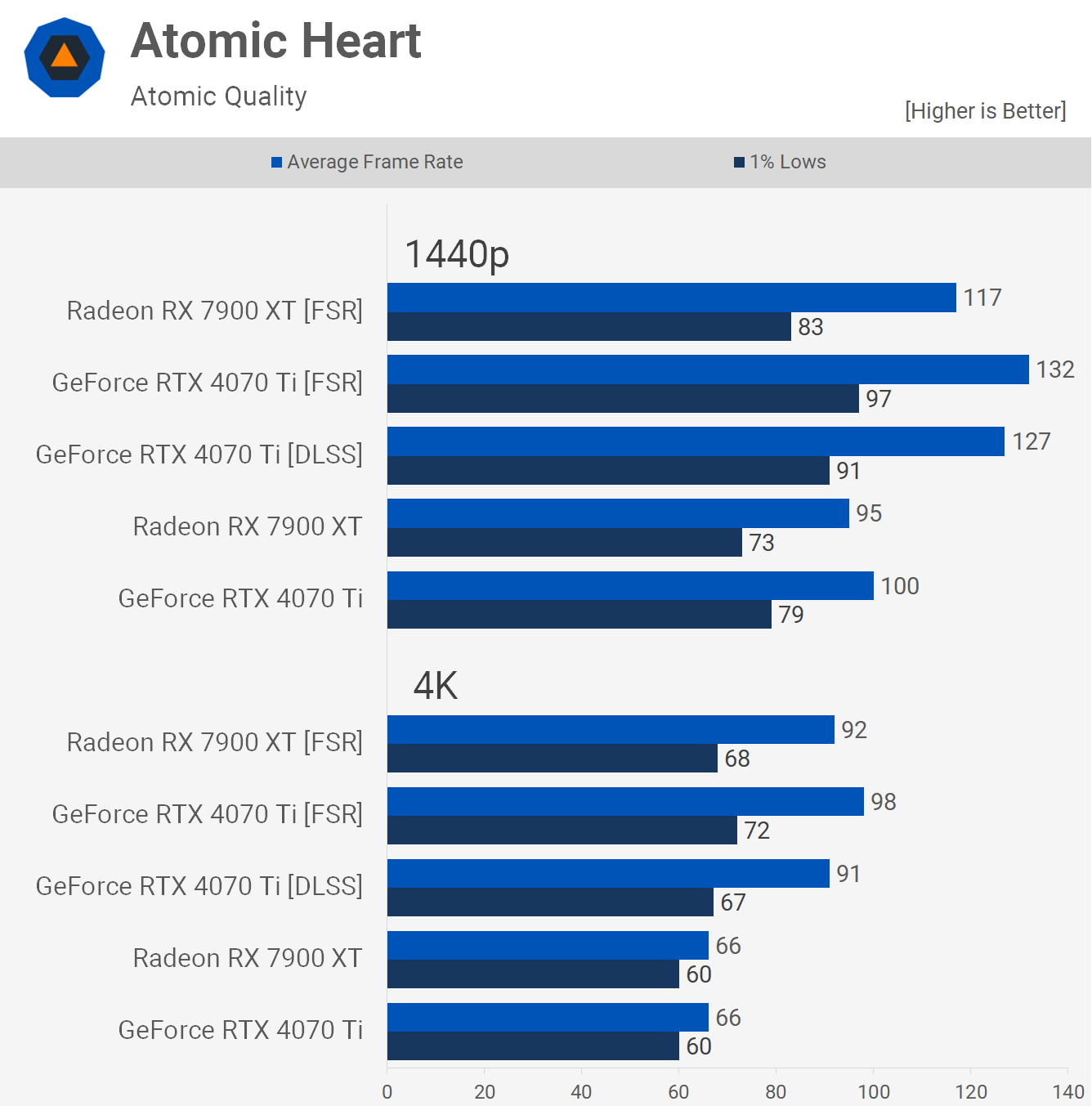

Atomic Heart, like F1 22, saw the 4070 Ti perform better with FSR as opposed to DLSS: in this game we're looking at a 4% uplift at 1440p and a more substantial 8% increase at 4K.

Had we tested the RTX 4070 Ti with DLSS, it would only have matched the 7900 XT, whereas in our graphs, with both GPUs using the same upscaling method, it was shown to be 7% faster.

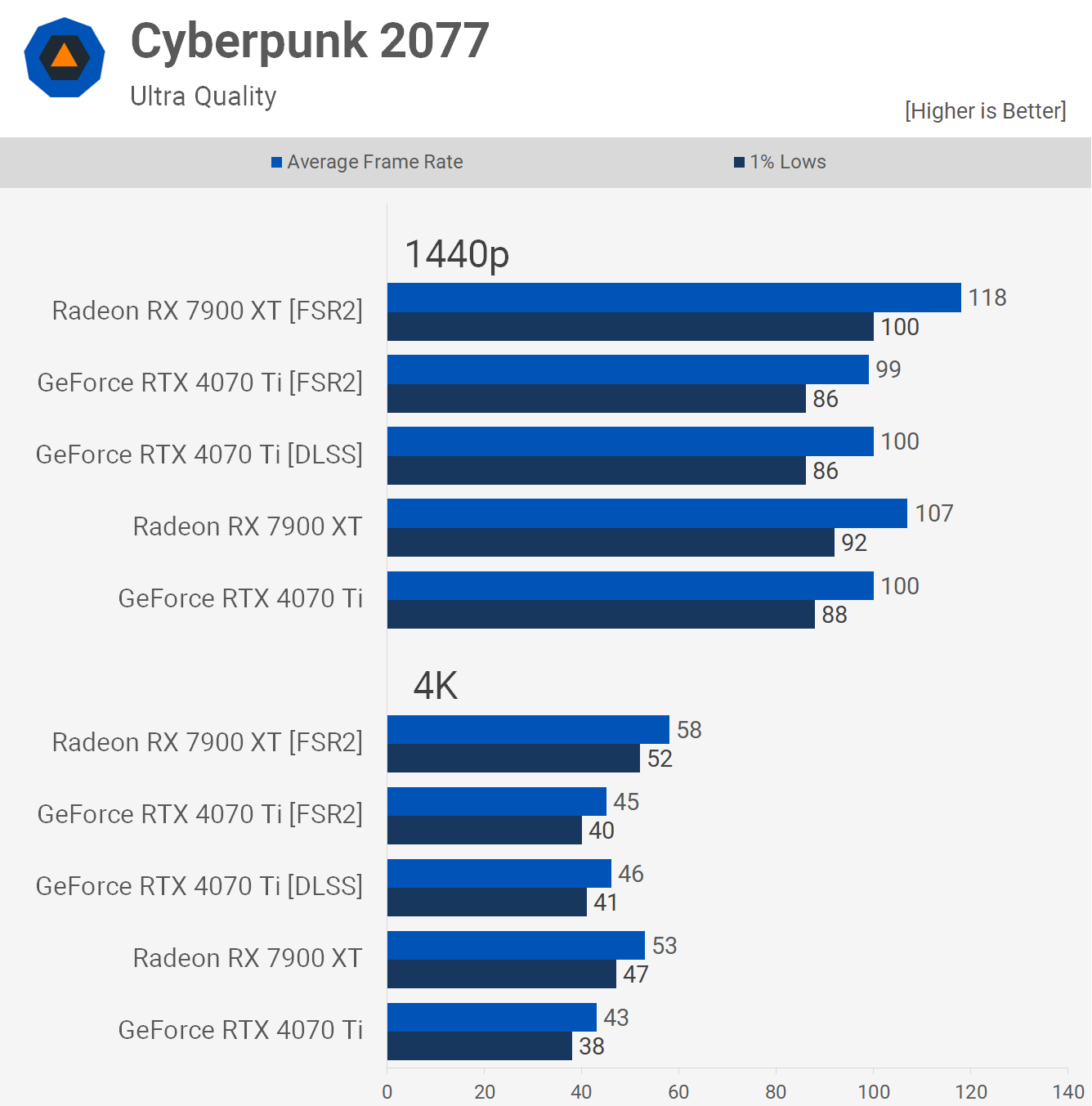

Last up we have Cyberpunk 2077, which we tested twice: once with RT disabled and once with the RT preset. First, using the standard ultra preset, we find no difference between FSR and DLSS on the 4070 Ti; performance is basically identical.

With RT enabled we find the same: FSR and DLSS deliver the same level of performance on the 4070 Ti. So from a performance standpoint, it doesn't matter which upscaling method you use in this game, as frame rates will be the same, though we highly recommend GeForce users opt for DLSS as it generally provides better image quality.

What We Learned

Well, that was a lot of drama and false accusations over nothing, wasn't it? Generally speaking, FSR and DLSS deliver a similar performance boost on GeForce GPUs, though we did find a few games where FSR was notably faster, which is important to note for benchmarking purposes. Had we tested the Radeon with FSR and the GeForce with DLSS and put the results in the same graph, the Nvidia GPU would have been at a disadvantage.

Let's be as clear as we can using the English language: we're by no means suggesting that FSR is better than DLSS in the games where it delivered higher frame rates. On the contrary, DLSS image quality is almost guaranteed to be superior, so GeForce owners will want to use Nvidia's proprietary upscaling technology. But for the purpose of a benchmark comparison, we wanted to provide you with apples-to-apples data, and we think we've now proven that we did just that.

Some may still want to argue, "if I'm going to buy an RTX 4070 Ti, I'm going to use DLSS, so FSR benchmarks are completely pointless for me," and we saw plenty of people making that argument. But as other users pointed out, review outlets such as ourselves are looking at relative performance, which is why we often test the way we do.

If you think we're trying to please everyone here, we're not; that's an impossible goal. Rather, we're trying to do what's right. We know that if we don't use any upscaling, people will still scream AMD shill because DLSS is better than FSR, having convinced themselves we're trying to pretend DLSS doesn't exist, despite us discussing and testing it over the past months and years.

We're damned if we use DLSS for GeForce and FSR for Radeon, as FSR often doesn't look as good and could give AMD a performance advantage, or, as we've just found, could even put GeForce GPUs at a disadvantage in games like F1 22 and Atomic Heart. Meanwhile, we're damned if we try to make an apples-to-apples comparison by using the same upscaling technique for all GPUs, as we found with our recent 4070 Ti vs. 7900 XT content.

Ultimately, after careful consideration, we've concluded that for big head-to-head GPU benchmarks we won't use upscaling at all, something many others also suggested. Again, this won't end the accusations; people will still cry bias, because their own biases mean you're either with them or against them in supporting the corporation they love. But it does solve one obvious issue for us: we'll actually be testing at the resolution we claim to be testing.

Testing at 4K with DLSS or FSR isn't actually testing at 4K, and if we're going to claim to be benchmarking at 1440p and 4K, we should really make sure we're rendering at those resolutions. So there, problem solved. As for product reviews, such as a day-one review of the GeForce RTX 4070 Ti, we will still include DLSS benchmarks in the section of the review that looks at upscaling.
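To put numbers on why upscaled "4K" isn't really 4K: in their quality modes, both DLSS and FSR 2 render at roughly two-thirds of the output resolution on each axis (a 1.5x per-axis scale factor) and then upscale. The minimal Python sketch below assumes that 1.5x factor; the helper name `internal_resolution` is hypothetical, and individual games or SDK versions may round the result slightly differently.

```python
QUALITY_SCALE = 1.5  # assumed per-axis scale factor for DLSS/FSR 2 quality mode


def internal_resolution(out_w: int, out_h: int, scale: float = QUALITY_SCALE) -> tuple[int, int]:
    """Approximate internal render resolution before upscaling to out_w x out_h."""
    return round(out_w / scale), round(out_h / scale)


for name, (w, h) in {"1440p": (2560, 1440), "4K": (3840, 2160)}.items():
    iw, ih = internal_resolution(w, h)
    share = (iw * ih) / (w * h) * 100  # fraction of output pixels actually rendered
    print(f"{name} quality mode: renders {iw}x{ih} ({share:.0f}% of output pixels)")
```

Under that assumption, a 4K quality-mode result is rendered internally at 2560x1440, less than half the pixels of native 4K, which is why we'd rather not label it a 4K benchmark.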

It would be nice if we could have better discussions about this sort of stuff. Just because someone has a different opinion doesn't mean they're instantly morally corrupt; they just have a different opinion. They might even be wrong, but screaming shill isn't going to help solve the problem, and it certainly doesn't strengthen your position. It also helps to have some supporting evidence, because relying on marketing material from a beloved corporation isn't a great strategy either. Anyway, food for thought, and this is where we're going to end this one.