The latest series of Ryzen CPUs has been out for six weeks, yet only about a week ago were we able to get our hands on the Ryzen 7 3800X for the first time. In the meantime we received numerous comments asking us to review it and compare it to the 3900X, 3700X and 3600, all of which we've reviewed by now.

So what's the deal? Why has the 3800X been so hard to get, how does it differ from the 3700X, and why has the TDP increased by over 60% for a 100 MHz increase in boost frequency? Apparently it comes down to binning and yields that may not be as good as AMD hoped, though demand may also have played a role.

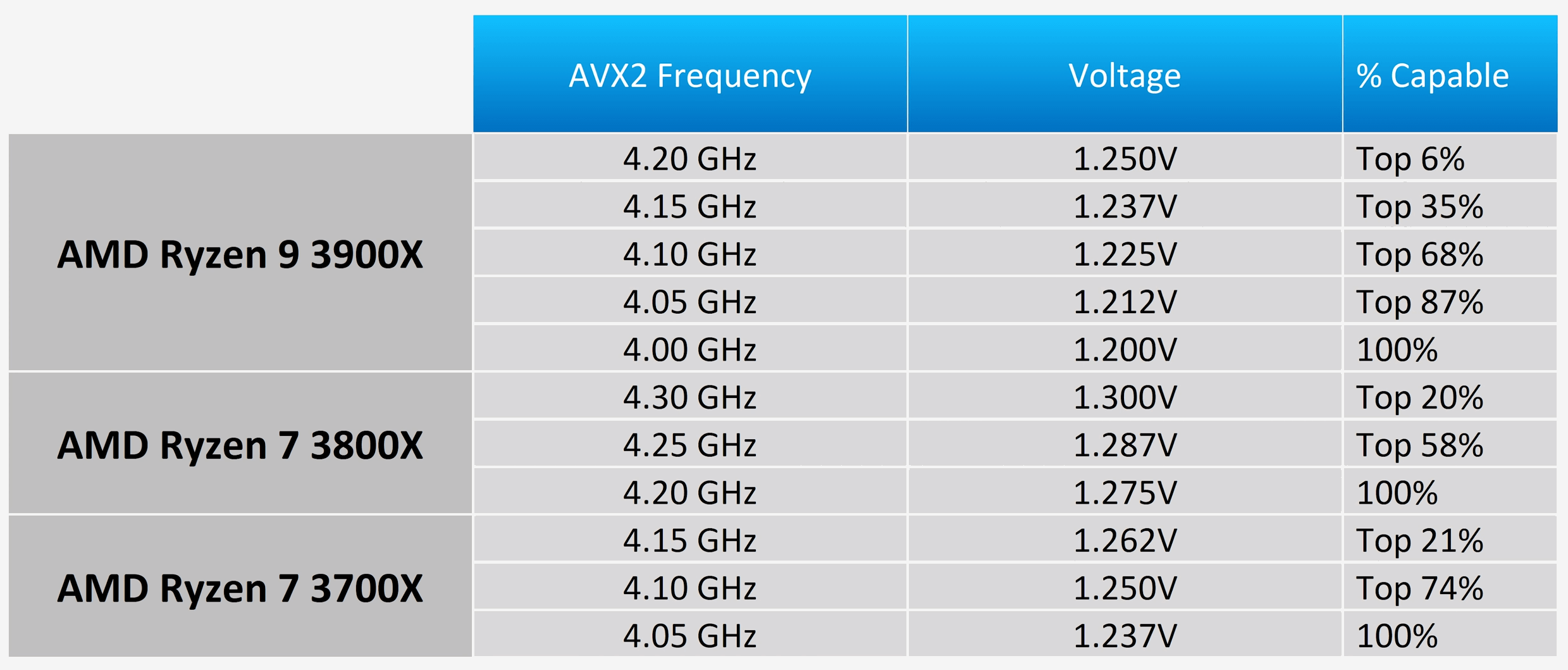

Silicon Lottery recently released some Ryzen 3000 binning data, and it suggests the better-quality silicon has been reserved for the 3800X. The top 20% of all 3800X processors tested passed its 4.3 GHz AVX2 stress test, whereas the top 21% of 3700X processors were only stable at 4.15 GHz. Also, every 3800X passed the test at 4.2 GHz, while every 3700X was only good for 4.05 GHz, meaning the 3800X has about 150 MHz more overclocking headroom.

Silicon Lottery AMD Ryzen 3000 Binning Results

In other words, the average 3800X should overclock better than the best 3700X processors, but per their testing we're still talking about a minor 6% frequency difference between the absolute worst 3700X and the absolute best 3800X. For more casual overclockers like us the difference will likely be even smaller. Our 3700X appears stable in our in-house stress test and to date hasn't crashed once at 4.3 GHz, which is the same frequency limit we hit with our retail 3800X. As for the TDP, that's confusing to say the least, but we'll go over some performance numbers first and then discuss what we think is going on.
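To put the Silicon Lottery figures in perspective, here's a quick back-of-the-envelope check in Python. It only uses the bin frequencies quoted above. The variable names are our own, and the "top" values are bin thresholds, not the absolute best chips.

```python
# AVX2 stress-test frequencies (GHz) from the Silicon Lottery binning data quoted above.
R7_3700X_ALL = 4.05  # frequency every 3700X sample passed
R7_3700X_TOP = 4.15  # frequency the top 21% of 3700X samples passed
R7_3800X_ALL = 4.20  # frequency every 3800X sample passed
R7_3800X_TOP = 4.30  # frequency the top 20% of 3800X samples passed


def pct_gain(new: float, old: float) -> float:
    """Percentage increase going from `old` to `new`."""
    return (new / old - 1.0) * 100.0


# Extra guaranteed overclocking headroom for the 3800X, in MHz.
headroom_mhz = round((R7_3800X_ALL - R7_3700X_ALL) * 1000)
# Is the lowest 3800X bin above the highest 3700X bin?
lowest_3800x_bin_beats_top_3700x_bin = R7_3800X_ALL > R7_3700X_TOP
# Spread between the worst 3700X and the best 3800X.
worst_to_best = pct_gain(R7_3800X_TOP, R7_3700X_ALL)

print(f"Guaranteed headroom: {headroom_mhz} MHz")
print(f"Lowest 3800X bin > top 3700X bin: {lowest_3800x_bin_beats_top_3700x_bin}")
print(f"Worst 3700X to best 3800X: {worst_to_best:.1f}%")
```

This prints a 150 MHz headroom, confirms the lowest 3800X bin sits above the top 3700X bin, and puts the worst-to-best spread at roughly 6.2%.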

The benchmarks you see below were run on the Gigabyte X570 Aorus Xtreme with 16GB of DDR4-3200 CL14 memory. The graphics card of choice for CPU testing is of course the RTX 2080 Ti, so let's get into the numbers.

Benchmarks

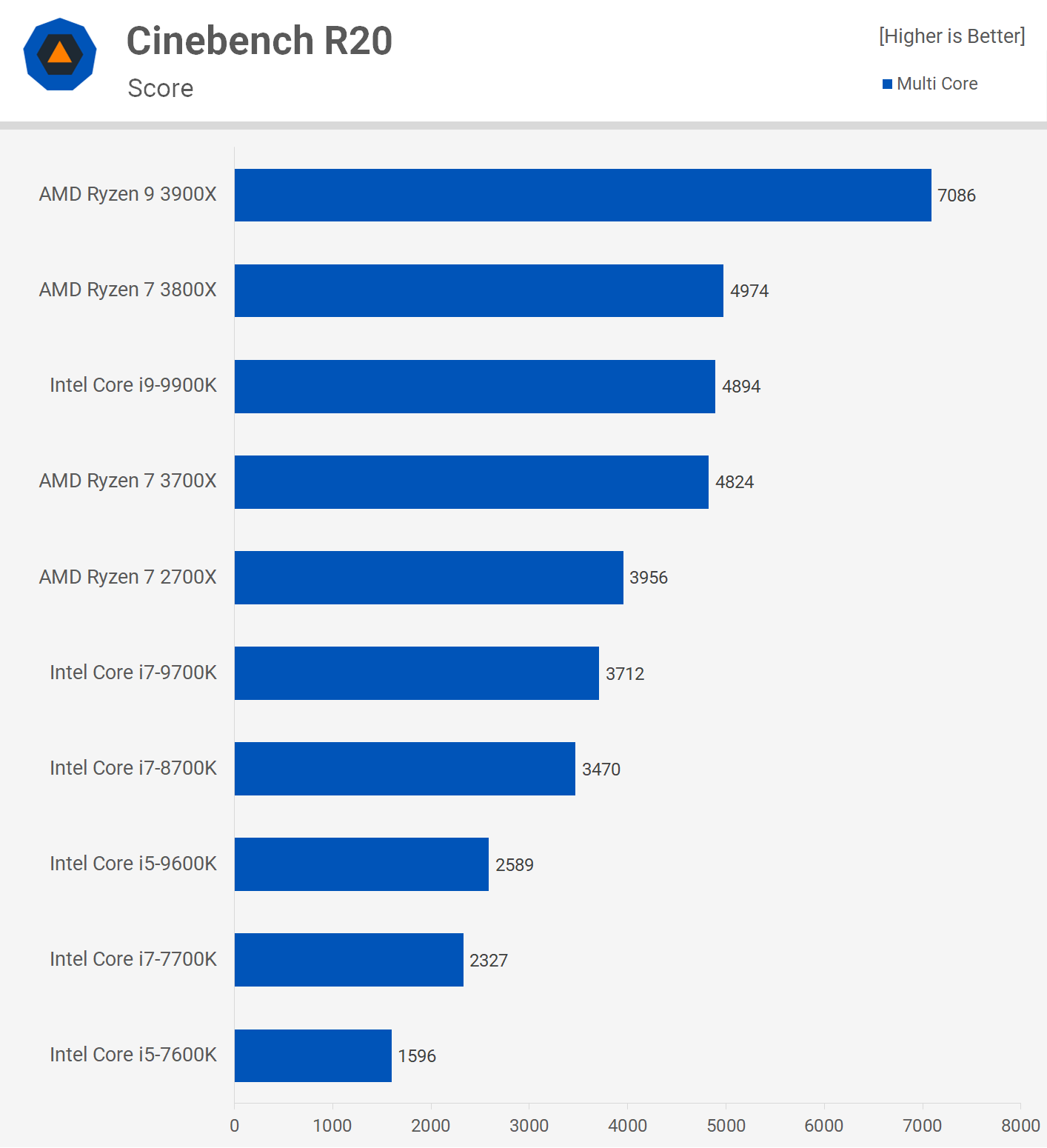

First up is Cinebench R20, and hold onto your hats: we're looking at a 3% increase in multi-core performance with the 3800X. But hey, at least it's faster than the 9900K now.

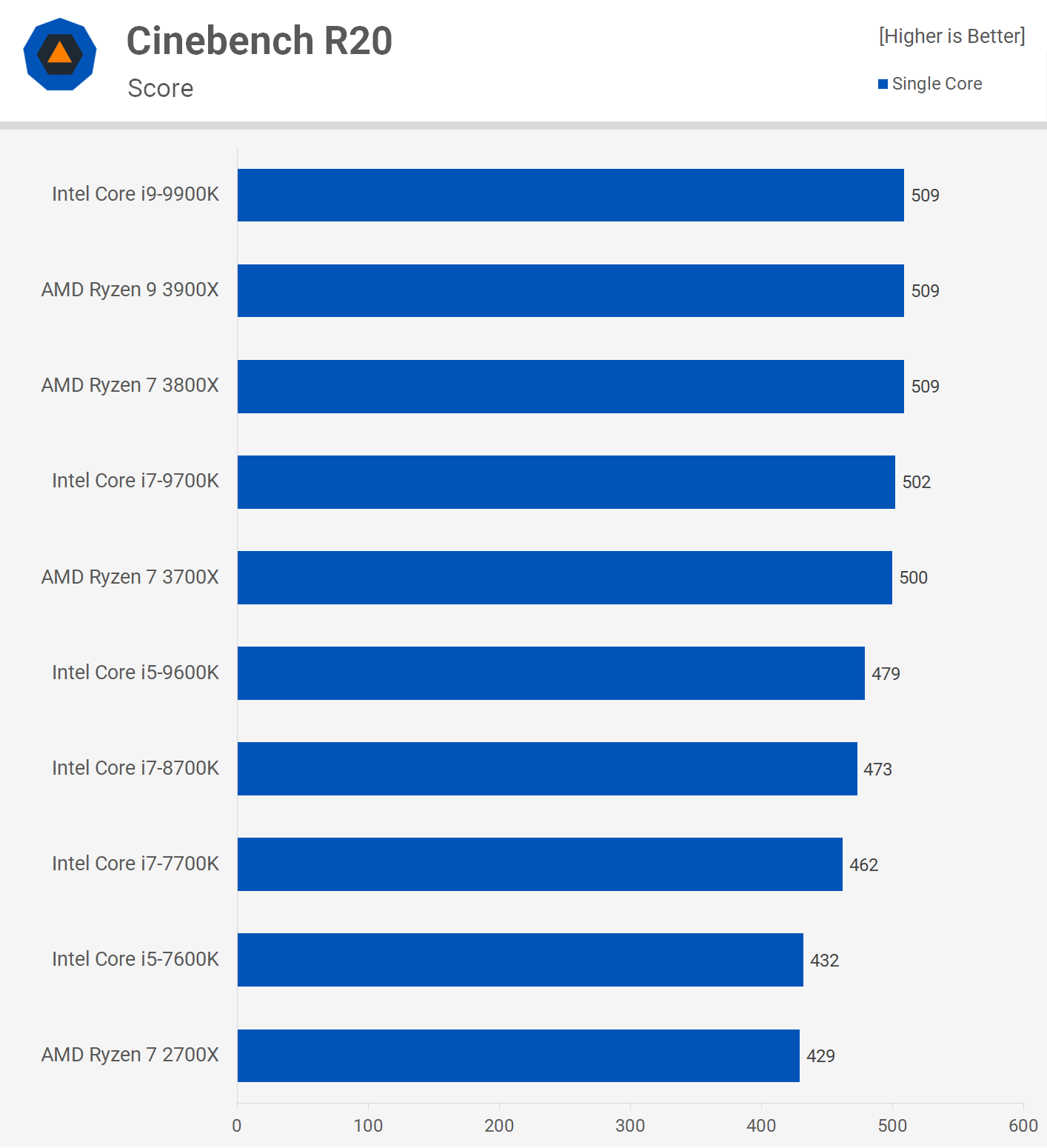

As for single-core performance, we're looking at around a 2% boost here, allowing the 3800X to match the 3900X and 9900K.

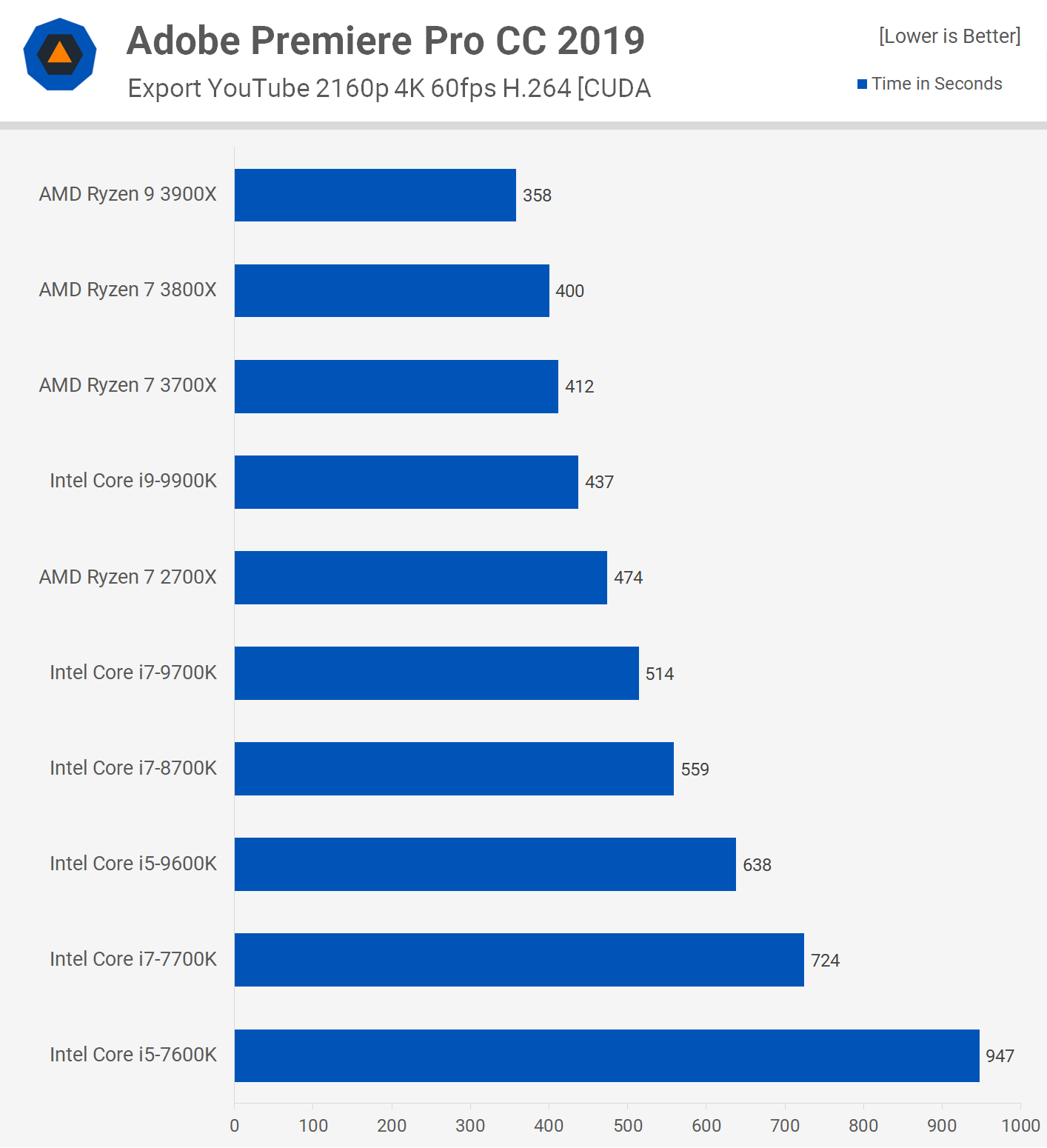

Encoding time in Premiere is reduced by 3%, shaving 12 seconds off our test. Given what we saw in Cinebench R20, this result isn't surprising.

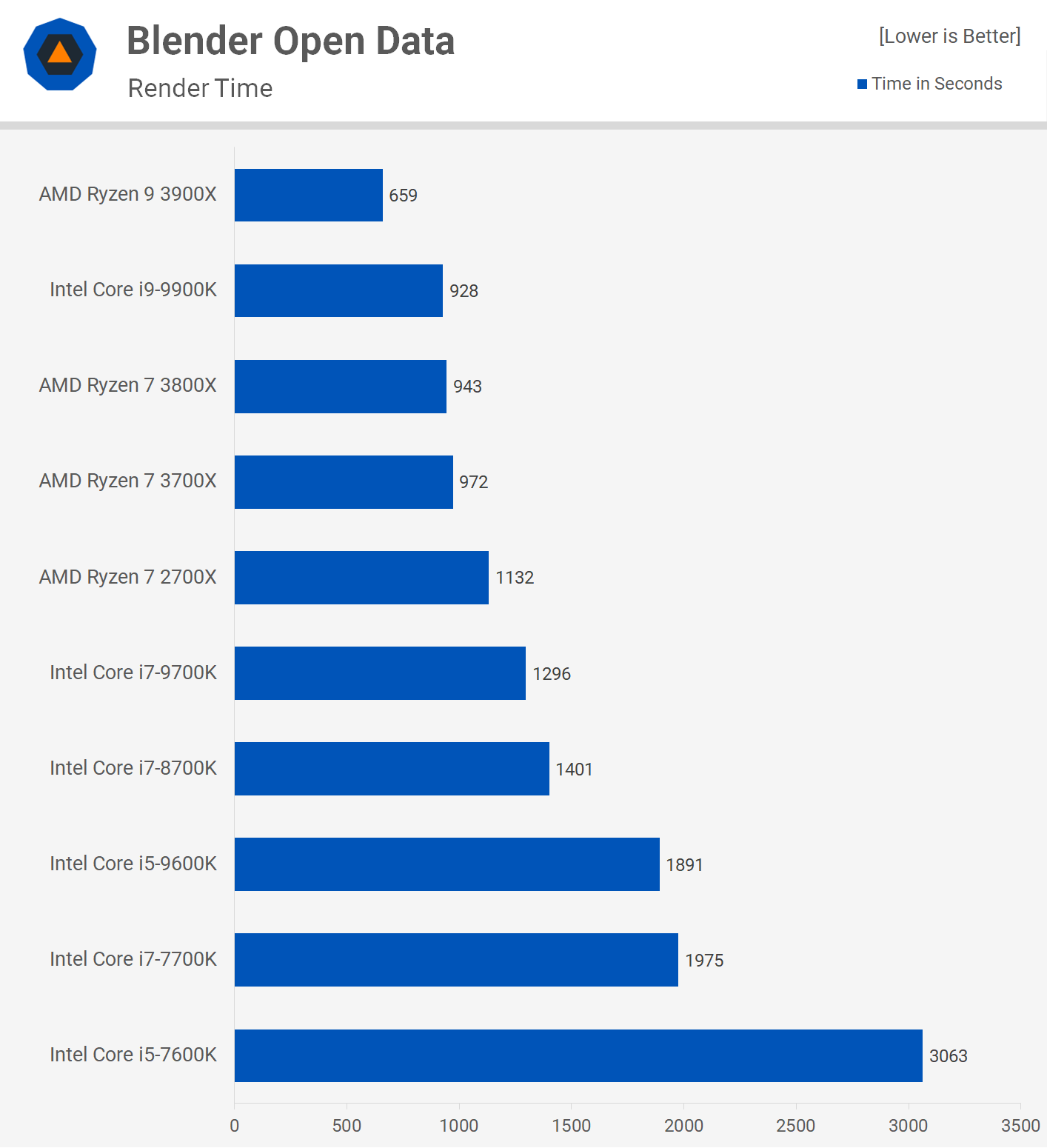

We also see a 3% reduction in render time when testing with Blender Open Data, so not much more to say really. Let's quickly check out a few games before jumping into power consumption and thermals.

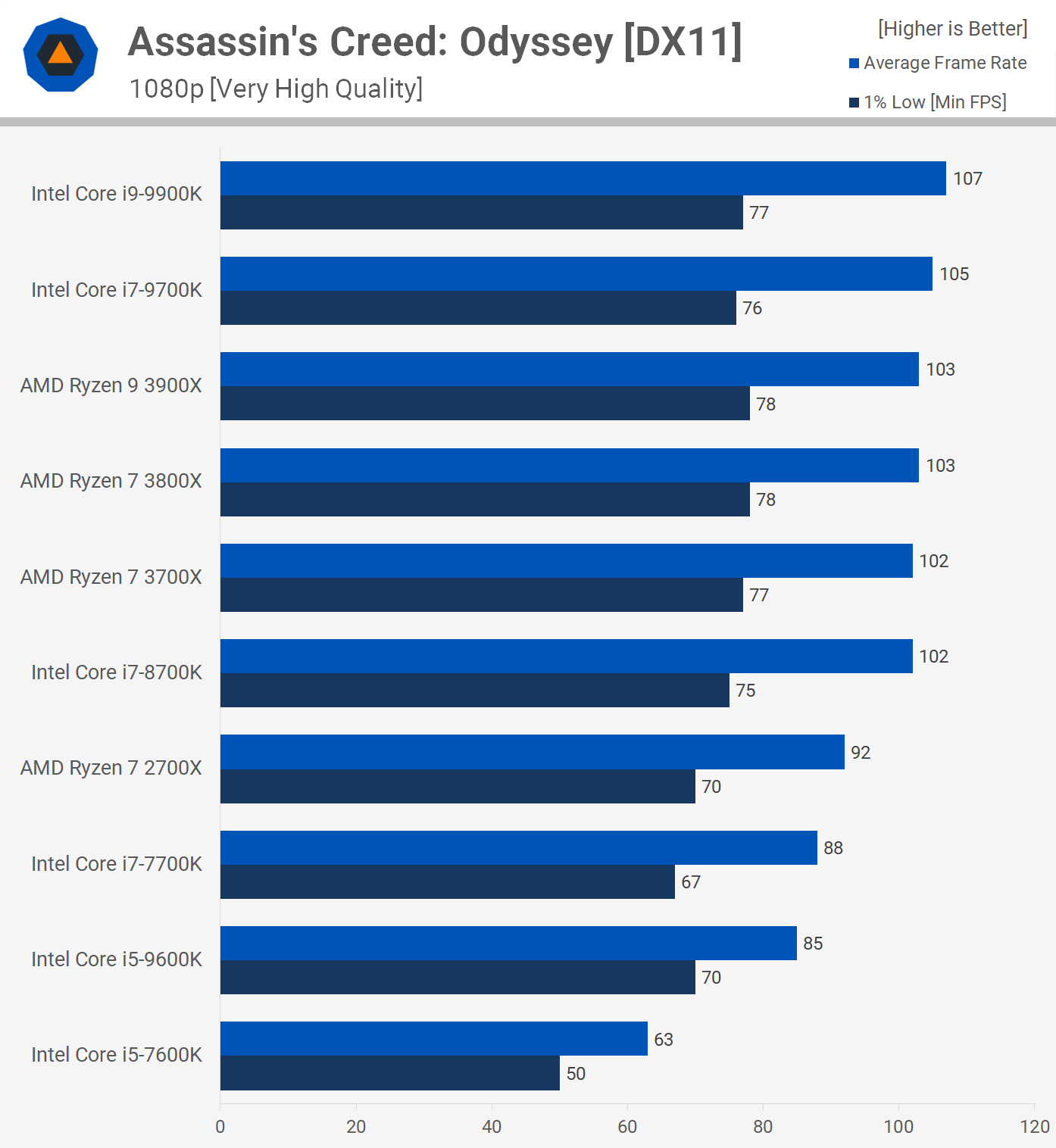

In Assassin's Creed Odyssey, the differences between the 3700X, 3800X and 3900X are within the margin of error. Needless to say, they all deliver a similar gaming experience in this title.

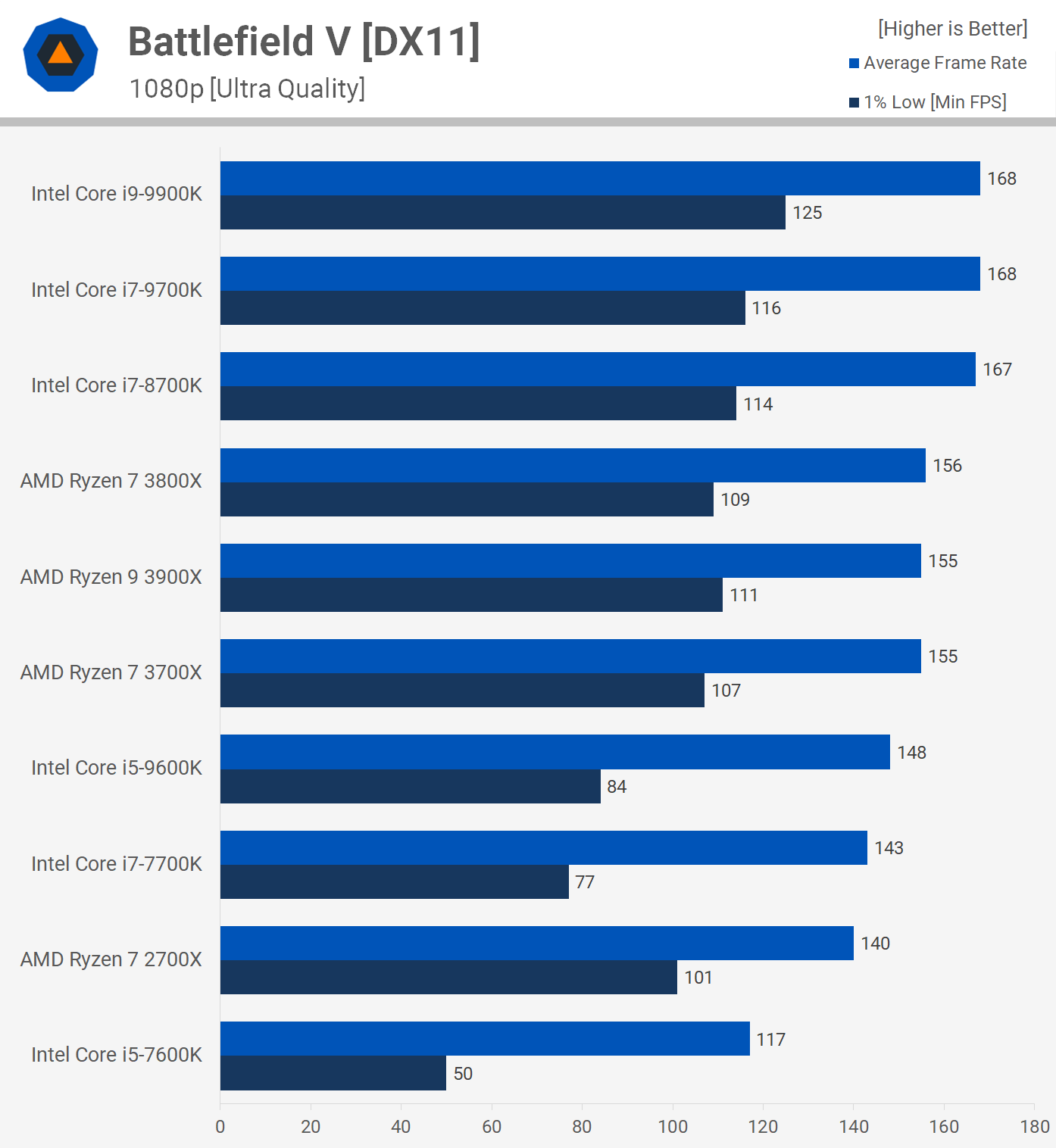

Much the same is seen when testing with Battlefield V. The 3800X is 1-2 fps faster than the 3700X thanks to a very small increase in operating frequency, but again, there's no way you'll notice this performance difference.

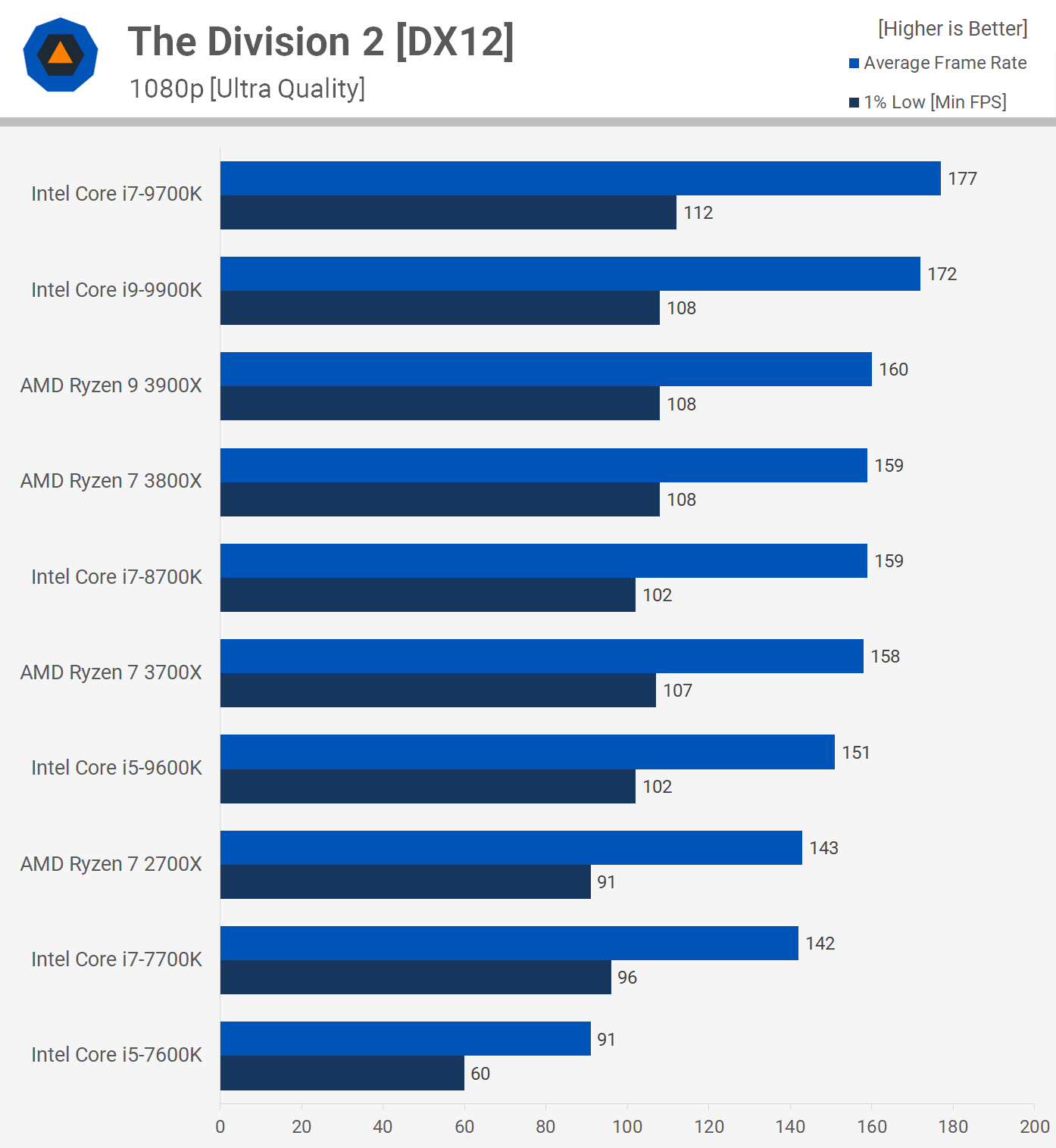

Virtually identical performance is also seen when testing with The Division 2, where we're looking at Core i7-8700K-like performance.

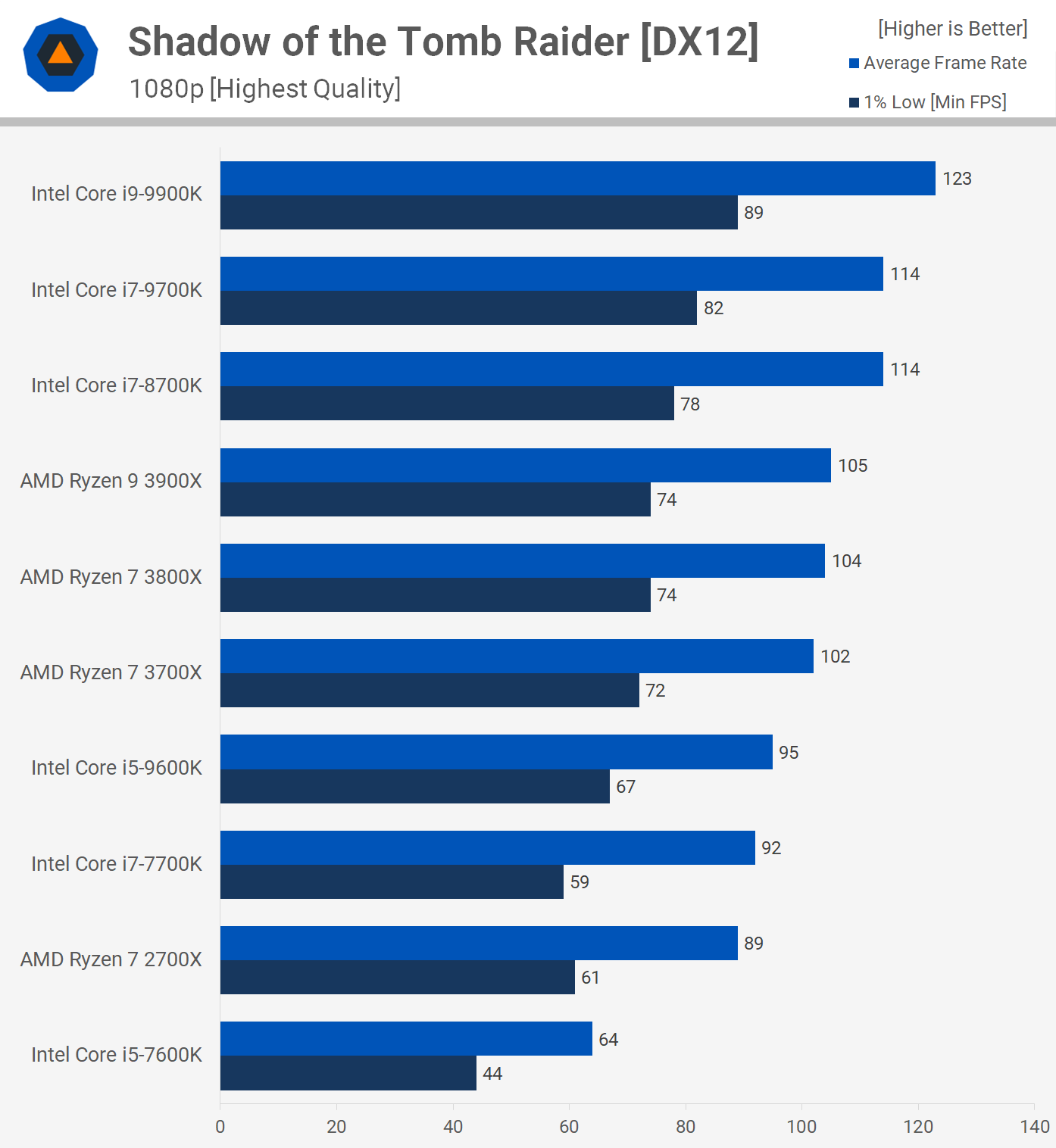

Finally, we have Shadow of the Tomb Raider and here the 3800X was 2fps faster than the 3700X, so let's move on to power consumption.

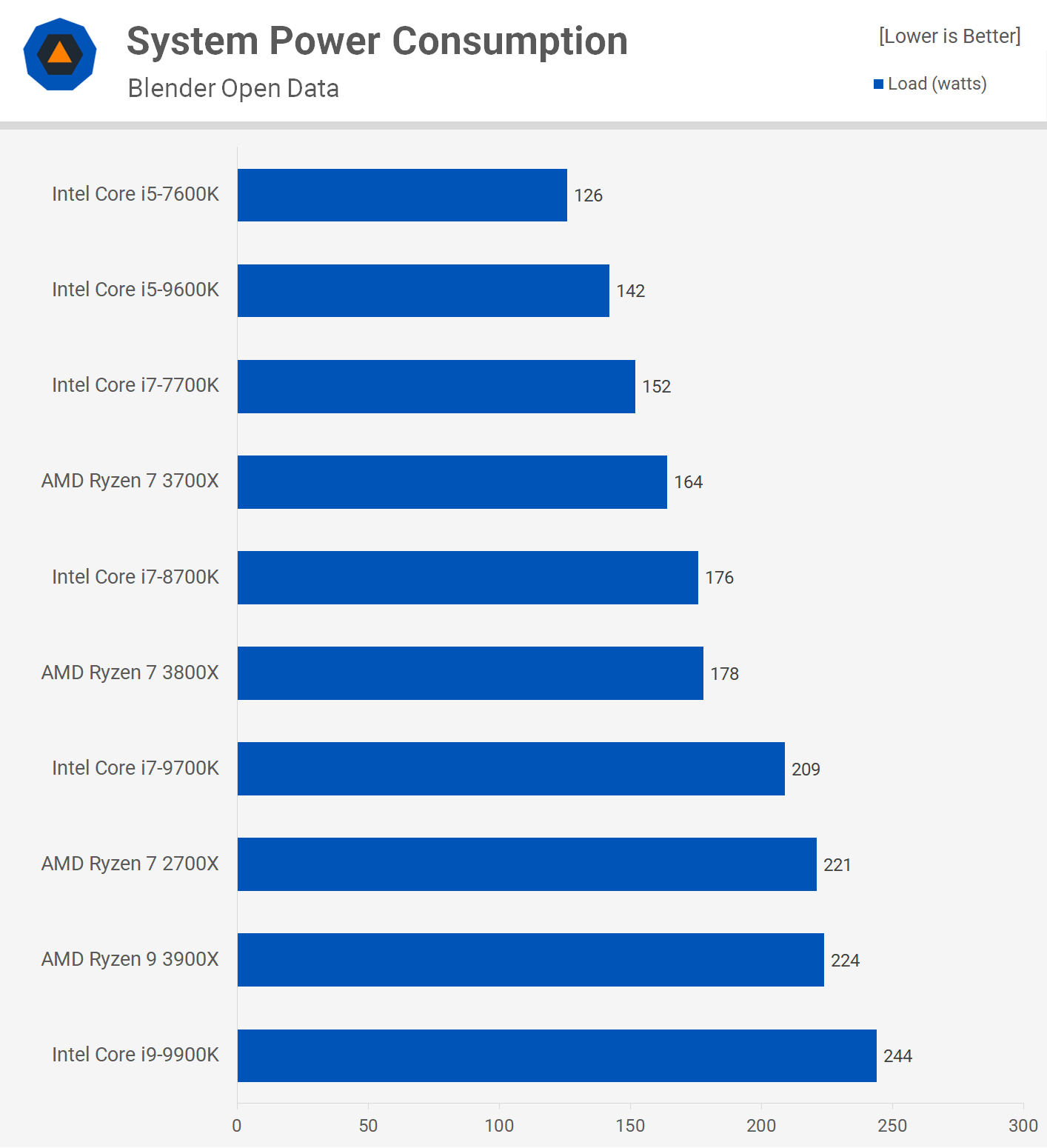

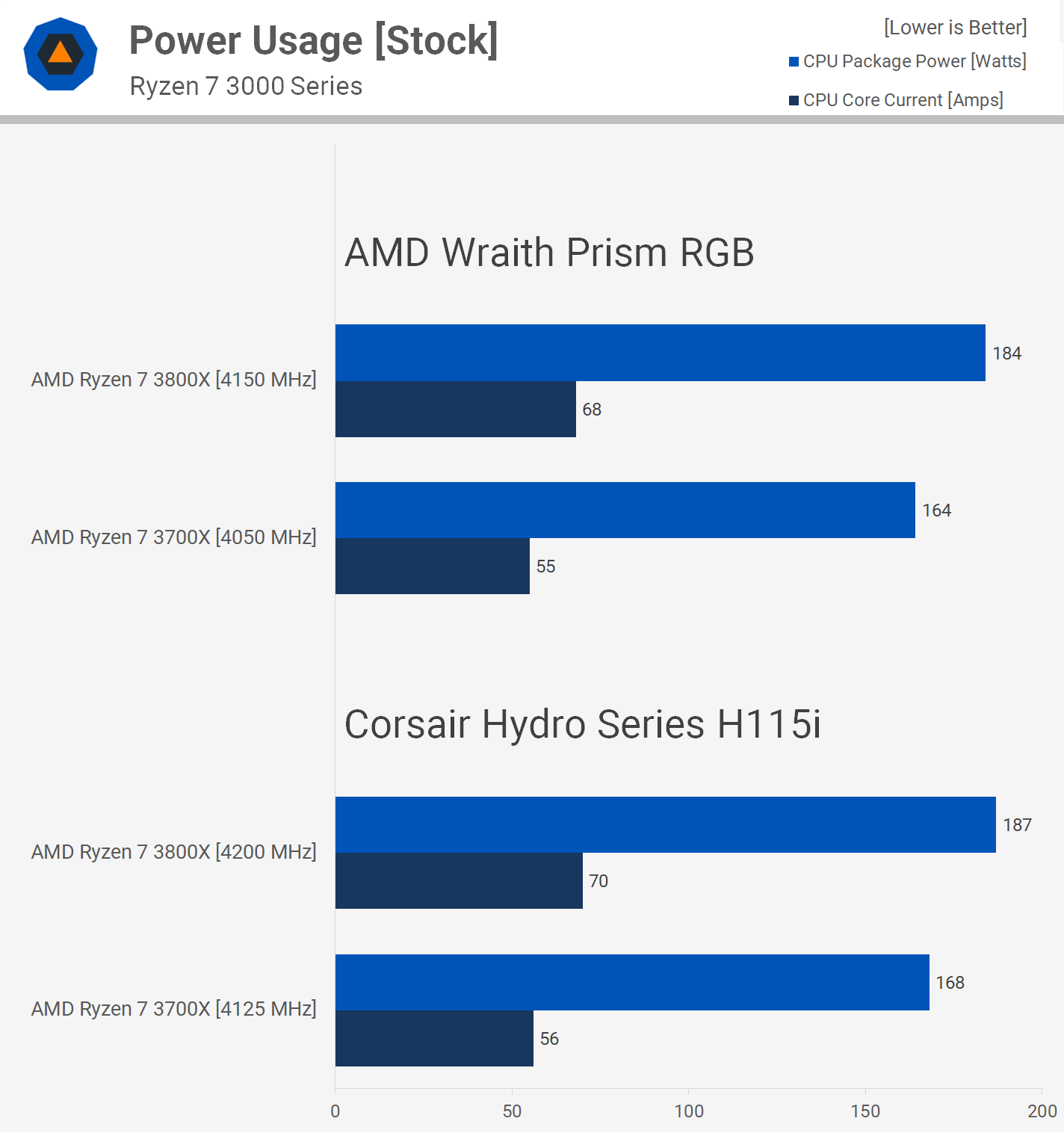

Here we see a 9% increase in power consumption, which is surprising given we're only talking about a 2-3% increase in operating frequency. However, that frequency boost comes with an increase in voltage, and this is likely why the 3800X was a little more power hungry than you might have expected.

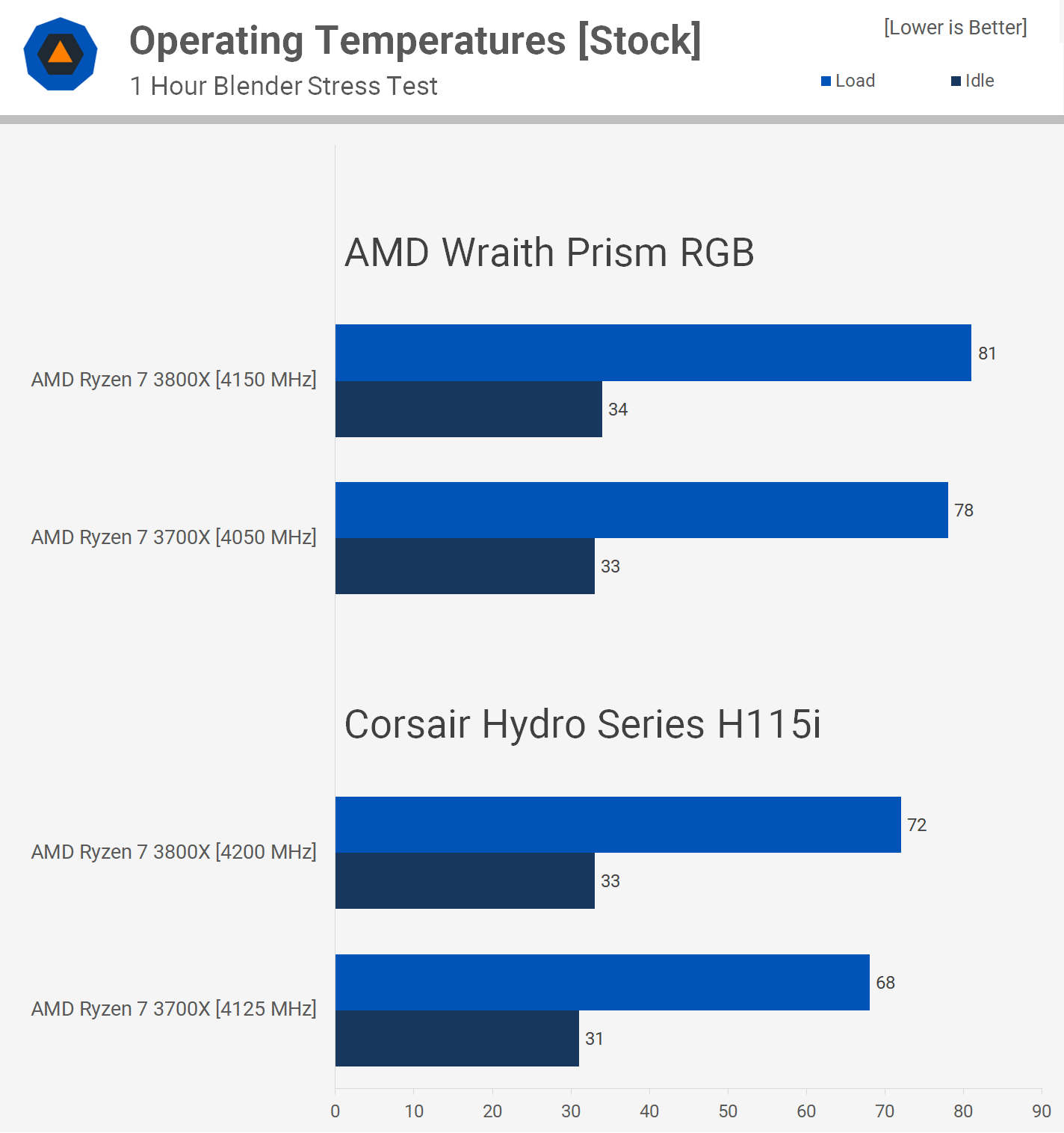

As a result of that increased power consumption the 3800X runs around 3 degrees hotter with the box cooler and 4 degrees hotter with the Corsair H115i Pro. Interestingly, with the box cooler the 3800X clocked 100 MHz higher, but just 75 MHz higher with the Corsair AIO.

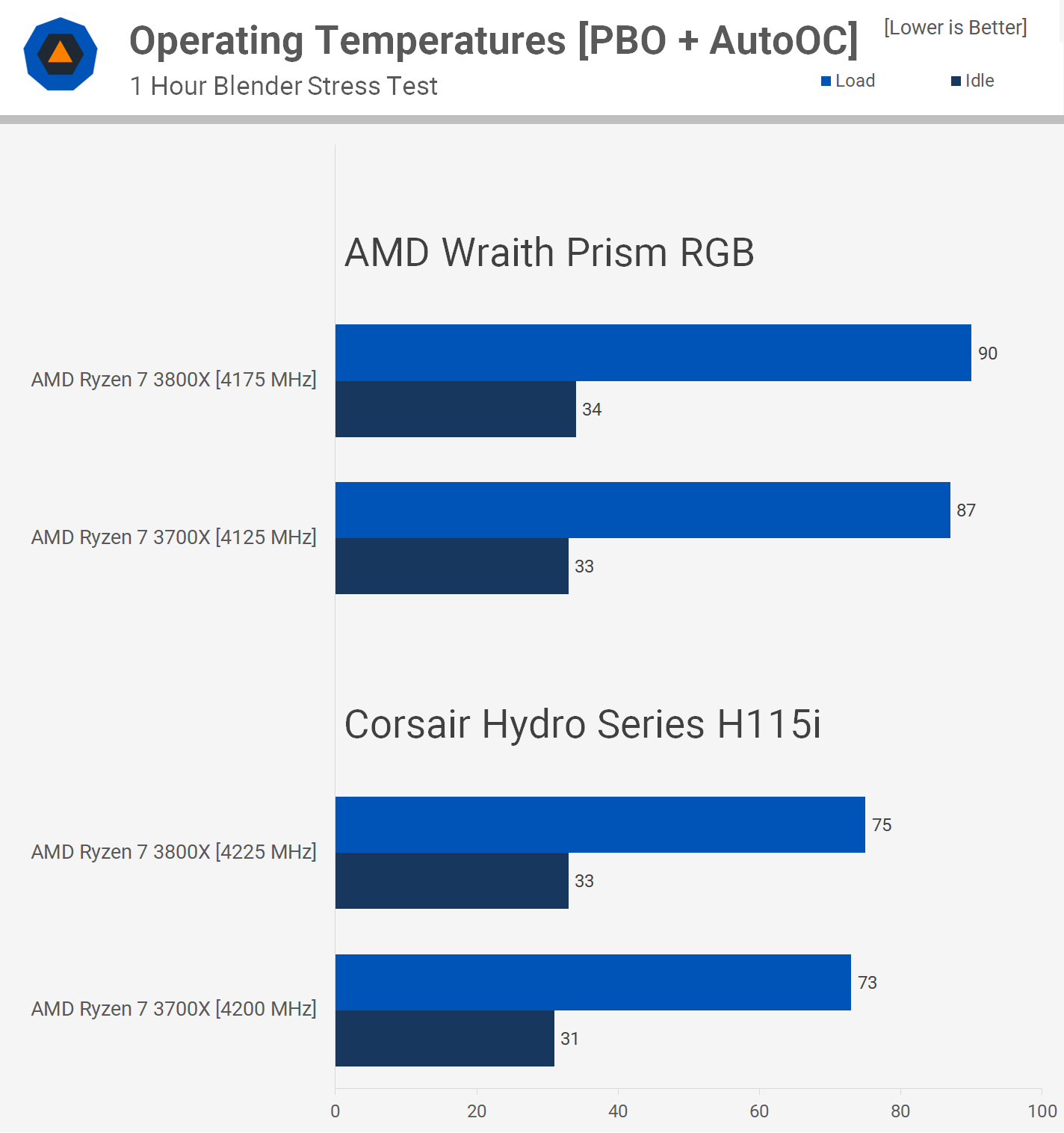

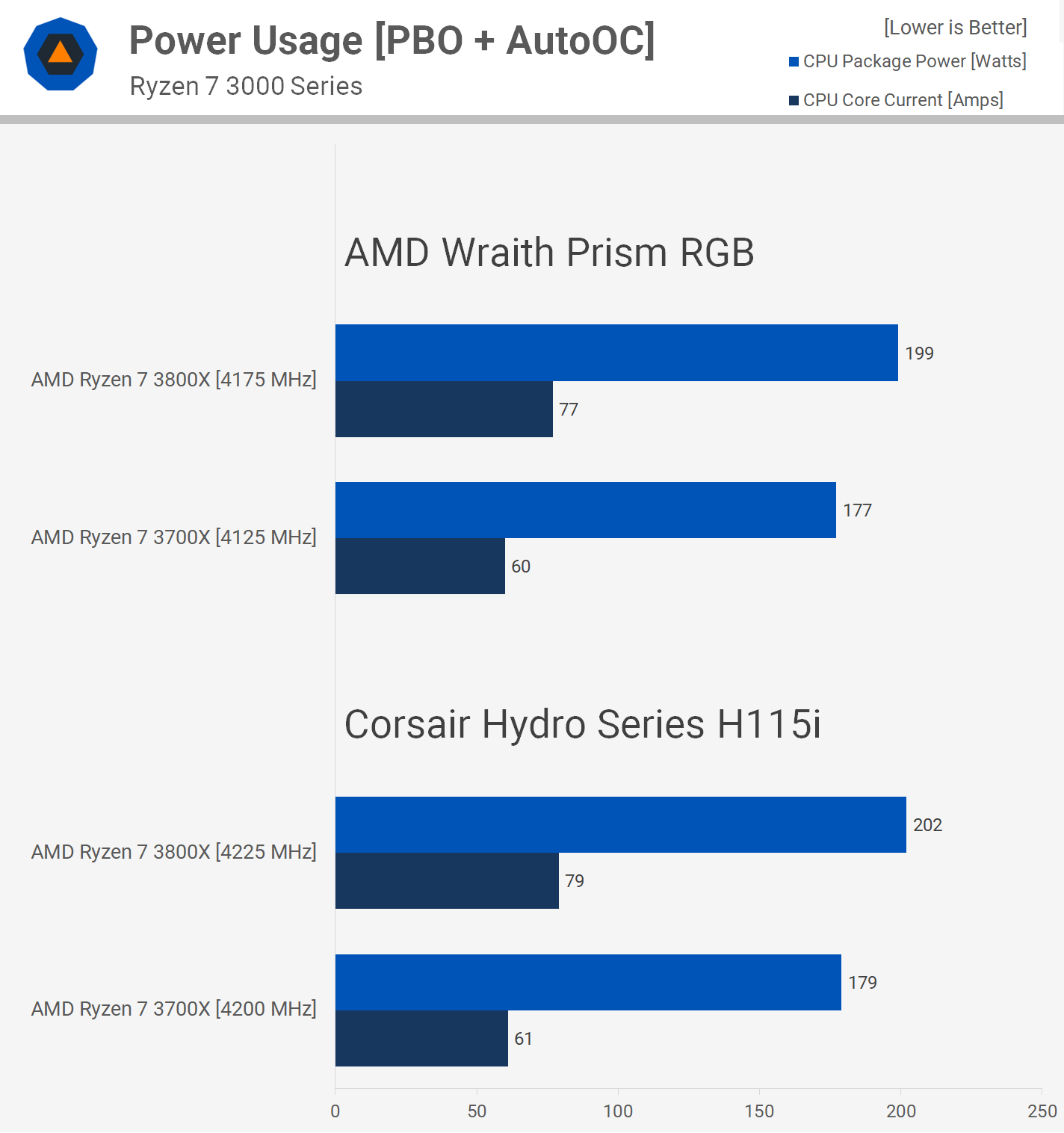

With PBO enabled, the 3800X still ran around 2-3 degrees hotter, but now it's only clocking 25-50 MHz higher than the 3700X. So if you want to turn your 3700X into a 3800X, just enable PBO.

The 3800X sucks down quite a bit more power than the 3700X, at least relative to the extra performance it offers. We saw up to a 3% performance improvement, and that cost us around a 12% increase in power consumption.

The margins remain much the same with PBO enabled: the 3800X still consumes around 12% more power than the 3700X.
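Those two numbers can be folded into a single efficiency figure. The sketch below is a simple ratio that assumes the best-case 3% performance gain and the roughly 12% power increase measured above. It isn't a metric from our charts.

```python
# Best-case figures from our testing: 3800X relative to 3700X.
perf_ratio = 1.03   # up to 3% more performance
power_ratio = 1.12  # around 12% more power


def perf_per_watt_ratio(perf: float, power: float) -> float:
    """Relative performance-per-watt, given relative performance and relative power."""
    return perf / power


eff = perf_per_watt_ratio(perf_ratio, power_ratio)
print(f"3800X perf/W relative to 3700X: {eff:.2f}")
print(f"Efficiency loss: {(1 - eff) * 100:.0f}%")
```

Even in the 3800X's best case, that works out to about 0.92, or roughly 8% less work done per watt than the 3700X.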

What's What?

That wasn't the most exciting benchmark session ever, but it did answer the question: what's the difference?

As it turns out, not a lot. During heavy workloads the 3800X clocks 100-150 MHz higher, which amounts to a 2.5-4% frequency increase. That increased CPU power consumption by around 12%, so it ran a few degrees hotter and was potentially a bit louder.

For this minor performance increase AMD has raised the MSRP by 21%, from $330 to $400, so if we ignore the TDP, the biggest percentage increase comes from the price. And we believe that's all you need to hear: you'll get 3% more performance at best by spending 21% more of your money.
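Here is the same value argument as a quick Python check. It assumes the $330 and $400 MSRPs and the best-case 3% uplift quoted in this review, and it treats "performance per dollar" as a simple ratio of those two figures.

```python
PRICE_3700X = 330  # US$ MSRP
PRICE_3800X = 400  # US$ MSRP
PERF_RATIO = 1.03  # best-case performance uplift of the 3800X

price_ratio = PRICE_3800X / PRICE_3700X
price_increase_pct = (price_ratio - 1) * 100
# Performance per dollar of the 3800X relative to the 3700X (1.0 = parity).
perf_per_dollar = PERF_RATIO / price_ratio

print(f"Price increase: {price_increase_pct:.0f}%")
print(f"Relative perf per dollar: {perf_per_dollar:.2f}")
```

That's about 0.85, meaning every dollar spent on the 3800X buys roughly 15% less performance than it would on the 3700X.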

If you're interested in that deal we have a heap of old Xeon systems... you can have them at a really good price.

Moving on, let's quickly talk about the 105w TDP, which is 62% higher than the 65w TDP rating of the 3700X. AMD basically seems to be saying this: with a cooler rated to dissipate 65 watts of heat, the 3700X will run no lower than its base clock. The 3800X, whose base clock is 300 MHz higher, may not be able to maintain 3.9 GHz with a 65 watt cooler.
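To show how lopsided that is, this sketch compares the TDP increase with the base clock increase. It uses only the figures given here (65w vs 105w, and a 3.9 GHz 3800X base clock that is 300 MHz above the 3700X's).

```python
TDP_3700X, TDP_3800X = 65, 105         # watts
BASE_3800X_MHZ = 3900
BASE_3700X_MHZ = BASE_3800X_MHZ - 300  # the 3700X base clock is 300 MHz lower

tdp_increase = (TDP_3800X / TDP_3700X - 1) * 100
base_increase = (BASE_3800X_MHZ / BASE_3700X_MHZ - 1) * 100

print(f"TDP increase: {tdp_increase:.0f}%")
print(f"Base clock increase: {base_increase:.1f}%")
```

A roughly 62% higher TDP rating buys an 8.3% higher base clock.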

The confusion creeps in when AMD skips its 95 watt rating and goes straight to 105 watts for the 3800X. We accept that the 3800X might not be able to sustain 3.9 GHz with a 65 watt cooler, but surely it can with a 95 watt cooler.

As far as we can tell, TDP is a metric for OEMs, who typically try to cut as many corners as possible. If an OEM puts a 65w cooler on a 105w part and some buyer says "I'm not hitting 3.9 GHz," AMD can respond, "well, the OEM isn't meeting the base spec for the cooler."

At the end of the day, we don't understand why AMD advertises TDP at all when it's of so little use to consumers. AMD might be better off simply advertising the rating of the cooler it bundles with each processor, which would tell customers what kind of cooler performance they'll need if they want to upgrade. For example, the Wraith Prism is a 105w cooler, so if you wish to upgrade you'll want something rated above that, say 150 watts.

Buying advice in short: we highly recommend avoiding the 3800X and grabbing the 3700X instead. If you find it necessary, upgrade the box cooler with the money saved. The Ryzen 5 3600 remains the king of value, bar none, while the 3900X offers more cores for productivity work. Gaming may not benefit, though, as we observed in our GPU scaling benchmark.