In our last massive CPU and GPU scaling benchmark, we put Zen 3 series processors to the test, and while the results weren't terribly surprising, they were useful and most of you seemed to agree. We also did say that those "boring" results would pave the way for far more interesting benchmarking, so here we are today.

This time, we're comparing AMD's 6-core Ryzen processors using a range of quality settings, resolutions and GPUs, and the results are extremely interesting... and not just for the reasons we thought they would be.

For this one we want to see how the Ryzen 5 1600X, 2600X, 3600X and 5600X compare in half a dozen games using the GeForce RTX 3090, RTX 3070, Radeon RX 5700 XT and 5600 XT, using both the ultra and medium quality presets at 1080p, 1440p and 4K.

There's a lot to go over here, so we're going to jump into the results as quickly as possible.

If you're wondering why we didn't use more Radeon GPUs like the RX 6900 XT and 6800 in place of the RTX 3090 and RTX 3070, there are two main reasons: most of you are interested in buying a GeForce 30 series GPU and most of you have, at least according to a recent poll we ran. Also, it shouldn't matter as they represent a similar performance tier, though as you're about to see our decision to use the Ampere GPUs has led to the discovery of some very interesting results.

Our test platform consisted of 32GB of DDR4-3200 CL14 memory in a dual-channel, dual-rank configuration for all CPUs, on the Gigabyte X570 Aorus Xtreme. Cooling was handled by the Corsair iCUE H115i Elite. Besides loading XMP, no other changes were made to the BIOS. Let's get into the results...

Benchmarks

Cyberpunk 2077

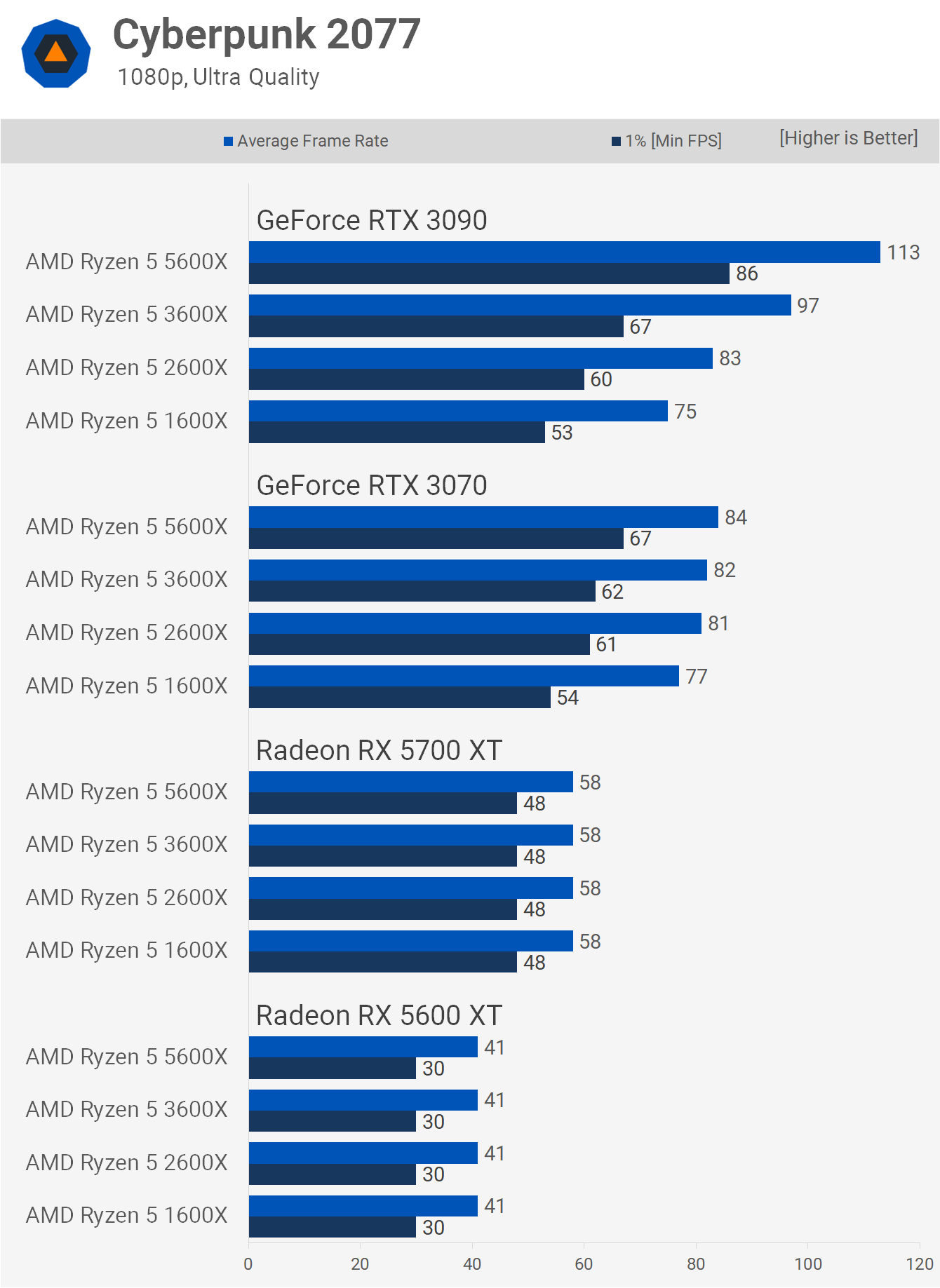

Kick starting our session is Cyberpunk 2077 using the ultra quality preset at 1080p, and the results are mostly as expected. Using the Radeon 5700 XT or 5600 XT all four CPUs delivered the same level of performance, though we are looking at sub-60 frame rates with even the 5700 XT.

Stepping up to the RTX 3070, we see the 5600X outperform the 3600X by an 8% margin when comparing 1% low data, while it was 10% faster than the 2600X and 24% faster than the 1600X. Those margins only increase with the RTX 3090: the 5600X is now 28% faster than the 3600X when comparing the 1% low results, 43% faster than the 2600X and a massive 62% faster than the 1600X, which limited average frame rate performance to around 75 fps.

You might have noticed the 1600X was a frame or two faster with the 3070 when compared to the 3090, and while that's typically deemed to be within margin of error, you're going to see there might be more to this.
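For readers unfamiliar with the two metrics quoted throughout, here is a minimal sketch of how an average frame rate and a 1% low figure can be derived from a frame-time capture. The definition of "1% low" used here (the mean of the slowest 1% of frames, converted to fps) is an assumption, as capture tools differ on this and the article does not state which method was used. The function name and the sample data are hypothetical.

```python
from statistics import mean


def fps_summary(frame_times_ms):
    """Return (average fps, 1% low fps) for a list of frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average fps: total frames divided by total elapsed time.
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
    # Assumed definition of "1% low": average the slowest 1% of frames,
    # then convert that frame time back into a frame rate.
    slowest_first = sorted(frame_times_ms, reverse=True)
    worst_count = max(1, len(slowest_first) // 100)
    low_fps = 1000.0 / mean(slowest_first[:worst_count])
    return avg_fps, low_fps


# Hypothetical capture: 990 smooth frames at 10 ms plus 10 stutter frames at 25 ms.
sample = [10.0] * 990 + [25.0] * 10
avg_fps, low_1pct_fps = fps_summary(sample)
print(f"average: {avg_fps:.1f} fps, 1% low: {low_1pct_fps:.1f} fps")
```

In this made-up capture a handful of slow frames barely moves the average but pulls the 1% low figure down sharply, which is why CPU limits tend to show up in the 1% low data first.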

By lowering the quality preset we're allowing the GPUs to render more frames which does increase CPU load, though you can also reduce the CPU workload as there's less information in each frame to calculate, so it's a different kind of load. As a result of the higher frame rate, the 1600X slips behind with the 5700 XT, at least when comparing 1% low performance, though this wasn't the case with the 5600 XT.

We also find that the game is now largely CPU bound using the Ampere GPUs as the RTX 3070 and 3090 performance is very similar. Using the 3090 we see that the 5600X is 19% faster than the 3600X, 41% faster than the 2600X and 58% faster than the 1600X, so some rather large performance gains there over previous generation Ryzen architectures.
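The CPU-bound versus GPU-bound reasoning used throughout can be sketched with a deliberately simple model: the delivered frame rate is roughly the lower of what the CPU and the GPU can each sustain. This is a common simplification, not how the results here were produced, and every number below is hypothetical. It also assumes the CPU limit does not change with the GPU or driver.

```python
def delivered_fps(cpu_limit_fps, gpu_limit_fps):
    """Simplified model: the slower component sets the frame rate."""
    return min(cpu_limit_fps, gpu_limit_fps)


def limiting_component(cpu_limit_fps, gpu_limit_fps):
    """Name the component that caps the frame rate in this model."""
    if cpu_limit_fps < gpu_limit_fps:
        return "CPU"
    if gpu_limit_fps < cpu_limit_fps:
        return "GPU"
    return "balanced"


# Hypothetical limits: one CPU that can prepare 90 fps, and a GPU whose
# throughput falls as the resolution rises.
cpu_limit = 90
gpu_limits = {"1080p": 140, "1440p": 95, "4K": 50}

results = {}
for resolution, gpu_limit in gpu_limits.items():
    results[resolution] = (
        delivered_fps(cpu_limit, gpu_limit),
        limiting_component(cpu_limit, gpu_limit),
    )
    fps, limiter = results[resolution]
    print(f"{resolution}: {fps} fps ({limiter} limited)")
```

Under this model a faster GPU can never lower the frame rate, so any case where it does points to a CPU-side cost that depends on the GPU or its driver.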

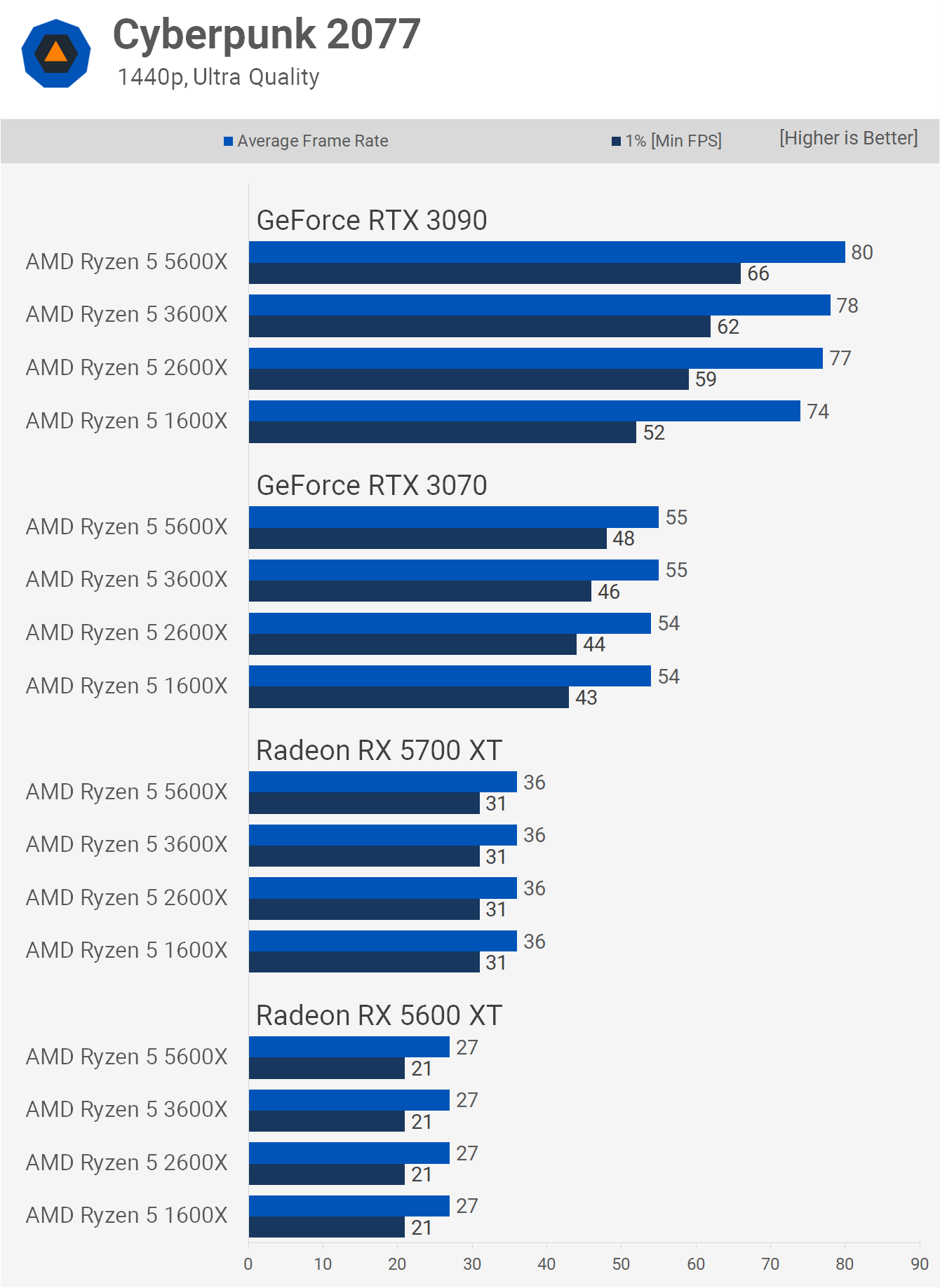

Now jumping up to 1440p which increases the GPU load more than it does CPU load sees the game become primarily GPU limited, at least when using an RTX 3070 or slower. Looking at the 1% low data we see that the 5600X is up to 12% faster than the 1600X when using the RTX 3070, not a huge difference when compared to 1st gen Ryzen, but there's still a difference here, not something we saw with the 5700 XT.

Then once we jump up to the RTX 3090 we see up to a 27% performance difference between the fastest and slowest 6-core/12-thread Ryzen CPUs tested, though there was just an 8% difference when comparing the average frame rate.

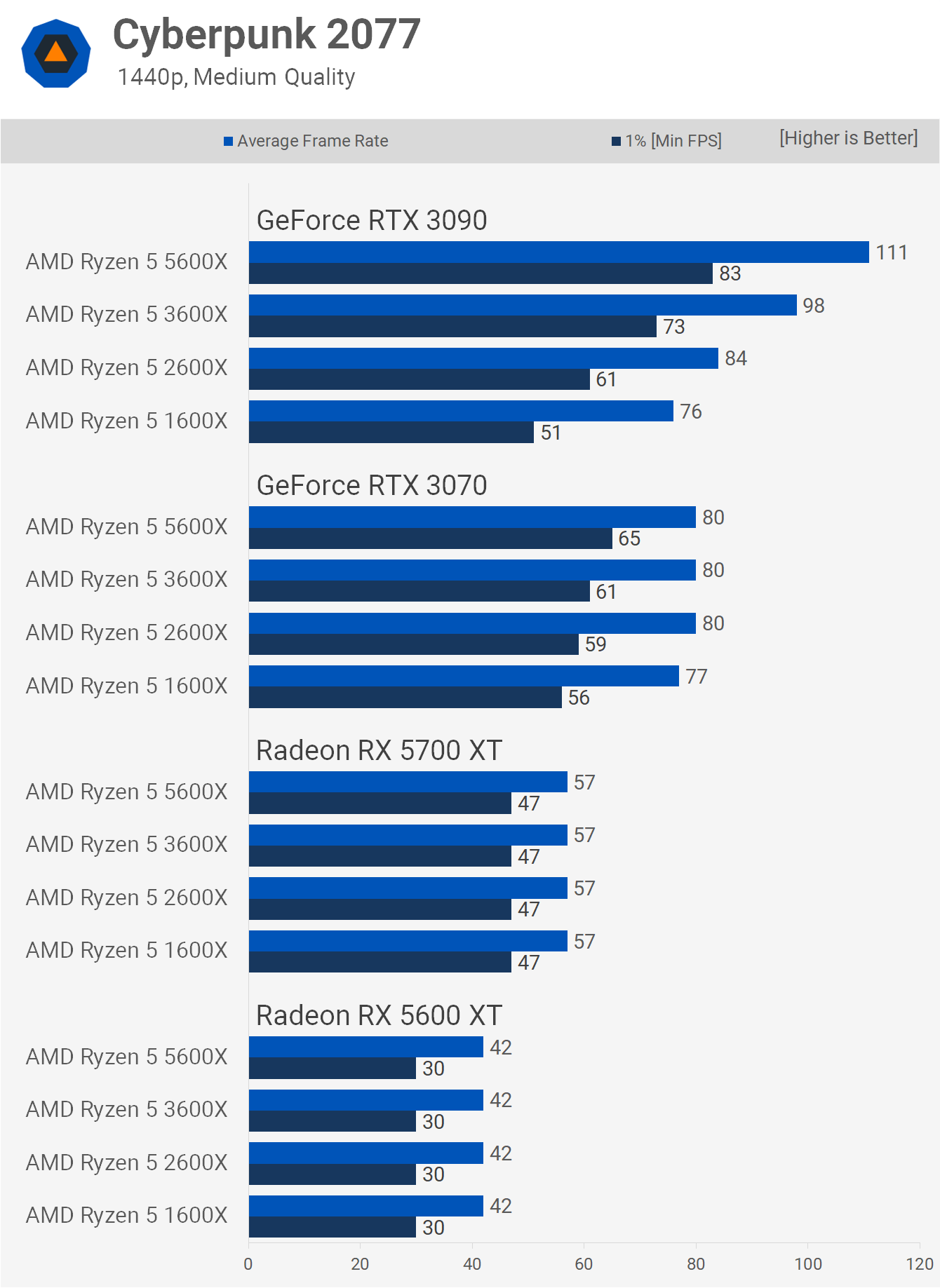

Now using the medium quality preset at 1440p sees some variation in the 1% low performance when using the RTX 3070, here the 5600X was 7% faster than the 3600X, 10% faster than the 2600X and 16% faster than the 1600X.

The margins only become noteworthy when using the RTX 3090, here the 5600X was up to 14% faster than the 3600X, 36% faster than the 2600X and 63% faster than the 1600X which is obviously a massive margin, though it will require extreme GPU horsepower for that difference to be realized.

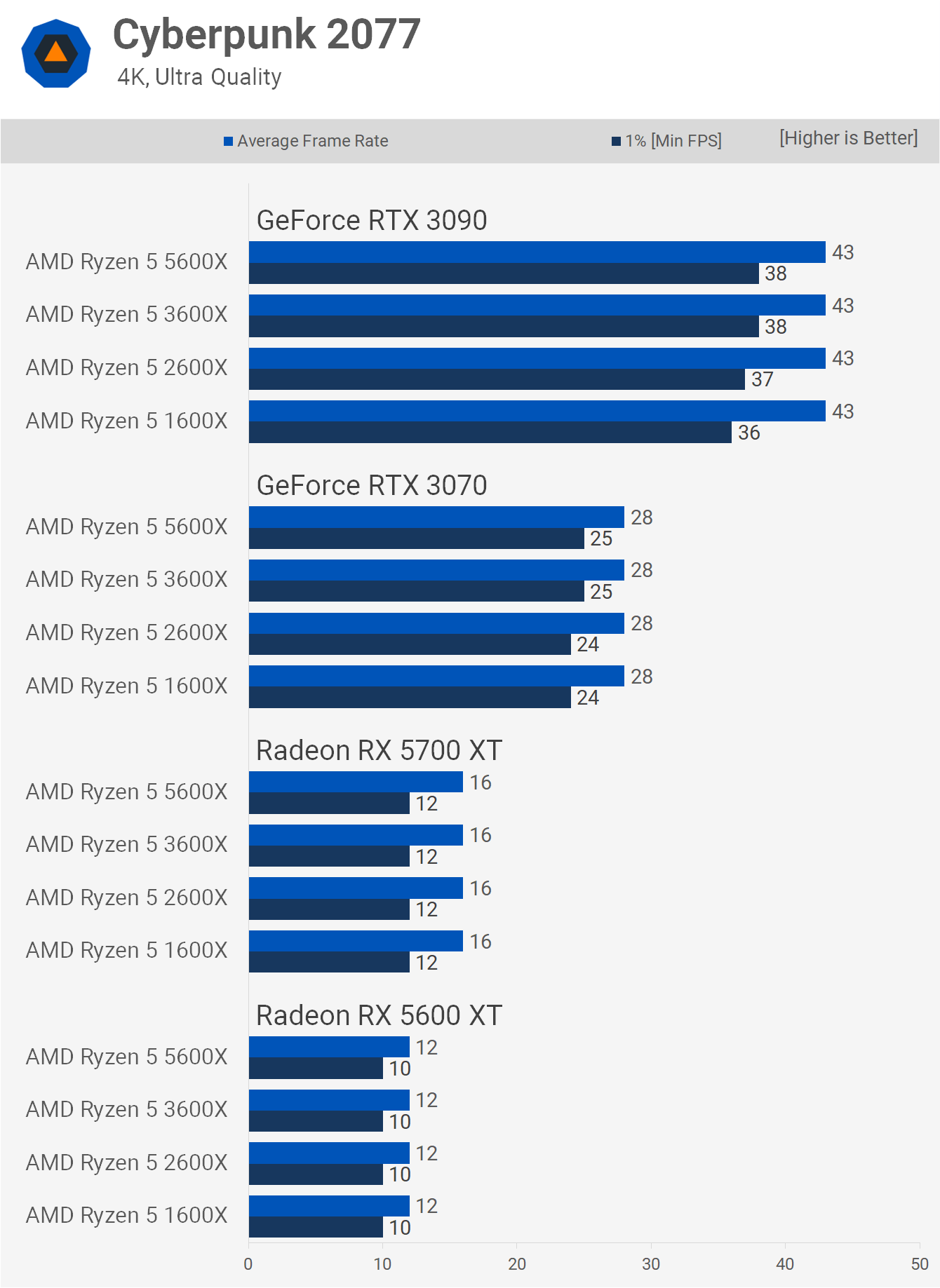

Climbing up to 4K virtually eliminates performance margins, even when using the RTX 3090, the 5600X was only up to 8% faster than the 1600X, that was a 2 fps difference while the average frame rate was identical.

Using the medium quality settings at 4K sees all four CPUs deliver the exact same performance using the RTX 3070, 5700 XT and 5600 XT.

The 1600X does suffer a little with the RTX 3090 and here the 5600X was 17% faster when comparing the 1% low data.

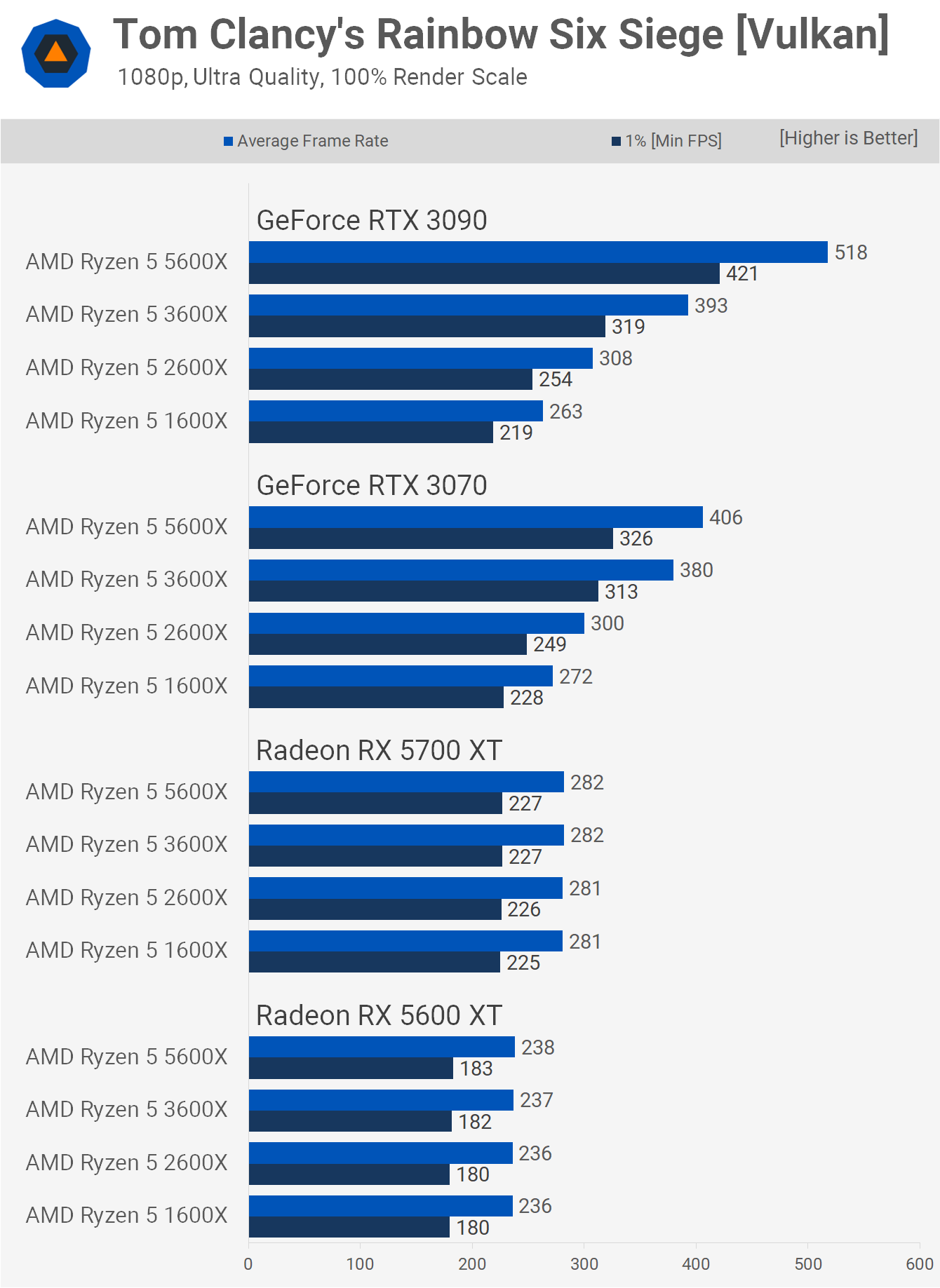

Rainbow Six Siege

Moving on to Rainbow Six Siege and this is where things start to get rather complicated. At 1080p using the ultra quality preset the results using the 5600 XT and 5700 XT are pretty much as you'd expect, the game is heavily GPU bound here and even the old Ryzen 5 1600X can max out these GPUs.

However, as we move to the Ampere GPUs a few strange observations can be made. Firstly, the Ryzen 5 1600X is slower using either the RTX 3070 or 3090 when compared to what we see with the 5700 XT. How is this possible you might be asking? We believe it's a two-pronged answer: the Nvidia drivers and the Ampere architecture.

Nvidia drivers have a high core dependency for multi-threading workloads, like what we see in DX12 and Vulkan titles. CPU architectures that feature a non-coherent cache like what we see with Zen, Zen+ and Zen 2 will need to be optimized for. We believe the issue is less pronounced with Zen+ due to cache optimizations that lead to the 2600X being faster overall, providing a less CPU-bound scenario.

Another oddity is that the Ryzen 5 1600X was able to deliver a slightly higher frame rate with the RTX 3070 when compared to the 3090. We're only talking about a 3% increase, but it was repeatable. We're guessing at this point but we believe the wider design of the GA102 die is creating more CPU load as the driver scheduler works harder to feed all those extra cores. We'll need to test more hardware configurations to work out what's going on here.

Whatever the case, it's fascinating to find that the Ryzen 5 1600X is able to deliver 7% more frames with the 5700 XT when compared to the RTX 3090. As for the RTX 3090 results, the 5600X was 32% faster than the 3600X, 68% faster than the 2600X, and an insane 97% faster than the 1600X, when comparing average frame rates.

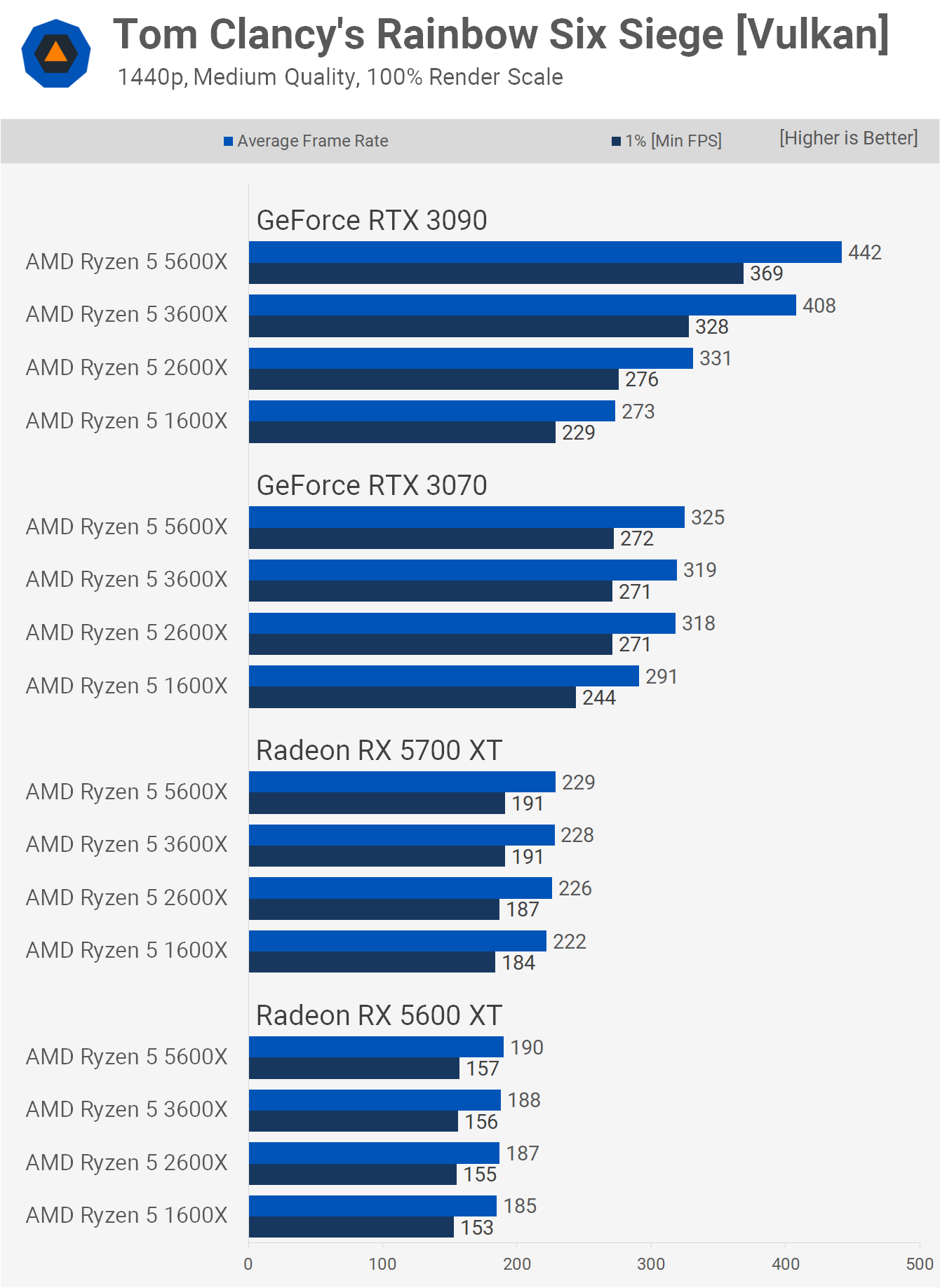

The funny results seen with Ampere GPUs using the ultra preset are even more pronounced with the medium setting. If we look at the Ryzen 5 1600X, we see that this CPU was able to roughly max out the Radeon 5600 XT with 272 fps on average and that figure was boosted by 18% with the 5700 XT to 321 fps.

However, by using what is technically a faster GPU with the RTX 3070 we find that the 1600X performance declines by 9% to 293 fps and then a further 6% with the RTX 3090 to 274 fps, or the same level of performance seen with the 5600 XT. This issue is less severe with the faster 2600X, but we still see a situation where going from the 5700 XT to the RTX 3090 does little to improve performance.
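The step-by-step percentages for the 1600X can be reproduced from the four average frame rates quoted above. This is only a sketch of the arithmetic; the helper name `pct_change` is our own.

```python
def pct_change(old_fps, new_fps):
    """Percentage change when moving from old_fps to new_fps."""
    return (new_fps - old_fps) / old_fps * 100.0


# Average fps for the Ryzen 5 1600X at 1080p medium, as quoted in the text.
r5_1600x_fps = [
    ("RX 5600 XT", 272),
    ("RX 5700 XT", 321),
    ("RTX 3070", 293),
    ("RTX 3090", 274),
]

steps = []
for (prev_gpu, prev_fps), (gpu, fps) in zip(r5_1600x_fps, r5_1600x_fps[1:]):
    change = pct_change(prev_fps, fps)
    steps.append((prev_gpu, gpu, change))
    print(f"{prev_gpu} -> {gpu}: {change:+.1f}%")
```

Note that each step uses the previous GPU's result as its baseline, so the individual percentages cannot simply be added together.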

This issue is largely solved with the 3600X which saw a 25% performance increase when jumping up from the 5700 XT to the RTX 3070. Yet once again we find that the higher core-count RTX 3090 slowed down the 3600X by a 5% margin, dropping the average frame rate from 435 fps to 413 fps when naturally you'd expect the opposite.

The only CPU that scales correctly here is the 5600X and its unified L3 cache. We see a 26% performance boost when moving from the 3070 to the 3090, and a 65% increase from the 5700 XT.

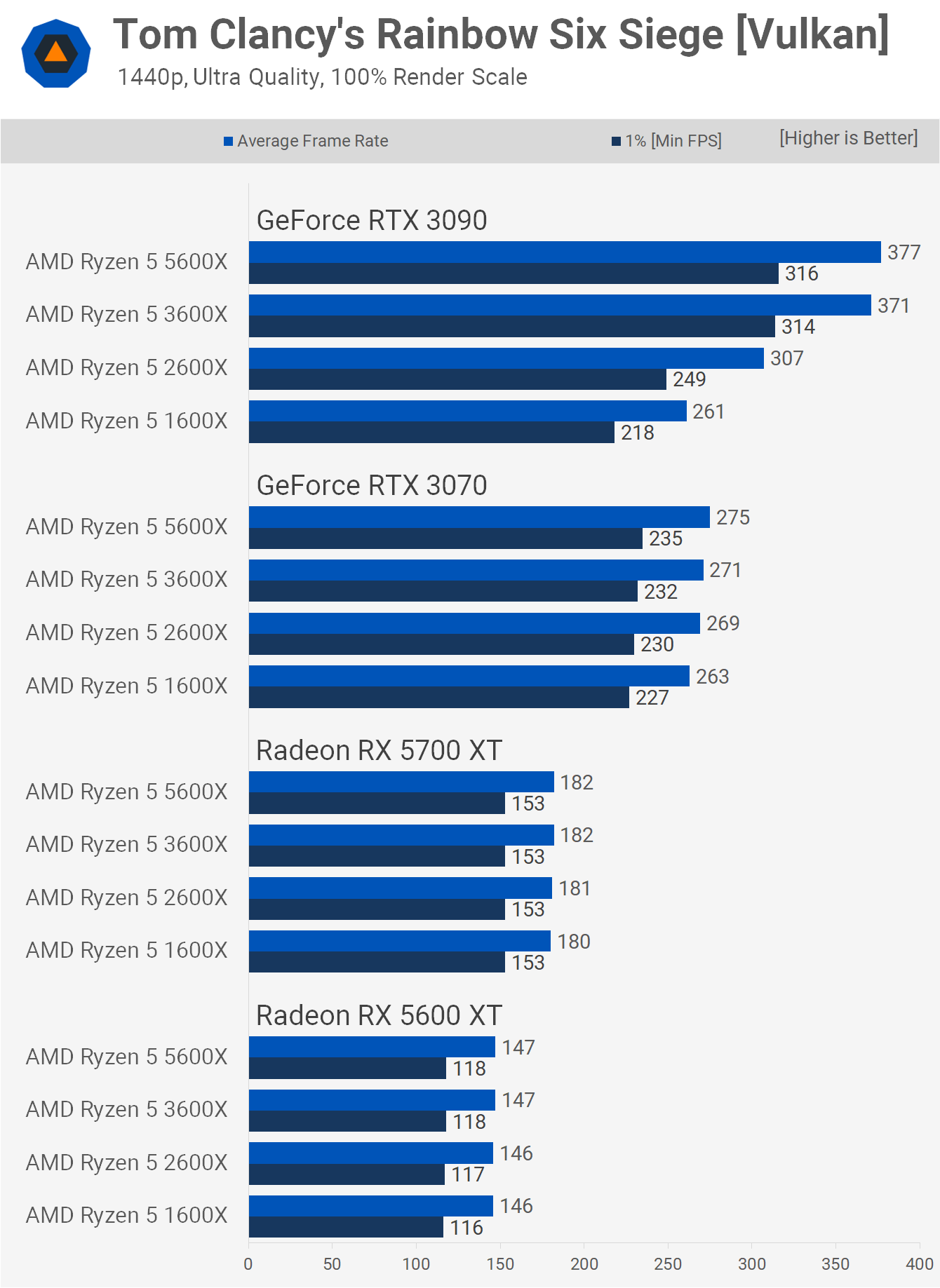

By drastically increasing the GPU load at the 1440p resolution, we find that scaling returns to normal. Here we're observing similar CPU performance across the board when using the RTX 3070 or slower. The R5 1600X did drop some when upgrading to the RTX 3090, though that wasn't the case for the 2600X which saw a 14% increase.

The 5600X and 3600X were both fast enough to max out the RTX 3090 and that meant the new Zen 3 processor was 23% faster than the 2600X and 44% faster than the 1600X.

Lowering the quality to medium at 1440p sees the 1600X trail the newer Zen parts and this just goes to show how significant the refinements made with the Zen+ architecture really were.

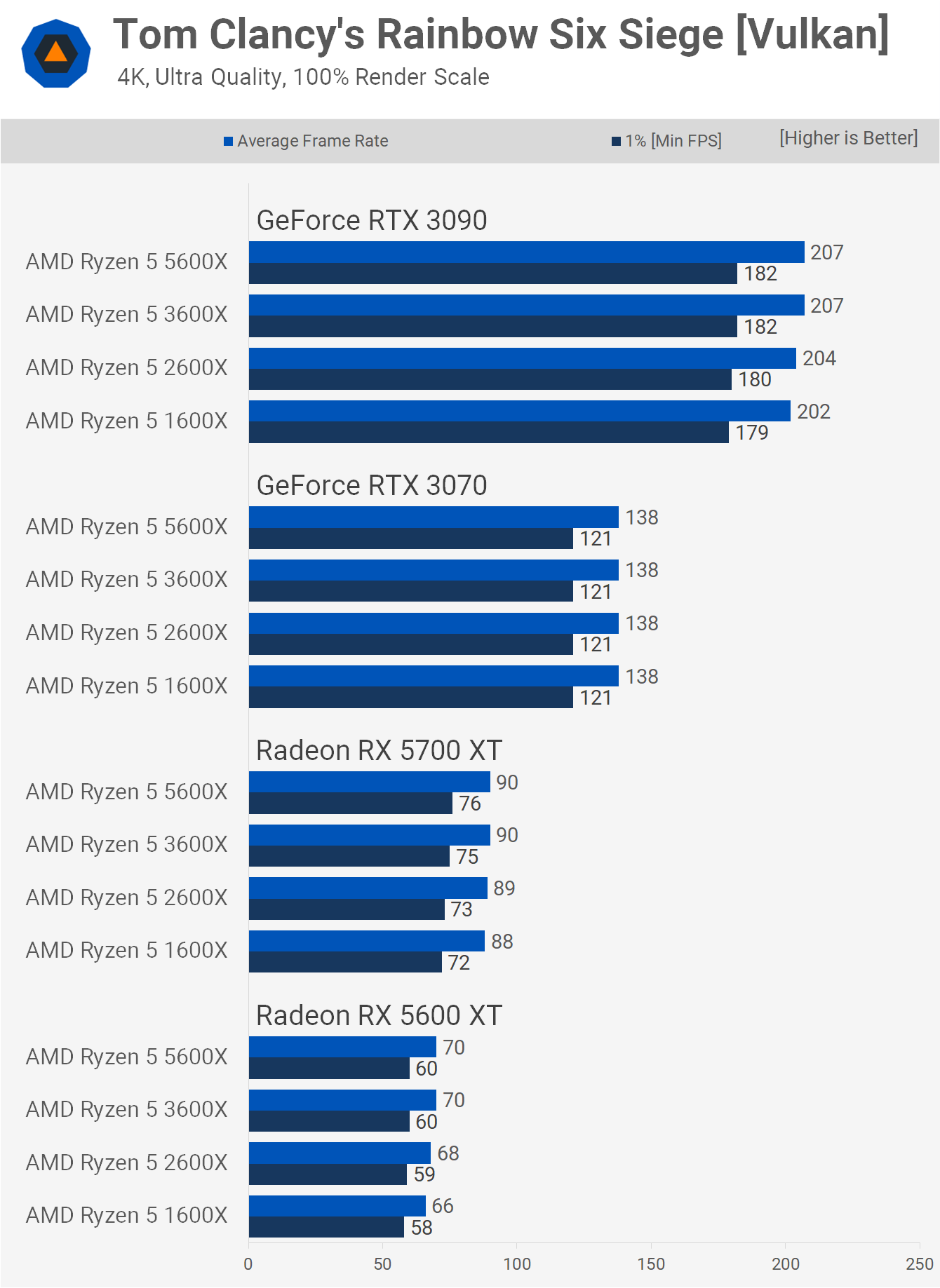

Once we reach 4K, there's little to see in the way of performance differences, basically we're heavily GPU limited and even the 1600X comes close to maxing out every GPU.

We see much of the same with the medium quality settings at 4K.

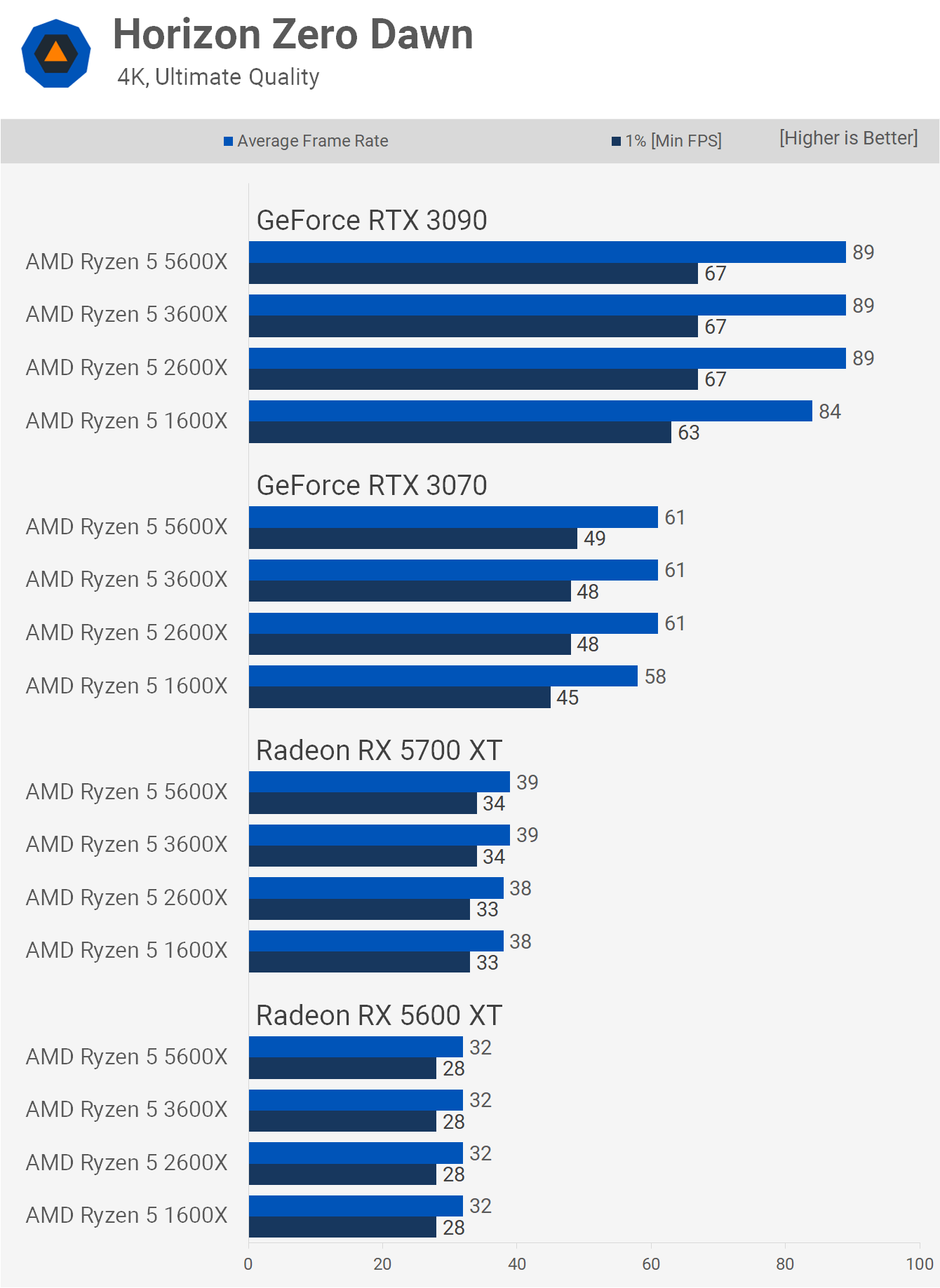

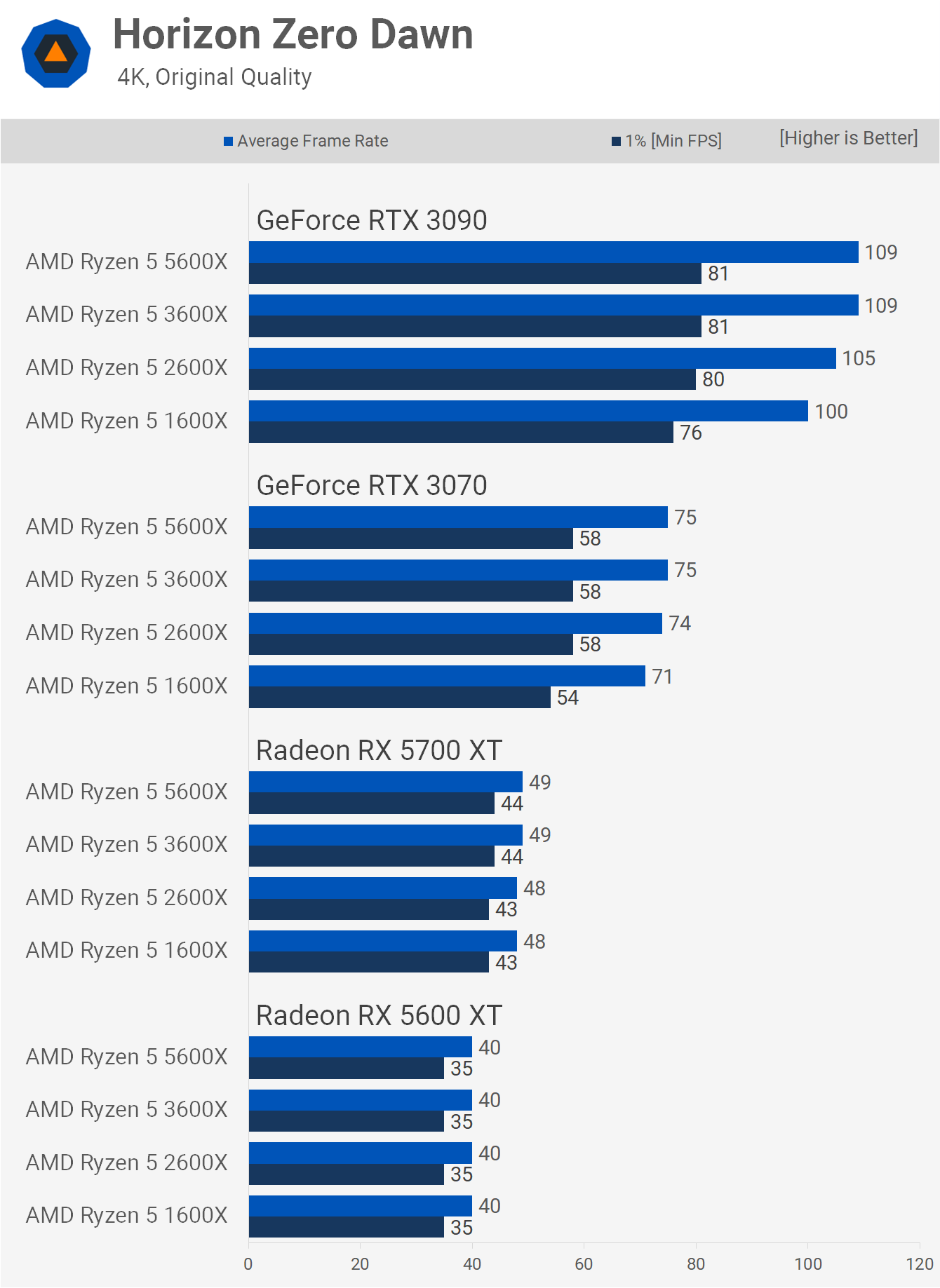

Horizon Zero Dawn

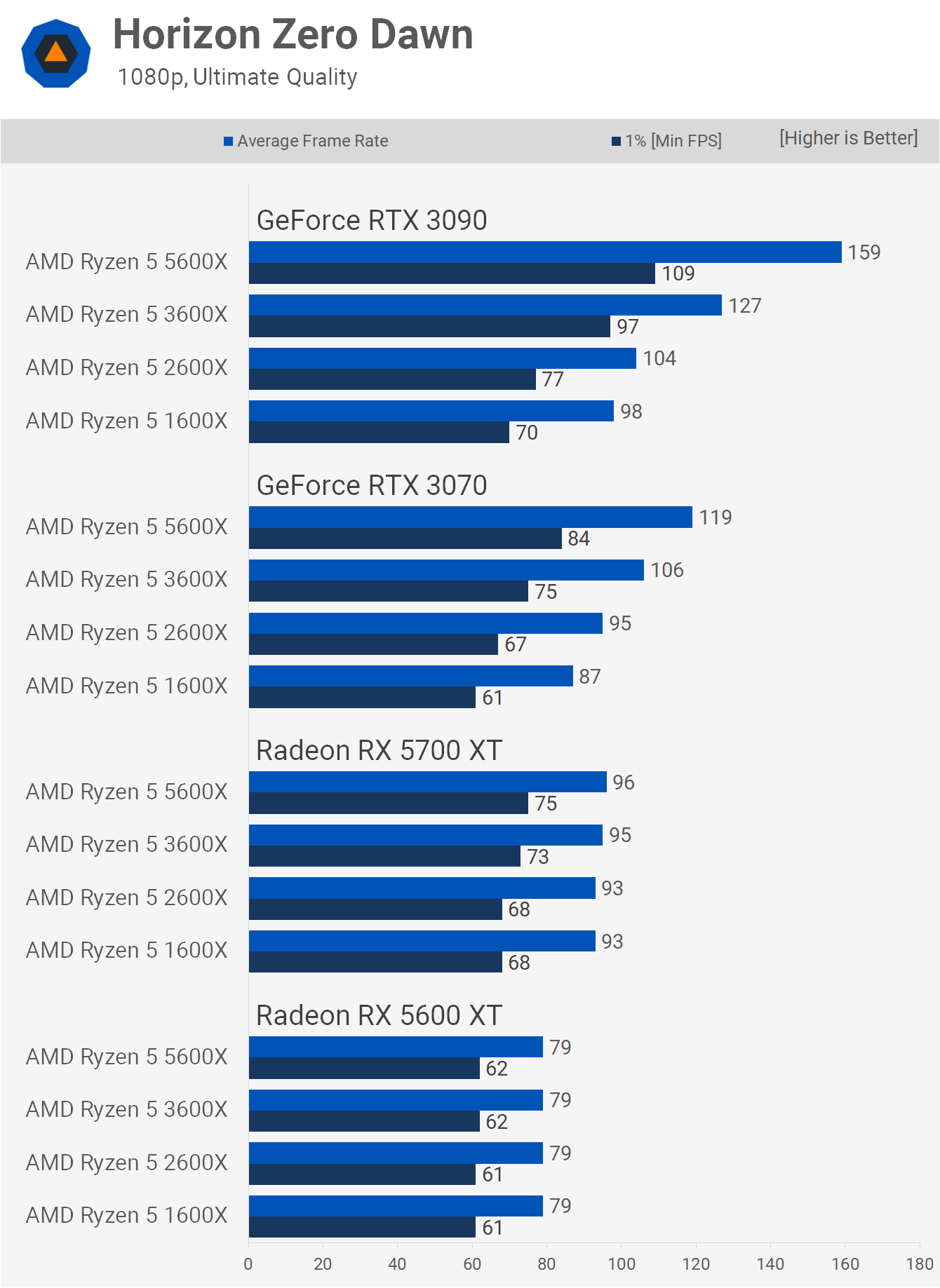

Time for more odd results in Horizon Zero Dawn when looking at 1080p ultra quality data. The Radeon results are what you'd expect to find, all four CPUs maxed out the 5600 XT, while we see a small performance improvement for the 3600X and 5600X using the 5700 XT.

However, it's again the 1600X results using Ampere GPUs that are confusing relative to what we saw with the 5700 XT. Whereas the 1600X averaged 93 fps using the 5700 XT, it was good for just 87 fps with what should be the faster RTX 3070, though it did peak at 98 fps using the RTX 3090.

The 2600X on the other hand was fast enough to mitigate the overhead with the Ampere GPUs, delivering virtually the same performance with the 5700 XT and RTX 3070 before seeing a 12% uplift with the RTX 3090.

The 1080p results are more extreme using the medium quality preset. Here we're seeing a bigger performance difference between the 1600X and 2600X when compared to the 3600X and 5600X using the 5700 XT, but that's not the most interesting thing to note.

The Ryzen 5 1600X results are truly bizarre as this CPU was good for 118 fps on average with the 5700 XT, yet just 98 fps with the RTX 3070 and 109 fps with the RTX 3090. A similar thing is seen when using the 2600X, it was good for 123 fps with the 5700 XT but just 109 fps with the 3070, though it did jump back up to 125 fps with the 3090. In fact, even the 3600X was faster with the 5700 XT when compared to the RTX 3070, though it was 8% faster with the 3090.

Looking at those results you'd think that the 5700 XT was the superior performer, but technically it's not as the 5600X was a massive 40% faster using the flagship Ampere GPU. Now if we look at the CPU limited RTX 3090 results we see that the 5600X was 32% faster than the 3600X, 50% faster than the 2600X and 72% faster than the 1600X.

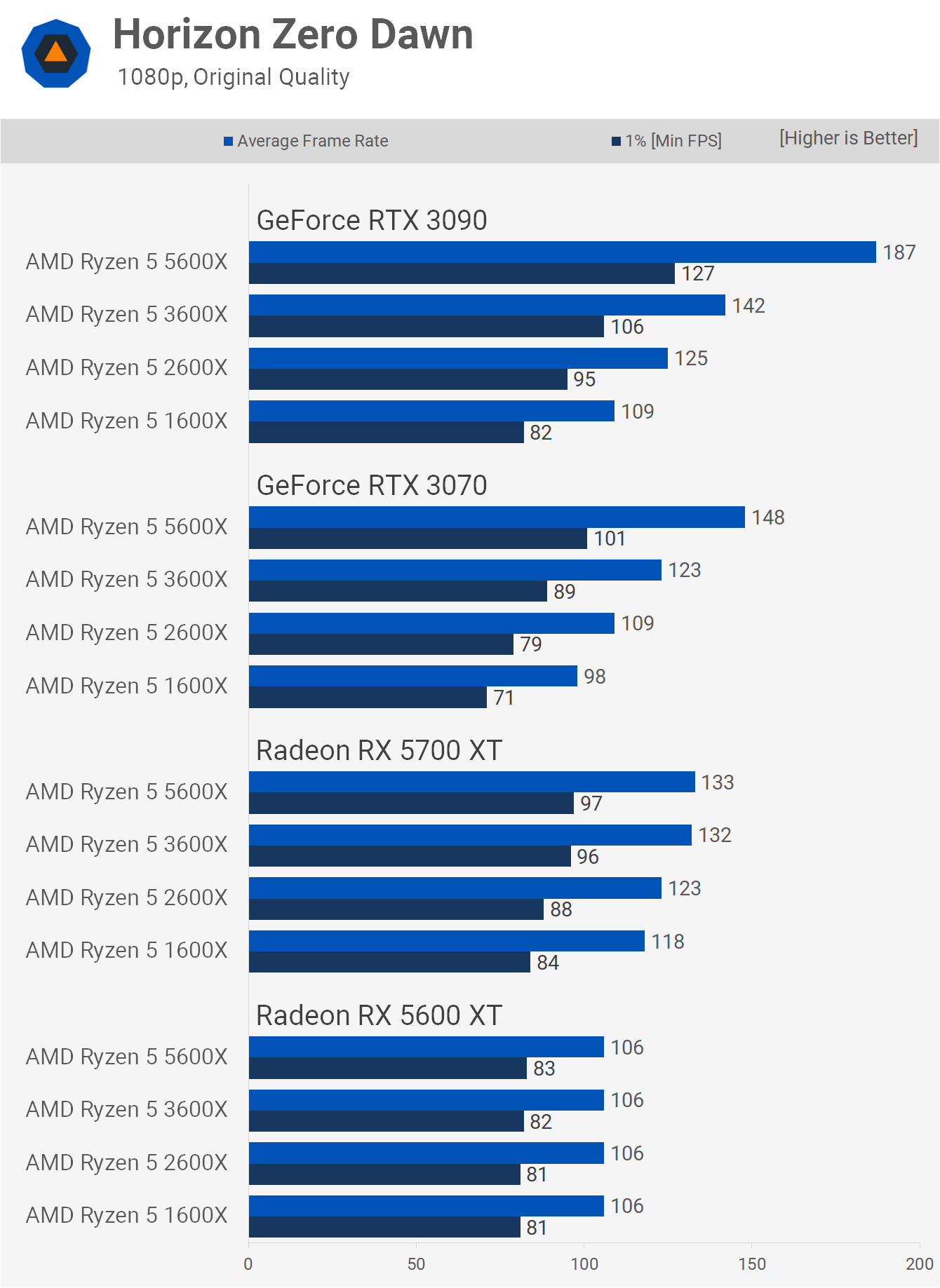

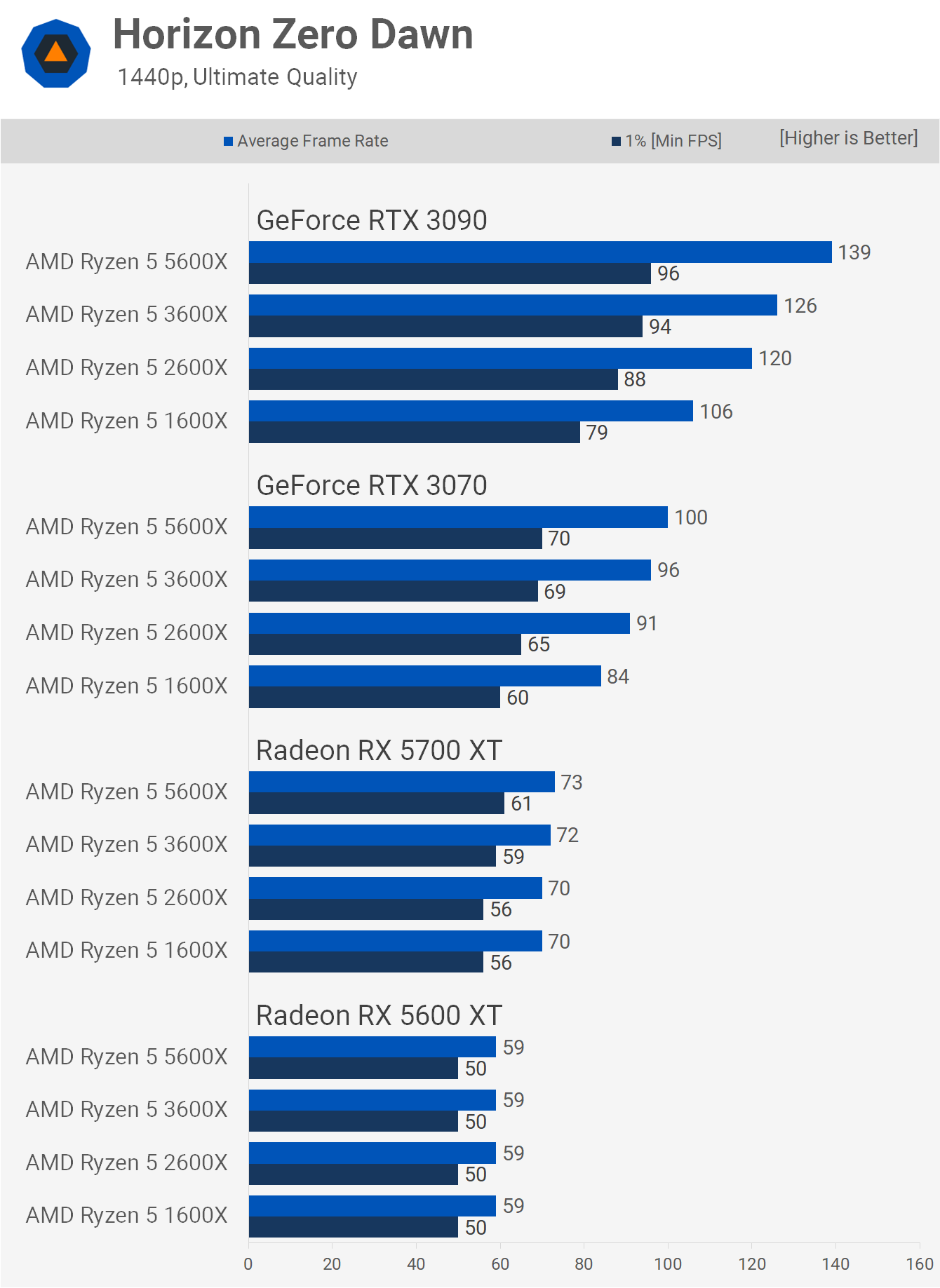

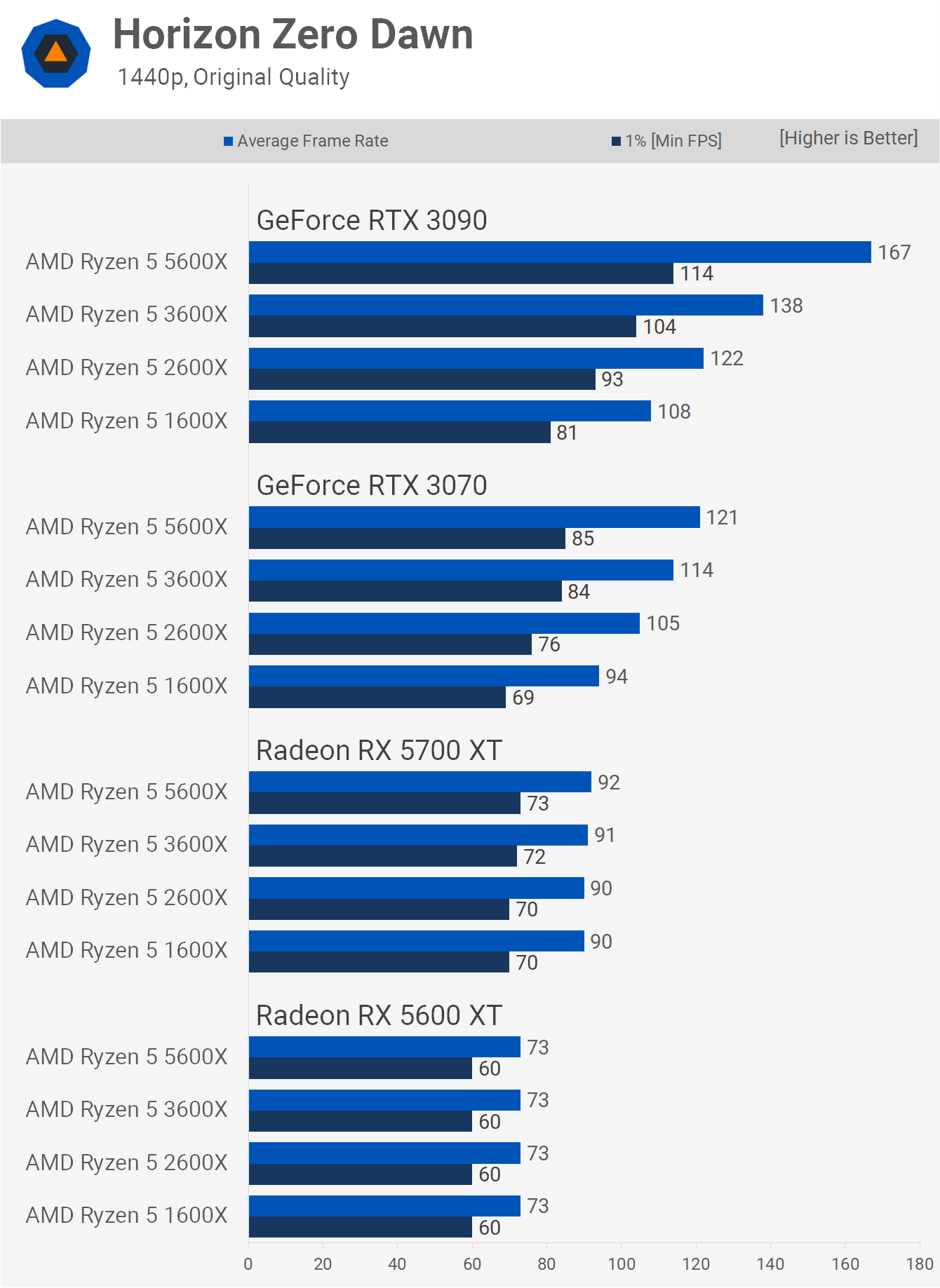

Once again we find that increasing the resolution to 1440p, which makes the test more GPU bound, sees the strange results between the RDNA and Ampere GPUs with the older Ryzen processors disappear. All four CPUs maxed out the 5600 XT with only minor performance differences seen with the 5700 XT. Then we see a universal performance uplift when moving to the RTX 3070 with further gains for all processors seen with the RTX 3090.

Scaling with the RTX 3070 is fairly consistent, here the 5600X was 4% faster than the 3600X, 10% faster than the 2600X and 19% faster than the 1600X.

The 1440p medium quality margins are fairly similar to what we saw using the ultra preset, all four CPUs came close to maxing out the 5700 XT while we start to see a noticeable difference in performance with the RTX 3070. Then by the time we reach the 3090 the 5600X is seen to be delivering 21% more performance than the 3600X, 37% more than the 2600X and 55% more than the 1600X.

As expected, we're almost entirely GPU bound at the 4K resolution. The 1600X trailed slightly with the Ampere GPUs but outside of that performance was the same for the Zen+, Zen 2 and Zen 3 processors.

Using the medium quality settings at 4K saw some variation in the results using the RTX 3090, here the 3600X and 5600X were just 4% faster than the 2600X and 9% faster than the 1600X.

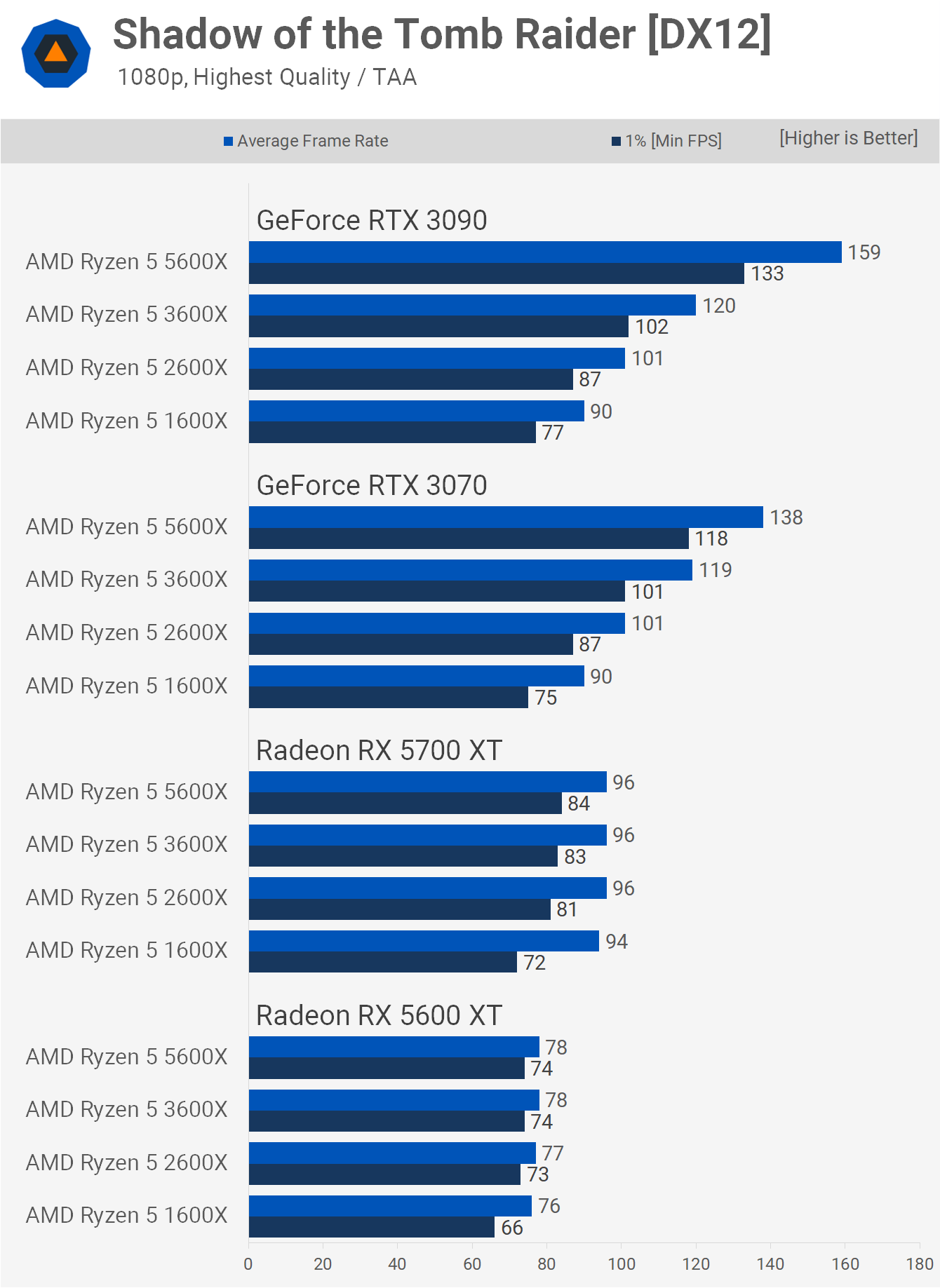

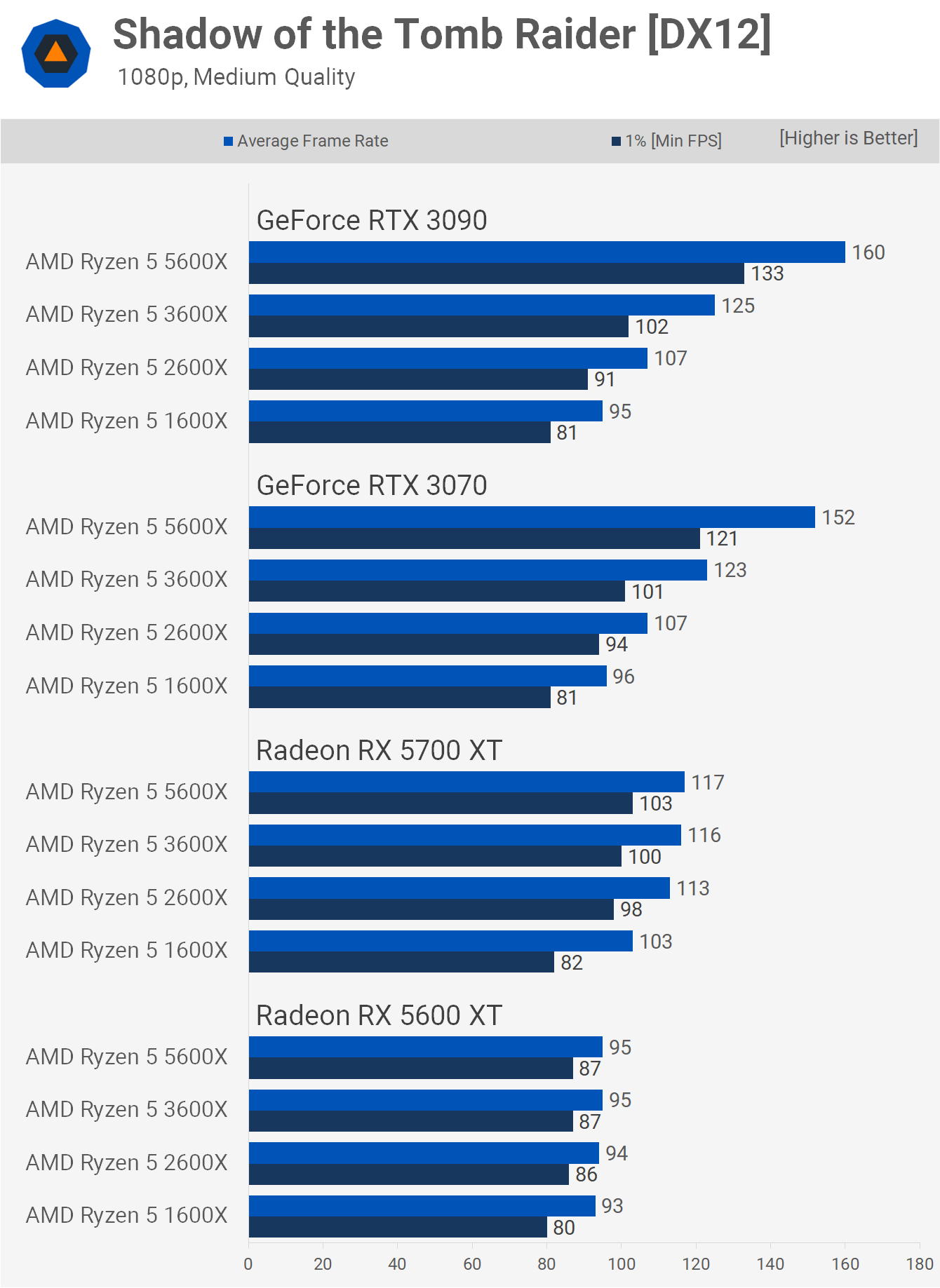

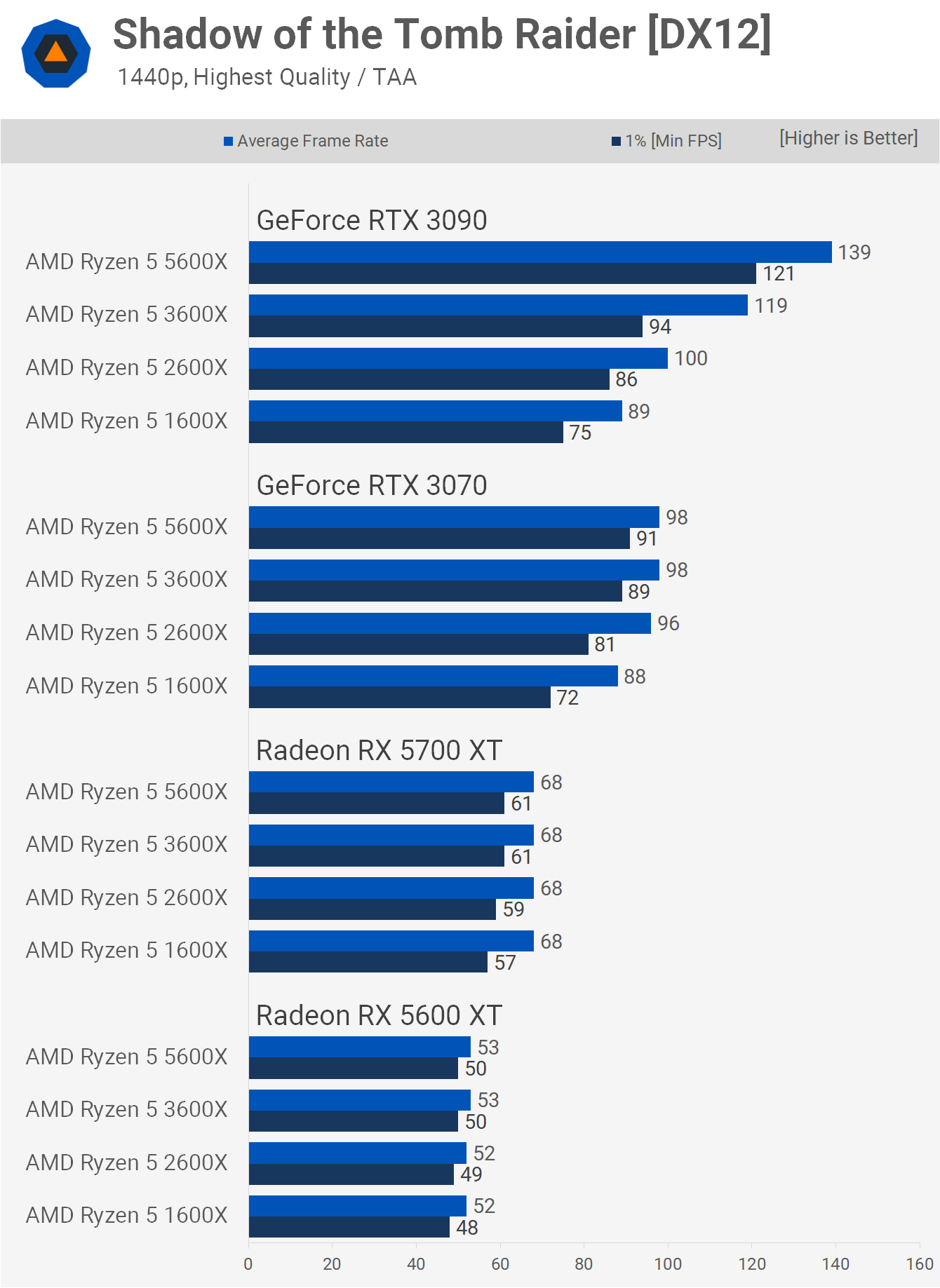

Shadow of the Tomb Raider

Next up we have the very CPU demanding Shadow of the Tomb Raider and here we find that even with the 5600 XT the Ryzen 5 1600X is dropping a few frames, particularly when looking at the 1% low performance. This is slightly more pronounced with the 5700 XT where the 5600X was up to 17% faster.

The 1600X saw a small reduction in average frame rate performance with the Ampere GPUs, though there was also a small uptick in 1% low performance. The 1600X, 2600X and 3600X all limited the performance of the 3090 and 3070 as the 5600X was 16% faster than the 3600X when using the RTX 3070 and 33% faster with the RTX 3090.

Using the medium quality settings at 1080p resulted in very similar performance margins and again the 1600X was slightly faster using the 5700 XT when compared to the Ampere GPUs, the same was also true of the 2600X.

Then with the RTX 3090 we see that the 5600X was 28% faster than the 3600X, 50% faster than the 2600X and 68% faster than the 1600X.

Increasing the resolution to 1440p helps alleviate the CPU bottlenecks seen at 1080p and now all four Ryzen processors are able to get the most out of the 5600 XT and 5700 XT. We're also seeing a situation where the 1600X and 2600X are significantly faster when paired with the RTX 3070 as opposed to the 5700 XT. The game was also GPU limited using the RTX 3070 when paired with either the 5600X or 3600X.

The medium quality results see no real difference in performance between the various 6-core Ryzen processors when using the 5700 XT or 5600 XT. We start to see a bit of separation with the RTX 3070 and then once we jump up to the RTX 3090 the newer Zen 3 processor is much faster than the previous generations.

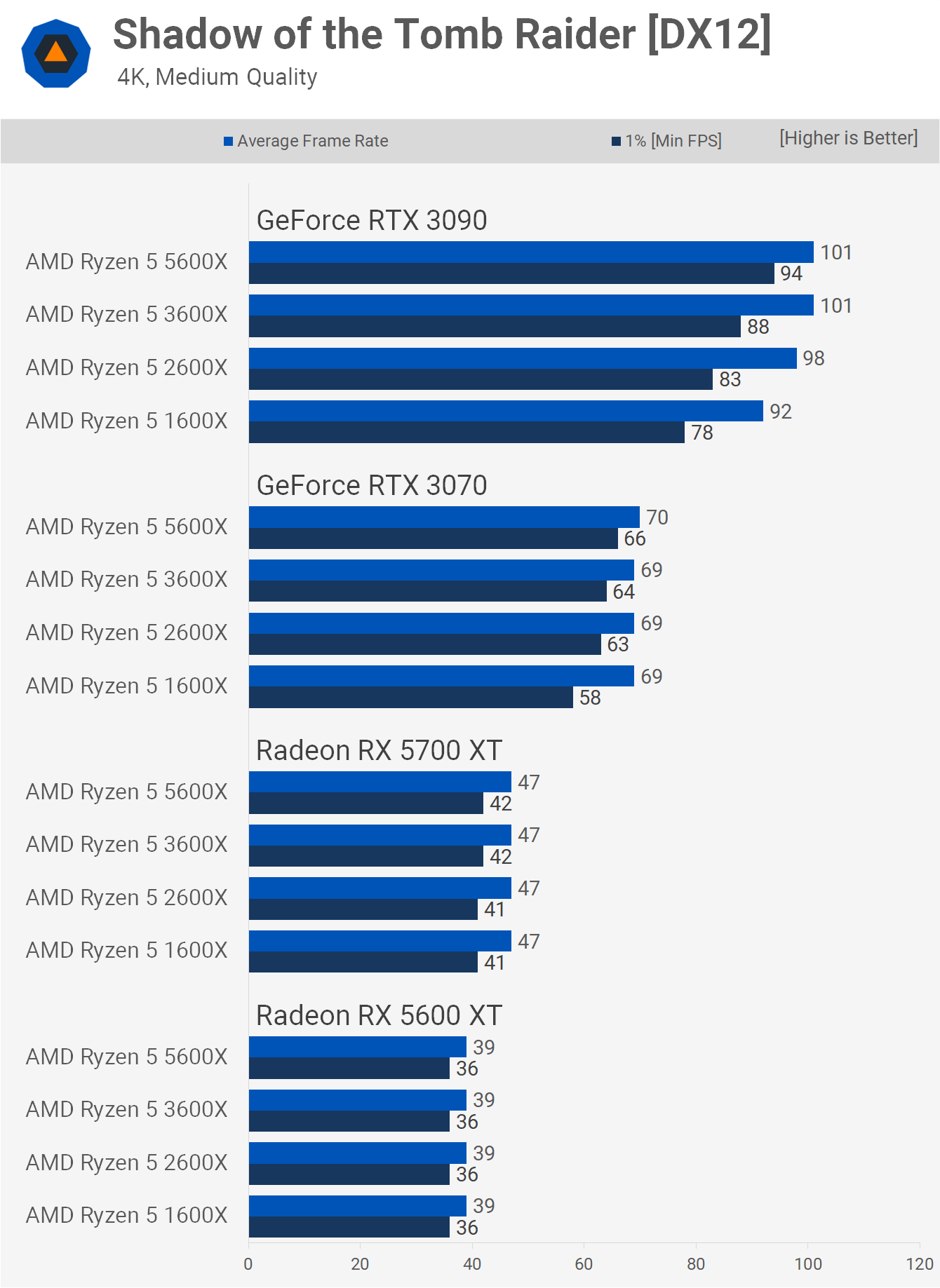

Jumping up to 4K makes the testing far more GPU limited and predictable, though the mighty RTX 3090 is still powerful enough to see some variation in CPU performance, especially when looking at the 1% low data.

Quite incredibly, the 5600X was still up to 18% faster than the 1600X, 13% faster than the 2600X and even 10% faster than the 3600X.

The 4K medium results are similar to what we saw before. It's not until you jump up to the RTX 3090 that we start to see a noteworthy difference in performance.

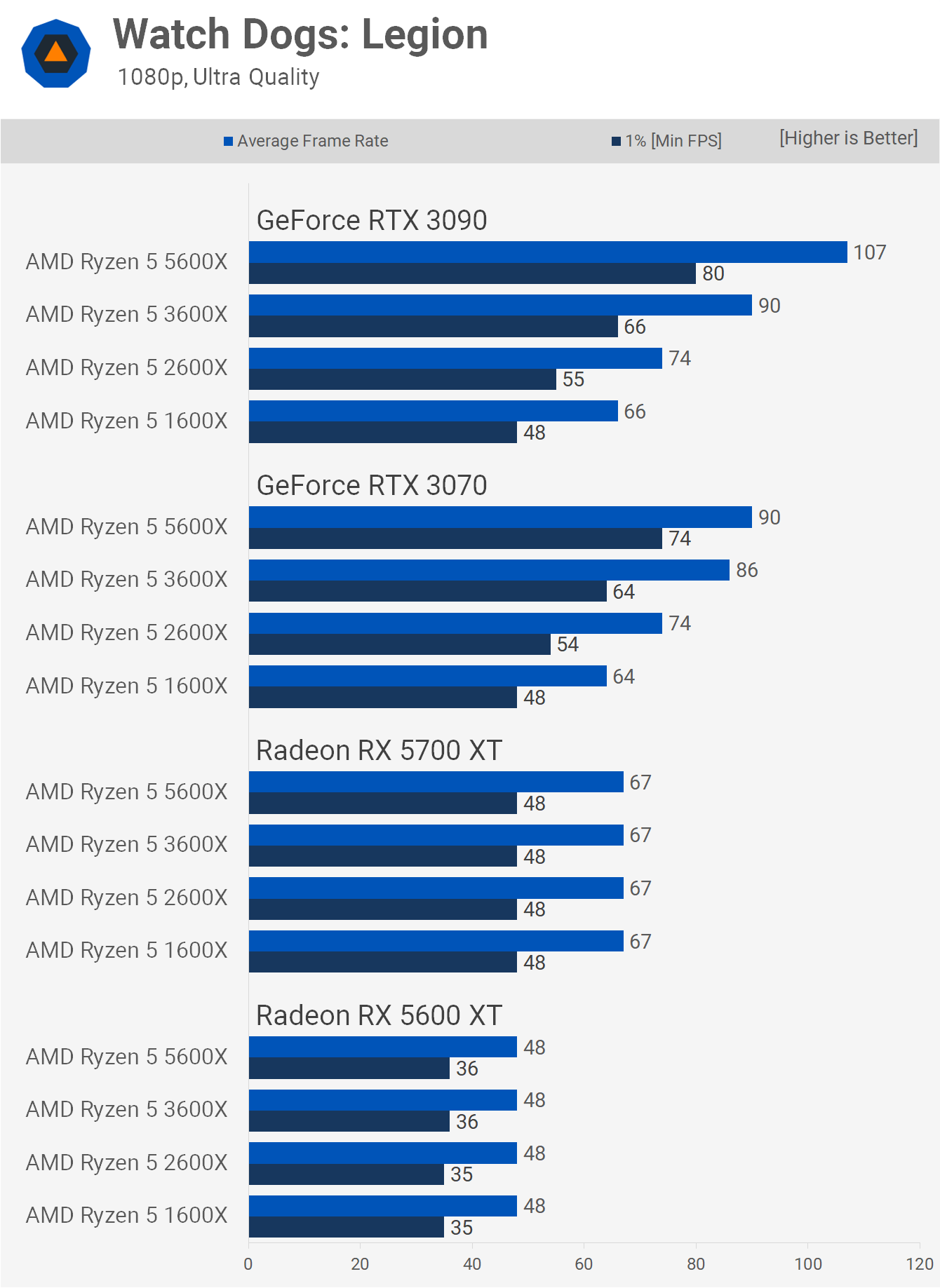

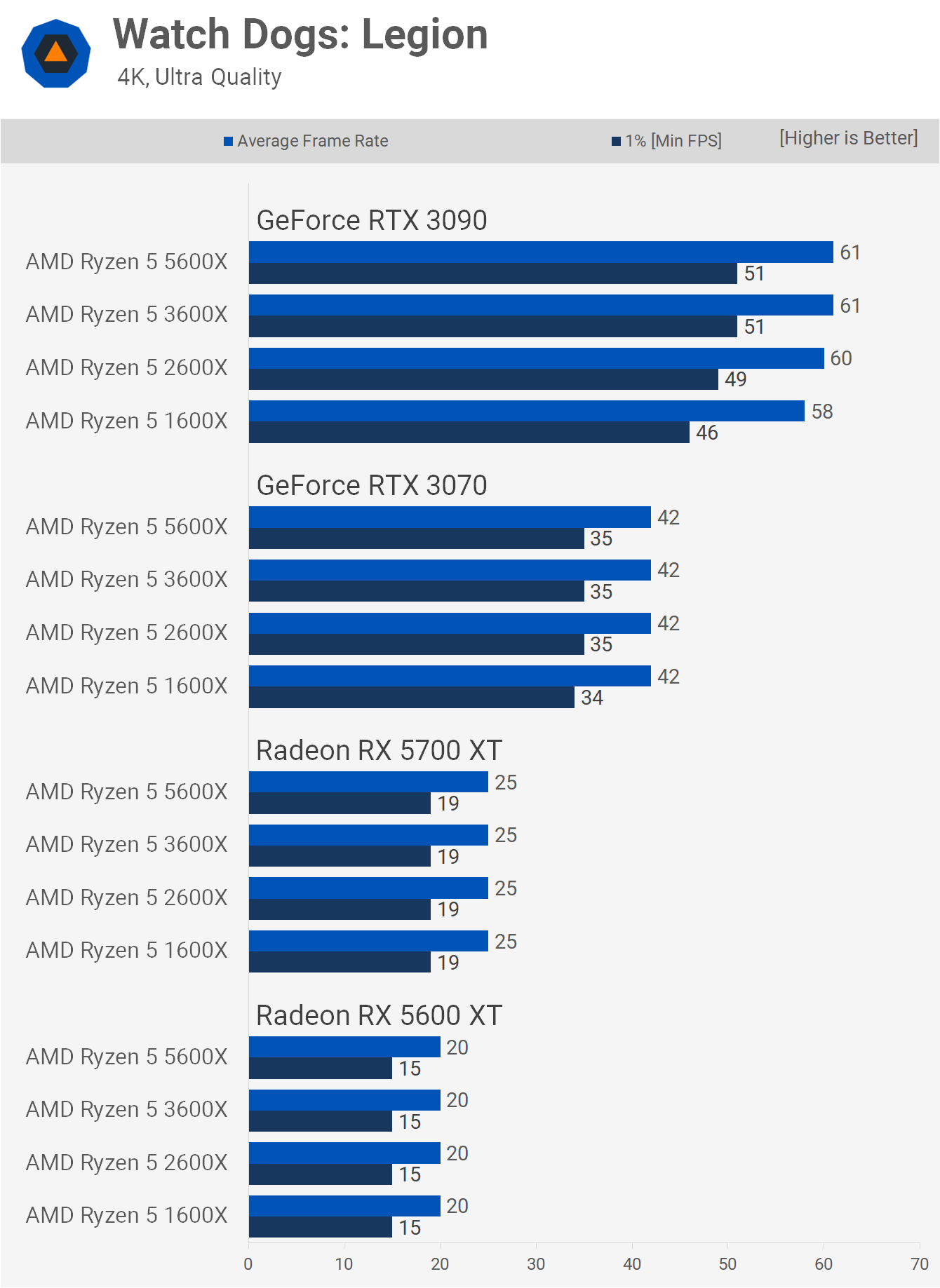

Watch Dogs Legion

In Watch Dogs Legion running at 1080p we're looking at a hard GPU limitation using both the 5600 XT and 5700 XT and this time moving to the RTX 3070 only had a very minimal negative impact on performance for the 1600X.

That said with the RTX 3070 it was only the 5600X that was able to find the limits of that GPU under these test conditions.

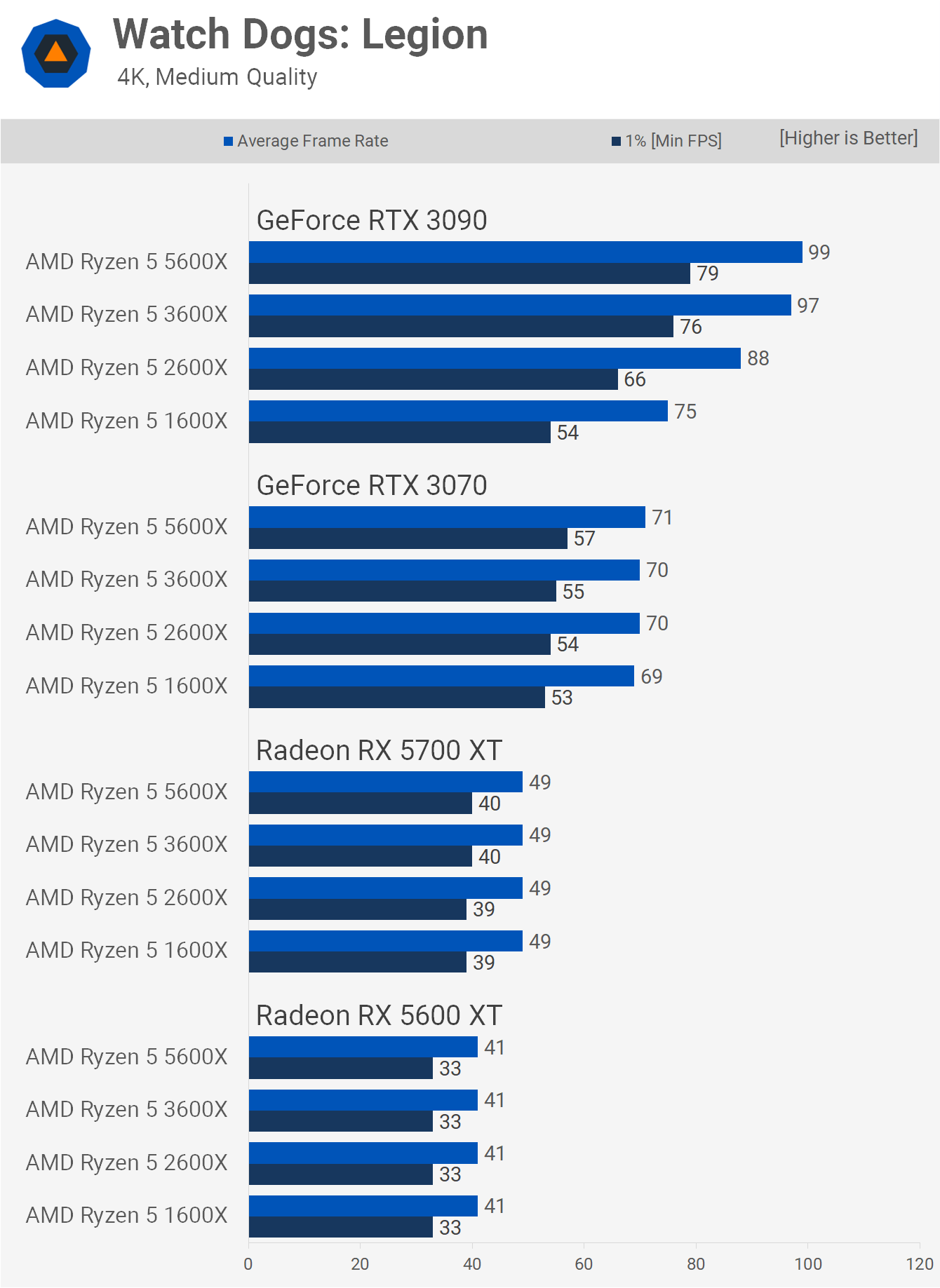

Then testing at 1080p again but using the medium quality preset results in the mess you see below...

The 5600 XT and 5700 XT results are fairly easy to make sense of. The 1600X and to a degree the 2600X aren't able to max out the 5600 XT, though the Zen+ part comes close. Then with the faster 5700 XT the 1600X and 2600X trail the 3600X and 5600X quite significantly, though you could argue that performance overall was very acceptable. Still with this now mid-range GPU the 5600X was 17% faster than the 2600X and 28% faster than the 1600X.

Where the data gets messy is when we compare the 1600X and 2600X 5700 XT data with the RTX 3070, in fact here you can also include the 3600X as well. The 1600X and 2600X were 20% faster when paired with the 5700 XT, and the 3600X was 17% faster. These Ryzen CPUs clearly perform better with the Radeon GPUs under these test conditions and the margins are very similar even with the RTX 3090.

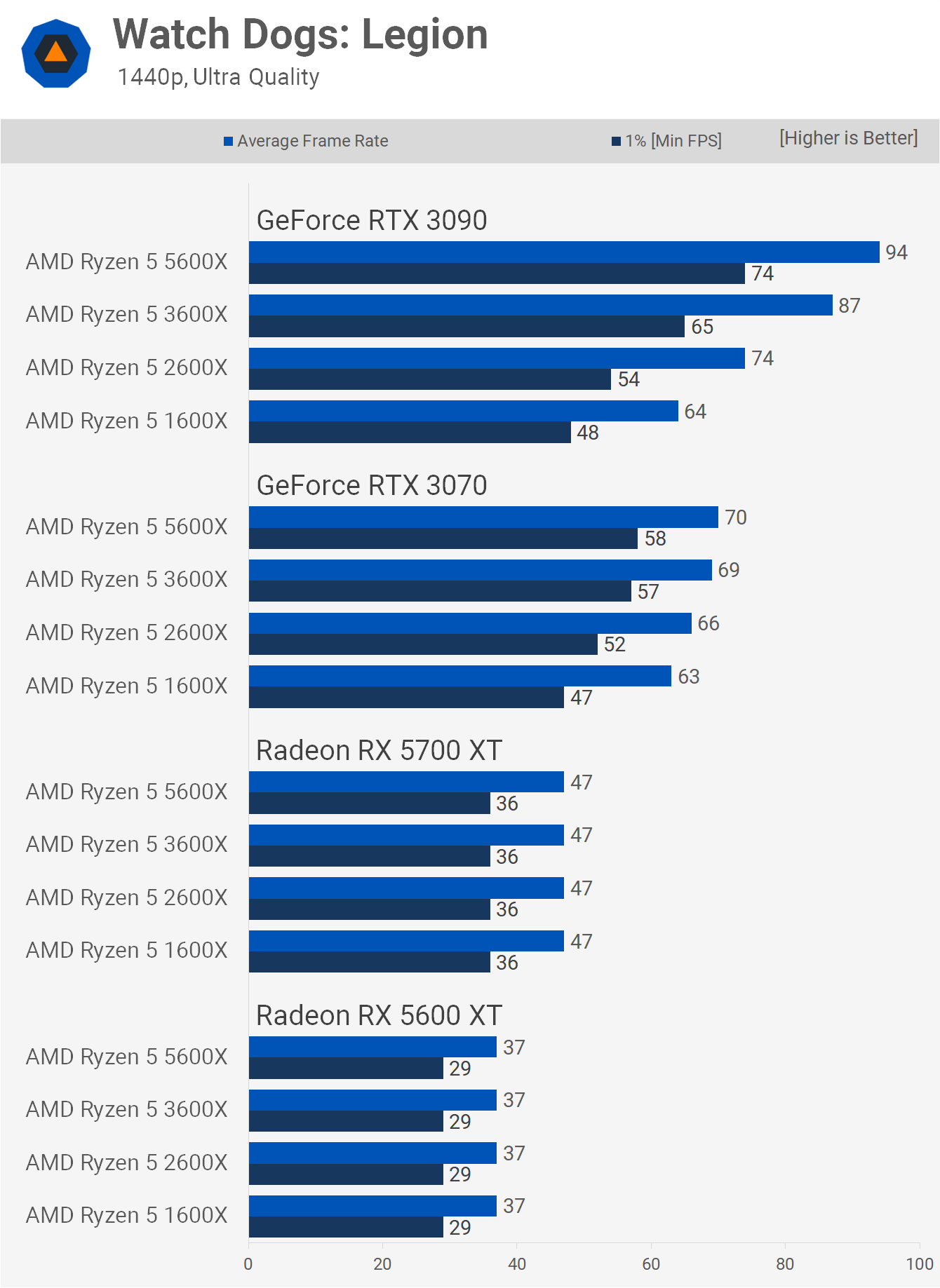

Increasing the resolution to 1440p with the ultra quality settings increased the GPU bottleneck with the RTX 3070 and here the 3600X and 5600X are basically identical in terms of performance. Even the 2600X wasn't far behind though the 5600X was up to 23% faster than the 1600X.

Then if we look at the RTX 3090 data we see that the 5600X was 14% faster than the 3600X when comparing the 1% low data, 37% faster than the 2600X and 54% faster than the 1600X.

Again the medium quality preset introduces some strange anomalies in the results, though we have seen this in other games under certain conditions. Here we're again seeing superior performance for the 1600X with the 5700 XT as opposed to what should be the faster RTX 3070 GPU.

Other than that the results look fairly typical, we're GPU limited with the 5600 XT and 5700 XT while we're CPU limited with the RTX 3070 for the 1600X, 2600X and 3600X.

Increasing the resolution to 4K with the ultra quality settings saw Watch Dogs Legion become almost entirely GPU bound – in fact it was with the RTX 3070 and slower. We saw some small variation in performance using the 3090, here the 5600X was up to 11% faster than the 1600X.

Then at 4K with the medium quality setting we find the margins open up with the RTX 3090, though the 3600X is able to closely match the 5600X.

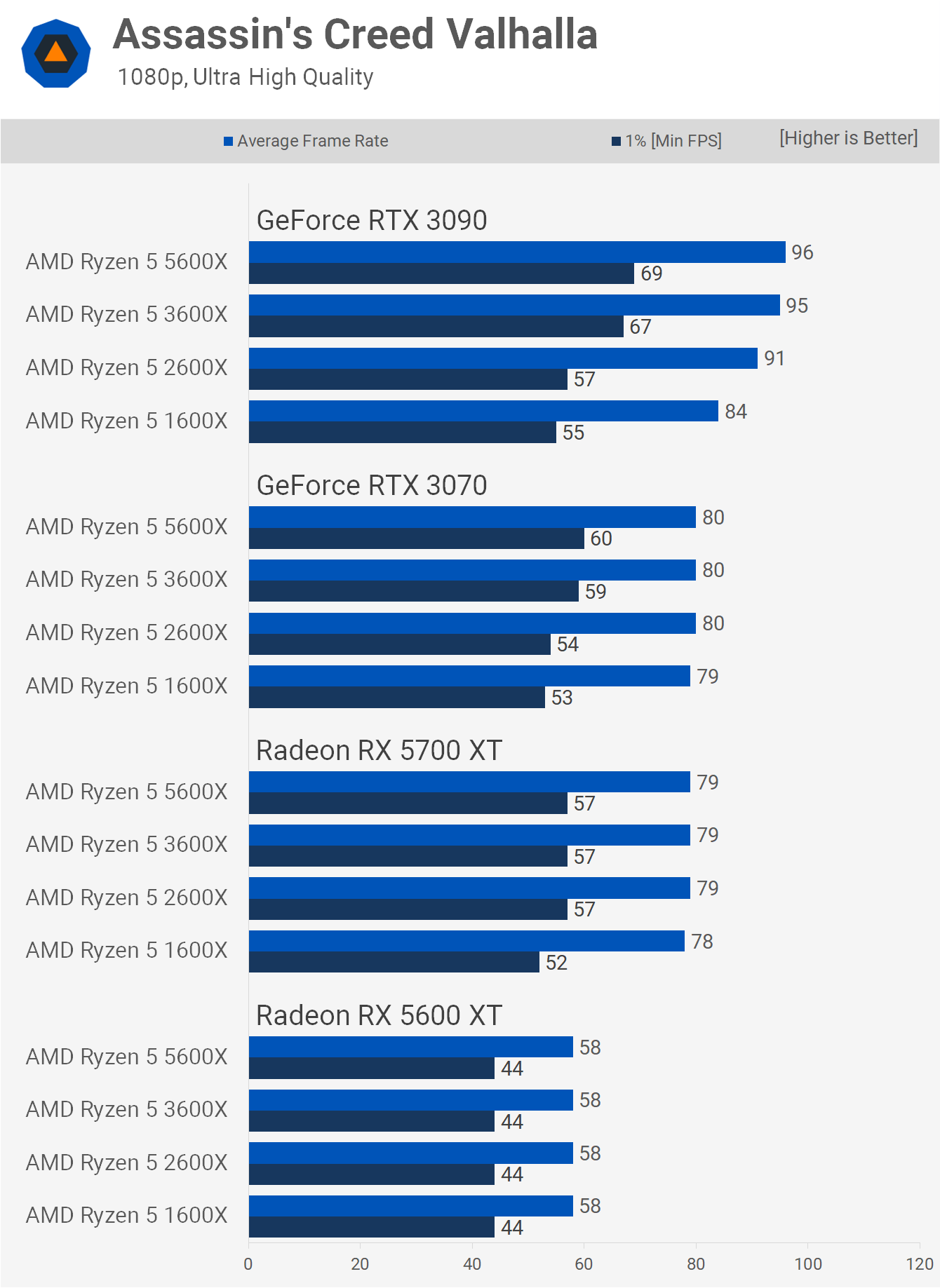

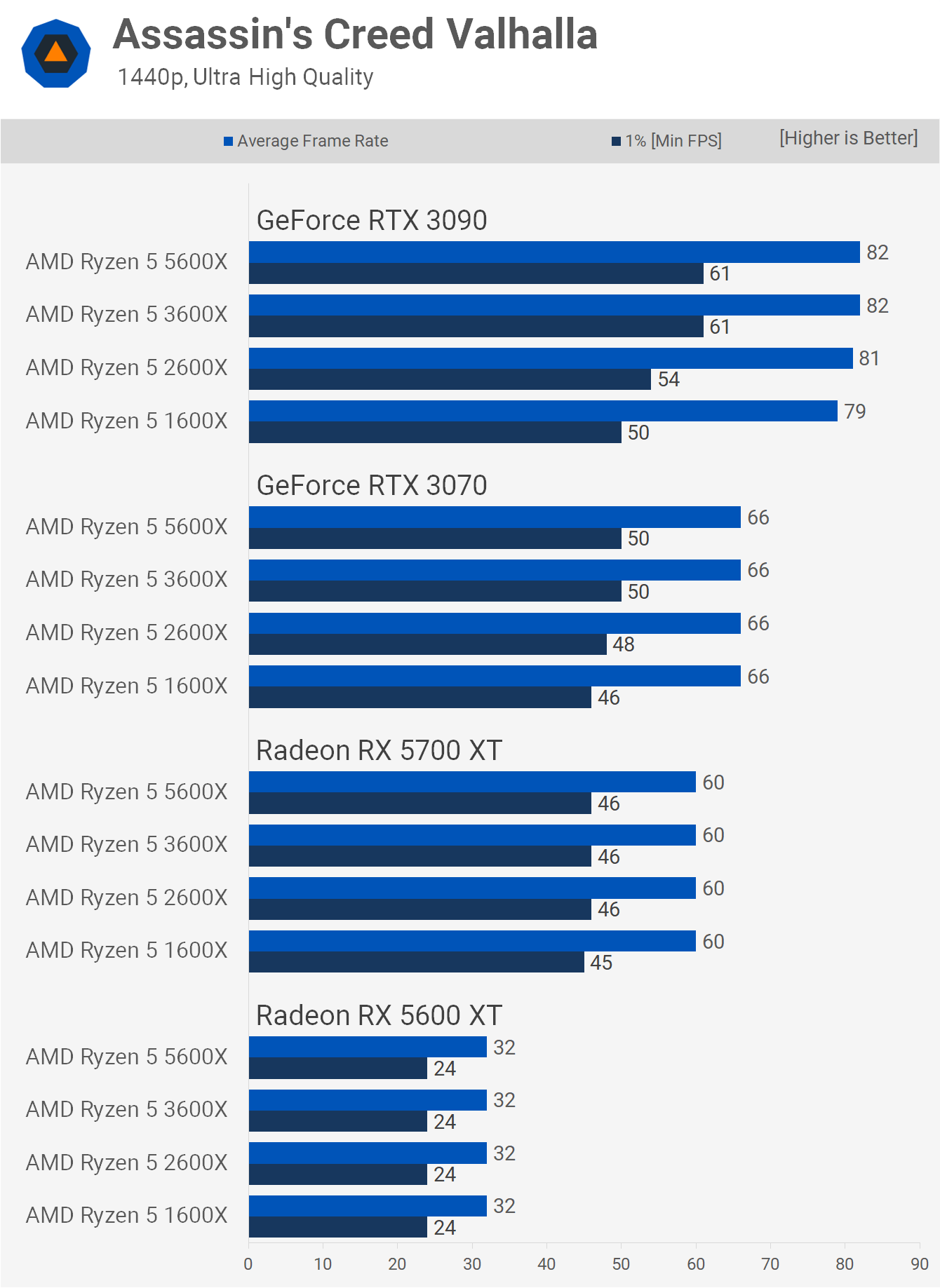

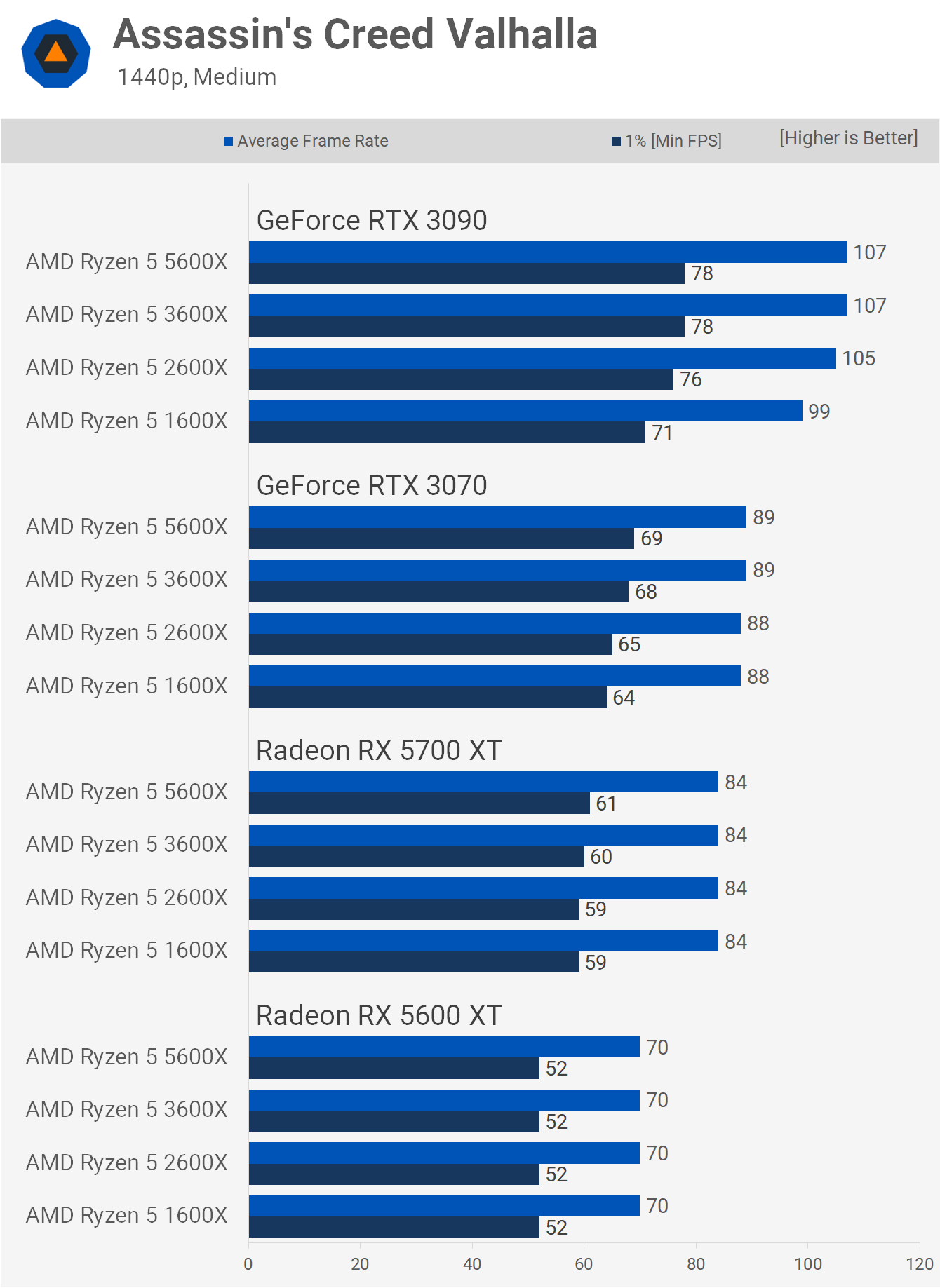

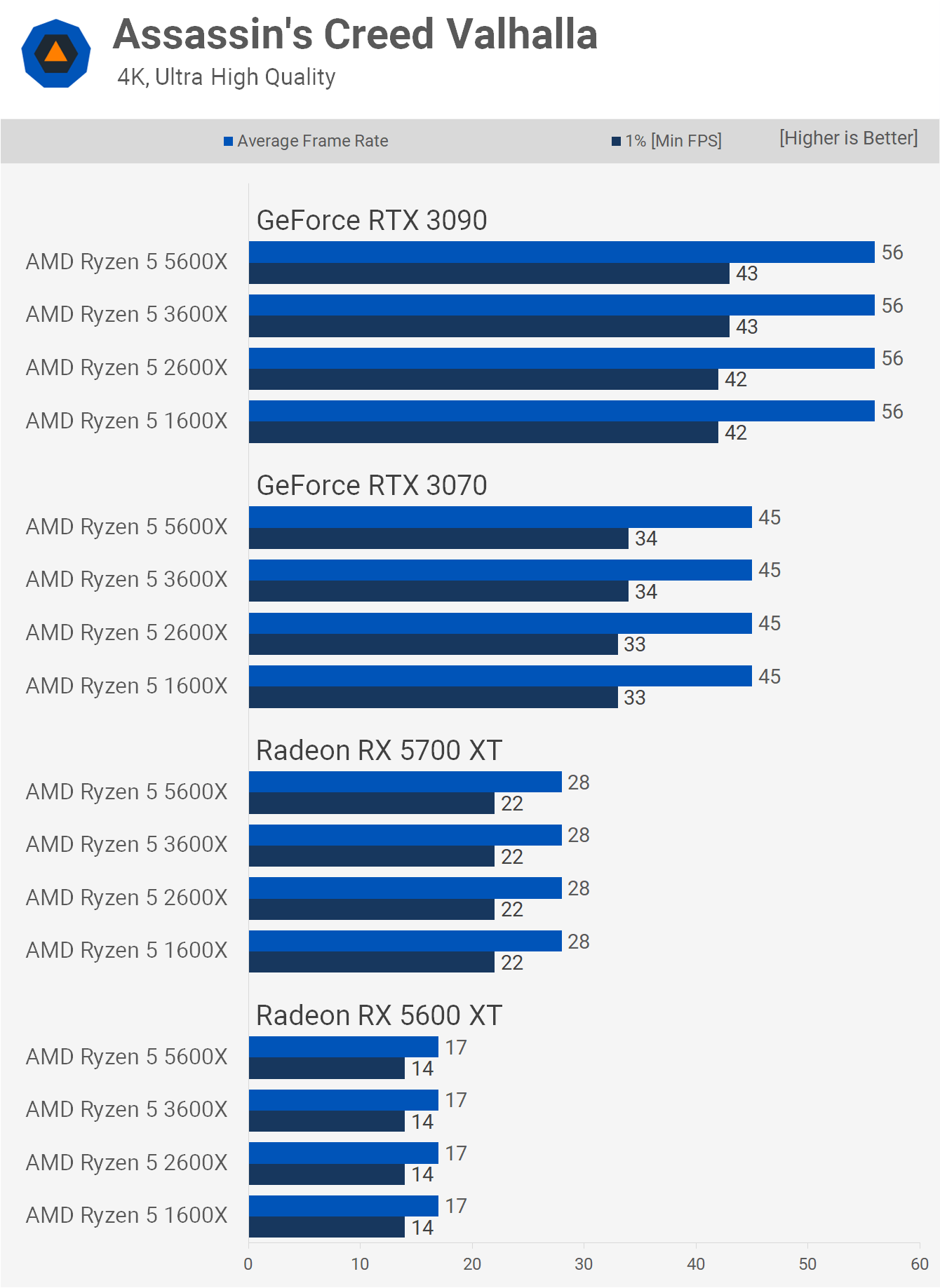

Assassin's Creed Valhalla

The last game we're going to look at is Assassin's Creed Valhalla. At 1080p using the ultra quality preset we find that the results are fairly heavily GPU limited with the 5600 XT, 5700 XT and RTX 3070. There is a small drop in 1% low performance for the 1600X and 2600X with the 3070, but other than that we are looking at heavily GPU limited results.

Then with the RTX 3090 the 5600X was up to 3% faster than the 3600X, 21% faster than the 2600X and 25% faster than the 1600X. It's worth noting that the 5700 XT is similar to the RTX 3070 in terms of performance due to the fact that this AMD sponsored title is highly optimized for Radeon GPUs.

The margins using the medium quality preset really aren't that different, again all four CPUs come close to maxing out the 5600 XT and 5700 XT while we see a small variation in performance using the RTX 3070. Then with the 3090 the 1600X and 2600X are limiting performance while the 3600X and 5600X appear to be maximising what the 3090 is capable of at this resolution.

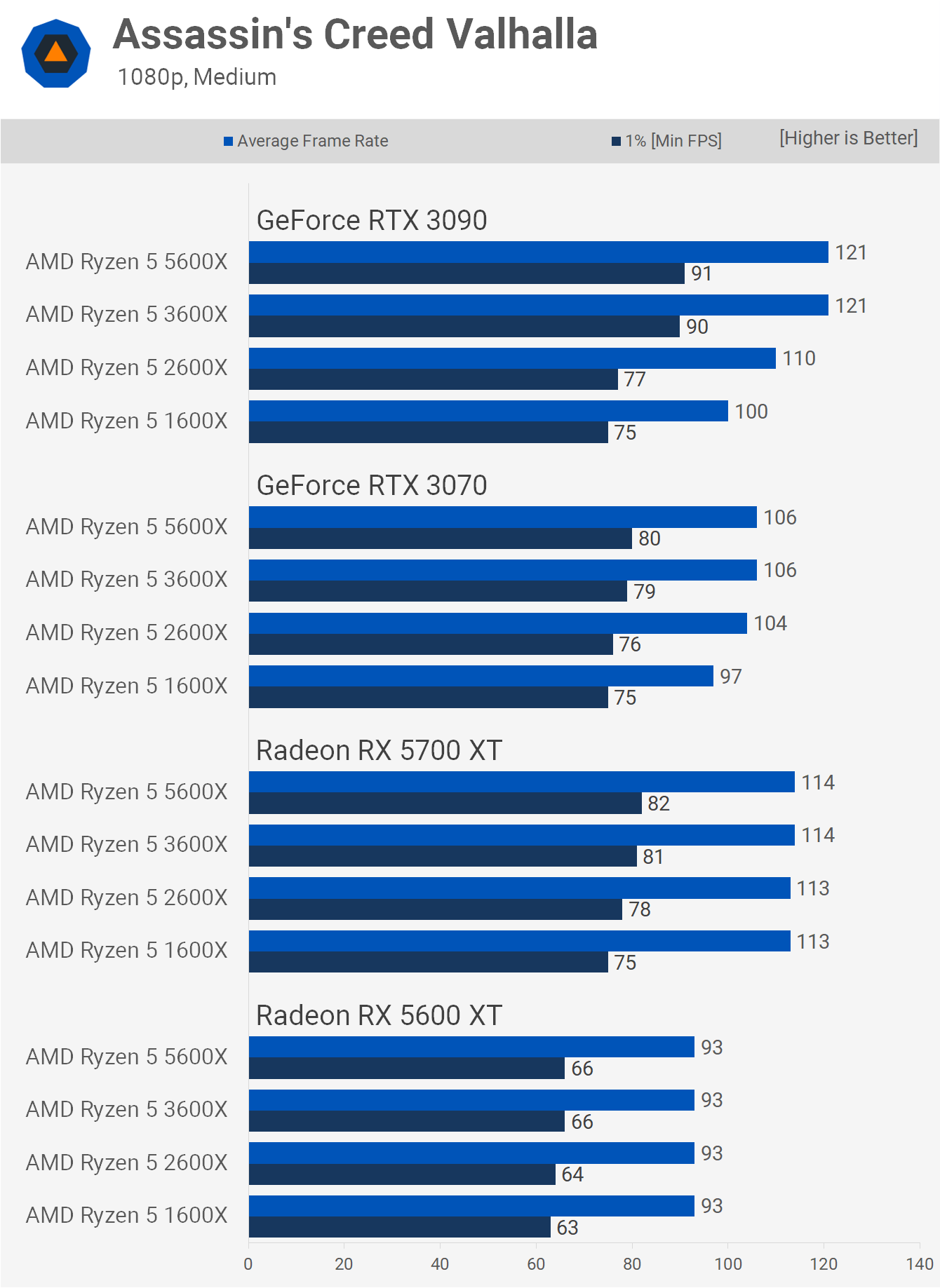

Jumping up to 1440p with ultra visual settings allows the RTX 3070 to pull ahead of the 5700 XT, though that's not really what we're interested in, rather it's the fact that all four CPUs are able to max out the 5600 XT, 5700 XT and RTX 3070. We find the limits of the 1600X and 2600X with the RTX 3090, particularly when looking at the 1% low performance.

Lowering the quality with the medium preset helps reduce CPU load and allows the 1600X and 2600X to deliver better, more consistent 1% low performance when paired with the RTX 3090. This isn't totally unexpected and we have seen this sort of behaviour a number of times in the past.

Finally at the 4K resolution the game became extremely GPU bound and this was the case for both the ultra and medium quality settings, so not much to say here.

Performance Summary

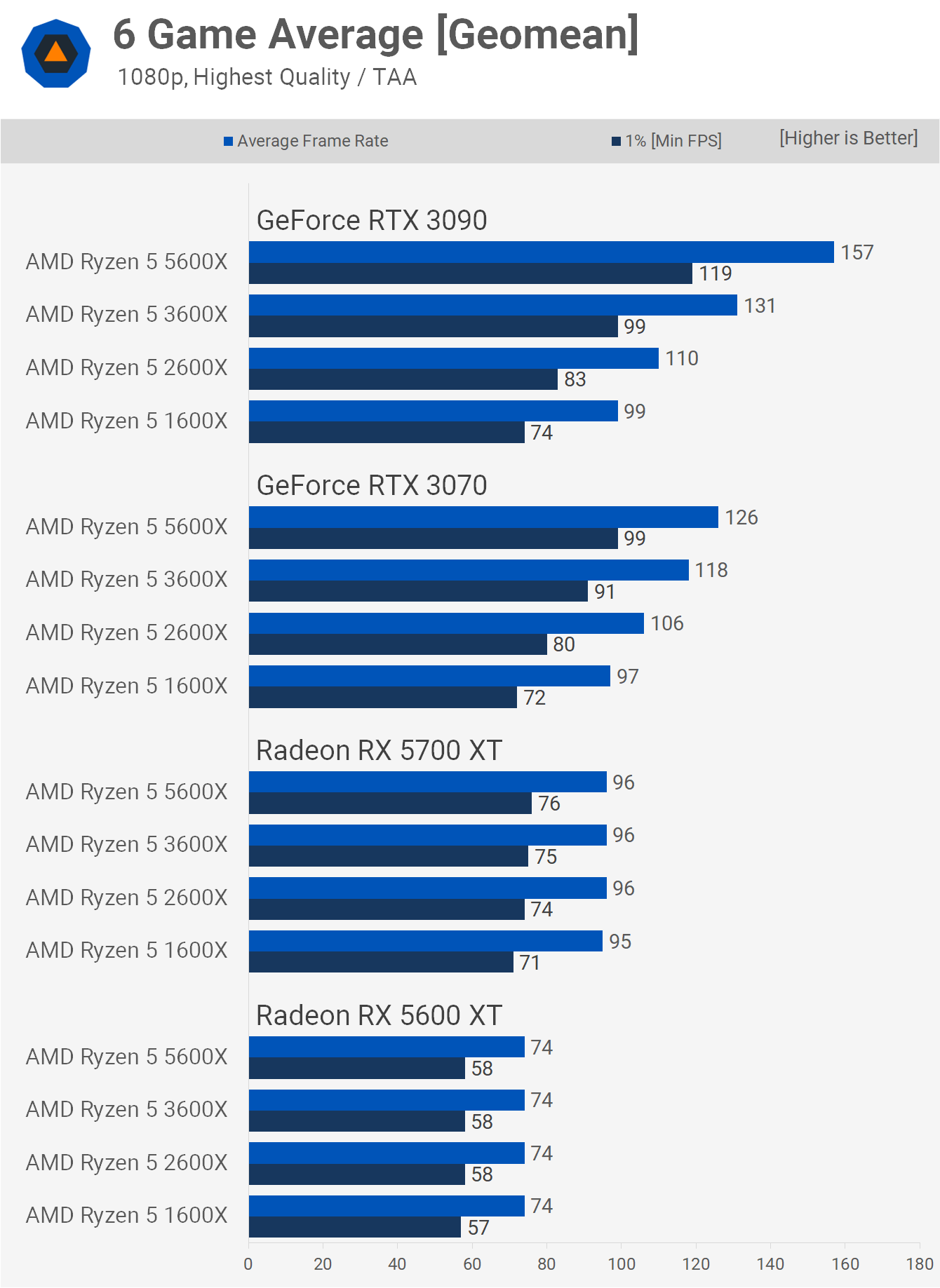

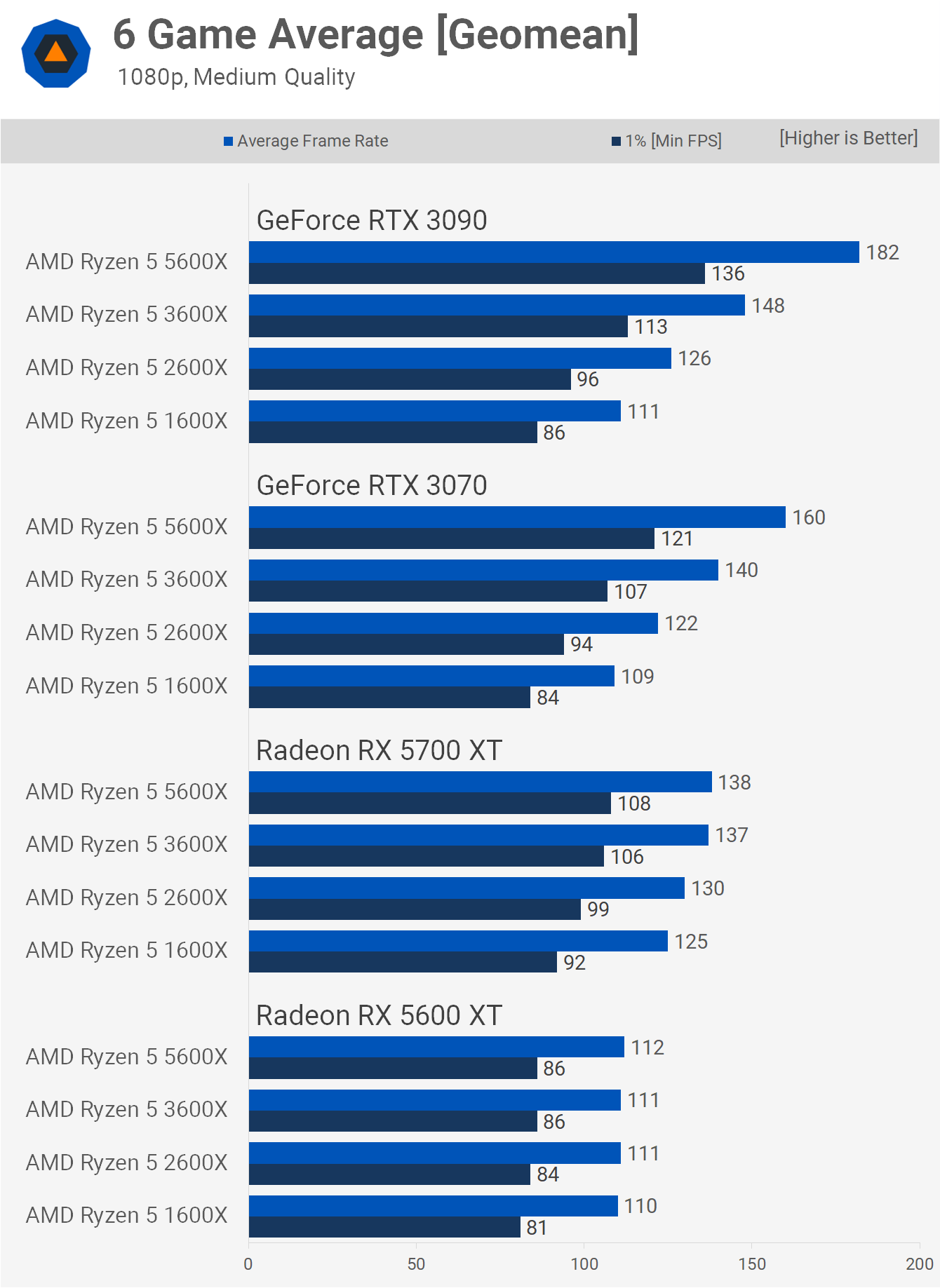

A lot of mixed data between resolutions and games, so let's move on to take a look at the 6 game averages.

The average 1080p ultra data looks pretty normal. On average across the half dozen games tested the four Ryzen 5 CPUs maxed out the 5600 XT and 5700 XT, with only a minor variation in 1% low data with the 5700 XT. Then as we upgrade to the faster RTX 3070 the 1600X typically saw no real performance improvement. The 2600X only saw a 10% boost while the 3600X became 23% faster and the 5600X 31% faster.

The margins widened further with the flagship RTX 3090, here the 5600X was on average 20% faster than the 3600X, 43% faster than the 2600X and 59% faster than the 1600X, that's basically double the margins seen with the RTX 3070.

With medium quality settings at 1080p we also see little to no difference between the various CPUs tested with the 5600 XT. The margins opened up with the 5700 XT and now the 5600X was up to 17% faster than the 1600X, though it was basically matched by the 3600X.

Then as we saw numerous times, the 1600X and 2600X go backwards in terms of fps performance when moving to the RTX 3070, in this case the average frame rate dropped by 15% for the 1600X and 7% for the 2600X. The 1600X, 2600X and even the 3600X see a small extra boost when upgrading from the RTX 3070 to the 3090, just a 6% uptick in the case of the 3600X while the 5600X gained a further 14% performance.

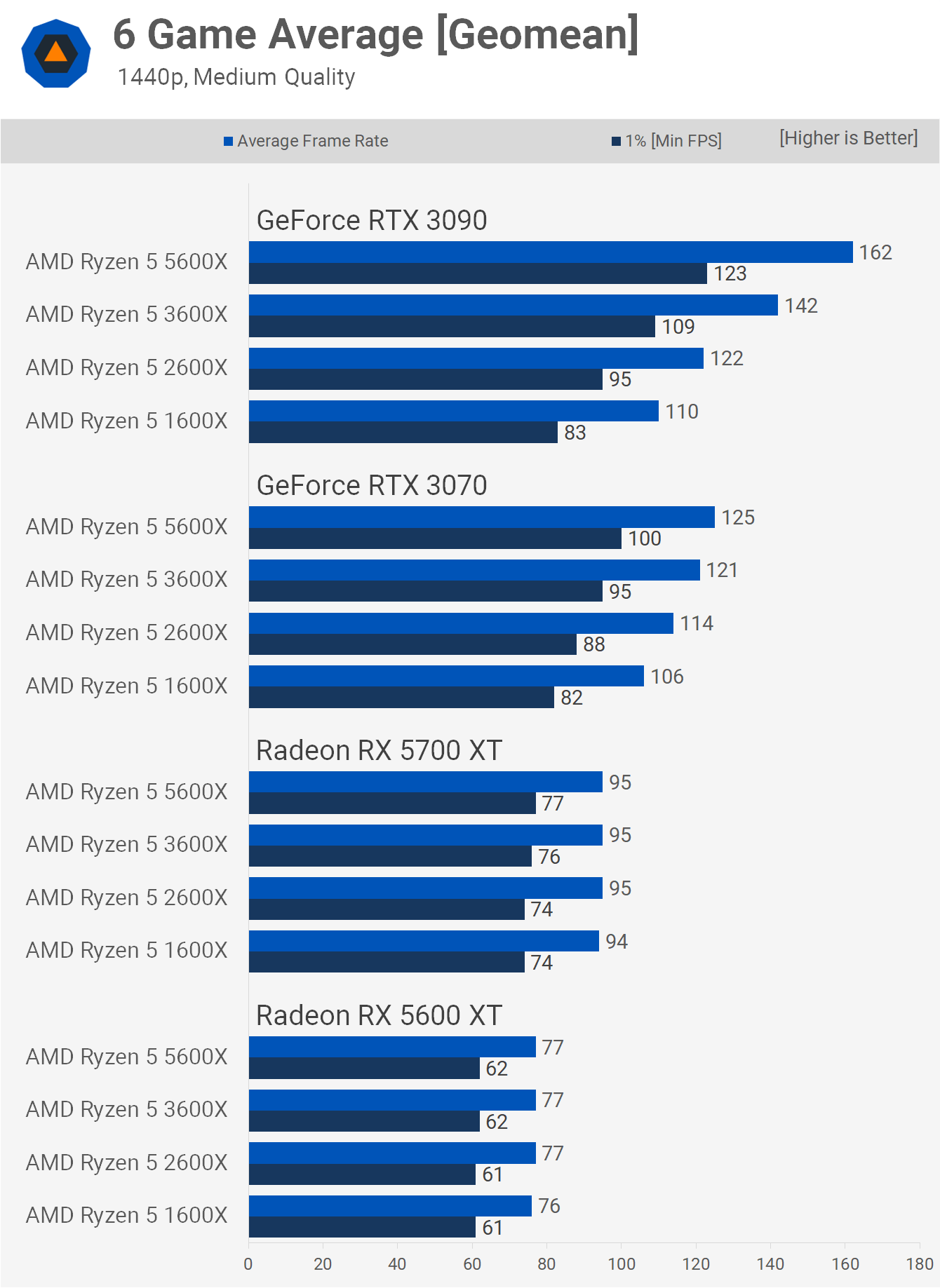

Now at 1440p using the ultra quality settings we find that all four CPUs were able to max out the 5600 XT and 5700 XT, while we see up to a 15% increase in performance from the 1600X to the 5600X with the RTX 3070. Of course, the real margins are seen with the RTX 3090 where the 5600X was on average up to 9% faster than the 3600X, 23% faster than the 2600X, and 38% faster than the 1600X.

The 1440p medium quality results are fairly similar to what we just saw though CPU bottlenecking with the RTX 3070 is more evident here.

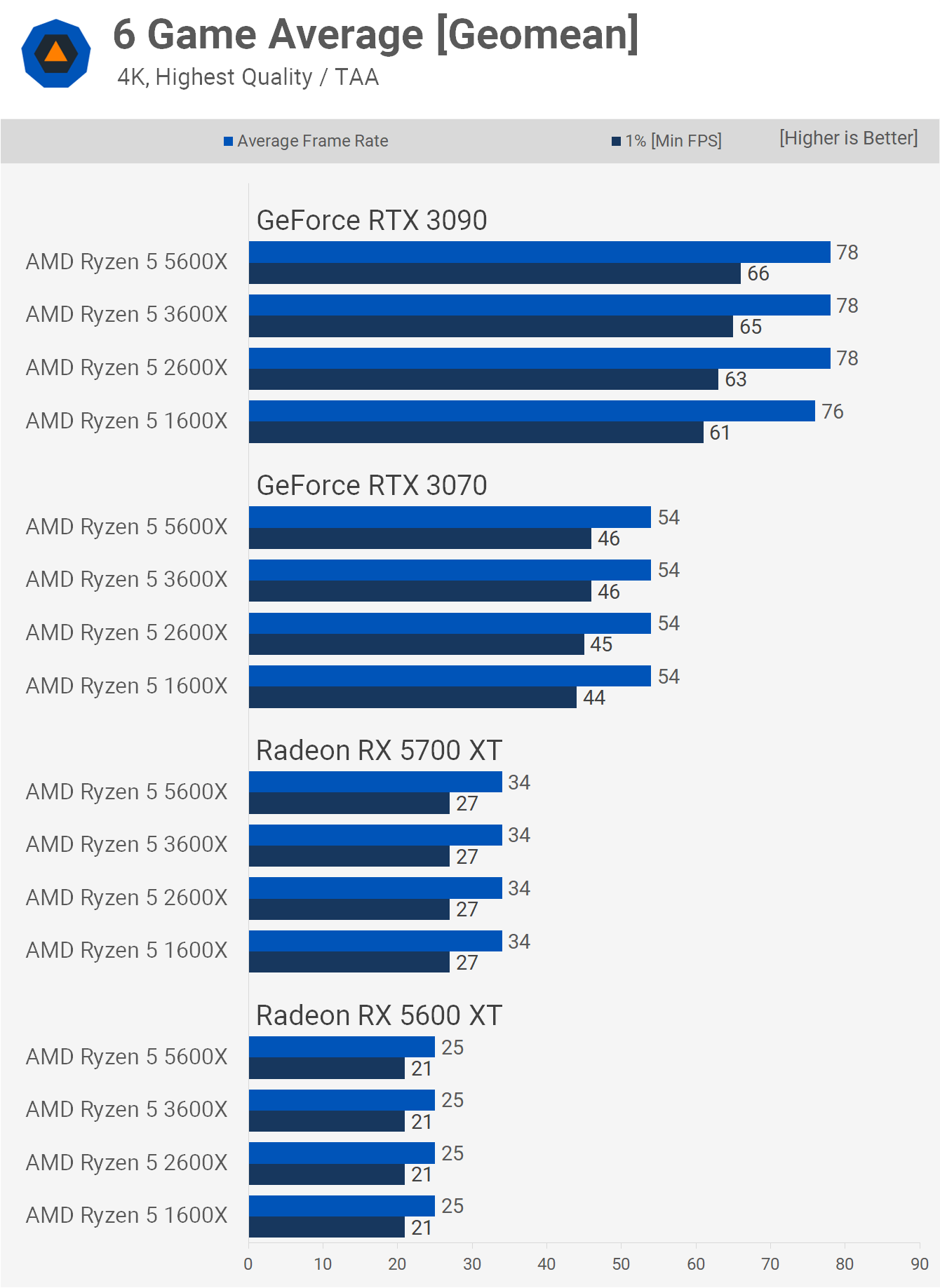

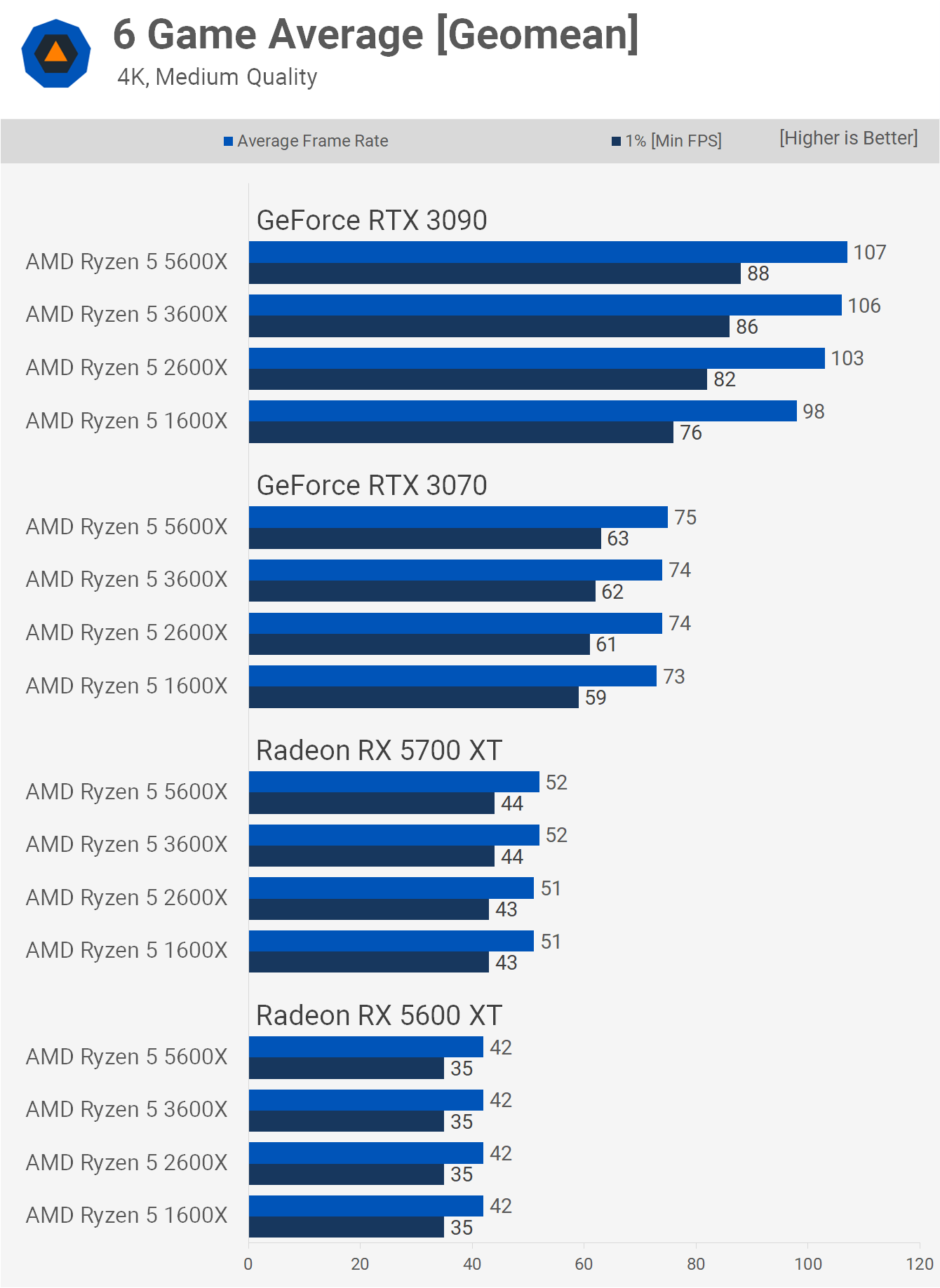

Then as we saw time and time again, the 4K ultra performance is similar across the board with only small margins for the 1% low performance seen when using the RTX 3090. Those margins opened up a little bit using the medium quality settings though if you're running an RTX 3090 chances are you won't be doing so with a 1st or 2nd generation Ryzen processor.

What We Learned

That was an incredible amount of data and we've got to admit we're walking away from this one with more questions than answers. We want to retest a few of these games with the 6900 XT, RX 6800 and RTX 2080 Ti while also including a few Intel CPUs for reference.

As for what we did learn here, there are a few takeaways.

First, while a massive step forward for AMD, Zen wasn't the most efficient architecture for gaming and while that won't necessarily be news to any of you it was interesting to see how it compared against Zen+, Zen 2 and Zen 3 across a range of settings and GPU hardware.

We know for example that the Ryzen 5 1600X has aged better than the Core i5-7600K, so it certainly wasn't a bad buy and if you're somewhat realistic about the hardware configuration, a part like the 1600X is still respectable even today. Of course, you can test it with an extreme GPU like the RTX 3090 and claim it's pathetic as the R5 5600X is often over 50% faster, but pair it with a more reasonable budget combo like the 5600 XT or even the 5700 XT and it does quite well.

Testing with a latest generation GPU like the RTX 3090 does help to highlight just how far AMD has come with Ryzen in a few years. Zen+ which is only a refined version of Zen, provides on average a 14% boost in gaming performance when CPU limited. Then from Zen+ to Zen 2 we see a larger 17% uptick and the most recent update (Zen 3) provided a further 23% performance uplift.
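Generational gains like these compound rather than add. As a quick sketch of the arithmetic, using only the three averages quoted above (the dictionary labels are our own), the cumulative Zen to Zen 3 uplift works out as follows:

```python
from math import prod

# Average CPU-limited uplift per generation, as quoted in the text.
gen_uplift = {
    "Zen -> Zen+": 0.14,
    "Zen+ -> Zen 2": 0.17,
    "Zen 2 -> Zen 3": 0.23,
}

# Multiply the per-generation speedups, then subtract 1 to get the total gain.
cumulative = prod(1.0 + uplift for uplift in gen_uplift.values()) - 1.0
naive_sum = sum(gen_uplift.values())

print(f"compounded: {cumulative:.0%}, simple sum: {naive_sum:.0%}")
```

The compounded figure comes out higher than the simple sum of the three percentages. It is an average-based estimate, so it will not necessarily match the 1600X to 5600X margin in any individual chart.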

Without question the gaming performance of the AMD Zen architecture has improved by leaps and bounds, though you won't always see big performance gains when upgrading your CPU because as we just saw you need the right GPU to unleash the additional processing power. So if you're currently using an RTX 2060 or 5600 XT (or perhaps something slower), and your current CPU is a Ryzen 1600X or 2600X, then upgrading to a Core i5-10600K or Ryzen 5 5600X, for example, won't net you much additional performance.

This kind of assumption will always depend on the games you play and settings – what's optimal for you might not be optimal for a different gamer – but the rule will apply when you are striking a balanced choice of CPU and GPU.

We should also quickly discuss core counts, since that came up a lot in our previous CPU/GPU scaling feature which focused on Zen 3 parts. We want to reiterate what we said in our Ryzen 5 5600X review, and that is that gamers shouldn't focus on CPU core count and the only real consideration should be CPU performance and how much CPU performance you actually need.

For gaming all you need from the Zen 3 range is the 6-core/12-thread model right now, but that's not to generalize by saying any 6-core/12-thread processor is all you need for gaming because as we've seen here, 6-core/12-thread performance can mean just about anything with the 5600X up to 100% faster than the 1600X in the most extreme scenarios, so just focus on CPU performance and get over this core count obsession for gaming.

There's plenty more testing to be done and we're keen to add Intel's upcoming 11th-gen Core series to the mix shortly. If you enjoyed this feature, you can say thanks to Steve who dedicated an insane amount of time to this testing.