We all think of the CPU as the "brains" of a computer, but what does that actually mean? What are its billions of transistors doing to make your computer work? In this four-part mini-series we'll be focusing on computer hardware design, covering the ins and outs of what makes a computer work.

The series will cover computer architecture, processor circuit design, VLSI (very-large-scale integration), chip fabrication, and future trends in computing. If you've always been interested in the details of how processors work on the inside, stick around because this is what you want to know to get started.

Part 1: Computer Architecture Fundamentals

(instruction set architectures, caching, pipelines, hyperthreading)

Part 2: CPU Design Process

(schematics, transistors, logic gates, clocking)

Part 3: Laying Out and Physically Building the Chip

(VLSI and silicon fabrication)

Part 4: Current Trends and Future Hot Topics in Computer Architecture

(Sea of Accelerators, 3D integration, FPGAs, Near Memory Computing)

We'll start with a very high-level look at what a processor does and how the building blocks come together in a functioning design. This includes processor cores, the memory hierarchy, branch prediction, and more. First, we need a basic definition of what a CPU does. The simplest explanation is that a CPU follows a set of instructions to perform some operation on a set of inputs. For example, this could be reading a value from memory, then adding it to another value, and finally storing the result back to memory in a different location. It could also be something more complex like dividing two numbers if the result of the previous calculation was greater than zero.

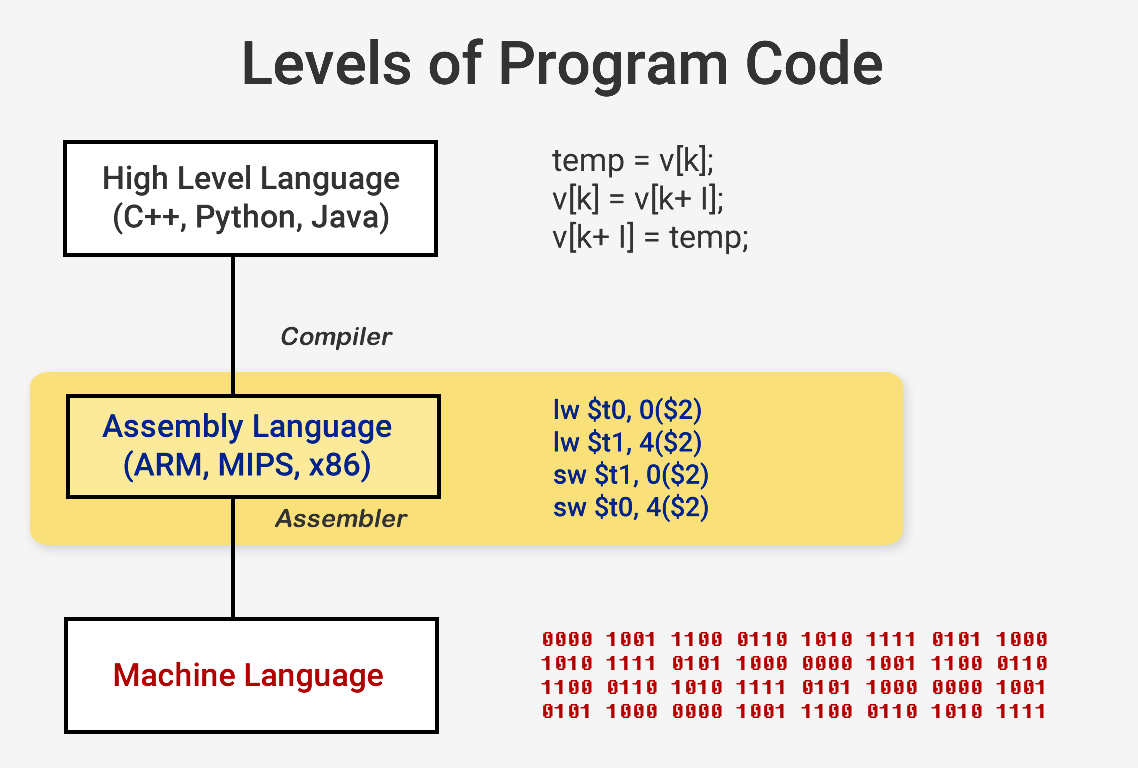

When you want to run a program like an operating system or a game, the program itself is a series of instructions for the CPU to execute. These instructions are loaded from memory and, on a simple processor, executed one by one until the program is finished. Software developers write their programs in high-level languages like C++ or Python, but the processor can't understand those directly. It only understands 1s and 0s, so we need a way to represent code in this format.

Programs are ultimately translated into low-level machine instructions defined by an Instruction Set Architecture (ISA); assembly language is the human-readable form of those instructions. The ISA is the set of instructions that the CPU is built to understand and execute. Some of the most common ISAs are x86, MIPS, ARM, RISC-V, and PowerPC. Just like the syntax for writing a function in C++ is different from a function that does the same thing in Python, each ISA has a different syntax.

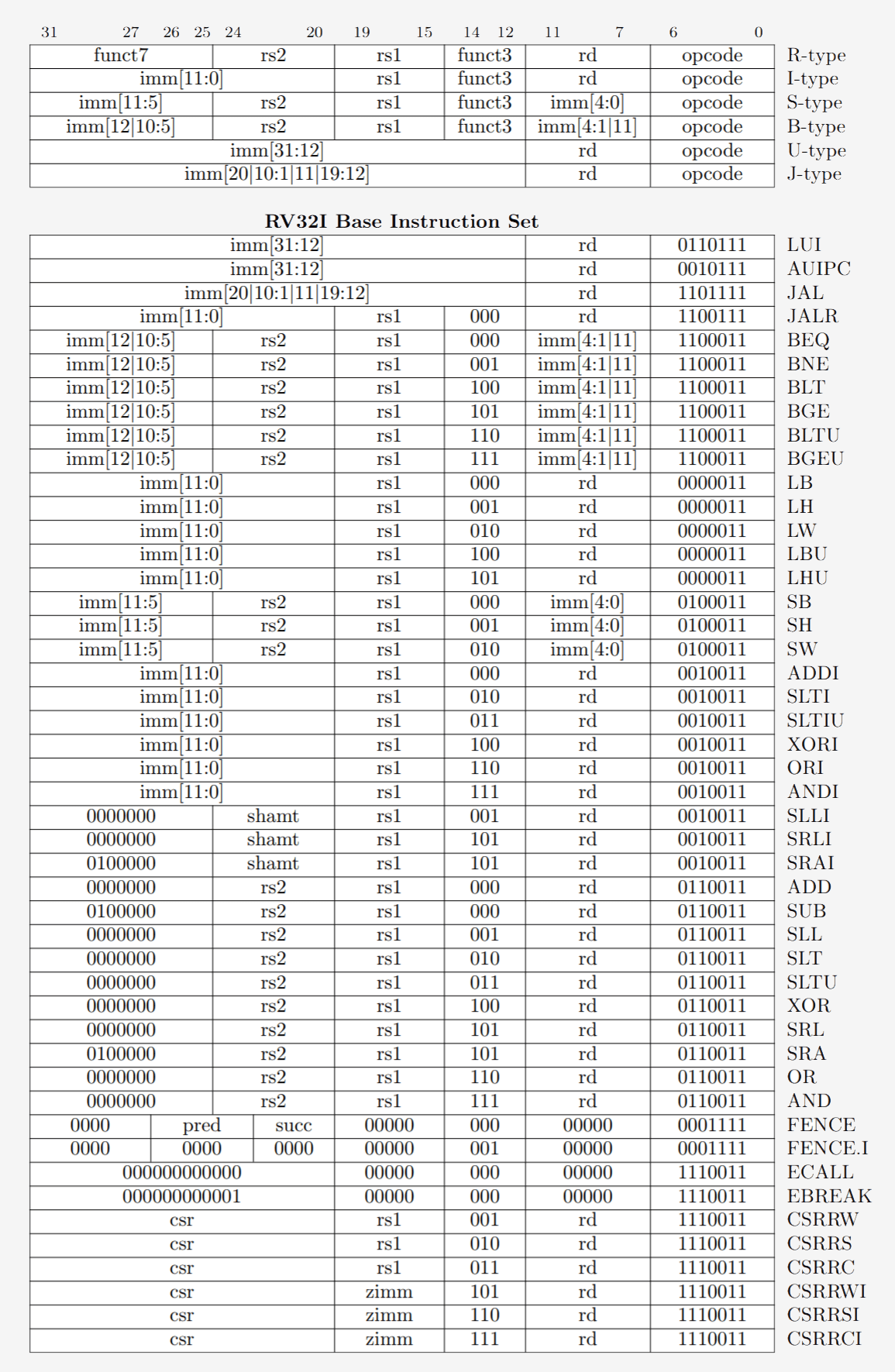

These ISAs can be broken up into two main categories: fixed-length and variable-length. The RISC-V ISA uses fixed-length instructions, which means every instruction has the same size (32 bits in the base ISA) and a predefined set of bits in each instruction determines what type of instruction it is. This is different from x86, which uses variable-length instructions. In x86, instructions can be encoded in different ways and with different numbers of bits for different parts. Because of this complexity, the instruction decoder in x86 CPUs is typically one of the most complex parts of the whole design.
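
To make the fixed-length idea concrete, here is a minimal Python sketch that decodes a RISC-V "R-type" (register-register) instruction. The field positions follow the base RV32I R-type format; the function names `decode_r_type` and `name_of` are hypothetical, and the lookup table only recognizes a handful of ALU operations rather than the full ISA.

```python
# Field positions of the RISC-V base "R-type" instruction format (32 bits wide).
# Because every field sits at a fixed bit offset, decoding is just shifts and masks.

def decode_r_type(word: int) -> dict:
    """Split a 32-bit RISC-V R-type instruction into its fields."""
    return {
        "opcode": word & 0x7F,          # bits 0-6: which instruction family
        "rd":     (word >> 7) & 0x1F,   # bits 7-11: destination register
        "funct3": (word >> 12) & 0x7,   # bits 12-14: operation sub-selector
        "rs1":    (word >> 15) & 0x1F,  # bits 15-19: first source register
        "rs2":    (word >> 20) & 0x1F,  # bits 20-24: second source register
        "funct7": (word >> 25) & 0x7F,  # bits 25-31: further sub-selector
    }

def name_of(fields: dict) -> str:
    """Identify a few RV32I register-register ALU ops (opcode 0x33 = OP)."""
    if fields["opcode"] != 0x33:
        return "not an R-type ALU op"
    table = {(0, 0x00): "add", (0, 0x20): "sub", (7, 0x00): "and", (6, 0x00): "or"}
    return table.get((fields["funct3"], fields["funct7"]), "other")

word = 0x002081B3  # encoding of: add x3, x1, x2
f = decode_r_type(word)
print(name_of(f), f"x{f['rd']}, x{f['rs1']}, x{f['rs2']}")  # add x3, x1, x2
```

Because every field sits at the same bit offset in every instruction of this format, the decoder needs only shifts and masks. An x86 decoder cannot work this way, since it first has to figure out where each instruction ends.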

Fixed-length instructions allow for easier decoding due to their regular structure, but limit the number of total instructions that an ISA can support. While the common versions of the RISC-V architecture have about 100 instructions and are open-source, x86 is proprietary and nobody really knows how many instructions there are. People generally believe there are a few thousand x86 instructions but the exact number isn't public. Despite differences among the ISAs, they all carry essentially the same core functionality.

Now we are ready to turn our computer on and start running stuff. Executing an instruction involves several basic steps, and these are spread across the many stages of a processor.

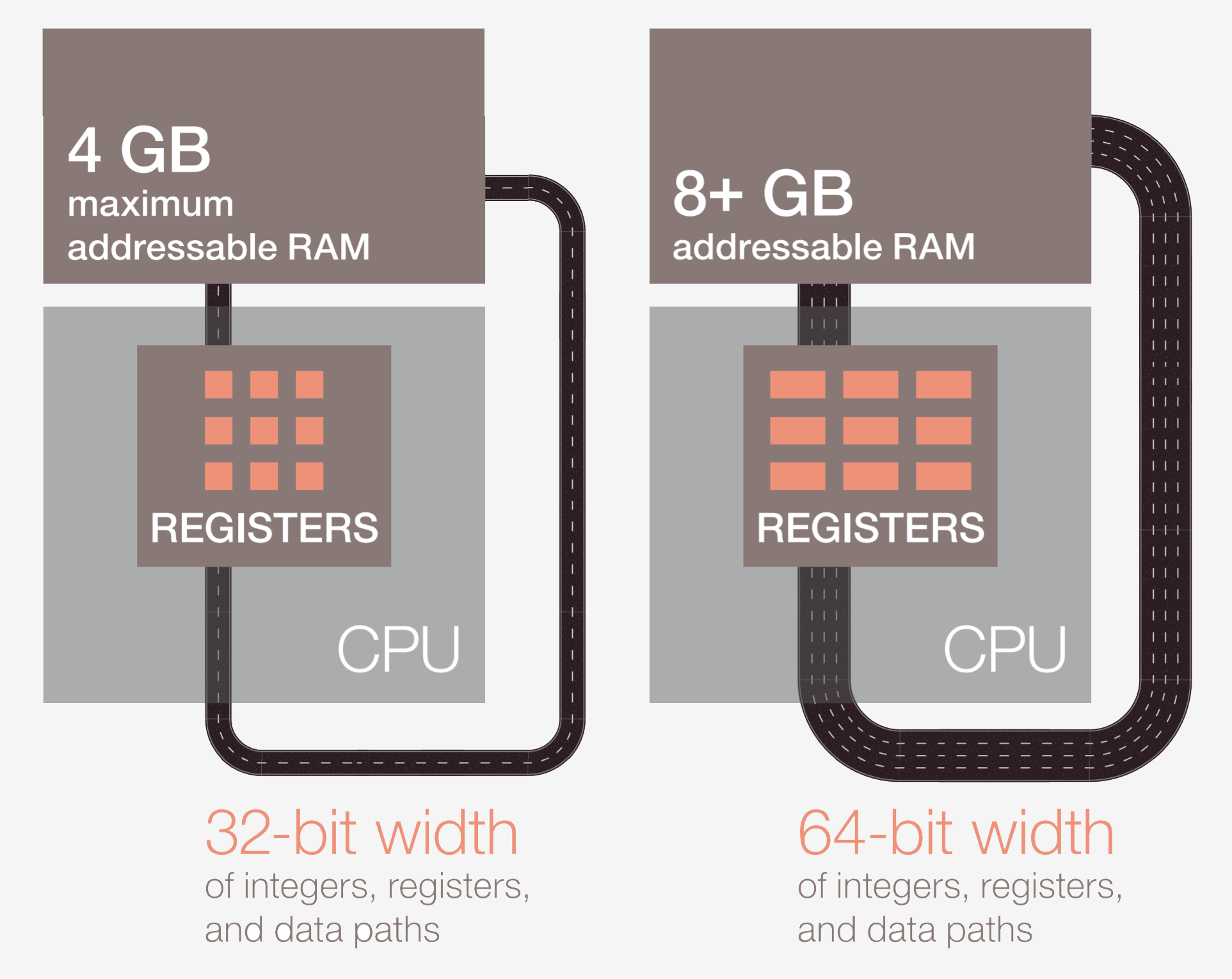

The first step is to fetch the instruction from memory into the CPU to begin execution. In the second step, the instruction is decoded so the CPU can figure out what type of instruction it is. There are many types, including arithmetic instructions, branch instructions, and memory instructions. Once the CPU knows what type of instruction it is executing, the operands for the instruction are collected from memory or internal registers in the CPU. If you want to add number A to number B, you can't do the addition until you actually know the values of A and B. Most modern processors are 64-bit, which means that their general-purpose registers, and the memory addresses they work with, are 64 bits wide.

After the CPU has the operands for the instruction, it moves to the execute stage where the operation is done on the input. This could be adding the numbers, performing a logical manipulation on the numbers, or just passing the numbers through without modifying them. After the result is calculated, memory may need to be accessed to store the result or the CPU could just keep the value in one of its internal registers. After the result is stored, the CPU will update the state of various elements and move on to the next instruction.
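
The steps above can be sketched as a loop. This is a hypothetical toy machine, not a real ISA: instructions are Python tuples, there are only four registers, and `LOAD`, `ADD`, `STORE`, and `HALT` are made-up opcodes. It runs the earlier example of reading values from memory, adding them, and storing the result at a different location, and it masks additions to 64 bits to mimic 64-bit registers.

```python
# A toy, hypothetical instruction set used only to illustrate the
# fetch -> decode -> read operands -> execute -> write back -> next loop.
MASK64 = (1 << 64) - 1  # registers hold 64-bit values, like a 64-bit CPU

def run(program, memory):
    regs = [0] * 4      # a tiny register file
    pc = 0              # program counter: index of the next instruction
    while True:
        instr = program[pc]          # 1. fetch
        op, *args = instr            # 2. decode: what kind of instruction?
        if op == "HALT":
            return regs, memory
        if op == "LOAD":             # memory instruction: reg <- memory[addr]
            rd, addr = args
            regs[rd] = memory[addr]
        elif op == "ADD":            # arithmetic instruction
            rd, rs1, rs2 = args
            a, b = regs[rs1], regs[rs2]        # 3. collect operands
            regs[rd] = (a + b) & MASK64        # 4. execute (wraps at 64 bits)
        elif op == "STORE":          # 5. write the result back to memory
            rs, addr = args
            memory[addr] = regs[rs]
        else:
            raise ValueError(f"unknown instruction {op!r}")
        pc += 1                      # 6. move on to the next instruction

# Read two values, add them, and store the sum at a different address.
program = [("LOAD", 0, 0), ("LOAD", 1, 1), ("ADD", 2, 0, 1), ("STORE", 2, 2), ("HALT",)]
regs, mem = run(program, [5, 7, 0])
print(mem)  # [5, 7, 12]
```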

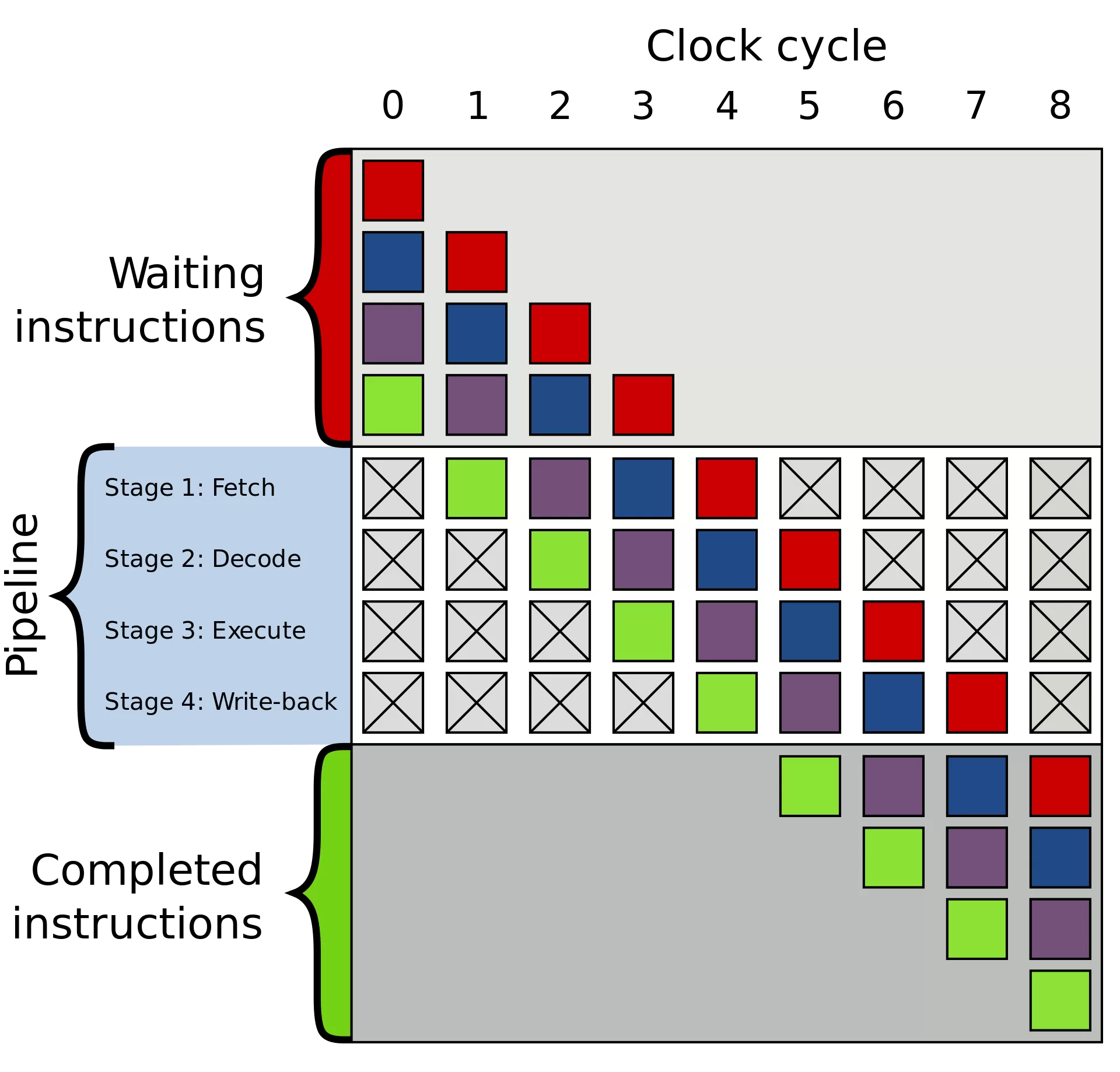

This description is, of course, a huge simplification, and most modern processors break these few stages up into many smaller stages, often around 15 to 20, to improve efficiency. That means that although the processor may start and finish several instructions each cycle, it can take that many cycles for any one instruction to complete from start to finish. This model is called a pipeline by analogy with a water pipe: it takes a while for liquid to travel all the way through, but once the pipe is full, you get a constant output.
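
A quick back-of-the-envelope model shows why pipelining pays off. This sketch assumes an idealized single-issue pipeline with 20 stages, where one new instruction enters every cycle and nothing ever stalls; real pipelines stall on dependencies, cache misses, and mispredicted branches. Both function names are hypothetical.

```python
def pipelined_cycles(n_instructions: int, n_stages: int) -> int:
    """Cycles for an ideal pipeline: one instruction enters per cycle, no stalls."""
    if n_instructions == 0:
        return 0
    # The first instruction needs n_stages cycles to fill the pipeline;
    # after that, one instruction completes every cycle.
    return n_stages + (n_instructions - 1)

def unpipelined_cycles(n_instructions: int, n_stages: int) -> int:
    """Cycles if each instruction must finish all stages before the next starts."""
    return n_instructions * n_stages

for n in (1, 10, 1000):
    print(n, unpipelined_cycles(n, 20), pipelined_cycles(n, 20))
# 1 20 20
# 10 200 29
# 1000 20000 1019
```

Each instruction still takes 20 cycles from start to finish, but once the pipeline is full, one instruction completes per cycle, so throughput approaches 20 times that of the unpipelined design.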

The whole cycle that an instruction goes through is a tightly choreographed process, but not all instructions take the same amount of time. For example, addition is very fast, while division may take tens of cycles and loading from memory may take hundreds. Rather than stalling the entire processor while one slow instruction finishes, most modern processors execute out of order. That means they determine which instructions can usefully be executed at a given time and buffer other instructions that aren't ready. If the current instruction isn't ready yet, the processor may look further ahead in the code to see if anything else is ready.
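
The benefit of out-of-order execution can be illustrated with a toy scheduler. In this sketch (the `simulate` function and the latencies are illustrative assumptions), each instruction has a latency and a list of earlier instructions it depends on, and at most one instruction can start per cycle. An in-order core must wait for the slow load before touching anything after it, while the out-of-order version fills the wait with independent work.

```python
def simulate(instrs, out_of_order: bool) -> int:
    """Return the cycle on which the last instruction's result is ready.

    instrs: list of (latency, deps), where deps lists the indices of earlier
    instructions whose results this one needs. At most one instruction issues
    per cycle, and only once all of its inputs are ready. In-order issue must
    take the oldest waiting instruction; out-of-order issue may pick the
    oldest *ready* one instead.
    """
    ready_at = {}                      # index -> cycle its result is available
    waiting = list(range(len(instrs)))
    cycle = 0
    while waiting:
        candidates = waiting if out_of_order else waiting[:1]
        for i in candidates:
            latency, deps = instrs[i]
            if all(d in ready_at and ready_at[d] <= cycle for d in deps):
                ready_at[i] = cycle + latency
                waiting.remove(i)      # safe: we stop scanning right after
                break
        cycle += 1
    return max(ready_at.values(), default=0)

# One slow load (100 cycles), one add that needs its result, and 50 unrelated adds.
program = [(100, [])] + [(1, [0])] + [(1, [])] * 50
print("in-order:    ", simulate(program, out_of_order=False))  # 151
print("out-of-order:", simulate(program, out_of_order=True))   # 101
```

A real out-of-order core still commits results in program order, using structures such as the reorder buffer, and can start several instructions per cycle; this sketch ignores both.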

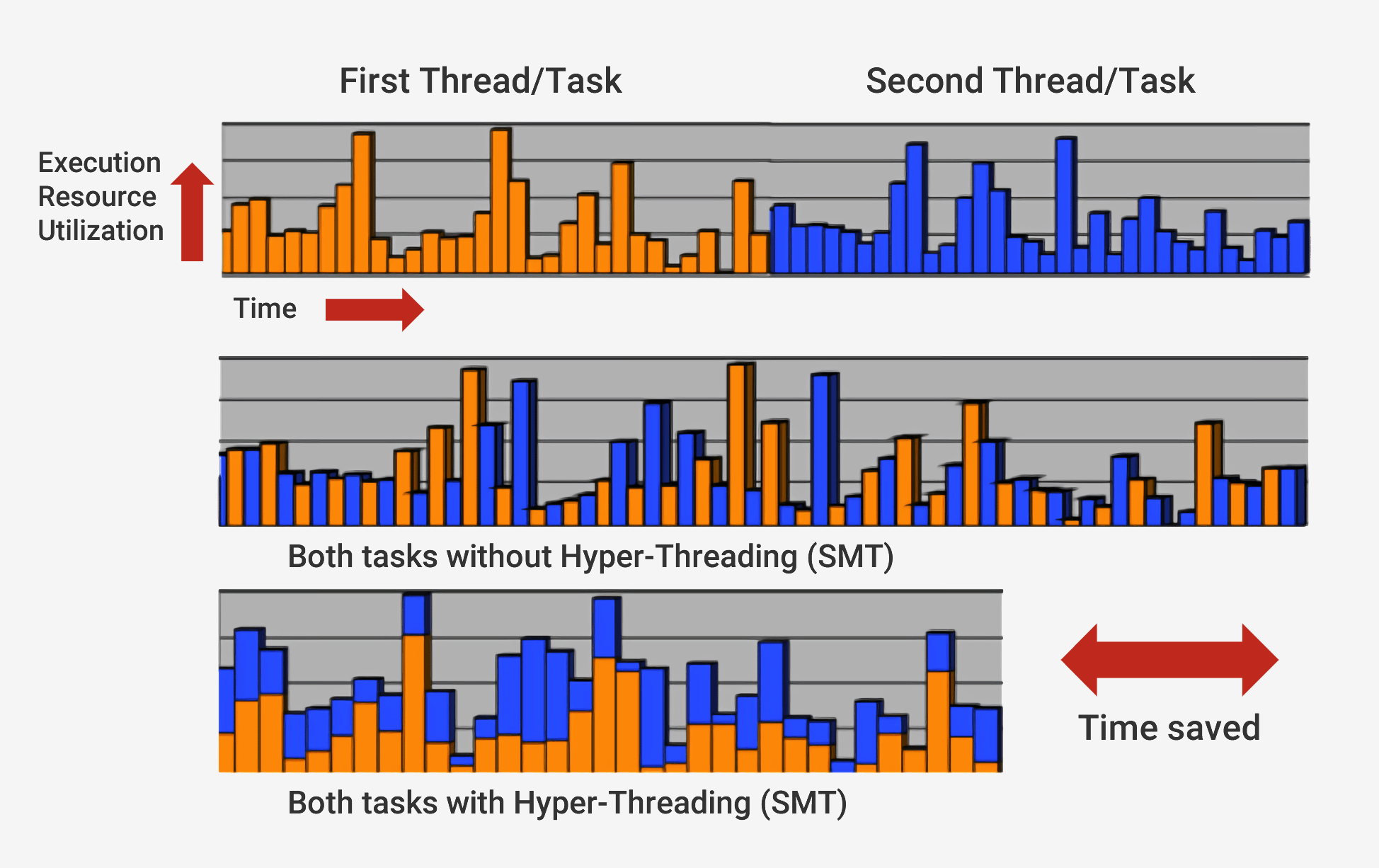

In addition to out-of-order execution, typical modern processors employ what is called a superscalar architecture. This means that at any one time, the processor is working on many instructions in each stage of the pipeline, and it may have hundreds more waiting to begin execution. To execute many instructions at once, processors have several copies of each pipeline stage inside. If a processor sees that two instructions are ready to be executed and there is no dependency between them, rather than wait for them to finish separately, it will execute them both at the same time. A related technique is Simultaneous Multithreading (SMT), which Intel brands as Hyper-Threading: a single core runs instructions from more than one thread at once, so independent work from another thread can fill execution slots that one thread alone would leave idle. Intel and AMD processors currently support two-way SMT, while IBM has developed chips that support up to eight-way SMT.
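
Superscalar issue can be sketched the same way. In this simplified model (the `cycles_needed` function is hypothetical), every instruction takes one cycle, and a core of a given width can issue up to that many instructions per cycle, in program order, as long as their inputs are already available. The `two_threads` list interleaves two independent dependency chains, a rough stand-in for how SMT lets a core fill idle slots with work from a second thread.

```python
def cycles_needed(deps, width: int) -> int:
    """Cycles to issue a program on a `width`-wide superscalar core (width >= 1).

    deps[i] is the set of earlier instruction indices that instruction i needs.
    Every instruction takes one cycle. Each cycle, up to `width` instructions
    issue in program order; issue stops early at the first instruction whose
    inputs are still being computed in the same cycle.
    """
    finished = set()   # instructions whose results are available
    next_i = 0
    cycles = 0
    while next_i < len(deps):
        issued = []
        while (next_i < len(deps) and len(issued) < width
               and deps[next_i] <= finished):   # all inputs already finished?
            issued.append(next_i)
            next_i += 1
        finished.update(issued)   # results become available next cycle
        cycles += 1
    return cycles

independent = [set() for _ in range(8)]                         # no dependencies
chain = [set()] + [{i - 1} for i in range(1, 8)]                # each needs the previous
two_threads = [set() if i < 2 else {i - 2} for i in range(16)]  # two interleaved chains

print(cycles_needed(independent, 1), cycles_needed(independent, 4))  # 8 2
print(cycles_needed(chain, 4))                                       # 8
print(cycles_needed(two_threads, 1), cycles_needed(two_threads, 2))  # 16 8
```

Width only helps when there is independent work to issue: the single dependency chain takes eight cycles no matter how wide the core is, while mixing in a second independent chain lets a two-wide core issue two instructions every cycle.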

To accomplish this carefully choreographed execution, a processor has many extra elements in addition to the basic core. There are hundreds of individual modules in a processor that each serve a specific purpose, but we'll just go over the basics. The two biggest and most beneficial are the caches and branch predictor. Additional structures that we won't cover include things like reorder buffers, register alias tables, and reservation stations.

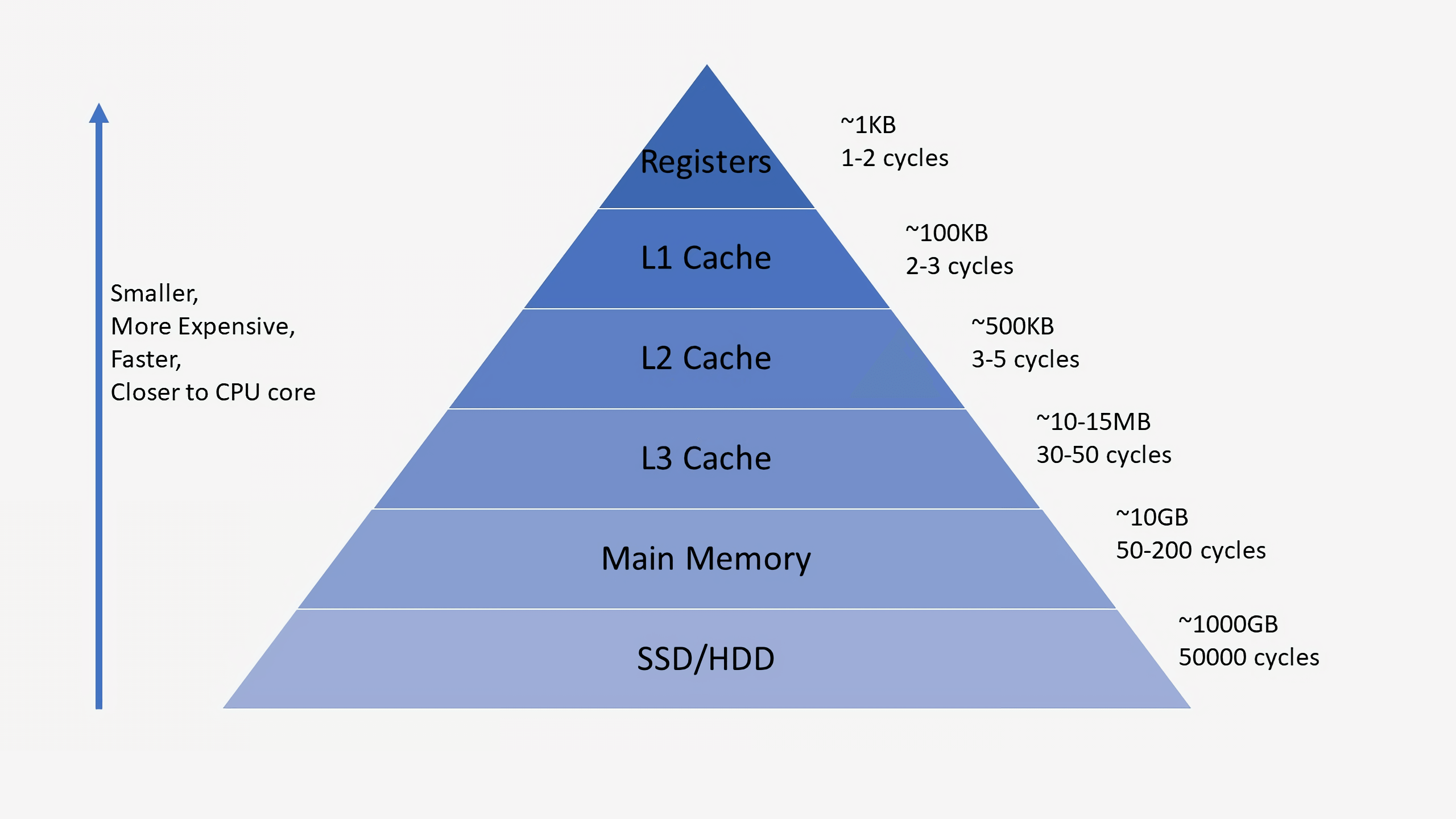

The purpose of caches can often be confusing since they store data just like RAM or an SSD. What sets caches apart, though, is their low access latency and high speed. Even though RAM is extremely fast, it is still far too slow for a CPU to wait on. It may take hundreds of cycles for RAM to respond with data, and the processor would be stuck with nothing to do. If the data isn't in RAM, it can take tens of thousands of cycles or more to access it on an SSD. Without caches, our processors would grind to a halt.
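
Latencies are easier to compare once they are converted to clock cycles. At a clock rate of f GHz, a core completes f cycles per nanosecond, so cycles = latency in ns × clock in GHz. The latency figures below are rough, assumed ballpark values for illustration, not measurements of any particular system, and `ns_to_cycles` is a hypothetical helper.

```python
def ns_to_cycles(latency_ns: float, clock_ghz: float) -> float:
    """Convert a latency in nanoseconds to clock cycles (1 GHz = 1 cycle per ns)."""
    return latency_ns * clock_ghz

# Rough, assumed latencies for illustration only; real values vary widely.
ASSUMED_LATENCY_NS = {
    "L1 cache": 1,
    "DRAM": 80,
    "NVMe SSD read": 20_000,
}

for name, ns in ASSUMED_LATENCY_NS.items():
    print(f"{name:>14}: {ns_to_cycles(ns, 4.0):>9,.0f} cycles at 4 GHz")
```

At 4 GHz, for example, an 80 ns trip to DRAM costs 320 cycles.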

Processors typically have three levels of cache that form what is known as a memory hierarchy. The L1 cache is the smallest and fastest, the L2 is in the middle, and L3 is the largest and slowest of the caches. Above the caches in the hierarchy are small registers that each store a single data value during computation. These registers are the fastest storage in your system. When a compiler transforms a high-level program into assembly language, it determines the best way to use these registers.

When the CPU requests data from memory, it will first check to see if that data is already stored in the L1 cache. If it is, the data can be accessed in just a few cycles. If it is not present, the CPU will check the L2 and then the L3 cache. The caches are implemented in a way that makes them generally transparent to the core: the core just asks for data at a specified memory address, and whichever level in the hierarchy has it will respond. As we move down the memory hierarchy, size and latency increase substantially at each level. Only if the CPU can't find the data in any of the caches will it go to main memory (RAM).
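
The lookup order can be sketched as follows. The latencies are assumed round numbers, and the model checks each level one after another; real caches often overlap lookups and also copy data found in a lower level into the faster levels, which this sketch does not model. The `load` function and the `contents` layout are hypothetical.

```python
# Assumed, round-number lookup latencies in cycles; real values vary by chip.
LEVELS = [("L1", 4), ("L2", 14), ("L3", 50)]
RAM_LATENCY = 300

def load(address: int, contents: dict) -> tuple:
    """Return (where the data was found, total cycles spent).

    contents maps a cache level name to the set of addresses it holds.
    Levels are checked one after another, and each miss adds that level's
    lookup time before the next, larger level is tried.
    """
    cycles = 0
    for name, latency in LEVELS:
        cycles += latency
        if address in contents.get(name, set()):
            return name, cycles
    return "RAM", cycles + RAM_LATENCY

contents = {"L1": {0x100}, "L2": {0x100, 0x200}, "L3": {0x100, 0x200, 0x300}}
for addr in (0x100, 0x200, 0x300, 0x400):
    print(hex(addr), load(addr, contents))
# 0x100 ('L1', 4)
# 0x200 ('L2', 18)
# 0x300 ('L3', 68)
# 0x400 ('RAM', 368)
```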

On a typical processor, each core has two L1 caches: one for data and one for instructions. Together, a core's L1 caches are typically around 100 kilobytes, though the size varies depending on the chip and generation. There is also typically an L2 cache for each core, although it may be shared between two cores in some architectures. The L2 caches usually range from a few hundred kilobytes to a few megabytes. Finally, there is usually a single L3 cache that is shared between all the cores and is on the order of tens of megabytes.

When a processor is executing code, the instructions and data values that it uses most often will get cached. This significantly speeds up execution since the processor does not have to constantly go to main memory for the data it needs. We will talk more about how these memory systems are actually implemented in the second and third installments of this series.
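
Why frequently used data stays cached can be shown with a small least-recently-used (LRU) cache. This is a simplification: real CPU caches are usually set-associative, track fixed-size lines (commonly 64 bytes on x86) rather than individual addresses, and often use approximations of LRU. The `LRUCache` class is hypothetical; it relies only on `collections.OrderedDict` from the Python standard library.

```python
from collections import OrderedDict

class LRUCache:
    """A tiny fully associative cache with least-recently-used replacement."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.lines = OrderedDict()   # address -> None, ordered oldest-first
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, bring the address into the cache."""
        if address in self.lines:
            self.lines.move_to_end(address)   # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)    # evict the least recently used
        self.lines[address] = None
        return False

cache = LRUCache(capacity=4)
for _ in range(10):              # a loop that keeps reusing four values
    for address in (0, 1, 2, 3):
        cache.access(address)
print(cache.hits, cache.misses)  # 36 4
```

Only the first pass through the loop misses; every later access hits, because the four reused values fit in the cache. If the working set were larger than the cache, the same access pattern could evict each value just before it is needed again.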

Besides caches, one of the other key building blocks of a modern processor is an accurate branch predictor. Branch instructions are similar to "if" statements for a processor. One set of instructions will execute if the condition is true and another will execute if the condition is false. For example, you may want to compare two numbers and if they are equal, execute one function, and if they are different, execute another function. These branch instructions are extremely common and can make up roughly 20% of all instructions in a program.

On the surface, these branch instructions may not seem like an issue, but they can actually be very challenging for a processor to get right. Since the CPU may be working on ten or twenty instructions at any one time, it is very important to know which instructions to execute. It may take 5 cycles to determine if the current instruction is a branch and another 10 cycles to determine if the condition is true. In that time, the processor may have started executing dozens of additional instructions without even knowing if those were the correct instructions to execute.

To get around this issue, all modern high-performance processors employ a technique called speculation. The processor keeps track of branch instructions and guesses whether each branch will be taken or not. If the prediction is correct, the processor has already started executing the subsequent instructions, which provides a performance gain. If the prediction is incorrect, the processor stops execution, discards all the incorrect instructions it has started executing, and starts over from the correct point.
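
A simple model shows why accurate prediction matters. If a fraction b of instructions are branches, the predictor is right with probability a, and each misprediction costs p cycles, the average cost is b × (1 − a) × p extra cycles per instruction. The sketch below plugs in the figures from the text (about 20% branches and roughly 5 + 10 = 15 cycles to resolve a branch) and assumes the full 15 cycles are lost on every misprediction; `branch_stall_cpi` is a hypothetical helper name.

```python
def branch_stall_cpi(branch_fraction: float, accuracy: float, penalty_cycles: float) -> float:
    """Average extra cycles per instruction lost to mispredicted branches."""
    return branch_fraction * (1.0 - accuracy) * penalty_cycles

BRANCHES, PENALTY = 0.20, 15   # figures assumed from the text above
for acc in (0.0, 0.90, 0.95):  # 0.0 is roughly "pay the full penalty on every branch"
    extra = branch_stall_cpi(BRANCHES, acc, PENALTY)
    print(f"accuracy {acc:.0%}: +{extra:.2f} cycles per instruction")
```

With these assumptions, going from 90% to 95% accuracy halves the cost of branches, which is why designers keep chasing the last few percent.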

These branch predictors can be thought of as an early form of machine learning, since the predictor learns the behavior of the branches as it goes. If it mispredicts a branch too many times, it adjusts its prediction to match how that branch actually behaves. Decades of research into branch prediction techniques have resulted in accuracies greater than 90% in modern processors.
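
One of the simplest and best-known learning schemes is the two-bit saturating counter, sketched below. Each branch gets a small counter that moves toward "taken" or "not taken" as outcomes arrive, so a single surprise does not flip the prediction. This is a textbook technique, not what any particular vendor ships; modern predictors are far more elaborate, and the names `TwoBitPredictor` and `accuracy` are hypothetical.

```python
class TwoBitPredictor:
    """One 2-bit saturating counter per branch: 0-1 predict not taken, 2-3 taken."""

    def __init__(self):
        self.counters = {}   # branch address -> counter value in 0..3

    def predict(self, pc: int) -> bool:
        return self.counters.get(pc, 1) >= 2   # unseen branches start weakly not taken

    def update(self, pc: int, taken: bool) -> None:
        c = self.counters.get(pc, 1)
        # Step toward the actual outcome, saturating at 0 and 3.
        self.counters[pc] = min(c + 1, 3) if taken else max(c - 1, 0)

def accuracy(predictor: TwoBitPredictor, pc: int, outcomes: list) -> float:
    """Fraction of outcomes predicted correctly, training as we go."""
    correct = 0
    for taken in outcomes:
        correct += predictor.predict(pc) == taken
        predictor.update(pc, taken)
    return correct / len(outcomes)

# A loop branch: taken 9 times, then not taken once, repeated 100 times.
loop_branch = ([True] * 9 + [False]) * 100
print(accuracy(TwoBitPredictor(), 0x400, loop_branch))  # 0.899
```

For a loop branch that is taken nine times and then falls through, the counter mispredicts only the final iteration of each run after warming up, giving 899 correct predictions out of 1,000.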

While speculation offers immense performance gains, since the processor can keep working instead of waiting for every branch to resolve, it also exposes security vulnerabilities. The famous Spectre attack exploits branch prediction and speculation: the attacker uses specially crafted code to trick the processor into speculatively executing code whose side effects, such as changes to the cache, leak memory values. Some aspects of speculation have had to be redesigned to ensure data could not be leaked, and this cost some performance.

The architecture used in modern processors has come a long way in the past few decades. Innovations and clever design have resulted in more performance and better utilization of the underlying hardware. CPU makers are very secretive about the technologies in their processors, though, so it's impossible to know exactly what goes on inside. That being said, the fundamentals of how computers work are shared across all processors. Intel may add their secret sauce to boost cache hit rates or AMD may add an advanced branch predictor, but they both accomplish the same task.

This first look and overview covered most of the basics about how processors work. In the second part, we'll discuss how the components that go into a CPU are designed, and cover logic gates, clocking, power management, circuit schematics, and more.

This article was originally published on April 22, 2019. We've slightly revised it and bumped it as part of our #ThrowbackThursday initiative. Masthead credit: Electronic circuit board close up by Raimudas