WTF?! Are advanced generative AI chatbots such as ChatGPT in some way conscious or self-aware, able to experience feelings and memories just like a human? No, of course not. Yet a majority of people in a recent survey believe these LLMs do have some degree of consciousness, and that conviction could affect how users interact with them.

Generative AI language tools have made unbelievable advancements over the last few years. According to a new study from the University of Waterloo, the software's human-like conversational style has led over two-thirds (67%) of those surveyed to believe LLMs have a degree of consciousness.

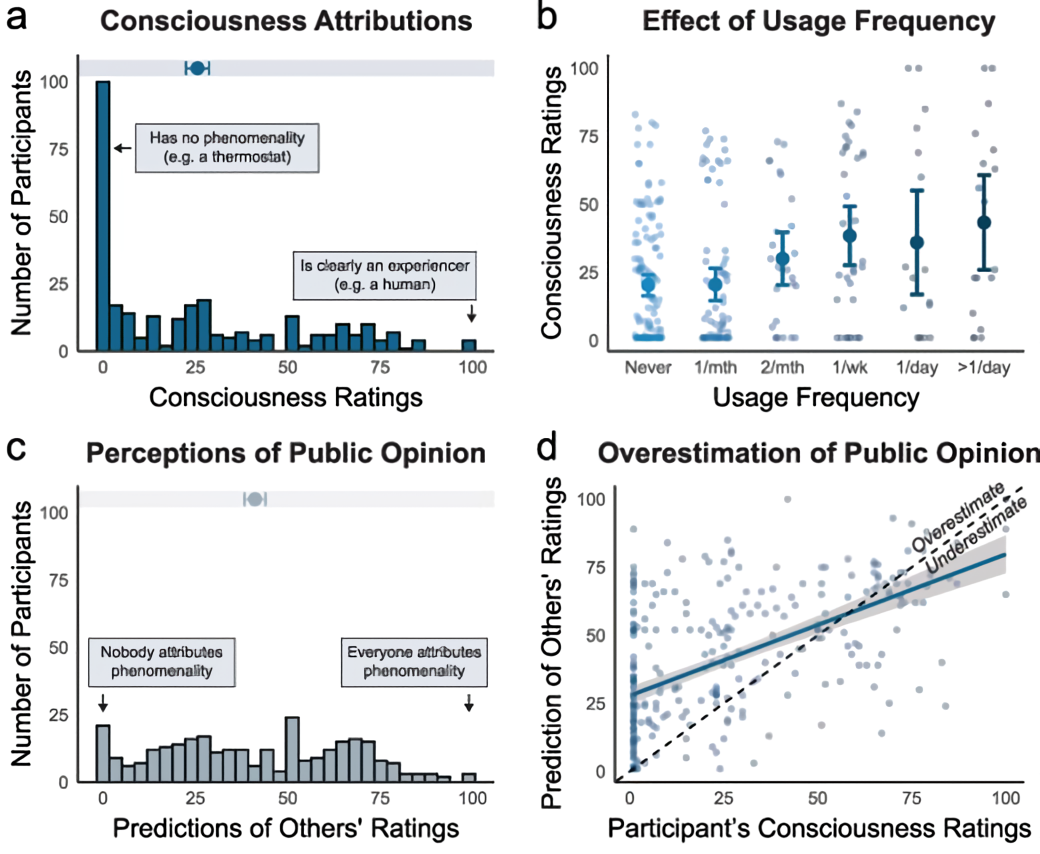

The survey asked 300 people in the US if they thought ChatGPT could have the capacity for consciousness and the ability to make plans, reason, feel emotions, etc. They were also asked how often they used OpenAI's product.

Participants had to rate ChatGPT responses on a scale of 1 to 100, where 100 would mean absolute confidence that ChatGPT was experiencing consciousness, and 1 absolute confidence it was not.

The results showed that the more someone used ChatGPT, the more likely they were to believe it had some form of consciousness.

"These results demonstrate the power of language," said Dr. Clara Colombatto, professor of psychology at Waterloo's Arts faculty, "because a conversation alone can lead us to think that an agent that looks and works very differently from us can have a mind."

The study, published in the journal Neuroscience of Consciousness, states that this belief could impact people who interact with AI tools. On the one hand, it may strengthen social bonds and increase trust. On the other, it may foster emotional dependence on chatbots, reduce human interaction, and encourage over-reliance on AI for critical decisions. These concerns have been raised ever since ChatGPT was released to the general public.

"While most experts deny that current AI could be conscious, our research shows that for most of the general public, AI consciousness is already a reality," Colombatto explained.

The study argues that even though many people don't understand consciousness the way scientists or researchers do, the fact that they perceive these LLMs as being in some way sentient should be taken into account when designing and regulating AI for safe use.

Discussions about AI showing signs of self-awareness have been around for years. One of the most famous examples is that of Blake Lemoine, a former Google software engineer who was fired from the company after going public with his claims that Google's LaMDA (Language Model for Dialogue Applications) chatbot development system had become sentient.

Asking ChatGPT if it is conscious or sentient results in the following answer: "I'm not conscious or sentient. I don't have thoughts, feelings, or awareness. I'm designed to assist and provide information based on patterns in data, but there's no consciousness behind my responses." Maybe people believe the chatbot is lying.

Masthead: Possessed Photography

Survey shows many people believe AI chatbots like ChatGPT are conscious