At some point you may have heard someone say that for gaming you need X number of cores. Typical examples include "6 cores is more than enough," or "you need a minimum of 8 cores for gaming," the latter based on the misguided notion that consoles have 8 cores and therefore that's what PC gamers will require moving forward.

We've addressed these "core" misconceptions before, explaining that overall CPU performance is all that matters, rather than how many cores a CPU has. And while that should be a fairly easy concept to understand, there's a surprising amount of pushback.

It's also easier to dumb down system requirements to core count, because it's a quick way to dismiss a wide range of CPUs. For example, games no longer run properly, or at all, on dual-core CPUs, so in that sense you require at minimum a quad-core to game. Having said that, most modern and demanding games don't run well on quad-cores, even if they support SMT (simultaneous multi-threading).

That sounds like I'm contradicting my own argument right off the bat, but once again, it's first and foremost about overall CPU performance.

Case in point, there isn't a single quad-core CPU in existence that can match the performance of a modern 6-core/12-thread processor, such as the Core i5-11600K or Ryzen 5 5600X. Conversely, there are dozens of CPUs with 8 cores or more that can't match the gaming or overall CPU performance of the Core i5-11600K and Ryzen 5 5600X.

Even CPUs as modern as the 8-core/16-thread Ryzen 7 2700X are, and always will be, inferior for gaming and by a sizable margin. We know this because the 5600X is over 10% faster than the 2700X when all cores are utilized in a productivity benchmark like Cinebench, so couple that with the far superior core and memory latency of the 5600X, and you have a 6-core CPU that is faster for gaming.

So you see, dumbing down the argument to "games require 8 cores" is a gross oversimplification which can mislead PC builders, encouraging them to spend more on their CPU than they really need to. Moreover, that money is often better invested in a faster GPU, or saved for a future upgrade. It can also encourage builders to purchase what is ultimately a much slower CPU for gaming, think 2700X (8 cores) over 5600X (6 cores).

AMD and Intel

Now, something we've found quite interesting while benchmarking dozens of modern AMD and Intel CPUs in a wide range of games is that Intel CPUs' performance does appear to scale uniformly as you add more cores, right up to 10 cores, despite games not requiring that much processing power. Meanwhile, AMD's Zen 3 range offers consistent performance from 6 cores right up to 16 cores, and very few games show any difference in performance among them.

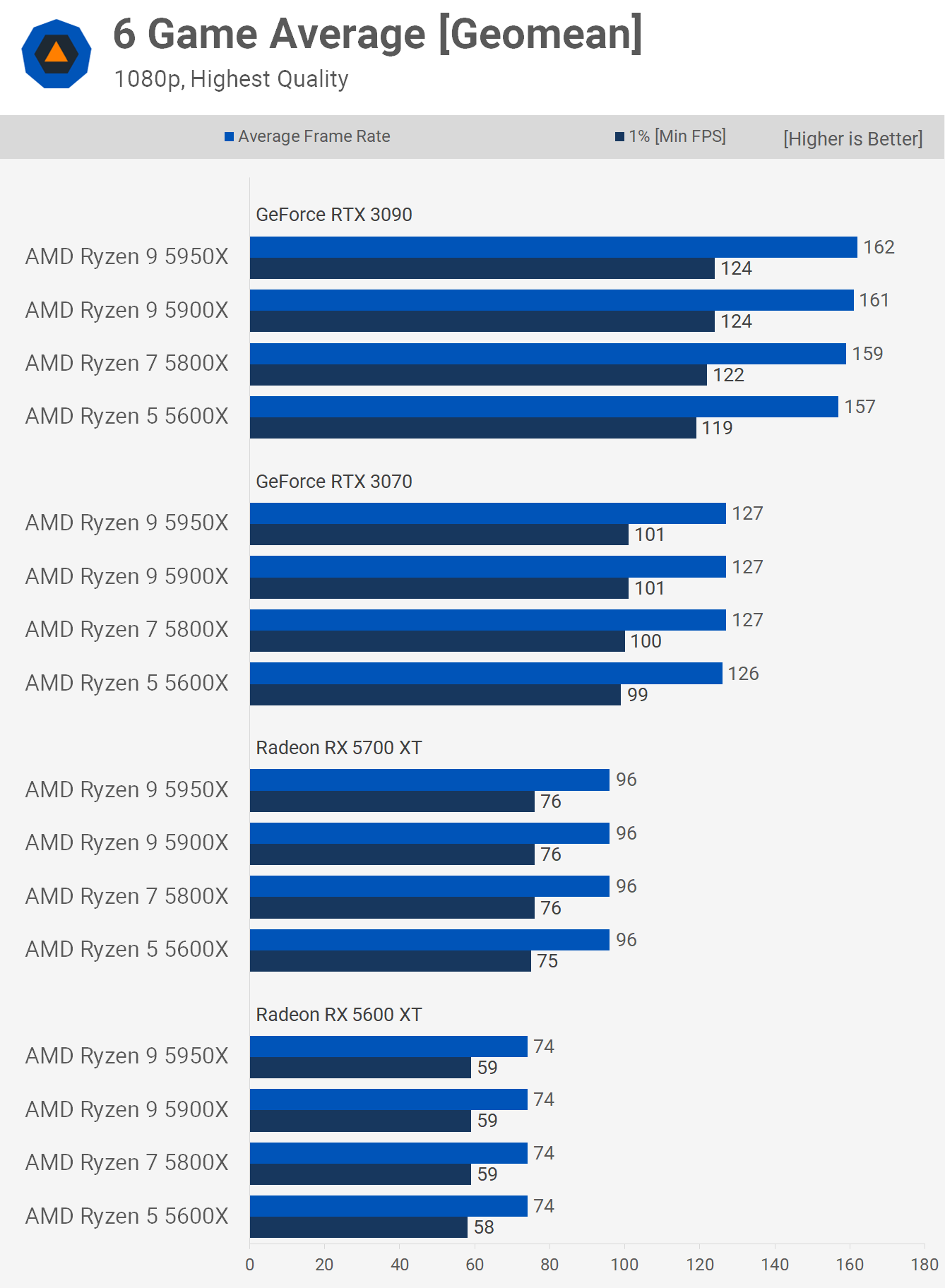

Looking at our Zen 3 CPU/GPU scaling data we can see that with the powerful GeForce RTX 3090, the 16-core/32-thread Ryzen 9 5950X was a mere 5% faster than the 6-core/12-thread 5600X on average, while the 8-core/16-thread 5800X was ~3% faster.

You may want to claim a GPU bottleneck here, but this data is based on 1080p testing, and many of those games are very CPU intensive by today's standards.

Now, something I find quite interesting is that if we take a similar range of games, and test them at 1080p with the RTX 3090 but use Intel's 10th-gen Core CPU range, the result is quite different. The 8-core/16-thread Core i7-10700K is 9% faster than the Core i5-10600K, while the 10900K is 16% faster, a more substantial performance uplift.

This is fascinating because we've largely seen people justify the "moar cores is better" argument for gaming when using Intel CPUs, claiming their gaming experience was noticeably improved when upgrading from a part like the i7-8700K to the Core i9-10900K, and attributing that solely to the 67% increase in core count, but is there more to it?

The answer is "yes."

AMD Zen 3 CPUs all feature 32 MB of L3 cache per CCD. That's 32 MB in total for the Ryzen 5 and Ryzen 7 parts, while the Ryzen 9 parts get 64 MB in total, split across two separate dies.

Intel CPUs, though, see a fundamental change in L3 cache capacity depending on core count. The 10th-gen 6-core i5 models get 12 MB of L3, the 8-core i7s get 16 MB, and the 10-core i9 gets 20 MB.

So from the 10600K to the 10900K, you're not only getting 67% more cores, but also a 67% increase in L3 cache capacity. With most PC games still unable to max out the 6 cores of the 10600K, we must wonder what's playing the bigger role here: the extra cache or the extra cores?
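To make the lockstep scaling explicit, here's a minimal sketch that works out the percentages from the core and cache figures quoted above. The helper name `percent_increase` and the table layout are ours, purely for illustration.

```python
# Core counts and L3 capacities for Intel's 10th-gen parts, as quoted in the article.
PARTS = {
    "Core i5-10600K": {"cores": 6, "l3_mb": 12},
    "Core i7-10700K": {"cores": 8, "l3_mb": 16},
    "Core i9-10900K": {"cores": 10, "l3_mb": 20},
}


def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new / old - 1.0) * 100.0


base = PARTS["Core i5-10600K"]
for name, spec in PARTS.items():
    cores_up = percent_increase(base["cores"], spec["cores"])
    cache_up = percent_increase(base["l3_mb"], spec["l3_mb"])
    mb_per_core = spec["l3_mb"] / spec["cores"]
    print(f"{name}: +{cores_up:.0f}% cores, +{cache_up:.0f}% L3, "
          f"{mb_per_core:.0f} MB L3 per core")
```

Every model works out to 2 MB of L3 per core, which is exactly why a stock-versus-stock comparison can't tell you whether the cores or the cache are doing the work.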

We can actually find that out by disabling cores on the 10700K and 10900K, while locking the operating frequency, ring bus and memory timings. For this test we used the Gigabyte Z590 Aorus Xtreme motherboard, clocking the three Intel CPUs at 4.5 GHz with a 45x multiplier for the ring bus. We also used DDR4-3200 CL14 dual-rank, dual-channel memory with all primary, secondary and tertiary timings manually configured.

Therefore the only difference between each configuration is the core count, and the L3 cache capacity which is set in stone on each model. We should note that disabling cores doesn't reduce the L3 pool size. Even with a single core enabled, the 10900K still has 20 MB of L3.
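For readers who want to picture the test matrix, here's a rough sketch of it. The exact list of configurations is our assumption, inferred from the 6, 8 and 10-core results discussed in this article, and the `Config` class and `build_matrix` helper are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    cpu: str
    l3_mb: int                 # fixed per model, unaffected by disabling cores
    active_cores: int
    core_ghz: float = 4.5      # locked core clock
    ring_multiplier: int = 45  # locked ring bus multiplier
    memory: str = "DDR4-3200 CL14"


L3_MB = {"10600K": 12, "10700K": 16, "10900K": 20}
PHYSICAL_CORES = {"10600K": 6, "10700K": 8, "10900K": 10}
CORE_STEPS = (6, 8, 10)  # assumed steps, based on the results discussed


def build_matrix() -> list[Config]:
    """Every CPU at each core step it can physically reach."""
    return [
        Config(cpu, L3_MB[cpu], cores)
        for cpu in L3_MB
        for cores in CORE_STEPS
        if cores <= PHYSICAL_CORES[cpu]
    ]


for cfg in build_matrix():
    print(f"{cfg.cpu}: {cfg.active_cores} cores, {cfg.l3_mb} MB L3")
```

Comparing rows with the same core count isolates the effect of cache, while comparing rows for the same CPU isolates the effect of cores.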

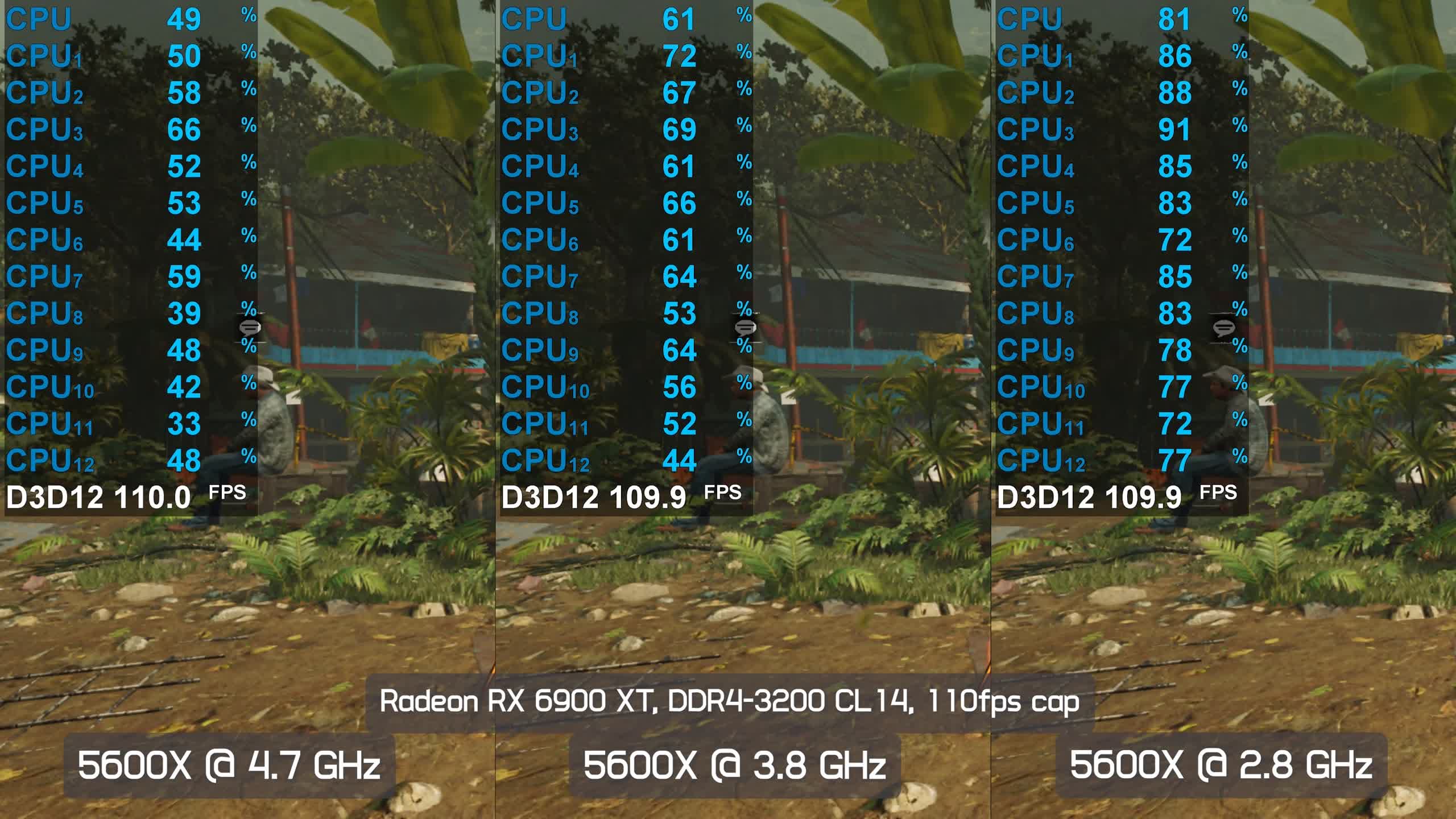

All testing was conducted using the Radeon RX 6900 XT which is the fastest 1080p gaming GPU on the market. Let's check out the results.

Benchmarks

We'll get started with the most extreme example we've found so far. A disclaimer first: we did not test dozens of games and then narrow them down to a handful. We simply picked 6 games that we thought would make for an interesting investigation. Some are more CPU intensive than others, which is kind of the idea.

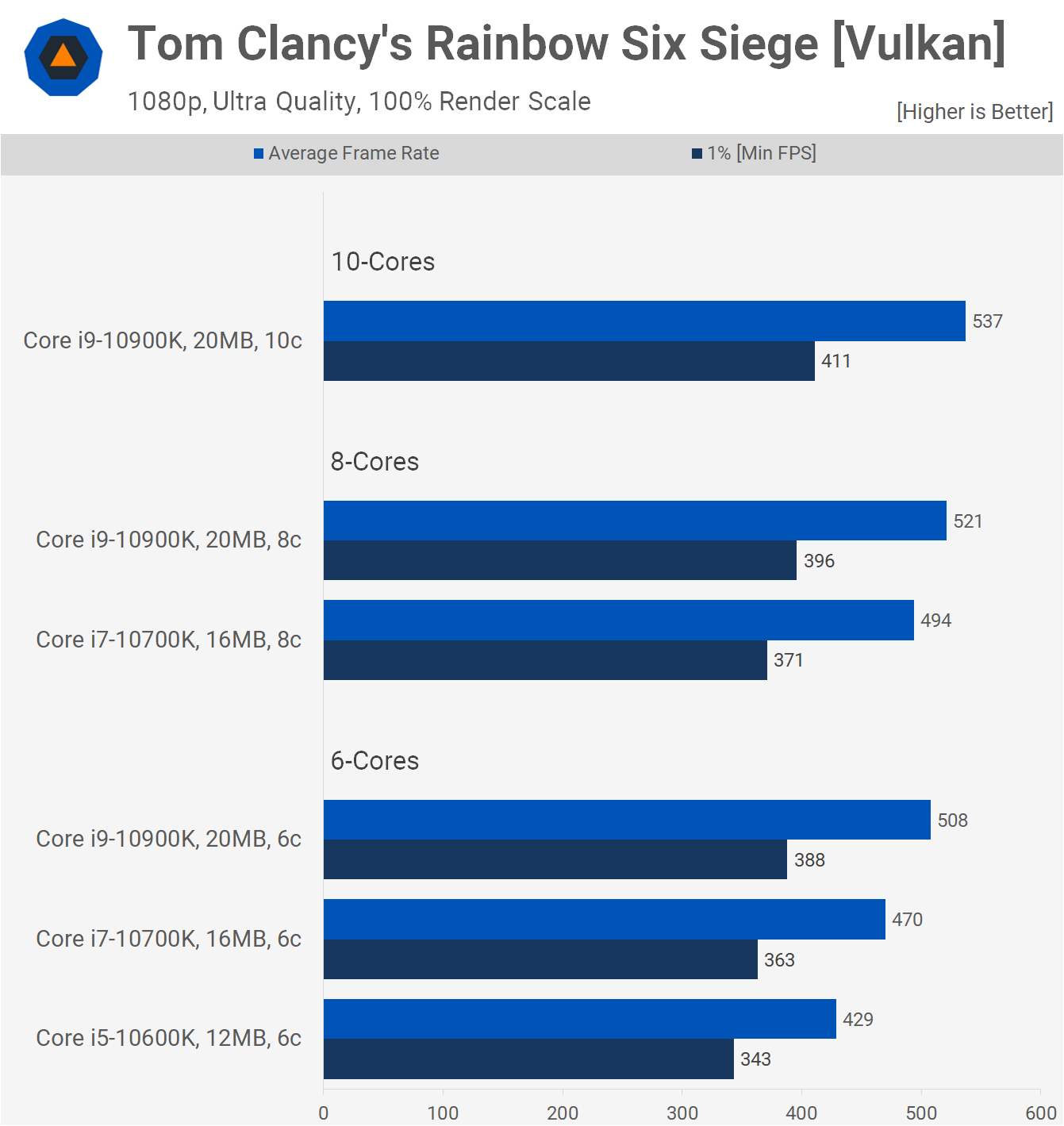

If you look at the Rainbow Six Siege results that match the stock L3 cache capacity of each processor, you're comparing the 10600K, 10700K and 10900K all clocked at the same 4.5 GHz frequency, so it's basically an IPC test. The conclusion most would draw from this is that going from 6 to 8 cores nets you 15% more performance, and from 8 to 10 another 9%. So going from the 10600K to the 10900K will net you 25% more performance, and that's true. But is it due to those 4 extra cores?
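Note that the 25% figure comes from compounding the two steps rather than adding them. Here's a quick sketch of that arithmetic using the percentages above; the `compound` helper is our own name, not something from the test suite.

```python
def compound(*gains_percent: float) -> float:
    """Combine successive percentage gains multiplicatively."""
    factor = 1.0
    for gain in gains_percent:
        factor *= 1.0 + gain / 100.0
    return (factor - 1.0) * 100.0


step_6_to_8 = 15  # 10600K -> 10700K, percent
step_8_to_10 = 9  # 10700K -> 10900K, percent

print(f"Added:      {step_6_to_8 + step_8_to_10}%")
print(f"Compounded: {compound(step_6_to_8, step_8_to_10):.0f}%")
```

Adding the steps gives 24%, while compounding them gives roughly 25%, which matches the 10600K to 10900K margin.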

Now look at the 8-core data. Comparing a 16 MB L3 cache to a 20 MB L3 cache, you will see that this alone allows for a 5% increase in performance, and interestingly the two extra cores only boosted performance by 3%. But the 10700K and 10900K were already close in terms of performance, so how does this look with just 6 cores enabled?

This data is very interesting and looks very similar to what we see with Zen 3 processors. Remember the 10700K with all 8 cores enabled is 15% faster than the 10600K in this test, going from 429 fps to 494 fps. But here we can see that 10% of that margin is due to the larger L3 cache capacity, going from 12 to 16 MB. If the 10600K were equipped with the same 20 MB L3 cache as the 10900K, it would be faster than the 10700K. Also, the 10900K was just 6% faster with 10 cores enabled, as opposed to just 6 cores.

In other words, the 67% increase in cores nets you just 6% more performance, while the 67% increase in L3 cache nets you 18% more performance, making the extra cache far more useful in this scenario.

It also supports my earlier point that it's not all about cores. In this scenario we're testing CPUs of the same architecture, so more cores will always be as good or better. But if they all featured the same L3 cache capacity, like we see with Zen 3, there would be little benefit in going beyond 6 cores for the vast majority of gamers.

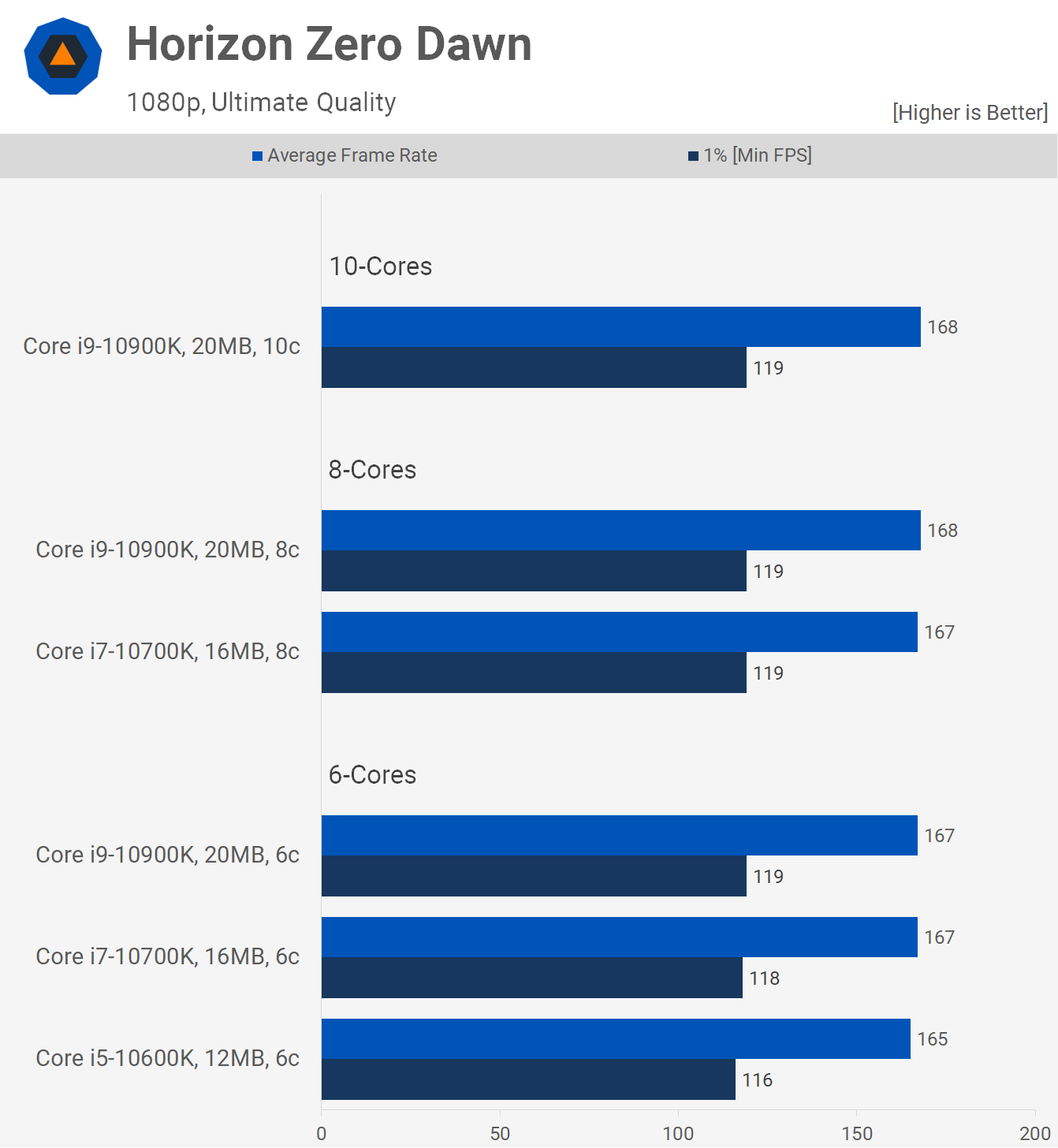

For games that aren't very CPU intensive and therefore run just fine using any modern processor with 6 or more cores, adding more cores and/or more cache makes little difference as you're entirely GPU limited.

Truth be told, this is the situation for the majority of games, even those released in the last year. Also bear in mind we're testing with an RTX 3090 at 1080p, so if you're gaming at a higher resolution such as 1440p with a lesser GPU such as the RTX 3060 Ti, even in more CPU demanding games the margins are likely to be similar to what's shown here.

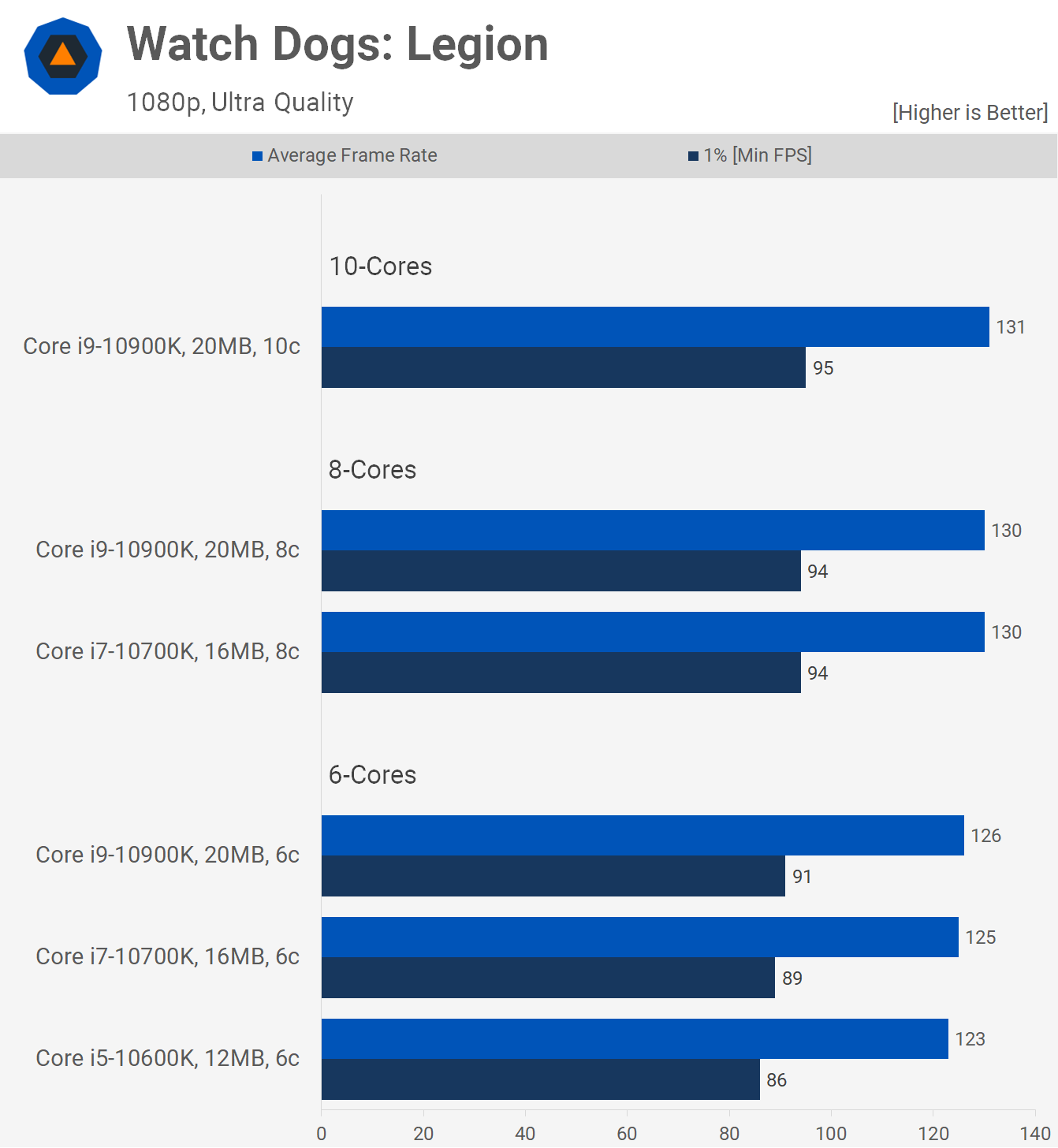

Watch Dogs Legion is another game that doesn't require more CPU power than what you get with the Core i5-10600K. The extra cores and cache of the 10900K only net you 7% more performance at 1080p. That said, here it looks like the cache only results in a ~2.5% performance increase, and the cores about 4%. Either way the overall difference is negligible.

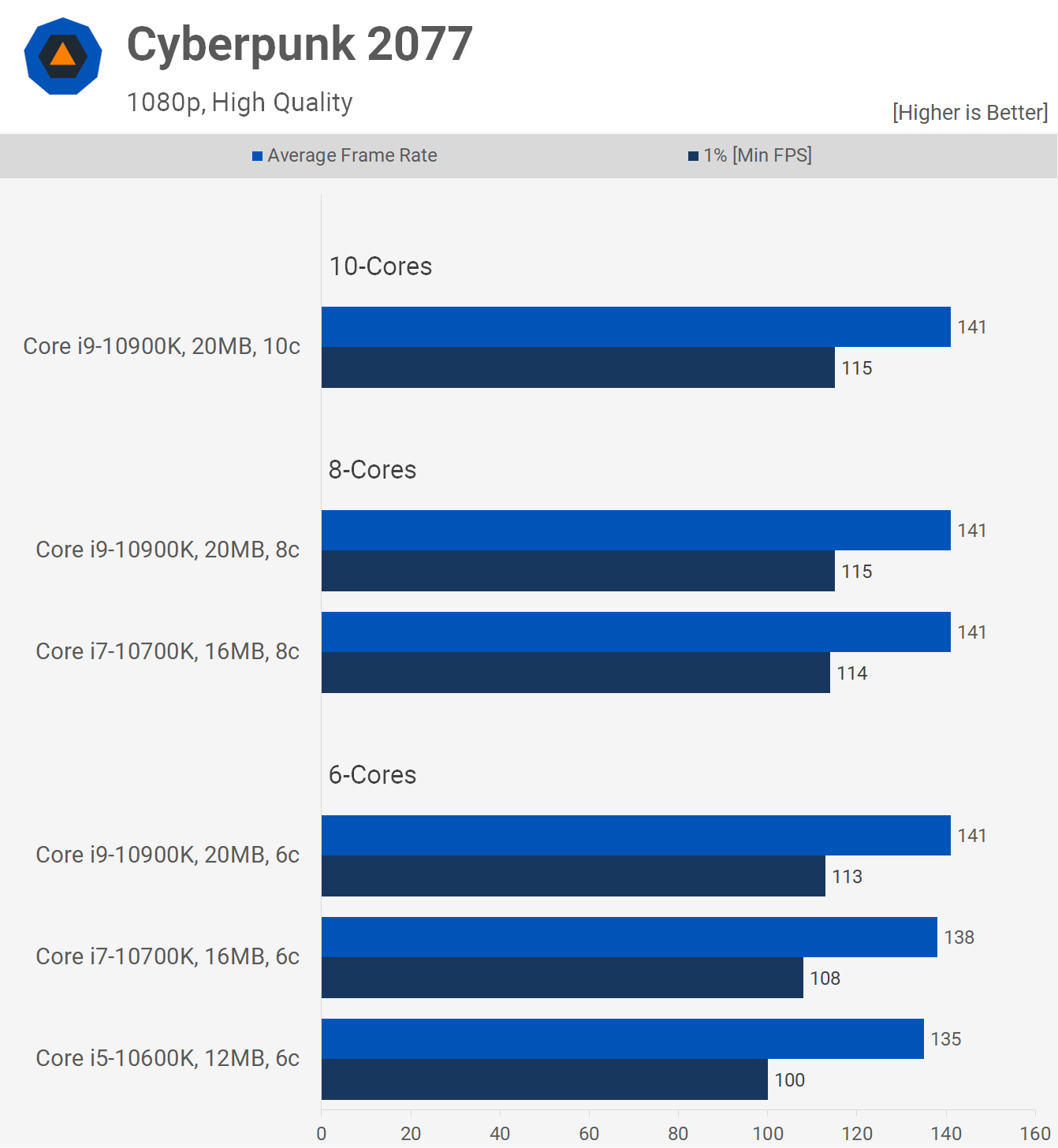

The Cyberpunk 2077 data is interesting, especially the 1% low results. We see a 15% improvement in 1% low performance when going from the 10600K to the 10900K and this affects frame time performance. However, the bulk of that is due to the increased L3 cache, with the extra cores doing basically nothing here.

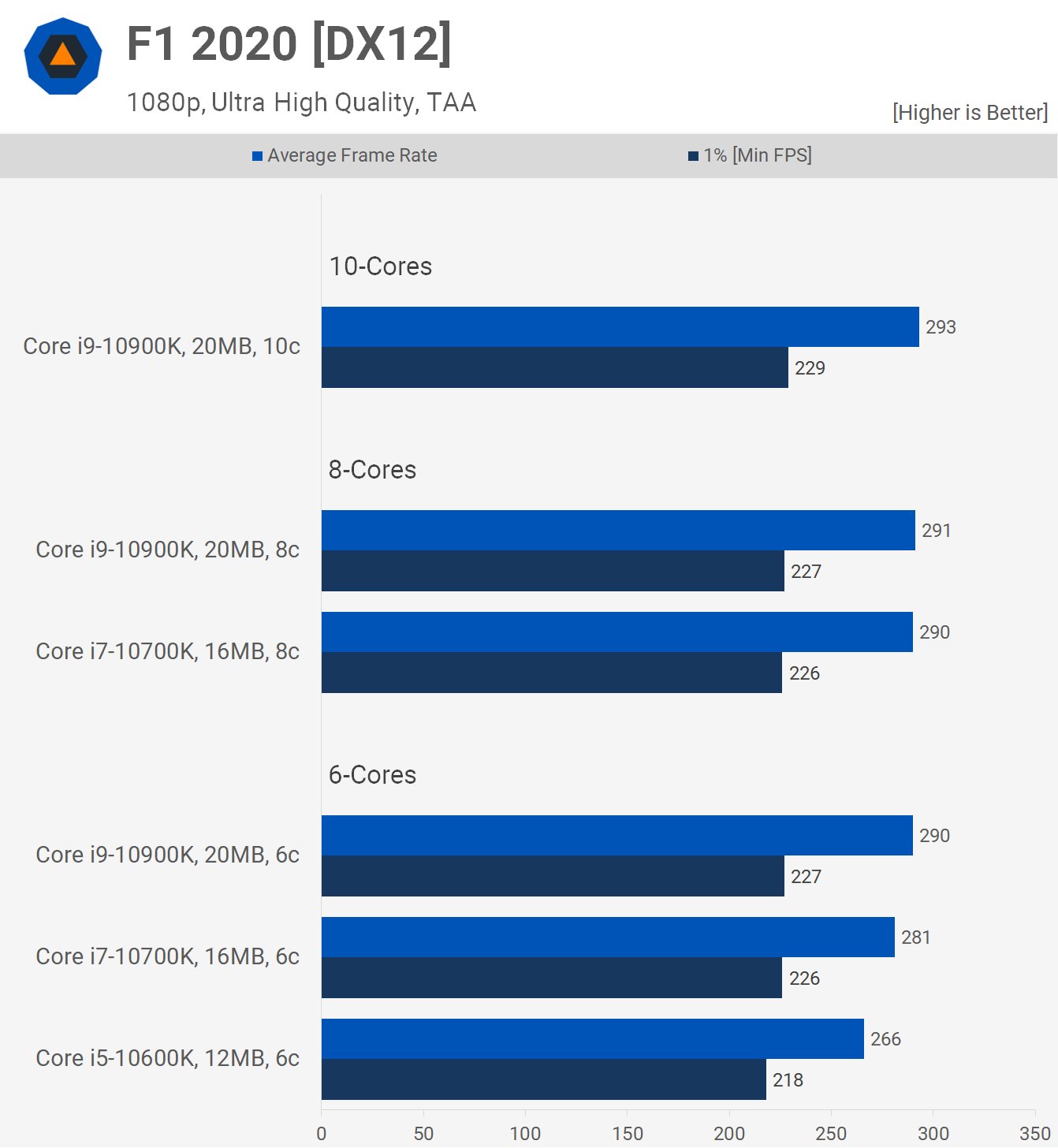

F1 2020 is another title where most of the gains can be attributed to the increased L3 cache. For example, the 10900K is seen to be 10% faster than the 10600K when matched clock-for-clock. However, if we limit the 10900K to 6 cores, it barely drops in performance when compared to its stock 10-core configuration (a 1% difference). This is another game where L3 cache capacity is more important than core count, at least when going beyond 6 cores.

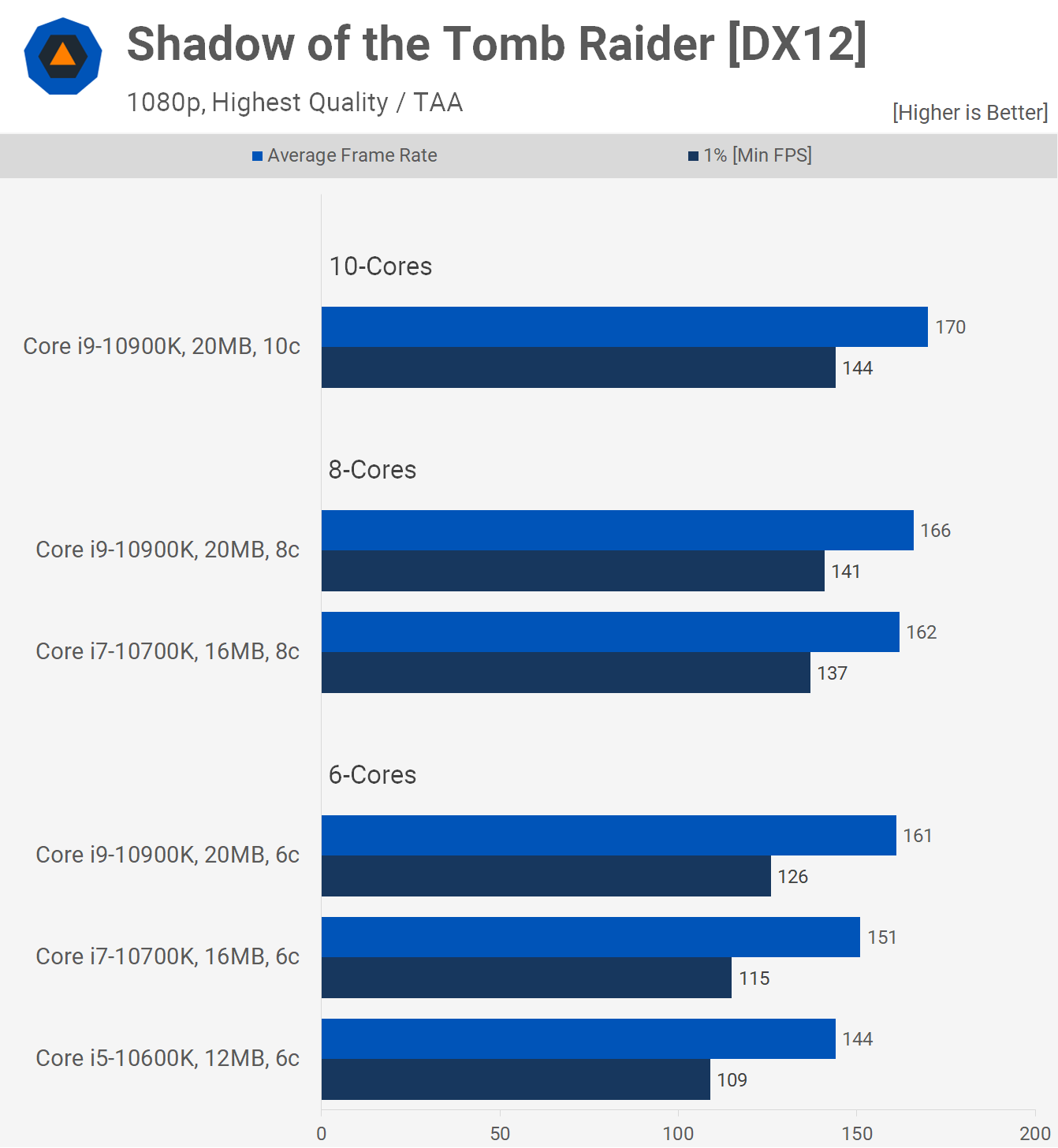

We use the village section in Shadow of the Tomb Raider which is very CPU demanding, and on that note, we're not using the built-in benchmark but we're actually playing the game.

We love to use SoTR for testing CPU performance because it's very core-heavy and will spread the load over many cores. These are the most balanced results we've seen between adding more cores and more cache as both appear to have a positive impact on performance.

At the same clock speed we see that the 10900K is 18% faster than the 10600K when looking at the average frame rate, and a massive 32% faster for the 1% low result, which is a dramatic improvement. We can see that 16% of that gain can be attributed to the cache increase, with 14% coming from the added cores.
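One way to put a number on "balanced" is to ask what share of the combined uplift each factor accounts for. The sketch below does that on a log scale, since the two gains multiply rather than add. This is our own back-of-the-envelope framing rather than anything measured, and the function names are hypothetical. The percentages are the ones quoted for Shadow of the Tomb Raider (1% lows) and, earlier, for Rainbow Six Siege.

```python
import math


def combined_gain(cache_pct: float, cores_pct: float) -> float:
    """Total uplift in percent when the two gains multiply together."""
    return ((1 + cache_pct / 100) * (1 + cores_pct / 100) - 1) * 100


def cache_share(cache_pct: float, cores_pct: float) -> float:
    """Fraction of the combined uplift, in log terms, that cache accounts for."""
    log_cache = math.log1p(cache_pct / 100)
    log_cores = math.log1p(cores_pct / 100)
    return log_cache / (log_cache + log_cores)


# (cache gain %, core gain %) as quoted in the article
RESULTS = {
    "Shadow of the Tomb Raider (1% low)": (16, 14),
    "Rainbow Six Siege (average)": (18, 6),
}

for game, (cache, cores) in RESULTS.items():
    print(f"{game}: {combined_gain(cache, cores):.0f}% total, "
          f"{cache_share(cache, cores) * 100:.0f}% of it from cache")
```

By this rough measure the split in Shadow of the Tomb Raider is close to even, while in Rainbow Six Siege cache accounts for roughly three quarters of the gain.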

What We Learned

That's our look at how cores and cache influence the gaming performance of Intel's 10th gen Core series. If you were to upgrade from a Core i7-8700K – which is essentially a Core i5-10600K – to the Core i7-10700K or something faster, and have noticed an uptick in performance, chances are it's not due to the extra cores, but rather the extra cache.

Of course, both can help and ideally you want more of both. When it comes to Intel processors, you can't simply pick which you'd prefer more of, as more cores means more cache, but if you could, the smarter investment might be in cache. It seems like AMD has come to a similar conclusion with their V-Cache technology, but that's a story for a future benchmark.

When CPU limited in today's games, cache generally provides the largest performance gains and this is why we see less of a performance variation between the various Zen 3-based (Ryzen 5000 series) processors ranging from 6 to 16 cores. And of course, you can't compare cache capacity of different CPU architectures to determine which is better, just as you can't do that with cores, because factors such as cache latency, bandwidth, and the way they're used will vary.

This shows why claiming that gamers require, or are best served by, 8-core processors is just wrong. There's far more to CPU performance than just core count, so you should evaluate CPUs based on their resulting performance, rather than a paper spec.

We've always found the CPU core misconception quite puzzling, but it mostly comes down to a fundamental misunderstanding of how CPUs work. We recommend you read our article "10 Big Misconceptions About Computer Hardware," which we published a year ago. As it happens, the #1 misconception on that list is "you can compare CPUs by core count and clock speed" (you can't), and we touch on various others covering clock speeds, nodes and Arm vs. x86.

When it comes to buying advice, you're best served by knowing what you'll be doing with your computer first. For example, are you going to be streaming gameplay and will you be using the CPU for the heavy lifting? Will you be running heavy background tasks? Are you seeking high frame rates forcing more CPU limited gaming? Or are you quite conservative with what you run in the background, just leaving lightweight applications such as Discord and Steam running?

If you know you'll be running core-heavy applications while gaming, which is a bit odd, then more cores for a given CPU architecture will help. But again, parts like the Ryzen 7 2700X won't be better than the Ryzen 5 5600X. Instead, the 5800X will be better than the 5600X, because it's about overall CPU performance.

However, if you're simply gaming with typical background tasks, a CPU of comparable power and performance to that of the Ryzen 5 5600X is overkill, and more than capable of extracting the most out of extreme GPUs using competitive quality settings in today's most demanding games. So instead of dumping over 40% more money into the CPU, which is what you'd effectively be doing when opting for the 5800X over the 5600X, you're best off spending elsewhere or saving for a future upgrade.
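To put that trade-off in numbers, here's a small sketch using two figures from this article: the ~3% average advantage of the 5800X over the 5600X in our RTX 3090 data, and the 40% price premium (which is a floor, since the real gap is "over 40%"). The `relative_value` helper is a hypothetical name of ours.

```python
def relative_value(perf_gain_pct: float, price_premium_pct: float) -> float:
    """Performance per dollar of the pricier part, relative to the cheaper one."""
    return (1 + perf_gain_pct / 100) / (1 + price_premium_pct / 100)


perf_gain = 3       # 5800X vs 5600X, average at 1080p with the RTX 3090
price_premium = 40  # lower bound quoted in the article

ratio = relative_value(perf_gain, price_premium)
print(f"5800X performance per dollar vs 5600X: {ratio:.2f}x")
print(f"That's about {(1 - ratio) * 100:.0f}% less gaming performance per dollar")
```

Under those assumptions the 5800X delivers roughly a quarter less gaming performance per dollar, and the picture only gets worse as the price gap widens.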

More cores and "planning for the future" only make sense if you're talking about CPUs occupying the same price point. For example, if the Ryzen 5 5600X and Core i9-10900KF were the same price, I'd strongly advocate going with the Intel CPU, as in that scenario you'd be receiving comparable gaming performance now with the chance of much better performance in the future, given the 10900K is ~45% faster overall when maxed out.

But in reality, the Core i9-10900KF costs $450, making it much more expensive than the sub-$300 Ryzen 5 5600X, and purely for gaming that makes it a horrible investment.

That was our look at how much cores and L3 cache influence CPU performance, and hopefully the discussion around core count is useful for those of you looking at buying or upgrading your CPU in the near future.