Why it matters: Depending on who you ask, the market for AI semiconductors is estimated to be somewhere between a few billion dollars and infinity. We are firmly in the realm of high expectations when it comes to this category, and arriving at more precise market size estimates is crucial for the many people making investment decisions in the coming year.

Right now, the world is fixated on Nvidia's dominant position in the market, but it is worth digging a little deeper. Nvidia is clearly the leader in training chips, but training accounts for only about 10% to 20% of the demand for AI chips. The market for inference chips, which run trained models to respond to user queries, is considerably larger, and no single company, not even Nvidia, has a lock on it.

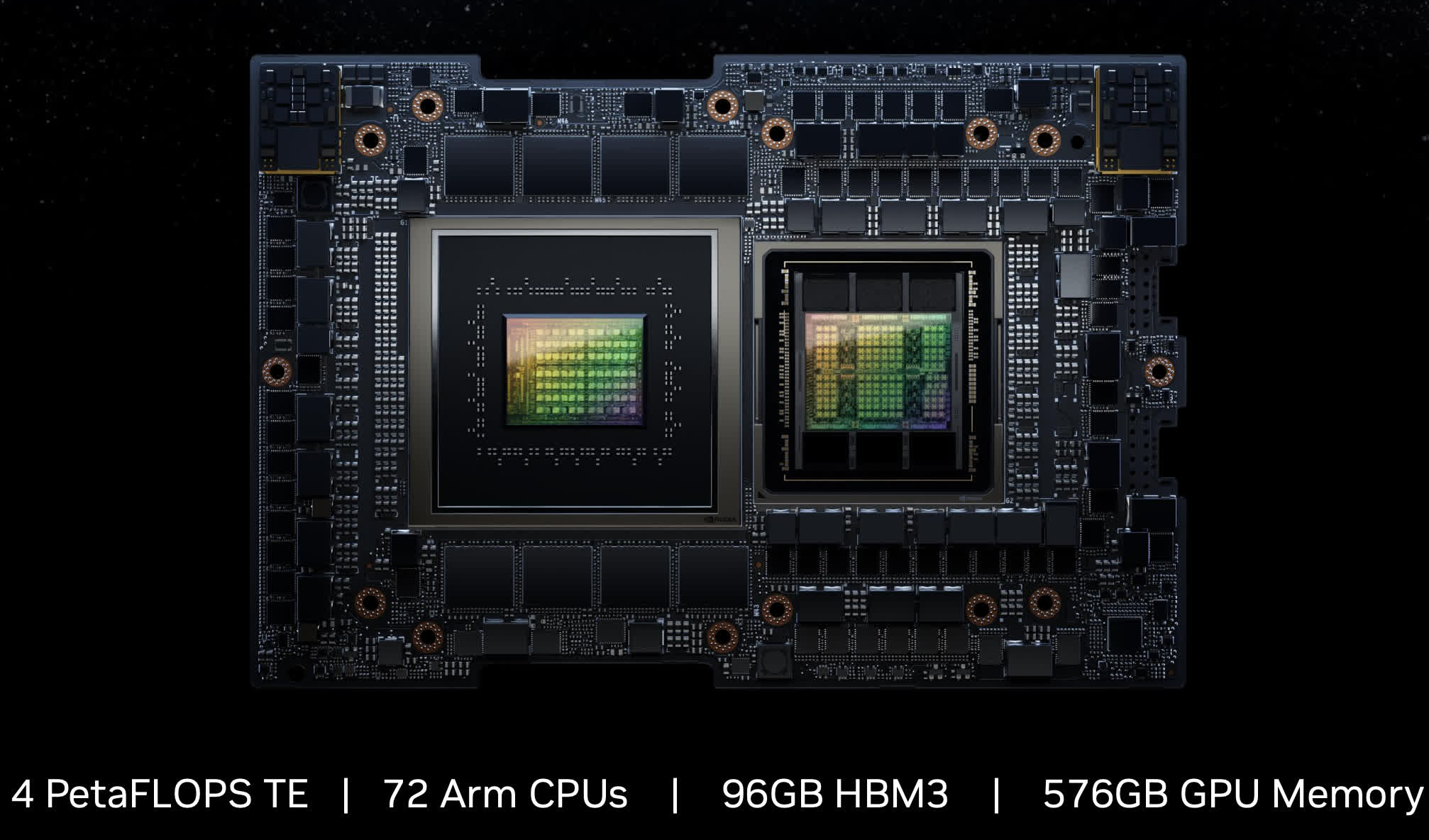

The inference market itself needs to be broken down further. Users will require inference processing in both edge and cloud environments, and cloud inference will depend on data center demand. The total market for data center semiconductors today stands at approximately $50 billion (not counting memory, which admittedly is a significant exclusion). Data center inference is not only large but also quite fragmented.

Editor's Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

Nvidia arguably already holds a large share of this market, likely the largest, given that a substantial amount of AI work runs on GPUs. AMD is also targeting this market, but it trails Nvidia significantly. Moreover, this is an area where hyperscalers deploy many of their own proprietary chips: AWS with Inferentia and Google with its TPUs, to name two. It's worth noting that much of this work is still performed on CPUs, particularly while high-end GPUs are in short supply. This segment is expected to remain highly competitive for the foreseeable future. Like AMD, every other CPU, GPU, and accelerator vendor will compete for this market with a healthy mix of products.

This brings us to another significant opportunity: inference at the edge. The term "edge" is often misused, but for our purposes here, we mainly mean any device in the hands of an end user. Today, this primarily encompasses phones and PCs, but it is expanding into other categories like cameras, robots, industrial systems, and cars. Predicting the size of this market is challenging. Beyond the broadening scope of usage, much of the AI silicon for these devices is likely to be bundled into a System on a Chip (SoC) that handles all of the device's functions.

The iPhone is a prime example: Apple dedicates a substantial share of the die area of its A-Series processors to AI cores (the Neural Engine). By some metrics, AI content already occupies about 20% of the A-Series chip, which is considerable given that the remaining 80% has to handle every other function on the phone. Many other companies are also adopting SoC-based AI strategies.

A prevailing question in AI is how much computing power is needed to run the latest generative models, such as large language models (LLMs) like GPT and image generators like Stable Diffusion. There is significant interest in running these models on the lightest possible computing footprint, and the open-source community has made remarkable advances in a relatively short period.

If capable models can run on ever-lighter hardware, the edge inference market will likely remain highly fragmented. A pragmatic assumption is that for existing categories like phones and PCs, the AI silicon will be supplied by the companies already providing chips for those devices, such as Qualcomm for phones and Intel and AMD for PCs.

As stated earlier, reliable forecasts for the size of the AI silicon market are very hard to come by right now. The situation isn't helped by uncertainty about how useful LLMs and other models will prove for real-world work. Then there are questions about what to count and how. For instance, if a hyperscaler buys several hundred thousand CPUs that will run neural networks alongside traditional workloads, or if a vendor adds a few dozen square millimeters of AI blocks to its SoC, how should those be counted? For now, our rough estimate is that the AI silicon market will split into about 15% training, 45% data center inference, and 40% edge inference.

This has substantial implications for anyone considering entering this market. For the foreseeable future, Nvidia will maintain its grip on training. The data center inference market looks attractive but already includes a myriad of companies, including giants like Nvidia, AMD, and Intel, plus customers' own roll-your-own internal silicon. Edge inference is likely to be dominated by existing vendors of traditional silicon, all of which are investing heavily in supporting transformers and LLMs. So, what opportunities exist for new entrants? We see essentially four options:

- Supply IP or chiplets to one of the SoC vendors. This approach has the advantage of relatively low capital requirements; let your customer handle the payments to TSMC. There is a plethora of customers aiming to build SoCs. While some will want to do everything in-house, many are likely to seek outside help where they need it.

- Build up a big (really big) war chest and go after the data center market. This is a challenging endeavor for several reasons, not least because the number of customers is limited, but the potential rewards are enormous.

- Find a new edge device that could benefit from a tailored solution, shifting focus from phones and laptops to cameras, robots, industrial systems, and the like. This route isn't easy either. Some of these devices are extremely cheap and thus cannot accommodate chips with high average selling prices (ASPs). A few years ago, we saw many pitches from companies looking to do low-power AI on cameras and drones. Very few have survived.

- Finally, there is automotive, the Great Hope of the whole industry. This market is still highly fragmented and somewhat nebulous. The window for entry isn't vast, but the opportunity is substantial.

To sum up, the AI market is largely spoken for already. This doesn't mean all hope is lost for those who venture in, but companies will need to be highly focused and exceedingly judicious in their choice of markets and customers.