Big quote: Finnish state-owned startup Flow Computing claims that its new hardware can boost any processor's speed by orders of magnitude through parallel processing. The technology is supposedly scalable across most devices, architectures, and software. The company plans to reveal the specific details behind what it calls the "holy grail" of CPU performance in August.

Flow Computing has secured around $4.3 million in venture capital funding from Nordic businesses to develop intellectual property that it claims can multiply CPU performance. The company says its Parallel Processing Units (PPUs) can usher in a revolutionary "CPU 2.0" era.

A subsidiary of the Finnish state-owned VTT research institute, Flow claims that CPUs have become the weakest link in modern architecture, likely referring to the clock speed plateaus of the last couple of decades. PPUs can supposedly help legacy and future processors get over that hump by synchronizing workloads more efficiently.

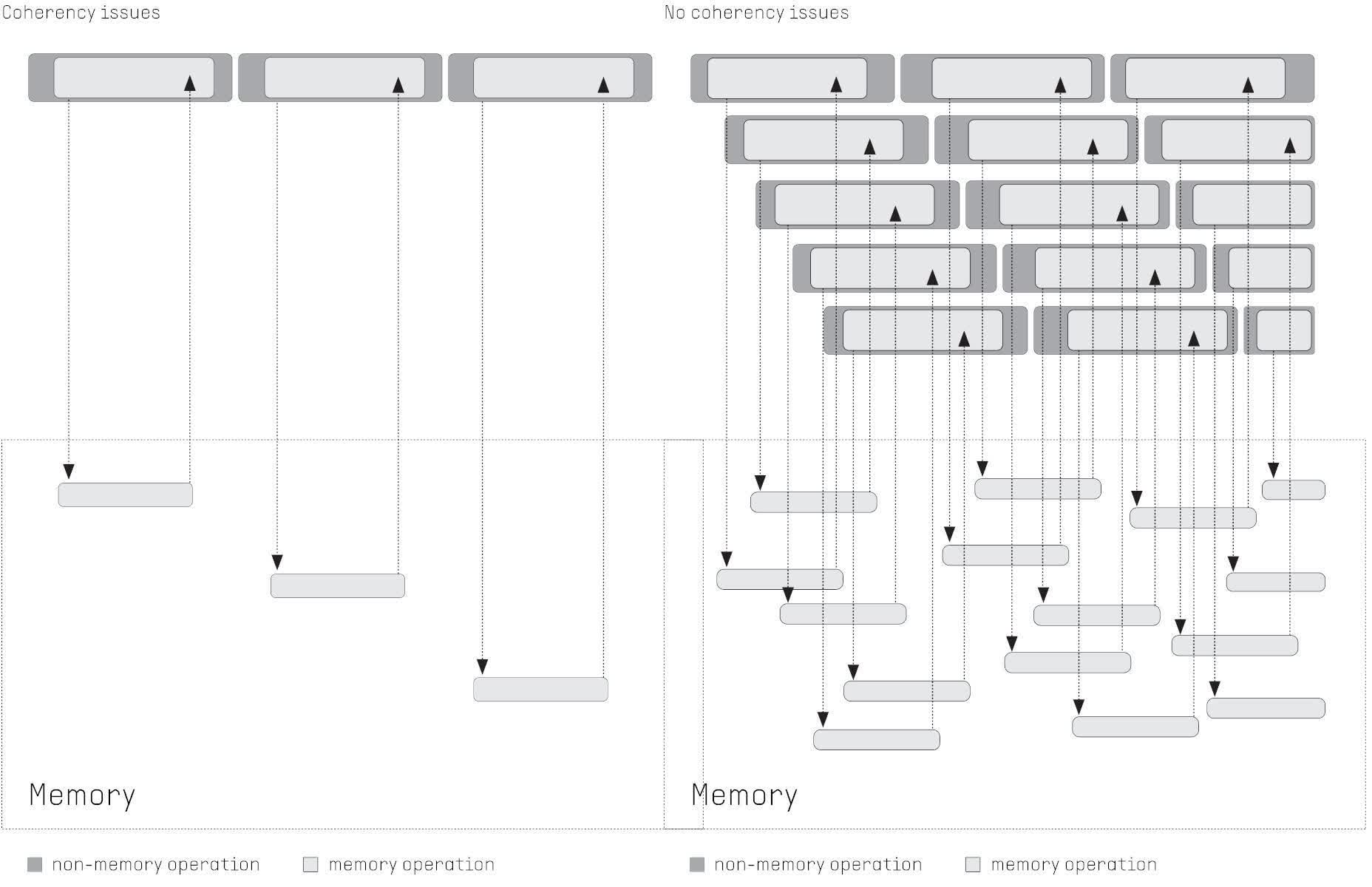

The startup's website explains that parallel processing can hide latency and thus minimize the number of wasted clock cycles. With the extra unit backing them up, CPUs can switch between tasks much faster with the same hardware specs as before.
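To make the latency-hiding idea concrete, here is a minimal toy model in Python. It is not Flow's design, and every name and number in it (`total_cycles`, the cycle counts, the thread counts) is a hypothetical chosen for illustration. It only shows the general principle: if other threads can compute while one thread waits on memory, fewer clock cycles are wasted.

```python
def total_cycles(num_threads, ops_per_thread, compute_cycles, memory_latency, interleaved):
    """Toy model: every operation is some compute followed by a memory wait.

    All parameters are illustrative assumptions, not measurements of any real chip.
    """
    if not interleaved:
        # One thread runs at a time, so every memory wait stalls the core.
        total_ops = num_threads * ops_per_thread
        return total_ops * (compute_cycles + memory_latency)

    # Round-robin interleaving: while one thread waits on memory, the others
    # compute. A round (one operation per thread) takes whichever is longer:
    # the combined compute time, or one thread's compute plus its memory wait.
    round_cycles = max(num_threads * compute_cycles, compute_cycles + memory_latency)
    return ops_per_thread * round_cycles


if __name__ == "__main__":
    for threads in (1, 4, 16):
        serial = total_cycles(threads, 1000, 1, 15, interleaved=False)
        hidden = total_cycles(threads, 1000, 1, 15, interleaved=True)
        print(f"{threads:>2} threads: {serial} cycles stalled vs {hidden} interleaved")
```

In this model the benefit grows with the number of threads until there is enough independent work to cover the memory wait completely; past that point, extra threads no longer hide any additional latency.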

Flow's system works by integrating the PPU onto the processor die, so the technology requires new hardware. If vendors decide to take a chance on it, the first PPU-equipped devices would probably take a few years to appear on the market.

However, anyone using PPUs wouldn't need to dramatically alter their hardware or software pipelines. The technology supports existing architectures like x86, Arm, and RISC-V. Furthermore, Flow claims that software that hasn't been rewritten to account for PPUs can see CPU performance double, while recompiling an operating system or system library for the PPU can increase performance by up to a factor of 100. An in-development AI-based tool might help developers take maximum advantage of PPUs.

By changing the number of PPU cores, the improvements can be applied to numerous form factors. Flow suggests that a 16-core PPU would be ideal for mobile devices, 64 cores for PCs, and 256 cores for servers.
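For a rough sense of how core count relates to speedup, the sketch below applies Amdahl's law to the three configurations Flow mentions. This is a standard textbook formula, not something Flow has published, and the 99 percent parallel fraction is purely an assumption for illustration; real workloads vary widely.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Amdahl's law: the serial part of a program limits the overall speedup."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Assumed: 99% of the work can run in parallel (hypothetical figure).
    parallel_fraction = 0.99
    for label, cores in (("mobile", 16), ("PC", 64), ("server", 256)):
        speedup = amdahl_speedup(parallel_fraction, cores)
        print(f"{label:>6} ({cores:>3} cores): about {speedup:.1f}x")
```

Under that assumption, even an unlimited number of cores could not exceed a 100x speedup, because the remaining 1 percent of serial work becomes the bottleneck. How closely real PPU-equipped hardware would follow this curve is unknown until Flow releases technical details.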

Although parallel processing can supposedly boost performance for any application, the company points toward emerging sectors like AI and cloud computing as the most likely to benefit. More technical details will be available at the Hot Chips 2024 conference at Stanford University, running August 25-27.