Why it matters: The generative AI race shows no signs of slowing down, and Nvidia is looking to fully capitalize on it with the introduction of a new AI superchip, the H200 Tensor Core GPU. The biggest improvement when compared to its predecessor is the use of HBM3e memory, which allows for greater density and higher memory bandwidth, both crucial factors in improving the speed of services like ChatGPT and Google Bard.

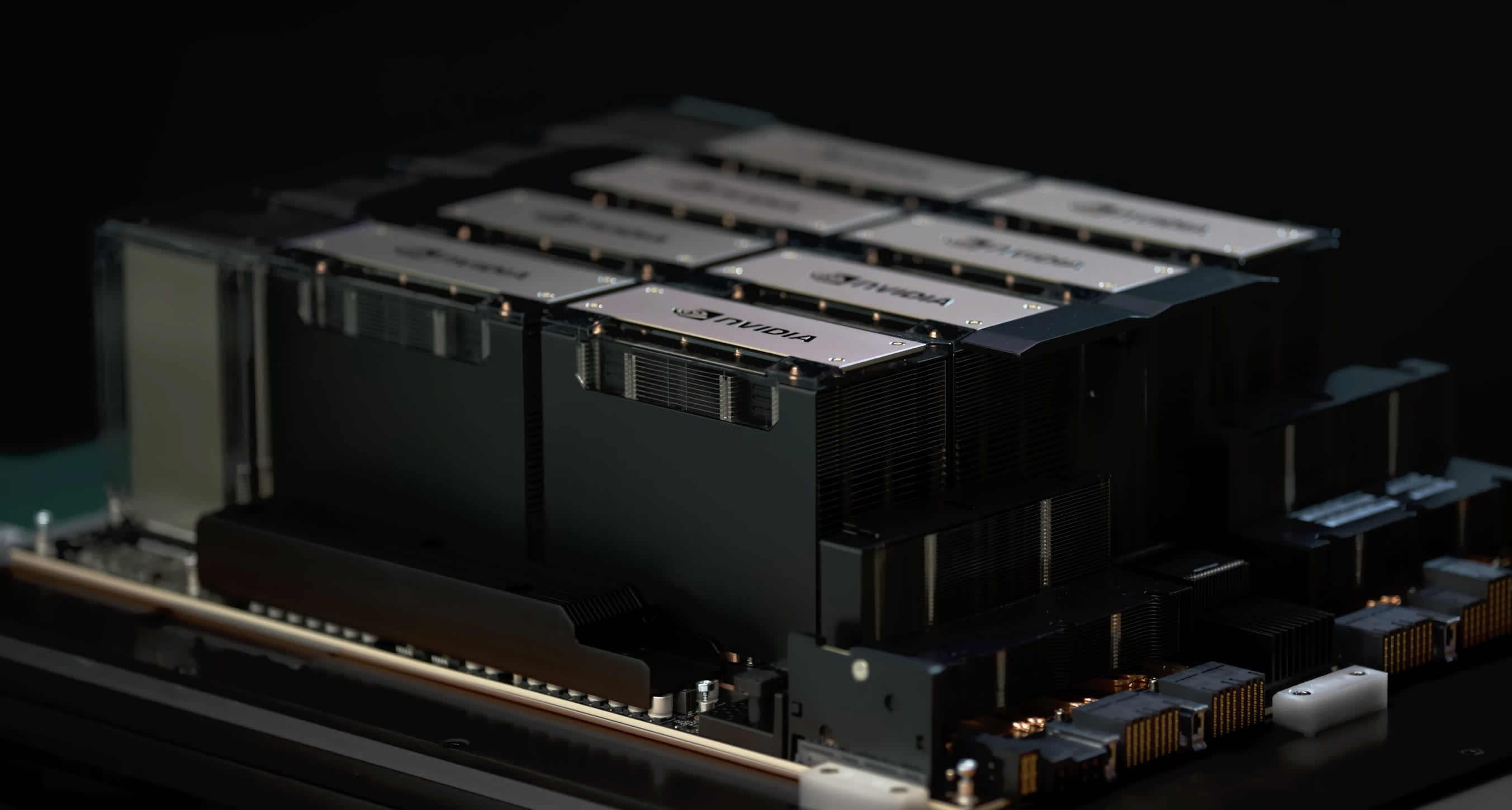

Nvidia this week introduced a new monster processing unit for AI workloads, the H200. As the name suggests, the new chip is the successor to the wildly popular H100 Tensor Core GPU, which debuted in 2022 just as the generative AI hype train started picking up speed.

Team Green announced the new platform at the Supercomputing 2023 conference in Denver, Colorado. Based on the Hopper architecture, the H200 is expected to deliver almost double the H100's inference speed on Llama 2 70B, the 70-billion-parameter version of Meta's large language model (LLM). On GPT-3, which has 175 billion parameters, the H200 provides around 1.6 times the inference speed of its predecessor.

Some of these performance gains come from architectural refinements, but Nvidia says it has also done extensive optimization work on the software front. This is reflected in recently released open-source libraries such as TensorRT-LLM, which Nvidia says can deliver up to eight times more performance and up to six times lower energy consumption when running the latest LLMs for generative AI.

Another highlight of the H200 platform is that it's the first GPU to use faster HBM3e memory. The new Tensor Core GPU's total memory bandwidth is a whopping 4.8 terabytes per second, roughly 43 percent more than the 3.35 terabytes per second achieved by the H100's memory subsystem. Total memory capacity has also increased from 80 GB on the H100 to 141 GB on the H200, nearly 1.8 times as much.
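To put the generational jump in concrete numbers, the short calculation below divides the H200's memory figures by the H100's. It is only a back-of-the-envelope sketch that uses the spec numbers quoted above and assumes both refer to the SXM versions of each card; real-world throughput depends on far more than peak bandwidth and capacity. The `gain` helper is an illustrative name, not part of any Nvidia tool.

```python
# Peak memory specs quoted in the article (assumed to be the SXM variants).
H100_BANDWIDTH_TBPS = 3.35
H200_BANDWIDTH_TBPS = 4.8
H100_MEMORY_GB = 80
H200_MEMORY_GB = 141


def gain(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


bandwidth_gain = gain(H200_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)
capacity_gain = gain(H200_MEMORY_GB, H100_MEMORY_GB)

# Print each ratio alongside the equivalent percentage increase.
print(f"Bandwidth: {bandwidth_gain:.2f}x (+{(bandwidth_gain - 1) * 100:.0f}%)")
print(f"Capacity:  {capacity_gain:.2f}x (+{(capacity_gain - 1) * 100:.0f}%)")
```

That works out to roughly 1.4 times the bandwidth and a little under 1.8 times the capacity of the H100.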

Nvidia says the H200 is designed to be compatible with the same systems that support the H100 GPU. It will be available in several form factors, including HGX H200 server boards in four- or eight-way configurations, and as part of the GH200 Grace Hopper Superchip, where it is paired with a powerful 72-core Arm-based CPU on the same board. According to Nvidia, an eight-way HGX H200 provides over 32 petaflops of FP8 performance for deep-learning applications and 1.1 terabytes of aggregate high-bandwidth memory.
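The 1.1-terabyte aggregate memory figure is consistent with simply adding up eight H200s' worth of 141 GB HBM3e. The sketch below checks that arithmetic for both HGX board sizes, assuming that "aggregate" means plain summed per-GPU capacity and that decimal units apply (1 TB = 1,000 GB). The `aggregate_hbm_gb` function is a hypothetical helper for illustration only.

```python
# Per-GPU HBM3e capacity quoted in the article.
H200_MEMORY_GB = 141


def aggregate_hbm_gb(gpu_count: int, per_gpu_gb: int = H200_MEMORY_GB) -> int:
    """Sum the HBM capacity of every GPU on one HGX board (assumes simple addition)."""
    return gpu_count * per_gpu_gb


# The two HGX H200 board configurations mentioned in the article.
for gpus in (4, 8):
    total_gb = aggregate_hbm_gb(gpus)
    # Decimal units assumed: 1 TB = 1,000 GB.
    print(f"{gpus}-way HGX H200: {total_gb} GB (~{total_gb / 1000:.2f} TB)")
```

Eight GPUs at 141 GB each come to 1,128 GB, which rounds to the 1.1 TB Nvidia quotes; a four-way board would hold roughly half that.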

Just like the H100 GPU, the new Hopper superchip will be in high demand and command an eye-watering price. A single H100 sells for an estimated $25,000 to $40,000 depending on order volume, and many companies in the AI space are buying them by the thousands. This is forcing smaller companies to partner up just to get limited access to Nvidia's AI GPUs, and lead times don't seem to be getting any shorter as time goes on.

Speaking of lead times, Nvidia makes a huge profit on every H100 sold, so it has even shifted some production from the RTX 40-series toward making more Hopper GPUs. Nvidia's Kristin Uchiyama says supply won't be an issue because the company is constantly adding production capacity, but she declined to offer more details on the matter.

One thing is for sure: Team Green is much more interested in selling AI-focused GPUs, as sales of Hopper chips make up an increasingly large chunk of its revenue. It's even going to great lengths to develop and manufacture cut-down versions of its A100 and H100 chips just to get around US export controls and ship them to Chinese tech giants. This makes it hard to get too excited about the upcoming RTX 40 Super graphics cards, as availability will be a big factor in their retail prices.

Microsoft Azure, Google Cloud, Amazon Web Services, and Oracle Cloud Infrastructure will be the first cloud providers to offer access to H200-based instances starting in Q2 2024.