Editor's take: As someone who's researched and closely tracked the evolution of GenAI and how it's being deployed in real-world business environments, it never ceases to amaze me how quickly the landscape is changing. Ideas and concepts that seemed years away a few months ago – such as the ability to run foundation models directly on client devices – are already here. At the same time, some of our early expectations around how the technology might evolve and be deployed are shifting as well – and the implications could be big.

At a fundamental technology level, there is growing recognition that the two-step process of model training followed by inferencing isn't playing out the way many initially anticipated, particularly when it comes to how GenAI is actually deployed.

It has become apparent that only a select few companies are building and training their own foundation models from the ground up. Instead, the predominant approach is to customize pre-existing models.

Some may consider the distinction between training and customizing large language models (LLMs) to be merely semantic. In reality, its impact is far more significant. This trend underscores that only the largest corporations, with ample resources and capital, are capable of developing these models from scratch and continuing to refine them.

Companies such as Microsoft, Google, Amazon, Meta, IBM, and Salesforce, along with the companies they're choosing to invest in and partner with, such as OpenAI and Anthropic, are at the forefront of original model development. Although numerous startups and smaller companies are diligently attempting to create their own foundation models, there is growing skepticism about whether those business models are viable in the long run. In other words, the market is increasingly looking like yet another case of big tech companies getting bigger.

The reasons for this go beyond the typical factors of skill set availability, experience with the technology, and trust in big brand names. Because of the extensive reach and influence that GenAI tools are already starting to have, there are increasing concerns about legal issues and related factors. To put it simply, if large organizations are going to start depending on a tool that will likely have a profound impact on their business, they need to know that there's a big company behind that tool that they can place the blame on in case something goes wrong.

This is very different from many other new technology products that were often brought into organizations via startups and other small companies. The reach that GenAI is expected to have is simply too deep into an organization to be entrusted to anyone but a large, well-established tech company.

And yet, despite this concern, one of the other surprising developments in the world of GenAI has been the rapid adoption of open-source models from places like Hugging Face. Both tech suppliers and businesses are quickly partnering with Hugging Face because of how fast new innovations are appearing in the open models it hosts.

So, how does one reconcile these seemingly incompatible developments? It turns out that many of the models on Hugging Face are not entirely new but are instead customizations of existing models. For example, you can find models that use Meta's popular open-source Llama 2 model as a baseline and then adapt it to a particular use case.

As a result, businesses can feel comfortable using something that stems from a large tech company but also offers the unique value that other open-source developers have added to it. It's one of many examples of the opportunities created by separating the "engine" from the application, something GenAI now makes possible for developers.

From a market perspective, this means that the largest tech organizations will likely battle it out to produce the best "engines" for GenAI, but other companies and open-source developers can then leverage those engines for their own work. The implications of this are likely to be large when it comes to things like pricing, packaging, licensing, business models, and the money-making side of GenAI.

At this early stage, it's unclear exactly what those implications will be. One likely development, however, is the separation of these core foundation model engines and the applications or model customizations that sit on top of them when it comes to creating products – certainly something worth watching.

This separation of models from applications might also impact how foundation models run directly on devices. One of the challenges of this exercise is that foundation models require a great deal of memory to function efficiently. Also, many people believe that client devices are going to need to run multiple foundation models simultaneously in order to perform all the various tasks that GenAI is expected to enable.

The problem is that, while PC and phone memory specs have certainly been rising over the last few years, it's still going to be challenging to load multiple foundation models into memory at the same time on a client device. One possible solution is to select a single foundation model that powers multiple independent applications. If this proves to be the case, it raises interesting questions about partnerships between device makers and foundation model suppliers, and about how device makers will be able to differentiate themselves from one another.
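To make the memory argument concrete, here is a back-of-the-envelope sketch comparing several separately loaded models with one shared model plus small per-application customizations. All of the sizes are hypothetical assumptions chosen for illustration (a 7-billion-parameter model quantized to 4 bits, and a roughly 50-million-parameter add-on per app, such as a LoRA-style adapter), and the estimate counts model weights only, ignoring the KV cache, activations, and runtime overhead that real deployments add on top.

```python
# Back-of-the-envelope weight-memory estimate for on-device GenAI.
# Assumption: counts model weights only; real usage adds KV cache,
# activations, and runtime overhead on top of these figures.

def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

BITS = 4                # hypothetical 4-bit quantization of the base model
BASE_PARAMS = 7e9       # hypothetical 7B-parameter foundation model
ADAPTER_PARAMS = 50e6   # hypothetical per-app customization layer
ADAPTER_BITS = 16       # hypothetical 16-bit storage for the add-on
NUM_APPS = 3            # number of GenAI-powered applications on the device

# Option A: every application loads its own full foundation model.
separate_total = NUM_APPS * weight_memory_gb(BASE_PARAMS, BITS)

# Option B: one shared foundation model plus a small add-on per application.
shared_total = weight_memory_gb(BASE_PARAMS, BITS) + NUM_APPS * weight_memory_gb(
    ADAPTER_PARAMS, ADAPTER_BITS
)

print(f"Separate models:         {separate_total:.1f} GB")
print(f"Shared model + add-ons:  {shared_total:.1f} GB")
```

Under these assumptions the script prints about 10.5 GB for three separate models versus about 3.8 GB for the shared approach, which illustrates why a single shared "engine" is attractive on memory-constrained phones and PCs.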

Rapidly growing technologies like RAG (Retrieval Augmented Generation) provide a powerful way to customize models using an organization's proprietary data.

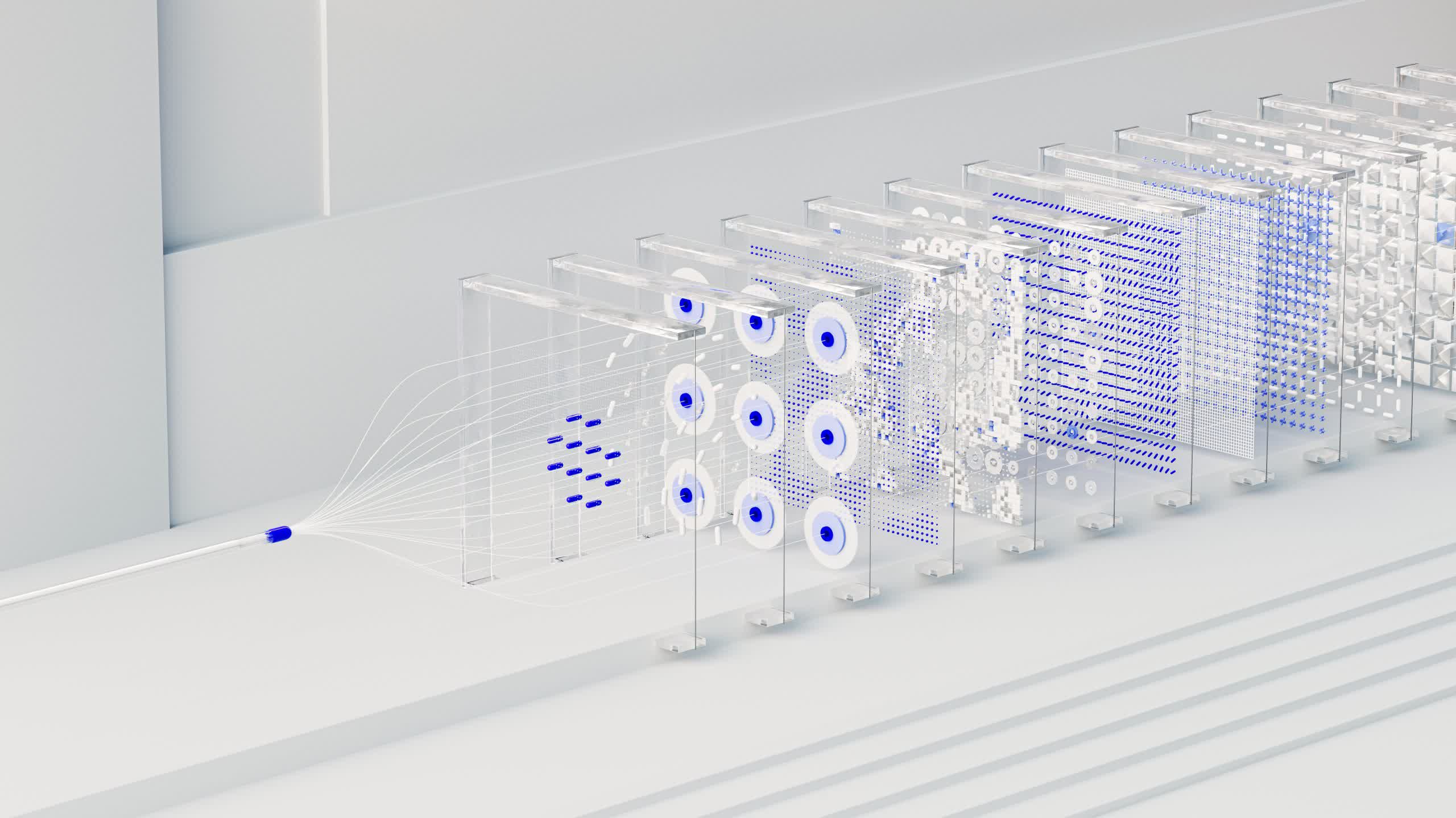

In addition to shifts in model training, significant advancements have been made in inference technology. For instance, technologies such as RAG (Retrieval Augmented Generation) provide a dynamic method for model customization using an organization's proprietary data. RAG works by pairing a standard query to an LLM with relevant content retrieved from the organization's own data, which is passed to the model along with the query.

Put another way, RAG relies on the language capabilities of a fully trained model to interpret the query and compose a response, but grounds that response in the most relevant content selected from the organization's exclusive data.
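The retrieve-then-generate flow described above can be sketched in a few lines. This is a toy illustration, not how any particular product implements RAG: it assumes a simple word-count cosine similarity as a stand-in for the embedding models and vector databases that production systems typically use, the documents are made up, and the final LLM call is left as a hypothetical step rather than a real API.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (toy retriever)."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query: str, context: list[str]) -> str:
    """Combine the user's query with the retrieved organizational content."""
    ctx = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{ctx}\n\n"
        f"Question: {query}"
    )

# Hypothetical stand-in for an organization's proprietary content.
docs = [
    "Our warranty covers laptop batteries for two years.",
    "Cafeteria hours are 8am to 3pm on weekdays.",
    "Battery replacements are free during the warranty period.",
]

query = "How long is the laptop battery warranty?"
prompt = build_prompt(query, retrieve(query, docs, k=2))
print(prompt)
# In a real system, this augmented prompt would now be sent to an LLM
# (e.g. a hypothetical call_llm(prompt)); the model itself is not retrained.
```

The key design point is that all the customization happens in what gets placed into the prompt; the underlying foundation model stays unchanged, which is why the approach is comparatively cheap.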

The beauty of this approach is twofold. First, it enables model customization in a more efficient, less resource-intensive way, because the underlying model doesn't need to be retrained. Second, it reduces problems such as erroneous or 'hallucinated' content by drawing responses from a tailored dataset rather than only from the broad content pool used for the model's initial training. As a result, many organizations are quickly adopting RAG, and it looks to be a key enabler for future developments. Notably, it also changes inferencing by shifting computing demands from the cloud to local data centers or client devices.

Given the swift pace of change in the GenAI sector, the arguments presented here might become outdated by next year. Nevertheless, it's evident that significant shifts are underway, necessitating a pivot in industry communication strategies. Switching from the focus on training and inferencing of models to one that highlights model customization, for example, seems overdue based on the realities of today's marketplace. Similarly, providing more information around technologies like RAG and their potential influence on the inferencing process also seems critical to help educate the market.

The profound influence that GenAI is poised to exert on businesses is no longer in question. Yet the trajectory and speed of this impact remain uncertain. Therefore, initiatives aimed at educating the public about GenAI's evolution, through precise and insightful messaging, are going to be extremely important. The process won't be easy, but let's hope more companies are willing to take on the challenge.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech